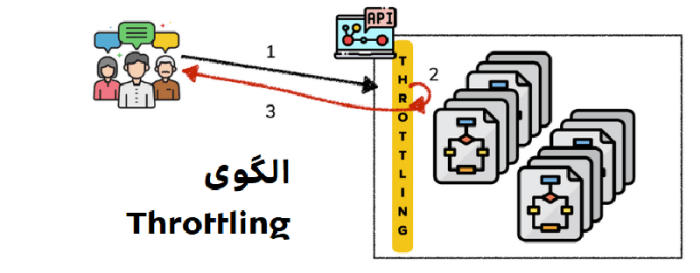

Understanding throttling patterns is crucial for building robust and scalable applications, especially in high-traffic environments. This comprehensive guide delves into the intricacies of throttling, exploring its various mechanisms, implementations, and practical applications. We’ll cover everything from defining throttling to implementing it in different programming languages, highlighting the critical security and performance considerations.

Throttling, in essence, controls the rate at which requests are processed. By strategically managing resource allocation and request frequency, throttling helps prevent overload, enhance performance, and maintain a positive user experience. This document provides a practical, step-by-step approach to effectively implement throttling mechanisms, empowering developers to build more resilient and adaptable systems.

Defining Throttling

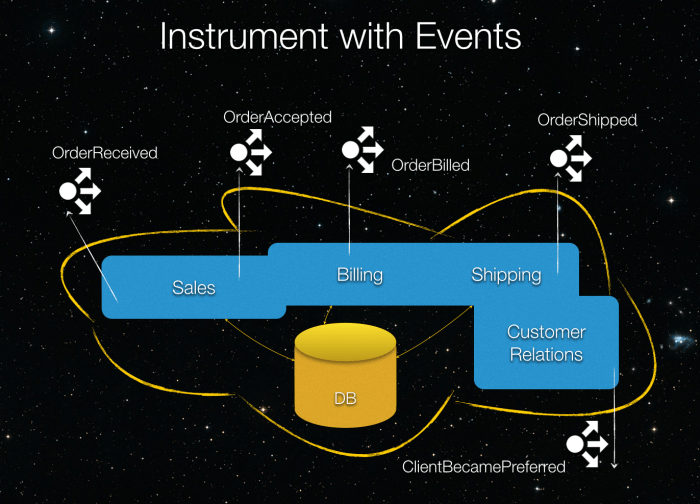

Throttling, in the context of system performance, is a crucial mechanism for managing resource consumption and preventing overload. It’s a deliberate technique to regulate the rate at which requests or actions are processed, ensuring stability and responsiveness under varying loads. This approach protects the system from becoming overwhelmed by a sudden influx of demands, preventing performance degradation or complete failure. Effective throttling strategies are essential for maintaining a robust and reliable system architecture.

Understanding the various throttling patterns enables developers to design applications that can gracefully handle fluctuating workloads and guarantee a consistent user experience. This careful regulation is particularly important in applications dealing with high traffic, real-time data processing, or systems with limited resources.

Throttling Mechanisms

Throttling mechanisms are diverse, encompassing various strategies to control resource access and utilization. These methods often employ specific algorithms and configurations to achieve the desired control. Different types of throttling strategies are suitable for different contexts and applications.

- Rate Limiting: Rate limiting is a common throttling technique that restricts the frequency of requests or actions from a particular source. It imposes a limit on the number of requests allowed within a specific time window. This is widely used in APIs to prevent abuse and ensure fair access for all users. For instance, a social media platform might limit the number of posts a user can make per minute to prevent spamming. This approach helps to maintain a stable system and avoids overload situations.

- Resource Allocation: Resource allocation throttling manages the distribution of limited system resources, like CPU cycles, memory, or network bandwidth. This technique is critical for preventing a single application or user from consuming all available resources, potentially affecting other applications or users. A web server might limit the amount of memory a single request can consume, preventing a malicious or resource-intensive request from crashing the entire server.

- Queueing: Queueing mechanisms are often used in conjunction with other throttling strategies. They temporarily store requests that exceed the system’s capacity, ensuring that they are processed when resources become available. This approach can help manage peak loads and maintain responsiveness during periods of high demand. In a large e-commerce platform, order processing might use a queue to handle a surge of orders during a sale.
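The queueing idea can be sketched with Python's standard `queue` module. This is a minimal illustration, not a production design: the `submit` helper and the queue size of 2 are assumptions chosen for the example. A bounded queue accepts work while it has room and signals the caller when it is full, so the caller can reject or delay the request.

```python
import queue


def submit(pending, order):
    """Try to enqueue an order; return False when the queue is at capacity."""
    try:
        pending.put_nowait(order)  # raises queue.Full instead of blocking
        return True
    except queue.Full:
        return False


# Hypothetical capacity of 2 pending orders, chosen only for illustration.
pending_orders = queue.Queue(maxsize=2)
results = [submit(pending_orders, f"order-{i}") for i in range(3)]
print(results)
```

A worker would call `pending_orders.get()` to process orders at its own pace. The bound on the queue is what keeps a surge from turning into unbounded memory growth.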

Significance of Understanding Throttling Patterns

Understanding different throttling patterns is crucial for designing robust, scalable, and responsive software systems. It allows developers to proactively manage resource consumption and prevent performance bottlenecks. This is particularly relevant in applications where the volume of requests or actions can fluctuate significantly, such as online gaming platforms, e-commerce sites, and financial transaction processing systems.

Throttling Strategies Comparison

The table below summarizes the key characteristics of different throttling strategies, highlighting their strengths and weaknesses.

| Strategy | Mechanism | Advantages | Disadvantages |
|---|---|---|---|
| Rate Limiting | Limits the frequency of requests within a time window | Simple to implement, effective for abuse prevention | May not address resource exhaustion, can lead to unfairness for legitimate users with high request rates. |
| Resource Allocation | Controls the amount of resources allocated to a request | Prevents resource exhaustion by a single entity, improves system stability | Can be complex to implement, might need detailed resource monitoring. |
| Queueing | Temporarily stores requests exceeding capacity | Handles peak loads gracefully, maintains system responsiveness | May introduce latency, requires efficient queue management |

Identifying Throttling Patterns

Throttling, a crucial technique in application design, strategically controls the rate at which requests are processed. Understanding various throttling patterns and their implementation is vital for maintaining application stability and performance under high load. Effective throttling prevents resource exhaustion and ensures a responsive user experience. This section delves into common throttling patterns, showcasing their benefits and potential drawbacks. Identifying and implementing appropriate throttling mechanisms are essential for robust applications, especially those handling a significant volume of requests.

The choice of throttling pattern often depends on the specific application requirements and the anticipated load.

Common Throttling Patterns

Various throttling patterns address different application needs. Understanding these patterns enables developers to select the most suitable approach for their specific use cases.

- Rate Limiting: This pattern limits the number of requests an entity can make within a specific time frame. For instance, a user might be allowed a maximum of 10 API calls per minute. This is a common method for preventing abuse and protecting resources. Rate limiting ensures fair access for all users and prevents overload of the system.

- Token Bucket: This method allows a request to proceed only when a token is available, and each request consumes a token. The bucket fills with tokens at a constant rate, up to its capacity. If the bucket is empty, requests are queued or rejected. This approach offers more flexibility than simple rate limiting, allowing for bursts of requests (up to the bucket’s capacity) while still controlling the overall rate. Token bucket is well-suited for applications with variable request patterns.

- Leaky Bucket: This pattern buffers incoming requests and releases them at a constant rate, regardless of the incoming request rate. Requests that arrive while the buffer is full are discarded. Leaky bucket is suitable for applications needing a steady processing rate, such as real-time data feeds.

- Fixed-Window Throttling: This method limits requests based on a fixed time window. Requests are counted within a defined interval, and any exceeding the limit are blocked. Fixed-window throttling is relatively simple to implement but handles bursty traffic poorly: a client can send a full quota at the end of one window and another at the start of the next, briefly reaching up to twice the intended rate.
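As an illustration of the simplest of these patterns, here is a minimal fixed-window counter. It is a sketch under stated assumptions: the class name `FixedWindowLimiter`, the per-client keying, and the injectable `clock` parameter are choices made for this example and do not come from any particular library.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.counts = defaultdict(int)  # (client_id, window_index) -> request count

    def allow(self, client_id):
        # Every timestamp inside the same window maps to the same integer index.
        window_index = int(self.clock() // self.window_seconds)
        key = (client_id, window_index)
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True
```

With `FixedWindowLimiter(limit=10, window_seconds=60)`, each client gets 10 calls per minute. Note that this sketch never deletes counters for past windows; a real deployment would expire them, for example by storing counters in a cache with a time-to-live.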

Scenarios Benefiting from Throttling

Throttling proves beneficial in various scenarios.

- Protecting APIs from abuse: Throttling prevents malicious actors from overwhelming APIs with excessive requests, safeguarding the service’s availability and integrity.

- Maintaining application responsiveness: Throttling prevents the application from becoming unresponsive under high load by controlling the rate of requests being processed.

- Preventing resource exhaustion: By limiting resource consumption, throttling prevents the application from depleting critical resources, ensuring stability and preventing crashes.

- Ensuring fair access for all users: Throttling promotes a fair distribution of resources among users, preventing any single user from monopolizing the system’s capacity.

Negative Impacts of Inappropriate Throttling

Careless implementation of throttling can lead to performance issues.

- Reduced performance under normal load: Excessive throttling can lead to slower response times even when the system isn’t under significant load.

- Poor user experience: Unnecessary delays due to throttling can negatively impact user experience, potentially leading to user frustration and abandonment.

- Inability to handle peak traffic: Insufficient throttling can lead to system overload during peak demand, resulting in service disruptions.

- Loss of legitimate requests: Overly aggressive throttling may lead to the rejection of legitimate requests, impacting functionality and user access.

Examples of Throttling Patterns

Throttling techniques are applicable across various programming languages.

| Pattern | Python Example (Illustrative) | Java Example (Illustrative) | JavaScript Example (Illustrative) |
|---|---|---|---|
| Rate Limiting | Using `time.sleep()` to pause execution after a certain number of requests. | Implementing a `RateLimiter` class using a `ConcurrentHashMap` and a `ScheduledExecutorService`. | Using `setTimeout()` to enforce a delay between requests. |
| Token Bucket | Maintaining a queue and a token counter. | Implementing a `TokenBucket` class with a `BlockingQueue` and a token generation mechanism. | Using a queue and a token generation function to control request rate. |

Implementing Throttling Mechanisms

Implementing effective throttling mechanisms is crucial for ensuring system stability and responsiveness under varying traffic loads. Properly designed throttling prevents overwhelming servers and ensures fair resource allocation, ultimately improving the user experience. These mechanisms, often employing algorithms like token buckets or leaky buckets, meticulously control the rate at which requests are processed, preventing performance degradation and system overload. Implementing a throttling mechanism involves careful consideration of the desired rate limits and the specific needs of the application.

This process ensures that the system remains responsive and avoids performance bottlenecks under high load conditions. By strategically adjusting the throttling parameters, we can tailor the system’s behavior to the current workload, thereby preventing system overload and ensuring that resources are utilized effectively.

Rate-Limiting Throttling Mechanism Implementation

A rate-limiting throttling mechanism is implemented by establishing a set of rules that govern the rate at which requests are processed. These rules define the allowed request frequency within a specific timeframe, ensuring that the system does not get overwhelmed by a sudden surge in requests. These mechanisms are vital for maintaining system stability and preventing resource exhaustion.

- Defining Rate Limits: Establish clear thresholds for the maximum number of requests allowed within a given time window. For instance, a rate limit of 10 requests per second allows a maximum of 10 requests to be processed within any one-second interval. Defining these limits is a critical step in managing traffic effectively.

- Monitoring Request Arrival: Implement mechanisms to track the arrival of requests and timestamp them. Accurate timestamping is essential for calculating the rate of requests and enforcing the defined rate limits. A request queue can be used for storing incoming requests before processing.

- Enforcing Rate Limits: Develop a system for evaluating incoming requests against the defined rate limits. If a request exceeds the limit, the request can be rejected, delayed, or placed in a queue. This mechanism effectively manages the flow of requests, ensuring that the system operates within its defined capacity.

- Handling Rejected Requests: Establish a strategy for handling requests that exceed the rate limit. This might involve rejecting the requests, queuing them, or providing feedback to the client. A key aspect of this is to provide an appropriate response to clients to prevent application issues.
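The four steps above can be sketched as a sliding-window limiter that timestamps each accepted request. This is one possible reading of the steps, not the only one: the class name `SlidingWindowLimiter`, the `(allowed, retry_after)` return shape, and the injectable `clock` are assumptions made for the example.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests within any `window_seconds` interval."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit                    # step 1: the defined rate limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.timestamps = deque()             # step 2: arrival times of accepted requests

    def check(self):
        """Return (allowed, retry_after_seconds) for a request arriving now."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:  # step 3: enforce the limit
            self.timestamps.append(now)
            return True, 0.0
        # Step 4: tell the caller how long until the oldest request leaves the window.
        retry_after = self.timestamps[0] + self.window_seconds - now
        return False, retry_after
```

Returning the wait time alongside the decision lets the caller choose between rejecting, delaying, or queueing the request, and gives it something concrete to report back to the client.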

Token Bucket Algorithm Implementation

The token bucket algorithm is a popular rate-limiting technique. It works by maintaining a bucket that holds tokens up to a fixed maximum capacity. Tokens are added to the bucket at a constant rate, and tokens that would exceed the capacity are discarded. Requests are allowed only if there are sufficient tokens in the bucket. If there are no tokens, the request is either rejected or queued.

- Initialize the Token Bucket: Create a bucket with a predefined capacity (maximum number of tokens) and a refill rate (the rate at which tokens are added to the bucket). The bucket starts with a certain number of tokens.

- Generate Tokens: Add tokens to the bucket at the specified refill rate. For example, if the refill rate is 5 tokens per second, 5 tokens will be added every second. The algorithm ensures that tokens are added consistently.

- Consume Tokens: When a request arrives, check if there are sufficient tokens in the bucket. If there are, remove the required number of tokens from the bucket. If not, the request is rejected or queued.

- Handle Queueing or Rejection: If there are no tokens available, requests are handled as determined by the system’s configuration, either by being rejected or added to a queue to be processed later.
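A minimal sketch of these steps follows. Instead of a background timer that adds tokens, it refills lazily by computing how many tokens have accrued since the last call, which is a common simplification. The class name `TokenBucket`, the choice to start with a full bucket, and the injectable `clock` are assumptions for this example.

```python
import time


class TokenBucket:
    """Token bucket with lazy refill; rejects when tokens are insufficient."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate  # tokens added per second
        self.clock = clock
        self.tokens = float(capacity)   # assumption: the bucket starts full
        self.last_refill = clock()

    def _refill(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens accrued since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def try_consume(self, cost=1):
        """Consume `cost` tokens if available; return whether the request may proceed."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Here `capacity` bounds the size of a burst and `refill_rate` bounds the long-run average rate. A caller that prefers queueing to rejection can hold the request and call `try_consume` again later.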

Leaky Bucket Algorithm Implementation

The leaky bucket algorithm maintains a buffer (bucket) with a finite capacity. Incoming requests are added to the bucket, and requests leave it for processing at a constant rate (the leak rate). A request is accepted only when there’s space in the bucket. Requests arriving faster than the leak rate accumulate in the bucket until it is full.

- Initialize the Leaky Bucket: Define the bucket’s capacity and the leak rate. The bucket starts empty.

- Add Requests: When a request arrives, add it to the bucket. If the bucket is full, the request is rejected (or held elsewhere, depending on the system’s configuration).

- Drain Requests: Remove requests from the bucket at a constant rate (the leak rate). This ensures that requests are processed at a controlled pace.

- Process Requests: Process each request as it leaves the bucket. The processing rate therefore never exceeds the leak rate, regardless of how quickly requests arrive.
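The following sketch treats the bucket as a bounded queue of requests that is drained at a fixed rate. It is illustrative only: the names `LeakyBucket`, `offer`, and `drain`, and the polling style in which the caller invokes `drain()` periodically, are assumptions for this example rather than a standard API.

```python
import time
from collections import deque


class LeakyBucket:
    """Bounded queue of requests released at a constant leak rate."""

    def __init__(self, capacity, leak_rate, clock=time.monotonic):
        self.capacity = capacity
        self.leak_rate = leak_rate  # requests released per second
        self.clock = clock
        self.queue = deque()        # the bucket starts empty
        self.last_leak = clock()

    def offer(self, request):
        """Add a request to the bucket; return False if the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # Restart the leak timer so idle time does not turn into a burst.
            self.last_leak = self.clock()
        self.queue.append(request)
        return True

    def drain(self):
        """Return the requests that became due for processing since the last leak."""
        now = self.clock()
        due = int((now - self.last_leak) * self.leak_rate)
        if due <= 0:
            return []
        # Advance by whole leak intervals only, so fractional time carries over.
        self.last_leak += due / self.leak_rate
        released = []
        while self.queue and len(released) < due:
            released.append(self.queue.popleft())
        return released
```

A worker loop would call `drain()` on a timer and process whatever it returns. Compared with the token bucket, the output here is smoothed: a burst of arrivals is accepted up to `capacity` but still leaves at `leak_rate`.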

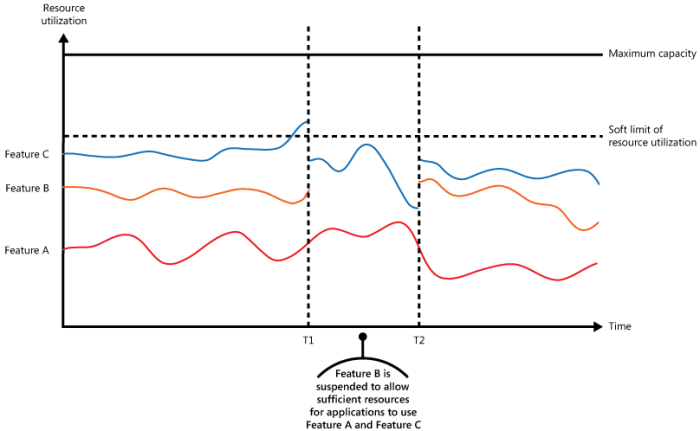

Dynamic Throttling Mechanism

A dynamic throttling mechanism adapts to varying traffic loads by adjusting the rate limits in real-time. This approach ensures optimal resource utilization and responsiveness under fluctuating workloads.

- Monitor Traffic Load: Track the incoming request rate and other relevant metrics to determine the current workload.

- Adjust Rate Limits: Based on the observed traffic load, dynamically adjust the rate limits to maintain system performance. Increase the rate limits during low load periods and decrease them during high load periods.

- Implement Feedback Loops: Develop a system for monitoring the system’s response time and resource utilization to provide feedback to the throttling mechanism. Adjustments are made based on the observed system performance.
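One simple way to realize such a feedback loop is an additive-increase, multiplicative-decrease (AIMD) rule driven by observed latency. The text above does not prescribe this particular rule; it is swapped in here as a well-known example. The class name `AdaptiveLimit`, the latency target, and the step sizes are all assumptions for illustration.

```python
class AdaptiveLimit:
    """Adjust a rate limit from latency feedback using an AIMD rule."""

    def __init__(self, initial, minimum, maximum, target_latency_ms,
                 increase_step=1.0, decrease_factor=0.5):
        self.limit = float(initial)
        self.minimum = minimum
        self.maximum = maximum
        self.target_latency_ms = target_latency_ms
        self.increase_step = increase_step
        self.decrease_factor = decrease_factor

    def record(self, observed_latency_ms):
        """Feed one latency observation and return the updated limit."""
        if observed_latency_ms > self.target_latency_ms:
            # System is struggling: cut the limit sharply, but not below the floor.
            self.limit = max(self.minimum, self.limit * self.decrease_factor)
        else:
            # System is healthy: probe upward gently, but not above the ceiling.
            self.limit = min(self.maximum, self.limit + self.increase_step)
        return self.limit
```

The resulting `limit` would be passed to whichever limiter enforces the rate, for example as the refill rate of a token bucket. Cutting quickly and recovering slowly is a conservative choice that favors stability over throughput.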

Comparison of Throttling Algorithms

| Algorithm | Pros | Cons |
|---|---|---|
| Token Bucket | Fair allocation, handles bursts | Implementation complexity |
| Leaky Bucket | Simple implementation | Cannot handle bursts effectively |
| Dynamic Throttling | Adapts to changing loads | Requires complex monitoring and feedback loops |

Choosing the Right Throttling Strategy

Selecting the appropriate throttling strategy is crucial for maintaining system stability and performance. The choice depends heavily on the specific characteristics of the application, the expected load, and the desired level of responsiveness. A well-chosen strategy ensures that the system can handle fluctuating demands without overwhelming resources or sacrificing user experience. Understanding the trade-offs between different throttling approaches, and the performance implications of various algorithms, is key to effective implementation.

The optimal strategy will balance resource utilization, responsiveness, and scalability. Factors like request volume, resource availability, and desired latency levels play a significant role in this decision.

Factors to Consider When Selecting a Throttling Strategy

Several factors influence the selection of a throttling strategy. These include the system’s architecture, the nature of the requests being throttled, and the anticipated load. A comprehensive understanding of these elements is essential for making an informed decision.

- Application Architecture: The underlying architecture of the application dictates the feasibility and effectiveness of different throttling strategies. Microservices, for instance, might benefit from per-service throttling, whereas a monolithic application might utilize a global throttling mechanism. A thorough understanding of the application’s components is vital.

- Request Characteristics: The nature of requests impacts the suitability of throttling strategies. If requests have varying resource consumption patterns, a dynamic throttling mechanism might be more appropriate than a fixed rate limit. Furthermore, the frequency and burstiness of requests influence the strategy’s effectiveness.

- Anticipated Load: Predicting future load patterns is crucial. A strategy that effectively handles peak loads may be inefficient during periods of low activity. Thorough load testing and analysis help identify the optimal throttling approach for various load conditions.

Trade-offs Between Throttling Approaches

Different throttling strategies come with their own set of advantages and disadvantages. A careful evaluation of these trade-offs is essential for selecting the most suitable approach.

- Fixed Rate Limiting: Simple to implement, but can be inefficient during periods of low activity. It might not adequately address bursts of high-volume requests, potentially leading to system instability during spikes.

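- Leaky Bucket: Handles bursts better than fixed rate limiting in the sense that a burst is buffered (up to the bucket’s capacity) rather than rejected outright, but requests still leave at a fixed rate. Bursts are therefore smoothed and delayed, not served immediately, which may not be ideal for highly variable workloads.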

- Token Bucket: Offers more flexibility in managing bursts and variable workloads compared to leaky bucket. It allows for a configurable rate and burst capacity, making it suitable for applications with unpredictable or fluctuating demands.

- Dynamic Throttling: Adapts to changing load conditions, providing a more sophisticated approach. However, its implementation can be more complex and require more sophisticated monitoring and adjustment mechanisms.

Performance Implications of Throttling Algorithms

The performance of a throttling algorithm is directly linked to its implementation and the chosen strategy. Overhead introduced by the throttling mechanism needs careful consideration.

- Overhead Considerations: The computational overhead of the throttling algorithm should be minimized to avoid performance bottlenecks. Efficient data structures and algorithms are crucial for achieving optimal performance.

- Latency Impacts: Throttling mechanisms can introduce latency, especially during periods of high load. The impact of latency on user experience needs to be evaluated for each strategy.

- Resource Consumption: Different strategies consume varying amounts of system resources (CPU, memory). Strategies that consume fewer resources are more suitable for resource-constrained environments.

Efficiency and Scalability of Throttling Strategies

The efficiency and scalability of a throttling strategy directly influence the system’s overall performance and ability to handle increasing loads.

- Scalability Considerations: The strategy should scale effectively as the application’s load increases. Strategies that are not scalable can become performance bottlenecks under high loads.

- Resource Utilization: The resource utilization of a throttling strategy is crucial for maintaining system performance. Strategies that minimize resource usage are more efficient.

Comparison of Throttling Strategies

The following table summarizes the different throttling strategies and their suitability for various use cases.

| Strategy | Suitability | Pros | Cons |
|---|---|---|---|
| Fixed Rate Limiting | Simple applications with consistent load | Easy to implement | Inefficient during low load, vulnerable to bursts |
| Leaky Bucket | Applications with some burstiness | Handles bursts better than fixed rate | Still relies on fixed rate |
| Token Bucket | Applications with unpredictable load | Handles bursts effectively, configurable rate | More complex to implement |
| Dynamic Throttling | Applications with highly variable load | Adapts to changing load | More complex implementation, requires monitoring |

Monitoring and Evaluating Throttling

Effective throttling mechanisms require ongoing monitoring and evaluation to ensure they function as intended and remain aligned with system needs. This involves understanding how to track the performance of the throttling system and address any potential bottlenecks or issues that arise. Thorough analysis allows for adjustments to optimize resource allocation and user experience. Monitoring and evaluation provide critical insights into the effectiveness of throttling, enabling proactive adjustments to prevent performance degradation or resource exhaustion.

This proactive approach ensures that the system maintains optimal performance under various load conditions.

Methods for Monitoring Throttling Effectiveness

Understanding the different methods for monitoring throttling effectiveness is crucial for maintaining system performance. Various techniques are employed to assess the performance of the throttling mechanism and identify potential areas for improvement. These include:

- Log Analysis: Examining system logs for errors, warnings, or unusual patterns related to throttling can highlight potential issues. Analyzing log data, especially over time, can reveal trends in throttling behavior and identify specific events that might indicate a problem. For instance, a sudden increase in throttling events or a particular type of error can signal a bottleneck.

- Performance Metrics Collection: Gathering metrics like request latency, throughput, error rates, and resource utilization (CPU, memory, network) during and after implementing throttling provides valuable data. These metrics, when correlated with throttling activity, offer insight into the impact of the throttling strategy. Monitoring these metrics before and after implementing a throttling mechanism allows for a comprehensive analysis of its impact on system performance.

- Real-time Monitoring Tools: Employing tools to track key performance indicators (KPIs) in real-time allows for quick detection of performance anomalies. Real-time data analysis enables rapid responses to changes in system load or throttling behavior. This continuous monitoring provides valuable feedback on how the throttling strategy adapts to varying conditions.

Metrics for Evaluating Throttling Performance

A comprehensive evaluation of throttling performance necessitates the use of appropriate metrics. These metrics allow for a quantitative assessment of the throttling mechanism’s effectiveness.

- Request Latency: Measuring the time it takes for requests to be processed is crucial. Lower latency indicates better performance, and any significant increase in latency after implementing throttling warrants investigation. High latency might indicate that the throttling mechanism is introducing unnecessary delays.

- Throughput: Evaluating the number of requests processed per unit of time allows for a comparison before and after throttling. A significant drop in throughput might indicate that the throttling mechanism is overly restrictive. Conversely, a steady or increasing throughput, despite throttling, suggests the mechanism is well-tuned.

- Error Rate: Tracking the frequency of errors related to throttling helps identify potential issues in the implementation. A high error rate could indicate that the throttling mechanism is malfunctioning or inappropriately restricting legitimate requests.

- Resource Utilization: Monitoring CPU, memory, and network usage before and after implementing throttling allows for an assessment of the impact on system resources. High resource utilization can signify a bottleneck in the throttling mechanism or an issue requiring further optimization.
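As a rough illustration of collecting these metrics in-process, the sketch below counts allowed and rejected requests and tracks latency. The class name `ThrottleStats` and its methods are hypothetical. In practice these values would usually be exported to a monitoring system rather than kept in a Python object.

```python
class ThrottleStats:
    """Accumulate simple counters for evaluating a throttle."""

    def __init__(self):
        self.allowed = 0
        self.rejected = 0
        self.latencies_ms = []

    def record(self, allowed, latency_ms=None):
        """Record one throttling decision and, if known, the request latency."""
        if allowed:
            self.allowed += 1
        else:
            self.rejected += 1
        if latency_ms is not None:
            self.latencies_ms.append(latency_ms)

    def rejection_rate(self):
        """Fraction of requests rejected by the throttle (0.0 when none seen)."""
        total = self.allowed + self.rejected
        return self.rejected / total if total else 0.0

    def mean_latency_ms(self):
        """Average latency of the requests that reported one (0.0 when none did)."""
        if not self.latencies_ms:
            return 0.0
        return sum(self.latencies_ms) / len(self.latencies_ms)
```

A rising `rejection_rate()` alongside low resource utilization suggests the limit is too strict. A low rejection rate alongside rising latency suggests it is too loose.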

Identifying and Addressing Bottlenecks in Throttling Implementation

Identifying bottlenecks in throttling implementation is essential for optimization. Bottlenecks can arise from various sources, including the throttling mechanism itself or external dependencies.

- Throttling Mechanism Design: Review the code and algorithms to determine whether there are performance bottlenecks within the throttling logic. Ensure the throttling mechanism is designed with scalability in mind to handle expected load variations. For example, a poorly designed queue or lock mechanism within the throttling implementation can severely limit performance.

- External Dependencies: Evaluate whether dependencies like database queries or external APIs are contributing to delays. Potential bottlenecks in external systems might necessitate changes in throttling strategies to mitigate the impact of external delays. For example, if an external API does not throttle its own traffic well and slows down under load, limiting the rate of outbound calls to it can keep those delays from spreading into the application.

Troubleshooting Throttling Issues

Troubleshooting throttling issues requires a systematic approach to identify and rectify the root cause. A clear procedure ensures efficient resolution.

- Reproduce the Issue: Attempt to recreate the problem to understand the conditions under which it occurs. Understanding the circumstances when throttling issues arise is vital for effective troubleshooting.

- Analyze Logs and Metrics: Review logs and performance metrics to pinpoint specific events or patterns related to the throttling issue. Correlating these metrics with the problem helps in isolating the root cause.

- Isolate the Source: Identify the specific component or section of the code causing the issue. This step is crucial for targeting the fix directly.

- Implement and Test Fixes: Implement solutions to address the identified bottleneck or issue and thoroughly test the changes to confirm their effectiveness.

Monitoring Tools for Throttling Evaluation

Choosing the right monitoring tool is essential for effectively tracking throttling performance. The table below highlights various tools and their capabilities.

| Tool | Use Case |
|---|---|
| Prometheus | Collect and expose metrics, providing insights into request latency, throughput, and resource utilization. |
| Grafana | Visualize metrics collected by Prometheus, allowing for a clear understanding of throttling performance over time. |
| Datadog | Real-time monitoring and alerting capabilities for identifying performance issues related to throttling and other system components. |
| New Relic | Comprehensive monitoring for applications, including detailed metrics on application performance, resource utilization, and throttling behavior. |

Handling Throttling Exceptions

Throttling mechanisms, while crucial for resource management, can sometimes encounter unexpected situations, leading to exceptions. Proper exception handling is paramount to maintaining application stability and providing a smooth user experience. Effective error management ensures that applications continue to function gracefully even when faced with throttling limitations. Robust exception handling for throttling involves anticipating potential issues and designing mechanisms to gracefully degrade application behavior in response to throttling limitations.

This proactive approach safeguards against application crashes or unresponsive behavior under stress.

Exception Handling Strategies

Effective exception handling for throttling requires a structured approach to deal with various failure scenarios. This involves anticipating potential errors, such as network problems, database issues, or service outages, which can disrupt throttling operations. The key is to implement mechanisms that provide a fallback strategy when throttling operations fail.

- Error Logging: Comprehensive logging of throttling exceptions is vital for debugging and performance analysis. Detailed logs capture crucial information, including the type of exception, the affected resource, and the timestamp of the failure. This data allows for proactive identification and resolution of recurring issues. By systematically recording exceptions, developers gain insights into patterns and potential bottlenecks in the throttling mechanism. Furthermore, this data allows for the identification of underlying causes and the mitigation of future failures.

- Retry Mechanisms: Implementing retry mechanisms for transient failures can significantly improve application reliability. A configurable retry strategy can determine the number of attempts and the delay between retries. These mechanisms can be used to handle temporary network issues or database lock conflicts, preventing unnecessary application downtime and improving the responsiveness of throttling operations. The key is to ensure that retry attempts are not excessive, to avoid overwhelming the system with requests and potentially exacerbating the problem.

- Graceful Degradation: A critical aspect of exception handling involves gracefully degrading application behavior when throttling limits are reached. This strategy minimizes the impact on users by providing alternative functionalities or simplified responses. For example, if a user attempts an operation that exceeds the throttling limit, the application can return a controlled error message indicating the temporary limitation, instead of letting the request hang or fail without explanation. This keeps the user experience predictable, even under heavy load.
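The retry strategy described above can be sketched as exponential backoff with jitter. The exception type `ThrottledError`, the function name `call_with_retry`, and the default attempt count and delays are hypothetical values chosen for this example. The cap on attempts is what keeps retries from adding to the overload.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical exception raised when a call is rejected by a throttle."""


def call_with_retry(operation, max_attempts=4, base_delay=0.5, max_delay=8.0,
                    sleep=time.sleep):
    """Call `operation`, retrying on ThrottledError with capped, jittered backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # retries exhausted; let the caller degrade gracefully
            # The backoff ceiling doubles each attempt, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Randomizing the wait spreads out clients that were throttled together.
            sleep(random.uniform(0, ceiling))
```

The `sleep` parameter exists only so the function can be exercised without real delays. Callers would normally leave it at its default.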

Implementing Error Handling Mechanisms

Thorough error handling mechanisms are essential to minimize the negative impact of throttling failures on the overall application. These mechanisms should be integrated seamlessly into the throttling logic, allowing the application to continue functioning without major disruptions. Consider implementing specific error handling strategies for different types of throttling exceptions.

- Rate Limiting Exceeded: When the rate limit is exceeded, the application should return a specific error response, such as an HTTP 429 (Too Many Requests) status code. This response clearly communicates to the client that their request has been rejected due to the rate limit. The response should also contain information about the expected wait time, for example in a `Retry-After` header, or the appropriate retry strategy.

- Service Unavailable: If the throttling service is unavailable, the application should return a specific error response, such as an HTTP 503 (Service Unavailable) status code. This informs the client about the temporary unavailability of the service and provides guidance for possible retry attempts. Where it is known, the response should communicate the expected time until the service is available.

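- Resource Exhaustion: When a resource is exhausted, the application should return a specific error response, indicating the temporary unavailability of the resource. This response should provide information about the expected time for the resource to become available again, or suggest alternative actions for the user. An HTTP 507 (Insufficient Storage) could be appropriate when the exhausted resource is storage, since that status code originates in WebDAV and refers to the server being unable to store what the request needs. For other resources, an HTTP 503 with a `Retry-After` header is the more conventional choice.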
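For the rate-limit case, a throttled response might be assembled as below. This is a framework-agnostic sketch: the function name `too_many_requests`, the `(status, headers, body)` return shape, and the JSON field names are assumptions for illustration. The 429 status and the `Retry-After` header are standard HTTP.

```python
import json
import math


def too_many_requests(retry_after_seconds):
    """Build a (status, headers, body) triple for a throttled request."""
    # Retry-After is expressed in whole seconds here; round up so that
    # clients do not retry before the limit has actually reset.
    wait = max(1, math.ceil(retry_after_seconds))
    headers = {
        "Retry-After": str(wait),
        "Content-Type": "application/json",
    }
    # Hypothetical body layout; field names are not from any specification.
    body = json.dumps({"error": "rate_limited", "retry_after_seconds": wait})
    return 429, headers, body
```

The same shape works for a 503 response by changing the status code. Most web frameworks accept a status, a header mapping, and a body in some form, so adapting this to a specific framework is usually a small step.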

User Notifications

Communicating throttling limitations to users is critical for maintaining a positive user experience. Clear and concise notifications help users understand the reason for delays or limitations, promoting better compliance and reducing frustration.

- Clear Error Messages: When a user encounters a throttling limitation, provide a clear and concise error message explaining the situation. Avoid technical jargon and focus on providing actionable information to the user.

- Visual Feedback: Visual cues, such as loading indicators or progress bars, can help users understand the status of their request. This enhances the user experience by providing real-time feedback.

- Suggestion of alternative actions: When a throttling limit is reached, provide the user with alternative actions to take, such as waiting a specific amount of time before retrying or using alternative functionalities.

Exception Handling Strategies Table

| Exception Type | Error Response | Handling Strategy |
|---|---|---|
| Rate Limiting Exceeded | HTTP 429 | Return error message, provide wait time, or suggest retry |
| Service Unavailable | HTTP 503 | Inform user, suggest retry after delay |
| Resource Exhaustion | HTTP 507 (storage) or HTTP 503 (other resources) | Inform user, provide alternative options |

Security Considerations in Throttling

Implementing throttling mechanisms is crucial for resource management and preventing abuse, but it also introduces security considerations that must be addressed proactively. Properly designed throttling systems protect applications from overload and maintain responsiveness, while also safeguarding against malicious actors seeking to exploit vulnerabilities. This section delves into the security implications of throttling, focusing on abuse prevention, DoS attack mitigation, and vulnerability identification.

Security Implications of Throttling Mechanisms

Throttling mechanisms, while designed to protect systems, can inadvertently create vulnerabilities if not implemented carefully. A poorly configured throttling system might expose sensitive information about the system’s architecture or resource limits, potentially aiding attackers in crafting more effective attacks. Incorrectly applied throttling rules can also lead to unexpected operational disruptions, impacting legitimate users. A critical aspect of secure throttling is to ensure that the rules are transparent, consistent, and proportionate to the potential threats.

Preventing Abuse and Misuse of Throttling Mechanisms

Preventing abuse of throttling mechanisms requires a multi-faceted approach. First, the throttling rules should be designed to resist manipulation. For example, rules keyed on IP address should account for proxies and shared addresses, and the user agent string should not be relied on by itself because clients can set it to any value. Second, careful consideration should be given to the granularity of throttling: overly broad rules can inadvertently impact legitimate users, so it’s important to strike a balance between protecting resources and ensuring a positive user experience. Finally, logging and monitoring are essential for identifying and responding to suspicious activity related to throttling rules.

Protecting Against Denial-of-Service (DoS) Attacks

Throttling mechanisms can be effectively incorporated into a comprehensive defense strategy against DoS attacks. By limiting the rate of requests from a specific source, throttling can prevent a flood of traffic from overwhelming the system. This can include rate limiting based on IP address, user ID, or other relevant identifiers. Implementing adaptive throttling, which adjusts the rate limits based on observed traffic patterns, can provide even greater resilience against sophisticated DoS attacks.

Furthermore, combining throttling with other security measures, such as intrusion detection systems and firewall rules, can significantly enhance overall system protection.
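As a rough illustration of limiting requests per source, the sketch below keeps a sliding window of timestamps for each client identifier. The class name `SlidingWindowLimiter` and its parameters are assumptions made for this example, the IP addresses come from ranges reserved for documentation, and the current time is passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client in any `window_seconds` span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)  # client_id -> request timestamps

    def allow(self, client_id, now):
        timestamps = self.history[client_id]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10)
flood = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 4)]
other = limiter.allow("198.51.100.2", now=4)    # A different client is unaffected
later = limiter.allow("203.0.113.7", now=10.5)  # The oldest request has expired
print(flood, other, later)  # [True, True, True, False, False] True True
```

The same structure works with a user ID or API key as the identifier. A production limiter would also need to evict idle clients so the history does not grow without bound.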

Security Vulnerabilities Related to Throttling and Mitigation Strategies

Several security vulnerabilities can arise from improperly implemented throttling. One potential vulnerability is the disclosure of resource limits. An attacker might exploit a poorly designed throttling mechanism to deduce the maximum allowable requests per second, enabling them to craft targeted attacks. To mitigate this, internal resource limits should not be exposed directly in the throttling rules.

Another vulnerability is the possibility of circumvention through the use of proxies or automated tools. To mitigate this, throttling should be multi-layered, encompassing different access points and incorporating techniques like CAPTCHAs to deter automated attacks.

Security Best Practices for Throttling Implementations

| Security Best Practice | Description |
|---|---|
| Rate Limiting | Implement fine-grained rate limits based on user, IP address, or other relevant identifiers. |
| Adaptive Throttling | Adjust rate limits dynamically based on observed traffic patterns to adapt to evolving attack vectors. |
| Robust Input Validation | Validate all inputs to prevent manipulation of throttling rules. |
| Transparency and Documentation | Document throttling policies and mechanisms clearly to avoid confusion and ensure fairness. |
| Monitoring and Logging | Monitor throttling mechanisms for anomalies and log suspicious activities. |

Scalability and Performance Optimization

Throttling mechanisms are crucial for ensuring the stability and responsiveness of high-traffic applications. Proper design and implementation are vital to maintain performance under pressure. This section details strategies for building scalable throttling systems, optimized for various hardware architectures and load conditions.

Effective throttling mechanisms not only limit the rate of requests but also gracefully manage surges in traffic without compromising application availability. This requires a deep understanding of the system’s architecture and the ability to adapt to fluctuating demands.

Designing Throttling Mechanisms for High-Traffic Applications

To handle high-traffic applications, throttling mechanisms must be designed with scalability in mind. This involves using distributed systems and techniques to manage the throttling logic across multiple servers. Choosing the right data structures and algorithms is critical for efficient request processing. Redis, for example, provides a powerful in-memory data store suitable for rate limiting.

Strategies for Improving Throttling Implementation Scalability

Several strategies can enhance the scalability of throttling implementations. These include employing caching to store frequently accessed throttling data, using message queues to decouple throttling logic from the application’s core processing, and implementing a distributed throttling system.

- Employing caching significantly reduces the load on the throttling service by storing frequently accessed throttling data. This technique accelerates request processing and improves overall performance, especially during periods of high traffic.

- Utilizing message queues effectively decouples the throttling logic from the application’s core processing. This separation allows the throttling system to scale independently, enhancing the overall system’s responsiveness and resilience to spikes in traffic.

- A distributed throttling system distributes the throttling logic across multiple servers. This approach enhances scalability and fault tolerance, ensuring that the system can handle a large volume of requests without performance degradation.

Importance of Load Balancing in Conjunction with Throttling

Load balancing plays a critical role in ensuring a robust throttling system. By distributing incoming requests across multiple servers, load balancers prevent any single server from becoming overwhelmed, thus maintaining the overall performance and availability of the application. A properly configured load balancer, combined with effective throttling, forms a powerful defense against overload.

Optimizing Throttling for Different Hardware Architectures

Throttling mechanisms need to be tailored to the specific hardware architecture of the application. Consider the processing power, memory capacity, and network bandwidth of the system. For example, a system with limited processing power might benefit from a simpler throttling algorithm. A cloud-based architecture, with its elastic scalability, might leverage cloud-native services for rate limiting.

- For systems with limited processing power, simpler throttling algorithms can improve performance by reducing the computational overhead. This approach prioritizes efficiency over complex mechanisms, particularly in resource-constrained environments.

- Cloud-based architectures, with their inherent scalability, can leverage cloud-native services for rate limiting. These services often handle the complexity of distributing and managing throttling logic across multiple servers, reducing the burden on the application itself.

Scaling Techniques for Throttling Implementations

Different scaling techniques can be applied to optimize throttling implementations. Choosing the right technique depends on the specific requirements of the application and the expected traffic patterns.

| Scaling Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Horizontal Scaling | Adding more servers to distribute the load. | Improved scalability, high availability. | Increased infrastructure costs, potential complexity in data consistency. |
| Vertical Scaling | Increasing the resources of a single server. | Simpler implementation, potentially faster results. | Limited scalability, reaching a maximum capacity. |
| Caching | Storing frequently accessed data to reduce server load. | Improved performance, reduced latency. | Requires careful design to avoid cache misses. |

Practical Use Cases of Throttling

Throttling is a vital technique for ensuring application stability, preventing service outages, and maintaining a positive user experience. It controls the rate at which requests are processed, preventing overload and maintaining responsiveness. By understanding its diverse applications, developers can effectively optimize performance and reliability.

Implementing throttling mechanisms is crucial in various domains, from safeguarding APIs against abuse to managing network traffic and load balancing. The strategic use of throttling ensures that resources are utilized efficiently and that systems remain resilient to unexpected surges in demand.

API Design and Protection

Throttling is paramount in API design for mitigating abuse and maintaining service quality. API abuse, characterized by excessive or malicious requests, can cripple an API, rendering it unavailable to legitimate users. By implementing rate limits, developers can prevent such abuse and safeguard their APIs against denial-of-service attacks.

- Protecting against brute-force attacks: APIs often receive a high volume of requests. Throttling caps how many attempts, such as login or token guesses, a client can make in a given period, and it prevents malicious actors from overwhelming the API with requests, which could result in the service being rendered unavailable.

- Maintaining fair access for all users: Throttling ensures that all users have a fair chance to access the API without being blocked or delayed by excessive requests from other users.

- Preventing resource exhaustion: High volumes of requests can consume server resources, leading to slowdowns or complete outages. Throttling helps to maintain server capacity, ensuring the service remains available for legitimate users.

Network Management and Resource Allocation

Throttling plays a significant role in network management, ensuring efficient bandwidth utilization and preventing congestion. In scenarios where network traffic is unpredictable or fluctuates dramatically, throttling can help maintain stable network performance.

- Controlling network traffic flow: Throttling can be used to manage network traffic, ensuring that resources are allocated appropriately and congestion is avoided.

- Preventing network overload: Throttling helps prevent network overload by limiting the rate of data transmission, ensuring the network doesn’t become overwhelmed by sudden increases in traffic.

- Ensuring consistent service quality: By throttling network traffic, applications can maintain a consistent level of service quality, even when dealing with fluctuating network conditions.

Load Balancing and System Stability

Throttling complements load balancing strategies. A load balancer distributes incoming requests across multiple servers, while throttling caps the rate of requests that each server accepts; together they prevent individual servers from becoming overloaded, thereby enhancing system stability.

- Preventing server overload: Throttling helps to prevent any single server from being overwhelmed by a surge in requests, which could lead to performance degradation or complete failure.

- Improving system responsiveness: By rejecting or delaying excess requests before they queue up on the servers behind the load balancer, throttling helps to maintain system responsiveness, even under heavy load.

- Ensuring high availability: Throttling contributes to the overall high availability of the system, as individual servers are protected from being overloaded, preventing service interruptions.

Practical Use Cases Table

| Use Case | Domain | Impact of Throttling |
|---|---|---|
| Protecting a social media API from abuse | API Design | Prevents denial-of-service attacks, maintains fair access, prevents resource exhaustion. |
| Managing network traffic in a high-volume e-commerce site | Network Management | Ensures consistent service quality, prevents network overload, and controls bandwidth usage. |
| Preventing a web application from crashing during peak hours | Load Balancing | Maintains system responsiveness, ensures high availability, prevents individual server overload. |

Advanced Throttling Techniques

Advanced throttling techniques move beyond static rate limits, dynamically adjusting to varying demands and resource availability. These methods often incorporate feedback mechanisms and predictive models to optimize performance and prevent overload, ensuring a more resilient and adaptable system. This is particularly crucial in environments with fluctuating traffic patterns or unpredictable resource consumption.

Adaptive Throttling

Adaptive throttling mechanisms dynamically adjust throttling parameters based on real-time system performance metrics. This contrasts with static throttling, which maintains fixed limits regardless of conditions. By observing factors like server load, network latency, and resource utilization, adaptive throttling can proactively reduce or increase the rate limit to maintain optimal system performance. This ensures responsiveness under high loads without sacrificing overall efficiency.
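One common way to realize this kind of adjustment is additive increase, multiplicative decrease (AIMD), a technique borrowed from network congestion control rather than something prescribed by this guide. The sketch below assumes observed latency is the only health signal; the class name `AdaptiveLimit`, the thresholds, and the step sizes are illustrative assumptions.

```python
class AdaptiveLimit:
    """Adjust a rate limit from observed latency using AIMD (illustrative)."""

    def __init__(self, initial, minimum, maximum, target_latency_ms,
                 increase_step=5, decrease_factor=0.5):
        self.limit = initial
        self.minimum = minimum
        self.maximum = maximum
        self.target_latency_ms = target_latency_ms
        self.increase_step = increase_step
        self.decrease_factor = decrease_factor

    def update(self, observed_latency_ms):
        if observed_latency_ms > self.target_latency_ms:
            # System is struggling: cut the limit sharply.
            reduced = int(self.limit * self.decrease_factor)
            self.limit = max(self.minimum, reduced)
        else:
            # System is healthy: probe for more capacity slowly.
            self.limit = min(self.maximum, self.limit + self.increase_step)
        return self.limit


adaptive = AdaptiveLimit(initial=100, minimum=10, maximum=200,
                         target_latency_ms=250)
history = [adaptive.update(ms) for ms in (120, 180, 400, 900, 150)]
print(history)  # [105, 110, 55, 27, 32]
```

Cutting quickly and recovering slowly keeps the limiter from oscillating when the system hovers near its capacity.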

Dynamic Rate Limiting

Dynamic rate limiting extends the concept of adaptive throttling by incorporating more sophisticated techniques to determine the appropriate rate limit. This involves analyzing historical data, current trends, and predicted future demands to tailor the rate limit for specific user groups, applications, or even individual requests. This approach allows for more granular control over resource allocation and ensures that critical functionalities are prioritized. The goal is to avoid bottlenecks and ensure responsiveness even under fluctuating loads.

Machine Learning in Throttling

Machine learning can be integrated into throttling mechanisms to predict future resource needs and proactively adjust throttling parameters. Algorithms can be trained on historical data to identify patterns in resource usage and predict future demands. This enables systems to anticipate potential overload situations and adjust throttling parameters in advance, ensuring smooth operation and preventing performance degradation. For example, a machine learning model could learn the correlation between specific user actions and resource consumption, enabling more precise throttling for those users.

Implementing Dynamic Adjustment of Throttling Parameters

Implementing a system for dynamically adjusting throttling parameters involves several key steps. First, establish key performance indicators (KPIs) to monitor system health and resource utilization. Then, develop algorithms to analyze these metrics and predict future resource needs. Next, integrate these algorithms into the throttling mechanism to automatically adjust rate limits based on the predictions. Finally, establish feedback loops to continuously monitor the effectiveness of the adjustments and refine the algorithms over time. This iterative process allows the system to learn and adapt to changing conditions.
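The steps above can be sketched with a deliberately simple predictor. The example assumes requests per second is the only KPI and uses an exponentially weighted moving average as the "prediction"; a real system might substitute a trained model. The class name `PredictiveThrottle` and all parameter values are hypothetical.

```python
class PredictiveThrottle:
    """Scale a per-client limit down when predicted load exceeds a budget."""

    def __init__(self, capacity_rps, base_limit, alpha=0.5, headroom=0.8):
        self.budget_rps = capacity_rps * headroom  # Load we are willing to accept
        self.base_limit = base_limit
        self.alpha = alpha            # Weight given to the newest measurement
        self.predicted_rps = None

    def observe(self, measured_rps):
        # Steps 1 and 2: record the KPI and update the prediction (EWMA).
        if self.predicted_rps is None:
            self.predicted_rps = float(measured_rps)
        else:
            self.predicted_rps = (self.alpha * measured_rps
                                  + (1 - self.alpha) * self.predicted_rps)
        return self.predicted_rps

    def current_limit(self):
        # Step 3: derive the limit from the prediction.
        if self.predicted_rps is None or self.predicted_rps <= self.budget_rps:
            return self.base_limit
        scale = self.budget_rps / self.predicted_rps
        return max(1, int(self.base_limit * scale))


throttle = PredictiveThrottle(capacity_rps=1000, base_limit=100)
limits = []
for measured in (400, 800, 1600):  # Step 4: feed new measurements back in
    throttle.observe(measured)
    limits.append(throttle.current_limit())
print(limits)  # [100, 100, 72]
```

The smoothing factor `alpha` controls how quickly the limit reacts: higher values follow spikes closely, lower values favor stability.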

Examples of Advanced Throttling Techniques in Action

A streaming service might employ dynamic rate limiting to adjust the bitrate of video streams based on network conditions. During peak hours, the rate limit could be lowered for all users to ensure smooth playback. Conversely, during periods of low traffic, the rate limit could be increased to provide a better user experience.

Similarly, an e-commerce platform could use adaptive throttling to manage the load on its payment gateway. By monitoring transaction volume and server load, the platform can dynamically adjust the rate limit for new transactions, preventing the system from being overwhelmed.

Summary of Advanced Throttling Techniques

| Technique | Description | Applications |
|---|---|---|
| Adaptive Throttling | Dynamically adjusts throttling parameters based on real-time system performance metrics. | High-traffic web applications, cloud services, real-time data processing systems. |
| Dynamic Rate Limiting | Tailors the rate limit based on historical data, trends, and predicted future demands. | E-commerce platforms, online gaming services, social media platforms. |
| Machine Learning in Throttling | Predicts future resource needs and proactively adjusts throttling parameters. | Large-scale distributed systems, streaming services, financial trading platforms. |

Code Examples (Illustrative)

Illustrative code examples are crucial for understanding and applying throttling mechanisms effectively. These examples demonstrate how to integrate throttling logic into existing codebases and highlight different throttling strategies. They provide practical implementations that can be adapted and used in various contexts.

Python Example (Rate Limiting with a Counter)

This Python example showcases a basic rate-limiting mechanism using a counter to track requests within a specific time window.

```python
import time


class RateLimiter:
    """Fixed-window counter: at most `rate` requests per key per `period` seconds."""

    def __init__(self, rate, period):
        self.rate = rate
        self.period = period
        self.windows = {}  # key -> (window_start, request_count)

    def allow(self, key):
        now = time.monotonic()
        start, count = self.windows.get(key, (now, 0))
        if now - start >= self.period:
            start, count = now, 0  # Window expired: start a new one
        if count >= self.rate:
            self.windows[key] = (start, count)
            return False
        self.windows[key] = (start, count + 1)
        return True


# Example usage
limiter = RateLimiter(rate=5, period=60)  # Allow 5 requests per 60 seconds


def rate_limited_function(key):
    if limiter.allow(key):
        print(f"Request allowed for key: {key}")
        # Perform the actual work here
    else:
        print(f"Request rate limited for key: {key}")


for _ in range(10):
    rate_limited_function("client-1")
    time.sleep(0.1)  # Simulate delays between requests
```

This code implements a `RateLimiter` class that tracks requests per key. The `allow` method checks whether a request is within the rate limit: it counts the requests made under a key in the current window and returns `False` once the count reaches `rate`. In the usage example, the first five calls for `client-1` are allowed and the remaining five are rate limited. This example demonstrates a simple counter-based rate limiter, which is suitable for basic rate limiting scenarios.

Integrating Throttling into Existing Codebases

Integrating throttling into existing codebases often involves modifying existing request handling logic. This typically includes adding a throttling layer that checks the rate limit before proceeding with the request. A suitable approach is to use middleware or decorators.
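As one possible shape for the decorator approach, the sketch below wraps a function so that calls beyond a fixed-window limit raise an exception. The names `throttle`, `RateLimitExceeded`, and `handle_request` are invented for this example, and the decorator is not thread-safe as written.

```python
import functools
import time


class RateLimitExceeded(Exception):
    """Raised when a throttled function is called too often."""


def throttle(max_calls, period, clock=time.monotonic):
    """Allow at most `max_calls` calls per `period` seconds (fixed window)."""

    def decorator(func):
        window_start = clock()
        calls = 0

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            nonlocal window_start, calls
            now = clock()
            if now - window_start >= period:
                window_start, calls = now, 0  # Start a new window
            if calls >= max_calls:
                raise RateLimitExceeded(
                    f"{func.__name__} is limited to {max_calls} calls per {period}s")
            calls += 1
            return func(*args, **kwargs)

        return wrapper

    return decorator


@throttle(max_calls=2, period=60)
def handle_request(payload):
    return f"processed {payload}"


results = []
for payload in ("a", "b", "c"):
    try:
        results.append(handle_request(payload))
    except RateLimitExceeded:
        results.append("rejected")
print(results)  # ['processed a', 'processed b', 'rejected']
```

Because the limit lives in the decorator, the existing function body stays untouched, which keeps the change to the codebase small.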

Token Bucket Throttling (Pseudocode)

This pseudocode illustrates a token bucket throttling implementation.

```
// Define a token bucket with a capacity and refill rate
tokenBucket = {
    capacity: 10,
    tokens: 10,
    refillRate: 2,          // One token is added every 2 seconds
    lastRefill: time.now()
}

function allowRequest(key) {
    // Calculate time since last refill
    timeDiff = time.now() - tokenBucket.lastRefill

    // Refill tokens based on the rate, never exceeding capacity
    if (timeDiff >= tokenBucket.refillRate) {
        intervals = Math.floor(timeDiff / tokenBucket.refillRate)
        refillAmount = Math.min(tokenBucket.capacity - tokenBucket.tokens, intervals)
        tokenBucket.tokens += refillAmount
        // Advance by whole intervals so partial progress is not lost
        tokenBucket.lastRefill += intervals * tokenBucket.refillRate
    }

    // Check if tokens are available
    if (tokenBucket.tokens > 0) {
        tokenBucket.tokens -= 1
        return true   // Request allowed
    } else {
        return false  // Request denied
    }
}
```

This pseudocode demonstrates the logic of a token bucket implementation. It calculates the tokens available and refills them based on the `refillRate` and `capacity`. For brevity, a single bucket is shared by all callers; a per-client limiter would keep one bucket per `key`.
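For readers who want something runnable, here is a minimal Python counterpart to the token bucket logic. It is a sketch under stated assumptions, not a translation of the pseudocode: it refills continuously at `refill_per_second` tokens per second instead of in whole intervals, and the class name `TokenBucket` and the injectable `clock` parameter are choices made for this example.

```python
import time


class TokenBucket:
    """Token bucket with continuous refill (illustrative sketch)."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)  # Start with a full bucket
        self.last_refill = clock()

    def allow(self, cost=1):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# A fake clock makes the demonstration deterministic.
current_time = [0.0]
bucket = TokenBucket(capacity=2, refill_per_second=0.5,
                     clock=lambda: current_time[0])

burst = [bucket.allow() for _ in range(3)]  # The third call finds the bucket empty
current_time[0] = 2.0                       # Two seconds later: one token refilled
after_wait = [bucket.allow(), bucket.allow()]
print(burst, after_wait)  # [True, True, False] [True, False]
```

The capacity sets how large a burst is tolerated, while the refill rate sets the sustained throughput. Tuning the two separately is the main advantage over a plain counter.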

Web Application Example (Conceptual)

A web application might use a middleware or a filter to intercept incoming requests. This middleware would call the `allowRequest` function (from the previous example) to check if the request should be processed or blocked.
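In Python, one way to express this idea is a WSGI middleware that consults an allow function before passing the request on. The sketch below is conceptual: `ThrottleMiddleware`, `hello_app`, and the counter-based `allow_request` stand-in are hypothetical names for this example, and a real deployment would plug in a proper limiter such as a token bucket.

```python
from collections import Counter


class ThrottleMiddleware:
    """WSGI middleware that rejects requests the limiter does not allow."""

    def __init__(self, app, allow_request):
        self.app = app
        self.allow_request = allow_request  # Callable: client key -> bool

    def __call__(self, environ, start_response):
        client = environ.get("REMOTE_ADDR", "unknown")
        if self.allow_request(client):
            return self.app(environ, start_response)
        body = b"Too many requests. Please retry later.\n"
        start_response("429 Too Many Requests", [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
            ("Retry-After", "1"),
        ])
        return [body]


def hello_app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"hello\n"]


# Stand-in limiter for the demo: permits the first two requests per client.
seen = Counter()


def allow_request(key):
    seen[key] += 1
    return seen[key] <= 2


app = ThrottleMiddleware(hello_app, allow_request)

statuses = []
for _ in range(3):
    app({"REMOTE_ADDR": "203.0.113.7"}, lambda status, headers: statuses.append(status))
print(statuses)  # ['200 OK', '200 OK', '429 Too Many Requests']
```

Placing the check in middleware means every route is protected by default, and individual handlers do not need to know that throttling exists.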

Last Recap

In conclusion, implementing throttling patterns offers a powerful approach to optimizing application performance and security. By carefully selecting the right strategy, considering security implications, and implementing robust monitoring and evaluation, you can ensure your application’s stability and scalability. This guide has provided a structured approach to understanding and implementing throttling, offering a comprehensive view of its various aspects, and enabling developers to build robust and resilient applications.

Expert Answers

What are the common causes of throttling failures?

Throttling failures can stem from various issues, including inadequate resource allocation, insufficient rate limits, and faulty implementation of throttling algorithms. Incorrect configuration of the throttling mechanism, a lack of proper monitoring, and inadequate error handling can also contribute to problems.

How do I choose the right throttling algorithm for my application?

The optimal throttling algorithm depends on your application’s specific needs and characteristics. Factors to consider include the expected traffic volume, the desired response time, and the trade-offs between accuracy and simplicity. Fixed-window counters, token bucket, and leaky bucket are common options, each with strengths and weaknesses.

What are the security implications of implementing throttling?

Throttling mechanisms can inadvertently create security vulnerabilities if not implemented correctly. Potential risks include allowing malicious actors to exploit the throttling mechanism to bypass security controls, or unintentionally blocking legitimate users due to overly aggressive throttling settings. Proper design and careful configuration are essential to mitigate these risks.

How can I ensure scalability and performance in my throttling implementation?

Scalability in throttling implementations necessitates careful consideration of factors like load balancing and the use of caching strategies. Dynamic adjustment of throttling parameters, based on real-time traffic conditions, can significantly enhance the system’s adaptability and overall performance.