Embarking on a journey to understand modern communication protocols, we’ll explore the intricacies of gRPC and its compelling advantages over the well-established REST. This guide serves as a comprehensive resource, offering insights into the core concepts, practical applications, and strategic considerations that define the choice between these two powerful technologies.

gRPC, a high-performance, open-source framework, leverages Protocol Buffers for efficient data serialization and HTTP/2 for transport, promising superior performance in various scenarios. REST, built upon the principles of HTTP, offers simplicity and broad compatibility. This exploration aims to equip you with the knowledge to make informed decisions, optimizing your applications for speed, scalability, and maintainability.

Introduction to gRPC

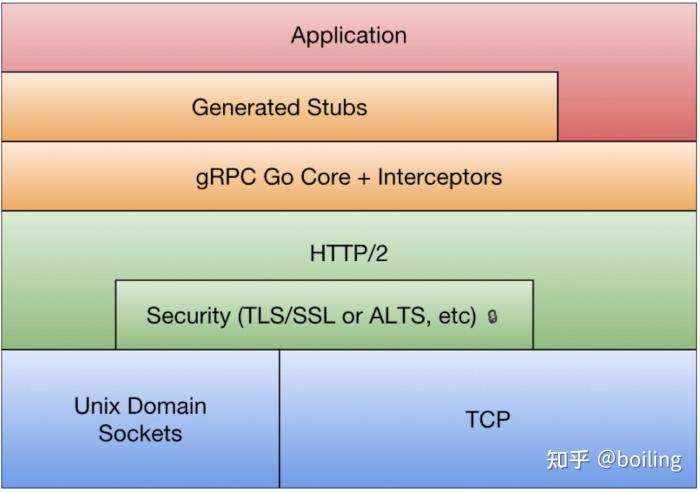

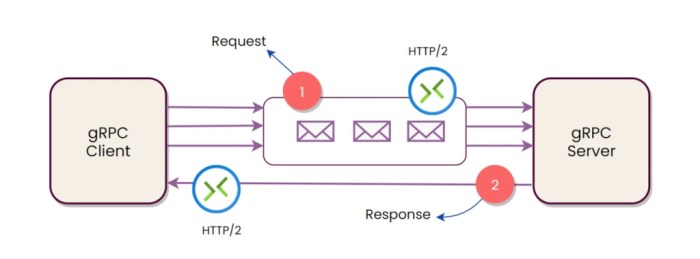

gRPC is a modern, high-performance framework for building APIs that enables efficient communication between services, applications, and devices. It's designed to be language-agnostic and supports many programming languages, making it a versatile choice for distributed systems. gRPC is built on top of HTTP/2, which provides features like multiplexing, bidirectional streaming, and header compression; these can substantially improve performance compared to traditional REST APIs running over HTTP/1.1. In essence, gRPC is a remote procedure call (RPC) framework.

It allows client applications to directly call methods on server applications as if they were local objects. This abstraction simplifies the development of distributed applications by hiding the complexities of network communication. gRPC uses Protocol Buffers (protobuf) as its Interface Definition Language (IDL) to define service interfaces and message structures. This approach ensures efficient data serialization and deserialization, contributing to gRPC’s performance advantages.

Core Concept and Purpose of gRPC

The fundamental concept of gRPC revolves around enabling seamless communication between different software components, regardless of their location or programming language. Its primary purpose is to facilitate the creation of high-performance, scalable, and efficient distributed systems. gRPC achieves this by providing a standardized way to define service interfaces and data structures, and by leveraging the advanced features of HTTP/2 for underlying communication.

This approach streamlines the development process, reduces network overhead, and ultimately improves the overall performance of the system.

Definition of gRPC

gRPC is a high-performance, open-source RPC framework that utilizes Protocol Buffers for defining service interfaces and message payloads. Key features of gRPC include:

- HTTP/2-based Communication: gRPC leverages HTTP/2 for its transport layer, enabling features such as multiplexing (multiple requests over a single connection), header compression, and bidirectional streaming. These features contribute to reduced latency and improved efficiency.

- Protocol Buffers (protobuf): gRPC employs Protocol Buffers as its IDL and serialization format. Protobuf allows developers to define service methods and data structures in a language-neutral manner, ensuring efficient data transfer and compatibility across different programming languages.

- Code Generation: gRPC provides code generation tools that automatically generate client and server stubs from protobuf definitions. This simplifies the development process by eliminating the need to write boilerplate code for handling network communication and data serialization/deserialization.

- Bi-directional Streaming: gRPC supports bi-directional streaming, allowing both the client and server to send multiple messages over a single connection. This feature is particularly useful for real-time applications, such as chat applications or streaming services.

- Strong Typing and Schema Validation: Protocol Buffers provide strong typing and schema validation, which helps to ensure data integrity and reduce the likelihood of errors. This leads to more robust and reliable applications.

Benefits of Using gRPC in a Distributed System

gRPC offers several advantages that make it a compelling choice for building distributed systems:

- Improved Performance: gRPC's use of HTTP/2 and Protocol Buffers can yield substantial performance gains over traditional REST APIs. HTTP/2 multiplexing reduces latency, and Protobuf's binary format is more compact and faster to serialize/deserialize than JSON or XML. gRPC also has a production pedigree: it grew out of Stubby, the general-purpose RPC infrastructure Google has long used internally to connect its services.

- Efficient Data Serialization: Protocol Buffers provides a highly efficient and compact binary format for data serialization. This leads to reduced network bandwidth usage and faster data transfer times, which is crucial for high-performance applications.

- Strong Typing and Schema Validation: Protocol Buffers’ schema definition provides strong typing and schema validation. This ensures data consistency and reduces the risk of errors caused by data format mismatches.

- Language and Platform Agnostic: gRPC supports a wide range of programming languages and platforms, making it easy to integrate into diverse environments. This flexibility allows developers to choose the best tools for their specific needs and facilitates interoperability between different services.

- Bi-directional Streaming: gRPC’s support for bi-directional streaming allows for real-time communication between clients and servers. This is particularly beneficial for applications that require low-latency, two-way data exchange, such as live chat applications or financial trading platforms.

- Simplified Service Definition: The use of Protocol Buffers simplifies service definition and contract management. Protobuf files serve as a single source of truth for service interfaces, making it easier to understand and maintain service contracts.

- Automatic Code Generation: gRPC’s code generation tools automate the creation of client and server stubs, reducing the amount of boilerplate code developers need to write. This accelerates the development process and minimizes the risk of errors.

Core Components of gRPC

gRPC’s efficiency and performance stem from its core components. These components work in concert to enable high-performance, cross-platform communication between services. Understanding these foundational elements is key to grasping how gRPC functions effectively.

Protocol Buffers

Protocol Buffers (protobufs) are a critical component of gRPC. They serve as a language-neutral, platform-neutral, extensible mechanism for serializing structured data. Think of them as a more efficient alternative to JSON or XML for data transmission.

- Definition of Service Contracts: Protocol Buffers are used to define the structure of the data exchanged between services and the methods available on those services. This definition happens in `.proto` files. These files specify the data types, message structures, and service definitions. This approach ensures both client and server understand the format of the data, reducing ambiguity and errors.

- Data Serialization and Deserialization: Protobufs efficiently serialize data into a binary format, which is much smaller than text-based formats like JSON. This reduces bandwidth usage and speeds up data transfer. On the receiving end, protobufs are deserialized back into the original data structure.

- Language and Platform Independence: Protobufs support a wide range of programming languages, including Go, Java, Python, C++, and more. This cross-platform compatibility is a significant advantage, enabling communication between services written in different languages.

- Versioning and Compatibility: Protobufs are designed with forward and backward compatibility in mind. This allows services to evolve over time without breaking existing clients. Adding new fields, or removing fields while reserving their numbers, keeps older readers and writers working; changing the number or type of an existing field generally does not.
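To see why adding a field does not break older readers, here is a minimal Go sketch that hand-decodes the protobuf wire format the way an older reader would. Each field starts with a varint key holding the field number and a wire type, and the wire type alone tells the reader how many bytes to skip when it doesn't recognize the number. `OldUser` and `decodeOldUser` are hypothetical names; generated code (such as Go's `proto.Unmarshal`) does this automatically and also keeps the unknown bytes.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// OldUser holds the fields known to a reader built against an older schema:
// field 1 (id, varint) and field 2 (name, length-delimited).
type OldUser struct {
	ID   int32
	Name string
}

// decodeOldUser parses protobuf wire-format bytes and skips field numbers it
// does not recognize instead of failing.
func decodeOldUser(b []byte) (OldUser, error) {
	var u OldUser
	for len(b) > 0 {
		key, n := binary.Uvarint(b)
		if n <= 0 {
			return u, errors.New("malformed key")
		}
		b = b[n:]
		field, wireType := key>>3, key&7
		switch wireType {
		case 0: // varint
			v, m := binary.Uvarint(b)
			if m <= 0 {
				return u, errors.New("malformed varint")
			}
			b = b[m:]
			if field == 1 {
				u.ID = int32(v)
			}
		case 1: // fixed 64-bit: unused by OldUser, so skip 8 bytes
			if len(b) < 8 {
				return u, errors.New("truncated fixed64")
			}
			b = b[8:]
		case 2: // length-delimited: strings, bytes, nested messages
			l, m := binary.Uvarint(b)
			if m <= 0 || l > uint64(len(b)-m) {
				return u, errors.New("malformed length")
			}
			data := b[m : m+int(l)]
			b = b[m+int(l):]
			if field == 2 {
				u.Name = string(data)
			}
		case 5: // fixed 32-bit: skip 4 bytes
			if len(b) < 4 {
				return u, errors.New("truncated fixed32")
			}
			b = b[4:]
		default:
			return u, fmt.Errorf("wire type %d not handled in this sketch", wireType)
		}
	}
	return u, nil
}

func main() {
	// Bytes written by a newer client whose schema added field 3 (email).
	newer := []byte{
		0x08, 0x2a, // field 1, varint: 42
		0x12, 0x03, 'A', 'd', 'a', // field 2, 3 bytes: "Ada"
		0x1a, 0x03, 'a', '@', 'b', // field 3, 3 bytes: unknown to OldUser
	}
	u, err := decodeOldUser(newer)
	fmt.Println(u.ID, u.Name, err) // 42 Ada <nil>
}
```

Groups (wire types 3 and 4) are deprecated and are left out of this sketch.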

For example, consider a simple service that retrieves user information. Using protobufs, you would define a message for `User` and a service definition for a `UserService`. The `.proto` file might look something like this:

```protobuf
syntax = "proto3";

package user;

service UserService {
  rpc GetUser (GetUserRequest) returns (User);
}

message GetUserRequest {
  int32 id = 1;
}

message User {
  int32 id = 1;
  string name = 2;
  string email = 3;
}
```

In this example:

- `GetUserRequest` and `User` are messages that define the structure of the data.

- `UserService` defines a service with a method `GetUser`.

- The `rpc` keyword declares a remote procedure call.

- `returns (User)` specifies the return type of the `GetUser` method.

This `.proto` file is then used by the gRPC tooling to generate code for both the client and the server, including data structures and communication logic.

Stubs

Stubs are another crucial component of gRPC. They act as intermediaries between the client and the server, hiding the complexities of the underlying communication protocol and letting clients make remote procedure calls through an ordinary local interface.

- Client-Side Stubs: On the client side, stubs provide a local interface that mimics the remote service’s methods. When a client calls a method on a stub, the stub handles the serialization of the request, the communication with the server, and the deserialization of the response. This allows developers to interact with remote services as if they were local objects.

- Server-Side Stubs: On the server side, stubs receive requests from clients, deserialize the data, invoke the appropriate service methods, serialize the response, and send it back to the client. This abstraction simplifies the server-side implementation.

- Automated Code Generation: gRPC tooling automatically generates stubs from the `.proto` files. This eliminates the need for developers to manually write the communication code, reducing the risk of errors and accelerating development.

- Simplified Communication: Stubs handle the underlying network communication details, such as connection management, data serialization, and error handling. This allows developers to focus on the business logic of their applications.

The generated stubs encapsulate the gRPC communication logic, including:

- Serialization of request messages using Protocol Buffers.

- Establishing and managing the gRPC connection.

- Sending requests to the server.

- Receiving and deserializing response messages.

- Handling errors and exceptions.

For instance, in the previous `UserService` example, the gRPC tools would generate client-side stubs that would allow a client to call `GetUser` as if it were a local function. The stub would then handle all the underlying gRPC communication, allowing the client to simply call the method and receive the `User` object. The server-side stubs would receive the request, deserialize the `GetUserRequest`, invoke the `GetUser` implementation, serialize the `User` response, and send it back to the client.
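The division of labor between client and server stubs can be sketched without any gRPC dependency. In the Go sketch below, every name (`UserService`, `userClientStub`, `serverStub`, `directory`, the `transport` function type) is hypothetical, JSON stands in for Protocol Buffers, and a direct function call stands in for the HTTP/2 connection. The method string mirrors the `/package.Service/Method` path gRPC uses on the wire; generated gRPC stubs do the same job with real serialization and networking.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type GetUserRequest struct{ ID int32 }

type User struct {
	ID    int32
	Name  string
	Email string
}

// UserService is the contract both sides share, like the service in a .proto file.
type UserService interface {
	GetUser(req *GetUserRequest) (*User, error)
}

// transport carries a method name and serialized bytes to the server and
// returns the serialized reply; in gRPC this would be an HTTP/2 stream.
type transport func(method string, payload []byte) ([]byte, error)

// userClientStub makes the remote service look like a local object.
type userClientStub struct{ send transport }

func (c userClientStub) GetUser(req *GetUserRequest) (*User, error) {
	payload, err := json.Marshal(req) // serialize the request
	if err != nil {
		return nil, err
	}
	raw, err := c.send("/user.UserService/GetUser", payload)
	if err != nil {
		return nil, err
	}
	var u User
	if err := json.Unmarshal(raw, &u); err != nil { // deserialize the response
		return nil, err
	}
	return &u, nil
}

// serverStub decodes requests, calls the real implementation, and encodes replies.
func serverStub(impl UserService) transport {
	return func(method string, payload []byte) ([]byte, error) {
		switch method {
		case "/user.UserService/GetUser":
			var req GetUserRequest
			if err := json.Unmarshal(payload, &req); err != nil {
				return nil, err
			}
			u, err := impl.GetUser(&req)
			if err != nil {
				return nil, err
			}
			return json.Marshal(u)
		default:
			return nil, fmt.Errorf("unknown method %s", method)
		}
	}
}

// directory is a toy server implementation containing only business logic.
type directory struct{}

func (directory) GetUser(req *GetUserRequest) (*User, error) {
	return &User{ID: req.ID, Name: "Ada", Email: "ada@example.com"}, nil
}

func main() {
	var svc UserService = userClientStub{send: serverStub(directory{})}
	u, err := svc.GetUser(&GetUserRequest{ID: 7})
	fmt.Println(u.ID, u.Name, err) // 7 Ada <nil>
}
```

Note that `directory` never touches serialization or transport; that separation is what generated stubs give you.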

gRPC vs. REST

Comparing gRPC and REST is essential for making informed decisions when designing and implementing APIs. Both are popular architectural styles for building networked applications, but they differ significantly in their underlying principles and operational characteristics. Understanding these differences is key to choosing the right technology for a specific use case.

Data Format, Performance, and Protocol Comparison

The choice between gRPC and REST often hinges on performance and data format considerations. Both aspects are intrinsically linked to the underlying protocols and communication models.

- Data Format: REST typically utilizes JSON (JavaScript Object Notation) or XML (Extensible Markup Language) for data serialization. These formats are human-readable and widely supported. gRPC, on the other hand, defaults to Protocol Buffers (Protobuf), a binary format. Protobuf is more compact and efficient than JSON or XML, leading to smaller message sizes and faster parsing.

- Performance: Due to its binary format and efficient serialization/deserialization, gRPC generally offers superior performance compared to REST, particularly in scenarios with high data volume and frequent communication. Protobuf’s compact nature reduces network bandwidth consumption, and its efficient parsing minimizes CPU overhead. REST, with its text-based formats, can be slower, especially when dealing with complex data structures.

- Protocol: REST typically operates over HTTP/1.1 or HTTP/2, leveraging HTTP methods (GET, POST, PUT, DELETE) to interact with resources. gRPC primarily uses HTTP/2, which provides features like multiplexing (multiple requests over a single connection) and bidirectional streaming. This results in reduced latency and improved efficiency. The use of HTTP/2 by gRPC, coupled with Protobuf, significantly contributes to its performance advantages.

Communication Models Comparison

The communication models of gRPC and REST represent a fundamental difference in how they handle interactions between client and server.

- REST (Representational State Transfer): REST follows a resource-oriented architecture. Clients interact with resources identified by URLs using standard HTTP methods. It’s inherently stateless, meaning each request contains all the information needed for the server to understand and process it. REST’s simplicity and ease of understanding have contributed to its widespread adoption.

- gRPC (gRPC Remote Procedure Call): gRPC uses a Remote Procedure Call (RPC) model. Clients call methods on the server as if they were local functions. This model can be more intuitive for developers accustomed to procedural programming. gRPC supports four types of service methods: unary (single request/single response), server streaming (single request/multiple responses), client streaming (multiple requests/single response), and bidirectional streaming (multiple requests/multiple responses).
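The four method types differ only in how many messages flow in each direction. As an analogy rather than the grpc-go API (generated Go code exposes streams through `Send` and `Recv` methods, not channels), the Go sketch below models each shape with plain functions and channels; all function names are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// Unary: one request, one response.
func square(n int) int { return n * n }

// Server streaming: one request, many responses.
func countdown(from int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out) // closing the channel marks the end of the stream
		for i := from; i > 0; i-- {
			out <- i
		}
	}()
	return out
}

// Client streaming: many requests, one response once the client is done.
func sum(in <-chan int) int {
	total := 0
	for v := range in {
		total += v
	}
	return total
}

// Bidirectional streaming: both sides send independently; this server
// answers each message as soon as it arrives.
func shout(in <-chan string) <-chan string {
	out := make(chan string)
	go func() {
		defer close(out)
		for msg := range in {
			out <- strings.ToUpper(msg)
		}
	}()
	return out
}

func main() {
	fmt.Println(square(4)) // 16

	for v := range countdown(3) {
		fmt.Print(v, " ") // 3 2 1
	}
	fmt.Println()

	nums := make(chan int)
	go func() {
		for i := 1; i <= 4; i++ {
			nums <- i
		}
		close(nums) // the client signals it has finished sending
	}()
	fmt.Println(sum(nums)) // 10

	msgs := make(chan string)
	replies := shout(msgs)
	go func() {
		msgs <- "hi"
		msgs <- "bye"
		close(msgs)
	}()
	for r := range replies {
		fmt.Println(r) // HI, then BYE
	}
}
```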

Advantages and Disadvantages of Each Approach

Choosing between gRPC and REST requires careful consideration of the specific requirements of a project. Each approach has its own set of strengths and weaknesses.

- REST Advantages:
  - Simplicity and Ease of Use: REST's straightforward design and use of standard HTTP methods make it easy to understand and implement.
  - Widely Supported: REST is a mature technology with extensive tooling and community support.
  - Human-Readable Data: JSON and XML formats are human-readable, simplifying debugging and testing.
  - Browser Compatibility: REST APIs are easily accessible from web browsers.

- REST Disadvantages:
  - Performance Overhead: JSON and XML formats can be less efficient than binary formats, leading to larger message sizes and slower parsing.
  - Lack of Strict Contracts: While OpenAPI (formerly Swagger) can define API contracts, REST lacks built-in contract enforcement.
  - HTTP Verb Abuse: Overuse or misuse of HTTP verbs can sometimes lead to less clear API design.

- gRPC Advantages:
  - High Performance: Protobuf and HTTP/2 contribute to superior performance, especially for high-volume, low-latency communication.
  - Strong Typing: Protobuf provides strong typing, ensuring data integrity and enabling efficient serialization/deserialization.
  - Bidirectional Streaming: gRPC supports bidirectional streaming, enabling real-time communication and efficient data transfer.
  - Code Generation: gRPC provides code generation for multiple languages, simplifying client and server implementation.

- gRPC Disadvantages:
  - Complexity: gRPC can be more complex to set up and understand initially, especially for developers unfamiliar with RPC.
  - Limited Browser Support: Browsers cannot call gRPC services directly; web clients need gRPC-Web together with a proxy that translates to standard gRPC.
  - Less Human-Readable: Protobuf is a binary format, making it less human-readable than JSON or XML.
  - Steeper Learning Curve: The need to define service contracts using Protobuf can increase the learning curve for new developers.

When to Choose gRPC over REST

Choosing between gRPC and REST depends heavily on the specific requirements of your application. While REST is a widely adopted and well-understood architectural style, gRPC offers distinct advantages in certain scenarios, particularly where performance, efficiency, and strong typing are critical. Understanding these differences allows developers to make informed decisions, optimizing their applications for the best possible outcome.

Scenarios for Superior Performance and Efficiency with gRPC

gRPC excels in situations where high performance and efficiency are paramount. Its binary protocol, HTTP/2 multiplexing, and efficient data serialization contribute significantly to these advantages. These features translate to reduced latency, lower bandwidth consumption, and improved overall throughput.

- High-Performance Communication: gRPC’s use of Protocol Buffers for serialization leads to smaller message sizes compared to JSON, the common format used in REST. This results in faster data transfer, especially crucial in bandwidth-constrained environments or applications requiring real-time updates.

- Low Latency: The HTTP/2 protocol, which gRPC uses, supports multiplexing, allowing multiple requests and responses to be in flight at the same time over a single TCP connection. This avoids both the cost of opening extra connections and waiting for earlier responses to finish, leading to lower latency. In contrast, REST APIs often run over HTTP/1.1, where a connection can be reused for successive requests (keep-alive) but carries only one request-response exchange at a time, so clients must either queue requests or open several parallel connections.

- Efficient Data Serialization: Protocol Buffers (protobufs) are a binary serialization format, making them significantly more compact and faster to serialize and deserialize than JSON, which is text-based. This efficiency is particularly beneficial in applications that exchange large volumes of data.

- Bidirectional Streaming: gRPC supports bidirectional streaming, enabling both the client and server to send multiple messages concurrently. This is ideal for applications requiring real-time data transfer, such as live chat applications or financial trading platforms, where continuous data exchange is essential. REST, while capable of streaming, doesn’t inherently offer the same level of efficiency and ease of implementation for bidirectional communication.
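Multiplexing works because every HTTP/2 frame carries a stream identifier, so frames belonging to different requests can be interleaved on one connection and reassembled by the receiver. The toy Go model below shows a receiver putting two interleaved responses back together; the `frame` type and `demux` function are hypothetical, and real HTTP/2 frames carry more fields (such as a frame type and flags). Client-initiated HTTP/2 streams use odd identifiers, hence streams 1 and 3.

```go
package main

import "fmt"

// frame is a heavily simplified HTTP/2 DATA frame.
type frame struct {
	streamID  uint32
	data      string
	endStream bool // true on the last frame of a stream
}

// demux reassembles interleaved frames into per-stream bodies and records
// the order in which streams completed.
func demux(frames []frame) (map[uint32]string, []uint32) {
	bodies := make(map[uint32]string)
	var done []uint32
	for _, f := range frames {
		bodies[f.streamID] += f.data
		if f.endStream {
			done = append(done, f.streamID)
		}
	}
	return bodies, done
}

func main() {
	// Two responses share one connection; their frames arrive interleaved.
	wire := []frame{
		{1, "hel", false},
		{3, "wor", false},
		{1, "lo", true},
		{3, "ld", true},
	}
	bodies, done := demux(wire)
	fmt.Println(bodies[1], bodies[3], done) // hello world [1 3]
}
```

Because stream 3's frames do not wait for stream 1 to finish, a slow response no longer blocks the ones behind it at the HTTP level, although packet loss can still stall the shared TCP connection.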

Applications Benefiting from gRPC Capabilities

Several types of applications are particularly well-suited for gRPC. These applications typically prioritize performance, efficiency, and strong typing, and can leverage gRPC’s features to achieve significant improvements.

- Microservices Architectures: gRPC’s efficient communication and strong typing make it an excellent choice for inter-service communication in microservices architectures. Protocol Buffers ensure clear contracts between services, reducing the risk of compatibility issues and improving maintainability. Services can communicate rapidly and reliably.

- Mobile Applications: gRPC’s compact message sizes and efficient data transfer are ideal for mobile applications, especially those operating in bandwidth-constrained environments. The reduced bandwidth consumption can lead to improved battery life and a better user experience.

- Real-Time Applications: Applications requiring real-time data updates, such as live chat applications, streaming services, and financial trading platforms, benefit from gRPC’s bidirectional streaming capabilities. This enables efficient and continuous data exchange between the client and server.

- High-Performance Backend Systems: gRPC is a good fit for backend systems that require high throughput and low latency, such as those used in data processing, machine learning, and gaming. The efficient communication and serialization provided by gRPC can help to optimize these systems.

- Internal APIs: When designing APIs for internal use within a company or organization, gRPC can be a great option. The use of Protocol Buffers provides a clear contract, improving team collaboration and system maintainability. This can streamline development and reduce errors.

Trade-offs of Using gRPC

While gRPC offers significant advantages, there are trade-offs to consider. These trade-offs primarily relate to the learning curve, tooling, and potential for limited browser support.

- Learning Curve: gRPC has a steeper learning curve than REST. Developers need to learn Protocol Buffers (both the `.proto` definition language and the code-generation workflow) and the gRPC framework itself. This requires an investment of time and effort for training and understanding.

- Tooling and Ecosystem Maturity: While the gRPC ecosystem is growing, it is not as mature as the REST ecosystem. The tooling for debugging, monitoring, and testing gRPC services may not be as readily available or as well-established as for REST. However, the gRPC ecosystem is rapidly evolving and improving.

- Browser Support: Browsers support HTTP/2, but their JavaScript networking APIs don't expose the low-level control gRPC relies on, such as access to HTTP/2 trailers, so a browser cannot act as a native gRPC client. gRPC-Web addresses this: the browser speaks the gRPC-Web protocol, and a proxy (for example, Envoy) translates it to standard gRPC for the backend, with payloads still encoded as Protocol Buffers. While gRPC-Web is a useful solution, it adds a component to deploy, and it currently supports unary and server-streaming calls but not client-streaming or bidirectional streaming from the browser.

- Human Readability: Protocol Buffers are not human-readable in their serialized form, unlike JSON. This can make debugging and troubleshooting more difficult. While tools exist to help decode protobuf messages, the lack of direct human readability can be a drawback.

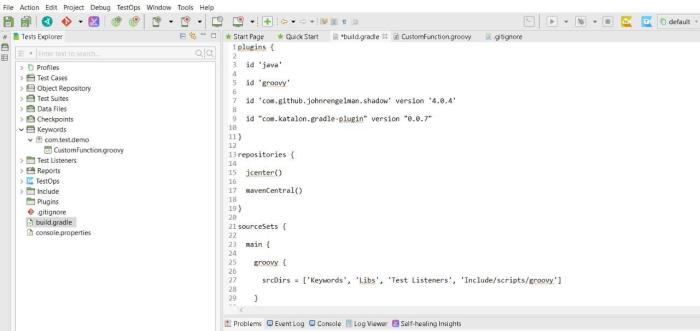

gRPC Implementation: A Practical Guide

This section provides a hands-on guide to implementing a gRPC service. We will walk through the process, from defining a service using Protocol Buffers to creating client and server applications that interact with each other. The goal is to provide a clear, step-by-step approach to help you build your own gRPC services.

Designing a Simple gRPC Service with Protocol Buffers

Protocol Buffers (protobuf) are essential for defining gRPC services. They act as the interface definition language (IDL), allowing you to specify the structure of your data and the methods your service will expose. We will design a basic service called `Greeter` that takes a name as input and returns a greeting.

Let's define a protobuf file named `greet.proto`:

```protobuf
syntax = "proto3";

package greet;

// Go import path of the generated package; "your_package_name" is a
// placeholder for your Go module path.
option go_package = "your_package_name/greet";

service Greeter {
  rpc SayHello (HelloRequest) returns (HelloReply);
}

message HelloRequest {
  string name = 1;
}

message HelloReply {
  string message = 1;
}
```

This `greet.proto` file defines:

- `syntax = "proto3";`: Specifies the Protocol Buffers version to use.

- `package greet;`: Defines the package name, which namespaces the definitions and helps prevent naming conflicts.

- `option go_package = ...;`: Tells the Go code generator the import path of the generated package (only needed when generating Go code).

- `service Greeter { ... }`: Defines the gRPC service named `Greeter`.

- `rpc SayHello (HelloRequest) returns (HelloReply);`: Defines a remote procedure call (RPC) method named `SayHello`. It takes a `HelloRequest` message as input and returns a `HelloReply` message.

- `message HelloRequest { ... }`: Defines the structure of the request message. It contains a single field, `name`, which is a string.

- `message HelloReply { ... }`: Defines the structure of the response message. It contains a single field, `message`, which is a string.

This protobuf file is the blueprint for our gRPC service. It defines the structure of the data exchanged between the client and the server and specifies the methods the server will provide.
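To connect the `.proto` definition to actual bytes, the Go sketch below hand-encodes a `HelloRequest` whose `name` is "World". It shows that only the field number (1) and wire type travel on the wire, never the field name `name`, which is why field numbers must stay stable. `encodeHelloRequest` is a hypothetical helper; generated code produces these bytes for you.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// encodeHelloRequest hand-encodes: message HelloRequest { string name = 1; }
func encodeHelloRequest(name string) []byte {
	const fieldNumber, wireType = 1, 2 // wire type 2 = length-delimited (strings, bytes, messages)
	b := binary.AppendUvarint(nil, fieldNumber<<3|wireType) // key: 1<<3 | 2 = 0x0a
	b = binary.AppendUvarint(b, uint64(len(name)))          // length prefix
	return append(b, name...)                               // the string's UTF-8 bytes
}

func main() {
	fmt.Printf("% x\n", encodeHelloRequest("World")) // 0a 05 57 6f 72 6c 64
}
```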

Generating Client and Server Code

Once you have defined your service in a `.proto` file, the next step is to generate the client and server code. This process involves using the Protocol Buffer compiler (`protoc`) along with the gRPC plugin for your chosen programming language. The generated code will handle the serialization and deserialization of messages, as well as the underlying gRPC communication. Here's a breakdown of the steps involved:

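- Install the Protocol Buffer compiler (`protoc`) and the gRPC plugin: The installation process varies depending on your operating system and programming language. You can typically install `protoc` using a package manager (e.g., `apt`, `brew`). The language plugins usually come from your language's own tooling (e.g., `pip` for Python, `npm` for Node.js). For Go, install both code generators with `go install google.golang.org/protobuf/cmd/protoc-gen-go@latest` and `go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@latest`, and make sure `$(go env GOPATH)/bin` is on your `PATH` so `protoc` can find them.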

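- Generate code: Use the `protoc` compiler to generate the code. For Go, run the following from your module root with `greet.proto` placed in a `greet/` directory:

```shell
protoc --go_out=. --go_opt=paths=source_relative \
    --go-grpc_out=. --go-grpc_opt=paths=source_relative \
    greet/greet.proto
```

`--go_out` runs `protoc-gen-go`, which generates the message types (`greet/greet.pb.go`); `--go-grpc_out` runs `protoc-gen-go-grpc`, which generates the client and server code (`greet/greet_grpc.pb.go`); and `paths=source_relative` writes each output file next to its `.proto` source. `protoc-gen-go` also needs the Go import path of the generated package, which it normally reads from an `option go_package` line in the `.proto` file (for example, `option go_package = "your_package_name/greet";`). Similar commands exist for other languages.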

- Understand the generated code: The generated code includes the following:

- Message definitions: Structures that represent the messages defined in your `.proto` file (e.g., `HelloRequest`, `HelloReply`).

- Service interface: An interface that defines the methods of your gRPC service (e.g., `GreeterServer`).

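- Client stubs: Types that provide methods for calling the gRPC service from the client side (in Go, the `GreeterClient` returned by `NewGreeterClient`).

- Server stubs: Helpers that connect your implementation of the service interface to a gRPC server (in Go, `RegisterGreeterServer` and the embeddable `UnimplementedGreeterServer`).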

The specific generated files and their contents will vary based on the programming language you choose. The generated code simplifies the process of interacting with the gRPC service, allowing you to focus on the business logic.

Creating a Basic Client-Server Interaction Example

To illustrate the client-server interaction, let's create simple client and server applications based on the `greet.proto` file. This example uses Go, but the principles apply to other languages.

First, let's create the server (`server.go`):

```go
package main

import (
	"context"
	"log"
	"net"

	"google.golang.org/grpc"
	pb "your_package_name/greet" // Replace with your module path
)

// server implements the GreeterServer interface generated from greet.proto.
type server struct {
	pb.UnimplementedGreeterServer // Embed by value for forward compatibility
}

// SayHello handles the unary SayHello RPC.
func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	log.Printf("Received: %v", in.GetName())
	return &pb.HelloReply{Message: "Hello " + in.GetName()}, nil
}

func main() {
	lis, err := net.Listen("tcp", ":50051")
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}
	s := grpc.NewServer()
	pb.RegisterGreeterServer(s, &server{})
	log.Printf("server listening at %v", lis.Addr())
	if err := s.Serve(lis); err != nil {
		log.Fatalf("failed to serve: %v", err)
	}
}
```

The server code:

- Imports the necessary packages, including the generated gRPC code (`pb`).

- Defines a `server` struct that implements the `GreeterServer` interface generated from the `.proto` file.

- Implements the `SayHello` method, which takes a `HelloRequest` and returns a `HelloReply`.

- Sets up a gRPC server, registers the `GreeterServer` implementation, and starts listening for incoming connections on port 50051.

Now, let's create the client (`client.go`):

```go
package main

import (
	"context"
	"log"
	"time"

	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials/insecure"
	pb "your_package_name/greet" // Replace with your module path
)

func main() {
	// Plaintext connection for local testing only; use TLS credentials in production.
	// Recent grpc-go releases offer grpc.NewClient as the replacement for grpc.Dial.
	conn, err := grpc.Dial("localhost:50051", grpc.WithTransportCredentials(insecure.NewCredentials()))
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	defer conn.Close()

	c := pb.NewGreeterClient(conn)

	// Give the call a one-second deadline.
	ctx, cancel := context.WithTimeout(context.Background(), time.Second)
	defer cancel()

	r, err := c.SayHello(ctx, &pb.HelloRequest{Name: "World"})
	if err != nil {
		log.Fatalf("could not greet: %v", err)
	}
	log.Printf("Greeting: %s", r.GetMessage())
}
```

The client code:

- Imports the necessary packages, including the generated gRPC code (`pb`).

- Establishes a connection to the gRPC server on `localhost:50051`.

- Creates a `GreeterClient` using the connection.

- Calls the `SayHello` method, sending a `HelloRequest` with the name “World”.

- Prints the received `HelloReply` message.

To run this example:

1. Generate the Go code from the `greet.proto` file using the `protoc` compiler (as shown earlier).

2. Replace `your_package_name` in both `server.go` and `client.go` with your Go module path (the `module` line in `go.mod`), so that the `pb` import points at the generated `greet` package.

3. Run `go mod tidy` to download the gRPC and Protocol Buffers dependencies.

4. Compile and run the server: `go run server.go`.

5. In a second terminal, compile and run the client: `go run client.go`.

The client will send a request to the server, the server will process the request, and the client will then receive the greeting “Hello World”. This simple example demonstrates the basic client-server interaction using gRPC. This setup allows you to build more complex services, as the underlying gRPC communication is handled by the generated code.

gRPC and Protocol Buffers: Deep Dive

Protocol Buffers (protobufs) are a crucial component of gRPC, acting as the interface definition language (IDL) and data serialization format. Understanding protobufs is key to effectively using gRPC. This section will explore the structure, syntax, and optimization strategies for protobuf definitions.

Structure and Syntax of Protocol Buffer Definitions

Protocol Buffer definitions are written in a simple, yet powerful, language defined in `.proto` files. These files define the structure of messages, services, and other data structures used in gRPC communication. The syntax is designed to be human-readable and easily parsed by compilers.

A `.proto` file typically includes:

- Package Declaration: Specifies the namespace for the definitions. This helps organize the code and prevents naming conflicts.

```protobuf
package mypackage;
```

- Message Definitions: Define the structure of data exchanged between services. Messages are similar to classes or structs in other programming languages.

```protobuf
message Person {
  int32 id = 1;
  string name = 2;
  string email = 3;
}
```

- Service Definitions: Define the gRPC services and their methods. Services are the core building blocks for defining the remote procedure calls.

```protobuf
service Greeter {
  rpc SayHello (HelloRequest) returns (HelloReply);
}
```

- Imports: Allow the inclusion of definitions from other `.proto` files.

```protobuf
import "other_file.proto";
```

Each field within a message has a unique tag number. These tag numbers are used for serialization and deserialization and should not be changed once a message is in use, to maintain compatibility. The field type defines the data type of the field (e.g., `int32`, `string`, `bool`), and the field name is the identifier used in the code.

Different Data Types and Message Structures in Protocol Buffers

Protocol Buffers support a wide variety of data types, allowing for the efficient representation of different types of information. Messages can be nested and complex, allowing for the creation of intricate data structures.The available data types include:* Scalar Types: These are fundamental data types.

`double`

64-bit floating-point number.

`float`

32-bit floating-point number.

`int32`

32-bit integer.

`int64`

64-bit integer.

`uint32`

32-bit unsigned integer.

`uint64`

64-bit unsigned integer.

`sint32`

32-bit signed integer (uses ZigZag encoding for efficiency with negative numbers).

`sint64`

64-bit signed integer (uses ZigZag encoding).

`fixed32`

32-bit unsigned integer (fixed byte size).

`fixed64`

64-bit unsigned integer (fixed byte size).

`sfixed32`

32-bit signed integer (fixed byte size).

`sfixed64`

64-bit signed integer (fixed byte size).

`bool`

Boolean value (true or false).

`string`

UTF-8 encoded text.

`bytes`

Arbitrary sequence of bytes.

Enum Types

Allow the definition of a set of named constants. “`protobuf enum Color RED = 0; GREEN = 1; BLUE = 2; “`

Message Types

Allow the creation of nested messages. “`protobuf message Address string street = 1; string city = 2; string zip_code = 3; message Person string name = 1; Address address = 2; “`

Map Types

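Provide a key-value pair structure, declared with the syntax `map<key_type, value_type> field_name = tag;` inside a message such as `Profile`. Keys must be an integral or string type, and map fields cannot be `repeated`.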
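On the wire, a map field is equivalent to a repeated message whose entries carry a `key` field numbered 1 and a `value` field numbered 2. The sketch below hand-encodes a hypothetical `map<string, string> attributes = 1;` field in pure Python to show that layout; the helper names are illustrative, and real protobuf libraries do not guarantee any particular entry order.

```python
def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, high bit set while more bytes follow."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    key = (field_number << 3) | 2  # wire type 2 = length-delimited
    return encode_varint(key) + encode_varint(len(data)) + data


def encode_string_map(field_number: int, entries: dict) -> bytes:
    """Encode map<string, string> as repeated entry messages { key = 1; value = 2; }."""
    out = bytearray()
    for k, v in entries.items():
        entry = encode_string_field(1, k) + encode_string_field(2, v)
        out += encode_varint((field_number << 3) | 2) + encode_varint(len(entry)) + entry
    return bytes(out)


# Hypothetical field: map<string, string> attributes = 1;
wire = encode_string_map(1, {"k": "v"})
print(wire.hex())
```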

Repeated Fields (Lists)

Allow for lists of any other type.

```protobuf
message PhoneBook {
  repeated Person people = 1;
}
```

The flexibility of these data types allows for the creation of complex and efficient data structures, suitable for various communication scenarios. For instance, a system for managing user profiles might use nested messages to represent addresses, contact information, and preferences.

Tips for Optimizing Protocol Buffer Definitions for Performance

Optimizing protobuf definitions is crucial for achieving high performance in gRPC applications. Several strategies can be employed to minimize message size, reduce serialization/deserialization overhead, and improve overall efficiency.

Choose the Right Data Types

Select data types that are most appropriate for the data being represented. For example, use `sint32` or `sint64` for signed fields that often hold negative values: their ZigZag encoding keeps small negative numbers compact, whereas `int32` and `int64` spend 10 bytes on any negative value. Avoid using `string` or `bytes` when a numeric, boolean, or enum type would suffice.

Use Tag Numbers Wisely

Carefully plan the tag numbers for fields within messages. Valid tag numbers range from 1 to 2^29 - 1 (536,870,911), excluding 19000 through 19999, which are reserved for the Protocol Buffers implementation. Tag numbers 1 through 15 are encoded in a single byte and 16 through 2047 in two bytes, so assign the smallest numbers to the most frequently set fields.
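Small tag numbers are cheaper because each field starts with a key, the varint of `(field_number << 3) | wire_type`. This hypothetical pure-Python helper computes key sizes and checks whether a field number is usable, assuming the documented valid range of 1 to 2^29 - 1 with 19000 through 19999 reserved.

```python
MAX_FIELD_NUMBER = 2**29 - 1
RESERVED_RANGE = range(19000, 20000)  # reserved for the protobuf implementation


def varint_size(value: int) -> int:
    """Bytes needed to varint-encode a non-negative integer (7 payload bits per byte)."""
    size = 1
    while value >= 0x80:
        value >>= 7
        size += 1
    return size


def key_size(field_number: int, wire_type: int = 0) -> int:
    # Each field's key is the varint of (field_number << 3) | wire_type.
    return varint_size((field_number << 3) | wire_type)


def is_valid_field_number(n: int) -> bool:
    return 1 <= n <= MAX_FIELD_NUMBER and n not in RESERVED_RANGE


for n in (1, 15, 16, 2047, 2048):
    print(n, key_size(n))
```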

Minimize Message Size

Reduce the overall size of messages by carefully considering which fields are included and how data is structured. Eliminate unnecessary fields. Nested messages help group related data, but each one adds a small tag-and-length overhead, so nest for clarity rather than to save space.

Optimize for Encoding

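Understand how Protocol Buffers are encoded. Each field is written as a key (the tag number combined with a wire type) followed by the value. Integer types other than the fixed-width ones use variable-length (varint) encoding, so smaller values take up less space, and in proto3 singular fields left at their default value are generally not written at all.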
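A minimal sketch of varint encoding in pure Python (hypothetical helper names, non-negative values only) shows the key-plus-value layout; field number 1 with value 150 is the classic example from the Protocol Buffers encoding documentation.

```python
def encode_varint(value: int) -> bytes:
    """Little-endian base-128: 7 bits per byte, high bit set on all but the last byte."""
    if value < 0:
        raise ValueError("this sketch handles non-negative values only")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_varint_field(field_number: int, value: int) -> bytes:
    key = (field_number << 3) | 0  # wire type 0 = VARINT
    return encode_varint(key) + encode_varint(value)


for v in (1, 150, 300):
    print(v, encode_varint_field(1, v).hex())
```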

Avoid Unnecessary Repeated Fields

Use repeated fields (lists) only when necessary. If a field will always have a single value, using a scalar type is more efficient.

Consider the `packed` Option

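For repeated numeric fields, packed encoding writes all values in a single length-delimited block instead of repeating the tag for every element, which can significantly reduce the size of the serialized message. In proto3, repeated scalar numeric fields are packed by default; in proto2 you enable it with the `packed = true` option.

```protobuf
message MyMessage {
  repeated int32 values = 1 [packed = true];
}
```

Profile and Benchmark

Use profiling tools to identify performance bottlenecks in your gRPC application, and benchmark different protobuf definitions to compare their serialized size and serialization speed. Compile candidate definitions with `protoc`, measure them with realistic payloads, and use gRPC’s built-in metrics to observe their effect on live traffic.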
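Comparing serialized sizes is the simplest benchmark of a definition change, and the `packed` option is a good candidate. The sketch below hand-encodes the same repeated `int32` values with and without packed encoding, using hypothetical pure-Python helpers rather than generated code.

```python
def encode_varint(value: int) -> bytes:
    """Base-128 varint for non-negative integers."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def unpacked(field_number: int, values) -> bytes:
    # One key (wire type 0) in front of every element.
    key = encode_varint((field_number << 3) | 0)
    return b"".join(key + encode_varint(v) for v in values)


def packed(field_number: int, values) -> bytes:
    # One key (wire type 2) and one length prefix for the whole list.
    payload = b"".join(encode_varint(v) for v in values)
    return encode_varint((field_number << 3) | 2) + encode_varint(len(payload)) + payload


values = list(range(1, 101))
print(len(unpacked(1, values)), len(packed(1, values)))
```

For 100 small values the packed form is roughly half the size, because the per-element key disappears.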

Versioning

Plan for future changes to your message definitions. Once a message is in production, avoid changing tag numbers. Instead, add new fields with new tag numbers.

Consider `oneof`

The `oneof` feature allows you to define a set of fields where at most one can be set at a time; setting one member clears the others. This is useful for representing mutually exclusive data, saving memory and improving clarity.

```protobuf
message Result {
  oneof result {
    int32 success_code = 1;
    string error_message = 2;
  }
}
```

By applying these optimization techniques, developers can create efficient and performant gRPC applications that minimize bandwidth usage, reduce latency, and improve overall user experience.

Real-world examples of optimization can be seen in high-traffic services, where even small improvements in message size or serialization speed can lead to significant gains in resource utilization and scalability. For instance, in a financial trading platform, optimizing the size of market data messages can improve the speed and efficiency of data delivery to clients.

Advanced gRPC Features

gRPC offers a rich set of advanced features that go beyond basic remote procedure calls, enabling developers to build sophisticated, high-performance, and secure communication systems. These features are crucial for applications requiring real-time data streaming, robust security, and fine-grained control over access. Let’s delve into some of the most important advanced capabilities.

Streaming in gRPC for Real-time Communication

Streaming in gRPC allows for real-time, bidirectional communication between clients and servers, making it ideal for applications such as live chat, financial data feeds, and monitoring systems. Compared to traditional request-response models, it significantly improves responsiveness and efficiency for these applications, because messages flow continuously over a single long-lived HTTP/2 stream instead of requiring a new request for each update. gRPC defines four kinds of service methods, three of which involve streaming:

- Unary RPC: This is the standard request-response model, where the client sends a single request and the server responds with a single response.

- Server-side streaming RPC: The client sends a single request to the server, and the server streams back a sequence of responses. An example of this could be a server sending stock price updates to a client.

- Client-side streaming RPC: The client streams a sequence of requests to the server, and the server responds with a single response. An example of this is a client sending log data to a server for aggregation.

- Bidirectional streaming RPC: Both the client and server can stream messages to each other simultaneously. This is ideal for real-time chat applications or scenarios where both parties need to continuously exchange data.

To implement streaming, you define the service methods in your Protocol Buffers (.proto) file and mark the streaming direction with the `stream` keyword before the request type, the response type, or both. The gRPC framework then handles the underlying complexities of managing the streams.
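As an illustration of the four method shapes, the sketch below writes each `rpc` declaration as a comment next to a plain-Python handler. The handler signatures follow the grpcio convention of taking `(request, context)` or `(request_iterator, context)`, with streaming responses produced by `yield`. The method names other than `SayHello`, and the dataclass stand-ins for generated message types, are hypothetical; no gRPC library is imported, so the handlers are called directly in-process.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class HelloRequest:  # stand-in for a generated message class
    name: str


@dataclass
class HelloReply:  # stand-in for a generated message class
    message: str


class GreeterServicer:
    """Hypothetical handlers; `context` is unused in this in-process sketch."""

    # rpc SayHello (HelloRequest) returns (HelloReply);
    def SayHello(self, request: HelloRequest, context) -> HelloReply:
        return HelloReply(f"Hello, {request.name}")

    # rpc SayHelloMany (HelloRequest) returns (stream HelloReply);
    def SayHelloMany(self, request: HelloRequest, context) -> Iterator[HelloReply]:
        for i in range(3):
            yield HelloReply(f"Hello #{i + 1}, {request.name}")

    # rpc CollectNames (stream HelloRequest) returns (HelloReply);
    def CollectNames(self, request_iterator: Iterable[HelloRequest], context) -> HelloReply:
        names = [r.name for r in request_iterator]
        return HelloReply("Hello, " + ", ".join(names))

    # rpc Chat (stream HelloRequest) returns (stream HelloReply);
    def Chat(self, request_iterator: Iterable[HelloRequest], context) -> Iterator[HelloReply]:
        for r in request_iterator:
            yield HelloReply(f"Echo: {r.name}")


svc = GreeterServicer()
print(svc.SayHello(HelloRequest("Ada"), None).message)
for reply in svc.SayHelloMany(HelloRequest("Ada"), None):
    print(reply.message)
```

In a real service these `rpc` lines would sit inside `service Greeter { ... }` in the `.proto` file, and the generated base class would connect the handlers to the network.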

Authentication and Authorization in gRPC Services

Securing gRPC services is paramount, and gRPC provides mechanisms for both authentication (verifying the identity of the client) and authorization (determining what resources the authenticated client is allowed to access). Authentication ensures that only identified clients can reach the service, while authorization enforces access control policies based on the client’s identity and roles. Common authentication methods for gRPC services include:

- TLS (Transport Layer Security): Provides secure communication channels, encrypting data in transit. This is the foundation for secure gRPC services.

- API Keys: Simple method for authenticating clients, where each client is assigned a unique API key.

- JWT (JSON Web Tokens): A widely used standard for securely transmitting information between parties as a JSON object. Clients send a JWT in the request headers, which the server validates.

- Custom Authentication: Allows for the implementation of custom authentication schemes tailored to specific requirements.

Authorization is typically implemented after authentication. Once a client’s identity is verified, the server determines the client’s permissions, often by checking the client’s roles or claims against a set of access control rules.

Example of using JWT authentication:

1. Client: obtains a JWT (e.g., from a login service).
2. Client: sends the JWT in the metadata of the gRPC request.
3. Server: extracts the JWT from the metadata.
4. Server: validates the JWT (e.g., verifies the signature and checks the expiration time).
5. Server: if the JWT is valid, authorizes the request based on the claims in the JWT.

This example demonstrates how JWTs, along with TLS, can be used to create a robust and secure gRPC service.
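Steps 3 through 5 can be sketched with only the Python standard library, assuming HS256 (HMAC-SHA256) tokens carried in an `authorization: Bearer <token>` metadata entry. The function names, the `roles` claim, and the `make_token` helper are hypothetical; in a grpcio servicer the metadata would come from `context.invocation_metadata()`. This is a teaching sketch: production services should rely on a vetted JWT library and TLS rather than hand-rolled validation.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(data: bytes) -> str:
    # JWT segments use base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # Restore the stripped padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_token(claims: dict, secret: bytes) -> str:
    """Hypothetical helper standing in for the login service (step 1)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def authenticate(metadata, secret: bytes, now=None) -> dict:
    """Steps 3-4: extract the bearer token from call metadata and validate it.

    `metadata` is a sequence of (key, value) pairs. Raises PermissionError on
    any failure and returns the token's claims on success.
    """
    auth = dict(metadata).get("authorization", "")
    if not auth.startswith("Bearer "):
        raise PermissionError("missing bearer token")
    try:
        header_b64, payload_b64, signature_b64 = auth[len("Bearer "):].split(".")
    except ValueError:
        raise PermissionError("malformed token") from None
    # Accept only the algorithm this sketch implements; never trust "alg" blindly.
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise PermissionError("invalid signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        raise PermissionError("token expired or missing exp")
    return claims


def authorize(claims: dict, required_role: str) -> bool:
    """Step 5: a hypothetical role check against the validated claims."""
    return required_role in claims.get("roles", [])


# Usage: issue a token valid for 60 seconds, then validate it as a server would.
secret = b"demo-secret"
token = make_token({"sub": "alice", "roles": ["reader"], "exp": time.time() + 60}, secret)
claims = authenticate([("authorization", f"Bearer {token}")], secret)
print(claims["sub"], authorize(claims, "reader"))
```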

gRPC in Modern Architectures

gRPC has become a cornerstone technology in modern software architectures, particularly within microservices and cloud-native environments. Its efficiency, speed, and language-agnostic nature make it ideally suited for the demands of distributed systems. This section explores how gRPC seamlessly integrates with these architectural paradigms, highlighting its advantages and providing practical examples.

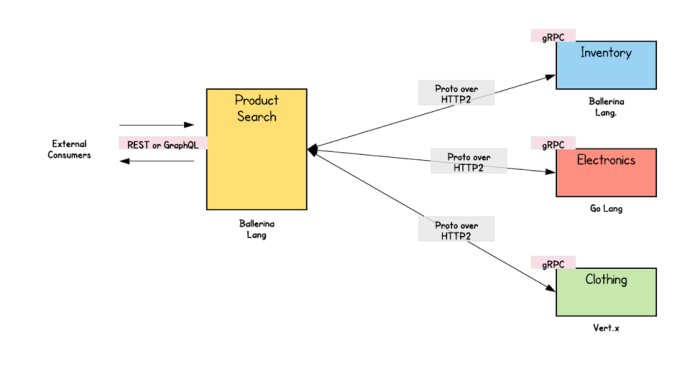

gRPC and Microservices Integration

gRPC’s design aligns perfectly with the microservices architectural style. Microservices, by their nature, are small, independent services that communicate with each other over a network. gRPC facilitates this communication through its:

- Efficient Communication: gRPC uses Protocol Buffers for serialization, resulting in smaller message sizes and faster transmission compared to text-based formats like JSON, often used in REST. This is crucial in microservices where high performance and low latency are paramount.

- Strong Typing and Contract Definition: Protocol Buffers provide a well-defined contract between services, ensuring that data exchanged between them is structured and type-checked. This reduces the likelihood of errors and makes it easier to maintain service compatibility as the system evolves.

- Polyglot Support: gRPC supports various programming languages, allowing different microservices to be built using the most appropriate technology for each service. This flexibility is a key advantage in microservices architectures.

- Streaming Capabilities: gRPC supports bidirectional streaming, enabling real-time communication between services. This is particularly useful for services that need to exchange continuous data streams, such as those involved in monitoring, analytics, or live updates.

gRPC in Cloud-Native Architectures

Cloud-native architectures leverage cloud computing to build and run scalable applications. gRPC is a natural fit for these environments, offering several benefits:

- Containerization Compatibility: gRPC works well with containerization technologies like Docker and orchestration platforms like Kubernetes. Services can be easily containerized and deployed, and gRPC provides a standardized way for these containers to communicate.

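- Service Mesh Integration: Service meshes, such as Istio and Linkerd, manage the communication between microservices. gRPC works well within service meshes because it is lightweight and efficient, and the mesh can apply service discovery, per-request load balancing, and traffic management to gRPC calls. Per-request balancing matters because gRPC multiplexes many calls over long-lived HTTP/2 connections, which connection-level load balancers cannot spread evenly.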

- Scalability and Resilience: gRPC’s performance characteristics contribute to the scalability of cloud-native applications. Client-side load balancing, deadlines, and retries, combined with circuit breaking provided by proxies or service meshes, help build resilient systems that can handle failures gracefully.

Examples of gRPC Usage in Containerized Environments

Consider a scenario where a retail application is built using microservices, containerized with Docker, and deployed on Kubernetes.

- Product Catalog Service: This service manages product information. gRPC can be used for high-performance communication between the Product Catalog service and other services, such as the Inventory Service or the Recommendation Service. The protocol buffers would define the structure of product data.

- Order Processing Service: This service handles order creation and management. gRPC enables efficient communication between the Order Processing service and payment gateways, shipping providers, and other external services.

- Authentication Service: This service handles user authentication and authorization. gRPC is suitable for communication between the Authentication Service and other services requiring secure access control.

In each case, gRPC provides a robust and efficient communication mechanism within the containerized environment. Kubernetes can be used to manage the deployment, scaling, and service discovery of these gRPC-based services.

Popular gRPC Use Cases

The following table presents popular gRPC use cases across different industries. Each use case is described briefly, highlighting the benefits of gRPC in each context.

| Use Case | Description | Benefits of gRPC | Examples |
|---|---|---|---|
| Microservices Communication | gRPC is used as the primary communication protocol between microservices in distributed applications. | High performance, low latency, efficient data transfer, and strong typing with Protocol Buffers. | E-commerce platforms, social media applications, financial services. |
| API Gateways | gRPC is utilized in API gateways to manage and route traffic to backend microservices. | Improved performance compared to REST, supports streaming, and allows for efficient data transformation. | Cloud-based services, API management platforms, enterprise applications. |
| Real-time Communication | gRPC’s streaming capabilities are used to build real-time applications, such as chat applications, live data dashboards, and streaming services. | Bidirectional streaming, efficient data transfer, and low latency. | Live chat applications, financial trading platforms, online gaming. |
| Mobile Applications | gRPC is used for efficient communication between mobile clients and backend services. | Reduced bandwidth usage, faster response times, and efficient data serialization. | Mobile gaming, social media apps, and mobile commerce applications. |

Performance Considerations

Optimizing gRPC service performance is crucial for building responsive and scalable applications. Understanding the factors that impact performance, and employing effective optimization strategies, ensures efficient communication and a positive user experience. This section delves into methods for improving gRPC performance, the impact of data serialization, and strategies for monitoring and troubleshooting gRPC applications.

Optimizing gRPC Service Performance

Several techniques can be employed to enhance the performance of gRPC services. These optimizations often focus on reducing latency, increasing throughput, and efficiently utilizing resources.

- Connection Management: Reuse a gRPC channel across calls instead of creating one per request. HTTP/2 keeps the underlying connection open and multiplexes many concurrent calls over it, avoiding the TCP and TLS handshake latency of establishing a new connection for each request. This is especially beneficial for applications with frequent communication.

- Message Size Optimization: Smaller message sizes translate to faster transmission times. Protocol Buffers, by default, offer a compact binary format compared to text-based formats like JSON. Minimize the data sent in each message by only including necessary fields. Consider using compression (e.g., gzip) for large payloads, though this adds computational overhead.

- Load Balancing: Distribute traffic across multiple gRPC server instances to prevent overload and improve response times. Implement load balancing strategies, such as round-robin or least-connections, to ensure optimal resource utilization. This prevents any single server from becoming a bottleneck.

- Buffering and Batching: In certain scenarios, batching multiple operations into a single gRPC call can improve throughput. For example, a client that needs 100 records can send one request carrying all 100 IDs rather than making 100 separate calls, cutting per-call overhead. Buffering can be employed to absorb bursts of requests, smoothing out the load on the server.

- Profiling and Tuning: Regularly profile your gRPC services to identify performance bottlenecks. Use profiling tools to pinpoint areas where optimization is needed, such as slow database queries or inefficient code. Tuning server parameters, such as the number of worker threads, can also improve performance.

- Server-Side Optimization: Optimize server-side code for efficiency. This includes minimizing database access, caching frequently accessed data, and using efficient algorithms. The server’s ability to quickly process requests directly impacts overall gRPC performance.
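The two load-balancing strategies mentioned above differ only in how the next backend is chosen. The pickers below are a hypothetical pure-Python sketch of that selection logic; gRPC clients ship with policies such as `pick_first` and `round_robin`, while least-connections balancing is more often provided by a proxy or service mesh.

```python
import itertools


class RoundRobinPicker:
    """Hypothetical picker: hands out backends in a fixed rotation."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnectionsPicker:
    """Hypothetical picker: chooses the backend with the fewest in-flight calls."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self.active = {backend: 0 for backend in backends}

    def pick(self):
        # min() returns the first minimum, so ties go to the earliest backend.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when an RPC finishes so the count reflects in-flight work.
        self.active[backend] -= 1


rr = RoundRobinPicker(["10.0.0.1:50051", "10.0.0.2:50051"])
print([rr.pick() for _ in range(4)])
```

Round-robin needs no feedback from the backends, whereas least-connections must be told when each call completes, which is why it tends to live in a proxy that already tracks in-flight requests.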

Impact of Data Serialization on gRPC Efficiency

Data serialization plays a vital role in gRPC performance. The choice of serialization format directly affects the size of the data transmitted, the speed of serialization and deserialization, and overall efficiency.

- Protocol Buffers (Protobuf): Protobuf is gRPC’s default and recommended serialization format. Its binary format is highly efficient, leading to smaller message sizes compared to text-based formats like JSON or XML. Protobuf also provides fast serialization and deserialization, reducing CPU overhead.

- Serialization/Deserialization Speed: The speed at which data is serialized and deserialized impacts the overall latency of gRPC calls. Protobuf’s compact binary encoding is typically faster to process than text formats such as JSON, which must be parsed character by character. Faster serialization and deserialization mean quicker processing of requests and responses.

- Message Size: Smaller message sizes reduce the amount of data that needs to be transmitted over the network. This translates to lower latency and improved throughput. Protobuf’s binary format achieves significant size reductions, especially for complex data structures.

- Compatibility and Versioning: Protobuf provides excellent support for backward and forward compatibility. This allows you to evolve your service definitions without breaking existing clients. Proper versioning strategies are essential for long-term maintainability and scalability.

- Alternatives to Protobuf: While Protobuf is the preferred choice, gRPC’s serialization layer is pluggable, so formats like JSON and Avro can also be used, though often with a performance penalty. JSON, for example, is human-readable but less efficient in terms of size and processing speed. Avro is a row-oriented data serialization framework (with its own RPC layer) that uses a schema-based binary format.
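To put the size difference in concrete terms, the sketch below hand-encodes a small hypothetical message, `Person { string name = 1; int32 id = 2; }`, in protobuf wire format and compares it with compact JSON. It uses pure-Python helpers rather than generated code, and exact ratios will vary with real payloads.

```python
import json


def encode_varint(value: int) -> bytes:
    """Base-128 varint for non-negative integers."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode_person(name: str, person_id: int) -> bytes:
    """Hand-encode the hypothetical Person message (non-negative id only)."""
    name_bytes = name.encode("utf-8")
    out = bytearray()
    # Field 1, wire type 2 (length-delimited): key, length, UTF-8 bytes.
    out += encode_varint((1 << 3) | 2) + encode_varint(len(name_bytes)) + name_bytes
    # Field 2, wire type 0 (varint): key, value.
    out += encode_varint((2 << 3) | 0) + encode_varint(person_id)
    return bytes(out)


pb = encode_person("Alice", 42)
js = json.dumps({"name": "Alice", "id": 42}, separators=(",", ":")).encode("utf-8")
print(len(pb), len(js))
```

For this message the protobuf encoding is 9 bytes against 24 bytes of JSON, mostly because protobuf replaces field names with one-byte keys.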

Strategies for Monitoring and Troubleshooting gRPC Applications

Effective monitoring and troubleshooting are essential for maintaining the health and performance of gRPC applications. Implementing comprehensive monitoring and using appropriate troubleshooting techniques can quickly identify and resolve issues.

- Metrics Collection: Collect key performance metrics, such as request latency, error rates, and throughput. Tools like Prometheus and Grafana can be used to collect, store, and visualize these metrics. Monitor the number of requests, response times, and error codes.

- Logging: Implement comprehensive logging to capture detailed information about gRPC calls, including request and response payloads, timestamps, and error messages. Centralized logging systems like the ELK stack (Elasticsearch, Logstash, Kibana) facilitate analysis and troubleshooting.

- Tracing: Implement distributed tracing to track requests as they flow through your system. Tools like Jaeger and Zipkin provide insights into the performance of individual gRPC calls and their dependencies. Tracing helps identify performance bottlenecks and pinpoint the source of errors.

- Error Handling: Implement robust error handling in both client and server code. Handle gRPC status codes and provide informative error messages. Utilize interceptors to centralize error handling logic.

- Health Checks: Implement health checks to monitor the availability of gRPC services. Exposing the standard gRPC health checking service (`grpc.health.v1.Health`) lets load balancers, orchestrators such as Kubernetes, and other components determine whether a service instance is healthy and ready to receive traffic.

- Debugging Tools: Utilize debugging tools, such as gRPC command-line tools (e.g., `grpcurl`) and IDE debuggers, to diagnose issues. Use `grpcurl` to inspect service definitions and send test requests. Debuggers help step through code and identify the root cause of problems.

- Network Monitoring: Use network monitoring tools to analyze network traffic and identify potential issues, such as latency or packet loss. Tools like Wireshark can be used to capture and analyze gRPC traffic. Network monitoring helps identify issues related to network connectivity.
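Metrics collection usually hooks into every call in one place. In grpcio that role is played by server interceptors (`grpc.ServerInterceptor`) or an OpenTelemetry or Prometheus integration; the decorator below is a hypothetical, library-free stand-in that records latency and a status label per handler call, from which error rate and latency percentiles can be derived. It labels failures `UNKNOWN`, mirroring the status gRPC generally reports for uncaught server exceptions.

```python
import time
from collections import Counter
from functools import wraps


class CallMetrics:
    """Hypothetical in-process metrics recorder for RPC handlers."""

    def __init__(self):
        self.latencies = []             # seconds, one entry per call
        self.status_counts = Counter()  # status label -> number of calls

    def record(self, handler):
        @wraps(handler)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "OK"
            try:
                return handler(*args, **kwargs)
            except Exception:
                status = "UNKNOWN"  # label for uncaught handler errors
                raise
            finally:
                self.latencies.append(time.perf_counter() - start)
                self.status_counts[status] += 1
        return wrapper

    def error_rate(self) -> float:
        total = sum(self.status_counts.values())
        return 0.0 if total == 0 else 1 - self.status_counts["OK"] / total

    def percentile(self, p: float) -> float:
        """Simple index-based latency percentile (an approximation), p in [0, 100]."""
        ordered = sorted(self.latencies)
        if not ordered:
            return 0.0
        index = min(len(ordered) - 1, int(p / 100 * len(ordered)))
        return ordered[index]


metrics = CallMetrics()


@metrics.record
def say_hello(name: str) -> str:
    return f"Hello, {name}"


say_hello("Ada")
print(metrics.status_counts, metrics.error_rate())
```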

Final Review

In conclusion, the choice between gRPC and REST hinges on specific project needs. While REST remains a solid choice for its simplicity and widespread adoption, gRPC shines in performance-critical environments and microservice architectures. By understanding their strengths and weaknesses, you can confidently select the optimal communication strategy, ensuring your applications are robust, efficient, and poised for success. The future of networked applications is dynamic, and mastering both gRPC and REST empowers you to navigate this landscape with expertise.

Detailed FAQs

What is the primary difference between gRPC and REST in terms of communication protocol?

gRPC requires HTTP/2 and builds directly on its multiplexing and bidirectional streaming, leading to more efficient communication. REST APIs can run over HTTP/1.1 or HTTP/2, but their request-response style typically does not take advantage of these features.

Is gRPC more difficult to implement than REST?

Initially, gRPC can have a steeper learning curve due to the need to define service contracts using Protocol Buffers and the use of code generation. REST, with its reliance on HTTP methods and common data formats like JSON, often appears simpler to get started with.

When is REST still the preferred choice over gRPC?

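REST is often preferred when broad client compatibility is crucial, since any HTTP client, including a web browser, can call it directly, whereas browsers need a proxy layer such as gRPC-Web to reach gRPC services. It also excels in scenarios requiring simple data retrieval and where ease of debugging and human readability are paramount.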

Can gRPC and REST coexist in the same application?

Yes, absolutely. Many modern applications leverage both gRPC and REST. gRPC might be used for internal microservice communication, while REST is used for public APIs to provide broader accessibility and ease of use for external clients.

What are Protocol Buffers, and why are they used with gRPC?

Protocol Buffers (protobufs) are a language-neutral, platform-neutral, extensible mechanism for serializing structured data. They are used with gRPC to define the structure of data exchanged between services, enabling efficient serialization and deserialization, which contributes to gRPC’s performance benefits.