Change Data Capture (CDC) for data integration is a powerful technique transforming how businesses manage and utilize their data. This method focuses on identifying and capturing only the changes made to data in a source system, enabling real-time or near real-time data synchronization across different systems. Instead of moving entire datasets, CDC efficiently tracks modifications, ensuring data consistency and minimizing resource consumption.

This comprehensive guide delves into the core concepts of CDC, exploring various implementation methods, architectures, and suitable data sources. We’ll examine the benefits of adopting CDC over traditional data integration approaches, such as batch processing, highlighting its impact on data latency and real-time data availability. Moreover, we’ll address the challenges and considerations associated with CDC implementation, including performance overhead and schema changes.

The exploration will also cover popular CDC tools and technologies, best practices, and future trends, providing a holistic understanding of this crucial data integration strategy.

Definition and Core Concepts of CDC

Change Data Capture (CDC) is a critical technique in modern data integration, enabling efficient and timely data synchronization across various systems. Understanding its core principles and benefits is essential for building robust and scalable data pipelines.

Fundamental Principle of Change Data Capture

The fundamental principle behind CDC revolves around identifying and capturing only the changes made to data within a source system. Instead of replicating entire datasets, CDC focuses on tracking and extracting modifications, such as inserts, updates, and deletes. This approach minimizes the volume of data transferred, reduces processing overhead, and allows for near real-time data synchronization.
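To make the idea of a change set concrete, the following minimal Python sketch derives insert, update, and delete operations by comparing two snapshots of a table keyed by primary key. Snapshot comparison is a different technique from the log-, trigger-, and timestamp-based methods described later, because it still reads the full table each time. It is used here only to show what captured changes look like. The function name and row layout are illustrative assumptions.

```python
from typing import Any, Optional

Row = dict[str, Any]                      # column name -> value
Change = tuple[str, int, Optional[Row]]   # (operation, primary key, new row or None)

def diff_snapshots(old: dict[int, Row], new: dict[int, Row]) -> list[Change]:
    """Compare two snapshots keyed by primary key and return only the changes."""
    changes: list[Change] = []
    for key, row in new.items():
        if key not in old:
            changes.append(("insert", key, row))   # key did not exist before
        elif old[key] != row:
            changes.append(("update", key, row))   # key exists but values differ
    for key in old:
        if key not in new:
            changes.append(("delete", key, None))  # key disappeared
    return changes

before = {1: {"name": "Ada", "city": "London"}, 2: {"name": "Alan", "city": "Wilmslow"}}
after = {1: {"name": "Ada", "city": "Paris"}, 3: {"name": "Grace", "city": "Arlington"}}
print(diff_snapshots(before, after))
# [('update', 1, {'name': 'Ada', 'city': 'Paris'}), ('insert', 3, {'name': 'Grace', 'city': 'Arlington'}), ('delete', 2, None)]
```

Unchanged rows produce nothing, which is the property the rest of this guide relies on: downstream systems receive three change records here rather than the whole table.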

Definition of Change Data Capture in Data Integration

In the context of data integration, Change Data Capture (CDC) is a set of techniques and processes used to identify and capture data modifications in a source database and propagate those changes to target systems. These target systems can include data warehouses, data lakes, or other applications that require up-to-date information. The core function is to streamline the data flow process, reducing latency and improving data consistency across disparate systems.

Primary Goals and Benefits of Implementing Change Data Capture

Implementing CDC offers several key advantages for data integration projects. These benefits contribute to improved efficiency, reduced costs, and enhanced data quality.

- Reduced Data Transfer Volume: CDC minimizes the amount of data transferred by capturing only the changes. This leads to faster data replication and lower network bandwidth consumption, which is especially critical when dealing with large datasets or limited network resources.

- Improved Data Latency: By capturing changes in near real-time, CDC reduces the time it takes for data to become available in target systems. This low latency is crucial for applications that require up-to-date information, such as real-time analytics, fraud detection, and operational dashboards.

- Reduced Resource Consumption: Because only changed data is processed, CDC significantly reduces the load on source and target systems. This leads to lower CPU, memory, and storage requirements, and ultimately, lower infrastructure costs.

- Enhanced Data Consistency: CDC propagates each change from the source system to the target systems, minimizing the risk of data discrepancies. This improved consistency is essential for accurate reporting, decision-making, and regulatory compliance.

- Simplified Data Pipeline Design: CDC simplifies the design and maintenance of data pipelines. By focusing on incremental changes, CDC removes the need for repeated full-table loads and the transformations that go with them (an initial full load, or snapshot, is usually still needed to seed the target), resulting in a more manageable and efficient data integration process.

- Support for Event-Driven Architectures: CDC can be integrated with event streaming platforms to trigger actions or updates in response to data changes. For instance, when a customer’s address is updated in the source system, a CDC process can capture this change and trigger an update to the customer’s record in the data warehouse.

Types of CDC Methods

Implementing Change Data Capture (CDC) involves various methodologies, each with its own strengths and weaknesses. The choice of method depends on factors like the source database, performance requirements, data volume, and the desired level of real-time data integration. Understanding these different approaches is crucial for selecting the most appropriate solution for a given data integration scenario.

Log-Based CDC

Log-based CDC leverages the transaction logs of the source database to capture changes. These logs, which record every transaction (inserts, updates, deletes), are continuously monitored, and the changes are extracted and replicated.

The process of log-based CDC involves several steps:

- Log Mining: A CDC tool reads the transaction logs, which contain a chronological record of all database changes.

- Change Identification: The tool parses the log entries to identify the specific changes made to the data, including the type of operation (insert, update, delete), the affected table, and the changed data.

- Change Extraction: The tool extracts the relevant data from the log entries. This may involve reconstructing the “before” and “after” images of updated rows.

- Change Application: The extracted changes are then applied to the target data store, ensuring that the target reflects the same state as the source.

Log-based CDC offers several advantages:

- Minimal Impact on Source Database Performance: Since the CDC tool reads the transaction logs, it avoids direct interaction with the database tables, reducing the impact on the source system’s performance.

- Real-time or Near Real-time Data Replication: Changes are captured and replicated almost immediately, enabling near real-time data integration.

- Comprehensive Change Capture: Log-based CDC captures all changes, including those made in bulk operations, ensuring data consistency.

However, it also has some disadvantages:

- Dependency on Database Log Format: The CDC tool must understand and be compatible with the source database’s log format, which can vary.

- Complexity in Implementation: Implementing log-based CDC can be complex, requiring specialized tools and expertise.

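- Potential for Data Loss: If the database purges or rotates its transaction logs before the CDC tool has read them (for example, because log retention is too short while the CDC tool is down), the changes in those logs never reach the target, and a fresh snapshot may be required.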

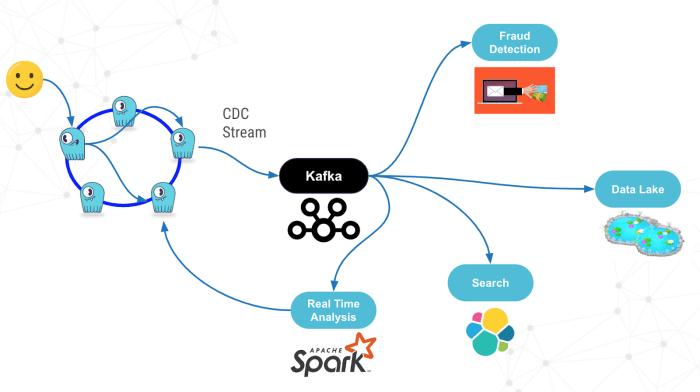

An example of log-based CDC in action is using tools like Debezium with Apache Kafka. Debezium monitors the database transaction logs (e.g., MySQL’s binary logs or PostgreSQL’s write-ahead logs) and streams the changes to Kafka topics. Consumers can then subscribe to these topics and process the change events in real-time. This is commonly used for building event-driven architectures and for real-time analytics.
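As a rough sketch of the consumer side, the Python function below applies change events to an in-memory target table. It assumes the events arrive as JSON strings in Debezium's change-event envelope, whose `op` field is `c` (create), `u` (update), `d` (delete), or `r` (snapshot read) and whose `before` and `after` fields carry the row images. The optional `payload` wrapper handling, the `id` primary key, and the sample messages are assumptions, and the Kafka consumer that would supply the messages is omitted.

```python
import json
from typing import Any, Optional

def apply_change_event(target: dict[Any, dict], message: Optional[str], key_field: str = "id") -> None:
    """Apply one Debezium-style change event to an in-memory target table keyed by primary key."""
    if not message:
        return  # tombstone (null) records carry no row data, so there is nothing to apply
    event = json.loads(message)
    payload = event.get("payload", event)  # with schemas enabled, the envelope sits under "payload"
    op = payload["op"]
    if op in ("c", "r", "u"):
        row = payload["after"]  # create, snapshot read, or update: upsert the after-image
        target[row[key_field]] = row
    elif op == "d":
        target.pop(payload["before"][key_field], None)  # delete: use the before-image's key
    else:
        raise ValueError(f"unsupported op {op!r}")

messages = [
    '{"payload": {"op": "c", "before": null, "after": {"id": 1, "price": 10.0}}}',
    '{"payload": {"op": "u", "before": {"id": 1, "price": 10.0}, "after": {"id": 1, "price": 12.5}}}',
    '{"payload": {"op": "c", "before": null, "after": {"id": 2, "price": 3.0}}}',
    '{"payload": {"op": "d", "before": {"id": 2, "price": 3.0}, "after": null}}',
    None,  # tombstone that follows a delete
]
target: dict[Any, dict] = {}
for message in messages:
    apply_change_event(target, message)
print(target)  # {1: {'id': 1, 'price': 12.5}}
```

Because each event upserts or removes a row by key, re-applying the same ordered sequence of events (for example, after a consumer restarts from an earlier offset) converges to the same target state.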

Trigger-Based CDC

Trigger-based CDC utilizes database triggers to capture changes. Triggers are database objects that automatically execute a predefined set of actions in response to specific events on a table, such as inserts, updates, or deletes.

Here’s how trigger-based CDC works:

- Trigger Creation: Database triggers are created on the source tables. These triggers are associated with specific events (e.g., `AFTER INSERT`, `AFTER UPDATE`, `AFTER DELETE`).

- Change Capture: When a change occurs on a source table, the associated trigger is activated. The trigger captures the changed data and stores it in a separate change data capture table.

- Change Extraction and Application: A CDC process reads the change data from the change data capture table and applies it to the target data store.

Trigger-based CDC has the following advantages:

- Simplicity of Implementation: Trigger-based CDC can be relatively easy to implement, especially for simpler scenarios.

- Fine-grained Control: Triggers allow for fine-grained control over which changes are captured and how they are captured.

- Immediate Change Detection: Changes are detected immediately when the triggers are activated.

However, trigger-based CDC also has several disadvantages:

- Performance Impact on Source Database: Triggers add overhead to the database operations, which can impact performance, especially for high-volume transactions.

- Increased Complexity in Data Management: Managing triggers and the associated change data capture tables can become complex, particularly in large and complex database environments.

- Risk of Data Integrity Issues: Improperly designed triggers can lead to data integrity issues.

An example of trigger-based CDC is creating triggers in a MySQL database to capture changes. The triggers would write the changed data to a separate “audit” or “changelog” table. This changelog table then serves as the source for replicating changes to another database or data warehouse.

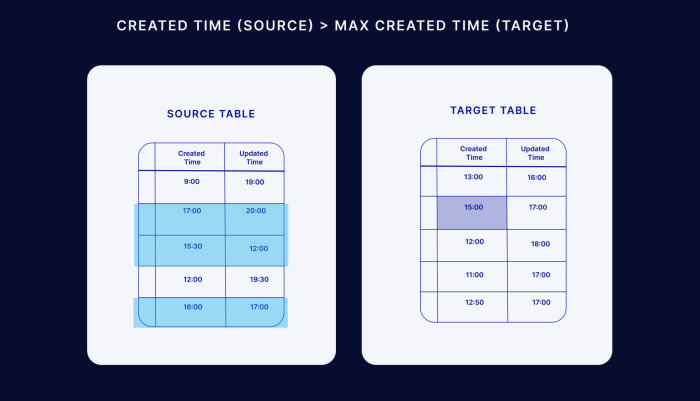
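The changelog pattern described above can be tried out with Python's built-in `sqlite3` module. In this minimal sketch SQLite stands in for MySQL (MySQL's trigger syntax differs slightly, for example `FOR EACH ROW`), and the `products` and `products_changelog` tables, the trigger names, and the `sync_from_changelog` helper are hypothetical. The triggers write each insert, update, and delete into the changelog within the same transaction as the source change, and the reader applies changelog rows in order, remembering the last `change_id` it processed.

```python
import sqlite3

SCHEMA = """
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL);
CREATE TABLE products_changelog (
    change_id  INTEGER PRIMARY KEY AUTOINCREMENT,  -- increasing position in the changelog
    op         TEXT NOT NULL,                      -- 'I', 'U' or 'D'
    product_id INTEGER NOT NULL,
    name       TEXT,
    price      REAL
);
CREATE TRIGGER products_ai AFTER INSERT ON products BEGIN
    INSERT INTO products_changelog (op, product_id, name, price) VALUES ('I', NEW.id, NEW.name, NEW.price);
END;
CREATE TRIGGER products_au AFTER UPDATE ON products BEGIN
    INSERT INTO products_changelog (op, product_id, name, price) VALUES ('U', NEW.id, NEW.name, NEW.price);
END;
CREATE TRIGGER products_ad AFTER DELETE ON products BEGIN
    INSERT INTO products_changelog (op, product_id, name, price) VALUES ('D', OLD.id, OLD.name, OLD.price);
END;
"""

def sync_from_changelog(conn: sqlite3.Connection, target: dict, last_change_id: int) -> int:
    """Apply changelog rows newer than last_change_id to target and return the new position."""
    rows = conn.execute(
        "SELECT change_id, op, product_id, name, price FROM products_changelog "
        "WHERE change_id > ? ORDER BY change_id",
        (last_change_id,),
    ).fetchall()
    for change_id, op, product_id, name, price in rows:
        if op == "D":
            target.pop(product_id, None)
        else:  # 'I' or 'U': upsert the current values
            target[product_id] = {"name": name, "price": price}
        last_change_id = change_id
    return last_change_id

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO products (id, name, price) VALUES (1, 'lamp', 20.0)")
conn.execute("INSERT INTO products (id, name, price) VALUES (2, 'desk', 150.0)")
conn.execute("UPDATE products SET price = 18.0 WHERE id = 1")
conn.execute("DELETE FROM products WHERE id = 2")
conn.commit()

replica: dict = {}
position = sync_from_changelog(conn, replica, 0)
print(replica, position)  # {1: {'name': 'lamp', 'price': 18.0}} 4
```

In a real deployment the reader would persist its position durably and periodically purge processed changelog rows so the table does not grow without bound.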

Timestamp-Based CDC

Timestamp-based CDC uses timestamps to identify and capture changes. A timestamp column is added to the source table, and the CDC process periodically queries the table for rows with timestamps newer than the last processed timestamp.

The general process of timestamp-based CDC includes:

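- Timestamp Column: A timestamp column (e.g., `last_updated_at`) is added to the source table. This column must be set whenever a row is inserted or updated, either by the application or by the database itself (for example, with MySQL’s `ON UPDATE CURRENT_TIMESTAMP` column attribute).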

- Periodic Polling: A CDC process periodically queries the source table, selecting rows where the timestamp column is greater than the last processed timestamp.

- Change Extraction and Application: The CDC process extracts the changes and applies them to the target data store.

Timestamp-based CDC offers certain advantages:

- Simplicity of Implementation: Timestamp-based CDC is generally simpler to implement than log-based or trigger-based CDC.

- Reduced Performance Impact: It can have a lower performance impact on the source database compared to trigger-based CDC.

However, it also presents certain drawbacks:

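- Potential for Missed Changes: Changes are not picked up until the next poll, so replication lags by up to one polling interval. If a row is updated several times between polls, only its final state is captured, and a row committed with a timestamp older than the last processed timestamp (for example, by a long-running transaction) can be skipped entirely.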

- Inefficiency for Frequent Updates: Every poll runs a query against the source table. Without an index on the timestamp column, each poll can require a full table scan, and on frequently updated tables each poll returns many rows.

- Difficulty with Deleted Records: Timestamp-based CDC does not naturally handle deleted records. Additional logic is needed to track and replicate deletions.

An example of timestamp-based CDC could involve a batch process that runs every hour. The process queries a table for records where the `last_updated_at` column is more recent than the last processed timestamp. These changes are then replicated to a data warehouse. This method is appropriate when near real-time data is not critical and a delay of up to one polling interval (here, one hour) is acceptable.

| Feature | Log-Based | Trigger-Based | Timestamp-Based |
|---|---|---|---|
| Change Capture Mechanism | Database transaction logs | Database triggers | Timestamp column |
| Impact on Source Database | Low | Medium to High | Low to Medium |
| Real-time Data Replication | Yes, near real-time | Yes, near real-time | No, based on polling interval |
| Complexity | High | Medium | Low |
| Data Loss Risk | Potential if logs are not managed properly | Low, if triggers are implemented correctly | Deletes and intermediate updates between polls can be missed |
| Handling of Deleted Records | Handles deletions effectively | Can handle deletions with additional triggers | Requires additional logic to track deletions |

The selection of the most suitable CDC method is dependent on the specific requirements of the data integration project, considering factors such as data volume, the need for real-time replication, and the performance characteristics of the source database.

CDC Implementation Architectures

Implementing Change Data Capture (CDC) effectively involves choosing the right architecture to meet specific data integration needs. The architecture selected significantly impacts performance, scalability, and the overall complexity of the data pipeline. Several architectural patterns have emerged as common approaches, each with its strengths and weaknesses depending on the data sources, target systems, and the required level of real-time data synchronization.

Common CDC Architectures

Various architectures are employed for CDC implementation, each tailored to different use cases and system requirements. Understanding these architectures helps in selecting the most appropriate one for a given scenario.

- Log-Based CDC: This architecture directly reads transaction logs from the source database. It is a popular approach due to its efficiency and minimal impact on the source system. It is often implemented using tools like Debezium, which monitors database transaction logs (e.g., the MySQL binlog or the PostgreSQL write-ahead log) and streams the changes to a message broker like Kafka.

The advantages include real-time data capture, low latency, and minimal overhead on the source database. A significant disadvantage is the dependency on the availability and format of the database transaction logs, which can vary.

- Trigger-Based CDC: In this approach, database triggers are created to capture changes. When data in the source database is modified (insert, update, or delete), the triggers fire, capturing the changed data and writing it to a separate change log or staging area. This method is relatively easy to implement, especially in environments where database trigger capabilities are readily available. However, trigger-based CDC can introduce performance overhead on the source database, as the triggers execute within the same transaction as the data modifications.

This can be a significant concern in high-volume transactional systems.

- Polling-Based CDC: This architecture involves periodically querying the source database to identify changes. It typically compares timestamps or version numbers to detect modifications. Polling-based CDC is simpler to implement than log-based or trigger-based methods, requiring no database features beyond a reliable timestamp or version column. However, it often has higher latency and consumes more resources on the source database because it involves repeated queries. The frequency of polling determines the latency of data capture.

This method is suitable for situations where real-time updates are not critical and the polling frequency can be tuned to keep the query load on the source system acceptable.

- Hybrid CDC: A hybrid architecture combines multiple CDC methods to leverage the benefits of each. For example, it might use log-based CDC for critical, high-volume tables and trigger-based CDC for less frequently updated data. This approach allows for optimizing performance and minimizing the impact on the source system. Hybrid approaches often involve complex configurations and require careful planning to manage data consistency across different CDC mechanisms.

The Role of Data Pipelines in CDC-Based Data Integration

Data pipelines are the backbone of CDC-based data integration, orchestrating the movement, transformation, and loading of data from source systems to target systems. They ensure that data changes are captured, processed, and delivered reliably and efficiently. Data pipelines provide the necessary infrastructure to handle the complexities of CDC, including data capture, transformation, and delivery.

- Data Ingestion: The initial stage of the pipeline involves ingesting data changes from the source systems. CDC tools, such as Debezium or custom CDC implementations, capture these changes and make them available for processing.

- Data Transformation: Once the data changes are captured, they are often transformed to match the schema and format of the target system. This can involve cleaning, filtering, aggregating, and enriching the data. Tools like Apache Kafka Streams, Apache Spark, or cloud-based data integration services are commonly used for this purpose.

- Data Loading: The transformed data is then loaded into the target system, which could be a data warehouse, a data lake, or another database. The loading process needs to handle various aspects such as schema evolution, data consistency, and error handling.

- Orchestration and Monitoring: Data pipelines require robust orchestration and monitoring capabilities. Orchestration tools manage the execution of the pipeline tasks, ensuring they run in the correct order and handle dependencies. Monitoring tools track the performance and health of the pipeline, alerting operators to any issues that may arise.
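The ingestion, transformation, and loading stages can be illustrated with a small chain of Python generators. This is a sketch, not a production pipeline: the event shape (`table`, `op`, `key`, `row`), the `ssn` column being removed, and the dead-letter list are all hypothetical stand-ins, the `source_events` list plays the role of the CDC tool's output, and orchestration is reduced to calling the stages in order.

```python
from typing import Any, Iterable, Iterator

Event = dict[str, Any]

def transform(events: Iterable[Event], tables: set[str]) -> Iterator[Event]:
    """Transformation stage: keep configured tables and drop a sensitive column."""
    for event in events:
        if event["table"] not in tables:
            continue  # filter out tables the target does not need
        row = dict(event.get("row") or {})
        row.pop("ssn", None)  # hypothetical PII column removed before loading
        yield {**event, "row": row}

def load(events: Iterable[Event], target: dict, dead_letters: list[Event]) -> int:
    """Loading stage: upsert or delete by (table, key); park malformed events instead of failing."""
    loaded = 0
    for event in events:
        try:
            key = (event["table"], event["key"])
            if event["op"] == "delete":
                target.pop(key, None)
            else:
                target[key] = event["row"]
            loaded += 1
        except KeyError:
            dead_letters.append(event)  # error handling: keep the bad event for inspection
    return loaded

# Ingestion stage stand-in: in practice these events would come from a CDC tool.
source_events: list[Event] = [
    {"table": "customers", "op": "upsert", "key": 1, "row": {"name": "Ada", "ssn": "000-00-0000"}},
    {"table": "audit_log", "op": "upsert", "key": 9, "row": {"msg": "not replicated"}},
    {"table": "customers", "op": "upsert", "row": {"name": "missing key"}},
    {"table": "customers", "op": "delete", "key": 2, "row": None},
]
target: dict = {}
dead_letters: list[Event] = []
count = load(transform(source_events, {"customers"}), target, dead_letters)
print(count, target, len(dead_letters))  # 2 {('customers', 1): {'name': 'Ada'}} 1
```

Routing malformed events to a dead-letter list instead of raising keeps one bad record from halting the pipeline; the loaded count and the size of the dead-letter list are the kind of metrics a monitoring tool would track.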

Basic CDC Architecture Diagram

A basic CDC architecture diagram illustrates the key components and the flow of data in a CDC-based data integration system.

Diagram Description:

The diagram illustrates a straightforward CDC architecture, involving a source database, a CDC component, and a target system. The source database, depicted as a database icon, is the origin of the data. The CDC component, often a software tool or a service, sits between the source database and the target system. The CDC component is connected to the source database, monitoring its transaction logs (if using a log-based approach) or using other methods to capture data changes.

These changes are then transmitted to the target system, which could be a data warehouse, a data lake, or another database, also depicted as a database icon. The flow of data is represented by arrows, indicating the path from the source database, through the CDC component, and into the target system.

Data Sources Suitable for CDC

Change Data Capture (CDC) is a powerful technique for tracking and propagating data changes across various systems. The suitability of a data source for CDC depends on factors like its ability to log changes, the volume of data, and the performance impact of capturing those changes. Understanding which data sources are best suited for CDC is crucial for designing efficient and reliable data integration pipelines.

CDC is particularly beneficial when dealing with data sources that undergo frequent updates and require near real-time synchronization with other systems.

This allows for efficient data replication, minimizes resource consumption, and reduces the latency of data availability across different platforms.

Common Data Sources for CDC

Several types of data sources are ideally suited for CDC implementation. These sources typically offer robust change tracking mechanisms, making it easier to identify and capture data modifications.

- Relational Databases: Relational databases are a primary focus for CDC due to their structured nature and built-in logging capabilities.

- Example: A retail company uses a MySQL database to manage its product catalog. When a product’s price changes, CDC captures this update and propagates it to a data warehouse for analysis and a customer-facing application to reflect the new price. This is a common scenario where CDC ensures data consistency across various systems.

- NoSQL Databases: NoSQL databases, with their flexible data models, are increasingly used for CDC, especially when dealing with high-volume, rapidly changing data.

- Example: A social media platform uses a MongoDB database to store user profiles and activity. CDC monitors changes to user profiles (e.g., profile updates, new connections) and replicates these changes to a search index (like Elasticsearch) for real-time search functionality.

- Data Warehouses: Data warehouses can be both a source and a target for CDC. CDC can be used to capture changes from source systems and load them into the data warehouse.

- Example: An e-commerce company uses a data warehouse built on Snowflake. CDC is employed to capture changes from its transactional systems (e.g., order processing, customer interactions) and load them into the data warehouse for business intelligence reporting and analysis. This ensures that the data warehouse is always up-to-date with the latest transactional information.

- Message Queues: Message queues are used for CDC when changes are published as messages.

- Example: A financial institution uses Kafka as a message queue. Whenever a stock trade occurs, the details are published as a message. CDC can consume these messages and replicate the trade data to other systems for real-time risk management and market data analysis.

- Cloud Storage: Cloud storage services can be used as a data source when dealing with large datasets or when integrating data from various sources.

- Example: A healthcare provider stores patient records in Amazon S3. CDC can be used to track changes to these records (e.g., new patient data, updates to medical history) and replicate them to a data analytics platform for research and reporting purposes.

Applying CDC to Different Database Systems

The implementation of CDC varies slightly depending on the specific database system. However, the underlying principles remain the same: identifying, capturing, and propagating data changes.

- MySQL: MySQL supports CDC through its binary log, which records all data modifications. Tools like Debezium and Maxwell can read the binary log and stream changes to other systems.

- Example: A gaming company uses MySQL to store player statistics. CDC, using Debezium, captures changes in player scores and replicates them to a leaderboards service, allowing players to see real-time updates.

- PostgreSQL: PostgreSQL provides CDC capabilities through its logical replication feature. This allows for streaming changes to other databases or systems.

- Example: A logistics company uses PostgreSQL to manage its inventory and shipment tracking. CDC captures updates to shipment statuses (e.g., “in transit,” “delivered”) and replicates them to a dashboard for real-time monitoring by customers and internal teams.

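- SQL Server: SQL Server has built-in CDC features that can be enabled per database and per table (using the `sys.sp_cdc_enable_db` and `sys.sp_cdc_enable_table` stored procedures). Changes are read from the transaction log and written to change tables in the `cdc` schema of the same database.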

- Example: A banking institution uses SQL Server to store customer transaction data. CDC captures changes to transaction records (e.g., deposits, withdrawals) and replicates them to a fraud detection system for real-time monitoring and anomaly detection.

- MongoDB: MongoDB’s change streams feature allows applications to stream changes from a collection, a database, or an entire deployment. Change streams require a replica set or sharded cluster and suit applications that need to react to data changes in real time.

- Example: An online marketplace uses MongoDB to manage product listings. CDC, leveraging change streams, captures changes to product details (e.g., price, availability) and updates the search index to ensure the latest information is readily available to users.

Target Systems for CDC Data

The effectiveness of Change Data Capture (CDC) hinges not only on its ability to capture changes but also on the destination where this captured data is delivered. The choice of target system significantly impacts the usability, accessibility, and ultimately, the value derived from the CDC implementation. Various target systems can receive data captured by CDC, each with its own strengths and weaknesses, making the selection process a critical step in the overall data integration strategy.

Data Warehouses

Data warehouses are a common destination for CDC data. They are designed to store large volumes of historical data, enabling robust reporting and analytics. CDC data feeds into the data warehouse provide an up-to-date and complete view of the business, allowing for timely insights and informed decision-making.

Data warehouses are suitable for CDC data because:

- Historical Analysis: They are optimized for storing and querying historical data, which is essential for trend analysis, performance tracking, and identifying patterns over time.

- Data Integration: Data warehouses typically integrate data from multiple sources. CDC facilitates this by providing a stream of changes that can be easily incorporated alongside other data sets.

- Reporting and Business Intelligence: They support sophisticated reporting and business intelligence (BI) tools, enabling users to create dashboards, reports, and visualizations based on the latest data.

- Data Consistency: Data warehouse load processes typically apply data quality checks and transformations to keep data consistent and accurate, making warehouses reliable sources for business insights.

For example, consider a retail company using CDC to capture changes in its transactional database. The CDC data, representing sales, inventory updates, and customer information, is then loaded into a data warehouse. This enables the company to track sales trends, manage inventory levels, and personalize customer experiences using data that is only minutes old.

Data Lakes

Data lakes offer a flexible and scalable storage solution for CDC data, particularly when dealing with diverse data types and large volumes. They are designed to store raw data in its native format, providing a centralized repository for all data assets. This approach is particularly beneficial for organizations that want to leverage CDC data for advanced analytics, machine learning, and data exploration.

Data lakes are suitable for CDC data because:

- Scalability: They can easily scale to accommodate growing data volumes, making them ideal for handling the continuous stream of data generated by CDC.

- Data Variety: They support a wide range of data formats, including structured, semi-structured, and unstructured data, allowing organizations to store and analyze diverse data types.

- Flexibility: They offer flexibility in data processing and analysis. Data can be transformed and analyzed as needed, supporting a variety of use cases.

- Cost-Effectiveness: They often provide cost-effective storage options, particularly for large volumes of data.

A telecommunications company might use CDC to capture changes from its billing system and customer relationship management (CRM) system. The raw CDC data is then ingested into a data lake, allowing data scientists to perform advanced analytics, such as fraud detection and customer churn prediction, using machine learning algorithms.

Operational Databases

Operational databases can be targeted for CDC data when real-time data synchronization is critical. This approach allows organizations to keep operational systems up-to-date with the latest changes, ensuring data consistency across different applications.

Operational databases are suitable for CDC data because:

- Real-time Updates: CDC enables near real-time synchronization of data, ensuring that operational systems reflect the most current information.

- Data Consistency: It helps maintain data consistency across different systems by propagating changes quickly.

- Reduced Latency: By minimizing the time it takes to propagate changes, CDC reduces latency and improves system responsiveness.

- Operational Efficiency: CDC can automate data replication processes, improving operational efficiency and reducing manual intervention.

For example, a financial institution might use CDC to synchronize transaction data between its core banking system and its fraud detection system. This ensures that the fraud detection system has the latest information to identify and prevent fraudulent activities in real-time.

Other Destinations

Besides data warehouses, data lakes, and operational databases, CDC data can be directed to various other destinations based on specific business needs. These destinations may include:

- Message Queues: Systems like Apache Kafka can be used to stream CDC data to various consumers, enabling real-time data integration and event-driven architectures.

- Search Engines: Tools like Elasticsearch can index CDC data, allowing for efficient search and retrieval of data changes.

- Data Streaming Platforms: Platforms like Apache Flink or Apache Spark Streaming can process CDC data in real-time, enabling real-time analytics and data transformations.

- Cloud Storage: Cloud storage services like Amazon S3 or Google Cloud Storage can store CDC data for archival purposes or for further processing.

For example, a logistics company might use CDC to capture changes in its order management system and stream the data to a message queue. This allows the company to trigger real-time updates to its delivery tracking system and notify customers about the status of their orders.

Considerations for Choosing a Target System

Choosing the right target system for CDC data depends on several factors, including business requirements, data volume, data velocity, and the need for data transformation.

Key considerations include:

- Business Requirements: What are the primary goals for using CDC data? (e.g., reporting, analytics, real-time synchronization).

- Data Volume and Velocity: How much data is being generated, and how quickly does it need to be processed?

- Data Transformation Needs: Does the data need to be transformed or cleansed before being used?

- Data Quality Requirements: What level of data accuracy and consistency is required?

- Scalability and Performance: How well does the target system scale to handle increasing data volumes and processing demands?

- Cost: What is the total cost of ownership, including storage, processing, and maintenance?

- Existing Infrastructure: What infrastructure is already in place, and how well does the target system integrate with it?

For instance, a financial institution prioritizing real-time fraud detection would likely choose an operational database or a message queue, while a retail company focusing on long-term sales analysis might opt for a data warehouse or data lake. Carefully evaluating these factors will help organizations select the target system that best aligns with their specific needs and ensures the successful implementation of their CDC strategy.

Benefits of Using CDC for Data Integration

Change Data Capture (CDC) offers significant advantages for data integration, revolutionizing how organizations manage and utilize their data. By capturing and processing only the changes made to data, CDC provides a more efficient and effective approach compared to traditional methods. This section delves into the key benefits of employing CDC for data integration, highlighting its advantages in terms of data latency, cost savings, and overall operational efficiency.

Advantages of CDC over Batch Processing

Batch processing, a traditional method of data integration, involves periodically extracting, transforming, and loading (ETL) large datasets. CDC, on the other hand, focuses on capturing and processing only the changed data, leading to several key advantages.

- Reduced Data Latency: CDC processes data changes in near real-time, significantly reducing data latency compared to batch processing, which typically operates on a schedule (e.g., daily, hourly). This near real-time capability enables quicker decision-making and faster responses to changing business conditions.

- Lower Resource Consumption: Batch processing requires significant resources for processing large datasets, especially during peak load times. CDC, by processing only changes, consumes far fewer resources, reducing the load on source systems and the data integration infrastructure.

- Improved Scalability: CDC solutions are often more scalable than batch processes. As data volumes grow, CDC can adapt more easily because it processes only the incremental changes, whereas full-load batch processes must reprocess the entire dataset on every run.

- Enhanced Operational Efficiency: CDC minimizes the time window required for data integration. Batch processes often involve lengthy ETL cycles, potentially impacting system availability. CDC streamlines this process, leading to improved operational efficiency and reduced downtime.

- Simplified Data Lineage: CDC provides a clearer view of data lineage, as it tracks the changes made to the data over time. This is more straightforward than batch processing, where tracking the transformation of the entire dataset can be complex.
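The following minimal Python sketch makes the batch-versus-incremental contrast concrete using the standard-library `sqlite3` module. It assumes a hypothetical `orders` table whose application maintains an `updated_at` column. It uses timestamp-based change detection, the simplest CDC method, rather than log-based capture.

```python
import sqlite3

# Hypothetical source table; the application is assumed to set updated_at on every write.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)")
conn.executemany(
    "INSERT INTO orders (id, status, updated_at) VALUES (?, ?, ?)",
    [(1, "new", 100), (2, "new", 105), (3, "shipped", 110)],
)


def full_extract(conn):
    """Batch style: read every row on every run, changed or not."""
    return conn.execute("SELECT id, status, updated_at FROM orders ORDER BY id").fetchall()


def incremental_extract(conn, watermark):
    """Timestamp-based CDC: read only rows modified after the last watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at, id",
        (watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it unchanged if nothing changed.
    new_watermark = max((row[2] for row in rows), default=watermark)
    return rows, new_watermark


changes, wm = incremental_extract(conn, 0)      # first run: all three rows, wm = 110
conn.execute("UPDATE orders SET status = 'shipped', updated_at = 120 WHERE id = 1")
changes2, wm2 = incremental_extract(conn, wm)   # second run: only order 1
print(len(full_extract(conn)), changes2, wm2)   # 3 [(1, 'shipped', 120)] 120
```

Timestamp-based detection cannot see hard deletes. It can also miss rows committed late with an older timestamp. These are among the reasons log-based CDC is usually preferred in production.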

Impact of CDC on Data Latency and Real-Time Data Availability

One of the most significant benefits of CDC is its impact on data latency and the availability of real-time data. By capturing and processing data changes as they occur, CDC lets organizations work with up-to-date information without waiting for the next scheduled batch run.

- Near Real-Time Data Availability: CDC systems can deliver data updates within seconds or minutes of the change occurring in the source system. This enables real-time analytics, real-time dashboards, and immediate responses to business events.

- Reduced Time-to-Insight: The reduced data latency provided by CDC accelerates the time it takes to gain insights from data. This allows businesses to make faster, more informed decisions based on the most current information available.

- Improved Responsiveness to Events: In scenarios such as fraud detection or customer service, CDC enables organizations to react quickly to changing circumstances. For example, real-time fraud detection systems can identify and respond to suspicious transactions immediately, minimizing financial losses.

- Enhanced Customer Experience: Real-time data availability through CDC can improve customer experience. For instance, in e-commerce, CDC can enable real-time inventory updates, ensuring customers see accurate product availability.

Cost Savings and Efficiency Gains Achieved Through CDC

Implementing CDC can result in significant cost savings and efficiency gains for organizations. These benefits arise from reduced resource consumption, improved operational efficiency, and enhanced data utilization.

- Reduced Infrastructure Costs: By processing only data changes, CDC minimizes the need for large-scale data processing infrastructure, which reduces hardware, software, and operational costs.

- Lower Storage Costs: CDC helps reduce storage costs by avoiding the need to store entire datasets for each integration cycle. Only the changed data needs to be stored and processed.

- Increased Developer Productivity: CDC solutions often simplify the data integration process, reducing the time and effort required for developers to build and maintain data pipelines. This leads to increased developer productivity and faster time-to-market for new data-driven applications.

- Improved Data Quality: CDC can help improve data quality by ensuring that data changes are captured and integrated accurately and consistently. This leads to more reliable data and better decision-making.

- Optimized Resource Utilization: CDC optimizes the utilization of computing resources, leading to more efficient data integration operations. For example, a company that uses CDC can reduce the processing time for its daily sales reports, allowing for more efficient use of its computing infrastructure.

Challenges and Considerations in CDC Implementation

Implementing Change Data Capture (CDC) offers significant advantages for data integration, but it also introduces several challenges that must be carefully considered and addressed. Successful CDC implementation requires a thorough understanding of these potential pitfalls and the development of appropriate strategies to mitigate them. Addressing these challenges proactively ensures the reliability, performance, and data integrity of the CDC-enabled data pipelines.

Performance Overhead

One of the primary challenges in CDC implementation is the potential for performance overhead on the source systems. Capturing and tracking changes consumes resources, which can impact the performance of the source database and applications. To mitigate this, several strategies can be employed:

- Minimizing Impact on Source Systems: CDC implementations should be designed to have minimal impact on the source database. This involves careful selection of CDC methods (e.g., log-based CDC is generally less intrusive than trigger-based CDC), efficient change capture processes, and optimized data transfer mechanisms.

- Resource Allocation: Adequate resources, such as CPU, memory, and I/O, should be allocated to the CDC processes. Monitoring the performance of both the source system and the CDC processes is crucial to identify and address bottlenecks.

- Incremental Processing: Processing changes in smaller, incremental batches rather than large, infrequent batches can help to distribute the load and reduce the impact on the source system.

- Data Filtering and Transformation: Filtering out unnecessary tables, columns, or rows as early as possible reduces the volume of data captured and processed, improving performance. Similarly, performing lightweight transformations early in the pipeline can reduce downstream processing, provided they do not add significant load to the source database.

- Hardware Optimization: Investing in faster hardware, such as high-performance storage and powerful processors, can help alleviate performance bottlenecks.
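To show where trigger-based overhead comes from, here is a small sketch using SQLite triggers through Python's `sqlite3` module. The `customers` and `customers_changes` tables are hypothetical. Every write to the source table causes a second write to the change table inside the same statement. Log-based CDC avoids that extra write by reading the database's transaction log instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Hypothetical change table filled by triggers; each source write adds one row here.
CREATE TABLE customers_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT NOT NULL,
    id INTEGER NOT NULL,
    email TEXT
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('c', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('u', NEW.id, NEW.email);
END;
CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
    INSERT INTO customers_changes (op, id, email) VALUES ('d', OLD.id, NULL);
END;
""")

conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

# A CDC reader would poll this table in change_id order and forward the rows downstream.
changes = conn.execute("SELECT op, id, email FROM customers_changes ORDER BY change_id").fetchall()
print(changes)  # [('c', 1, 'a@example.com'), ('u', 1, 'b@example.com'), ('d', 1, None)]
```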

Schema Changes Management

Schema changes in source systems pose a significant challenge to CDC processes. Changes to table structures, data types, or the addition/removal of columns can break the CDC pipelines and lead to data inconsistencies if not managed correctly. Addressing schema evolution requires a robust strategy that includes:

- Schema Versioning: Implementing schema versioning allows for tracking changes over time. This enables the CDC process to understand the schema at the point in time the change occurred, which is essential for data reconstruction in the target system.

- Schema Registry: A schema registry acts as a central repository for schema definitions. CDC processes can consult the registry to understand the schema of the source data and adapt to changes. Confluent Schema Registry, commonly used alongside Apache Kafka, is a popular example.

- Backward Compatibility: Designing schema changes to be backward-compatible ensures that existing CDC processes can continue to function without interruption. This might involve adding new columns with default values or avoiding the removal of existing columns.

- Forward Compatibility: Implementing forward compatibility allows the target system to handle changes in the source schema. This could involve ignoring new columns or handling changes in data types.

- Automated Schema Propagation: Automating the propagation of schema changes to the target systems ensures that the target systems are always aligned with the source schema. This can be achieved through tools that detect schema changes and automatically update the target systems.

- Data Migration Strategies: When breaking changes are unavoidable, data migration strategies are necessary. This might involve creating new tables in the target system with the updated schema and migrating the data.
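The compatibility rules above can be sketched as a "tolerant reader" on the consuming side. This is a minimal illustration, assuming a hypothetical target schema held in `TARGET_COLUMNS` and a hand-maintained rename map. A schema registry with formats such as Avro would normally enforce these rules for you. This sketch applies them by hand.

```python
from typing import Any, Dict, Optional

# Hypothetical target schema: column name -> default for records that lack the column.
TARGET_COLUMNS: Dict[str, Any] = {"id": None, "name": None, "country": "unknown"}


def conform(record: Dict[str, Any], renames: Optional[Dict[str, str]] = None) -> Dict[str, Any]:
    """Map a source change record onto the current target schema.

    - Renamed source columns are translated via `renames` (old name -> target name).
    - Columns the target does not know about are dropped (forward compatibility).
    - Columns absent from older records receive defaults (backward compatibility).
    """
    renames = renames or {}
    translated = {renames.get(col, col): val for col, val in record.items()}
    return {col: translated.get(col, default) for col, default in TARGET_COLUMNS.items()}


old_record = {"id": 1, "name": "Ada"}                                   # written before `country` existed
new_record = {"id": 2, "name": "Lin", "country": "SG", "tier": "gold"}  # carries an extra column
renamed = {"id": 3, "full_name": "Sam"}                                 # source renamed `name`

print(conform(old_record))                              # {'id': 1, 'name': 'Ada', 'country': 'unknown'}
print(conform(new_record))                              # {'id': 2, 'name': 'Lin', 'country': 'SG'}
print(conform(renamed, renames={"full_name": "name"}))  # {'id': 3, 'name': 'Sam', 'country': 'unknown'}
```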

Data Consistency and Integrity

Maintaining data consistency and integrity during CDC processes is crucial for ensuring the reliability of the integrated data. Data loss or corruption can occur due to various factors, such as network failures, system crashes, or processing errors. Strategies to ensure data consistency and integrity include:

- Transaction Management: Ensuring that CDC processes are executed within transactions is crucial. This guarantees that changes are applied atomically, preventing partial updates and ensuring data consistency.

- Idempotent Operations: Designing CDC processes to be idempotent ensures that the same change can be applied multiple times without causing adverse effects. This is important for handling retries and dealing with potential failures.

- Error Handling and Recovery: Implementing robust error handling and recovery mechanisms is essential for dealing with failures. This might involve retrying failed operations, logging errors, and implementing data validation checks.

- Data Validation: Validating data at various stages of the CDC process, such as at the source, during transformation, and in the target system, helps to identify and prevent data corruption.

- Data Auditing: Implementing data auditing allows for tracking changes to the data and provides a mechanism for identifying and resolving data inconsistencies. This includes logging changes and capturing metadata, like timestamps and user information.

- Checkpointing: Periodically checkpointing the CDC process allows for resuming from a known good state in case of failure. This minimizes data loss and reduces the time required to recover from errors.

- Conflict Resolution: When the same record can be modified in more than one system (for example, in bidirectional or multi-source replication), conflict resolution strategies are needed to handle conflicting changes. This might involve prioritizing certain sources or using a last-write-wins approach.
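Idempotent application, checkpointing, and last-write-wins can be combined in one small sketch. It assumes that each event carries a monotonically increasing log position, such as a database LSN. `ChangeEvent` and `IdempotentApplier` are hypothetical names, and in-memory dicts stand in for the target table. In a real pipeline, the target rows, the per-key positions, and the checkpoint would be committed together in one target transaction, so they cannot disagree after a crash.

```python
import json
import os
import tempfile
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    position: int          # monotonically increasing log position (assumed, e.g. an LSN)
    key: int               # primary key of the changed row
    op: str                # "c" (insert), "u" (update) or "d" (delete)
    row: Optional[dict]    # row image after the change; None for deletes


class IdempotentApplier:
    """Applies change events so that redelivered or stale events have no further effect."""

    def __init__(self, checkpoint_path: str):
        self.checkpoint_path = checkpoint_path
        self.target: Dict[int, dict] = {}           # stands in for the target table
        self.applied_position: Dict[int, int] = {}  # last log position applied per key
        self.checkpoint = self._load_checkpoint()   # where to resume reading after a restart

    def _load_checkpoint(self) -> int:
        try:
            with open(self.checkpoint_path) as f:
                return json.load(f)["position"]
        except FileNotFoundError:
            return -1

    def _save_checkpoint(self, position: int) -> None:
        # Write then rename, so a crash never leaves a half-written checkpoint file.
        tmp_path = self.checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"position": position}, f)
        os.replace(tmp_path, self.checkpoint_path)
        self.checkpoint = position

    def apply(self, event: ChangeEvent) -> bool:
        # Duplicate or older than what is already applied for this key: skip it.
        if event.position <= self.applied_position.get(event.key, -1):
            return False
        if event.op == "d":
            self.target.pop(event.key, None)
        else:
            self.target[event.key] = event.row      # upsert: inserts and updates behave the same
        self.applied_position[event.key] = event.position
        return True

    def apply_batch(self, events: List[ChangeEvent]) -> None:
        for event in events:
            self.apply(event)
        # Only move the checkpoint forward, never backward on a replayed batch.
        if events:
            newest = max(e.position for e in events)
            if newest > self.checkpoint:
                self._save_checkpoint(newest)


events = [
    ChangeEvent(1, 10, "c", {"id": 10, "qty": 5}),
    ChangeEvent(2, 10, "u", {"id": 10, "qty": 7}),
    ChangeEvent(3, 11, "c", {"id": 11, "qty": 1}),
]
path = os.path.join(tempfile.mkdtemp(), "cdc.checkpoint")
applier = IdempotentApplier(path)
applier.apply_batch(events)
applier.apply_batch(events[:2])            # simulated redelivery after a retry: no effect
print(applier.target)                      # {10: {'id': 10, 'qty': 7}, 11: {'id': 11, 'qty': 1}}
print(IdempotentApplier(path).checkpoint)  # 3, as reloaded by a restarted process
```

An event is applied only when its position is newer than the last one applied for the same key. As a result, duplicated or out-of-order deliveries lose to newer ones, which is a simple last-write-wins rule keyed on log position. Multi-source replication would instead need a version or timestamp that is comparable across sources.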

CDC Tools and Technologies

Choosing the right tools and technologies is crucial for a successful Change Data Capture (CDC) implementation. The market offers a variety of solutions, each with its strengths and weaknesses. Understanding the available options and their capabilities allows organizations to select the tool that best aligns with their specific data integration needs, infrastructure, and budget.

Popular CDC Tools and Technologies

The following list highlights some of the most popular and widely used CDC tools and technologies available today. Each tool offers a unique set of features and capabilities designed to address various data integration requirements.

- Debezium: An open-source distributed platform for change data capture. It monitors databases and streams changes to Apache Kafka.

- Apache Kafka Connect: A framework for connecting Kafka to external systems. It offers pre-built connectors for various data sources and targets, including CDC functionalities.

- Qlik Replicate: A commercial CDC and data replication tool known for its high performance and scalability.

- Oracle GoldenGate: A comprehensive data integration platform that provides real-time data replication and CDC capabilities, particularly for Oracle databases.

- AWS Database Migration Service (DMS): A managed service offered by Amazon Web Services (AWS) for migrating databases to AWS and replicating data between different databases.

- Microsoft SQL Server Change Data Capture: A built-in feature within Microsoft SQL Server for tracking changes made to tables.

- Striim: A real-time data integration and streaming platform that supports CDC from various data sources.

- HVR: A data replication and integration platform known for its ability to handle complex data environments.
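As a concrete illustration of Debezium running on Kafka Connect, the sketch below builds the JSON body for registering a Debezium PostgreSQL source connector through the Kafka Connect REST API (`POST /connectors`, conventionally on port 8083). The property names follow the Debezium 2.x documentation. Older 1.x releases used `database.server.name` instead of `topic.prefix`. The hostnames, credentials, and table names are placeholders, and the request is only prepared, not sent.

```python
import json
import urllib.request


def debezium_postgres_connector(name, host, dbname, user, password, tables, topic_prefix):
    """Request body that registers a Debezium PostgreSQL source connector with Kafka Connect."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "plugin.name": "pgoutput",  # PostgreSQL's built-in logical decoding output plugin
            "database.hostname": host,
            "database.port": "5432",
            "database.user": user,
            "database.password": password,
            "database.dbname": dbname,
            # Change events are written to topics named <prefix>.<schema>.<table>.
            "topic.prefix": topic_prefix,
            "table.include.list": ",".join(tables),
        },
    }


def build_register_request(connect_url, body):
    """Prepare (without sending) a POST /connectors call to the Kafka Connect REST API."""
    return urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder host, credentials and tables; never hard-code real credentials.
body = debezium_postgres_connector(
    "orders-cdc", "db.internal", "shop", "cdc_user", "change-me",
    ["public.orders", "public.customers"], topic_prefix="shop",
)
req = build_register_request("http://localhost:8083", body)
print(req.full_url, req.get_method())  # http://localhost:8083/connectors POST
# Sending requires a running Connect worker with the Debezium plugin installed:
# urllib.request.urlopen(req)
```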

Comparison of CDC Tool Features

Selecting the right CDC tool requires a thorough comparison of its features. The following table provides a side-by-side comparison of key features for some of the popular CDC tools. Note that specific feature availability and pricing may vary depending on the vendor and licensing model.

| Tool | Key Features | Data Sources Supported | Target Systems Supported |
|---|---|---|---|
| Debezium | Real-time change data capture, open-source, Kafka-based, supports various database connectors, scalable. | MySQL, PostgreSQL, SQL Server, MongoDB, Oracle, Db2, Cassandra. | Kafka, various databases, data warehouses. |
| Qlik Replicate | High-performance, real-time data replication, supports a wide range of sources and targets, automated schema management. | Oracle, SQL Server, DB2, MySQL, PostgreSQL, SAP, Salesforce, and many others. | Data warehouses (Snowflake, Amazon Redshift, Google BigQuery), databases, data lakes. |
| Oracle GoldenGate | Real-time data replication, high availability, supports a broad range of databases and platforms, robust transformation capabilities. | Oracle, SQL Server, MySQL, PostgreSQL, Db2, and others. | Oracle, SQL Server, MySQL, PostgreSQL, data warehouses, and cloud platforms. |
| AWS DMS | Managed service, easy to set up, supports various database migrations, real-time replication capabilities, cost-effective. | Oracle, SQL Server, PostgreSQL, MySQL, MariaDB, and others. | AWS services (Amazon S3, Amazon Redshift, Amazon RDS), other databases. |

Selecting the Right CDC Tool for Specific Data Integration Needs

Choosing the appropriate CDC tool involves a careful assessment of several factors. These include the data sources and target systems, the required level of performance and scalability, the complexity of data transformations, and the budget.

- Assess Data Sources and Targets: Determine which databases, applications, and platforms need to be integrated. Ensure the chosen tool supports these sources and targets. For example, if integrating data from an Oracle database to a Snowflake data warehouse, tools like Qlik Replicate or Oracle GoldenGate would be suitable, as they offer strong support for both.

- Evaluate Performance and Scalability Requirements: Consider the volume of data changes, the required latency, and the expected growth in data volume. High-performance tools like Qlik Replicate and Oracle GoldenGate are well-suited for demanding environments. For instance, a financial institution handling millions of transactions per day would require a tool capable of handling high throughput and low latency.

- Analyze Data Transformation Needs: Determine if data needs to be transformed before being loaded into the target system. Some tools offer built-in transformation capabilities. For example, Oracle GoldenGate provides robust data transformation features. If complex transformations are needed, choose a tool that offers these capabilities or integrates well with other transformation tools.

- Consider Budget and Licensing: CDC tools vary in price. Open-source tools like Debezium offer cost-effectiveness, while commercial tools like Qlik Replicate and Oracle GoldenGate provide comprehensive features and support, but at a higher cost. AWS DMS is a managed service with a pay-as-you-go pricing model, which can be advantageous for cloud-based environments.

- Evaluate Ease of Use and Management: Consider the tool’s ease of setup, configuration, and management. Managed services like AWS DMS simplify the deployment and maintenance process. Some tools require specialized expertise to configure and maintain.

- Assess Support and Community: Evaluate the availability of vendor support, documentation, and community resources. Commercial tools typically offer dedicated support. Open-source tools have active communities that can provide assistance.

CDC and Real-Time Data Integration

Change Data Capture (CDC) plays a pivotal role in enabling real-time data integration, providing a mechanism to capture and propagate data changes as they occur. This allows organizations to react swiftly to events, make informed decisions, and maintain up-to-date data across various systems.

Enabling Real-Time Data Integration with CDC

CDC facilitates real-time data integration by continuously monitoring data sources for changes. These changes, whether inserts, updates, or deletes, are captured and transformed into a stream of events. This stream is then delivered to target systems, enabling near real-time data synchronization. The key lies in the low-latency nature of CDC, allowing for minimal delay between the change in the source system and its reflection in the target systems.

This is often achieved through the use of database transaction logs, which record every data modification. CDC tools analyze these logs, extract the relevant changes, and publish them to a message queue or data pipeline.
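Downstream consumers then turn each published change into an action on the target. The sketch below applies Debezium-style change events to an in-memory table. It relies on the envelope fields Debezium documents: `before`, `after`, `op`, and `ts_ms`. The `op` codes are `c` (create), `u` (update), `d` (delete), `r` (snapshot read), and `t` (truncate). The envelope is wrapped in a `payload` field when the JSON converter includes schemas. The sample events are hand-written for illustration rather than captured from a running connector.

```python
import json
from typing import Dict, Optional


def apply_debezium_event(raw: Optional[str], table: Dict[int, dict], key_field: str = "id") -> None:
    """Apply one Debezium-style change event (a JSON string) to an in-memory table."""
    if raw is None:
        return  # tombstone record emitted after a delete, used for Kafka log compaction
    event = json.loads(raw)
    payload = event.get("payload", event)  # envelope may or may not be wrapped with a schema
    op = payload["op"]
    if op in ("c", "r", "u"):              # create, snapshot read, update: upsert the new image
        row = payload["after"]
        table[row[key_field]] = row
    elif op == "d":                        # delete: only the "before" image is populated
        table.pop(payload["before"][key_field], None)
    elif op == "t":                        # truncate: drop everything
        table.clear()
    else:
        raise ValueError(f"unexpected op {op!r}")


events = [
    '{"payload": {"op": "r", "before": null, "after": {"id": 1, "sku": "A", "qty": 3}, "ts_ms": 1}}',
    '{"op": "u", "before": {"id": 1, "sku": "A", "qty": 3}, "after": {"id": 1, "sku": "A", "qty": 2}, "ts_ms": 2}',
    '{"op": "c", "before": null, "after": {"id": 2, "sku": "B", "qty": 9}, "ts_ms": 3}',
    '{"op": "d", "before": {"id": 2, "sku": "B", "qty": 9}, "after": null, "ts_ms": 4}',
    None,
]
inventory: Dict[int, dict] = {}
for e in events:
    apply_debezium_event(e, inventory)
print(inventory)  # {1: {'id': 1, 'sku': 'A', 'qty': 2}}
```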

Real-Time Use Cases Leveraging CDC

CDC empowers a variety of real-time use cases across different industries.

- Real-Time Fraud Detection: Financial institutions employ CDC to monitor transaction data in real-time. When suspicious activities, such as unusual spending patterns or large transactions, are detected, alerts are immediately triggered, enabling rapid intervention to prevent fraud.

- Inventory Management and Supply Chain Optimization: Retailers and manufacturers utilize CDC to track inventory levels and manage supply chains in real-time. CDC captures changes in inventory data, such as stock movements or sales, and updates the relevant systems instantly. This enables better demand forecasting, optimized inventory levels, and reduced stockouts.

- Personalized Customer Experiences: E-commerce businesses leverage CDC to provide personalized recommendations and offers to customers in real-time. CDC captures customer interactions, such as browsing history, purchases, and cart additions, and updates the recommendation engine accordingly. This allows for dynamic adjustments to product suggestions and promotions, leading to increased sales and customer satisfaction.

- Real-Time Monitoring and Alerting: Operational teams use CDC to monitor system performance, application logs, and other critical data in real-time. When performance metrics deviate from expected levels or critical errors occur, alerts are immediately triggered. This enables proactive issue resolution and minimizes downtime.

- Healthcare Monitoring: Healthcare providers use CDC to monitor patient data, such as vital signs, medication adherence, and lab results, in real-time. This allows for timely intervention and improved patient outcomes. For instance, changes in a patient’s vital signs can trigger alerts for nurses, enabling prompt medical attention.

Advantages of CDC for Streaming Data Applications

CDC offers significant advantages for streaming data applications.

- Low Latency: CDC captures changes directly from the source system’s transaction logs, minimizing latency. This allows for near real-time data propagation.

- Reduced Resource Consumption: CDC operates without requiring full table scans, reducing the load on source systems and minimizing resource consumption.

- Data Integrity: Log-based CDC captures changes as they are committed, preserving the order and context of the data modifications, which helps keep target systems consistent with the source.

- Scalability: CDC solutions can be designed to scale horizontally, handling large volumes of data and accommodating growing data needs.

- Event-Driven Architecture: CDC integrates seamlessly with event-driven architectures, allowing for the creation of real-time data pipelines and applications. This architecture enhances responsiveness and adaptability to changing data requirements.
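Scaling horizontally while preserving per-record order usually means routing all changes for the same key to the same partition. Kafka's default partitioner does this by hashing the record key with murmur2. The sketch below uses MD5 from `hashlib` as a stable stand-in, so it illustrates the idea but is not Kafka's algorithm. The account keys are hypothetical.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Stable partition choice: the same key always maps to the same partition."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


def route(events, num_partitions):
    """Split an ordered change stream into partitions, preserving order within each key."""
    partitions = defaultdict(list)
    for key, change in events:
        partitions[partition_for(key, num_partitions)].append((key, change))
    return partitions


stream = [("acct-1", "balance=100"), ("acct-2", "balance=50"),
          ("acct-1", "balance=80"), ("acct-2", "balance=70"), ("acct-1", "balance=20")]
parts = route(stream, num_partitions=4)
for partition, items in sorted(parts.items()):
    print(partition, items)
```

Each partition can then be consumed by a separate worker. Ordering is guaranteed only within a key, not across keys.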

CDC Best Practices

Implementing Change Data Capture (CDC) effectively requires careful planning and execution. Adhering to best practices ensures data integrity, minimizes performance impacts, and simplifies management. This section outlines crucial strategies for designing, implementing, monitoring, and optimizing CDC solutions.

Designing CDC Solutions

Designing a robust CDC solution begins with a thorough understanding of the data landscape and business requirements. Careful consideration of data sources, target systems, and data transformation needs is paramount.

- Define Clear Objectives: Determine the specific goals of the CDC implementation. Are you aiming for real-time analytics, data warehousing, disaster recovery, or a combination of these? Clearly defined objectives will guide design decisions.

- Assess Data Sources: Evaluate the characteristics of each data source. Consider the data volume, change frequency, data structure, and the source’s capabilities for change detection (e.g., transaction logs, triggers).

- Choose Appropriate CDC Methods: Select the most suitable CDC method based on the data source and requirements. Consider log-based CDC, trigger-based CDC, or a combination. Log-based CDC is often preferred for its minimal impact on source systems.

- Plan for Data Transformation: Data often needs to be transformed before loading into the target system. Design the transformation logic to handle data type conversions, data cleansing, and aggregation, if necessary.

- Select Target Systems: Choose target systems that can handle the incoming data volume and velocity. Consider the scalability and performance characteristics of the target systems (e.g., data warehouses, data lakes, streaming platforms).

- Implement Data Validation: Implement robust data validation checks at various stages of the CDC pipeline to ensure data integrity. This includes validating data at the source, during transformation, and in the target system.

- Design for Scalability: Design the CDC solution to accommodate future growth in data volume and velocity. This might involve using scalable CDC tools, horizontally scaling the infrastructure, and optimizing the data pipeline.

- Consider Security: Implement appropriate security measures to protect sensitive data during the CDC process. This includes encrypting data in transit and at rest, securing access to data sources and target systems, and complying with relevant data privacy regulations.
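One simple form of the data validation recommended above is a reconciliation check that compares row counts and checksums between source and target. The sketch below uses two in-memory SQLite databases. It assumes that both sides expose the same columns in the same order and represent values identically. For large tables, you would compare per key range and allow for in-flight replication lag before raising an alarm.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Row count plus a SHA-256 digest over all rows in key order.

    `table` and `key_column` are interpolated into SQL, so they must be trusted identifiers.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    db.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "new"), (2, "paid")])

in_sync = table_fingerprint(source, "orders", "id") == table_fingerprint(target, "orders", "id")
source.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")  # not yet replicated
drifted = table_fingerprint(source, "orders", "id") != table_fingerprint(target, "orders", "id")
print(in_sync, drifted)  # True True
```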

Monitoring and Troubleshooting CDC Processes

Continuous monitoring and proactive troubleshooting are essential for maintaining the health and performance of a CDC implementation. Effective monitoring allows for early detection of issues, preventing data delays and ensuring data quality.

- Establish Monitoring Metrics: Define key performance indicators (KPIs) to monitor the CDC pipeline. These metrics should include:

  - Latency: The time it takes for data changes to be captured and delivered to the target system.

  - Throughput: The rate at which data changes are processed.

  - Error Rates: The frequency of errors occurring during the CDC process.

  - Data Volume: The amount of data processed.

  - Resource Utilization: CPU, memory, and disk I/O usage.

- Implement Alerting: Set up alerts to notify administrators of any deviations from the established KPIs. Alerts should be triggered based on thresholds for latency, error rates, and resource utilization.

- Centralized Logging: Implement centralized logging to capture detailed information about all CDC activities. Logs should include timestamps, event types, error messages, and performance metrics.

- Regular Health Checks: Schedule regular health checks to verify the integrity of the CDC pipeline. These checks should include validating data consistency between source and target systems.

- Troubleshooting Techniques: When issues arise, use the following troubleshooting techniques:

  - Review Logs: Analyze logs to identify the root cause of errors.

  - Check Resource Utilization: Monitor CPU, memory, and disk I/O usage to identify performance bottlenecks.

  - Verify Network Connectivity: Ensure that the CDC components can communicate with each other and with the data sources and target systems.

  - Test Data Transformations: Verify that data transformations are functioning correctly.

  - Replay Failed Events: Replay failed events to attempt to recover from errors.

- Establish a Change Management Process: Implement a formal change management process to manage updates to the CDC pipeline. This process should include testing changes in a non-production environment before deploying them to production.
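The metrics and alerting steps above can be sketched as a per-window evaluation. This example assumes that each delivered event records its source commit time and its delivery time. Debezium events, for example, carry a source timestamp that could serve as the commit time. The `Thresholds` values are hypothetical, and the percentile is a simple nearest-rank calculation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical alert thresholds; derive real values from your service-level objectives.
    p95_latency_s: float = 5.0
    error_rate: float = 0.01


def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(window, thresholds=Thresholds()):
    """Compute KPIs for one monitoring window and return (metrics, alerts).

    Each item in `window` is (source_commit_ts, delivered_ts, succeeded), timestamps in seconds.
    """
    if not window:
        # No traffic is not necessarily a fault; stall detection needs a separate heartbeat check.
        return {"throughput": 0}, []
    latencies = [delivered - committed for committed, delivered, _ in window]
    errors = sum(1 for _, _, succeeded in window if not succeeded)
    metrics = {
        "throughput": len(window),
        "p95_latency_s": percentile(latencies, 95),
        "error_rate": errors / len(window),
    }
    alerts = []
    if metrics["p95_latency_s"] > thresholds.p95_latency_s:
        alerts.append(f"p95 latency {metrics['p95_latency_s']:.1f}s > {thresholds.p95_latency_s}s")
    if metrics["error_rate"] > thresholds.error_rate:
        alerts.append(f"error rate {metrics['error_rate']:.1%} > {thresholds.error_rate:.1%}")
    return metrics, alerts


# 18 events delivered 0.5 s after commit, one slow delivery and one failed delivery.
window = [(t, t + 0.5, True) for t in range(18)] + [(18, 30, True), (19, 40, False)]
metrics, alerts = evaluate(window)
print(metrics)
print(alerts)
```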

Optimizing CDC Performance and Scalability

Optimizing the performance and scalability of a CDC solution is crucial for handling large data volumes and real-time data requirements. This involves tuning the CDC tools, optimizing the data pipeline, and scaling the infrastructure.

- Tune CDC Tool Configuration: Optimize the configuration of the CDC tool to improve performance. This includes adjusting parameters related to:

  - Batch Size: The number of changes processed in each batch.

  - Concurrency: The number of parallel processes used to capture and deliver changes.

  - Buffer Sizes: The size of buffers used to store data during processing.

- Optimize Data Transformations: Streamline data transformations to reduce processing time. This includes:

  - Using efficient transformation functions.

  - Minimizing data type conversions.

  - Avoiding unnecessary operations.

- Implement Parallel Processing: Leverage parallel processing to speed up data ingestion and transformation. This can be achieved by:

  - Partitioning data into multiple streams.

  - Using multiple worker threads or processes.

- Optimize Network Performance: Optimize network performance to reduce latency. This includes:

  - Using high-bandwidth network connections.

  - Reducing network congestion.

- Scale Infrastructure Horizontally: Scale the infrastructure horizontally to handle increasing data volumes. This involves:

  - Adding more servers or instances to the CDC cluster.

  - Using a distributed data storage system.

- Implement Data Compression: Compress data to reduce storage space and improve network performance.

- Use Incremental Loads: Load only changed data into the target system instead of full loads. This significantly reduces the amount of data that needs to be processed.

- Regular Performance Testing: Conduct regular performance testing to identify bottlenecks and opportunities for optimization.

- Consider Data Partitioning: Partition the data across multiple tables or databases to improve query performance and scalability. For example, in a retail scenario, you might partition sales data by region or product category. This allows for parallel processing and reduces the load on individual tables.
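Batch size tuning is a trade-off between throughput and latency. Larger batches amortize per-write overhead, while a time bound keeps a quiet stream from holding changes indefinitely. The hypothetical `MicroBatcher` below flushes when either limit is reached. The clock is injectable, so the behavior can be checked without sleeping.

```python
import time
from typing import Callable, List, Optional


class MicroBatcher:
    """Buffers change events; flushes when max_batch_size or max_wait_s is reached."""

    def __init__(self, flush_fn: Callable[[List[dict]], None], max_batch_size: int = 500,
                 max_wait_s: float = 1.0, clock: Callable[[], float] = time.monotonic):
        self.flush_fn = flush_fn            # e.g. a bulk write to the target system
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self.clock = clock
        self.buffer: List[dict] = []
        self.first_event_at: Optional[float] = None

    def add(self, event: dict) -> None:
        if not self.buffer:
            self.first_event_at = self.clock()  # start the wait timer with the first buffered event
        self.buffer.append(event)
        if len(self.buffer) >= self.max_batch_size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a partial batch that has waited long enough."""
        if self.buffer and self.clock() - self.first_event_at >= self.max_wait_s:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            batch, self.buffer = self.buffer, []
            self.flush_fn(batch)


now = [0.0]                                 # fake clock for the demo
batches: List[List[dict]] = []
b = MicroBatcher(batches.append, max_batch_size=3, max_wait_s=2.0, clock=lambda: now[0])
for i in range(4):
    b.add({"id": i})                        # the third event fills a batch and triggers a flush
b.tick()                                    # no time has passed: the fourth event stays buffered
now[0] = 2.5
b.tick()                                    # waited long enough: the partial batch is flushed
print([len(x) for x in batches])            # [3, 1]
```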

Future Trends in CDC

The landscape of Change Data Capture (CDC) is constantly evolving, driven by the need for faster, more efficient, and more scalable data integration solutions. As data volumes continue to explode and real-time analytics become increasingly critical, CDC technologies are adapting to meet the demands of modern data environments. This section explores emerging trends and advancements shaping the future of CDC and their potential impact on data integration practices.

Advancements in Streaming Data Integration

The integration of CDC with streaming data platforms is becoming increasingly prevalent. This approach allows for real-time data ingestion and processing, enabling organizations to react quickly to changes in their data.

- Integration with Apache Kafka: Kafka, a distributed streaming platform, is a popular choice for CDC implementations. CDC tools can publish changes as events to Kafka topics, allowing downstream consumers to subscribe and process the data in real-time. This architecture supports high-throughput and fault-tolerant data pipelines. For example, Debezium is a popular open-source project that provides CDC connectors for various databases, publishing change events directly to Kafka.

- Support for Cloud-Native Streaming Services: Cloud providers offer managed streaming services like Amazon Kinesis, Google Cloud Pub/Sub, and Azure Event Hubs. CDC tools are increasingly integrating with these services to provide scalable and cost-effective data integration solutions within cloud environments.

- Event-Driven Architectures: CDC facilitates the adoption of event-driven architectures. Changes in the source data trigger events, which are then processed by various applications and services. This approach promotes loose coupling, scalability, and responsiveness.

Rise of Serverless CDC

Serverless computing offers a compelling model for CDC, allowing organizations to deploy and manage CDC pipelines without provisioning or managing servers. This can lead to reduced operational overhead and improved scalability.

- Function-as-a-Service (FaaS) Integration: CDC tools can trigger serverless functions in response to data changes. These functions can then process the changes and update target systems. For example, when a record is updated in a database, a serverless function can be invoked to transform and load the data into a data warehouse.

- Automated Scaling and Resource Management: Serverless platforms automatically scale resources based on demand, ensuring that CDC pipelines can handle fluctuating data volumes. This eliminates the need for manual capacity planning and reduces the risk of bottlenecks.

- Cost Optimization: Serverless computing offers a pay-per-use model, which can be more cost-effective than traditional infrastructure-based solutions, especially for workloads with variable demand.
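The FaaS pattern above might look like the following sketch of an AWS Lambda function triggered by an Amazon Kinesis stream. Kinesis delivers each record's payload base64-encoded under `record["kinesis"]["data"]`. The `batchItemFailures` response reports individual failed records, but only when ReportBatchItemFailures is enabled on the event source mapping. Lambda may then re-deliver some records, which is another reason to keep target writes idempotent. The payload format, `transform`, and the `WAREHOUSE_ROWS` sink are hypothetical stand-ins.

```python
import base64
import json

WAREHOUSE_ROWS = []  # hypothetical stand-in for the real target (e.g. a staging table)


def transform(change):
    """Hypothetical transformation: flatten a Debezium-style change event into one row."""
    row = change["after"] or change["before"]
    return {"id": row["id"], "op": change["op"], "deleted": change["op"] == "d"}


def lambda_handler(event, context):
    """Entry point for a Lambda function triggered by a Kinesis stream of JSON change events."""
    failures = []
    for record in event["Records"]:
        try:
            # Kinesis delivers each record's payload base64-encoded.
            change = json.loads(base64.b64decode(record["kinesis"]["data"]))
            WAREHOUSE_ROWS.append(transform(change))
        except (ValueError, KeyError, TypeError):
            # Mark only this record as failed; honored when ReportBatchItemFailures is enabled.
            failures.append({"itemIdentifier": record["kinesis"]["sequenceNumber"]})
    return {"batchItemFailures": failures}


def kinesis_record(seq, data: bytes):
    """Build a minimal Kinesis-shaped record for local testing."""
    return {"kinesis": {"sequenceNumber": seq, "data": base64.b64encode(data).decode("ascii")}}


test_event = {"Records": [
    kinesis_record("1", json.dumps({"op": "c", "before": None, "after": {"id": 7}}).encode()),
    kinesis_record("2", b"{not json"),
    kinesis_record("3", json.dumps({"op": "d", "before": {"id": 7}, "after": None}).encode()),
]}
response = lambda_handler(test_event, None)
print(response)        # {'batchItemFailures': [{'itemIdentifier': '2'}]}
print(WAREHOUSE_ROWS)  # [{'id': 7, 'op': 'c', 'deleted': False}, {'id': 7, 'op': 'd', 'deleted': True}]
```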

AI and Machine Learning in CDC

Artificial intelligence (AI) and machine learning (ML) are beginning to play a role in CDC, offering new possibilities for data quality, anomaly detection, and automated data transformation.

- Data Quality Monitoring: ML models can be trained to identify data quality issues in CDC pipelines, such as missing values, incorrect data types, and inconsistencies. These models can automatically flag problematic data and trigger alerts.

- Anomaly Detection: CDC can be used to monitor data for unusual patterns or anomalies, which may indicate fraudulent activity, system failures, or other critical events. ML algorithms can be trained to detect these anomalies in real-time.

- Automated Data Transformation: ML can be used to automate data transformation tasks, such as data type conversion, data cleansing, and data enrichment. This can reduce the need for manual data preparation and improve the efficiency of data integration pipelines.
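Before training an ML model, teams often start with a statistical baseline for anomaly detection. The sketch below flags intervals whose change count deviates sharply from the recent rolling mean, using a z-score. It is a simple stand-in for the ML approaches described above, not a trained model. The window size, threshold, and per-minute counts are hypothetical.

```python
import statistics
from collections import deque


class ChangeRateMonitor:
    """Flags intervals whose change count deviates sharply from the recent baseline.

    A rolling z-score: a simple statistical baseline standing in for a trained ML model.
    """

    def __init__(self, window=30, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)   # change counts of recent intervals
        self.threshold = threshold            # how many standard deviations count as anomalous
        self.min_history = min_history        # intervals needed before judging anything

    def observe(self, count):
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev == 0:
                anomalous = count != mean
            else:
                anomalous = abs(count - mean) / stdev > self.threshold
        self.history.append(count)
        return anomalous


# Changes captured per minute for a hypothetical table; minute 8 has a sudden burst.
counts = [100, 104, 98, 101, 99, 102, 97, 100, 450, 101]
monitor = ChangeRateMonitor()
flagged = []
for minute, c in enumerate(counts):
    if monitor.observe(c):
        flagged.append(minute)
print(flagged)  # [8]
```

In this simple version, the anomalous value also enters the baseline. A production variant might exclude flagged intervals so that one burst does not mask the next.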

Enhanced Data Governance and Security

Data governance and security are paramount in modern data environments. CDC technologies are evolving to provide improved capabilities in these areas.

- Data Lineage Tracking: CDC tools are incorporating features for tracking data lineage, which provides visibility into the origin, transformation, and movement of data throughout the pipeline. This helps with data governance, compliance, and troubleshooting.

- Encryption and Data Masking: CDC solutions are increasingly supporting encryption and data masking to protect sensitive data during transit and at rest. This is crucial for meeting regulatory requirements and protecting privacy.

- Role-Based Access Control (RBAC): CDC tools are implementing RBAC to control access to data and CDC pipelines. This ensures that only authorized users can view, modify, or manage data.
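Masking can also be applied to change events in the pipeline itself, before they leave a trusted zone. The sketch below masks both row images of a Debezium-style event using a hypothetical per-column policy. It uses a keyed HMAC-SHA256 hash, which keeps masked values consistent (and therefore joinable) without exposing them, along with full redaction and last-four-digits display. The key shown is a placeholder and would normally come from a secrets manager.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical masking policy for a customers table: column -> rule.
MASK_POLICY = {"email": "hash", "phone": "redact", "card_number": "last4"}


def mask_value(value: str, rule: str, key: bytes) -> str:
    if rule == "hash":
        # Keyed hash: identical inputs give identical outputs, but values cannot be read back.
        return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    if rule == "redact":
        return "***"
    if rule == "last4":
        return "*" * max(len(value) - 4, 0) + value[-4:]
    raise ValueError(f"unknown masking rule {rule!r}")


def mask_row(row: Optional[dict], key: bytes) -> Optional[dict]:
    if row is None:
        return None
    return {
        col: mask_value(val, MASK_POLICY[col], key) if col in MASK_POLICY and val is not None else val
        for col, val in row.items()
    }


def mask_event(event: dict, key: bytes) -> dict:
    """Return a copy of a change event with both row images masked."""
    return {**event, "before": mask_row(event.get("before"), key), "after": mask_row(event.get("after"), key)}


event = {"op": "u", "before": None, "after": {
    "id": 1, "email": "ada@example.com", "phone": "555-0100", "card_number": "4111111111111111"}}
key = b"demo-key"  # placeholder; load the real key from a secrets manager
masked = mask_event(event, key)
print(masked["after"]["phone"], masked["after"]["card_number"])  # *** ************1111
```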

CDC is evolving to meet the demands of modern data environments.

- Faster Data Ingestion: Real-time data processing with streaming technologies.

- Scalability: Serverless CDC solutions to handle growing data volumes.

- Intelligence: AI and ML for data quality and anomaly detection.

- Security and Compliance: Enhanced data governance and security features.

These trends are poised to have a significant impact on data integration practices. Organizations that embrace these advancements will be better positioned to harness the full value of their data, make data-driven decisions in real-time, and improve their overall business agility. The future of CDC is bright, with continued innovation driving improvements in performance, scalability, and functionality.

Conclusion

In conclusion, understanding and implementing Change Data Capture (CDC) for data integration is essential for modern data-driven organizations. By focusing on capturing and replicating only data changes, CDC offers significant advantages in terms of efficiency, real-time data availability, and cost savings. As technology continues to evolve, CDC will play an increasingly vital role in enabling seamless data integration and driving informed decision-making.

Embracing CDC best practices and staying informed about emerging trends will empower businesses to maximize the value of their data assets in an ever-changing landscape.

Frequently Asked Questions

What is the primary goal of Change Data Capture (CDC)?

The primary goal of CDC is to capture and track changes made to data in a source system and efficiently replicate those changes to target systems, minimizing latency and resource usage.

How does CDC differ from traditional data integration methods?

Unlike traditional methods that often involve batch processing and full data loads, CDC focuses on capturing only the changes. This results in faster data replication, reduced resource consumption, and improved real-time data availability.

What are the main types of CDC methods?

The main types of CDC methods include log-based, trigger-based, and timestamp-based approaches. Each method has its own advantages and disadvantages regarding performance, complexity, and impact on the source system.

What are the common challenges in implementing CDC?

Common challenges include managing performance overhead, handling schema changes, and ensuring data consistency and integrity throughout the CDC process.

What are some popular CDC tools and technologies?

Popular CDC tools include Debezium, Apache Kafka Connect, AWS Database Migration Service (DMS), and Oracle GoldenGate. The choice of tool depends on the specific requirements of the data integration project.