Multi-stage Docker builds are an optimization technique for container image creation. By constructing an image in multiple stages, developers can significantly reduce the final image size and, with good layer caching, often the build time as well. This approach is particularly beneficial for applications with complex dependencies or multiple build steps.

This detailed exploration of multi-stage Docker builds will cover various aspects, including optimization strategies, dependency management, and advanced techniques. We’ll also delve into security considerations, performance implications, and real-world use cases.

Defining Multi-stage Builds

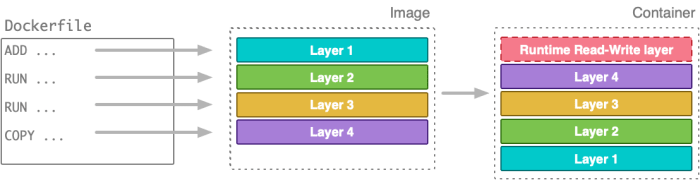

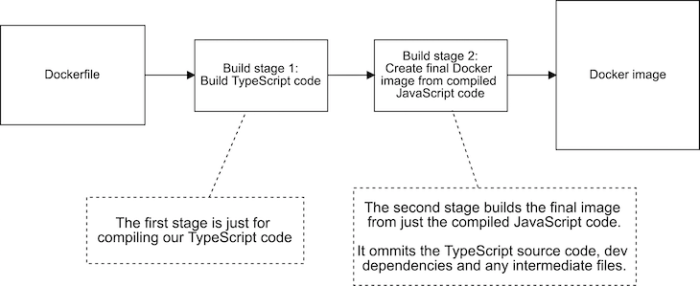

Multi-stage Docker builds offer a powerful approach to creating optimized Docker images. They produce smaller and more efficient images by separating build-time dependencies from runtime dependencies. This approach is particularly beneficial for applications that require significant build processes or complex dependencies.

Multi-stage builds are fundamentally different from traditional single-stage builds. Instead of accumulating every layer in a single image, a multi-stage Dockerfile contains several `FROM` instructions, each starting a new stage. Only the layers of the final stage end up in the resulting image; earlier stages exist to produce artifacts that later stages copy in.

This separation significantly reduces the final image size, and the lighter image downloads and deploys faster.

Key Differences between Single-Stage and Multi-Stage Builds

Single-stage builds create a single Docker image that encapsulates every step needed to build and run the application. This monolithic approach often results in larger images because build-time tools and dependencies are shipped along with the application. Multi-stage builds, on the other hand, employ multiple stages. Each stage starts from its own base image and has its own filesystem, and the final image contains only what the last stage installs or copies in, typically just the runtime components.
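As a minimal sketch of this difference, consider a hypothetical Go service whose main package lives in `./cmd/app` (the path, the binary name, and the presence of `go.mod`/`go.sum` are assumptions for illustration). The toolchain lives only in the first stage; the final stage starts from an empty `scratch` image and receives nothing but the compiled binary.

```dockerfile
# Build stage: full Go toolchain, never shipped in the final image.
FROM golang:1.22 AS build
WORKDIR /src
# Copy module files first so the dependency download is cached separately.
COPY go.mod go.sum ./
RUN go mod download
COPY . .
# CGO_ENABLED=0 produces a statically linked binary that can run on scratch.
RUN CGO_ENABLED=0 go build -o /out/app ./cmd/app

# Final stage: an empty base image plus the single binary.
FROM scratch
COPY --from=build /out/app /app
ENTRYPOINT ["/app"]
```

A single-stage equivalent would end at the `go build` line and ship the whole `golang` base image with it.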

Benefits of Multi-Stage Builds

Multi-stage builds offer significant advantages in terms of image size, build time, and overall efficiency. The key benefits include:

- Reduced Image Size: By separating build-time tools and dependencies, multi-stage builds eliminate unnecessary components from the final image. This leads to substantially smaller images, reducing download times and storage requirements.

- Faster Build Times: Stages are cached independently, and with BuildKit only the stages the final image depends on are built, with independent stages able to run in parallel. A smaller final image is also quicker to push and pull. This is particularly important for frequent builds or continuous integration/continuous delivery (CI/CD) pipelines.

- Improved Security: By limiting the tools and dependencies included in the final image, multi-stage builds reduce the attack surface, enhancing the security of the containerized application.

- Enhanced Portability: Smaller images are more portable and easier to transfer across different environments. This is crucial in distributed development and deployment scenarios.

Comparison of Single-Stage and Multi-Stage Builds

The following table summarizes the key differences between single-stage and multi-stage build strategies:

| Feature | Single-Stage Build | Multi-Stage Build |
|---|---|---|
| Image Size | Generally larger, because build tools and build-time dependencies ship in the image | Significantly smaller, containing only runtime components |
| Build Time | All steps run in one stage, and the toolchain layers are pushed and pulled with the image | Often comparable or faster, since stages are cached independently and only the slim final stage is distributed |
| Image Layers | The final image carries the layers of every build step | The final image carries only the layers of the last stage |

Optimizing Image Size

Multi-stage Docker builds are a powerful tool for creating smaller, more efficient images. By separating build-time dependencies from runtime dependencies, these builds significantly reduce the final image size, resulting in faster downloads and deployment. This optimization is crucial for deploying applications in environments with limited bandwidth or storage.

Minimizing the size of Docker images is critical for various reasons. Smaller images translate to faster download times, reduced storage consumption on container registries and servers, and ultimately lower costs. Multi-stage builds are a primary mechanism to achieve these size reductions. This involves strategically leveraging different stages to construct an image with only the necessary components at runtime.

Intermediate Layer Handling

Docker caches the layers generated by every stage of a multi-stage build, so unchanged steps are not repeated on subsequent builds. This caching is a key aspect of performance optimization and can significantly reduce build times. The layers of earlier stages stay in the local build cache but are not part of the final image: build-time artifacts such as compilers or development tools are left behind unless they are explicitly copied into the last stage.

COPY and RUN Usage in Different Stages

The `COPY` and `RUN` commands are employed differently in each stage of a multi-stage build. In the first stage, often a build stage, `COPY` commands are used to copy the necessary source code and dependency manifests from the build context. The `RUN` commands in this stage handle compilation and any necessary build-time operations. Subsequent stages, often the runtime stage, use `COPY --from=<stage>` to copy only the compiled code, libraries, or other required runtime assets. `RUN` commands in these stages are kept to a minimum, focusing on minimal setup and startup.

Scenario: Reducing a Large Image Size

Consider a scenario where a Node.js application needs to be packaged into a Docker image. A large image might result from including the entire Node.js environment, including development dependencies and tools. In a multi-stage build, the first stage could build the application. This stage would install all necessary build-time dependencies, compile the code, and copy the resulting files.

The second stage, the runtime stage, starts from a slimmer Node.js base image and copies in only the built application files and its production dependencies. This significantly reduces the size of the final image. The development dependencies and build tools stay behind in the build stage and never reach the final image.
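The scenario above could be sketched as follows. This is one possible layout rather than a definitive recipe: it assumes the project has a `package-lock.json`, a `build` script that writes to `dist/`, and an entry point at `dist/index.js`, none of which are stated in the scenario itself.

```dockerfile
# Build stage: installs all dependencies, including devDependencies.
FROM node:16 AS build
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

# Runtime stage: slimmer base image, production dependencies only.
FROM node:16-alpine AS runtime
WORKDIR /app
ENV NODE_ENV=production
COPY package*.json ./
# --omit=dev skips devDependencies, so build tooling is not installed here.
RUN npm ci --omit=dev
# Only the compiled output crosses the stage boundary.
COPY --from=build /app/dist ./dist
# The official Node.js images ship an unprivileged "node" user.
USER node
CMD ["node", "dist/index.js"]
```

Installing production dependencies inside the runtime stage, rather than copying `node_modules` across, also avoids carrying native modules compiled against a different C library into the Alpine image.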

Reducing Dependencies to Decrease Image Size

One crucial technique to reduce image size involves carefully managing dependencies. This involves:

- Using a minimal base image: Selecting a base image that includes only the necessary operating system components for the application’s runtime environment.

- Leveraging a thin variant of an official image: Many official images publish reduced variants (for example `-slim` or `-alpine` tags) that contain only essential tools for the application's runtime, drastically reducing the size of the final image compared to a full operating system image.

- Utilizing a specific version of a dependency: This ensures the final image has only the necessary dependencies without extraneous versions.

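- Using a package manager for managing dependencies: Tools like npm, yarn, or pip install exactly what the manifest declares. Options such as `npm ci --omit=dev` or `pip install --no-cache-dir` keep development packages and download caches out of the image, reducing its size.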

By carefully considering these factors, developers can significantly reduce the size of Docker images. This strategy optimizes the image’s size, resulting in faster deployments and reduced storage costs.

Managing Dependencies

Effective dependency management is crucial for successful multi-stage Docker builds. Properly handling dependencies ensures that the final image contains only the necessary components, minimizing its size and improving build times. This section details strategies for managing dependencies, including efficient copying methods and solutions for common issues.

Managing dependencies in multi-stage Docker builds is essential for creating lean and efficient container images. This involves carefully selecting which packages and libraries are required at each stage of the build process and strategically transferring only the necessary components to the final stage.

Strategies for Dependency Management

Careful selection and management of dependencies are crucial in multi-stage Docker builds. Choosing the right tools and methods can significantly impact the final image size and build time. Consider the following approaches:

- Leveraging intermediate stages: Utilize intermediate build stages to install dependencies. This isolates dependency installations, preventing the inclusion of unnecessary packages in the final image. For example, if a Python application requires specific libraries, an intermediate stage can install these libraries without impacting the base image.

- Selective copying: Copy only the necessary files and directories from the intermediate stage to the final stage. Avoid unnecessary copying to reduce the image size. For instance, copying only the compiled Python application code, avoiding redundant copies of the development environment’s libraries.

- Using cache effectively: Leverage Docker's layer cache. Copy dependency manifests and install dependencies before copying the rest of the source, so that the installation layer is reused on later builds as long as the manifest has not changed.
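The three strategies above can be combined in a short sketch for a Python application. The package name `app` (run with `python -m app`) and the `requirements.txt` file are assumptions for illustration. Dependencies are installed into a virtual environment in an intermediate stage, and only that environment is copied forward.

```dockerfile
# Intermediate stage: install dependencies into an isolated virtual environment.
FROM python:3.12-slim AS deps
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"
# The manifest is copied on its own so this install layer stays cached
# until requirements.txt changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Final stage: same base image, so the copied environment stays compatible.
FROM python:3.12-slim
# Selective copy: only the virtual environment crosses the stage boundary.
COPY --from=deps /opt/venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"
WORKDIR /app
COPY app/ ./app/
CMD ["python", "-m", "app"]
```

Keeping the virtual environment at the same path in both stages matters, because the scripts inside it refer to that path.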

Efficient Copying Methods

Efficient copying of necessary files is paramount for optimized multi-stage builds. The methods described below facilitate a streamlined transfer of required components.

- `COPY` command: Without `--from`, the `COPY` instruction copies files and directories from the build context into the current stage. Specify the source and destination paths precisely so that only the necessary files are added.

- `COPY --from=<stage>` command: This variant of the `COPY` command copies files from another build stage, referenced by the name given with `FROM ... AS <name>` or by its numeric index, or from a separate image. This approach ensures that only the relevant files are copied, minimizing image size.

- `COPY --from` with relative destinations: Source paths are resolved from the root of the source stage's filesystem, while a relative destination path is resolved against the current `WORKDIR`. Setting `WORKDIR` once and using relative destinations keeps the Dockerfile readable and maintainable.
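The variants above can be seen side by side in a small sketch. The stage name `assets` and the file names are hypothetical; the third `COPY` pulls a file from a public image rather than from a stage in this Dockerfile.

```dockerfile
# First stage (index 0), named "assets", produces a file to copy later.
FROM alpine:3.19 AS assets
RUN mkdir -p /out && echo "hello" > /out/greeting.txt

FROM alpine:3.19
WORKDIR /srv
# By stage name; the relative destination resolves to /srv/greeting.txt.
COPY --from=assets /out/greeting.txt ./greeting.txt
# By stage index; works, but breaks silently if stages are reordered.
COPY --from=0 /out/greeting.txt ./greeting-by-index.txt
# From an external image instead of a stage in this file.
COPY --from=nginx:1.25-alpine /etc/nginx/nginx.conf ./nginx.conf
```

Referring to stages by name is generally the more maintainable choice, since inserting a new stage does not change what the name points to.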

Common Dependency Issues and Solutions

Understanding common dependency issues is essential for troubleshooting and optimizing multi-stage builds.

- Incorrect dependency versions: Ensuring the correct versions of dependencies for different stages is crucial. This can be addressed by using a package manager like `pip` or `npm` to manage dependencies, specifying versions in the `requirements.txt` or `package.json` files.

- Unnecessary dependencies: Identify and remove any unnecessary dependencies. This often involves careful analysis of the application’s requirements and removing any libraries or packages not directly used in the final application. Using a dependency analysis tool can help with this.

- Dependency conflicts: Resolving dependency conflicts between different stages can be a challenge. Using dependency managers to resolve conflicts and specifying dependencies with clear versions can help.

Dependency Management Table

This table provides a concise summary of dependency management strategies for various application types.

| Application Type | Dependency Management Strategy |
|---|---|
| Python Application | Use `requirements.txt` to specify dependencies. Install them in an intermediate stage, then copy the necessary files to the final stage. |
| Node.js Application | Use `package.json` to specify dependencies. Install them in an intermediate stage, then copy the necessary files to the final stage. |
| Java Application | Use a build tool like Maven or Gradle to manage dependencies. Package the application in the intermediate stage, and copy the necessary files to the final stage. |

Building and Testing Stages

Multi-stage Docker builds offer a powerful approach to streamlining application deployment. They allow for the creation of optimized images by separating the build process from the runtime environment. This separation significantly reduces the size of the final image, leading to faster downloads and deployments. A crucial aspect of this process involves meticulous building and testing of each stage to ensure a smooth and reliable deployment pipeline.

The key to successful multi-stage builds lies in understanding how each stage contributes to the overall process. Properly isolating and testing each stage, from dependency installation to final application compilation, helps surface errors early in the development cycle. This proactive approach keeps problems from appearing only at deployment time, leading to more robust and efficient deployments.

Example Multi-Stage Dockerfile

This example demonstrates a multi-stage Dockerfile for a simple Node.js application. The initial stage builds the application, while the final stage runs the application.

```dockerfile
# Stage 1: Build the application
FROM node:16 AS build
WORKDIR /app
# Copy the manifests first so the install layer is cached between builds
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Stage 2: Run the application
FROM node:16-alpine AS runtime
WORKDIR /app
# Copy only the build output and installed modules from the build stage
COPY --from=build /app/dist ./
# Note: this also carries over devDependencies installed in the build stage
COPY --from=build /app/node_modules ./node_modules
CMD ["node", "index.js"]
```

This Dockerfile leverages two stages: `build` and `runtime`. The `build` stage utilizes a `node:16` image to install dependencies and build the application's code. The `runtime` stage leverages a smaller `node:16-alpine` image to run the application.

Building Each Stage

The build process for each stage involves a series of commands, executed sequentially. This approach ensures that the necessary steps are completed before moving on to the next stage. For instance, in the `build` stage, the `npm install` command is executed to install the application’s dependencies, followed by the `npm run build` command to compile the application. The `runtime` stage copies the compiled application and necessary dependencies from the `build` stage to the `runtime` stage, then executes the application.

Importance of Testing Each Stage

Testing each stage independently is crucial for ensuring the build process’s reliability. This involves running specific tests for each stage to validate its functionality. For the `build` stage, tests could include checking that all dependencies are installed correctly and that the application compiles without errors. For the `runtime` stage, tests could involve verifying that the application starts correctly and that it handles expected inputs and outputs.

Workflow for Creating, Testing, and Combining Stages

A structured workflow facilitates the development and testing of multi-stage builds. This process involves:

- Creating the Dockerfile with separate stages for build and runtime.

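- Building each stage individually with the `--target` flag, for example `docker build --target build -t myapp:build .` and `docker build --target runtime -t myapp:runtime .`. Without `--target`, `docker build` always builds the last stage, regardless of the tag name.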

- Testing each stage independently using unit tests or integration tests to ensure that each stage functions correctly.

- Producing the final image using `docker build -t final .`, which builds the last stage in the Dockerfile along with any stages it depends on.

- Validating the final image by running it in a container and ensuring the application functions as expected.
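One way to fit testing into this workflow is a dedicated test stage. The sketch below assumes the project defines an `npm test` script and uses a hypothetical image name `myapp`; the commands to build each stage are shown as comments.

```dockerfile
FROM node:16 AS build
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Test stage: starts from the build stage's filesystem and runs the tests.
# A failing test fails the build of this stage. Build it explicitly with:
#   docker build --target test -t myapp:test .
FROM build AS test
RUN npm test

FROM node:16-alpine AS runtime
WORKDIR /app
COPY --from=build /app/dist ./
COPY --from=build /app/node_modules ./node_modules
CMD ["node", "index.js"]
# The last stage is the default target:
#   docker build -t myapp:final .
```

Because the `runtime` stage does not depend on `test`, BuildKit skips the test stage in the default build, so a CI pipeline would need to build the `test` target as a separate step.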

Debugging and Troubleshooting

Troubleshooting multi-stage builds often involves identifying which stage an error comes from. Examining the build output and analyzing error messages are essential steps in diagnosing issues; running the build with `--progress=plain` prints the full output of every step, and `--target` stops the build at a chosen stage so that it can be inspected in isolation. For example, if the `npm install` command fails, the output of the `build` stage will provide clues about the problem. This systematic approach to debugging narrows the search to a single stage.

Advanced Techniques

Multi-stage Docker builds offer significant advantages for optimizing image size and streamlining development workflows. Leveraging advanced techniques, developers can tailor the build process to specific needs, enhancing efficiency and maintainability. This section explores sophisticated strategies for handling diverse build environments, maximizing caching, and managing complex dependencies within multi-stage builds.

By employing advanced techniques, developers can significantly reduce the size of the final Docker image by carefully selecting which parts of the build process are included. This approach not only optimizes storage space but also reduces deployment times.

Utilizing Different Languages in Separate Stages

Multi-stage builds excel at handling projects involving multiple programming languages. By separating the build process for each language into distinct stages, developers can leverage the appropriate tools and environments for each component. This isolates the build requirements of each language, simplifying dependency management and avoiding conflicts.

- Consider a project that combines Python for application logic and C++ for a performance-critical component. In a multi-stage build, the Python code could be built in one stage, leveraging Python-specific build tools and packages. Subsequently, the C++ code could be built in a separate stage, utilizing the necessary C++ compilers and libraries. This approach isolates the build process for each language, ensuring compatibility and reducing potential conflicts.
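A sketch of the Python and C++ combination described above might look like this. The file `native/fast.cpp`, the library name `libfast.so`, and the Python package `app` (which would load the library, for instance through `ctypes`) are all hypothetical. Both stages use the same base image so the compiled library matches the runtime's system libraries.

```dockerfile
# C++ stage: the compiler is installed here and never reaches the final image.
FROM python:3.12-slim AS native
RUN apt-get update \
 && apt-get install -y --no-install-recommends g++ \
 && rm -rf /var/lib/apt/lists/*
WORKDIR /src
COPY native/fast.cpp .
# Build a shared library from the performance-critical component.
RUN g++ -O2 -shared -fPIC -o libfast.so fast.cpp

# Python stage: application code plus the prebuilt shared library.
FROM python:3.12-slim
WORKDIR /app
COPY --from=native /src/libfast.so /usr/local/lib/libfast.so
COPY app/ ./app/
CMD ["python", "-m", "app"]
```

Using a dedicated compiler image for the first stage is also possible, but then the library may be linked against a newer C++ runtime than the one available in the final image.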

Handling Diverse Build Environments

Multi-stage builds can be tailored to accommodate diverse build environments. This capability proves crucial when projects necessitate specific libraries or tools that are not readily available in the development environment. The build process can be orchestrated to effectively use a dedicated build environment, ensuring a consistent build regardless of the developer’s local setup.

- Imagine a project requiring a specific version of a compiler or a specialized build tool that isn’t readily available on the developer’s local machine. A multi-stage build can utilize a dedicated build environment that contains the necessary software, guaranteeing consistent builds irrespective of the local development environment.
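As an illustration of a dedicated build environment, the sketch below pins the compiler through a build argument, so every developer and CI runner compiles with the same toolchain. The source file `hello.c` and the argument name `GCC_VERSION` are assumptions for this example.

```dockerfile
# The toolchain version is declared once and can be overridden at build time:
#   docker build --build-arg GCC_VERSION=12 -t hello .
ARG GCC_VERSION=13
FROM gcc:${GCC_VERSION} AS build
WORKDIR /src
COPY hello.c .
# Static linking keeps the binary independent of the runtime image's libraries.
RUN gcc -O2 -static -o hello hello.c

# The compiler stays behind; the final image holds only the binary.
FROM scratch
COPY --from=build /src/hello /hello
ENTRYPOINT ["/hello"]
```

Nothing needs to be installed on the developer's machine beyond Docker itself, which is what makes the build independent of the local setup.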

The Crucial Role of Caching in Multi-Stage Builds

Caching mechanisms are essential in multi-stage builds to significantly speed up the build process. By caching intermediate results and dependencies, developers can avoid redundant computations, minimizing build times, particularly when dealing with larger projects.

- A substantial advantage of caching is its ability to prevent unnecessary recompilation of components. When building a large application, caching intermediate results can considerably reduce the overall build time. For example, if a stage builds a Python package and neither its instructions nor its input files have changed since the last build, Docker reuses the cached layers for that stage, and later stages copy the package from the cache instead of rebuilding it.
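A cache-friendly layout could be sketched as follows, assuming a `requirements.txt` file and a Python package named `app` (both hypothetical). It combines instruction ordering with a BuildKit cache mount, which requires BuildKit to be enabled.

```dockerfile
# syntax=docker/dockerfile:1
FROM python:3.12-slim AS deps
WORKDIR /app
# Only the manifest is copied here, so this stage is rebuilt only when
# requirements.txt changes, not on every source edit.
COPY requirements.txt .
# The cache mount keeps pip's download cache between builds without
# writing it into any image layer.
RUN --mount=type=cache,target=/root/.cache/pip \
    pip install --prefix=/install -r requirements.txt

FROM python:3.12-slim
# Installed packages are merged into the interpreter's prefix.
COPY --from=deps /install /usr/local
WORKDIR /app
# Source changes invalidate the cache only from this point on.
COPY . .
CMD ["python", "-m", "app"]
```

With this ordering, editing application code re-runs only the final `COPY`, while the dependency stage is served entirely from cache.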

Managing Different Build Contexts

Multi-stage builds provide mechanisms for effectively managing different build contexts. By defining distinct stages for various build operations, developers can isolate and control the necessary resources and dependencies for each step.

- A project requiring multiple dependencies in different stages can leverage this feature. For instance, a build stage might need a specific set of libraries or tools, while another stage may need a different set. Multi-stage builds enable the isolation of these dependencies, preventing conflicts and ensuring a smooth build process.

Security Considerations

Multi-stage Docker builds, while offering significant advantages in terms of image size and build efficiency, introduce security considerations that must be meticulously addressed. Carefully managing the intermediate layers and securing the final image are paramount to preventing vulnerabilities and ensuring the integrity of the deployed application. Ignoring these considerations can lead to security breaches and compromise the system.

Intermediate layers, although they are left out of the final image, can harbor vulnerabilities if not properly handled. These layers can contain sensitive data or unintended dependencies, and they persist in the build cache or in any intermediate image that is tagged and pushed. Furthermore, if a compromised file is copied from such a layer into the final image, it can grant attackers access to the running application.

Managing Intermediate Layers

Thorough management of intermediate layers is crucial to mitigate potential security risks. This involves carefully controlling the contents of each layer and ensuring that only necessary components are included in the final image. A systematic approach to layer management minimizes the attack surface by limiting the number of potential entry points.

- Careful Dependency Management: Ensure dependencies are minimized and only necessary libraries and packages are included. Redundant or outdated dependencies should be avoided to reduce the attack surface and prevent potential exploits. Utilize the latest, most secure versions of dependencies to benefit from patches and fixes.

- Explicit Removal of Sensitive Data: Keep sensitive data, such as API keys, passwords, and configuration files, out of every layer, including intermediate ones. Avoid hardcoding sensitive information in Dockerfiles. A secret copied into an earlier stage does not reach the final image, but it remains in that stage's cached layers, so prefer BuildKit secret mounts at build time and environment variables or secrets management tools at runtime. Copy only the required artifacts into the final stage so that intermediate build artifacts are not carried along.

- Secure Build Environment: The build environment should be secured to prevent unauthorized access to sensitive data and intermediate layers. Restrict access to the build environment and employ robust access control mechanisms to ensure only authorized personnel can interact with it.
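For build-time secrets, a BuildKit secret mount is one way to apply the practices above. The sketch assumes a private npm registry whose credentials live in the developer's `~/.npmrc`; the secret id `npmrc` and the image name `myapp` are arbitrary choices for this example.

```dockerfile
# syntax=docker/dockerfile:1
FROM node:16 AS build
WORKDIR /app
COPY package*.json ./
# The secret file is available only while this RUN instruction executes
# and is not written to any layer. It is supplied on the command line:
#   docker build --secret id=npmrc,src=$HOME/.npmrc -t myapp .
RUN --mount=type=secret,id=npmrc,target=/root/.npmrc npm ci
COPY . .
RUN npm run build

FROM node:16-alpine
WORKDIR /app
# Only the build output is copied; credentials never existed in a layer.
COPY --from=build /app/dist ./
CMD ["node", "index.js"]
```

By contrast, passing the same value through `ARG` or `COPY` would leave it recoverable from the build stage's history or cached layers.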

Securing the Final Image

Ensuring the security of the final image is essential to protect the deployed application. The final image should contain only the necessary components and should be free of vulnerabilities.

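- Image Scanning and Vulnerability Assessment: Regularly scan the final image for known vulnerabilities using image scanners such as Trivy, Grype, or Snyk. Docker Bench for Security is complementary: it audits the Docker host and daemon configuration rather than scanning image contents for known CVEs. This proactive approach helps identify and address potential security weaknesses before deployment.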

- Principle of Least Privilege: Grant the application container only the permissions it needs to execute its tasks, for example by running as a non-root user. This minimizes the potential damage if a vulnerability is exploited.

- Regular Updates and Patches: Maintain the final image by regularly updating and patching it. This ensures that known vulnerabilities are addressed and that the image remains protected from emerging threats. Keep the base images updated to benefit from security patches.
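A hardened final stage along these lines might be sketched as below. The Go service in `./cmd/app`, the user name `app`, and the specific base image tags are assumptions; the point is the pinned base image, the unprivileged user, and the single copied artifact.

```dockerfile
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app ./cmd/app

# Pinning a specific base tag makes updates deliberate and reviewable.
FROM alpine:3.19
# Create an unprivileged system user and group for the application.
RUN addgroup -S app && adduser -S -G app app
# Copy only the binary, owned by the unprivileged user.
COPY --from=build --chown=app:app /out/app /usr/local/bin/app
# Everything from here on, including the running container, is non-root.
USER app
ENTRYPOINT ["app"]
```

Rebuilding periodically with an updated base tag is what picks up the security patches mentioned above; a pinned tag alone does not update itself.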

Performance and Efficiency

Multi-stage Docker builds significantly enhance the performance and efficiency of container image creation. They achieve this by allowing developers to construct the image in stages, effectively separating build-time dependencies from runtime dependencies. This approach minimizes the final image size and reduces the time required to build, test, and deploy applications.

Impact on Build Time

Multi-stage builds can reduce build times compared to traditional single-stage builds, although the gain comes from how stages are built rather than from the smaller final image alone. Each stage is cached independently, BuildKit builds only the stages that the requested target depends on, and stages that do not depend on each other can run in parallel. For example, code that must be compiled with a specific language toolchain can be built in a dedicated stage based on that toolchain; only the compiled output is copied forward, so the compilers never reach the final image.

For builds with expensive dependency or compilation steps, this can noticeably shorten the time required to create the image.

Impact on Final Image Size

Multi-stage builds directly impact the final image size by leveraging intermediate stages to install and use only the necessary tools and packages. The final image includes only the dependencies required for the application’s runtime, resulting in a smaller image size. By eliminating unnecessary build tools and dependencies, the final image size is substantially reduced, improving efficiency in storage, transfer, and deployment.

This translates into faster download times, reduced storage costs, and improved overall performance in CI/CD pipelines.

Scenarios with Significant Performance Gains

Multi-stage builds demonstrate substantial performance gains in various scenarios. Consider a scenario where a Java application is built and deployed. A traditional build might include the entire Java Development Kit (JDK) in the final image, even though the application only requires the Java Runtime Environment (JRE). With a multi-stage build, the JDK stays in the build stage and the final stage starts from a JRE-only base image, significantly reducing the final image size.

Similarly, in projects with complex build dependencies, multi-stage builds allow isolation of the build environment, minimizing unnecessary tools and packages in the final image, reducing build times and image sizes.
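The Java scenario could look roughly like the following, assuming a Maven project whose build produces `target/app.jar` (the artifact name depends on the project's `pom.xml` and is an assumption here).

```dockerfile
# Build stage: Maven plus a full JDK.
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /src
# Resolve dependencies first so they are cached until pom.xml changes.
COPY pom.xml .
RUN mvn -B dependency:go-offline
COPY src ./src
RUN mvn -B package -DskipTests

# Runtime stage: JRE only, no compiler or build tool.
FROM eclipse-temurin:17-jre
WORKDIR /app
COPY --from=build /src/target/app.jar ./app.jar
ENTRYPOINT ["java", "-jar", "app.jar"]
```

Tests are skipped here only to keep the sketch short; a real pipeline would typically run them in the build stage or in a separate test stage.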

Advantages in CI/CD Pipelines

Multi-stage builds offer several advantages in CI/CD pipelines. Smaller image sizes translate to faster download times in CI/CD pipelines, reducing overall build times and enabling faster feedback loops. This improvement directly impacts the efficiency of the CI/CD pipeline, leading to quicker deployments and easier maintenance. Furthermore, the isolated nature of the build stages helps prevent conflicts and inconsistencies between different stages, promoting better reliability and consistency in the build process.

Use Cases

Multi-stage Docker builds offer significant advantages over traditional build processes, particularly in scenarios where minimizing the final image size is crucial. By separating build-time dependencies from runtime dependencies, multi-stage builds enable the creation of leaner, more efficient Docker images, ultimately leading to faster deployment and reduced resource consumption. This approach becomes especially valuable in applications requiring a complex build process or with a large number of external libraries.

Real-World Applications

Multi-stage builds excel in applications where the final image needs to be as lightweight as possible. A common example involves applications with substantial build dependencies, such as Python applications requiring numerous libraries for compilation or testing. By installing those build and test libraries in a first stage and copying only the built application and its runtime libraries into a second stage, the final image can shrink significantly.

This is particularly useful in containerized environments where resources are limited, or for deploying applications to environments with strict resource quotas.

Benefits for Developer Productivity

Multi-stage builds streamline the development process by reducing the size and complexity of the final image. This translates to faster build times, resulting in quicker feedback loops for developers. Developers can focus on the application’s logic rather than the intricacies of managing large, bloated Docker images. The separation of concerns inherent in multi-stage builds promotes modularity, making it easier to maintain and update the build process.

Comparison with Other Build Strategies

Traditional Docker builds often lead to large, unwieldy images that include all dependencies, regardless of whether they are needed at runtime. This approach contrasts sharply with multi-stage builds, which efficiently separate build-time and runtime dependencies, dramatically reducing the image size. The use of a multi-stage approach, compared to a single-stage build, minimizes the overall size of the container image.

The reduction in image size leads to significant improvements in container deployment speed, storage requirements, and operational efficiency. This difference becomes critical in situations involving large, complex applications, or when deploying to resource-constrained environments.

Comparison to Other Strategies

Multi-stage Docker builds offer significant advantages over traditional single-stage builds, but understanding the trade-offs is crucial for choosing the optimal approach. This section explores the differences, highlighting the strengths and weaknesses of each strategy, and presents a comparative analysis.

Traditional single-stage builds create a single Docker image containing all the necessary layers for the application's runtime environment. This approach simplifies the build process but often results in larger image sizes due to the inclusion of development tools and dependencies not needed at runtime. Multi-stage builds, on the other hand, enable a more modular approach by creating multiple intermediate images, allowing the final image to contain only the necessary runtime components.

Comparison of Build Strategies

Understanding the trade-offs between single-stage and multi-stage builds is essential. Single-stage builds are simpler to implement, while multi-stage builds yield significantly smaller image sizes and often faster build times. The choice depends on the specific needs and priorities of the project.

| Criterion | Single-Stage Build | Multi-Stage Build |
|---|---|---|
| Image Size | Generally larger due to inclusion of development tools and dependencies. | Significantly smaller; only runtime dependencies are included. |
| Build Time | Potentially faster for simple projects. | May be slightly slower due to multiple build stages, but overall time savings are often observed in the long run due to reduced image size. |
| Security | Potentially higher risk of vulnerabilities if development dependencies are not thoroughly removed. | Lower risk due to the explicit exclusion of unnecessary dependencies. |
| Complexity | Simpler to implement. | Slightly more complex to set up and manage. |
| Maintainability | Can become challenging for large, complex applications. | Improved maintainability due to clear separation of build and runtime components. |

Scenario Favoring Single-Stage Builds

Single-stage builds are often preferable when dealing with extremely simple applications. For instance, a small command-line utility with minimal dependencies and no compilation step may benefit from the simplicity of a single-stage build. In such cases, the overhead of managing multiple stages is not justified by the potential gains in size reduction. Moreover, if the project's build is already optimized for speed and size in a single-stage approach, this might be the more efficient solution.
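For comparison, a case where a single stage is arguably enough might look like the sketch below: a hypothetical one-file Python utility, `tool.py`, with no third-party dependencies and nothing to compile.

```dockerfile
# Nothing is built here, so there is no toolchain to leave behind.
FROM python:3.12-slim
WORKDIR /app
COPY tool.py .
ENTRYPOINT ["python", "tool.py"]
```

Splitting this into stages would add lines to the Dockerfile without removing anything from the final image.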

Concluding Thoughts

In conclusion, multi-stage Docker builds offer a robust and efficient solution for building optimized container images. By strategically managing dependencies and minimizing intermediate layers, developers can significantly reduce the final image size and build time, leading to improved performance and developer productivity. Understanding the nuances of multi-stage builds is crucial for anyone seeking to create efficient and secure containerized applications.

Frequently Asked Questions

What are the typical benefits of using multi-stage Docker builds?

Multi-stage builds primarily reduce image size by leaving build-time tools and intermediate artifacts behind in earlier stages. This results in smaller deployments and, combined with per-stage caching, often faster builds, both of which matter for efficient CI/CD pipelines.

How do multi-stage builds handle dependencies?

Multi-stage builds allow for the selective copying of necessary files between stages. This focused approach eliminates the need for including complete dependencies in the final image, significantly reducing its size.

What are some common security considerations when using multi-stage Docker builds?

Security is paramount. Careful management of intermediate layers is essential, as they can potentially introduce vulnerabilities. Thorough testing and validation of each stage are vital for preventing security risks.

When might a single-stage build be preferable to a multi-stage build?

Single-stage builds might be suitable for simple applications with straightforward dependencies. However, complex applications with numerous build steps or large dependencies often benefit from the optimizations provided by multi-stage builds.