Understanding container orchestration is crucial for modern application deployments. This exploration delves into Docker Swarm and Kubernetes, two prominent platforms that simplify managing containerized applications. We’ll examine their core functionalities, architectures, and key differences to help you determine which best suits your needs.

Docker Swarm and Kubernetes both aim to streamline the deployment, scaling, and management of containerized applications. However, their approaches and strengths vary, making careful consideration of your project requirements essential. This comparison will provide a detailed overview to help you navigate the intricacies of each platform.

Introduction to Docker Swarm

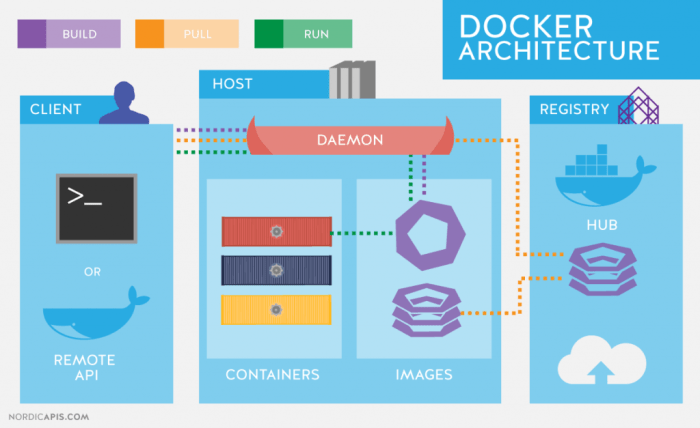

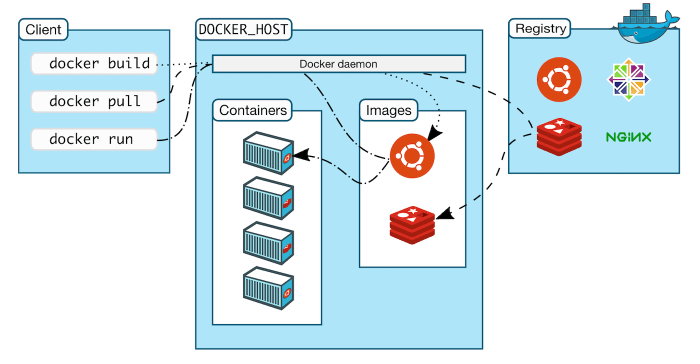

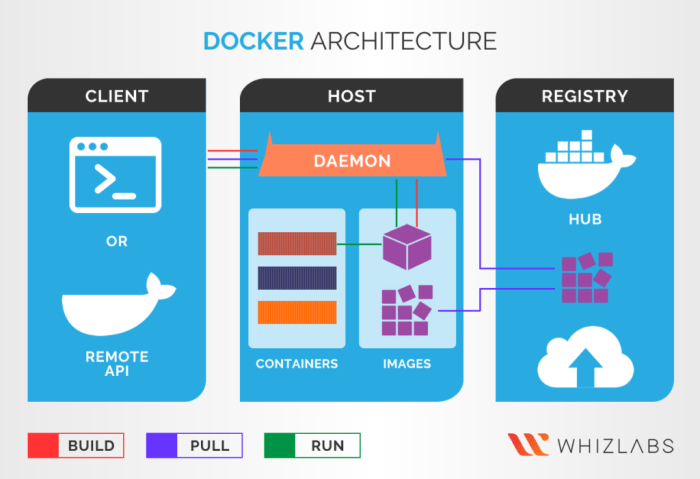

Docker Swarm is a container orchestration tool developed by Docker Inc. and built into the Docker Engine as Swarm mode. It simplifies the management of containerized applications by grouping multiple Docker hosts into a cluster. Applications can then be deployed, scaled, and monitored across multiple machines, and tasks like service discovery and load balancing are automated. Swarm offers a straightforward approach to container orchestration, making it easier for developers to deploy and manage containerized applications at scale.

Its ease of use and integration with Docker make it a practical choice for many use cases.

Core Functionalities of Docker Swarm

Docker Swarm’s core functionality is automating the deployment, scaling, and management of containerized applications across multiple Docker hosts. This includes service discovery, load balancing, and task scheduling. Swarm’s design prioritizes ease of use and seamless integration with the Docker ecosystem.

Architecture of a Docker Swarm Cluster

A Docker Swarm cluster consists of one or more manager nodes and any number of worker nodes. Manager nodes coordinate the cluster’s activities, including scheduling tasks, managing services, and monitoring the overall health of the cluster. Worker nodes execute the tasks assigned to them. Running several managers (typically three or five) keeps the cluster manageable if one manager fails. By default, managers can also run tasks themselves.
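Swarm managers keep the cluster state consistent with the Raft consensus algorithm. Raft can only commit changes while a majority (a quorum) of managers is reachable. The short Python sketch below is a standalone illustration, not Docker code. `manager_fault_tolerance` is a hypothetical helper that computes how many manager failures a given manager count can survive.

```python
def manager_fault_tolerance(managers: int) -> int:
    """Return how many managers can fail while a Raft quorum remains (illustrative helper)."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    # Raft needs a strict majority of managers to agree on every state change.
    quorum = managers // 2 + 1
    # Managers beyond the quorum are the ones the cluster can afford to lose.
    return managers - quorum


for n in (1, 3, 4, 5, 7):
    print(f"{n} manager(s): quorum {n // 2 + 1}, tolerates {manager_fault_tolerance(n)} failure(s)")
```

The output shows why odd manager counts are usually recommended. A fourth manager raises the quorum to three but tolerates no more failures than three managers do.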

Typical Use Cases for Docker Swarm Deployments

Docker Swarm suits a variety of use cases, including deploying microservices applications, scaling web applications, and creating highly available containerized systems. Organizations can use Swarm to run complex applications across multiple servers and keep them available when individual hosts fail. Its built-in scaling and self-healing make it a practical choice for many organizations.

Example of a Docker Swarm Deployment

This example outlines a basic deployment of a web application using Docker Swarm.

- Manager Node: A single machine acting as the central control point for the cluster. This node orchestrates tasks, manages services, and monitors the health of the cluster.

- Worker Nodes: Multiple machines that execute the tasks assigned by the manager node. These nodes host the containers for the web application.

- Docker Compose file: Defines the application’s services, their dependencies, and their interactions in a YAML file. In Swarm mode, the same file is deployed as a stack (with `docker stack deploy`), which simplifies the deployment process.

The deployment process typically involves:

- Defining the application’s services: The application’s containerized components and their dependencies are described in a Compose file (YAML), which streamlines the rest of the deployment.

- Creating a Swarm cluster: Running `docker swarm init` on the manager node creates the cluster and initializes its control plane. Each worker node then joins with `docker swarm join`, using the token printed by the manager.

- Deploying the application’s services: The services defined in the Compose file are deployed to the cluster as a stack, and Swarm schedules their containers (tasks) on the available nodes.
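The following Python snippet is a rough sketch of these steps. It builds a minimal Compose-format stack definition as a dictionary and lists the CLI commands for the three steps. The image (`nginx:alpine`), service name `web`, stack name `webapp`, port numbers, and the `web_stack` helper are all illustrative assumptions. To serve as `docker-compose.yml`, the dictionary would still need to be written out as YAML, for example with a library such as PyYAML.

```python
import json


def web_stack(image: str, replicas: int, published_port: int, target_port: int) -> dict:
    """Build a minimal Compose-format definition for one replicated web service (hypothetical helper)."""
    return {
        "services": {
            "web": {
                "image": image,
                # In Swarm mode, published ports go through the ingress routing mesh by default.
                "ports": [f"{published_port}:{target_port}"],
                "deploy": {
                    # Swarm keeps this many tasks (containers) running across the cluster.
                    "replicas": replicas,
                    # Restart a task's container if it exits with an error.
                    "restart_policy": {"condition": "on-failure"},
                },
            }
        }
    }


stack = web_stack("nginx:alpine", replicas=3, published_port=8080, target_port=80)

# Shell commands for the three steps; placeholders in angle brackets must be filled in.
commands = [
    "docker swarm init",                                           # on the manager node
    "docker swarm join --token <worker-token> <manager-ip>:2377",  # on each worker node
    "docker stack deploy -c docker-compose.yml webapp",            # on the manager node
]

print(json.dumps(stack, indent=2))
print("\n".join(commands))
```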

This example highlights the fundamental components of a Docker Swarm deployment. The key takeaway is the automated management of containers and services across multiple hosts, facilitating scalability and high availability.

Introduction to Kubernetes

Kubernetes is an open-source platform for automating deployment, scaling, and management of containerized applications. It provides a powerful and flexible way to orchestrate containerized workloads, enabling developers to focus on building applications rather than managing infrastructure. Kubernetes’s core functionality revolves around managing containers, ensuring they run reliably and efficiently, and adapting to changing demands. Kubernetes leverages a sophisticated architecture that allows for distributed control and scalability.

This allows applications to be deployed and managed across multiple machines in a cluster, making it highly suitable for complex and demanding applications. Its architecture is designed to handle failures and ensure the continued operation of the application, even when individual nodes or components fail.

Core Functionalities of Kubernetes

Kubernetes offers a comprehensive suite of functionalities for managing containerized applications. These functionalities include:

- Deployment Management: Kubernetes allows for the deployment and management of containerized applications across a cluster of machines. This includes specifying the desired state of the application, such as the number of replicas running and their resource requirements. It then automatically manages the deployment and ensures the desired state is maintained.

- Scaling and Resource Management: Kubernetes can scale applications with demand. When a HorizontalPodAutoscaler is configured, it adjusts the number of running pods based on observed metrics such as CPU utilization. The scheduler places pods according to their declared CPU and memory requests, and limits cap what each container may consume. Together, these mechanisms let the application adapt to changing traffic loads within the cluster’s resource constraints.

- Service Discovery and Load Balancing: Kubernetes provides mechanisms for services to discover each other and distribute traffic efficiently. This allows services to communicate seamlessly, regardless of their location within the cluster. It also implements load balancing, spreading traffic across the available, ready instances of a service.

- Automated Rollouts and Rollbacks: Kubernetes automates the process of updating applications. It manages the rollout of new versions, ensuring minimal disruption to the application’s operation. This includes features for reverting to previous versions if issues arise.

- Self-Healing Capabilities: Kubernetes automatically detects and recovers from failures. It monitors the health of containers and restarts those that fail. This continuous monitoring and corrective action ensure high availability.
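Deployment management and self-healing both rest on the same idea: a control loop that repeatedly compares the desired state with the actual state and acts on the difference. The toy Python controller below illustrates that loop under simplifying assumptions. Pods are plain objects, health is a boolean flag, and names like `ReplicaController` are hypothetical. In a real cluster the work is split between two components: the kubelet restarts failed containers, and controllers such as the ReplicaSet controller replace missing pods.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Pod:
    name: str
    healthy: bool = True


@dataclass
class ReplicaController:
    """Toy reconciliation loop that keeps `desired` healthy pods running."""
    desired: int
    pods: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1))

    def reconcile(self) -> list:
        actions = []
        # Self-healing: remove pods that failed their health check so they get replaced.
        for pod in [p for p in self.pods if not p.healthy]:
            self.pods.remove(pod)
            actions.append(f"delete {pod.name}")
        # Scale up until the actual count matches the desired count.
        while len(self.pods) < self.desired:
            pod = Pod(f"web-{next(self._ids)}")
            self.pods.append(pod)
            actions.append(f"create {pod.name}")
        # Scale down by removing the newest pods first.
        while len(self.pods) > self.desired:
            actions.append(f"delete {self.pods.pop().name}")
        return actions


ctrl = ReplicaController(desired=3)
print(ctrl.reconcile())       # ['create web-1', 'create web-2', 'create web-3']
ctrl.pods[1].healthy = False  # simulate a crashed container
print(ctrl.reconcile())       # ['delete web-2', 'create web-4']
ctrl.desired = 2              # a user scales the deployment down
print(ctrl.reconcile())       # ['delete web-4']
```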

Kubernetes Cluster Architecture

A Kubernetes cluster is a collection of machines (nodes) that work together to run containerized applications. The basic architecture involves:

- Nodes: These are the physical or virtual machines that run containers. Each node has a Kubernetes agent (kubelet) that manages the containers on that node. The kubelet communicates with the control plane to ensure that containers are running according to the desired state.

- Control Plane: This is the brain of the cluster, responsible for orchestrating the activities of the nodes. The control plane comprises several components, including the API server, scheduler, controller manager, and etcd (a distributed key-value store).

- API Server: This is the entry point for all interactions with the cluster. It exposes the Kubernetes API, which allows clients to interact with the cluster and manage resources.

- Scheduler: This component decides which node each newly created pod should run on, based on resource requests, available capacity, and constraints such as node affinity.

- Controller Manager: This component watches the desired state of resources (like pods) and works to make the actual state match it. It runs many controllers, each responsible for a specific task, such as managing Deployments and ReplicaSets, updating Service endpoints, and binding persistent volumes.

- etcd: This distributed database stores the cluster’s state. It provides a consistent and reliable view of the cluster’s resources, enabling all components to have a shared understanding of the current state.
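The scheduler’s decision can be pictured in two phases: first filter out nodes that cannot fit the pod, then score the rest. The Python sketch below is a heavily simplified model of that process. It considers only CPU and memory requests, and its score loosely mirrors a "least allocated" strategy that prefers the node with the most capacity left after placement. The real kube-scheduler runs many filter and score plugins; `Node` and `schedule` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_allocatable_m: int      # CPU available to pods, in millicores
    mem_allocatable_mib: int    # memory available to pods, in MiB
    cpu_requested_m: int = 0    # sum of CPU requests of pods already on the node
    mem_requested_mib: int = 0  # sum of memory requests of pods already on the node

    def fits(self, cpu_m: int, mem_mib: int) -> bool:
        return (self.cpu_requested_m + cpu_m <= self.cpu_allocatable_m
                and self.mem_requested_mib + mem_mib <= self.mem_allocatable_mib)

    def score(self, cpu_m: int, mem_mib: int) -> float:
        # Fraction of CPU and memory that would remain free after placing the pod.
        cpu_free = (self.cpu_allocatable_m - self.cpu_requested_m - cpu_m) / self.cpu_allocatable_m
        mem_free = (self.mem_allocatable_mib - self.mem_requested_mib - mem_mib) / self.mem_allocatable_mib
        return (cpu_free + mem_free) / 2


def schedule(cpu_m: int, mem_mib: int, nodes: list) -> Optional[str]:
    """Return the chosen node name, or None if the pod would stay Pending."""
    feasible = [n for n in nodes if n.fits(cpu_m, mem_mib)]           # filter phase
    if not feasible:
        return None
    return max(feasible, key=lambda n: n.score(cpu_m, mem_mib)).name  # score phase


nodes = [
    Node("node-a", 2000, 4096, cpu_requested_m=1500, mem_requested_mib=1024),
    Node("node-b", 4000, 8192, cpu_requested_m=1000, mem_requested_mib=6144),
    Node("node-c", 1000, 2048),
]
print(schedule(500, 1024, nodes))   # node-c
print(schedule(1500, 1024, nodes))  # node-b
print(schedule(5000, 1024, nodes))  # None
```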

Typical Use Cases for Kubernetes Deployments

Kubernetes is widely used in various scenarios due to its scalability, reliability, and automation capabilities. Typical use cases include:

- Microservice Architectures: Kubernetes excels at deploying and managing microservices, allowing for independent scaling and deployment of individual services. This promotes flexibility and resilience in complex applications.

- Web Applications: The dynamic scaling capabilities of Kubernetes enable web applications to handle fluctuating traffic loads effectively. This ensures consistent performance and availability.

- Data Processing Pipelines: Kubernetes can manage complex data pipelines, allowing for the deployment and orchestration of different stages of the pipeline across a cluster. This facilitates the efficient processing of large datasets.

- Continuous Integration/Continuous Deployment (CI/CD) Pipelines: Kubernetes integrates well with CI/CD pipelines, which can apply updated manifests to deploy and test new versions of an application automatically. This can accelerate the release cycle and improve the overall development process.

Example of a Kubernetes Deployment

A Kubernetes deployment describes the desired state of a set of pods. The following example showcases a simple deployment of a web application:

| Component | Description |
|---|---|
| Deployment | Defines the desired state of the application. It specifies the number of replicas and the container image to use. |
| Service | Provides a stable network endpoint for the application’s pods, even as individual pods are replaced. With type `LoadBalancer` or `NodePort`, it also exposes the application outside the cluster. |
| Pod | The smallest deployable unit: one or more containers scheduled together on a node. In this example, each pod runs a single container. |
| Container | The actual running application. In this case, a simple web server image. |

This example demonstrates the fundamental components of a Kubernetes deployment, illustrating how Kubernetes manages and scales applications in a containerized environment.
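To make the table concrete, the Python sketch below builds a matching Deployment and Service as plain dictionaries and prints them as JSON. `kubectl apply -f` accepts JSON as well as YAML manifests. The names (`web` and the `app` label), the `nginx:alpine` image, and the `web_manifests` helper are illustrative assumptions.

```python
import json


def web_manifests(name: str, image: str, replicas: int, container_port: int) -> list:
    """Build a Deployment and a Service for a simple web server (hypothetical helper)."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    # One container per pod, as in the table above.
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # LoadBalancer asks the cloud provider for an external endpoint.
            "type": "LoadBalancer",
            # Traffic goes to any pod carrying these labels, however often pods change.
            "selector": dict(labels),
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return [deployment, service]


manifest = {"apiVersion": "v1", "kind": "List", "items": web_manifests("web", "nginx:alpine", 3, 80)}
print(json.dumps(manifest, indent=2))  # save as web.json, then: kubectl apply -f web.json
```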

Comparing Core Concepts

Docker Swarm and Kubernetes are both container orchestration platforms, but they differ significantly in their architectural approaches. Understanding these differences is crucial for selecting the right tool for a given project. While both aim to automate deployment, scaling, and management of containerized applications, their underlying philosophies and functionalities vary. Kubernetes is often perceived as a more complex, feature-rich solution, whereas Swarm is more straightforward for simpler deployments.

The choice between them hinges on the specific needs and technical expertise of the development team, the complexity of the application, and the desired level of control over the orchestration process.

Deployment Models

The deployment models of Docker Swarm and Kubernetes are both declarative, but they differ in scope. Swarm uses a simpler model based on services and their tasks, which it deploys across a cluster of Docker hosts. Kubernetes uses manifests that describe the desired state of many resource types, such as Deployments, Services, and ConfigMaps.

This declarative model allows for greater control and abstraction over the underlying infrastructure.

Service Discovery and Load Balancing

Swarm’s service discovery and load balancing are built into its core. Each service gets a DNS name and a virtual IP, and traffic is automatically balanced across the service’s running containers. Kubernetes also offers robust built-in service discovery, but with more granular control and flexibility. Its Service abstraction comes in several types, and Ingress resources add HTTP routing rules (for example, by hostname or path) through a pluggable Ingress controller. This allows for more refined control over traffic distribution.

Scaling Strategies

Swarm scales a service by changing its replica count, and the resulting tasks are distributed across the available nodes in the cluster. Swarm has no built-in autoscaler, so scaling is triggered manually (for example, with `docker service scale`) or by external tooling. Kubernetes can scale the same way. It also offers horizontal pod autoscaling, which adjusts the replica count dynamically based on metrics such as CPU utilization relative to the pods’ resource requests. This allows Kubernetes to adapt to fluctuating workloads automatically.

Orchestration Capabilities

Docker Swarm’s orchestration capabilities are more limited than those of Kubernetes. Swarm handles deployments, scaling, and rolling updates well: `docker service update` supports options such as `--update-parallelism`, `--update-delay`, and automatic rollback on failure. However, it offers fewer controls and extension points. Kubernetes gives finer-grained control over rollouts, for example `maxSurge` and `maxUnavailable` combined with readiness probes. It also provides richer resource management and a large ecosystem of tools for strategies such as blue/green and canary deployments. These features help maintain high availability and minimize downtime during updates and upgrades.
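Kubernetes’ control over rolling updates can be made concrete with a little arithmetic. For a Deployment using the RollingUpdate strategy, two settings bound the pod count during an update. `maxSurge` is the number of pods allowed above the desired count, and `maxUnavailable` is the number allowed below it; both default to 25%. When given as percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The Python helper below (a hypothetical name, not a Kubernetes API) computes the resulting bounds during an update. It is a simplified sketch that ignores edge cases such as both values resolving to zero.

```python
def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (minimum available pods, maximum total pods) during a rolling update."""

    def resolve(value, round_up: bool) -> int:
        if isinstance(value, str) and value.endswith("%"):
            scaled = replicas * int(value[:-1])
            # Integer ceiling/floor division avoids floating-point rounding surprises.
            return -(-scaled // 100) if round_up else scaled // 100
        return int(value)

    surge = resolve(max_surge, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, round_up=False)  # maxUnavailable rounds down
    return replicas - unavailable, replicas + surge


print(rolling_update_bounds(10))  # (8, 13)
print(rolling_update_bounds(4))   # (3, 5)
print(rolling_update_bounds(5, max_surge=0, max_unavailable=1))  # (4, 5)
```

With ten replicas and the default settings, at least eight pods stay available and no more than thirteen run at once while old pods are replaced by new ones.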

Deployment Strategies

Deploying applications efficiently is crucial for any modern application architecture. Both Docker Swarm and Kubernetes provide mechanisms for deploying applications, but their approaches and complexities differ significantly. Understanding these deployment strategies is essential for selecting the right orchestration tool for your needs.

Docker Swarm Deployment

Docker Swarm, being a relatively simpler orchestration engine, utilizes a straightforward deployment process. It relies on a cluster of Docker hosts, where one or more nodes are designated as managers.

- Defining the Application: First, define the application’s Docker image and associated configuration files. This typically involves specifying the image’s name, port mappings, environment variables, and other relevant parameters within a Docker Compose file or similar configuration format.

- Creating a Swarm Cluster: Next, establish a Docker Swarm cluster. Run `docker swarm init` on one node to enable Swarm mode and make it a manager. Then run `docker swarm join` with the worker or manager join token on each additional node, forming a cluster of Docker hosts that work together.

- Creating a Service: A service is then created in the Swarm. The service defines how many replicas of the application should run and how they are managed across the cluster. The creation command specifies the Docker image, ports, and other configuration details, for example `docker service create --name web --replicas 3 -p 80:80 nginx`.

- Scaling and Managing: Scaling the application involves adjusting the service’s replica count, for example with `docker service scale web=5`. Swarm then starts or stops tasks so that the desired number of application instances runs across the cluster. Tasks can be inspected and troubleshot with the Docker CLI, for example `docker service ps web` and `docker service logs web`.

Kubernetes Deployment

Kubernetes, a more comprehensive platform, employs a declarative approach for deployment. This approach involves defining the desired state of the application, and Kubernetes automatically ensures that the cluster conforms to this state.

- Defining the Application: Kubernetes utilizes a declarative approach to application definition using YAML files. These files describe the desired state of the application, including the number of pods, containers, networking configurations, and storage requirements.

- Creating a Kubernetes Cluster: A Kubernetes cluster is established, composed of worker nodes and control plane components. These components manage the cluster and ensure that the application runs consistently.

- Creating Deployments: Deployments in Kubernetes are used to manage the desired number of application replicas. Kubernetes uses the defined YAML file (manifest) to create and manage these replicas.

- Scaling and Managing: Scaling is accomplished by changing the desired replica count. This can be done by editing the Deployment manifest and reapplying it, or with a command such as `kubectl scale deployment/web --replicas=5`. Kubernetes then adds or removes pods so the application stays in the desired state. This management is handled through the Kubernetes API and CLI tools such as `kubectl`.

Comparison

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Complexity | Simpler to set up and manage for basic deployments. | More complex, but offers greater flexibility and advanced features. |
| Deployment Model | Declarative service and stack definitions with fewer resource types. | Declarative manifests for many resource types, continuously reconciled by controllers. |
| Features | Fewer advanced features than Kubernetes. | Extensive features, including advanced scheduling, networking, and storage. |
| Scalability | Scales well for simpler applications. | Highly scalable, capable of handling complex applications and large deployments. |

Docker Swarm’s ease of use makes it ideal for smaller deployments and projects that need a quick setup. Kubernetes, on the other hand, excels in complex environments that require high availability and intricate deployments with advanced features.

Service Discovery and Load Balancing

Service discovery and load balancing are crucial components for orchestrating containerized applications, especially in large-scale deployments. These mechanisms ensure that services within a cluster are aware of each other and that incoming traffic is distributed effectively across healthy instances. This allows for scalability and resilience, crucial characteristics for modern application architectures. Effective service discovery and load balancing minimize single points of failure and maximize resource utilization.

Proper configuration of these features ensures smooth application performance and availability, regardless of the number of deployed instances.

Docker Swarm Service Discovery

Docker Swarm uses a simple, built-in service discovery mechanism. Swarm managers keep track of every service and its tasks, and Docker’s embedded DNS server lets containers on the same overlay network find a service by name. The name resolves to the service’s virtual IP, and connections to that IP are load balanced across the service’s tasks. This approach leverages the Docker network infrastructure and is tightly integrated with the Docker Swarm architecture.
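Within an overlay network, Docker’s embedded DNS server answers two kinds of queries. A service name resolves to the service’s virtual IP (VIP), and `tasks.<service-name>` resolves to the IP addresses of the individual tasks. The toy Python registry below models just those two lookups. The class name, the subnet, and the order in which addresses are assigned are illustrative assumptions, not how Docker actually allocates addresses.

```python
import ipaddress


class ToyServiceRegistry:
    """Toy model of the name records Swarm's embedded DNS serves on one overlay network."""

    def __init__(self, subnet: str):
        self._addresses = ipaddress.ip_network(subnet).hosts()  # iterator of free IPs
        self._vips = {}
        self._task_ips = {}

    def create_service(self, name: str, replicas: int) -> None:
        # One stable virtual IP per service, plus one IP per task (container).
        self._vips[name] = str(next(self._addresses))
        self._task_ips[name] = [str(next(self._addresses)) for _ in range(replicas)]

    def resolve(self, query: str) -> list:
        if query.startswith("tasks."):
            # "tasks.<service>" returns every task IP (DNS round-robin style).
            return list(self._task_ips[query[len("tasks."):]])
        # A plain service name returns only the VIP; connections to it are load balanced.
        return [self._vips[query]]


registry = ToyServiceRegistry("10.0.1.0/24")
registry.create_service("web", replicas=3)
print(registry.resolve("web"))        # ['10.0.1.1']
print(registry.resolve("tasks.web"))  # ['10.0.1.2', '10.0.1.3', '10.0.1.4']
```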

Kubernetes Service Discovery

Kubernetes employs a more comprehensive approach to service discovery. Its Services place a changing set of pods, selected by labels, behind a stable virtual IP and DNS name, and balance traffic across those pods. Depending on their type, Services can also expose the pods outside the cluster. Kubernetes service discovery is based on cluster DNS and the Kubernetes API, which allows for greater flexibility and integration with other Kubernetes features.

Service discovery is a fundamental aspect of Kubernetes, enabling its use in complex, distributed applications.

Comparing Load Balancing Approaches

Docker Swarm’s load balancing is built into the Docker network infrastructure and is simpler. Connections to a service’s virtual IP are distributed across its tasks, and the ingress routing mesh lets any node accept traffic on a published port and forward it to a running task. Kubernetes offers more granular control. Service types (ClusterIP, NodePort, LoadBalancer) cover internal and external load balancing. Ingress resources, implemented by an Ingress controller, add routing based on criteria such as hostnames and URL paths, along with features like TLS termination.

The flexibility of Kubernetes’ load balancing approaches makes it suitable for complex applications and environments.
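At its simplest, load balancing means handing each new connection to the next healthy backend. The Python class below is a generic round-robin balancer that skips unhealthy backends. It is a simplified illustration, not the exact algorithm either platform uses. Swarm balances connections to a service VIP with the kernel’s IPVS, and Kubernetes’ behavior depends on the kube-proxy mode or network plugin in use. The class and backend names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out healthy backends in turn, skipping any that are marked down."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._ring = cycle(self._backends)

    def mark_down(self, backend) -> None:
        self._healthy.discard(backend)

    def mark_up(self, backend) -> None:
        if backend in self._backends:
            self._healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call before giving up.
        for _ in range(len(self._backends)):
            backend = next(self._ring)
            if backend in self._healthy:
                return backend
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["task-1", "task-2", "task-3"])
print([lb.pick() for _ in range(4)])  # ['task-1', 'task-2', 'task-3', 'task-1']
lb.mark_down("task-2")                # e.g. after a failed health check
print([lb.pick() for _ in range(3)])  # ['task-3', 'task-1', 'task-3']
```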

Examples of Configuration

Docker Swarm

- Defining a service: A Docker Swarm service definition specifies the application’s container image, the number of replicas, and the network it will use. This defines the service’s availability.

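- Discovering services: At runtime, containers on the same overlay network find a service by its name through Swarm’s built-in DNS. From the CLI, `docker service ls` lists the services in the swarm, and `docker service ps <service>` shows the status of a service’s tasks.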

- Load Balancing: Swarm’s default load balancing distributes traffic across replicas of a service. This is a fundamental aspect of service deployment.

Kubernetes

- Defining a service: A Kubernetes Service definition specifies the selector (a label query) for the pods the service will expose, the type of service (ClusterIP, NodePort, LoadBalancer), and the port mapping. This defines how the service is reached, inside or outside the cluster.

- Discovering services: Kubernetes uses DNS for service discovery. Services are accessible by their name, making service interaction easier.

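- Load Balancing: Every Service gets internal load balancing across its pods, implemented on each node by kube-proxy or an equivalent networking component. Setting `type: LoadBalancer` additionally provisions an external load balancer from the cloud provider (such as an AWS Elastic Load Balancer). HTTP-level routing is handled by Ingress controllers, such as those based on NGINX or HAProxy. The `type` field in the service definition determines how the service is exposed.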
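Kubernetes DNS names follow a fixed pattern. A Service named `web` in namespace `shop` is reachable at `web.shop.svc.<cluster-domain>`, where the cluster domain is commonly `cluster.local` but configurable. Pods also get DNS search domains so that short names resolve. The Python helpers below sketch both rules, assuming the default domain. The function names are hypothetical, and real resolvers also apply `ndots` rules and the node’s own search domains.

```python
def service_fqdn(service: str, namespace: str = "default", cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str, cluster_domain: str = "cluster.local") -> list:
    """Names a pod's resolver tries, in order, for a short name (cluster search domains only)."""
    search_domains = [
        f"{pod_namespace}.svc.{cluster_domain}",  # Services in the pod's own namespace
        f"svc.{cluster_domain}",                  # "<service>.<namespace>" style names
        cluster_domain,
    ]
    return [f"{name}.{domain}" for domain in search_domains]


print(service_fqdn("web", "shop"))                  # web.shop.svc.cluster.local
print(search_candidates("web", "shop")[0])          # web.shop.svc.cluster.local
print(search_candidates("db.payments", "shop")[1])  # db.payments.svc.cluster.local
```

This is why a pod in the `shop` namespace can reach the Service simply as `web`, while a pod in another namespace would use `web.shop`.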

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Service Discovery | Built-in, simple, tightly integrated with Docker | More comprehensive, using DNS and API |
| Load Balancing | Basic, distributed across replicas | Flexible, supports various types (e.g., Ingress controllers) |
| Complexity | Simpler, suitable for smaller deployments | More complex, but adaptable to larger and more intricate deployments |

Scaling and Resource Management

Scaling applications efficiently is crucial for ensuring responsiveness and performance under varying workloads. Both Docker Swarm and Kubernetes offer mechanisms for scaling applications, but their approaches and underlying principles differ significantly. Understanding these differences is essential for selecting the right platform for specific needs. Resource management, including allocation and monitoring, is equally vital for optimal performance and cost-effectiveness.

Scaling Applications in Docker Swarm

Docker Swarm uses a simple, declarative approach to scaling. To scale an application, you change the desired number of replicas, either in the service or stack definition or with a command such as `docker service scale web=5`. Swarm automatically creates or removes tasks and distributes them across the nodes in the cluster. This process is straightforward and requires little configuration beyond defining the desired scale.
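When replicas are added, Swarm’s scheduler spreads the new tasks across eligible nodes. The Python function below is a simplified model of that behavior. It assumes the only criterion is how many tasks of this service each node already runs, whereas the real scheduler also honors placement constraints, resource reservations, and node availability. The function and node names are hypothetical. The CLI equivalent of the scale-up shown here would be something like `docker service scale web=6`.

```python
def spread_place(new_tasks: int, tasks_per_node: dict) -> dict:
    """Assign each new task to the node running the fewest tasks of the service."""
    placement = dict(tasks_per_node)  # don't mutate the caller's mapping
    for _ in range(new_tasks):
        # Break ties by node name so the result is deterministic.
        target = min(placement, key=lambda node: (placement[node], node))
        placement[target] += 1
    return placement


# Scaling a service from 2 to 6 replicas on a three-node swarm.
print(spread_place(4, {"node-1": 1, "node-2": 1, "node-3": 0}))
# {'node-1': 2, 'node-2': 2, 'node-3': 2}
```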

Scaling Applications in Kubernetes

Kubernetes uses a more complex but flexible scaling model built on desired state. A Deployment declares how many replicas should run, and a HorizontalPodAutoscaler can adjust that number automatically. It can act on metrics such as CPU usage and memory consumption, or on custom metrics when an additional metrics adapter is installed. This lets Kubernetes scale to meet demand or maintain performance under load.
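The Kubernetes documentation gives the HorizontalPodAutoscaler’s core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. By default, the autoscaler skips scaling when that ratio is within 10% of 1.0. The Python function below implements only that formula and the min/max clamp. It is a simplified sketch (a hypothetical helper, not Kubernetes code) that ignores stabilization windows, pod readiness, and missing metrics.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Core HorizontalPodAutoscaler formula with tolerance and min/max bounds."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current replica count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Average CPU utilization of 90% against a 60% target on 4 pods -> scale to 6.
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
```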

Comparing Resource Management Strategies

Docker Swarm’s resource management is simpler. Services can declare CPU and memory reservations and limits, and the scheduler places tasks only on nodes with enough unreserved capacity. Kubernetes offers more granular control and advanced policies, which suits complex applications with varying resource demands. In addition to per-container requests and limits, ResourceQuotas cap total consumption per namespace, and LimitRanges set default and maximum values. Swarm has no comparable namespace-level controls, so it is less suited to enforcing resource constraints in large, shared clusters.

Features for Handling Resource Limitations

- Docker Swarm: Swarm offers basic resource controls in the service definition. Reservations are used by the scheduler for placement, and limits cap what each task may consume (for example, `--reserve-memory` and `--limit-cpu` on `docker service create`). However, it has no cluster-wide quota mechanism comparable to Kubernetes’. This may make it less suitable for complex applications or shared clusters that need more granular resource management and monitoring.

- Kubernetes: Kubernetes provides extensive resource management features, including per-container requests and limits, namespace-level resource quotas, and limit ranges that set default and maximum values. Together these let administrators cap resource consumption across the cluster and within applications, preventing resource exhaustion. This is particularly useful for applications with unpredictable or fluctuating resource needs.
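Kubernetes expresses CPU in cores or millicores (`500m` is half a core) and memory with decimal (`k`, `M`, `G`) or binary (`Ki`, `Mi`, `Gi`) suffixes. A ResourceQuota caps namespace-wide totals under keys such as `requests.cpu` and `requests.memory`. The Python sketch below models the quota check for a new pod. It is a simplified illustration with hypothetical helper names, and its parser handles only the suffixes listed.

```python
from decimal import Decimal

# Subset of Kubernetes quantity suffixes (assumption: others, such as "Ti" or "E", are omitted).
_SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30),
    "m": Decimal("0.001"), "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9),
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity string such as '500m' or '128Mi' to a plain number."""
    for suffix, multiplier in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * multiplier
    return Decimal(quantity)


def fits_quota(hard: dict, used: dict, request: dict) -> bool:
    """True if adding `request` keeps every resource listed in the quota within its limit."""
    for resource, limit in hard.items():
        total = parse_quantity(used.get(resource, "0")) + parse_quantity(request.get(resource, "0"))
        if total > parse_quantity(limit):
            return False
    return True


quota = {"requests.cpu": "2", "requests.memory": "4Gi"}
in_use = {"requests.cpu": "1500m", "requests.memory": "3Gi"}
print(fits_quota(quota, in_use, {"requests.cpu": "500m", "requests.memory": "512Mi"}))  # True
print(fits_quota(quota, in_use, {"requests.cpu": "600m", "requests.memory": "512Mi"}))  # False
```

In a real cluster, a pod whose requests would push the namespace past its quota is rejected when it is created.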

Networking and Security

Docker Swarm and Kubernetes, while both orchestrating containerized applications, differ significantly in their networking and security models. Understanding these differences is crucial for selecting the appropriate platform for a given use case. This section details the networking mechanisms of each platform, highlights their respective security features, and provides a comparative table to summarize key distinctions.

Docker Swarm Networking

Docker Swarm uses a simple networking model built on Docker’s native networking capabilities. It relies on overlay networks for communication between containers across different hosts. These overlay networks provide a virtual network on top of the underlying physical infrastructure, so containers can communicate regardless of which host they run on. Each service is assigned a virtual IP address on every overlay network it is attached to, and each of its tasks gets its own IP address.

This approach simplifies service discovery and communication.

Kubernetes Networking

Kubernetes uses a more flexible networking architecture. It defines a network model in which every pod gets its own IP address and can reach other pods without NAT. The implementation is delegated to Container Network Interface (CNI) plugins such as Calico and Flannel. The choice of plugin affects the cluster’s configuration, performance, and features, for example whether network policies are enforced. On top of this model, Services provide stable virtual IPs and DNS names for service discovery and load balancing, enabling complex interactions between services and external resources.

Security Features in Docker Swarm

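Docker Swarm’s security features are built on the Docker engine. Nodes authenticate and encrypt their communication with mutual TLS, using certificates that Swarm issues and rotates automatically, and new nodes need a join token to enter the cluster. Swarm also supports encrypted secrets (`docker secret`), autolock to protect the keys that encrypt the managers’ Raft logs, and optional encryption of overlay network traffic. Network segmentation is achieved by attaching each service only to the overlay networks it needs. Open-source Swarm mode has no built-in role-based access control (RBAC); RBAC was offered through Docker’s enterprise products. Effective security in Docker Swarm relies heavily on proper configuration and management of the Docker engine.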

Security Features in Kubernetes

Kubernetes provides a comprehensive suite of security features, including Role-Based Access Control (RBAC), network policies, and Pod Security Admission. RBAC enables granular control over access to cluster resources. Network policies allow fine-grained control over network traffic between pods, provided the network plugin enforces them. Pod Security Admission enforces the Pod Security Standards (privileged, baseline, restricted) per namespace, constraining how containers may run. It replaced the older PodSecurityPolicy (PSP) mechanism, which was removed in Kubernetes 1.25. These features strengthen the security posture of the cluster.
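Network policy semantics are easy to get wrong, so a small model helps. A pod that no NetworkPolicy selects accepts traffic from anywhere. Once any policy selects it for ingress, only traffic allowed by at least one of those policies gets through. The Python sketch below models only that rule, under simplifying assumptions: ingress only, a single namespace, `matchLabels`-style selectors, and no ports. The class and function names are hypothetical, and real enforcement depends on the cluster’s network plugin.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    pod_selector: dict                              # pods this policy applies to ({} = all pods)
    allow_from: list = field(default_factory=list)  # selectors for permitted source pods


def matches(selector: dict, labels: dict) -> bool:
    # Every key/value in the selector must be present; an empty selector matches everything.
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(src_labels: dict, dst_labels: dict, policies: list) -> bool:
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # destination is not isolated: all ingress is allowed
    # Isolated: allowed only if some selecting policy lists a matching source.
    return any(matches(peer, src_labels) for p in selecting for peer in p.allow_from)


policies = [Policy(pod_selector={"app": "db"}, allow_from=[{"app": "api"}])]
print(ingress_allowed({"app": "api"}, {"app": "db"}, policies))   # True
print(ingress_allowed({"app": "web"}, {"app": "db"}, policies))   # False
print(ingress_allowed({"app": "web"}, {"app": "api"}, policies))  # True (api is not selected)
```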

Comparison of Networking and Security Options

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Networking Model | Overlay network based on Docker networking | Flexible, supports various CNI plugins (e.g., Calico, Flannel) |
| Service Discovery | Simple, based on service names and virtual IPs | Robust, with cluster DNS and Service abstractions |
| Load Balancing | Built-in load balancing for services | Highly configurable load balancing, integrating with various solutions |
| Security Features | Docker engine security, mutual TLS between nodes, encrypted secrets | Comprehensive features including RBAC, network policies, and Pod Security Admission |
| Network Policies | Limited control over network traffic | Highly granular control over network traffic |
| Scalability | Suitable for small to medium-sized deployments | Highly scalable, capable of handling large-scale deployments |

Ecosystem and Community Support

Docker Swarm and Kubernetes, while both container orchestration platforms, differ significantly in their ecosystem and community support. Understanding these nuances is crucial for selecting the right platform for a given project, as community support plays a vital role in troubleshooting, resource acquisition, and ongoing development. The strength of a platform’s ecosystem directly influences its long-term viability and the availability of assistance.

A robust ecosystem often translates into more readily available tools, plugins, and integrations, leading to a more developer-friendly experience.

Docker Swarm Community Support

Docker Swarm’s community support is largely tied to the Docker ecosystem. Docker’s extensive community, renowned for its open-source projects and active support channels, benefits Swarm. While Swarm’s community is not as vast as Kubernetes’, it’s generally supportive and responsive to issues. The Docker documentation and forums provide valuable resources for learning and troubleshooting.

Kubernetes Community Support

The Kubernetes community is arguably the largest and most active in the container orchestration space. This extensive community fosters a rich ecosystem of tools, plugins, and integrations. Its size and engagement contribute to faster issue resolution, greater innovation, and a wealth of resources for learning and development, including tutorials, documentation, and community forums.

Comparison of Available Tools and Resources

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Documentation | Comprehensive, though potentially less extensive than Kubernetes’ | Extremely comprehensive, with detailed tutorials, examples, and extensive API documentation. |
| Community Forums and Support Channels | Active but smaller than Kubernetes’ | Large and active, with numerous channels for support and discussion. |
| Third-party Tools and Integrations | Fewer readily available third-party tools compared to Kubernetes. | A vast ecosystem of third-party tools and integrations tailored for various use cases. |
| Training and Educational Resources | Available through Docker’s resources. | Numerous online courses, workshops, and training materials, reflecting the platform’s extensive use. |
| Ecosystem Maturity | Mature but with a smaller ecosystem compared to Kubernetes | Highly mature and extensive ecosystem, with many established tools and integrations. |

Summary of Ecosystem and Community Support

A strong community is crucial for long-term project success. Kubernetes’ substantial community provides access to a wider range of tools, support, and resources, making it a more robust platform for complex deployments and ongoing maintenance. Docker Swarm’s community support, while active, is less extensive, potentially making it less suitable for large-scale or complex projects where the abundance of community resources is vital.

Use Cases and Scenarios

Choosing between Docker Swarm and Kubernetes depends heavily on the specific needs of a project. While both orchestrate containerized applications, their strengths lie in different areas. Understanding these strengths and the typical use cases for each platform is crucial for making an informed decision. Docker Swarm is often a suitable choice for simpler deployments and applications with less complex scaling requirements.

Kubernetes, on the other hand, provides greater flexibility and control for larger, more complex, and demanding applications.

Docker Swarm Use Cases

Docker Swarm shines in scenarios where straightforward management and rapid deployment are paramount. Its ease of use and relatively low learning curve make it an attractive option for smaller teams or projects with limited resources.

- Development and Testing Environments: Swarm’s simplicity makes it ideal for rapidly spinning up and tearing down development and testing environments. Teams can quickly provision and manage multiple containers for different testing scenarios without extensive configuration. Example: A small development team working on a web application can use Swarm to provision multiple instances of the application for simultaneous testing, quickly scaling up or down as needed.

- Microservices with Basic Scaling: If the microservices are relatively straightforward and don’t require highly sophisticated scaling strategies, Swarm can handle the orchestration effectively. Example: A company with a few microservices that need basic scaling for increased traffic during peak hours can use Swarm for this, avoiding the complexity of Kubernetes.

- Proof of Concept and Prototyping: Swarm is well-suited for initial proof-of-concept deployments and rapid prototyping. Its quick setup and management features help teams quickly validate their ideas and test different architectures without significant upfront investment in learning Kubernetes. Example: A startup exploring new technologies for their online store can utilize Swarm for a prototype to test various containerized architectures.
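The spin-up, scale, and tear-down workflow described above maps onto a few Docker CLI commands. The sketch below only builds and prints those command lines (dry run by default); the service name `web`, the `nginx:alpine` image, and the port numbers are illustrative assumptions, and actually executing the commands requires a node already initialized with `docker swarm init`.

```python
import shlex
import subprocess
from typing import List


def create_service_cmd(name: str, image: str, replicas: int,
                       published_port: int, target_port: int) -> List[str]:
    """Build a `docker service create` command for a replicated service."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return [
        "docker", "service", "create",
        "--name", name,
        "--replicas", str(replicas),
        # Publish through the Swarm routing mesh: <published>:<target>
        "--publish", f"{published_port}:{target_port}",
        image,
    ]


def scale_service_cmd(name: str, replicas: int) -> List[str]:
    """Build a `docker service scale` command (SERVICE=REPLICAS syntax)."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return ["docker", "service", "scale", f"{name}={replicas}"]


def remove_service_cmd(name: str) -> List[str]:
    """Build a `docker service rm` command to tear the service down."""
    return ["docker", "service", "rm", name]


def run(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command; only execute it when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical test environment: three replicas, scaled to five, then removed.
    run(create_service_cmd("web", "nginx:alpine", 3, 8080, 80))
    run(scale_service_cmd("web", 5))
    run(remove_service_cmd("web"))
```

Keeping the commands as argument lists (rather than shell strings) avoids quoting problems when they are eventually passed to `subprocess.run`.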

Kubernetes Use Cases

Kubernetes excels in scenarios demanding sophisticated resource management, complex deployments, and high availability. Its powerful features cater to larger and more demanding applications, allowing for robust management of complex systems.

- Large-Scale Production Deployments: Kubernetes provides the advanced capabilities required for managing large-scale applications in production environments. Its sophisticated scaling, self-healing, and robust networking capabilities are essential for mission-critical applications. Example: A major e-commerce platform with millions of users and transactions can use Kubernetes to handle the high load and maintain consistent availability.

- Complex Microservice Architectures: Kubernetes’s ability to handle complex interdependencies between microservices makes it a strong choice for large-scale microservice deployments. Its service discovery and load balancing mechanisms ensure smooth communication and distribute traffic across services. Example: A large financial institution with hundreds of interconnected microservices can rely on Kubernetes to manage the intricate interactions and high availability of these services.

- Highly Available and Fault-Tolerant Applications: Kubernetes’s self-healing capabilities and robust deployment mechanisms make it a strong fit for applications requiring high availability and fault tolerance. Kubernetes automatically restarts failed containers and replaces pods lost on failed nodes with new ones on healthy nodes, minimizing downtime. Example: A cloud-based banking platform can use Kubernetes to recover from unexpected failures and keep critical services accessible to users.

- Multi-Cloud Deployments: Kubernetes’s portability across different cloud providers makes it suitable for applications deployed across multiple cloud environments. This portability reduces vendor lock-in and provides flexibility for managing deployments in various cloud platforms. Example: A company using multiple cloud providers for their applications can use Kubernetes to manage their deployments in a consistent manner across all environments.
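Self-healing in Kubernetes comes from controllers that repeatedly compare the desired state (for example, a Deployment's replica count) with the observed state and act on the difference. The toy model below illustrates that reconciliation idea in plain Python; the class name, the pod naming scheme, and the scale-down order are hypothetical simplifications, not the actual ReplicaSet controller logic.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ToyReplicaController:
    """Toy controller that keeps `desired` healthy replicas running.

    A simplified illustration of the reconcile-loop idea, not the real
    Kubernetes ReplicaSet controller.
    """
    desired: int
    pods: Dict[str, bool] = field(default_factory=dict)  # pod name -> healthy?
    next_id: int = 0

    def _new_pod(self) -> str:
        name = f"pod-{self.next_id}"
        self.next_id += 1
        self.pods[name] = True
        return name

    def reconcile(self) -> Tuple[List[str], List[str]]:
        """Run one pass and return (created, removed) pod names."""
        # 1. Remove pods observed as unhealthy (crashed, or lost with a failed node).
        removed = [name for name, healthy in self.pods.items() if not healthy]
        for name in removed:
            del self.pods[name]
        # 2. Too few replicas: create replacements until desired is met.
        created = []
        while len(self.pods) < self.desired:
            created.append(self._new_pod())
        # 3. Too many replicas: remove the most recently created pods
        #    (a simplification; the real controller ranks pods differently).
        while len(self.pods) > self.desired:
            newest = list(self.pods)[-1]
            del self.pods[newest]
            removed.append(newest)
        return created, removed


if __name__ == "__main__":
    ctrl = ToyReplicaController(desired=3)
    print(ctrl.reconcile())      # initial rollout creates three pods
    ctrl.pods["pod-1"] = False   # simulate a crashed container
    print(ctrl.reconcile())      # the failed pod is replaced
```

The key design point is that the loop is level-based: it reacts to the current gap between desired and observed state, so it converges whether the gap came from a crash, a node failure, or a manual change to the replica count.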

Comparison Table

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Complexity | Simpler | More complex |
| Scalability | Good for basic scaling | Excellent for complex scaling |
| Features | Limited advanced features | Rich set of advanced features |
| Use Cases | Development, small-scale production, proof of concept | Large-scale production, complex microservices, multi-cloud |

Learning Curve and Complexity

Choosing between Docker Swarm and Kubernetes often hinges on the team’s existing expertise and the project’s scale. Both platforms offer powerful container orchestration capabilities, but their learning curves and complexities differ significantly. Understanding these nuances is crucial for a successful deployment.

Docker Swarm Learning Curve

Docker Swarm, being relatively simpler in design, often boasts a gentler learning curve compared to Kubernetes. Its core concepts are more straightforward, making it easier for teams with less experience in container orchestration to grasp quickly. The intuitive nature of its commands and configuration options allows for faster onboarding and deployment. This simplicity, however, comes with limitations in terms of advanced features and scalability compared to Kubernetes.

Kubernetes Learning Curve

Kubernetes, while more complex, offers a significantly broader range of features and control over containerized applications. Its learning curve is steeper, requiring a deeper understanding of concepts like pods, deployments, services, and namespaces. Mastering these components is essential for achieving advanced deployments and managing complex applications. This depth also means more extensive configuration and potentially more intricate troubleshooting.
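To make the pod, deployment, service, and namespace vocabulary concrete, the sketch below builds a minimal Deployment (which manages a set of identical pods) and a Service (which gives those pods a stable address) as Python dictionaries and prints them as JSON, a format `kubectl apply -f` accepts. The app name, image tag, ports, and `demo` namespace are illustrative assumptions; the namespace must already exist, and a production manifest would normally add health probes and resource settings.

```python
import json
from typing import Any, Dict


def build_manifests(name: str, image: str, replicas: int,
                    container_port: int, namespace: str = "default") -> Dict[str, Any]:
    """Return a v1 List holding a Deployment and a Service for one app."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Traffic sent to port 80 of the Service goes to pods with matching labels.
            "selector": labels,
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(build_manifests("web", "nginx:1.25", 3, 80, "demo"), indent=2))
```

The label selector is what ties the pieces together: the Deployment uses it to find the pods it owns, and the Service uses the same labels to decide where to route traffic.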

Comparison of Complexity Levels

Docker Swarm’s straightforward architecture makes it easier to get started. Its smaller feature set, however, restricts its capabilities for highly complex or large-scale deployments. Kubernetes, on the other hand, provides extensive control and flexibility, but its comprehensive architecture demands a more substantial investment in learning and understanding its many components.

Summary Table

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Learning Curve | Generally considered easier to learn initially. | Steeper learning curve, requiring more time and effort to master. |
| Complexity | Lower complexity for simpler deployments. | Higher complexity but greater flexibility and scalability for complex deployments. |
| Scalability | Limited scalability compared to Kubernetes. | High scalability, supporting complex and large-scale deployments. |
| Features | Fewer advanced features. | Extensive range of advanced features. |
| Community Support | Active but smaller community, with fewer third-party tools and resources. | Very large, active community offering extensive documentation, tooling, and assistance. |

Future Trends and Considerations

The container orchestration landscape is constantly evolving. Docker Swarm and Kubernetes, two widely used orchestrators, are adapting to new demands and technologies. Understanding future trends allows organizations to plan their deployments strategically and get the most out of these platforms. Both platforms are increasingly incorporating features for enhanced security, scalability, and integration with emerging technologies.

Potential Future Trends in Docker Swarm

Docker Swarm, while less feature-rich than Kubernetes, continues to evolve. Future development is likely to focus on improving its ease of use and integration with existing Docker workflows. Increased automation and streamlined management tools could further improve the user experience and reduce operational complexity. Closer integration with cloud-native technologies and better support for microservices architectures are also plausible developments.

Potential Future Trends in Kubernetes

Kubernetes, with its extensive feature set, will likely continue to mature and become even more versatile. Expect further security enhancements, such as improved access control and auditing mechanisms. Deeper integration with cloud providers and support for more specialized use cases, such as serverless computing, are also likely to be significant developments. Additionally, a greater emphasis on automated governance and compliance should help keep it reliable and suitable for production environments.

Adaptation to Emerging Technologies

Both platforms are adapting to emerging technologies such as serverless functions, edge computing, and artificial intelligence (AI). Both are likely to integrate more closely with these technologies, helping organizations deploy and manage applications across diverse environments. This should let organizations adopt these technologies while retaining the reliability and scalability that container orchestration provides.

Future Considerations for Both Platforms

The future success of both platforms hinges on several key considerations. A critical aspect is the continued development of robust security features. This includes ensuring secure deployments, preventing unauthorized access, and providing mechanisms for comprehensive auditing and monitoring. Furthermore, a focus on simplifying the learning curve and providing better documentation and support resources will be crucial for widespread adoption.

Finally, fostering collaboration within the broader ecosystem of tools and services will further empower developers and system administrators to leverage these platforms effectively.

Concluding Remarks

Docker Swarm and Kubernetes offer distinct approaches to container orchestration. Docker Swarm is generally easier to set up and manage for smaller deployments, while Kubernetes’s advanced features and extensive ecosystem are better suited for complex and large-scale applications. Choosing the right platform depends on factors such as project complexity, team expertise, and long-term scalability goals.

Helpful Answers

What are the typical use cases for Docker Swarm?

Docker Swarm is well-suited for smaller deployments, microservices with simpler architectures, and projects where rapid prototyping and quick setup are prioritized. Its simplicity makes it a good option for smaller teams and projects with limited resources.

What are the key differences in scaling strategies between Docker Swarm and Kubernetes?

Kubernetes offers more sophisticated scaling options, including the Horizontal Pod Autoscaler, which dynamically adjusts the number of pods based on observed metrics such as CPU utilization. Docker Swarm has no built-in autoscaler; replicas are typically adjusted manually, for example with `docker service scale`, or by external tooling.
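The Kubernetes documentation describes the core Horizontal Pod Autoscaler calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The function below is a sketch of that formula only, clamped to assumed min/max replica bounds; the real controller also handles stabilization windows, missing metrics, and pod readiness, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling formula with tolerance and min/max clamping."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds (minReplicas / maxReplicas).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale to 6.
    print(desired_replicas(4, 90, 60))
```

Rounding up with `ceil` biases the autoscaler toward slightly more capacity, and the tolerance band prevents the replica count from flapping on small metric fluctuations.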

How does Kubernetes handle resource limitations compared to Docker Swarm?

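Kubernetes lets you set resource requests and limits per container and cap a namespace's total consumption with ResourceQuota objects, enabling precise control over resource use. Docker Swarm supports CPU and memory reservations and limits per service but has no namespace-level quotas, making it less suitable for highly resource-intensive applications that need detailed control.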
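Kubernetes expresses CPU in cores (with an `m` suffix meaning millicores) and memory in bytes with suffixes such as `Mi` and `Gi`, and a namespace's ResourceQuota can cap the sum of requests using keys like `requests.cpu` and `requests.memory`. The sketch below mimics that quota admission check in plain Python. The helper names and the flat dictionary representation of pods are hypothetical, and the parser handles only a few common suffixes (not exponent notation such as `1e3`).

```python
from fractions import Fraction
from typing import Dict, List

# Suffixes handled by this sketch (a subset of Kubernetes quantity notation).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(q: str) -> Fraction:
    """Parse quantities like '250m' (CPU) or '512Mi' (memory) exactly."""
    if q[-2:] in _BINARY:
        return Fraction(q[:-2]) * _BINARY[q[-2:]]
    if q.endswith("m"):  # milli-units, e.g. 250m CPU = 0.25 cores
        return Fraction(q[:-1]) / 1000
    if q[-1] in _DECIMAL:
        return Fraction(q[:-1]) * _DECIMAL[q[-1]]
    return Fraction(q)


def fits_quota(hard: Dict[str, str], existing: List[Dict[str, str]],
               new_pod: Dict[str, str]) -> bool:
    """Return True if admitting new_pod keeps every quota key within its limit."""
    for key, limit in hard.items():
        used = sum((parse_quantity(p.get(key, "0")) for p in existing), Fraction(0))
        if used + parse_quantity(new_pod.get(key, "0")) > parse_quantity(limit):
            return False
    return True


if __name__ == "__main__":
    quota = {"requests.cpu": "1", "requests.memory": "1Gi"}
    running = [{"requests.cpu": "500m", "requests.memory": "256Mi"},
               {"requests.cpu": "250m", "requests.memory": "256Mi"}]
    # True: lands exactly on the CPU and memory limits.
    print(fits_quota(quota, running, {"requests.cpu": "250m", "requests.memory": "512Mi"}))
    # False: CPU requests would total 1.05 cores.
    print(fits_quota(quota, running, {"requests.cpu": "300m", "requests.memory": "128Mi"}))
```

Using `Fraction` instead of floats keeps sums like 0.5 + 0.25 + 0.25 cores exact, so a pod that lands precisely on the limit is not rejected by rounding error.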

What are the pros and cons of choosing Docker Swarm over Kubernetes?

Docker Swarm is simpler to learn and deploy, making it suitable for smaller teams and simpler applications. However, Kubernetes offers more advanced features and a more robust ecosystem for large-scale deployments and complex applications.