AWS Lambda’s custom runtimes give developers considerable flexibility in language and framework selection. They allow Lambda to execute code written in languages it does not support natively, broadening the range of workloads the platform can serve. By understanding how custom runtimes work, developers can unlock new possibilities, optimize performance, and tailor their serverless applications to meet specific project requirements.

The following sections will dissect the core components, advantages, and practical implementations of custom runtimes within the AWS Lambda ecosystem.

Custom runtimes are essentially wrappers that bridge the gap between the AWS Lambda execution environment and the developer’s code. They provide a mechanism for handling the invocation lifecycle, including receiving events, executing the function code, and returning results. This decoupling of the underlying execution environment from the function code itself allows for greater portability and the use of specialized libraries and frameworks, resulting in a highly adaptable serverless architecture.

Defining Custom Runtimes in AWS Lambda

AWS Lambda is a serverless compute service that allows developers to run code without provisioning or managing servers. A key feature of Lambda is its support for various programming languages and runtimes. However, Lambda doesn’t natively support every language. Custom runtimes address this limitation, expanding the range of languages developers can use to build serverless applications.

Fundamental Concept of Custom Runtimes

The core concept behind custom runtimes in AWS Lambda is to provide a mechanism for executing code written in languages not directly supported by the service. This is achieved by allowing developers to package a runtime environment along with their function code. This runtime environment acts as an intermediary, handling the interaction between the Lambda execution environment and the developer’s code.

Definition and Purpose of Custom Runtimes

A custom runtime in AWS Lambda is a component that enables the execution of code written in programming languages or environments not natively supported by Lambda. Its primary purpose is to provide a bridge between the Lambda execution environment and the developer’s code, handling the tasks of:

- Receiving invocation events from Lambda.

- Passing these events to the function code.

- Receiving the function’s output.

- Returning the output to Lambda.

This architecture allows developers to use a wider variety of programming languages and frameworks, increasing flexibility and reducing vendor lock-in. The custom runtime effectively defines how Lambda interacts with the developer’s code, allowing the function to be written in almost any language as long as a compatible runtime is created.

Languages Supported Through Custom Runtimes

Custom runtimes extend the capabilities of AWS Lambda by enabling the use of languages beyond those that are natively supported. This is achieved by allowing developers to create a runtime environment that can execute code written in a specific language. For instance, languages like Go, Rust, and languages with specialized frameworks can be utilized through custom runtimes.

- Go: Developers can leverage the performance benefits of Go for Lambda functions. The Go code is compiled ahead of time into a binary (named `bootstrap` on the OS-only runtimes), and a library such as `aws-lambda-go` implements the interaction with the Lambda environment.

- Rust: Rust’s memory safety and performance characteristics make it a viable choice for serverless applications. The custom runtime would manage the interaction between the compiled Rust code and the Lambda environment.

- Languages with Specialized Frameworks: Even for a natively supported language such as Ruby, a custom runtime lets developers control the interpreter version and how dependencies are loaded within the Lambda environment, which can help when running complex web applications.

This approach allows developers to select the best language or framework for their specific use case, leading to more efficient and tailored serverless applications.

Why Use Custom Runtimes?

Custom runtimes in AWS Lambda provide developers with enhanced flexibility and control over their function execution environments. This capability allows for the utilization of programming languages and frameworks not natively supported by AWS Lambda, opening up a broader range of possibilities for application development and deployment. The benefits extend beyond simple language support, offering potential improvements in performance, cost optimization, and the integration of specialized tools and libraries.

Extending Language Support

One of the primary advantages of custom runtimes is the ability to use programming languages not directly supported by AWS Lambda’s standard runtime environment. This flexibility enables developers to leverage their existing skills and codebases, reducing the need for rewriting applications in supported languages.

- Go: Developers can write Lambda functions in Go, a language known for its performance and efficiency. This is particularly beneficial for computationally intensive tasks or applications requiring low latency. For example, a web application that processes image transformations could benefit from the speed of Go.

- Rust: Rust offers memory safety and performance characteristics, making it suitable for building secure and high-performance applications. Using Rust in Lambda functions can improve the security posture and execution speed of tasks, such as data validation or encryption.

- Pascal/Delphi: For developers with existing codebases in Pascal or Delphi, custom runtimes provide a path to integrate these applications into a serverless architecture, minimizing the need for extensive code modifications.

- COBOL: Organizations can migrate legacy COBOL applications to a serverless environment. This enables the modernization of older systems without rewriting the entire application.

Optimizing Performance

Custom runtimes can be optimized for specific workloads, potentially leading to performance improvements. This is achieved through fine-tuning the runtime environment, including the selection of libraries and the configuration of the execution engine.

- Specific Library Optimization: By including only the necessary libraries and dependencies, the size of the deployment package can be reduced, which can shorten cold start times. For instance, a Python-based Lambda function using a custom runtime can be optimized by removing unnecessary modules, reducing the deployment package size.

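- Customized Execution Environments: Custom runtimes give control over runtime-level settings, such as interpreter or virtual machine flags and garbage collection options, that a managed runtime largely sets for you. Memory allocation (and the CPU share Lambda allocates in proportion to it) is a function setting available with any runtime, so tune it alongside the runtime configuration based on the workload.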

- Performance Benchmarking: Developers can perform rigorous performance benchmarking to optimize the custom runtime for specific use cases. This can involve measuring the execution time of various tasks, identifying bottlenecks, and implementing optimizations.

Reducing Costs

Custom runtimes can contribute to cost reduction by optimizing resource utilization and reducing the overall execution time of Lambda functions. This can be achieved through several strategies.

- Reduced Cold Start Times: Optimized custom runtimes, with smaller deployment packages, can lead to faster cold start times, minimizing the duration for which resources are allocated and charged.

- Efficient Resource Utilization: By fine-tuning the runtime environment, you can ensure that Lambda functions utilize only the necessary resources, preventing over-provisioning and reducing costs.

- Optimized Execution Time: The use of efficient languages or optimized libraries in a custom runtime can reduce the overall execution time of a function, which in turn reduces the cost.
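Lambda’s compute charge scales with allocated memory multiplied by billed duration (GB-seconds), and duration is billed in 1 ms increments, so shortening execution time reduces duration cost proportionally at a given memory size. The Python sketch below shows only that arithmetic; the per-GB-second price is deliberately a parameter rather than a quoted rate, and request charges and the free tier are ignored. `gb_seconds` and `compute_cost` are illustrative names.

```python
import math


def gb_seconds(memory_mb, duration_ms):
    """GB-seconds for one invocation, rounding duration up to whole milliseconds."""
    billed_ms = math.ceil(duration_ms)
    return (memory_mb / 1024) * (billed_ms / 1000)


def compute_cost(memory_mb, duration_ms, invocations, price_per_gb_second):
    """Duration cost only: request charges and the free tier are not modeled."""
    return gb_seconds(memory_mb, duration_ms) * invocations * price_per_gb_second
```

For example, at 512 MB, trimming average duration from 200 ms to 150 ms lowers usage per invocation from 0.1 to 0.075 GB-seconds, a 25% reduction in duration charges.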

Integration with Specialized Tools and Libraries

Custom runtimes enable the integration of specialized tools and libraries that are not readily available in standard Lambda runtimes. This can significantly enhance the capabilities of Lambda functions.

- Specialized Scientific Computing Libraries: Researchers and data scientists can integrate specialized scientific computing libraries, such as those for machine learning or data analysis, into their Lambda functions.

- Custom Monitoring and Logging Agents: Developers can integrate custom monitoring and logging agents to collect detailed performance metrics and log data specific to their application requirements.

- Proprietary Software Integration: Custom runtimes can integrate with proprietary software or libraries that are not publicly available.

Supported Languages and Frameworks

Custom runtimes in AWS Lambda significantly broaden the scope of programming languages and frameworks that can be employed for serverless application development. This flexibility is a key advantage, enabling developers to leverage their existing skills and choose the best tools for specific tasks, optimizing for performance, cost, and maintainability. The ability to support diverse languages and frameworks is a direct result of the runtime’s architecture, which decouples the execution environment from the language implementation itself. Custom runtimes empower developers to select programming languages based on project requirements, team expertise, and performance characteristics.

This adaptability allows for innovation and efficiency, as teams are not limited to the languages natively supported by Lambda. The core principle is that any language or framework that can be packaged into a Linux-compatible executable can be used.

Language Selection Flexibility

The adaptability of custom runtimes stems from their ability to execute any program that adheres to the Lambda Runtime API. This HTTP API defines the communication protocol between the Lambda service and the custom runtime: the runtime reads the API’s address from the `AWS_LAMBDA_RUNTIME_API` environment variable, polls the `/runtime/invocation/next` endpoint for events, and posts results to per-request `/response` or `/error` endpoints. The runtime handles the initialization and invocation phases of the function, while standard output and standard error are used only for logging. This decoupling allows for the use of virtually any language or framework.
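Because the Runtime API is plain HTTP, the protocol can be summarized by the URLs a bootstrap process calls. The Python sketch below builds them, assuming the `2018-06-01` API version path used in AWS’s Runtime API documentation and a `host:port` value taken from `AWS_LAMBDA_RUNTIME_API`; the helper name `runtime_api_urls` is hypothetical.

```python
import os


def runtime_api_urls(request_id=None, api=None):
    """Return the Runtime API endpoints a custom runtime calls.

    `api` defaults to AWS_LAMBDA_RUNTIME_API, the host:port string that
    Lambda sets inside the execution environment.
    """
    api = api or os.environ["AWS_LAMBDA_RUNTIME_API"]
    base = f"http://{api}/2018-06-01/runtime"
    urls = {
        # GET: blocks until the next event is available
        "next": f"{base}/invocation/next",
        # POST: report a failure that happened during initialization
        "init_error": f"{base}/init/error",
    }
    if request_id is not None:
        # POST: return the handler's result for this request
        urls["response"] = f"{base}/invocation/{request_id}/response"
        # POST: report that this particular invocation failed
        urls["error"] = f"{base}/invocation/{request_id}/error"
    return urls
```

For example, `runtime_api_urls("abc-123", api="127.0.0.1:9001")["response"]` evaluates to `http://127.0.0.1:9001/2018-06-01/runtime/invocation/abc-123/response`.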

Supported Languages and Use Cases

The following list details several programming languages and frameworks frequently used with custom runtimes, along with their typical use cases:

- Go: Go’s speed and concurrency features make it well suited to high-performance applications, such as processing large datasets, handling real-time data streams, and building APIs. Because Go compiles to a single lightweight binary, it typically starts quickly, which is important in a serverless environment.

- Rust: Rust provides memory safety without garbage collection, which is advantageous for resource-intensive applications, especially those sensitive to latency and memory usage. It’s suitable for applications requiring high performance and low-level control, like image processing or network services.

- C/C++: For applications that need low-level control, or that integrate with existing C/C++ libraries, custom runtimes offer a path to bring this functionality to Lambda. This is particularly useful for scientific computing and other high-performance computing tasks.

- PHP: PHP, while not traditionally a serverless language, can be adapted using custom runtimes, allowing the execution of existing PHP applications or integrating PHP into microservices architectures. This enables the reuse of existing codebases and frameworks, such as Laravel or Symfony.

- Ruby: Although Ruby is natively supported, a custom runtime can run Ruby versions or configurations not offered by the managed runtime. This is particularly relevant for applications built with Ruby on Rails or other Ruby frameworks, facilitating the migration of existing Ruby applications to a serverless model.

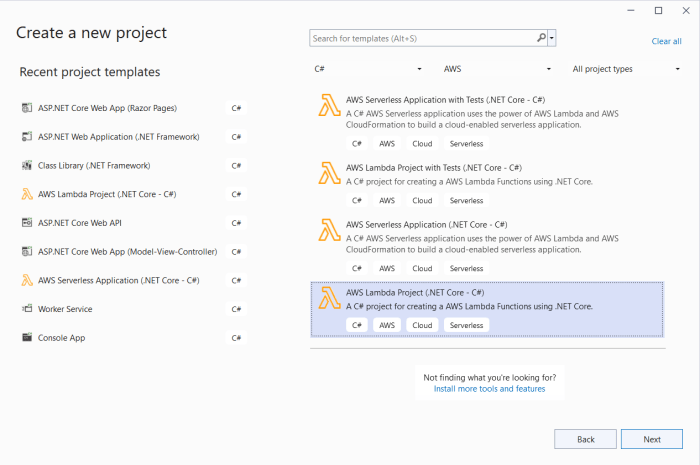

- .NET (C#, F#): .NET is also natively supported; custom runtimes let developers run .NET versions not offered as managed runtimes or deploy ahead-of-time compiled .NET binaries, while still using their existing .NET skills and .NET’s extensive library support. This facilitates the migration of .NET applications to a serverless architecture, or building new applications using .NET’s performance capabilities.

- Python: While Python is natively supported, custom runtimes can be used to manage specific Python versions, optimize the execution environment, or integrate with custom libraries and dependencies. It offers flexibility in managing dependencies and customizing the Python environment.

- Node.js: Though Node.js is natively supported, custom runtimes offer control over Node.js versions and allow for fine-tuning the environment for performance. They can also be used for custom pre-processing or post-processing steps.

- Java: Although Java is natively supported, custom runtimes can provide enhanced control over the JVM and enable the integration of specific Java libraries and frameworks. This facilitates the optimization of Java-based applications for serverless environments.

- Frameworks: Beyond specific languages, custom runtimes can support various frameworks, including:

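- Serverless Frameworks: Tools such as the Serverless Framework and Claudia.js are deployment tools rather than runtimes; they package and deploy Lambda functions, and the Serverless Framework can also deploy functions that use custom runtimes.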

- Web Frameworks: Like Flask (Python), Express.js (Node.js), and Spring Boot (Java), enabling the development of APIs and web applications within Lambda functions.

- Machine Learning Frameworks: TensorFlow, PyTorch (Python), and others, used for deploying machine learning models and inference services.

The Custom Runtime Interface

The custom runtime interface defines the contract between your runtime (and, through it, your function code) and the AWS Lambda service. It dictates how Lambda invokes your function, passes event data, and receives the function’s response. Understanding this interface is crucial for building and deploying custom runtimes effectively.

The Role of the Lambda Runtime Interface Emulator (RIE)

The Lambda Runtime Interface Emulator (RIE) is a useful tool for developing and testing custom runtimes locally. It is a lightweight web server that emulates the Lambda Runtime API, allowing developers to validate their runtime implementation without deploying to AWS. The RIE emulates the core functionalities of the Lambda service, including:

- Invocation: The RIE accepts invocation requests as HTTP POSTs on a local endpoint and passes each event to the runtime through the emulated Runtime API, simulating how Lambda invokes the function handler.

- Environment Variables: It sets the variables the runtime needs to reach the emulated API. Function-specific configuration must be supplied by the developer (for example, with `docker run -e`), so the local environment matches production only as closely as the values you provide.

- Response Handling: The RIE returns whatever the runtime posts to the response endpoint as the HTTP response to the local invocation, which lets you check that the runtime formats and returns the function’s output correctly.

- Logs and Errors: It captures and displays logs and error messages generated by the runtime and the function code, providing valuable debugging information.

By using the RIE, developers can quickly iterate on their runtime code, identify and fix issues, and check compatibility with the Lambda service before deployment. This reduces development time and the potential for errors in production. The RIE runs as a local process, commonly as the entrypoint of a container image, and test events are sent to it with an HTTP client such as `curl`, which makes it easy to integrate into local development workflows.
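As a sketch of the local workflow, assume the RIE is reachable on port 9000 of the local machine (for example, a container started with `docker run -p 9000:8080 <image>`). A test invocation is then an HTTP POST of the JSON event to the emulator’s invoke path, `/2015-03-31/functions/function/invocations`, as used in AWS’s RIE documentation. The helper names below are hypothetical, and `invoke_locally` assumes the handler returns JSON.

```python
import json
import urllib.request


def build_rie_request(event, host="localhost", port=9000):
    """Build (but do not send) a POST request to a locally running RIE."""
    url = f"http://{host}:{port}/2015-03-31/functions/function/invocations"
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_locally(event, **kwargs):
    """Send the event to the emulator and decode the function's JSON result."""
    request = build_rie_request(event, **kwargs)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read())
```

With the container running, `invoke_locally({"name": "test"})` returns the handler’s result; the function’s logs appear in the container’s output rather than in this response.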

Interaction Between Function Code, the Runtime, and the Lambda Service

The interaction between the function code, the custom runtime, and the Lambda service is a well-defined process involving a series of sequential steps. Understanding this process is fundamental to the operation of custom runtimes. The following is a detailed breakdown of the interaction:

- Invocation Request: The Lambda service receives an invocation request, triggered by an event (e.g., an API Gateway request, an S3 object creation).

- Runtime Initialization: The Lambda service starts the custom runtime process. The runtime is responsible for initializing itself and preparing to receive invocations. This often includes loading necessary libraries and reading configuration from environment variables that Lambda sets, such as `_HANDLER` and `AWS_LAMBDA_RUNTIME_API`.

- Event Retrieval: The runtime requests the next event by sending an HTTP GET to the Runtime API’s `/runtime/invocation/next` endpoint. The call blocks until an event is available; the response body contains the event data, and headers such as `Lambda-Runtime-Aws-Request-Id` identify the invocation.

- Function Execution: The runtime passes the event data to the function handler (your code). The function code processes the event and generates a response.

- Response Transmission: The function handler returns a response to the runtime. The runtime formats the response according to the Lambda service’s requirements (e.g., JSON format).

- Response Delivery: The runtime posts the formatted response to the Runtime API’s `/runtime/invocation/{request-id}/response` endpoint (or, on failure, to the matching `/error` endpoint).

- Log Handling: During the entire process, the runtime and the function code can write logs to standard output (stdout) and standard error (stderr). Lambda captures these logs and makes them available for monitoring and debugging.

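- Iteration and Shutdown: After posting a response, the runtime loops back and requests the next event. Between invocations Lambda may freeze the execution environment, and Lambda decides when to shut the environment down. If an invocation exceeds the function’s configured timeout or the runtime process exits, Lambda ends that invocation with an error and resets the environment before the next one.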
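The sequence above maps onto a short polling loop. The sketch below is a simplified Python `bootstrap`, assuming a Python interpreter is available in the execution environment (OS-only runtimes do not ship one, so it would have to be bundled, for example in a layer) and that the handler is passed in as a callable taking only the event. It omits init-error reporting, deadline handling, and the `context` object a production runtime would build; `RuntimeClient`, `process_next`, and `main` are hypothetical names.

```python
import json
import os
import urllib.request


class RuntimeClient:
    """Thin wrapper over the Lambda Runtime API using only the standard library."""

    def __init__(self, api=None):
        api = api or os.environ["AWS_LAMBDA_RUNTIME_API"]
        self.base = f"http://{api}/2018-06-01/runtime/invocation"

    def next_invocation(self):
        # Blocks (no timeout) until Lambda has an event for this environment.
        with urllib.request.urlopen(f"{self.base}/next") as resp:
            request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
            return request_id, json.loads(resp.read())

    def _post(self, url, payload):
        data = json.dumps(payload).encode("utf-8")
        req = urllib.request.Request(url, data=data, method="POST")
        with urllib.request.urlopen(req) as resp:
            resp.read()

    def send_response(self, request_id, result):
        self._post(f"{self.base}/{request_id}/response", result)

    def send_error(self, request_id, exc):
        payload = {"errorMessage": str(exc), "errorType": type(exc).__name__}
        self._post(f"{self.base}/{request_id}/error", payload)


def process_next(client, handler):
    """Fetch one event, run the handler, and report the outcome to Lambda."""
    request_id, event = client.next_invocation()
    try:
        result = handler(event)
    except Exception as exc:  # any handler failure becomes an invocation error
        client.send_error(request_id, exc)
    else:
        client.send_response(request_id, result)


def main(handler):
    client = RuntimeClient()
    while True:  # Lambda may freeze the process between iterations
        process_next(client, handler)
```

Passing the client into `process_next` keeps the loop testable without a live Runtime API: a stub object with the same three methods can stand in for `RuntimeClient`.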

The critical aspect of this interaction is the contract between the Lambda service and the custom runtime. The runtime must adhere to specific protocols and formats for event retrieval, response transmission, and log handling. The Lambda service provides the event data, and the runtime returns the function’s output. The use of environment variables for configuration, and the logging of standard output and error, are key parts of this process.

Core Components of the Custom Runtime Interface

The custom runtime interface comprises several core components that work together to enable the execution of function code within the Lambda environment. The diagram below illustrates the core components and their interactions.

Diagram Description: The diagram illustrates the interaction between the Lambda service, a custom runtime, and the function code. The Lambda service, represented by a box at the top, initiates the process. Arrows indicate the flow of data and control. The Lambda service sends invocation requests and event data to the custom runtime. The custom runtime, depicted as a central box, receives the event data, passes it to the function code (another box below the runtime), and receives the function’s response.

The runtime then sends the response back to the Lambda service. Logs from both the runtime and the function code are directed to the Lambda service for monitoring. Key elements include the event source, the function code (which contains the business logic), the runtime interface, the Lambda service, and the response that is generated. The Lambda service is responsible for the initial trigger and final delivery of the response.

The custom runtime bridges the gap, facilitating communication between the service and the function code.

The following are the components:

- Lambda Service: The core service that manages the execution environment, invokes functions, and handles the overall lifecycle of the function. It provides the event data and receives the function’s response.

- Custom Runtime: This is the component developed by the user. It receives events from the Lambda service, invokes the function code, and returns the response. The runtime must adhere to the Lambda runtime API.

- Function Code: This is the user-defined code that performs the actual business logic. It receives the event data from the runtime, processes it, and generates a response.

- Event Source: This represents the source that triggers the Lambda function invocation. It could be an API Gateway, an S3 bucket, or another AWS service. The event data originates from the event source.

- Runtime Interface API: This is the core of the interface: an HTTP API, whose address the runtime reads from the `AWS_LAMBDA_RUNTIME_API` environment variable, with endpoints for retrieving the next event, sending the response, and reporting invocation and initialization errors. It is the bridge between the Lambda service and the runtime.

- Logging Mechanism: Both the runtime and the function code can generate logs, which Lambda captures and sends to Amazon CloudWatch Logs for monitoring and debugging. Standard output and standard error are used for logging.

The interaction between these components forms the basis of the custom runtime interface. The Lambda service handles the invocation and provides the execution environment. The custom runtime bridges the gap between the service and the function code.

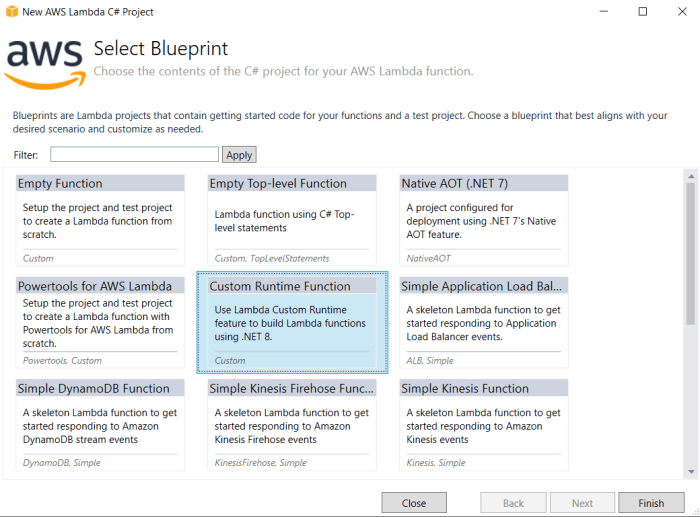

Building a Custom Runtime

Building a custom runtime allows developers to execute code in AWS Lambda using languages or frameworks not natively supported. This provides flexibility and control over the execution environment, enabling the use of specialized tools and libraries. The following sections detail the steps and processes involved in creating and deploying a custom runtime.

Building a Basic Custom Runtime: Steps and Processes

Creating a custom runtime involves several key steps, from writing the bootstrap script to packaging and deploying the runtime. This process ensures the Lambda function can correctly execute code within the specified environment. The steps outlined below provide a structured approach to building a basic custom runtime.

- Choose a Base Language/Environment: Select the programming language or environment for the custom runtime. This will influence the libraries and dependencies required. For example, Python, Node.js, or a compiled language like Go can be used. Note that OS-only runtimes such as `provided.al2023` do not include a language interpreter, so a runtime written in an interpreted language must bundle its own.

- Write the Bootstrap Script: This script, an executable file named `bootstrap`, is the entry point for the runtime. It’s responsible for receiving the Lambda invocation events, executing the handler function, and returning the results to the Lambda service. The bootstrap script must:

- Retrieve the handler function’s name from the `_HANDLER` environment variable.

- Fetch invocation events from the Lambda runtime API.

- Execute the handler function with the event data.

- Send the function’s response back to the Lambda runtime API.

For instance, a Python bootstrap script might use the `requests` library (which must be bundled) or the standard library’s `urllib.request` to communicate with the Lambda runtime API.

- Implement the Handler Function: The handler function is the code that will be executed when the Lambda function is invoked. This function receives the event data as input and returns the result. The handler function’s implementation depends on the specific task the Lambda function performs.

- Create a Runtime Layer: Package the bootstrap script and any necessary dependencies (e.g., libraries or an interpreter) into an archive and upload it as a Lambda layer, which provides the runtime environment for the function. The handler code usually goes in the function’s own deployment package so it can be updated independently of the runtime.

- Configure the Lambda Function: Create a Lambda function that uses an OS-only runtime such as `provided.al2023`, add the layer containing the runtime, and set the handler name. Lambda passes the handler name to the bootstrap script in the `_HANDLER` environment variable.

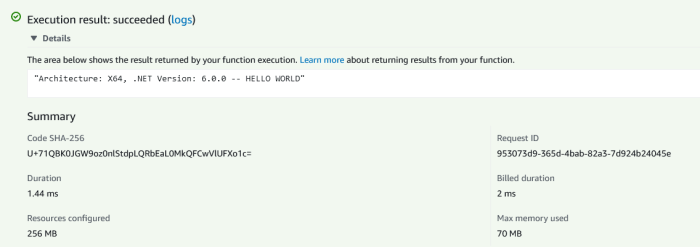

- Test the Lambda Function: Invoke the Lambda function with test events to verify it executes correctly and returns the expected results. This involves testing various input scenarios to ensure the function behaves as intended.
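The handler lookup performed by the bootstrap can be sketched in Python as follows. It assumes the common `module.function` convention (for example `main.handler`) and that the function’s code directory, given by `LAMBDA_TASK_ROOT`, should be importable; a custom runtime is free to interpret `_HANDLER` differently. `load_handler` is a hypothetical helper.

```python
import importlib
import os
import sys


def load_handler(spec=None, task_root=None):
    """Resolve a 'module.function' handler string to a callable."""
    spec = spec or os.environ["_HANDLER"]
    task_root = task_root or os.environ.get("LAMBDA_TASK_ROOT")
    if task_root and task_root not in sys.path:
        # Make the function's code directory importable
        sys.path.insert(0, task_root)
    module_name, _, func_name = spec.rpartition(".")
    if not module_name:
        raise ValueError(f"handler {spec!r} is not in module.function form")
    module = importlib.import_module(module_name)
    handler = getattr(module, func_name)
    if not callable(handler):
        raise TypeError(f"handler {spec!r} does not name a callable")
    return handler
```

Failing fast here, before the first call to `/runtime/invocation/next`, lets the runtime report a misconfigured handler as an initialization error instead of failing every invocation.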

Packaging and Deploying a Custom Runtime to Lambda

Deploying a custom runtime to Lambda involves packaging the runtime components and making them accessible to the Lambda function. The deployment process ensures the runtime environment is available when the function is invoked. The steps outlined below detail the process of packaging and deploying a custom runtime.

- Package the Runtime Components: Create a ZIP archive containing the bootstrap script, the handler function, and any required dependencies. The directory structure within the ZIP archive is critical: the `bootstrap` file must be at the root of the archive and have execute permissions, and dependencies are often placed in a `lib` or `vendor` directory.

- Create a Lambda Layer: Upload the ZIP archive to AWS Lambda as a layer. The layer encapsulates the runtime environment. When creating the layer, specify the compatible architectures (e.g., x86_64, arm64) to record which architectures the layer supports; any binaries it contains must be compiled for the same architecture as the Lambda function.

- Configure the Lambda Function:

- Create or update a Lambda function that uses an OS-only runtime such as `provided.al2023` (or `provided.al2`).

- In the function configuration, add the Lambda layer created in the previous step. This makes the runtime available to the function.

- Specify the handler function’s name in the function configuration. Lambda passes this value to the runtime in the `_HANDLER` environment variable, and the bootstrap script decides how to interpret it (e.g., `main.handler` if `main.py` contains a function called `handler`).

- Set Environment Variables: Configure any environment variables required by the runtime or the handler function. These variables can include configuration settings, references to API keys held in a secrets store, or other necessary information.

- Deploy and Test: Deploy the Lambda function and test it with various invocation events. Verify that the function executes correctly and returns the expected results. Use the Lambda console, the AWS CLI, or an SDK to invoke the function.

- Monitor and Troubleshoot: Monitor the function’s execution using CloudWatch logs and metrics. If any issues arise, review the logs to identify the root cause. Common issues include incorrect file paths, missing dependencies, or errors in the bootstrap script.
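Because Lambda runs `bootstrap` directly, a common packaging mistake is losing its execute bit. The Python sketch below writes an archive with the standard `zipfile` module and stores Unix permissions through `ZipInfo.external_attr`, which is one common way to preserve the mode; the archive entries in the usage example are placeholders, and `write_runtime_zip` is a hypothetical helper.

```python
import stat
import zipfile


def write_runtime_zip(zip_path, files):
    """Write a function or layer ZIP from a mapping of archive names to bytes.

    The entry named 'bootstrap' is stored with mode 0o755 so Lambda can
    execute it; every other entry gets 0o644.
    """
    with zipfile.ZipFile(zip_path, "w") as zf:
        for name, data in files.items():
            info = zipfile.ZipInfo(name)
            info.compress_type = zipfile.ZIP_DEFLATED
            info.create_system = 3  # mark as created on Unix so modes are honored
            mode = 0o755 if name == "bootstrap" else 0o644
            # The upper 16 bits of external_attr hold the Unix file mode
            info.external_attr = (stat.S_IFREG | mode) << 16
            zf.writestr(info, data)
```

For example, `write_runtime_zip("runtime.zip", {"bootstrap": b"#!/bin/sh\n...", "lib/util.py": b"..."})` produces an archive with `bootstrap` at the root; when the archive is used as a layer, Lambda extracts it so the file appears at `/opt/bootstrap`.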

Runtime Environment Variables and Configuration

Environment variables and configuration are crucial for customizing the behavior of custom runtimes in AWS Lambda. They allow developers to inject settings, secrets, and other runtime-specific information without modifying the underlying code. This promotes portability, security, and easier management across different environments.

Environment Variables in Custom Runtimes

Environment variables play a vital role in the execution of custom runtimes. They provide a mechanism for injecting configuration data into the runtime environment, enabling dynamic behavior and adaptation based on the specific needs of the Lambda function. The custom runtime accesses these variables through standard environment variable access methods, such as the `os.environ` mapping in Python or `std::getenv()` in C++.
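Alongside user-defined settings, Lambda sets reserved variables that a custom runtime depends on, notably `AWS_LAMBDA_RUNTIME_API`, `_HANDLER`, and `LAMBDA_TASK_ROOT`. The Python sketch below gathers them at startup and fails fast if a required one is missing; `RuntimeEnv` and `read_runtime_env` are illustrative names, and accepting an explicit mapping is a choice made here so the function can be exercised outside Lambda.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class RuntimeEnv:
    runtime_api: str  # host:port of the Runtime API
    handler: str      # handler string from the function configuration
    task_root: str    # directory containing the function code


def read_runtime_env(environ=None):
    """Collect the reserved variables a custom runtime needs at startup."""
    env = os.environ if environ is None else environ
    missing = [k for k in ("AWS_LAMBDA_RUNTIME_API", "_HANDLER") if k not in env]
    if missing:
        raise RuntimeError(f"missing reserved variables: {', '.join(missing)}")
    return RuntimeEnv(
        runtime_api=env["AWS_LAMBDA_RUNTIME_API"],
        handler=env["_HANDLER"],
        # Lambda sets this to /var/task; fall back to that path when it is absent
        task_root=env.get("LAMBDA_TASK_ROOT", "/var/task"),
    )
```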

Configuration Options for Custom Runtimes

Custom runtimes offer several configuration options that can be managed through environment variables. These options enable developers to fine-tune the runtime’s behavior, including settings related to logging, security, and application-specific parameters. Configuring these aspects through environment variables promotes separation of concerns and allows for modifications without rebuilding or redeploying the code package, because updating a variable changes only the function configuration. The configuration process typically involves setting these variables within the Lambda function’s configuration in the AWS Management Console, the AWS CLI, or through infrastructure-as-code tools like AWS CloudFormation or Terraform.

Setting Environment Variables

Setting environment variables involves defining key-value pairs that the runtime can access during execution. The following table provides examples of how environment variables are commonly used within custom runtimes:

| Variable Name | Description | Example Value |
|---|---|---|
| `LOG_LEVEL` | Controls the verbosity of the application’s logging. | `INFO` |
| `DATABASE_URL` | Specifies the connection string for the application’s database. | `postgresql://user:password@host:port/database` |
| `API_KEY` | Stores an API key for interacting with external services. Storing sensitive data as a plain-text environment variable is not a security best practice; consider storing such values in AWS Secrets Manager and retrieving them at runtime. | `YOUR_API_KEY_12345` |
| `REGION` | Defines the AWS region the function should target. Lambda already sets the reserved `AWS_REGION` variable to the region where the function runs, so a custom variable is only needed to target a different region. | `us-east-1` |
| `S3_BUCKET_NAME` | Specifies the name of the S3 bucket used by the function. | `my-lambda-data-bucket` |
| `MAX_RETRIES` | Sets the maximum number of retries for an operation. | `3` |
| `TIMEOUT_SECONDS` | Sets the timeout, in seconds, for specific operations or requests. | `60` |
| `CUSTOM_ENDPOINT` | Defines a custom endpoint for an external service. | `https://api.example.com` |
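Because environment variables always arrive as strings, a runtime or handler typically parses and validates them once at startup. Below is a minimal Python sketch for a few of the variables in the table; the defaults (`INFO`, `3`, `60`) mirror the example values and are illustrative, treating `S3_BUCKET_NAME` as required is an assumption made for the example, and `AppConfig` and `load_config` are hypothetical names.

```python
import logging
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AppConfig:
    log_level: int
    max_retries: int
    timeout_seconds: float
    s3_bucket: str


def load_config(environ=None):
    """Parse application settings from environment variables, applying defaults."""
    env = os.environ if environ is None else environ
    level_name = env.get("LOG_LEVEL", "INFO").upper()
    # getLevelName maps a known name to its numeric level, else returns a string
    level = logging.getLevelName(level_name)
    if not isinstance(level, int):
        raise ValueError(f"unknown LOG_LEVEL: {level_name}")
    max_retries = int(env.get("MAX_RETRIES", "3"))
    if max_retries < 0:
        raise ValueError("MAX_RETRIES must be >= 0")
    return AppConfig(
        log_level=level,
        max_retries=max_retries,
        timeout_seconds=float(env.get("TIMEOUT_SECONDS", "60")),
        # Required here: a missing bucket raises KeyError at startup, not on first use
        s3_bucket=env["S3_BUCKET_NAME"],
    )
```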

Error Handling and Logging in Custom Runtimes

Implementing robust error handling and comprehensive logging is crucial for the operational efficiency, debugging capabilities, and overall reliability of custom runtimes within AWS Lambda. These elements are essential for diagnosing issues, monitoring performance, and ensuring that functions behave as expected under various circumstances. Effective error handling prevents unexpected function terminations and provides informative error messages, while logging captures valuable data for analysis and troubleshooting.

Error Handling in Custom Runtimes

Error handling in custom runtimes involves anticipating potential issues and gracefully managing them to prevent function failures and provide meaningful feedback. This includes handling exceptions, validating inputs, and managing external dependencies. The core principle is to identify and address errors at their source, providing informative error messages and, where appropriate, retrying operations or implementing fallback mechanisms. The methods for implementing error handling are:

- Exception Handling: Implement try-catch blocks within the runtime code to trap and handle exceptions raised by the underlying programming language or the Lambda function’s logic. This is a fundamental approach to manage unexpected situations.

- Input Validation: Validate the input data received by the function. This can prevent errors caused by malformed or incorrect input. Validation should occur early in the function’s execution to avoid processing invalid data.

- Dependency Management: Handle errors related to external dependencies, such as network connectivity issues, database connection problems, or API rate limits. Implement retry mechanisms, circuit breakers, or fallback strategies to mitigate these issues.

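- Error Propagation: Ensure that errors are propagated back to the Lambda service, including relevant context and details, so that Lambda can correctly report and handle function failures. In a custom runtime this means posting a JSON error document (with fields such as `errorMessage` and `errorType`) to the invocation’s `/error` endpoint of the Runtime API; if initialization fails, the runtime reports to the `/runtime/init/error` endpoint instead.

- Timeout Handling: Implement mechanisms to detect and handle approaching timeouts. A custom runtime can read each invocation’s deadline, in Unix epoch milliseconds, from the `Lambda-Runtime-Deadline-Ms` header of the `/invocation/next` response, and stop work or save progress before it is reached, because Lambda ends any invocation that exceeds the configured timeout.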
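The two runtime-specific points above can be sketched in Python. `build_error_report` produces a body for the `/error` endpoint using the `errorMessage`, `errorType`, and `stackTrace` fields described in the Runtime API documentation, and `remaining_time_ms` turns the `Lambda-Runtime-Deadline-Ms` header value into a time budget. Both names are hypothetical, and the optional error-type request header is left out of this sketch.

```python
import json
import time
import traceback


def build_error_report(exc):
    """Return the JSON body to POST to /runtime/invocation/{id}/error."""
    body = {
        "errorMessage": str(exc),
        "errorType": type(exc).__name__,
        # One string per formatted traceback chunk
        "stackTrace": traceback.format_exception(type(exc), exc, exc.__traceback__),
    }
    return json.dumps(body).encode("utf-8")


def remaining_time_ms(deadline_ms, now_ms=None):
    """Milliseconds left before the invocation deadline, never negative.

    `deadline_ms` is the Lambda-Runtime-Deadline-Ms header value
    (Unix epoch time in milliseconds), which arrives as a string.
    """
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    return max(0, int(deadline_ms) - now_ms)
```

A handler-facing `context.get_remaining_time_in_millis()` style method can be built on `remaining_time_ms`, which lets function code decide when to stop early.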

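Consider the following example illustrating basic exception handling in a Python handler that runs under a custom runtime:

```python
import json
import traceback


def some_operation(event):
    # Placeholder for your business logic (hypothetical helper)
    return {"received": event}


def handler(event, context):
    try:
        # Your function logic here
        result = some_operation(event)
        return {"statusCode": 200, "body": json.dumps(result)}
    except Exception as e:
        # Log the error details; Lambda captures stdout and stderr
        print(f"Error: {e}")
        traceback.print_exc()  # Print the full traceback to stderr
        # Return an HTTP-style error response to the caller
        return {"statusCode": 500, "body": json.dumps({"error": str(e)})}
```

This example demonstrates how to wrap the function logic within a `try-except` block. If an exception occurs, the code catches it, logs the error, prints the traceback for debugging, and returns an HTTP-style error response of the kind API Gateway proxy integrations expect. Note that Lambda treats this as a successful invocation; to have Lambda record a function error, the handler must let the exception propagate so the runtime can report it to the Runtime API’s `/error` endpoint. The same pattern of catching, logging, and reporting errors applies in any language a custom runtime supports.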

Logging in Custom Runtime Functions

Logging is critical for monitoring and debugging custom runtime functions. It provides visibility into function execution, helps identify performance bottlenecks, and aids in troubleshooting errors. The logs generated by a custom runtime are sent to Amazon CloudWatch Logs, where they can be analyzed and monitored. Configuration of logging for custom runtime functions involves:

- Choosing a Logging Library: Select an appropriate logging library for the programming language used by the custom runtime. Most languages have built-in logging capabilities or well-established third-party libraries.

- Configuring Log Levels: Define and utilize different log levels (e.g., DEBUG, INFO, WARNING, ERROR) to categorize log messages based on their severity and relevance. This enables filtering and analyzing logs based on specific criteria.

- Formatting Log Messages: Structure log messages to include relevant information, such as timestamps, function names, request IDs, and any relevant context. This ensures logs are easily readable and searchable.

- Integrating with Amazon CloudWatch Logs: Write log messages to the standard output (stdout) or standard error (stderr) streams, which Lambda captures automatically and sends to CloudWatch Logs.

- Adding Contextual Information: Include contextual information, such as the function’s invocation ID and any relevant input parameters, to facilitate troubleshooting.
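As an illustration of these points, here is a minimal Python sketch of structured logging: each record is written to stdout as a single JSON line carrying a timestamp, level, message, and request ID. The logger name `custom_runtime` and the `request_id` field are assumptions for the example; a runtime would take the real value from the `Lambda-Runtime-Aws-Request-Id` header of each invocation.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "message": record.getMessage(),
            # request_id is attached per call via `extra=`; None if absent
            "request_id": getattr(record, "request_id", None),
        }
        if record.exc_info:
            # Keep the traceback inside the same JSON object
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(name="custom_runtime", level=logging.INFO):
    """Create a logger that writes JSON lines to stdout (captured by Lambda)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace existing handlers so repeated calls don't duplicate output
    logger.setLevel(level)
    logger.propagate = False  # don't also emit through the root logger
    return logger


if __name__ == "__main__":
    log = make_logger()
    log.info("Processing event", extra={"request_id": "example-request-id"})
    try:
        {}["missing"]
    except KeyError:
        log.exception("Lookup failed", extra={"request_id": "example-request-id"})
```

Because every line is a self-contained JSON object, CloudWatch Logs Insights can filter on fields such as `level` or `request_id` instead of parsing free text.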

Consider the following example of logging in a Go function built with the `aws-lambda-go` library, whose `lambda.Start` talks to the Runtime API when the binary runs on an OS-only runtime such as `provided.al2023`:

```go
package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"github.com/aws/aws-lambda-go/lambda"
)

type Request struct {
	Name string `json:"name"`
}

type Response struct {
	Message string `json:"message"`
}

func HandleRequest(ctx context.Context, request Request) (Response, error) {
	log.Printf("Received request: %+v", request) // Log the incoming request
	if request.Name == "" {
		log.Println("Error: Name is required") // Log an error
		return Response{}, fmt.Errorf("name is required")
	}
	message := fmt.Sprintf("Hello, %s!", request.Name)
	log.Printf("Returning message: %s", message) // Log the outgoing message
	return Response{Message: message}, nil
}

func main() {
	// Set up logging (optional, but recommended)
	log.SetOutput(os.Stdout) // Direct logs to standard output instead of the default stderr
	lambda.Start(HandleRequest)
}
```

In this Go example, the `log` package writes messages to standard output because `log.SetOutput(os.Stdout)` overrides its default of standard error.

Lambda captures both streams and sends them to CloudWatch Logs, so the redirect is a matter of preference rather than a requirement. The example uses `log.Printf` to record contextual information (the request details and the returned message) and logs the validation error before returning it, which aids debugging.

Security Considerations for Custom Runtimes

Implementing custom runtimes in AWS Lambda introduces a new attack surface and necessitates careful consideration of security best practices. The flexibility afforded by custom runtimes is accompanied by the responsibility of securing the runtime environment and the code it executes. Neglecting these considerations can lead to vulnerabilities that could compromise the confidentiality, integrity, and availability of your Lambda functions and the resources they access.

Therefore, a proactive and layered security approach is crucial.

Security Best Practices for Custom Runtimes

Adhering to security best practices is paramount when designing and deploying custom runtimes. These practices span code development, runtime environment configuration, and monitoring; neglecting any of them can introduce vulnerabilities.

- Minimize the Attack Surface: Reduce the number of libraries, dependencies, and components included in your custom runtime. Each additional component increases the potential attack surface. Only include essential libraries and modules. For example, avoid including unnecessary utilities or development tools.

- Secure Dependencies: Regularly scan and update dependencies to address known vulnerabilities. Employ a Software Composition Analysis (SCA) tool to identify and manage dependencies. Ensure that all dependencies are from trusted sources.

- Implement Least Privilege: Grant the runtime only the minimum permissions necessary to execute its tasks. Avoid overly permissive IAM roles. Use the principle of least privilege to restrict access to AWS resources. For instance, if a Lambda function only needs to read from an S3 bucket, grant it only read access to that specific bucket.

- Input Validation and Sanitization: Implement robust input validation and sanitization techniques to prevent common vulnerabilities like cross-site scripting (XSS), SQL injection, and command injection. Carefully validate all inputs, including event payloads, environment variables, and configuration data. For example, use parameterized queries to prevent SQL injection.

- Code Signing and Integrity Checks: Employ code signing to verify the authenticity and integrity of the runtime code. Implement integrity checks, such as checksums or digital signatures, to ensure that the runtime code has not been tampered with. This helps to prevent the execution of malicious code.

- Secure Storage of Secrets: Never hardcode sensitive information, such as API keys, database passwords, and other secrets, directly into the runtime code. Use AWS Secrets Manager or AWS Systems Manager Parameter Store to securely store and manage secrets. Retrieve secrets dynamically during runtime.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address potential vulnerabilities in the custom runtime and the associated Lambda functions. This helps to proactively identify and remediate security weaknesses.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track the behavior of the custom runtime and the Lambda functions. Collect logs, metrics, and traces to detect anomalies, security incidents, and performance issues. Use a centralized logging system for easy analysis.

- Immutable Infrastructure: Treat your runtime as immutable. Avoid making modifications to the runtime environment after deployment. Instead, create new versions of the runtime with the necessary updates and deploy them.

- Security Updates and Patch Management: Establish a process for regularly updating the runtime environment with security patches and bug fixes. Automate the patching process whenever possible to ensure timely updates.
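The input-validation practice above can be sketched in Python with the standard-library `sqlite3` module standing in for a real database (an assumption made so the example is self-contained): the payload is checked against an explicit allow-list pattern, and the value is passed to the query as a bound parameter rather than spliced into the SQL string. The `username` field and its pattern are hypothetical.

```python
import re
import sqlite3

# Hypothetical rule: 3-32 letters, digits, underscores, or hyphens.
USERNAME_RE = re.compile(r"[A-Za-z0-9_-]{3,32}")


def validate_event(event):
    """Return the username if the payload has the expected shape, else raise ValueError."""
    if not isinstance(event, dict):
        raise ValueError("event must be a JSON object")
    username = event.get("username")
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        raise ValueError("invalid username")
    return username


def find_user(conn, username):
    """Look up a user with a bound parameter instead of string formatting."""
    # The '?' placeholder sends the value separately from the SQL text,
    # so input like "x' OR '1'='1" is compared as data, never executed as SQL.
    cur = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cur.fetchone()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    print(find_user(conn, validate_event({"username": "alice"})))  # (1, 'alice')
```

Validation and parameterization are complementary: even if a malformed value slipped past `validate_event`, the bound parameter would still keep it out of the SQL grammar.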

Securing the Runtime Environment

Securing the runtime environment is critical to protecting the Lambda function and the resources it accesses. This involves configuring the environment with security best practices and ensuring that it is isolated from potential threats. Consider these points:

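- Operating System Hardening: For runtimes deployed as .zip archives on an OS-only base such as Amazon Linux (`provided.al2023`), AWS manages and patches the operating system. When you supply your own container image, you choose the base image and are responsible for hardening it: remove unnecessary packages and services, apply security patches, and consider guidance such as the CIS Benchmarks.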

- Network Security: Configure network security controls, such as firewalls and security groups, to restrict network access to and from the Lambda function. Use VPC (Virtual Private Cloud) to isolate the Lambda function within a private network.

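- File System Security: Lambda mounts the deployment package read-only, and the only writable local directory is `/tmp`. Avoid leaving sensitive data in `/tmp`, which can persist between invocations in the same execution environment, and verify the integrity of runtime files to detect unauthorized changes.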

- Environment Variables Security: Protect environment variables from unauthorized access. Avoid storing sensitive information directly in environment variables. Encrypt environment variables if necessary.

- Regular Security Scans: Conduct regular security scans of the runtime environment to identify vulnerabilities. Utilize vulnerability scanners and penetration testing tools.
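File integrity checks of this kind can be approximated by comparing SHA-256 digests of runtime files against a manifest recorded at build time. This Python sketch assumes such a manifest exists (a hypothetical `{relative_path: hex_digest}` mapping); it could run in CI before deployment or once at runtime startup. For deployment-time guarantees, Lambda's own code signing feature is the managed alternative.

```python
import hashlib
import os


def sha256_file(path, chunk_size=65536):
    """Stream a file through SHA-256 and return the hex digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_files(root, manifest):
    """Return the manifest paths that are missing or whose digest differs.

    `manifest` is a hypothetical {relative_path: expected_hex_digest} mapping
    produced at build time and shipped alongside the runtime.
    """
    mismatched = []
    for rel_path, expected in manifest.items():
        full = os.path.join(root, rel_path)
        if not os.path.isfile(full) or sha256_file(full) != expected:
            mismatched.append(rel_path)
    return mismatched
```

An empty result means every listed file matches; a non-empty list is a signal to fail the build or abort runtime initialization.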

Security Considerations in a Table Format

The following table summarizes key security concerns, their potential impacts, and recommended mitigations when implementing custom runtimes in AWS Lambda.

| Security Concern | Impact | Mitigation |
|---|---|---|
| Vulnerable Dependencies | Compromised runtime, data breaches, service disruption. | Regularly scan and update dependencies; use a Software Composition Analysis (SCA) tool. |
| Insufficient Input Validation | Code injection, data breaches, unauthorized access. | Implement robust input validation and sanitization; use parameterized queries. |
| Unsecured Secrets | Unauthorized access to sensitive information, data breaches. | Store secrets in AWS Secrets Manager or AWS Systems Manager Parameter Store; avoid hardcoding secrets. |
| Lack of Code Integrity Checks | Execution of malicious code, compromise of the runtime. | Implement code signing and integrity checks using checksums or digital signatures. |
| Excessive Permissions | Unauthorized access to AWS resources, data breaches. | Grant the runtime only the minimum necessary permissions; use the principle of least privilege. |
| Unsecured Runtime Environment | Compromise of the runtime, data breaches, service disruption. | Harden the operating system; configure network security; implement file system security. |
| Inadequate Monitoring and Logging | Delayed detection of security incidents, difficulty in incident response. | Implement comprehensive monitoring and logging; collect logs, metrics, and traces. |
| Failure to Apply Security Patches | Exposure to known vulnerabilities, compromise of the runtime. | Establish a process for regular security updates and patch management; automate patching. |
| Lack of Code Signing | Execution of untrusted or malicious code. | Use code signing to verify the authenticity and integrity of the runtime code. |

Monitoring and Debugging Custom Runtimes

Effective monitoring and debugging are critical for the successful operation and maintenance of custom runtime AWS Lambda functions. These practices ensure optimal performance, rapid identification of issues, and streamlined troubleshooting. This section details the tools and techniques employed for monitoring and debugging custom runtimes, providing a comprehensive guide for developers.

Monitoring Custom Runtime Functions

Monitoring custom runtime functions necessitates a multi-faceted approach that incorporates various tools and strategies to capture and analyze relevant data. Comprehensive monitoring allows for proactive identification of performance bottlenecks, error conditions, and resource utilization patterns.

- CloudWatch Metrics: Amazon CloudWatch is the primary monitoring service for Lambda functions. It automatically collects metrics such as invocations, errors, duration, and throttles. Custom runtimes can also publish custom metrics for more granular insight into runtime behavior, for example by writing structured JSON log lines in the CloudWatch embedded metric format (EMF), from which CloudWatch extracts metrics automatically.

- CloudWatch Logs: Lambda functions, including those using custom runtimes, generate logs. CloudWatch Logs stores these logs, enabling developers to search, filter, and analyze them. Structured logging, such as JSON formatted logs, enhances the ability to extract specific data points for analysis.

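- Distributed Tracing with AWS X-Ray: AWS X-Ray provides distributed tracing capabilities. A custom runtime receives the trace header for each invocation in the `Lambda-Runtime-Trace-Id` header and should expose it to the function code (Lambda's convention is the `_X_AMZN_TRACE_ID` environment variable) so that X-Ray SDKs can continue the trace. This enables visualization of the request flow as it traverses the function and any downstream services, identifying latency issues and performance bottlenecks within the custom runtime and its dependencies.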

- Function Concurrency and Scaling: Monitoring the concurrency and scaling behavior of the custom runtime is essential. The `ConcurrentExecutions` metric shows how many invocations run at the same time; comparing it with reserved or account-level concurrency limits, alongside the `Throttles` metric, helps identify scaling issues or resource exhaustion.

- Custom Metrics for Application-Specific Data: Beyond the standard Lambda metrics, custom runtimes should emit application-specific metrics. These metrics could include the time taken for specific operations, the number of processed events, or the success rate of API calls within the runtime logic. This detailed information provides valuable insights into the performance and health of the custom runtime.

- Alerting and Notifications: Configure CloudWatch alarms to monitor key metrics and trigger notifications when thresholds are exceeded. Alerts can be set up for errors, duration, or other relevant performance indicators. Notifications can be sent via email, SMS, or other channels to alert developers to potential issues.
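To make the custom-metrics point concrete, here is a minimal Python sketch that emits one metric in the CloudWatch embedded metric format: a JSON log line whose `_aws` block tells CloudWatch which top-level fields are metrics and which are dimensions. The namespace, metric name, and dimension in the demo are hypothetical, and the sketch handles only a single metric and dimension set.

```python
import json
import time


def emf_record(namespace, metric_name, value, dimensions, unit="Milliseconds"):
    """Build one CloudWatch embedded metric format (EMF) log entry."""
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [list(dimensions)],  # one dimension set: the dict's keys
                    "Metrics": [{"Name": metric_name, "Unit": unit}],
                }
            ],
        },
        metric_name: value,  # the metric value is a top-level field
    }
    record.update(dimensions)  # dimension values are top-level fields too
    return record


def emit_metric(namespace, metric_name, value, dimensions, unit="Milliseconds"):
    """Print the EMF entry as one JSON line on stdout for CloudWatch Logs to ingest."""
    print(json.dumps(emf_record(namespace, metric_name, value, dimensions, unit)), flush=True)


if __name__ == "__main__":
    # Hypothetical namespace, metric, and dimension names
    emit_metric("MyCustomRuntime", "ParseDuration", 12.5, {"Operation": "parse"})
```

Once the metric exists in CloudWatch, it can back an alarm in the same way as the built-in Lambda metrics.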

Debugging Custom Runtimes

Debugging custom runtimes requires a methodical approach to identify and resolve issues efficiently. The process typically involves analyzing logs, reproducing errors, and using debugging tools.

- Log Analysis: Thorough log analysis is the cornerstone of debugging custom runtimes. Examining logs for error messages, stack traces, and detailed information about the function’s execution flow is crucial. Log entries should be structured and include timestamps, log levels (e.g., INFO, WARNING, ERROR), and context-specific information.

- Error Reproduction: The ability to reproduce errors is essential for debugging. This may involve simulating the same input data, environment variables, and configuration settings as the original error. Reproducing the error allows for controlled testing and validation of potential fixes.

- Local Testing and Debugging: Testing custom runtimes locally is a common practice. This allows for the use of local debuggers, such as those provided by IDEs (Integrated Development Environments), to step through the code, inspect variables, and identify the root cause of issues. This also provides faster iteration cycles compared to debugging in the cloud.

- Remote Debugging: For complex issues that are difficult to reproduce locally, remote debugging can be used. This involves connecting a local debugger to a running Lambda function in the cloud. This technique allows for real-time debugging and inspection of the runtime environment. However, remote debugging requires careful setup and security considerations.

- Tracing and Profiling: Using tools like AWS X-Ray and profilers can help identify performance bottlenecks. Tracing provides insights into the flow of requests and the time spent in different parts of the function. Profilers can analyze the code to identify the areas consuming the most resources.

- Code Reviews and Static Analysis: Code reviews and static analysis tools can help identify potential issues before deployment. These tools can detect errors, vulnerabilities, and code quality issues.
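Error reproduction and local testing often come down to replaying a captured event against the handler. The following Python sketch does that with a stand-in context object; the `make_fake_context` and `replay` helpers and the sample `handler` are hypothetical, and the context only mimics a few attribute names of the one the managed Python runtime provides. To exercise a full runtime loop locally, AWS also publishes a Runtime Interface Emulator for container images.

```python
import json
import time
import types


def make_fake_context(timeout_ms=3000, request_id="local-test-request"):
    """A stand-in for the Lambda context object with a few familiar attributes."""
    deadline = time.time() * 1000 + timeout_ms
    return types.SimpleNamespace(
        aws_request_id=request_id,
        function_name="local-function",  # hypothetical value
        get_remaining_time_in_millis=lambda: max(0, int(deadline - time.time() * 1000)),
    )


def replay(handler, event_json, timeout_ms=3000):
    """Invoke a handler with an event captured from logs or saved as a test fixture."""
    event = json.loads(event_json)
    return handler(event, make_fake_context(timeout_ms))


def handler(event, context):
    # Hypothetical handler under test
    if "name" not in event:
        raise KeyError("name")
    return {"message": f"Hello, {event['name']}!"}


if __name__ == "__main__":
    print(replay(handler, '{"name": "Ada"}'))  # {'message': 'Hello, Ada!'}
```

Running the replay under an IDE debugger lets you set breakpoints inside `handler` with exactly the input that failed in the cloud.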

Debugging Workflow Visualization

The debugging workflow for custom runtimes typically involves a series of steps, often iterative, to identify and resolve issues. The following description outlines a common workflow:

1. Symptom Identification

The process begins with the identification of a symptom, such as an error message, performance degradation, or unexpected behavior. This is often triggered by CloudWatch alarms or user reports.

2. Log Analysis

The next step is to analyze the logs generated by the custom runtime function. These logs are reviewed for error messages, stack traces, and other relevant information. The goal is to understand the root cause of the issue.

3. Error Reproduction

The error is reproduced, either locally or in a test environment. This allows for controlled testing and validation of potential fixes.

4. Debugging Tools

Debugging tools are used to step through the code, inspect variables, and identify the root cause of the issue. This may involve local debugging, remote debugging, or the use of profilers and tracers.

5. Code Modification

Based on the debugging findings, the code is modified to address the issue.

6. Testing and Validation

The modified code is tested to ensure that the issue is resolved and that no new issues have been introduced. This may involve unit tests, integration tests, and end-to-end tests.

7. Deployment and Monitoring

Once the code is validated, it is deployed to the production environment, and monitoring is used to ensure that the issue is resolved and that the function is performing as expected.

The process is iterative. If the initial debugging steps do not resolve the issue, the workflow repeats, possibly involving more in-depth analysis, more sophisticated debugging techniques, or code refactoring. The entire process is designed to identify the root cause of issues and provide a robust, maintainable custom runtime function.

Examples and Use Cases

Custom runtimes in AWS Lambda unlock a vast array of possibilities, enabling developers to leverage languages and frameworks not natively supported by Lambda. This flexibility allows for optimized performance, specialized functionality, and integration with existing infrastructure. The following examples demonstrate the power and versatility of custom runtimes across diverse application domains.

Real-World Applications Benefiting from Custom Runtimes

Custom runtimes are particularly valuable in situations demanding specific language features, performance characteristics, or integration with legacy systems. They facilitate a more tailored approach to serverless computing, leading to more efficient and maintainable applications.

- High-Performance Computing (HPC) and Scientific Simulations: For computationally intensive tasks, such as scientific simulations, financial modeling, or image processing, custom runtimes allow developers to leverage languages like Fortran or C/C++ directly within the Lambda environment. This bypasses the performance overhead associated with interpreted languages and allows for optimal utilization of CPU resources.

- Legacy System Integration: Many organizations have significant investments in legacy systems written in languages like COBOL or Delphi. Custom runtimes provide a bridge to integrate these systems with modern serverless architectures. This allows developers to expose legacy functionality as APIs without rewriting the entire application.

- Game Development Backends: Game developers often require custom logic for game servers, player authentication, and data processing. Custom runtimes enable the use of languages like Lua or C# (via Mono) to build performant and scalable game backend services.

- Specialized Data Processing: Applications that involve complex data transformations or require specific libraries that are not available in the standard Lambda runtimes can benefit from custom runtimes. Examples include applications using specialized image processing libraries or machine learning frameworks.

- IoT Device Communication and Data Ingestion: Custom runtimes can be used to build Lambda functions that interact with IoT devices using protocols that are not directly supported by the default Lambda runtimes. This allows for flexible and secure communication with a wide range of devices.

Specific Use Cases Where Custom Runtimes Are Advantageous

Custom runtimes provide clear advantages when specific requirements are not met by the standard Lambda runtime offerings.

- Performance-Critical Applications: When raw performance is paramount, such as in real-time data processing or high-frequency trading, custom runtimes allow developers to optimize for speed and efficiency by using compiled languages.

- Integration with Existing Codebases: Organizations can leverage existing codebases written in languages not natively supported by Lambda, reducing development time and cost. This is particularly beneficial when migrating applications to the cloud.

- Access to Specialized Libraries: Custom runtimes allow access to specialized libraries and frameworks that are not available in the standard Lambda runtimes. This is essential for applications that rely on proprietary algorithms or custom functionality.

- Compliance and Security Requirements: For applications that require specific security configurations or compliance with industry regulations, custom runtimes can be tailored to meet these requirements. This provides greater control over the runtime environment.

Examples of Custom Runtime Implementations for Different Programming Languages

Several languages and frameworks are commonly employed in custom runtime implementations. These implementations demonstrate the flexibility and adaptability of the approach.

- C/C++: C and C++ are often used for high-performance applications due to their efficiency and control over system resources. A custom runtime could involve compiling C/C++ code into a native executable that is then executed within the Lambda environment. The runtime would handle the necessary interactions with the Lambda service.

- Go: Go’s built-in concurrency features and fast compilation times make it well-suited for serverless applications. A custom runtime for Go typically involves compiling the Go code into a single binary that is then deployed to Lambda. The runtime would manage the invocation of the Go function and handle any necessary dependencies.

- Rust: Rust’s focus on memory safety and performance makes it an excellent choice for building secure and efficient serverless applications. A custom runtime for Rust would follow a similar approach to Go, compiling the Rust code into a binary that can be executed in Lambda.

- Lua: Lua is a lightweight and embeddable scripting language often used in game development and embedded systems. A custom runtime for Lua would involve embedding the Lua interpreter within the Lambda environment and executing Lua scripts.

- .NET/C# (Mono): While .NET Core and .NET 6+ have native Lambda support, Mono can be used to run .NET applications that are not compatible with those runtimes. A custom runtime using Mono involves compiling the C# code and deploying it to Lambda with the Mono runtime.
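For interpreted languages such as Lua, one of the runtime's first tasks is locating the function the user configured. Lambda passes the function's handler setting to a custom runtime in the `_HANDLER` environment variable and the code directory in `LAMBDA_TASK_ROOT`; how the handler string is interpreted is up to the runtime. The Python sketch below assumes a `module.function` convention, borrowed from the managed Python runtime, and resolves it with `importlib`.

```python
import importlib
import os
import sys


def load_handler(handler_spec=None, task_root=None):
    """Resolve a "module.function" handler string into a callable.

    The "module.function" convention is an assumption; a custom runtime
    may interpret _HANDLER however it likes.
    """
    handler_spec = handler_spec or os.environ["_HANDLER"]
    task_root = task_root or os.environ.get("LAMBDA_TASK_ROOT", ".")
    if task_root not in sys.path:
        sys.path.insert(0, task_root)  # make the function code importable
    module_name, _, func_name = handler_spec.rpartition(".")
    if not module_name:
        raise ValueError(f"handler must look like 'module.function': {handler_spec!r}")
    module = importlib.import_module(module_name)
    func = getattr(module, func_name)
    if not callable(func):
        raise TypeError(f"{handler_spec!r} is not callable")
    return func
```

Resolving the handler once, during initialization, means a typo in the handler setting fails fast as an init error rather than on every invocation.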

Comparing Custom Runtimes with Standard Runtimes

The choice between custom and standard AWS Lambda runtimes significantly impacts development flexibility, operational overhead, and performance characteristics. Understanding the differences and trade-offs is crucial for optimizing serverless application deployments. This comparison analyzes the key aspects of each runtime type to guide informed decision-making.

Feature Comparison of Custom and Standard Runtimes

A direct comparison reveals the strengths and weaknesses of each runtime model. The following table provides a structured overview of the key features differentiating custom and standard Lambda runtimes.

| Feature | Custom Runtime | Standard Runtime |
|---|---|---|
| Language Support | Supports any language or framework capable of running on Linux and adhering to the Lambda Runtime API. | Limited to the managed runtimes AWS provides (e.g., Node.js, Python, Java, .NET, Ruby). Go, formerly a managed runtime, now runs on the OS-only `provided.al2`/`provided.al2023` runtimes. |
| Flexibility | Highly flexible; allows developers to use any programming language or framework. | Less flexible; constrained by the supported language and framework versions. |
| Control | Offers granular control over the runtime environment and dependencies. | Limited control over the underlying runtime environment; dependencies are managed by AWS. |
| Maintenance | Requires developers to build, manage, and maintain the runtime environment, including patching and security updates. | Managed by AWS; AWS handles runtime environment updates, patching, and security. |
| Performance | Potential for optimized performance if the runtime is carefully designed and optimized; startup latency depends on the implementation. | Generally optimized by AWS. |
| Deployment | Involves packaging the runtime along with the function code. Requires a build process. | Easier deployment; AWS manages the runtime environment; simpler deployment packages. |
| Debugging | Debugging can be more complex, requiring specific tools and configurations for the custom runtime. | Debugging is generally easier, leveraging standard debugging tools and integrations provided by AWS. |
| Security | Developers are responsible for the security of the custom runtime and its dependencies. Requires careful attention to security best practices. | AWS manages security updates and patching for the standard runtime environment. |
| Cold Start Time | Varies widely: compiled binaries (e.g., Go or Rust) often start quickly, while runtimes that load an interpreter or many dependencies during initialization can add noticeable latency. | Generally low, because AWS optimizes the managed runtimes; package size and initialization code still matter. |
| Dependencies | Developers manage all dependencies within the runtime. This can add complexity to deployment. | AWS manages most dependencies, reducing the need for developers to manage them directly. |

Trade-offs in Choosing Between Custom and Standard Runtimes

Selecting the appropriate runtime involves balancing various trade-offs. Each option presents advantages and disadvantages, and the optimal choice depends on the specific application requirements and priorities.

- Language and Framework Support: Standard runtimes offer immediate support for common languages, reducing development effort. Custom runtimes enable the use of any language, but require more initial setup and maintenance. For example, if a project requires a language like Rust, a custom runtime is essential.

- Development Time: Standard runtimes typically accelerate development, providing pre-built environments and tools. Custom runtimes increase initial development time due to runtime setup and configuration.

- Operational Overhead: Standard runtimes reduce operational overhead by delegating runtime management to AWS. Custom runtimes increase operational responsibilities, including patching and security updates.

- Performance Considerations: Standard runtimes often provide optimized performance. Custom runtimes offer potential for optimization but require careful design and tuning.

- Security Implications: Standard runtimes benefit from AWS-managed security updates. Custom runtimes require developers to proactively manage security.

- Cost Optimization: While standard runtimes can sometimes offer cost advantages through optimized resource utilization, the choice often depends on factors like code execution time and memory consumption. Custom runtimes can be cost-effective if they allow for highly optimized code.

Final Review

In conclusion, custom runtimes in AWS Lambda provide a powerful mechanism for extending the capabilities of serverless applications. By offering support for a wide array of languages and frameworks, they empower developers to build more flexible, efficient, and tailored solutions. From the fundamental concepts of runtime interfaces to practical considerations of error handling and security, understanding custom runtimes is essential for maximizing the potential of serverless computing.

The ability to choose the best tool for the job, irrespective of native support, marks a significant evolution in cloud-based development, enabling innovation and driving efficiency across diverse application landscapes.

User Queries

What is the primary benefit of using a custom runtime?

The primary benefit is the ability to use programming languages and frameworks not natively supported by AWS Lambda, enabling greater flexibility and allowing developers to leverage existing codebases or specialized libraries.

How does a custom runtime interact with the AWS Lambda service?

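The custom runtime interacts with the Lambda service through the Runtime API, a local HTTP interface whose address Lambda provides in the `AWS_LAMBDA_RUNTIME_API` environment variable. The runtime requests the next event from `/2018-06-01/runtime/invocation/next`, runs the function code, and then posts either the result to `/2018-06-01/runtime/invocation/{AwsRequestId}/response` or an error to `/2018-06-01/runtime/invocation/{AwsRequestId}/error`.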

What are the performance implications of using a custom runtime?

Performance depends largely on how the custom runtime is implemented. Properly optimized custom runtimes can match or even exceed standard runtimes, particularly when they use compiled languages or optimized libraries and frameworks. However, poorly optimized runtimes can add startup and per-invocation overhead.

Is there a cost associated with using custom runtimes?

There is no additional cost specifically for using custom runtimes. However, the execution time of the function, and the memory allocated, will affect the overall cost. Custom runtimes may indirectly impact cost based on the performance characteristics of the chosen language and the efficiency of the implementation.
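The cost relationship described above is simple arithmetic: compute charges scale with GB-seconds (allocated memory in GB multiplied by billed duration in seconds), plus a per-request charge, and billed duration is rounded up to the nearest millisecond. This Python sketch takes the prices as parameters rather than hard-coding them, since they vary by Region and architecture; the values you pass in are assumptions to be checked against current AWS Lambda pricing.

```python
def gb_seconds(memory_mb, avg_duration_ms, invocations):
    """Compute consumption in GB-seconds: memory (GB) x duration (s) x invocations."""
    return (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations


def estimate_cost(memory_mb, avg_duration_ms, invocations, price_per_gb_s, price_per_request):
    """Estimate monthly cost from caller-supplied prices (free tier ignored)."""
    compute = gb_seconds(memory_mb, avg_duration_ms, invocations) * price_per_gb_s
    requests = invocations * price_per_request
    return compute + requests
```

For example, 1,000,000 invocations at 512 MB and an average of 200 ms consume 0.5 GB × 0.2 s × 1,000,000 = 100,000 GB-seconds, whichever runtime produced them; a faster runtime lowers cost only by reducing the duration term.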

How do I debug a custom runtime function?

Debugging custom runtime functions combines the standard debugging tools for the chosen language with the logging and monitoring capabilities of the Lambda environment: Lambda sends function output to CloudWatch Logs, publishes metrics to CloudWatch, and can be integrated with tracing tools such as AWS X-Ray.