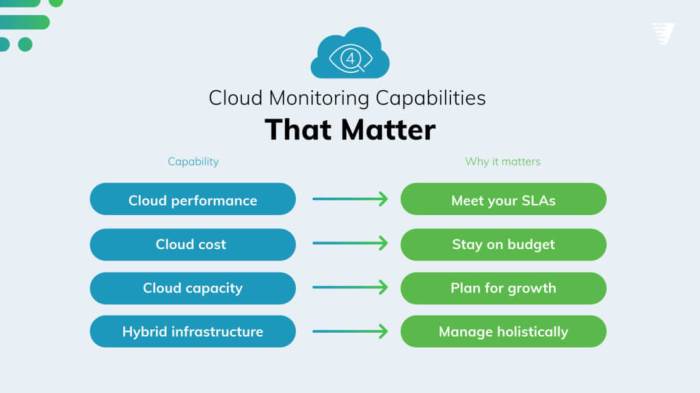

Understanding the key performance indicators (KPIs) in a cloud environment is crucial for effective management and optimization. This comprehensive guide delves into the essential metrics for monitoring various aspects of cloud operations, from resource utilization to security and cost optimization. Monitoring these metrics empowers cloud administrators to proactively identify and address potential issues, ultimately ensuring smooth operation and maximizing return on investment.

The intricate web of metrics encompasses resource utilization, network performance, application performance, security, cost optimization, availability, reliability, scalability, database performance, and compliance. Each of these facets contributes to a holistic view of cloud health, enabling informed decisions and proactive management.

Resource Utilization

Effective cloud resource utilization is crucial for optimizing performance and minimizing costs. Understanding and monitoring CPU, memory, and storage usage allows for proactive management and prevents performance bottlenecks or unexpected expenses. This section delves into the key metrics, their interpretation, and tools for monitoring them across various cloud platforms.

CPU Utilization

CPU utilization, expressed as a percentage, represents the proportion of time the CPU is actively processing tasks. High CPU utilization indicates that your applications are demanding significant processing power. Conversely, consistently low utilization suggests the instance is over-provisioned, so you are paying for capacity you do not use. Monitoring CPU utilization helps identify performance bottlenecks and keeps applications responsive. For example, if a web server's CPU utilization consistently exceeds 80%, performance is likely to degrade, and the server needs optimization or scaling.
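To make "proportion of time the CPU is busy" concrete, here is a minimal sketch assuming a Linux host, where cumulative CPU counters appear on the first line of `/proc/stat`. Utilization is computed from the difference between two samples; treating `idle + iowait` as idle time is a common convention, not the only one.

```python
def read_cpu_times(path="/proc/stat"):
    """Return aggregate CPU counters (user..steal) from the first line of /proc/stat."""
    with open(path) as f:
        fields = f.readline().split()
    # fields[0] is "cpu"; keep user, nice, system, idle, iowait, irq, softirq, steal.
    # guest/guest_nice are skipped because the kernel already counts them in user/nice.
    return [int(v) for v in fields[1:9]]


def cpu_utilization(prev, curr):
    """Percentage of non-idle CPU time between two counter samples."""
    deltas = [c - p for p, c in zip(prev, curr)]
    total = sum(deltas)
    if total == 0:
        return 0.0  # no time elapsed between samples
    idle = deltas[3] + deltas[4]  # idle + iowait
    return 100.0 * (total - idle) / total
```

In practice you would call `read_cpu_times()`, wait a few seconds, call it again, and pass both samples to `cpu_utilization`. Managed monitoring agents perform the same delta calculation for you.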

Memory Utilization

Memory utilization, also expressed as a percentage, reflects the share of total RAM currently in use. When applications need more memory than is available, the system starts swapping to disk, which slows them down, or terminates processes outright (for example, through the Linux out-of-memory killer). Monitoring memory utilization is essential for preventing these failures. For example, if a database consistently uses 95% of available memory, it may be short of RAM. Check for swap activity or out-of-memory events before adding more, because many databases deliberately fill memory with caches.
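As a sketch, assuming a Linux host, memory utilization can be derived from `/proc/meminfo`. Using `MemAvailable` rather than `MemFree` counts reclaimable page cache as available, which avoids reporting a healthy, cache-heavy server as almost out of memory.

```python
def memory_utilization(meminfo_text):
    """Percent of RAM in use: (MemTotal - MemAvailable) / MemTotal."""
    values = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])  # values are reported in kB
    total = values["MemTotal"]
    available = values["MemAvailable"]
    return 100.0 * (total - available) / total
```

Usage: `with open("/proc/meminfo") as f: print(memory_utilization(f.read()))`.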

Storage Utilization

Storage utilization is the percentage of storage capacity currently in use. Monitoring it prevents storage exhaustion and ensures there is room for new data. A full volume causes failed writes and can lead to data loss, and a nearly full volume can slow applications and data retrieval. For example, if a file server's storage utilization reaches 90%, address it proactively by adding capacity or by optimizing how data is stored.
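A minimal check using Python's standard `shutil.disk_usage`, with the 90% figure from the example above as an assumed alert threshold:

```python
import shutil


def storage_utilization(path="/"):
    """Percentage of the file system containing `path` that is in use."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return 100.0 * usage.used / usage.total


def needs_attention(path="/", threshold=90.0):
    """True when utilization has reached the (assumed) alert threshold."""
    return storage_utilization(path) >= threshold
```

On file systems that reserve blocks for the superuser (such as ext4), this figure can differ slightly from the `Use%` column reported by `df`, which divides by used plus free space instead of the raw total.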

Interpreting Metrics in a Cloud Environment

In a cloud environment, interpret these metrics as trends over time rather than as single snapshots. Fluctuations in utilization are normal, but consistently high utilization may indicate a need to scale up. Regularly reviewing these metrics shows how an application behaves and helps predict potential issues. For example, monitoring CPU utilization over a 24-hour period reveals usage patterns, peak times, and average levels, which lets you adjust resources to meet demand without incurring unnecessary costs.
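One way to act on trends rather than snapshots is to alert only when the average over a sliding window exceeds a threshold. This sketch assumes 80% as the threshold (the figure used in the CPU example) and 12 samples as the window, which would be one hour at a hypothetical 5-minute sampling interval.

```python
from collections import deque


class SustainedThresholdDetector:
    """Flag a metric only when its average over the last `window` samples exceeds `threshold`.

    Short spikes are absorbed by the window; sustained load is not.
    """

    def __init__(self, threshold=80.0, window=12):
        self.threshold = threshold
        self.samples = deque(maxlen=window)

    def add(self, value):
        """Record a sample and return True if the sustained-load condition holds."""
        self.samples.append(value)
        full = len(self.samples) == self.samples.maxlen
        average = sum(self.samples) / len(self.samples)
        return full and average > self.threshold
```

With a window of 3, the series `50, 95, 50, 85, 90, 88` triggers only on the last sample: the single 95% spike is ignored, while three consecutive high readings are not.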

Resource Utilization Metrics and Thresholds

| Metric | Description | Optimal Threshold (%) |
|---|---|---|
| CPU Utilization | Percentage of CPU resources in use | 70-80 |
| Memory Utilization | Percentage of RAM in use | 70-80 |
| Storage Utilization | Percentage of storage capacity in use | 80-90 |

These thresholds are guidelines; actual optimal values vary with the specific application and workload.

Importance of Monitoring for Cost Optimization

Monitoring resource utilization directly supports cost optimization in a cloud environment. Identifying underutilized resources lets you resize or consolidate them to cut unnecessary spending. Conversely, scaling proactively ahead of predictable peaks tends to cost less than reactive scaling, which can mean emergency over-provisioning or lost business while capacity catches up with demand. For instance, if a database uses only 20% of its allocated CPU, moving it to a smaller allocation can reduce monthly expenses significantly.
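The 20% example can be turned into a rough right-sizing estimate. This sketch assumes the workload is CPU-bound and scales linearly, targets 70% utilization on the new size, and uses a hypothetical price of $0.05 per vCPU-hour over an approximate 730-hour month. Real instances come in fixed shapes, memory must also fit, and peak utilization matters as much as the average.

```python
import math


def rightsize_vcpus(allocated_vcpus, avg_utilization_pct, target_pct=70.0):
    """Smallest vCPU count that would run the observed load at about target_pct utilization."""
    busy_vcpus = allocated_vcpus * avg_utilization_pct / 100.0
    return max(1, math.ceil(busy_vcpus / (target_pct / 100.0)))


def monthly_savings(allocated_vcpus, new_vcpus, price_per_vcpu_hour, hours=730):
    """Estimated monthly saving from the change (negative if the resource grows)."""
    return (allocated_vcpus - new_vcpus) * price_per_vcpu_hour * hours


new_size = rightsize_vcpus(8, 20)           # 8 vCPUs at 20% -> 3 vCPUs at ~53%
saving = monthly_savings(8, new_size, 0.05)  # hypothetical price -> 182.5 per month
```

Rounding up to the next available instance shape, rather than to an exact vCPU count, keeps the estimate conservative.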

Tools for Monitoring Resource Utilization

- Cloud Provider Tools: Each major cloud provider offers built-in monitoring: Amazon CloudWatch on AWS, Azure Monitor on Azure, and Google Cloud Monitoring on GCP. These tools provide detailed resource utilization metrics and integrate with the provider's other services, which simplifies setup and management.

- Third-Party Monitoring Tools: Commercial and open-source tools such as Datadog, New Relic, and Prometheus can monitor several cloud environments at once. They offer extensive customization and integration options to match specific monitoring needs.
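As an example of pulling metrics from a provider tool, this sketch fetches average EC2 CPU utilization from Amazon CloudWatch. It assumes the caller passes a boto3 CloudWatch client (`boto3.client("cloudwatch")`) with valid credentials; the instance ID in the usage note is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def average_cpu(cloudwatch, instance_id, hours=24, period=300):
    """Return (timestamp, average CPU %) pairs for one EC2 instance, oldest first.

    `cloudwatch` is expected to be a boto3 CloudWatch client.
    """
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=period,           # seconds per datapoint
        Statistics=["Average"],
    )
    # CloudWatch does not guarantee datapoint order, so sort by timestamp.
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [(p["Timestamp"], p["Average"]) for p in points]
```

Usage: `average_cpu(boto3.client("cloudwatch"), "i-0123456789abcdef0")`. Because the client is passed in, the function can be tested with a stub instead of a live AWS account.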

Network Performance

Effective cloud application performance hinges significantly on the efficiency of the underlying network infrastructure. Understanding and monitoring key network metrics are crucial for identifying bottlenecks and ensuring optimal application responsiveness. Poor network performance can lead to increased latency, decreased throughput, and ultimately, a frustrating user experience.

Key Network Metrics for Cloud Application Performance

Network performance metrics provide a quantitative measure of the network’s effectiveness. Crucial metrics include packet loss, latency, throughput, jitter, and network bandwidth. These metrics allow for the identification of potential network bottlenecks and enable proactive measures to maintain high-performance application operation.

Identifying Network Bottlenecks in a Cloud Setup

Identifying network bottlenecks in a cloud environment requires a multifaceted approach. Monitoring tools, combined with analysis of the collected data, reveal areas of congestion or high latency. Analyzing traffic patterns, finding periods of high load, and correlating them with application performance metrics can locate the source of a bottleneck, and tracing individual network requests can isolate specific points of contention.

Comparison of Network Performance Metrics

Different network performance metrics provide varying insights into the network's behavior. Packet loss, for instance, is the percentage of packets that did not reach their destination, signaling potential problems with the network's reliability. Latency measures the time taken for data to travel between two points, reflecting the responsiveness of the network. Throughput quantifies the rate at which data is transferred, showing the network's capacity. Jitter represents variation in latency, which affects the stability of real-time applications.
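To show how these metrics relate, this sketch summarizes a series of probe round-trip times (for example, from ping), where `None` marks a probe that received no reply. Jitter is computed here as the mean absolute difference between consecutive round trips, a simple definition chosen for clarity; RTP (RFC 3550) uses an exponentially smoothed estimator instead.

```python
def summarize_probes(rtts_ms):
    """Packet loss, average latency, and jitter from probe round-trip times (None = lost)."""
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg_latency = sum(received) / len(received) if received else None
    # Jitter: mean absolute change between consecutive received round trips.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = sum(diffs) / len(diffs) if diffs else 0.0
    return {"loss_pct": loss_pct, "avg_latency_ms": avg_latency, "jitter_ms": jitter}
```

For the probes `[20.0, 22.0, None, 18.0, 20.0]`, this reports 20% packet loss, a 20 ms average latency, and about 2.7 ms of jitter.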

Impact of Latency and Throughput on Cloud Application Responsiveness

Latency directly affects the responsiveness of cloud applications: high latency lengthens the response time of every user request, while low latency keeps interactions quick and smooth. Throughput, the rate at which data is transferred, determines how long large transfers take, which is crucial for applications that handle large volumes of data. Small, interactive requests are usually dominated by latency and bulk transfers by throughput, so optimal application performance requires attention to both.
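A first-order model makes the trade-off visible: transfer time is roughly latency plus payload size divided by throughput. The sketch ignores connection setup, TCP slow start, and protocol overhead. It also shows a related limit: a single TCP connection can carry at most one receive window of data per round trip.

```python
def transfer_time_s(size_bytes, latency_ms, throughput_mbps):
    """Rough estimate: one-way latency plus serialization time at the given throughput."""
    return latency_ms / 1000.0 + (size_bytes * 8) / (throughput_mbps * 1_000_000)


def max_tcp_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound for one TCP connection: one window of data per round trip."""
    return (window_bytes * 8) / (rtt_ms / 1000.0) / 1_000_000


small = transfer_time_s(2_000, 50, 100)          # ~0.050 s: almost all latency
large = transfer_time_s(1_000_000_000, 50, 100)  # ~80.05 s: almost all throughput
cap = max_tcp_throughput_mbps(65_535, 50)        # ~10.5 Mbps with a 64 KiB window
```

With 50 ms of latency and 100 Mbps of throughput, a 2 KB API response is latency-bound, while a 1 GB backup is throughput-bound, and a 64 KiB window caps one connection near 10.5 Mbps regardless of link bandwidth.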

Network Performance Metrics and their Impact on Cloud Services

| Metric | Definition | Impact on Cloud Services |
|---|---|---|
| Packet Loss | Percentage of packets that fail to reach their destination. | Reduced reliability, retransmissions that increase latency and reduce throughput, gaps in real-time streams. |
| Latency | Time taken for data to travel between two points. | Slow application response times, poor user experience, decreased productivity. |
| Throughput | Rate of data transmission. | Low throughput slows data transfers, limits application scalability, and reduces efficiency. |
| Jitter | Variations in latency. | Intermittent delays in application response, reduced stability in real-time applications. |
| Bandwidth | Maximum data transmission capacity. | Limits application scalability, congestion during high usage periods. |

Application Performance

Application performance is a critical aspect of cloud environment monitoring. Closely tracking application performance metrics ensures a good user experience, exposes performance bottlenecks, and lets organizations resolve problems before they affect service availability. Understanding and analyzing these metrics enables timely identification of issues and efficient resource allocation, keeping applications healthy and responsive.

Application Performance Metrics

A comprehensive approach to monitoring application performance involves tracking various metrics. These metrics provide valuable insights into the responsiveness and stability of applications. Key metrics include request latency, error rates, and response times.

Request Latency

Request latency, the time it takes for a request to be processed, is a crucial indicator of application performance. High latency hurts the user experience, leading to frustration and decreased productivity. Monitoring request latency helps locate bottlenecks within the application architecture so they can be optimized. Average request latency is commonly reported, but averages hide slow outliers, so it is usually supplemented by percentiles: the 95th percentile (p95) latency is the value that 95% of requests stay under, which reveals what the slowest 5% of requests experience.
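The following sketch shows why percentiles matter, using the nearest-rank definition and a hypothetical sample in which 10% of requests are slow. The average looks healthy while p95 exposes the slow tail. Python's `statistics.quantiles` interpolates between samples and can return slightly different values.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


latencies_ms = [20] * 90 + [400] * 10          # hypothetical: 10% of requests are slow
mean = sum(latencies_ms) / len(latencies_ms)   # 58.0
p50 = percentile(latencies_ms, 50)             # 20
p95 = percentile(latencies_ms, 95)             # 400
```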

Error Rates

Error rates, or the percentage of requests that result in errors, provide insight into the stability and reliability of an application. High error rates can indicate issues with the application’s logic, data integrity, or resource availability. Monitoring error rates helps identify and address underlying problems that may be affecting application reliability. Different error types should be categorized and monitored separately to pinpoint specific sources of errors.
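For an HTTP service, categorizing errors can be as simple as separating client errors (4xx) from server errors (5xx), since they usually have different causes. This sketch assumes you have a list of response status codes for the period being measured.

```python
from collections import Counter


def error_rates(status_codes):
    """Percentage of requests per error class, keeping 4xx and 5xx separate."""
    total = len(status_codes)
    classes = Counter(f"{code // 100}xx" for code in status_codes)
    return {
        "client_error_pct": 100.0 * classes["4xx"] / total,  # e.g. bad input, missing pages
        "server_error_pct": 100.0 * classes["5xx"] / total,  # e.g. crashes, timeouts upstream
    }
```

For 95 successful responses, two 404s, and three 500s, this reports a 2% client error rate and a 3% server error rate. Further breakdowns (by endpoint or by exact status code) follow the same pattern.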

Response Times

Response time is the end-to-end time from when a request is sent until the full response is returned, so it covers both network latency and server-side processing. Monitoring response time is vital for ensuring that users experience quick and efficient application performance. Long response times lead to poor user experiences, potentially causing lost revenue or user churn.

Troubleshooting Application Performance Issues

Troubleshooting application performance issues involves a systematic approach based on monitoring metrics. Identifying the root cause of slowdowns or errors is crucial for effective remediation. This involves analyzing patterns in latency, error rates, and response times. For example, a sudden spike in error rates might indicate a database issue, while a consistently high response time might point to a bottleneck in the application’s processing logic.

Common Application Performance Metrics and Typical Ranges

The following table provides a summary of common application performance metrics and their typical ranges. Note that these ranges are indicative and may vary based on the specific application and its requirements.

| Metric | Typical Range | Interpretation |
|---|---|---|
| Average Request Latency (ms) | 10-100 | Good performance. |
| 95th Percentile Request Latency (ms) | 50-250 | Performance acceptable for most users. |
| Error Rate (%) | 0-1 | Low error rate indicates stable application. |
| Average Response Time (ms) | 100-500 | Acceptable response time. |

Significance of Monitoring Application Performance

Monitoring application performance is essential for delivering a positive user experience. Fast response times and low error rates contribute to user satisfaction and retention. Conversely, slow response times and frequent errors can lead to user frustration and ultimately, impact business goals. Organizations that prioritize application performance monitoring are better equipped to identify and address issues before they significantly affect users.

Furthermore, timely identification of issues can reduce costs associated with remediation and improve the overall efficiency of application operation.

Security Metrics

Maintaining a robust security posture in a cloud environment is paramount. It requires strong preventive measures and continuous monitoring of security-related activity, so that threats are identified and mitigated early, sensitive data stays protected, and cloud services remain reliable. Tracking key security metrics provides insight into the overall security health of the cloud environment.

These metrics quantify the effectiveness of security controls and pinpoint areas needing improvement. Early detection of security anomalies allows a swift response that limits the impact of any breach.

Importance of Security Metrics

Security metrics are crucial for evaluating the effectiveness of security controls in a cloud environment. They provide a quantitative measure of the security posture, allowing organizations to identify vulnerabilities and proactively address potential risks. Continuous monitoring of these metrics enables organizations to adapt their security strategies in response to evolving threats and maintain a strong security posture.

Security Metrics to Monitor

Monitoring various security metrics is essential for maintaining a secure cloud environment. This includes tracking unauthorized access attempts, failed logins, and security alerts, which can indicate potential vulnerabilities or malicious activities. Regular monitoring of these metrics enables swift response to threats and helps to prevent potential security breaches.

- Unauthorized Access Attempts: Monitoring attempts to access resources without proper authorization provides a clear picture of potential threats. These attempts can originate from various sources, including automated tools and human actors. Tracking these attempts allows for proactive identification of malicious activity.

- Failed Logins: The rate of failed login attempts is a vital indicator of attempted breaches. A sudden increase in failed logins can suggest brute-force attacks or other unauthorized access attempts. Analyzing patterns and frequency can help identify and mitigate such threats.

- Security Alerts: Security alerts generated by intrusion detection systems or other security tools provide immediate notifications of suspicious activity. These alerts may indicate potential threats or policy violations. Prompt investigation and response to these alerts are critical for preventing security breaches.
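A common way to detect brute-force attempts from failed logins is a per-source sliding window. In this sketch the event format `(timestamp_seconds, source_ip, succeeded)`, the limit of 5 failures, and the 60-second window are all assumptions; attackers that spread attempts across many IP addresses also call for a similar count per target account.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, max_failures=5, window_s=60):
    """Return source IPs with more than max_failures failed logins within window_s seconds.

    `events` is an iterable of (timestamp_seconds, source_ip, succeeded) in time order.
    """
    recent = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, succeeded in events:
        if succeeded:
            continue
        failures = recent[ip]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while failures and ts - failures[0] > window_s:
            failures.popleft()
        if len(failures) > max_failures:
            flagged.add(ip)
    return flagged
```

Seven failures from one address within seven seconds are flagged, while a user who mistypes a password once, or a client that fails six times spread across ten minutes, is not.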

Interpreting Security Alerts

Security alerts require careful interpretation to effectively prevent breaches. Analyzing the source, nature, and frequency of alerts is crucial to determine the severity and potential impact of the identified security incidents. This interpretation process should consider the context of the cloud environment, potential vulnerabilities, and the overall security posture.

- Alert Source Identification: Identifying the source of an alert is the first step in determining the potential threat. This could be from a user, a device, or a network. Understanding the origin helps in prioritizing responses and taking appropriate action.

- Alert Nature Assessment: Determining the nature of the alert is crucial for assessing its potential impact. Is it a simple policy violation, a brute-force attack, or a more sophisticated intrusion attempt? This assessment helps in categorizing the threat and implementing the correct response.

- Frequency Analysis: Analyzing the frequency of alerts can reveal patterns or trends. A high frequency of similar alerts might indicate a larger-scale attack or a widespread vulnerability. Understanding the patterns helps in focusing on the most critical threats.
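Frequency analysis can start by grouping alerts by rule and source and surfacing the groups that repeat most. The alert fields `rule` and `source` and the cutoff of 10 occurrences are hypothetical; real security tools name these fields differently.

```python
from collections import Counter


def alert_hotspots(alerts, min_count=10):
    """Group alerts by (rule, source); return groups seen at least min_count times, most frequent first."""
    counts = Counter((a["rule"], a["source"]) for a in alerts)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]
```

A rule that fires twelve times for the same source rises to the top, while a handful of one-off alerts stays below the cutoff for routine review.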

Security Monitoring Strategy

Designing a robust security monitoring strategy involves a multi-layered approach. This strategy should encompass proactive monitoring of key metrics, timely response to security alerts, and ongoing improvement of security controls. The monitoring process must be tailored to the specific needs and resources of the cloud application.

| Security Metric | Action |
|---|---|
| Unauthorized Access Attempts | Investigate the source and nature of the attempt. Enhance security controls if necessary. |
| Failed Logins | Identify patterns and implement stronger authentication methods. Monitor user accounts for suspicious activity. |
| Security Alerts | Investigate the alert immediately. Determine the root cause and implement corrective measures. Document the incident and lessons learned. |

Cost Optimization Metrics

Effective cloud management hinges on a keen understanding of cost implications. Monitoring and analyzing cost metrics reveals potential cost overruns early so that strategies to optimize spending can be put in place. Achieving cost optimization means correlating resource consumption with its associated costs: not just identifying high-cost resources, but understanding why they cost what they do and changing resource allocation and usage patterns accordingly. This proactive approach leads to significant long-term cost savings.

Resource Usage and Cost Tracking

Accurate tracking of resource utilization is paramount for cost optimization. Understanding how different services are being used, and how their usage translates to costs, provides crucial insights for efficient resource management. This knowledge empowers informed decisions regarding resource allocation and scaling strategies. The key is to correlate usage with costs, allowing for the identification of potential cost-saving opportunities.

- Instance types and sizes: Monitoring the specific instance types and sizes used for different applications is essential. Over-provisioning or inappropriate instance selection directly impacts costs. For example, using a high-cost, high-performance instance for a task requiring only basic processing leads to unnecessary spending.

- Storage consumption: Tracking storage usage across various services (e.g., object storage, databases) is vital. Unused or underutilized storage can lead to unnecessary costs. A company may realize they are storing data they don’t need, or that their storage is not optimized for the data they are storing. This awareness allows for the identification of opportunities to reduce storage costs.

- Database queries and operations: Monitoring database activity, including query frequency and complexity, is crucial. Inefficient queries or inadequate database configurations can significantly impact costs. A company could identify a query that is overly complex and find a simpler alternative, thereby reducing costs.
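Correlating usage with cost usually starts by aggregating billing line items per service. This sketch assumes line items with hypothetical `service` and `cost` fields; real exports, such as the AWS Cost and Usage Report or Azure cost exports, use their own column names.

```python
from collections import defaultdict


def cost_by_service(line_items):
    """Sum cost per service; return (service, cost, share_pct) rows, highest cost first."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item["service"]] += item["cost"]
    grand_total = sum(totals.values())
    return sorted(
        ((svc, cost, 100.0 * cost / grand_total) for svc, cost in totals.items()),
        key=lambda row: row[1],
        reverse=True,
    )
```

The highest-share rows are where right-sizing, storage cleanup, or query tuning is most likely to pay off.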

Analyzing Cost Trends

Understanding cost trends over time provides a crucial perspective for identifying patterns and making data-driven decisions. Analyzing cost data over time enables the identification of anomalies and opportunities for optimization. A sustained increase in spending on a specific resource may indicate an underlying issue that needs to be addressed.

- Cost forecasting: Using historical data to predict future costs allows for proactive budgeting and resource allocation. Accurate forecasting can help companies anticipate cost spikes and prepare for them.

- Cost anomaly detection: Algorithms can identify significant deviations from expected cost patterns. This allows for rapid response to unexpected cost increases and the implementation of corrective actions.

- Cost optimization strategies: Leveraging data insights to develop and implement cost-saving strategies, such as right-sizing resources, optimizing storage, or migrating to more cost-effective services, is essential. For example, identifying a service that is frequently used and can be migrated to a more cost-effective service can significantly reduce costs.
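Both forecasting and anomaly detection can be prototyped with simple statistics before adopting a dedicated tool. This sketch uses a z-score against the trailing 14 days for anomalies and a least-squares straight line for forecasting; the window size and the 3-standard-deviation threshold are assumptions, and production systems often add seasonality (for example, weekday versus weekend spend).

```python
import statistics


def cost_anomalies(daily_costs, window=14, z_threshold=3.0):
    """Indices of days deviating more than z_threshold std devs from the preceding `window` days."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            if daily_costs[i] != mean:  # any change from a perfectly flat history
                anomalies.append(i)
        elif abs(daily_costs[i] - mean) / stdev > z_threshold:
            anomalies.append(i)
    return anomalies


def linear_forecast(daily_costs, days_ahead):
    """Least-squares line through the history (needs >= 2 days), extrapolated days_ahead past the last day."""
    n = len(daily_costs)
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(daily_costs) / n
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_costs)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    intercept = y_mean - slope * x_mean
    return intercept + slope * (n - 1 + days_ahead)
```

A daily spend that hovers around 100 and then jumps to 180 is flagged on the day of the jump, and a history of `10, 12, 14, 16` forecasts 18 for the next day.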

Cost Optimization Metrics and Implications

A structured approach to tracking cost optimization metrics is essential for effective cloud spending management. A well-defined set of metrics allows for the comparison of costs over time and between different resources.

| Metric | Description | Implications for Cloud Spending |
|---|---|---|
| Compute Costs | Cost associated with running virtual machines or containers | Over-provisioned instances and inappropriate instance types lead to high costs. |
| Storage Costs | Cost associated with storing data in cloud storage | Unused storage or inefficient storage strategies increase costs. |
| Database Costs | Cost associated with running and using databases | Inefficient queries or poorly configured databases lead to higher costs. |
| Network Costs | Cost associated with data transfer within the cloud or to/from the cloud | High network traffic, particularly outbound (egress) and cross-region transfer, can significantly increase costs. |
| Management Costs | Cost associated with managing and monitoring the cloud environment | Effective monitoring and management tools help identify and mitigate cost overruns. |

Identifying Cost Savings Opportunities

Analyzing cost data allows for the identification of areas for cost savings. This involves comparing costs against expected or historical data, looking for anomalies, and understanding the reasons behind cost variations. Regular review and analysis of cloud spending patterns help to identify and address cost inefficiencies.

- Right-sizing resources: Optimizing resource allocation to meet actual needs, avoiding over-provisioning or under-provisioning. This could involve migrating to smaller instances or reducing storage capacity where possible.

- Optimizing storage: Employing efficient storage strategies to reduce storage costs. This could involve compressing data, using appropriate storage tiers, and regularly deleting unused data.

- Leveraging cost-effective services: Migrating to more cost-effective services or adjusting service configurations to minimize costs. Using a different, more economical service for the same task can be a significant cost saver.
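Moving rarely read data to a colder storage tier only saves money if the storage discount outweighs retrieval fees. This sketch uses placeholder prices, not any provider's actual rates, and ignores the minimum storage durations and per-request charges that many cold tiers also apply.

```python
def tiering_savings(gb, hot_price, cold_price, gb_read_per_month, retrieval_price):
    """Monthly savings from moving `gb` of data from a hot tier to a cold tier.

    Prices are placeholders: per GB-month for storage, per GB for retrieval.
    """
    storage_saving = gb * (hot_price - cold_price)
    retrieval_cost = gb_read_per_month * retrieval_price
    return storage_saving - retrieval_cost


saving = tiering_savings(10_000, hot_price=0.02, cold_price=0.01,
                         gb_read_per_month=500, retrieval_price=0.01)  # about 95 per month
```

If the same data were read back in full every month, retrieval fees would erase the saving, which is why access patterns should be measured before changing tiers.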

Availability and Reliability

Ensuring the continuous operation of cloud services is paramount. Availability and reliability metrics directly impact user experience, business operations, and overall success. Understanding these metrics allows proactive identification of potential issues and facilitates the implementation of necessary enhancements. This section delves into the significance of availability and reliability, methods for measuring uptime and downtime, and the interpretation of these metrics for service improvements.

Importance of Availability and Reliability Metrics

Cloud services must be consistently available and reliable to meet user expectations and business needs. High availability minimizes disruptions and maintains service continuity, ensuring users can access applications and data without interruption. Reliability, on the other hand, focuses on the system’s ability to perform its intended function without errors or failures over an extended period. Robust availability and reliability contribute to a positive user experience, support business continuity, and reduce the risk of financial loss due to service outages.

Measuring Uptime and Downtime of Cloud Services

Accurate measurement of uptime and downtime is crucial for evaluating the performance of cloud services. Uptime is the total time a service is operational, while downtime represents the total time it is unavailable. These metrics are often expressed as percentages or in terms of hours or minutes. Tools and monitoring systems provide detailed logs and reports, offering granular insight into the duration and frequency of outages.

Monitoring systems should identify the root cause of downtime events to facilitate corrective actions and prevent future occurrences.
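Given a list of outage durations for a reporting period, availability, total downtime, and mean time to recovery (MTTR) follow directly. This sketch assumes a 30-day period and treats each outage's duration as its recovery time.

```python
def availability_report(outage_minutes, period_minutes=30 * 24 * 60):
    """Availability %, total downtime, outage count, and MTTR for one period."""
    downtime = sum(outage_minutes)
    availability = 100.0 * (1 - downtime / period_minutes)
    mttr = downtime / len(outage_minutes) if outage_minutes else 0.0
    return {
        "availability_pct": availability,
        "downtime_min": downtime,
        "outages": len(outage_minutes),
        "mttr_min": mttr,
    }
```

Two outages of 10 and 20 minutes in a 30-day month give about 99.93% availability and an MTTR of 15 minutes.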

Metrics Related to Service Level Agreements (SLAs)

Service Level Agreements (SLAs) define the expected performance levels for cloud services. Key metrics within SLAs often include service uptime targets, response time objectives, and maximum allowable downtime. These metrics are vital for ensuring that the cloud provider meets the agreed-upon service standards. Examples include 99.9% uptime for a specific service, or a maximum of 15 minutes of downtime per month.

Compliance with these SLAs is crucial for maintaining customer trust and satisfaction.
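Converting between uptime percentages and downtime allowances clarifies what an SLA actually permits. Assuming a 30-day month, 99.9% uptime allows about 43.2 minutes of downtime, so "a maximum of 15 minutes of downtime per month" is a considerably stricter target, equivalent to roughly 99.965% uptime.

```python
def downtime_budget_minutes(sla_pct, period_minutes=30 * 24 * 60):
    """Maximum downtime permitted in a period by an uptime target."""
    return period_minutes * (1 - sla_pct / 100.0)


def uptime_for_budget(max_downtime_minutes, period_minutes=30 * 24 * 60):
    """Uptime percentage implied by a fixed downtime allowance."""
    return 100.0 * (1 - max_downtime_minutes / period_minutes)


budget_999 = downtime_budget_minutes(99.9)    # ~43.2 minutes
budget_9995 = downtime_budget_minutes(99.95)  # ~21.6 minutes
implied = uptime_for_budget(15)               # ~99.965 %
```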

Interpreting Metrics for Service Improvements

Analyzing availability and reliability metrics helps identify areas for improvement. High downtime percentages can indicate potential issues with infrastructure, applications, or processes. Trends in downtime durations and frequencies provide insights into recurring problems and patterns. This analysis facilitates the implementation of preventative measures, such as enhanced infrastructure redundancy, improved application monitoring, or refined operational procedures. For instance, if a specific service consistently experiences downtime during peak hours, it might suggest the need for scaling resources to handle increased demand.

Table of Availability and Reliability Metrics

| Metric | Description | Target Value |
|---|---|---|
| Service Uptime | Percentage of time the service is operational | 99.95% or higher |
| Average Downtime Duration | Average duration of service outages | Under 15 minutes per month |
| Downtime Frequency | Number of service outages per period | Minimal (e.g., less than 5 outages per month) |
| SLA Compliance | Percentage of time the service meets agreed-upon SLA targets | 100% |
| Mean Time to Recovery (MTTR) | Average time taken to restore service after an outage | Minimized (e.g., under 1 hour) |

Scalability Metrics

Assessing scalability in a cloud environment is crucial for ensuring the system can handle increasing workloads without performance degradation. Proper monitoring and analysis of scalability metrics allow proactive adjustments to maintain optimal performance under fluctuating demands. This enables businesses to avoid bottlenecks and ensure smooth operation during peak hours or periods of rapid growth.

Measuring and Monitoring Scalability

Effective scalability monitoring involves tracking key metrics that reflect the system’s ability to adapt to changing demands. This involves analyzing performance under varying workloads to identify potential bottlenecks and optimize resource allocation. A crucial aspect is the system’s responsiveness to dynamic workloads, ensuring minimal latency and high throughput.

Analyzing Cloud Infrastructure Performance Under Varying Workloads

To evaluate the cloud infrastructure’s responsiveness under different workloads, performance benchmarks are crucial. These benchmarks assess the infrastructure’s ability to handle increased traffic, data processing, and application requests. Key indicators include response times, throughput, and resource utilization under various load conditions. Analyzing these metrics reveals the system’s capacity and potential bottlenecks, allowing for targeted improvements. For example, if the response time consistently increases as the load increases, it indicates a potential scalability issue.
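Load-test results can be reduced to a single capacity figure: the highest tested load at which response time still meets its target. This sketch assumes results arrive as hypothetical `(requests_per_second, p95_response_ms)` pairs and stops at the first load level that misses the target, a conservative choice when latency rises with load.

```python
def max_sustainable_load(results, latency_target_ms):
    """Highest tested load whose p95 response time meets the target, or None if none does."""
    best = None
    for rps, p95_ms in sorted(results):
        if p95_ms > latency_target_ms:
            break  # first breach: treat higher loads as unsafe
        best = rps
    return best
```

For the series `(100, 40), (200, 42), (400, 45), (800, 70), (1600, 250)` with a 100 ms target, the sustainable load is 800 requests per second. The sharp rise between 800 and 1600 is the kind of knee that signals a scalability limit.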

Scalability Metrics and Thresholds

A well-defined set of scalability metrics and their corresponding thresholds is essential for proactive management. This allows for early identification of potential performance issues.

| Metric | Description | Thresholds (Example Values) |
|---|---|---|
| Request Rate | The number of requests processed per unit of time. | High: >1000 requests/second; Moderate: 500-1000 requests/second; Low: <500 requests/second. |
| Response Time | The time taken to process a request. | High: >100 milliseconds; Moderate: 50-100 milliseconds; Low: <50 milliseconds. |
| CPU Utilization | The percentage of CPU resources used. | High: >80%; Moderate: 50-80%; Low: <50%. |
| Memory Utilization | The percentage of memory resources used. | High: >80%; Moderate: 50-80%; Low: <50%. |
| Network Throughput | The rate at which data is transferred over the network. | High: >10 Gbps; Moderate: 5-10 Gbps; Low: <5 Gbps. |

Adapting the Cloud Environment to Changing Demands

Adapting the cloud environment to changing demands necessitates proactive adjustments. This includes scaling resources up or down based on real-time monitoring of resource utilization. Auto-scaling mechanisms are critical for maintaining performance and cost-effectiveness. For example, during peak hours, the system can automatically provision additional resources to handle increased traffic. Conversely, during off-peak hours, resources can be scaled down to reduce costs.
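Many auto-scalers use target tracking. The Kubernetes Horizontal Pod Autoscaler, for example, computes `desired = ceil(current_replicas * current_metric / target_metric)` and skips changes within a tolerance (10% by default) to avoid flapping. The sketch below follows that formula; the replica limits are hypothetical defaults.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Target-tracking scale decision in the style of the Kubernetes HPA, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

With a 60% CPU target, four replicas running at 90% scale out to six, and four replicas at 15% scale in to one, which captures both the peak-hour and off-peak cases described above.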

Measuring Responsiveness to Dynamic Workloads

Monitoring the responsiveness of the cloud to dynamic workloads involves tracking metrics like request latency, error rates, and resource utilization under varying load conditions. Tools that capture and analyze these metrics in real-time provide valuable insights. These tools can alert administrators to potential issues and allow for prompt adjustments. For example, a sudden increase in request latency might indicate a need to scale up resources or optimize application performance.

Database Performance Metrics

Database performance is critical for application responsiveness in a cloud environment. Poor database performance slows application responses, degrading the user experience and potentially hindering business operations.

Continuously tracking key database performance indicators (KPIs) lets IT teams identify and resolve bottlenecks proactively, keeping both the database and the applications that depend on it performing well.

Query Performance Metrics

Monitoring query performance is vital for identifying slow or inefficient queries. This often involves tracking the execution time of queries and analyzing their complexity. Tools and techniques for query analysis allow for the identification of potential bottlenecks, such as inefficient indexing or poorly constructed queries. Understanding query performance allows for optimization, reducing database load and improving application speed.
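Inefficient indexing often shows up in the query plan before it shows up in latency. This self-contained sketch uses SQLite from Python's standard library and a hypothetical `orders` table: the same query moves from a full table scan to an index search once a suitable index exists. Other databases expose the same idea through their own `EXPLAIN` commands, with different output formats.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"
before = query_plan(conn, sql, (42,))  # full table scan, e.g. ['SCAN orders']
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, sql, (42,))   # index lookup via idx_orders_customer
```

The exact wording of the plan text varies between SQLite versions (older releases print `SCAN TABLE orders`), so automated checks should match on keywords such as `SCAN` and `USING INDEX`.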

Response Time Metrics

Response time metrics, such as average query response time and maximum response time, are crucial for gauging the speed of database operations. These metrics indicate how quickly the database responds to user requests. Consistent high response times can significantly impact user experience. Monitoring these metrics helps pinpoint issues, such as excessive locking or resource contention. Efficient response times are paramount for maintaining a seamless user experience.
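Average and maximum response times can be collected by timing queries at the client. This sketch times each labeled query with `time.perf_counter` and lists those above a slow-query threshold; the 100 ms default mirrors the average target in the table that follows and is an assumption. Client-side timing includes driver overhead, so server-side statistics are the better source in production.

```python
import sqlite3
import time


def time_queries(conn, queries, slow_ms=100.0):
    """Run (label, sql, params) queries; return average/max latency in ms and the slow labels."""
    durations = {}
    for label, sql, params in queries:
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()  # fetch all rows so the full query cost is timed
        durations[label] = (time.perf_counter() - start) * 1000.0
    values = list(durations.values())
    return {
        "avg_ms": sum(values) / len(values),
        "max_ms": max(values),
        "slow": [label for label, ms in durations.items() if ms > slow_ms],
    }


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, kind TEXT)")
report = time_queries(conn, [("count_events", "SELECT COUNT(*) FROM events", ())])
```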

Resource Usage Metrics

Resource usage metrics, including CPU utilization, memory consumption, and disk I/O, are essential for understanding the database’s resource demands. Monitoring these metrics allows for the detection of potential resource exhaustion. Excessive resource usage can lead to performance degradation and database instability. Efficient resource management is key to preventing such issues. Maintaining resource utilization within acceptable levels is crucial for consistent application performance.

Database Performance Metrics Table

| Metric | Ideal Value | Explanation |
|---|---|---|
| Average Query Response Time | < 100 ms (or lower, depending on the application's requirements) | Indicates the average time taken to execute a query. Lower values are better. |
| Maximum Query Response Time | < 500 ms (or lower, depending on the application's requirements) | Indicates the longest time taken to execute a query. Lower values are better. Outliers should be investigated. |
| CPU Utilization | Below 80% | High CPU utilization suggests potential bottlenecks. |
| Memory Consumption | Below 80% of available memory | High memory consumption may indicate memory leaks or insufficient memory allocation. |
| Disk I/O | Within acceptable limits for the database’s storage | High disk I/O can be a bottleneck, particularly for read-intensive operations. |
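The table's numeric targets translate directly into a threshold check. In this sketch the metric names and the snapshot format are hypothetical, and the limits mirror the table. Disk I/O is left out because the table gives no fixed number for it.

```python
# Upper bounds mirroring the table above; tune them to the application's requirements.
THRESHOLDS = {
    "avg_query_response_ms": 100.0,
    "max_query_response_ms": 500.0,
    "cpu_utilization_pct": 80.0,
    "memory_utilization_pct": 80.0,
}


def check_database_metrics(snapshot, thresholds=THRESHOLDS):
    """Return (metric, value, limit) for every metric at or above its limit.

    snapshot: dict of metric name -> current value. Metrics missing from the
    snapshot are ignored rather than treated as breaches.
    """
    breaches = []
    for metric, limit in thresholds.items():
        value = snapshot.get(metric)
        if value is not None and value >= limit:
            breaches.append((metric, value, limit))
    return breaches
```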

Monitoring Tools

Various tools are available for monitoring database performance in a cloud environment. These tools provide insights into query execution time, resource usage, and other critical metrics. Examples include:

- Cloud-native database monitoring tools: Cloud providers often offer integrated tools for monitoring databases running in their environments. These tools provide dashboards, alerts, and detailed performance reports.

- Third-party monitoring tools: Many third-party tools are available that provide comprehensive database monitoring capabilities, allowing for granular control over metrics collection and analysis. These tools may offer broader compatibility with various database systems.

- Database Management Systems (DBMS) tools: Some DBMSs have built-in monitoring and analysis capabilities. These allow for detailed understanding of query performance and resource utilization.
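SQLite's `EXPLAIN QUERY PLAN` is an example of DBMS-native analysis. It reports whether a query will scan a whole table or search an index, which is the kind of inefficient-indexing problem described under query performance. The sketch uses Python's `sqlite3` module. PostgreSQL and MySQL provide their own `EXPLAIN` commands with different output. The `detail` text is meant for humans and its wording varies between SQLite versions, so the string check in the hypothetical `uses_full_table_scan` helper is only a heuristic.

```python
import sqlite3


def plan_details(conn, sql, params=()):
    """Return the human-readable 'detail' text of each EXPLAIN QUERY PLAN step."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # 'detail' is the last column


def uses_full_table_scan(conn, sql, params=()):
    """Heuristic: True if any plan step scans a table without using an index."""
    return any(
        detail.startswith("SCAN") and "INDEX" not in detail
        for detail in plan_details(conn, sql, params)
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
    query = "SELECT id FROM orders WHERE customer_id = ?"
    print(plan_details(conn, query, (42,)), uses_full_table_scan(conn, query, (42,)))
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    print(plan_details(conn, query, (42,)), uses_full_table_scan(conn, query, (42,)))
```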

Importance for Application Responsiveness

Database performance directly affects application responsiveness. Slow queries delay the responses users are waiting for, causing frustration. By identifying and addressing performance issues proactively, database monitoring keeps applications responsive, which in turn supports higher user satisfaction and better business outcomes.

Compliance Metrics

Maintaining regulatory compliance is paramount in a cloud environment. Failure to adhere to relevant standards can lead to significant penalties, reputational damage, and legal ramifications. Effective monitoring of compliance metrics is crucial for mitigating these risks and ensuring the continued operation of cloud-based services.

Importance of Compliance Metrics

Compliance metrics provide a structured approach to verifying adherence to industry regulations and internal policies. They quantify and track the organization’s progress toward meeting these standards, enabling proactive identification and remediation of potential compliance issues. Robust compliance monitoring minimizes operational disruptions, safeguards sensitive data, and protects the organization’s reputation.

Metrics Related to Regulatory Compliance

Several key metrics are essential for monitoring compliance with regulations like GDPR and HIPAA. These metrics encompass data security practices, access controls, and data handling procedures. For example, GDPR requires organizations to demonstrate appropriate data protection measures. Metrics related to this could include the number of data breaches, the time taken to respond to data breaches, and the number of data subject access requests processed.

Table of Compliance Metrics and Standards

The table below outlines some key compliance metrics and their corresponding standards.

| Metric | Standard | Description |
|---|---|---|
| Data Breach Rate | NIST Cybersecurity Framework | Number of data breaches per unit of time, measured against established benchmarks. |
| Data Encryption Rate | NIST 800-53 | Percentage of sensitive data encrypted at rest and in transit. |
| Access Control Violations | ISO 27001 | Number of unauthorized access attempts or successful breaches of access controls. |
| Data Subject Access Requests (DSAR) Processing Time | GDPR | Average time taken to process requests from data subjects to access, correct, or delete their data. |
| Security Incident Response Time | Various industry standards | Time taken to detect, contain, and resolve security incidents. |
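Several metrics in the table reduce to simple arithmetic over records most organizations already keep. The sketch below computes DSAR processing time and the data encryption rate. The record layouts are hypothetical. The 30-day deadline in `overdue_dsars` is a simplification of GDPR's one-month response period.

```python
from datetime import datetime, timedelta


def average_dsar_days(requests):
    """Average processing time, in days, of completed data subject access requests.

    requests: list of (received, completed) datetime pairs; completed is None
    while a request is still open. Returns None if nothing has completed yet.
    """
    durations = [done - received for received, done in requests if done is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations) / timedelta(days=1)


def overdue_dsars(requests, now, deadline=timedelta(days=30)):
    """Count open requests older than the deadline (30 days approximates one month)."""
    return sum(1 for received, done in requests if done is None and now - received > deadline)


def encryption_rate(assets):
    """Percentage of sensitive assets encrypted both at rest and in transit.

    assets: list of dicts with boolean 'sensitive', 'encrypted_at_rest', and
    'encrypted_in_transit' keys. Returns None if no asset is sensitive.
    """
    sensitive = [a for a in assets if a["sensitive"]]
    if not sensitive:
        return None
    covered = sum(1 for a in sensitive if a["encrypted_at_rest"] and a["encrypted_in_transit"])
    return 100.0 * covered / len(sensitive)


if __name__ == "__main__":
    reqs = [(datetime(2024, 3, 1), datetime(2024, 3, 11)), (datetime(2024, 2, 1), None)]
    print(average_dsar_days(reqs), overdue_dsars(reqs, now=datetime(2024, 3, 28)))
```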

Tracking and Ensuring Compliance in the Cloud

Effective tracking of compliance metrics involves employing monitoring tools and establishing clear procedures. These tools should be able to track events and data related to access, storage, and handling of sensitive data. Robust logging mechanisms and regular audits are crucial for ensuring ongoing compliance. A key element is establishing a security information and event management (SIEM) system for detecting anomalies and potential security breaches.

Auditing and reporting processes should be standardized to ensure consistency and transparency.
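SIEM systems detect anomalies by evaluating correlation rules over incoming events. The sketch below expresses one such rule in plain Python. It flags any principal with more than five denied access attempts inside a ten-minute sliding window. The event tuple format and both thresholds are assumptions. Production SIEMs express rules in their own query languages.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_repeated_denials(events, max_denials=5, window=timedelta(minutes=10)):
    """Return principals with more than max_denials denied attempts in any window.

    events: iterable of (timestamp, principal, outcome) tuples sorted by
    timestamp, where outcome is "allowed" or "denied".
    """
    recent = defaultdict(deque)  # principal -> timestamps of denials still in the window
    flagged = set()
    for ts, principal, outcome in events:
        if outcome != "denied":
            continue
        denials = recent[principal]
        denials.append(ts)
        # Drop denials that have slid out of the window ending at ts.
        while ts - denials[0] > window:
            denials.popleft()
        if len(denials) > max_denials:
            flagged.add(principal)
    return sorted(flagged)


if __name__ == "__main__":
    t0 = datetime(2024, 5, 1, 12, 0)
    sample = [(t0 + timedelta(minutes=i), "alice", "denied") for i in range(6)]
    print(detect_repeated_denials(sample))
```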

Using Compliance Metrics to Ensure Security and Privacy

Compliance metrics are vital for demonstrating a commitment to security and privacy. By consistently monitoring and reporting on these metrics, organizations can demonstrate their commitment to protecting sensitive data. For example, low data breach rates and quick response times to incidents demonstrate robust security measures. Metrics related to data encryption and access control provide evidence of adherence to privacy regulations.

This, in turn, builds trust with customers and stakeholders, leading to increased confidence in the organization’s security practices.

Logging and Monitoring Tools

Effective cloud management relies heavily on robust logging and monitoring tools. These tools provide crucial insights into the performance, health, and security of cloud resources, enabling proactive issue resolution and optimized resource utilization. Comprehensive monitoring goes beyond basic metrics. It exposes the underlying processes and surfaces potential issues before they affect service availability or operational efficiency.

Cloud environments are complex ecosystems with numerous interacting components. Comprehensive logging and monitoring tools provide a centralized view of this network, making it easier to identify and resolve issues and keep operations efficient and reliable. These tools range from simple metric collection to sophisticated analysis and alerting, so organizations can tailor their monitoring strategy to their specific needs.

Various Tools for Monitoring Cloud Metrics

A wide array of tools are available for monitoring cloud environments. These tools range from vendor-specific solutions to open-source alternatives, each offering a unique set of features and capabilities. Understanding the strengths and weaknesses of each tool is crucial for selecting the most appropriate solution for a given scenario. Popular choices include cloud providers’ native monitoring tools, specialized third-party applications, and open-source solutions.

How These Tools Collect and Present Data

Logging and monitoring tools collect data from various sources within the cloud environment. These sources include virtual machines, databases, applications, and network components. Data collection mechanisms vary depending on the tool, employing methods like agent-based monitoring, API integrations, or log file analysis. Collected data is typically presented in dashboards, graphs, and tables, enabling users to visualize trends, identify anomalies, and understand resource utilization patterns.

These tools often provide customizable dashboards, allowing users to focus on specific metrics relevant to their needs.
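Log file analysis, one of the collection methods mentioned above, comes down to parsing raw lines into structured records and aggregating them into the time series that dashboards plot. This sketch assumes a hypothetical access-log format of `timestamp method path status latency`. It produces per-minute request counts, error rates (5xx responses), and average latency.

```python
import re
from collections import Counter

# Hypothetical log format, e.g. "2024-05-01T12:00:03Z GET /api/orders 200 120ms"
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<method>[A-Z]+)\s+(?P<path>\S+)\s+(?P<status>\d{3})\s+(?P<ms>\d+)ms$"
)


def aggregate_per_minute(lines):
    """Turn raw access-log lines into per-minute request, error-rate, and latency figures."""
    requests, errors, latency_total = Counter(), Counter(), Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skip malformed lines instead of failing the whole batch
        minute = match["ts"][:16]  # "YYYY-MM-DDTHH:MM"
        requests[minute] += 1
        latency_total[minute] += int(match["ms"])
        if match["status"].startswith("5"):
            errors[minute] += 1
    return {
        minute: {
            "requests": requests[minute],
            "error_rate": errors[minute] / requests[minute],
            "avg_latency_ms": latency_total[minute] / requests[minute],
        }
        for minute in sorted(requests)
    }
```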

Table of Commonly Used Logging and Monitoring Tools

The following table presents a selection of commonly used logging and monitoring tools for cloud environments, highlighting their key features and characteristics.

| Tool | Description | Data Collection Method | Data Presentation |
|---|---|---|---|
| AWS CloudWatch | Amazon Web Services’ comprehensive monitoring service. | Agent-based monitoring, API integrations, log file analysis | Dashboards, graphs, and tables; customizable views |
| Azure Monitor | Microsoft Azure’s monitoring service. | Agent-based monitoring, API integrations, log file analysis | Dashboards, graphs, and tables; customizable views |
| Datadog | A third-party monitoring and analytics platform. | Agent-based monitoring, API integrations, log file analysis | Dashboards, graphs, and alerts; powerful correlation features |
| Prometheus | An open-source systems monitoring and alerting toolkit. | Pull-based scraping of HTTP metrics endpoints exposed by applications and exporters | Expression browser and basic graphs; rule-based alerting via Alertmanager; commonly paired with Grafana for dashboards |
| Grafana | An open-source platform for visualizing metrics from various sources. | Integrates with other monitoring tools as data sources | Dashboards, graphs, alerts; customizable and versatile |
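Prometheus collects data by periodically scraping an HTTP endpoint, conventionally `/metrics`, that returns metrics in its text exposition format. Grafana can then query Prometheus as a data source. The sketch below hand-renders a gauge in that format using only the standard library, to show what the scraper sees. The metric name, port, and handler are hypothetical. Real services would normally use the official `prometheus_client` library instead.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def escape_label_value(value):
    """Escape backslashes, double quotes, and newlines as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name, help_text, samples):
    """Render one gauge metric in the Prometheus text exposition format.

    samples: list of (labels_dict, value) pairs. help_text is assumed to
    contain no backslashes or newlines.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(
                f'{key}="{escape_label_value(str(val))}"' for key, val in sorted(labels.items())
            )
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves a single hypothetical gauge at /metrics for Prometheus to scrape."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_gauge(
            "app_queue_depth", "Jobs waiting in the queue.", [({"queue": "default"}, 7)]
        ).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 8000), MetricsHandler).serve_forever()
```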

Examples of Using These Tools to Identify and Resolve Issues

Consider a case where application performance degrades. Using a monitoring tool like Datadog, developers can quickly identify bottlenecks in the application’s code or database queries. Real-time metrics and visualizations help pinpoint the problem and guide developers toward the specific areas that need optimization. CloudWatch Logs can add context about specific API calls, requests, and errors, helping correlate the application performance issue with underlying infrastructure events. Together, these enable faster root cause analysis and more effective problem resolution.
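The correlation step can be illustrated without any particular vendor tool. For each latency spike, collect the infrastructure events (deployments, scaling actions, restarts) logged shortly before it. In this sketch, the five-minute look-back window, the input formats, and the `correlate_spikes` name are assumptions. Tools such as Datadog provide built-in correlation features that work differently.

```python
from datetime import datetime, timedelta


def correlate_spikes(spikes, events, lookback=timedelta(minutes=5)):
    """Map each latency spike to infrastructure events logged shortly before it.

    spikes: datetimes at which application latency crossed an alert threshold.
    events: (datetime, description) pairs, e.g. parsed from infrastructure logs.
    Returns {spike: [descriptions of events in the interval (spike - lookback, spike]]}.
    """
    ordered = sorted(events)  # chronological order keeps each result list readable
    return {
        spike: [desc for ts, desc in ordered if spike - lookback < ts <= spike]
        for spike in spikes
    }


if __name__ == "__main__":
    spike = datetime(2024, 5, 1, 12, 10)
    infra = [(datetime(2024, 5, 1, 12, 7), "deploy v2 to web tier")]
    print(correlate_spikes([spike], infra))
```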

Advantages and Disadvantages of Different Monitoring Tools

Each monitoring tool possesses a unique set of advantages and disadvantages. AWS CloudWatch, for instance, offers a comprehensive solution integrated with other AWS services, but might have limited customization options compared to third-party tools like Datadog. Prometheus, while open-source and highly customizable, may require more technical expertise to set up and maintain. The selection of a monitoring tool should align with the specific needs and technical expertise of the organization.

Wrap-Up

In conclusion, effectively monitoring cloud metrics is paramount for achieving optimal performance, cost efficiency, and security. By meticulously tracking resource utilization, network performance, application behavior, security threats, and cost trends, organizations can proactively address issues, optimize resource allocation, and ensure high availability and reliability. This holistic approach fosters a robust and responsive cloud environment, maximizing the value derived from cloud services.

FAQ Overview

What tools are commonly used for monitoring cloud metrics?

Various tools cater to specific needs, such as CloudWatch (AWS), Azure Monitor (Microsoft Azure), and Google Cloud Monitoring (Google Cloud Platform). The choice often depends on the cloud provider and the specific metrics being monitored.

How frequently should cloud metrics be monitored?

The frequency of monitoring depends on the specific metric and the sensitivity of the application. Real-time monitoring is essential for critical systems, while scheduled reports might suffice for less critical applications.

What are the typical ranges for acceptable latency in application performance?

Acceptable latency varies depending on the application. Generally, lower latency translates to a better user experience. Benchmarking against industry standards and internal benchmarks is crucial for defining acceptable ranges.
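Averages hide slow outliers, so latency targets are often expressed as percentiles such as p95 or p99. The sketch below computes them with Python's `statistics.quantiles` (Python 3.8+). The `meets_latency_target` helper is an assumption, and any target passed to it should come from your own benchmarks.

```python
import statistics


def latency_percentiles(samples_ms):
    """Return p50, p95, and p99 of a list of latency samples (milliseconds).

    Uses the 'inclusive' interpolation method; needs at least two samples.
    """
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def meets_latency_target(samples_ms, p95_target_ms):
    """True if the 95th-percentile latency is at or below the target."""
    return latency_percentiles(samples_ms)["p95"] <= p95_target_ms
```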

How can I identify areas for cost savings based on monitoring data?

Analyzing cost trends and resource usage patterns allows for identification of potential cost savings. Identifying idle resources, optimizing resource allocation, and implementing cost-effective solutions are key steps.
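Idle resources are often easy to spot in exported utilization data. This sketch flags a resource when three conditions hold: its average CPU stays under 5%, its peak never exceeds 20%, and it has at least 24 samples (for example, a day of hourly averages). The thresholds, the input format, and the resource IDs are hypothetical and should be tuned before acting on the results. Low CPU alone does not prove a resource is unused, so network and disk activity are worth checking too.

```python
from statistics import mean


def find_idle_resources(cpu_history, avg_threshold_pct=5.0, peak_threshold_pct=20.0, min_samples=24):
    """Return IDs of resources that look idle based on CPU utilization history.

    cpu_history: dict mapping a resource ID to a list of CPU utilization samples (%).
    Resources with fewer than min_samples samples are skipped as inconclusive.
    """
    idle = []
    for resource_id, samples in sorted(cpu_history.items()):
        if len(samples) < min_samples:
            continue
        # Require both a low average and a low peak so occasional batch jobs are not flagged.
        if mean(samples) < avg_threshold_pct and max(samples) < peak_threshold_pct:
            idle.append(resource_id)
    return idle
```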