The exponential growth of data has driven the need for efficient and scalable data processing solutions. Serverless query engines for data lakes represent a paradigm shift, offering on-demand, pay-per-query analytics capabilities. This introduction explores the core principles behind these engines, contrasting them with traditional data warehousing approaches, and highlighting their advantages in terms of cost, scalability, and ease of management within the context of a modern data landscape.

The subsequent sections will delve into the architectural components, popular technologies, and practical considerations involved in leveraging serverless query engines. We will examine the characteristics of data lakes, the crucial elements of query optimization, security protocols, cost management strategies, and performance tuning techniques, providing a comprehensive understanding of this critical technology for data-driven decision-making.

Introduction to Serverless Query Engines for Data Lakes

Serverless query engines represent a paradigm shift in how data lakes are accessed and analyzed. They offer a flexible and cost-effective approach to querying large datasets stored in object storage, eliminating the need for upfront infrastructure provisioning and management. This introduction will delve into the core concepts, advantages, and architectural aspects of serverless query engines, contrasting them with traditional data warehousing methods.

Core Concept of Serverless Query Engines

Serverless query engines are computational services that execute queries on data lakes without requiring the user to manage the underlying infrastructure. Users submit queries, and the engine automatically allocates the necessary resources, processes the query, and returns the results. This “pay-as-you-go” model eliminates the need for provisioning, scaling, and maintaining servers, resulting in operational efficiency and cost savings. The core principle revolves around abstracting the infrastructure layer, allowing users to focus solely on data analysis.

Advantages in a Data Lake Environment

Serverless query engines offer several advantages specifically tailored for data lake environments, including:

- Cost-Effectiveness: The pay-per-query model eliminates the cost of idle resources. Users are charged only for the compute resources consumed during query execution.

- Scalability: Serverless engines automatically scale up or down based on query demands, ensuring optimal performance without manual intervention. This dynamic scaling is crucial for handling unpredictable workloads.

- Reduced Operational Overhead: The absence of infrastructure management simplifies operations. Users do not need to worry about server provisioning, patching, or maintenance, reducing the burden on IT teams.

- Accessibility: Serverless query engines provide easy access to data lake data, making it readily available for analysis by a broader range of users, including data scientists, analysts, and business users.

- Integration: Serverless engines seamlessly integrate with various data lake storage solutions, such as Amazon S3, Azure Data Lake Storage, and Google Cloud Storage.

Comparison of Traditional Data Warehousing versus Serverless Query Engines

Traditional data warehousing, often involving on-premise or cloud-based data warehouses, typically involves significant upfront investments in hardware and software. Serverless query engines offer a more agile and cost-effective alternative, particularly for data lakes.

| Feature | Traditional Data Warehousing | Serverless Query Engines |

|---|---|---|

| Infrastructure | Requires dedicated hardware and software, often provisioned in advance. | Uses a serverless architecture, eliminating infrastructure management. |

| Cost Model | Typically involves fixed costs (hardware, software licenses) and variable costs (e.g., storage). | Pay-per-query, eliminating costs for idle resources. |

| Scalability | Scaling often requires manual intervention, leading to potential performance bottlenecks. | Automatically scales based on query demands. |

| Operational Overhead | High operational overhead, including server management, patching, and maintenance. | Minimal operational overhead; focus on data analysis. |

| Data Loading | Often requires ETL (Extract, Transform, Load) processes to move data into the warehouse. | Directly queries data in the data lake, reducing data movement. |

The difference in cost is significant. For example, a small data warehouse might cost tens of thousands of dollars annually, while a serverless query engine could cost a few hundred dollars per month for the same workload, depending on query volume and data size. The scalability difference is equally important; traditional warehouses can struggle to handle rapid data growth, while serverless engines can automatically scale to meet demand.

Architecture of a Serverless Query Engine Interacting with a Data Lake

The architecture typically involves several key components, working together to process queries against data stored in the data lake.

1. User Submission

A user submits a query, typically using SQL or a similar query language, through a client application (e.g., a BI tool or a custom application).

2. Query Processing

The serverless query engine receives the query and parses it to understand the requested operation.

3. Resource Allocation

The engine automatically allocates the necessary compute resources (e.g., virtual machines, containers) based on the query complexity and data size.

4. Data Retrieval

The engine accesses the data stored in the data lake, typically in object storage (e.g., S3, Azure Data Lake Storage, Google Cloud Storage).

5. Query Execution

The engine executes the query, processing the data and performing the required calculations.

6. Result Delivery

The engine returns the query results to the user, typically in a format like CSV, JSON, or a data visualization.

This architecture provides a streamlined approach to data analysis, focusing on ease of use and efficiency.

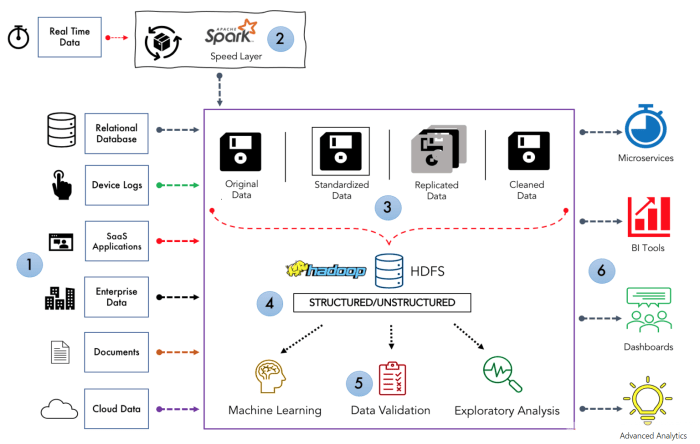

Understanding Data Lakes

Data lakes have emerged as a crucial component of modern data architectures, providing a centralized repository for storing vast amounts of data in various formats. Their flexibility and scalability have made them a preferred choice for organizations seeking to leverage the power of big data analytics. Understanding the core characteristics of data lakes, the challenges they present, and the diverse data types they accommodate is essential for effectively utilizing serverless query engines.

Characteristics of Data Lakes

Data lakes are characterized by their ability to store data in its native format, eliminating the need for rigid schemas and transformations during ingestion. This approach allows for the storage of structured, semi-structured, and unstructured data, providing a holistic view of an organization’s information assets. Data lakes typically leverage cost-effective storage solutions like cloud object storage (e.g., Amazon S3, Azure Data Lake Storage, Google Cloud Storage) for storing data at scale.

They also support a variety of data formats, allowing for flexibility in how data is ingested and analyzed.Common use cases for data lakes include:

- Data Warehousing: Data lakes can serve as a staging area or a primary data repository for traditional data warehousing, enabling organizations to consolidate data from diverse sources before loading it into a data warehouse. This process allows for more flexible data exploration and transformation.

- Business Intelligence and Reporting: Data lakes provide a rich source of data for generating business intelligence reports and dashboards, empowering stakeholders to make data-driven decisions. This includes creating visualizations and reports using tools that can connect to the data lake.

- Data Science and Machine Learning: Data scientists utilize data lakes to train machine learning models and perform advanced analytics. The ability to store raw data in its original format allows for more flexible and iterative model development.

- Exploratory Data Analysis: Data lakes facilitate exploratory data analysis, enabling analysts to discover patterns, trends, and insights within large datasets. The flexibility of the data lake allows for rapid prototyping and experimentation.

- Archiving and Compliance: Data lakes can be used for long-term data archiving and compliance purposes, providing a cost-effective way to store historical data for regulatory requirements. Data can be retained for extended periods.

Common Challenges in Querying Data Lakes

Querying data stored in data lakes presents several challenges, primarily related to data diversity and schema evolution. The lack of a predefined schema can lead to data quality issues and requires careful data governance. The variety of data formats and the potential for schema changes over time necessitate robust data discovery and management capabilities.Key challenges include:

- Data Format Variations: Data lakes often contain data in various formats, such as CSV, JSON, Parquet, and Avro. Query engines must support these diverse formats to efficiently process the data.

- Schema Evolution: Data schemas can change over time, leading to compatibility issues if not handled correctly. Query engines need to accommodate schema changes without breaking existing queries.

- Data Quality: The absence of strict schema enforcement can result in data quality problems, such as missing values, inconsistencies, and incorrect data types. This demands careful data validation and cleansing.

- Data Discovery: Finding and understanding data within a data lake can be challenging due to the volume and variety of data. Effective data discovery tools are crucial for identifying and accessing relevant datasets.

- Performance Optimization: Querying large datasets in a data lake can be computationally intensive. Optimizing query performance through techniques like partitioning, indexing, and data compression is essential.

Types of Data in Data Lakes

Data lakes accommodate a wide range of data types, including structured, semi-structured, and unstructured data. This versatility allows organizations to store and analyze data from various sources, including databases, weblogs, social media, and sensor data.

- Structured Data: Structured data is organized in a predefined format, typically stored in relational databases or data warehouses. It is characterized by its well-defined schema, making it relatively easy to query and analyze. Examples include:

- Customer records stored in a relational database.

- Financial transactions stored in a transaction processing system.

- Sales data organized in tables with specific columns.

- Semi-structured Data: Semi-structured data does not conform to a rigid schema but contains tags or markers that separate data elements. Common formats include JSON, XML, and CSV. Examples include:

- Weblogs containing information about user activity.

- JSON documents representing product catalogs.

- CSV files containing sensor readings with column headers.

- Unstructured Data: Unstructured data lacks a predefined format and is often challenging to process directly. It encompasses a wide variety of data types, including text, images, audio, and video. Examples include:

- Text documents, such as emails and reports.

- Images and videos from surveillance systems.

- Audio recordings of customer service calls.

Core Components of Serverless Query Engines

Serverless query engines for data lakes are complex systems designed to efficiently process queries against large datasets stored in various formats. Their architecture is fundamentally different from traditional database systems, focusing on scalability, cost-effectiveness, and ease of management. Understanding the core components is crucial for appreciating how these engines achieve their performance and flexibility.

Query Optimizer

The query optimizer is a critical component responsible for determining the most efficient execution plan for a given query. It analyzes the query, the data schema, and statistics about the data to identify the optimal sequence of operations to retrieve the requested information. This process involves several steps.

- Query Parsing and Validation: The optimizer first parses the query, ensuring it adheres to the query language’s syntax and semantics. It validates the query against the data schema to check for errors and identify the tables, columns, and functions involved.

- Logical Optimization: This phase focuses on transforming the query logically to simplify it and improve its efficiency. Techniques like predicate pushdown (moving filtering operations closer to the data source) and redundant operation elimination are employed.

- Physical Optimization: The physical optimizer determines the actual execution plan, considering factors like data distribution, available indexes, and the cost of different operations. It chooses the best algorithms for joins, aggregations, and other operations.

- Cost-Based Optimization: Many optimizers use a cost-based approach, estimating the cost of different execution plans based on data statistics (e.g., number of rows, data distribution, column cardinality). The plan with the lowest estimated cost is selected.

The effectiveness of the query optimizer directly impacts query performance. A well-optimized query can significantly reduce the amount of data processed and the overall execution time. For example, a query optimizer might rewrite a complex join operation involving multiple tables into a series of simpler operations, potentially improving performance by orders of magnitude.

Execution Engine

The execution engine is responsible for carrying out the query plan generated by the optimizer. It manages the parallel execution of query tasks, distributing the workload across available compute resources and coordinating the flow of data between different stages of the execution. The execution engine typically involves several key components.

- Task Scheduling and Management: The engine breaks down the query plan into smaller tasks and schedules them for execution on available compute resources. It monitors the progress of each task and handles failures or errors.

- Data Shuffling and Partitioning: Data is often shuffled and partitioned across multiple compute nodes to enable parallel processing. This involves redistributing data based on keys (e.g., join keys or grouping keys) to ensure that related data is processed together.

- Resource Allocation and Management: The engine allocates and manages compute resources (e.g., CPU, memory, I/O) to the tasks based on their requirements. It dynamically scales resources up or down to match the workload demands.

- Result Aggregation and Delivery: After processing the data, the engine aggregates the results from different tasks and delivers the final output to the user.

The execution engine leverages parallel processing and distributed computing to achieve high performance. By distributing the workload across multiple nodes, it can process large datasets much faster than a single-node system. For instance, a query that takes hours to run on a single machine might complete in minutes or even seconds using a serverless query engine with a highly optimized execution engine.

Data Connectors

Data connectors are essential components that enable serverless query engines to access data stored in various formats and locations. They act as translators, converting data from its native format into a format that the execution engine can understand and process.

- Format-Specific Readers: Data connectors include format-specific readers for popular data formats like Parquet, ORC, CSV, and JSON. These readers are optimized for efficiently reading data from storage.

- Storage Integration: Connectors integrate with different storage systems, such as Amazon S3, Azure Data Lake Storage, and Google Cloud Storage. They handle authentication, authorization, and data access.

- Schema Discovery: Connectors often provide schema discovery capabilities, automatically inferring the schema of the data based on its format and structure.

- Data Type Conversion: Connectors convert data types from the storage format to the internal representation used by the query engine.

The choice of data connectors is crucial for supporting different data formats and storage systems. The following table compares the functionality of various data connectors:

| Data Connector | Supported Formats | Storage Systems | Key Features | Performance Considerations |

|---|---|---|---|---|

| Parquet | Parquet | S3, ADLS, GCS, HDFS | Columnar storage, efficient compression, schema evolution | Excellent for analytical queries, fast filtering and aggregation, optimized for columnar reads |

| ORC | ORC | S3, ADLS, GCS, HDFS | Columnar storage, optimized for Hive, supports complex data types | Similar performance to Parquet, often optimized for Hive workloads, good compression |

| CSV | CSV | S3, ADLS, GCS, HDFS | Simple text-based format, widely supported | Generally slower than columnar formats, requires parsing, less efficient for analytical queries |

| JSON | JSON | S3, ADLS, GCS, HDFS | Semi-structured data format, flexible schema | Can be slower than columnar formats, parsing overhead, less efficient for analytical queries |

Data Indexing and Partitioning

Data indexing and partitioning are crucial techniques for improving query performance in serverless query engines. They allow the engine to efficiently locate and retrieve the relevant data for a query, reducing the amount of data that needs to be scanned.

- Data Partitioning: Data partitioning involves dividing the data into smaller, manageable units (partitions) based on one or more partitioning keys. This allows the query engine to selectively scan only the partitions that contain the data relevant to a query. Common partitioning strategies include date-based partitioning, region-based partitioning, and range partitioning.

- Data Indexing: Data indexing creates auxiliary data structures (indexes) that map values in specific columns to the locations of the corresponding data rows. Indexes enable the query engine to quickly locate rows that satisfy a specific filter condition without scanning the entire dataset. Common index types include B-tree indexes, hash indexes, and inverted indexes.

Effective partitioning and indexing can dramatically improve query performance, especially for queries that involve filtering or aggregation. For example, if a table is partitioned by date and a query filters on a specific date, the query engine can scan only the partition corresponding to that date, significantly reducing the amount of data processed. Similarly, if an index is created on a frequently filtered column, the query engine can use the index to quickly locate the relevant rows.

Consider a large dataset of customer transactions. Without partitioning or indexing, a query to find all transactions for a specific customer might require scanning the entire dataset. However, if the data is partitioned by customer ID and an index is created on the customer ID column, the query engine can quickly locate the relevant partition and use the index to find the specific transactions, resulting in a substantial performance improvement.

Popular Serverless Query Engine Technologies

Serverless query engines have become essential tools for analyzing data stored in data lakes, offering scalability, cost-effectiveness, and ease of use. Several technologies have emerged to meet the diverse needs of data professionals. This section will explore some of the most popular serverless query engine technologies available, delving into their specific features, functionalities, and pricing models.

AWS Athena

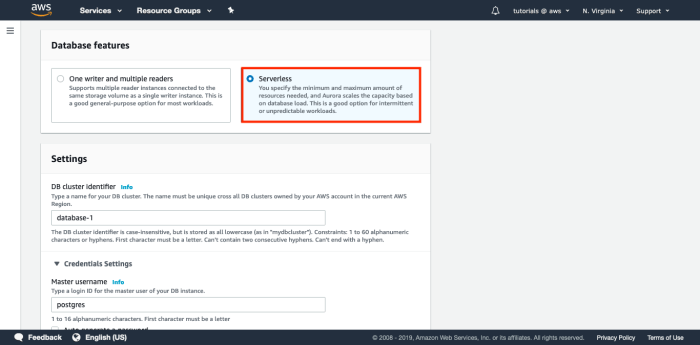

AWS Athena is a serverless query service provided by Amazon Web Services (AWS) that allows users to analyze data stored in Amazon S3 using standard SQL. It eliminates the need to manage infrastructure, and users only pay for the queries they run.

- Features and Functionalities: Athena supports a wide range of data formats, including CSV, JSON, Parquet, and ORC. It integrates seamlessly with other AWS services like S3, Glue (for data cataloging), and Lake Formation (for data governance). Key features include:

- SQL-based querying: Athena uses standard SQL, making it easy for users familiar with SQL to query data.

- Data format support: Athena supports various data formats, including CSV, JSON, Parquet, ORC, and Avro, offering flexibility in handling different data types.

- Integration with AWS ecosystem: Seamless integration with S3, Glue, and Lake Formation simplifies data storage, cataloging, and governance.

- Federated Query: Allows querying data from various sources, including relational databases and other AWS services.

- Cost-effective: Pay-per-query pricing model, charging based on the amount of data scanned per query.

- Use Cases: Athena is well-suited for ad-hoc querying, log analysis, business intelligence, and data exploration. For example, a marketing team could use Athena to analyze website clickstream data stored in S3 to identify trends and optimize marketing campaigns.

Google BigQuery

Google BigQuery is a fully managed, serverless data warehouse that enables scalable analysis of large datasets. It offers a powerful SQL engine and built-in features for data storage, querying, and analytics.

- Features and Functionalities: BigQuery provides a robust platform for data warehousing and analysis. Its key features include:

- Scalable SQL engine: BigQuery’s SQL engine is designed for high-performance querying of massive datasets.

- Columnar storage: BigQuery uses columnar storage, which is optimized for analytical queries by storing data in columns rather than rows.

- Data storage and management: Provides built-in data storage and management capabilities, including data loading, partitioning, and clustering.

- Integration with Google Cloud Platform (GCP): Integrates seamlessly with other GCP services, such as Cloud Storage, Cloud Functions, and Dataflow.

- Machine learning capabilities: Supports built-in machine learning capabilities, enabling users to train and deploy machine learning models directly within BigQuery.

- Use Cases: BigQuery is used for data warehousing, business intelligence, real-time analytics, and machine learning. A financial institution, for instance, might use BigQuery to analyze transaction data, identify fraudulent activities, and generate reports.

Azure Synapse Serverless SQL Pool

Azure Synapse Analytics offers a serverless SQL pool that allows users to query data stored in Azure Data Lake Storage (ADLS) Gen2 or Azure Blob Storage using standard SQL. It provides a cost-effective and scalable solution for data analysis.

- Features and Functionalities: Azure Synapse Serverless SQL pool provides a flexible and scalable query engine. Its key features include:

- SQL-based querying: Uses standard SQL for querying data stored in Azure Data Lake Storage and Azure Blob Storage.

- Support for various data formats: Supports a wide range of data formats, including CSV, JSON, Parquet, and ORC.

- Serverless architecture: Eliminates the need for infrastructure management, allowing users to focus on data analysis.

- Integration with Azure ecosystem: Seamless integration with Azure Data Lake Storage, Azure Blob Storage, and other Azure services.

- Cost-effective: Pay-per-query pricing model, charging based on the amount of data scanned per query.

- Use Cases: The Azure Synapse Serverless SQL pool is suitable for data exploration, ad-hoc querying, and data analysis tasks. A retail company might use it to analyze sales data stored in ADLS Gen2 to understand sales trends and customer behavior.

Pricing Model Comparison

Understanding the pricing models is critical when choosing a serverless query engine. Pricing typically depends on the amount of data scanned during queries and the compute time consumed. Here’s a comparison of the pricing models for AWS Athena, Google BigQuery, and Azure Synapse Serverless SQL Pool:

- AWS Athena: Athena charges based on the amount of data scanned per query. The pricing is typically around $5 per TB of data scanned. Data compression and partitioning can significantly reduce costs.

- Google BigQuery: BigQuery offers a pay-per-query pricing model, with charges based on the amount of data processed by a query. Pricing is tiered, with lower rates for larger datasets. For instance, the cost can be around $5 per TB of data processed, but it can vary based on data storage location and query complexity.

- Azure Synapse Serverless SQL Pool: Azure Synapse Serverless SQL Pool also uses a pay-per-query pricing model, charging based on the amount of data processed by a query. The pricing is similar to other providers, with a rate around $5 per TB of data processed. However, actual costs can fluctuate depending on the region and the specific data storage used.

For example, consider a scenario where a user queries a 10 TB dataset.

Assuming no data compression or partitioning is applied, the cost would be approximately $50 for all three platforms, since they are charging approximately $5 per TB of data scanned/processed. However, applying data compression (e.g., using Parquet format) and data partitioning can drastically reduce the amount of data scanned, thereby reducing costs. The actual cost will vary depending on factors like the complexity of the query, the region where the data is stored, and any discounts or credits the user may have.

Querying Data in a Serverless Environment

Querying data in a serverless environment is a core function of serverless query engines, enabling users to analyze data stored in data lakes without managing infrastructure. The process leverages SQL and other query languages, allowing for efficient data retrieval and transformation. This section will delve into the mechanics of submitting queries, provide practical SQL examples, and Artikel the steps involved in connecting to and querying a serverless engine.

Submitting Queries to a Serverless Query Engine

The process of submitting queries to a serverless query engine generally involves several steps. Users typically interact with the engine through a client application or a command-line interface (CLI). The query, written in SQL or another supported query language, is submitted to the engine’s API endpoint. The engine then parses the query, optimizes it, and distributes the execution across the underlying compute resources, which are provisioned dynamically based on the query’s requirements.

Results are returned to the user, often in a structured format such as CSV, JSON, or Parquet.

- Query Submission Methods: Queries can be submitted through various methods, including APIs (REST or GraphQL), CLI tools, or graphical user interfaces (GUIs). The specific method depends on the serverless query engine and the user’s preference.

- Query Language Support: Serverless query engines typically support SQL as the primary query language, providing a familiar interface for data analysts and engineers. Some engines also support other query languages, such as Apache Spark SQL or custom query languages optimized for specific data formats or analytical tasks.

- Query Processing: The engine parses the submitted query, optimizes it based on the data’s schema and statistics, and creates an execution plan. This plan determines how the query will be executed across the distributed compute resources.

- Resource Allocation: The serverless architecture dynamically allocates compute resources, such as CPU, memory, and storage, based on the query’s demands. This scalability ensures efficient execution, especially for complex queries or large datasets.

- Result Retrieval: Once the query execution is complete, the results are retrieved and presented to the user. The output format is often configurable, allowing users to choose the format that best suits their needs.

Common SQL Queries for Data Analysis

SQL queries are fundamental for data analysis in a data lake environment. They enable users to extract, transform, and analyze data stored in various formats. The following examples demonstrate common SQL queries used for data analysis, assuming a hypothetical data lake with a table named `sales` containing columns such as `date`, `product_id`, `quantity`, and `price`.

- Selecting Data: The `SELECT` statement is used to retrieve data from one or more tables. For example, to retrieve all columns for all rows:

SELECT- FROM sales;This query retrieves all columns from the `sales` table.

- Filtering Data: The `WHERE` clause filters the data based on specified conditions. For example, to retrieve sales data for a specific product:

SELECT- FROM sales WHERE product_id = 'P123';This query retrieves all columns from the `sales` table where the `product_id` is ‘P123’.

- Aggregating Data: Aggregate functions like `SUM`, `AVG`, `COUNT`, `MIN`, and `MAX` are used to summarize data. For example, to calculate the total sales revenue:

SELECT SUM(quantity- price) AS total_revenue FROM sales;This query calculates the total revenue by multiplying the `quantity` and `price` for each row and summing the results.

- Grouping Data: The `GROUP BY` clause groups rows based on one or more columns, allowing aggregate functions to be applied to each group. For example, to calculate the total sales revenue per product:

SELECT product_id, SUM(quantity- price) AS total_revenue FROM sales GROUP BY product_id;This query groups the sales data by `product_id` and calculates the total revenue for each product.

- Joining Data: The `JOIN` clause combines rows from two or more tables based on a related column. For example, assuming a `products` table with columns `product_id` and `product_name`:

SELECT s.date, p.product_name, s.quantity- s.price AS revenue FROM sales s JOIN products p ON s.product_id = p.product_id;This query joins the `sales` and `products` tables to retrieve the date, product name, and revenue for each sale.

- Ordering Data: The `ORDER BY` clause sorts the result set based on one or more columns. For example, to list the top 10 products by revenue:

SELECT product_id, SUM(quantity- price) AS total_revenue FROM sales GROUP BY product_id ORDER BY total_revenue DESC LIMIT 10;This query calculates the total revenue per product, orders the results in descending order, and limits the output to the top 10 products.

Procedure for Connecting and Running a Basic Query

Connecting to a serverless query engine and running a basic query involves a series of steps. The specific steps may vary slightly depending on the chosen engine, but the general procedure remains consistent. This procedure assumes the user has access credentials and a data lake environment already set up.

- Obtain Access Credentials: The user must first obtain the necessary credentials (e.g., API keys, usernames, passwords) to authenticate with the serverless query engine. These credentials are often provided by the cloud provider or data lake administrator.

- Install the Client Tool (if applicable): Depending on the engine, the user may need to install a client tool, such as a CLI or a library for a programming language like Python or Java. This tool provides the interface for interacting with the engine.

- Configure the Connection: Using the client tool, the user must configure the connection to the serverless query engine. This involves providing the engine’s endpoint URL, the access credentials, and any other required parameters (e.g., region, database name).

- Write the Query: The user writes the SQL query or uses another supported query language to retrieve the desired data. The query should be designed to meet the user’s analytical needs.

- Submit the Query: The user submits the query to the serverless query engine using the client tool or API. The submission process includes sending the query to the engine and specifying any necessary parameters.

- Retrieve the Results: Once the query has been executed, the user retrieves the results from the engine. The results are typically returned in a structured format such as CSV, JSON, or Parquet.

- Analyze the Results: The user can then analyze the retrieved data to gain insights and make informed decisions. This may involve further processing of the data using other tools or techniques.

Data Format and Optimization

Optimizing data formats and query performance is crucial for achieving cost-efficiency and responsiveness in serverless query engines for data lakes. Choosing the right data formats and implementing effective optimization strategies directly impact query execution time, resource consumption, and overall system performance. This section delves into the best practices for data format selection and query optimization techniques.

Best Data Formats for Optimal Performance

Selecting the appropriate data format is the first step towards efficient querying in a serverless environment. The format influences how data is stored, indexed, and accessed, which, in turn, affects query speed and resource utilization. Several formats are particularly well-suited for serverless query engines, each with its own advantages.

- Parquet: Parquet is a columnar storage format optimized for analytical queries. It stores data in a column-oriented fashion, allowing the query engine to read only the columns needed for a specific query. This significantly reduces I/O operations, leading to faster query execution. Parquet also supports compression and encoding, further optimizing storage space and query performance. The schema is stored with the data, ensuring data integrity and facilitating schema evolution.

- ORC (Optimized Row Columnar): ORC is another columnar storage format, similar to Parquet, designed for high performance in Hadoop-based systems. It offers features like predicate pushdown, which allows the query engine to filter data based on conditions before reading it, reducing the amount of data processed. ORC also supports compression and various encoding options. It is often a strong choice for large datasets where efficient filtering and data access are critical.

- Avro: Avro is a row-oriented format that supports schema evolution. It stores the schema alongside the data, which makes it compatible with evolving data structures. Avro is particularly suitable for applications where the schema changes over time, such as streaming data pipelines. It offers good compression and serialization capabilities, making it efficient for data transfer and storage. However, due to its row-oriented nature, it may not be as efficient as columnar formats for analytical queries that involve accessing only a few columns.

- JSON (JavaScript Object Notation): JSON is a text-based format commonly used for data exchange. While it’s human-readable and flexible, it is generally less efficient for analytical queries compared to columnar formats. This is because JSON stores data in a row-oriented fashion, requiring the entire row to be read even if only a few fields are needed. However, it is still useful when dealing with semi-structured data, as its flexible structure can accommodate nested objects and arrays.

- CSV (Comma-Separated Values): CSV is a simple, text-based format suitable for small datasets or for data that needs to be easily read by humans. It’s easy to create and understand, but it lacks features like compression, indexing, and schema enforcement, making it inefficient for large-scale analytical queries. CSV’s performance degrades significantly as the dataset size increases.

Strategies for Optimizing Query Performance

Beyond choosing the right data format, various optimization strategies can significantly enhance query performance in serverless environments. These strategies aim to reduce the amount of data processed, improve data access speed, and minimize resource consumption.

- Data Partitioning: Partitioning involves dividing data into smaller, manageable chunks based on specific criteria, such as date, region, or product category. When a query includes a filter on the partitioning column, the query engine can quickly identify and read only the relevant partitions, reducing the amount of data scanned. This strategy significantly improves query performance, especially for time-series data or datasets with geographic dimensions.

For instance, a dataset of sales transactions can be partitioned by date, allowing queries for specific days or months to process only the relevant data subsets.

- Compression: Compressing data reduces storage space and improves I/O performance. By reducing the amount of data that needs to be read from storage, compression speeds up query execution. Several compression codecs are available, each offering a different balance between compression ratio and decompression speed. The choice of codec depends on the specific dataset and query workload.

- Indexing: Indexing creates data structures that enable faster lookups based on specific columns. When a query includes a filter on an indexed column, the query engine can use the index to quickly locate the relevant data rows, avoiding a full table scan. This is particularly effective for queries that frequently filter data based on specific criteria. Indexing, however, adds overhead during data ingestion and storage.

- Predicate Pushdown: Predicate pushdown is a query optimization technique where the query engine pushes filter conditions (predicates) down to the storage layer. This allows the storage layer to filter out irrelevant data before it is read, reducing the amount of data processed by the query engine. This is particularly effective when combined with columnar storage formats, where only the necessary columns are read.

- Query Optimization Techniques: The query engine itself employs several techniques to optimize query performance. These include query rewriting, cost-based optimization, and parallel query execution. Query rewriting involves transforming a query into an equivalent form that can be executed more efficiently. Cost-based optimization uses statistics about the data to estimate the cost of different query execution plans and choose the most efficient one.

Parallel query execution involves breaking down a query into smaller tasks that can be executed concurrently, leveraging the distributed nature of serverless query engines.

Compression Codec Comparison

The selection of a compression codec significantly impacts both storage efficiency and query performance. Different codecs offer varying trade-offs between compression ratio, decompression speed, and CPU usage.

| Codec | Pros | Cons | Use Cases |

|---|---|---|---|

| GZIP | High compression ratio, widely supported, good for archival data. | Slower decompression speed, higher CPU usage. | Archiving older data, infrequently accessed datasets. |

| Snappy | Fast decompression speed, good balance between compression and speed. | Lower compression ratio compared to GZIP or Zstd. | Real-time analytics, frequently accessed data. |

| Zstd (Zstandard) | Excellent compression ratio, very fast decompression, good for both compression and speed. | Higher CPU usage than Snappy, but still often faster than GZIP. | General-purpose compression, large datasets, data lakes. |

Security and Access Control

Securing data within a serverless query engine environment is paramount for maintaining data integrity, confidentiality, and compliance with regulatory requirements. Implementing robust security measures necessitates a multi-faceted approach encompassing authentication, authorization, and data encryption, all meticulously managed to protect sensitive information from unauthorized access and potential breaches.

Authentication, Authorization, and Data Encryption

Authentication verifies the identity of users or services attempting to access the serverless query engine. Authorization determines what resources an authenticated user or service is permitted to access and the actions they can perform. Data encryption safeguards data both in transit and at rest, rendering it unreadable to unauthorized parties. These three elements are crucial to building a secure environment.

- Authentication Mechanisms: Serverless query engines leverage various authentication methods. These include:

- API Keys: Unique identifiers used to authenticate requests from applications.

- OAuth 2.0 and OpenID Connect (OIDC): Standards-based protocols enabling secure delegation of user identity.

- Identity Providers (IdPs): Integration with external identity providers (e.g., Azure Active Directory, AWS IAM, Google Cloud Identity) to manage user identities and authentication.

- Authorization Strategies: Authorization defines the specific permissions granted to authenticated users or services. Common strategies include:

- Role-Based Access Control (RBAC): Assigning permissions based on user roles (e.g., administrator, analyst, viewer).

- Attribute-Based Access Control (ABAC): Defining access policies based on attributes of users, resources, and the environment.

- Fine-Grained Access Control: Granting permissions at the table, column, or even row level.

- Data Encryption Techniques: Data encryption protects data at rest and in transit. Encryption methods include:

- Encryption at Rest: Encrypting data stored in the data lake using server-side encryption or client-side encryption. Server-side encryption typically uses encryption keys managed by the cloud provider, while client-side encryption allows users to manage their own keys.

- Encryption in Transit: Encrypting data transmitted between the serverless query engine and the data lake using protocols like Transport Layer Security (TLS/SSL).

- Key Management: Securely managing encryption keys through key management services (e.g., AWS KMS, Azure Key Vault, Google Cloud KMS).

Managing User Access and Permissions

Effective management of user access and permissions is essential for maintaining the security posture of a serverless query engine environment. This involves defining user roles, assigning permissions, and regularly reviewing access rights.

- User Roles and Permissions:

- Define distinct user roles based on job functions (e.g., data engineer, data scientist, business analyst).

- Assign specific permissions to each role, such as read, write, execute, and administrative privileges.

- Employ the principle of least privilege, granting users only the minimum necessary access to perform their tasks.

- Access Control Lists (ACLs):

- Implement ACLs to control access to individual data lake objects (e.g., files, tables).

- ACLs define which users or groups have specific permissions on a particular object.

- ACLs can be used to implement fine-grained access control, allowing for granular control over data access.

- Regular Auditing and Monitoring:

- Regularly audit user access logs to identify suspicious activity or potential security breaches.

- Monitor data access patterns to detect unauthorized data access or unusual query behavior.

- Implement alerts to notify administrators of any security-related events.

Best Practices for Securing Data in a Data Lake

Securing data within a data lake, accessible by a serverless query engine, requires adhering to a set of best practices that encompass data storage, access control, and monitoring. These practices are critical to protecting the confidentiality, integrity, and availability of the data.

- Data Encryption:

- Encrypt all data at rest using strong encryption algorithms (e.g., AES-256).

- Encrypt data in transit using TLS/SSL protocols.

- Manage encryption keys securely using key management services.

- Access Control and Authorization:

- Implement RBAC or ABAC to control user access to data.

- Use ACLs to grant fine-grained permissions on data lake objects.

- Regularly review and update access permissions.

- Network Security:

- Restrict network access to the data lake.

- Use firewalls and network security groups to control inbound and outbound traffic.

- Implement virtual private clouds (VPCs) to isolate the data lake from public networks.

- Data Masking and Anonymization:

- Mask or anonymize sensitive data to reduce the risk of exposure.

- Use data masking techniques to replace sensitive data with non-sensitive values.

- Anonymize data by removing or modifying personally identifiable information (PII).

- Regular Auditing and Monitoring:

- Enable logging and monitoring of data access and query activity.

- Regularly review security logs to detect suspicious behavior.

- Implement alerts for security-related events.

- Data Governance and Compliance:

- Establish data governance policies and procedures.

- Comply with relevant data privacy regulations (e.g., GDPR, CCPA).

- Implement data lineage tracking to understand the origin and transformations of data.

Cost Management and Optimization

The economic viability of serverless query engines for data lakes hinges on effective cost management. Understanding the factors driving costs and implementing optimization strategies is crucial for maximizing the return on investment and preventing unexpected expenses. This section details the key aspects of cost control within a serverless environment.

Factors Influencing Cost

Several factors directly influence the cost of utilizing serverless query engines. These elements interact to determine the overall expenditure, and a thorough understanding of each is paramount for effective cost management.

- Query Execution Time: The duration a query takes to execute is a primary driver of cost. Longer query execution times translate to increased resource consumption and, consequently, higher charges. The engine’s billing model often charges based on the compute time used.

- Data Volume Processed: Serverless query engines typically charge based on the amount of data scanned during query execution. Processing larger datasets incurs higher costs, irrespective of the query’s complexity. Efficient data partitioning and filtering become critical in this context.

- Resource Allocation: Although serverless, the underlying infrastructure still involves resource allocation. The engine automatically scales resources based on demand, but the level of scaling impacts cost. Over-provisioning leads to unnecessary expenses, while under-provisioning can degrade performance.

- Data Storage Costs: While the query engine itself incurs costs, the data residing in the data lake also contributes to the overall expense. The storage format, data compression, and storage tier (e.g., hot, cold, archive) influence these costs.

- Query Complexity: Complex queries, involving joins, aggregations, and user-defined functions (UDFs), often require more computational resources and longer execution times, thereby increasing costs. Query optimization techniques can mitigate this impact.

- Concurrency: The number of concurrent queries affects resource utilization. High concurrency levels can lead to increased scaling and higher associated costs. Effective resource management and query prioritization can help control costs during peak demand.

Strategies for Optimizing Costs

Several strategies can be employed to optimize the costs associated with serverless query engines. These techniques focus on improving query efficiency, reducing data volume, and maximizing resource utilization.

- Query Optimization: Query optimization involves rewriting queries to improve their efficiency. This includes:

- Query Rewriting: Rephrasing queries to minimize data scanning. For example, pushing down filters to the data source.

- Join Optimization: Choosing optimal join strategies (e.g., broadcast joins, shuffle joins) based on data size and distribution.

- Predicate Pushdown: Applying filtering conditions as early as possible in the query execution plan.

- Data Compression: Compressing data stored in the data lake reduces the amount of data scanned during query execution. Common compression formats include Parquet, ORC, and Avro. The choice of compression algorithm depends on the data type and desired compression ratio.

- Data Partitioning: Partitioning data divides it into logical segments based on specific criteria (e.g., date, region). This enables the query engine to scan only the relevant partitions, reducing the amount of data processed.

- Data Format Selection: Choosing an efficient data format can significantly impact performance and cost. Columnar formats like Parquet and ORC are generally preferred over row-oriented formats like CSV for analytical workloads.

- Resource Monitoring and Tuning: Regularly monitoring resource utilization (CPU, memory, I/O) and adjusting query configurations can optimize performance and costs. This involves identifying bottlenecks and fine-tuning parameters such as memory allocation and concurrency limits.

- Cost-Aware Query Planning: Some query engines offer cost-based optimization, where the query planner estimates the cost of different execution plans and selects the most cost-effective one.

- Data Lifecycle Management: Implementing data lifecycle policies to move less frequently accessed data to cheaper storage tiers (e.g., cold storage) can reduce storage costs.

Cost Optimization Techniques: Examples and Impact

Implementing cost optimization techniques can significantly impact query performance and overall expenses. The following blockquote provides examples, illustrating their effects.

Example 1: Query Optimization and Predicate Pushdown

Scenario: A query scans a large dataset to filter records based on a specific date range.

Technique: Applying predicate pushdown to filter data at the source.

Impact: Reduced data scanned by 80%, query execution time decreased from 10 minutes to 2 minutes, and costs were reduced by 60%.

Example 2: Data Compression with Parquet

Scenario: A data lake stores large CSV files.

Technique: Converting CSV files to Parquet format with compression.

Impact: Data size reduced by 70%, query execution time decreased by 40%, and storage costs were reduced by 30%.

Example 3: Data Partitioning by Date

Scenario: Analyzing sales data over several years.

Technique: Partitioning the data by year and month.

Impact: Reduced the amount of data scanned by 90% when querying specific date ranges, leading to a 90% reduction in execution time and a 80% reduction in costs for those queries.

Monitoring and Performance Tuning

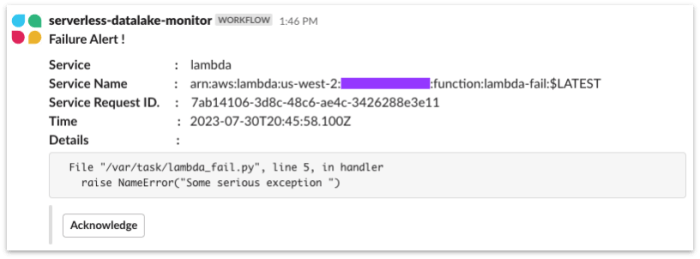

Monitoring and performance tuning are critical for maintaining the efficiency and cost-effectiveness of serverless query engines within data lake environments. Continuous monitoring allows for proactive identification of performance bottlenecks, enabling timely adjustments to optimize query execution and resource utilization. This proactive approach is essential for ensuring that data analysis remains responsive and cost-efficient as data volumes and query complexity increase.

Importance of Monitoring Serverless Query Engine Performance

Regular performance monitoring is essential to ensure that serverless query engines operate optimally and provide consistent performance. Monitoring allows for the identification of performance degradations, resource over-utilization, and potential security vulnerabilities. Early detection of issues enables administrators to address them promptly, minimizing the impact on query execution times and overall system costs. Effective monitoring also provides insights into query patterns, enabling optimization strategies tailored to the specific workloads.

Metrics to Monitor

Monitoring a serverless query engine involves tracking various metrics to understand its performance characteristics and identify areas for improvement. Analyzing these metrics provides a comprehensive view of the engine’s behavior and helps pinpoint potential bottlenecks.

- Query Execution Time: This metric measures the total time taken for a query to complete, from submission to result retrieval. Analyzing execution time is fundamental to understanding query performance.

- Data Scanned: This metric quantifies the amount of data read from storage during query execution. Minimizing data scanned is crucial for reducing both execution time and cost, especially in data lakes where storage costs can be significant.

- Resource Utilization: Tracking resource utilization, such as CPU, memory, and network I/O, provides insights into how effectively the engine is utilizing the allocated resources. High resource utilization can indicate bottlenecks or inefficient query plans.

- Concurrency: This metric measures the number of queries running concurrently. Monitoring concurrency helps understand the engine’s ability to handle multiple requests simultaneously and identify potential queuing or throttling issues.

- Error Rates: Tracking the rate of query failures and errors is essential for identifying and addressing underlying issues. Analyzing error logs provides valuable information for troubleshooting and improving system reliability.

- Cost: Monitoring the costs associated with query execution, including compute, storage, and network charges, provides insights into the financial efficiency of the serverless query engine. Cost optimization strategies can be implemented based on this data.

Procedure for Tuning a Serverless Query Engine

Tuning a serverless query engine is an iterative process that involves analyzing performance metrics, identifying bottlenecks, and implementing optimization strategies. This procedure is designed to systematically improve query performance and reduce costs.

- Baseline Performance Measurement: Establish a baseline by measuring key performance metrics, such as query execution time, data scanned, and resource utilization, under normal operating conditions. This baseline serves as a reference point for evaluating the effectiveness of tuning efforts.

- Performance Analysis: Analyze the collected performance data to identify performance bottlenecks. This may involve examining query execution plans, resource utilization graphs, and error logs. Identify queries that are consistently slow or consume excessive resources.

- Query Optimization: Optimize individual queries by rewriting them to improve efficiency. This may involve:

- Using appropriate data types and formats.

- Applying filtering and partitioning strategies to reduce the amount of data scanned.

- Optimizing join operations.

- Data Format and Storage Optimization: Optimize the data format and storage layout to improve query performance. This may involve:

- Choosing efficient data formats, such as Parquet or ORC, which support columnar storage and compression.

- Implementing data partitioning and bucketing strategies to reduce the amount of data scanned.

- Using data compression to reduce storage costs and improve I/O performance.

- Resource Allocation Adjustment: Adjust the resources allocated to the serverless query engine based on the identified bottlenecks. This may involve increasing the memory, CPU, or network bandwidth available to the engine.

- Concurrency Management: Manage query concurrency to prevent queuing and throttling issues. This may involve:

- Setting limits on the number of concurrent queries.

- Implementing query prioritization.

- Using query caching to reduce the load on the engine.

- Monitoring and Iteration: Continuously monitor performance metrics after implementing optimization strategies. Evaluate the impact of the changes and iterate on the tuning process as needed. This iterative approach ensures that the serverless query engine remains optimized as data volumes and query complexity evolve.

End of Discussion

In conclusion, serverless query engines are revolutionizing data lake analytics, offering a compelling alternative to traditional approaches. By embracing these technologies, organizations can achieve enhanced scalability, reduced costs, and improved agility in their data processing workflows. From understanding data formats and optimization strategies to implementing robust security measures and monitoring performance, the insights provided equip professionals with the knowledge needed to effectively leverage these engines and unlock the full potential of their data lakes.

Essential FAQs

What is the primary advantage of serverless query engines over traditional SQL engines?

Serverless query engines offer automatic scaling and pay-per-query pricing, eliminating the need for infrastructure management and reducing costs, especially for unpredictable workloads.

How do serverless query engines handle different data formats?

They typically support various formats (Parquet, ORC, CSV, JSON) through data connectors and optimized query processing, automatically parsing and interpreting data based on the file format.

What are the key security considerations for serverless query engines?

Authentication, authorization, data encryption (at rest and in transit), and access control are essential for securing data and ensuring compliance.

How can I optimize query performance in a serverless environment?

Strategies include data partitioning, compression, indexing, and using optimized data formats, along with query optimization techniques.

How do serverless query engines integrate with existing data lake storage solutions?

They typically integrate with object storage services (e.g., AWS S3, Azure Data Lake Storage, Google Cloud Storage) through data connectors, accessing data directly from the data lake.