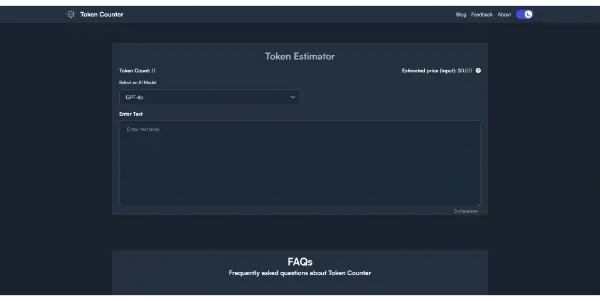

Token Counter

A useful tool for estimating the cost and length of your texts in tokens. Calculate token usage for different AI models, optimize your queries, and manage your API budgets efficiently.

Token Counter: A Free AI Tool for Optimizing Text and API Costs

Working with large language models (LLMs) means managing resources carefully. One crucial resource is the token, the basic unit of text that most AI models process. Knowing the token count of a text helps you estimate costs, optimize prompts, and avoid unexpected API charges, which is where a token counter becomes useful. This article covers the functionality, benefits, and applications of a free token counter, with a focus on practical use for developers and AI practitioners.

What Does a Token Counter Do?

A token counter is a tool that analyzes text and determines the number of tokens it contains. This number depends on the specific AI model (e.g., GPT-3, GPT-4, LaMDA), because each model's tokenizer splits text differently. A token might be a single word, a sub-word unit, or even a punctuation mark. The tool estimates the token usage for your text, allowing you to predict the cost of processing it with various AI models.
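To illustrate why the same text can yield different counts, here is a minimal sketch with two toy tokenization schemes. Both functions (`word_level_tokens`, `chunked_subword_tokens`) are hypothetical and written only for this example; they are not the tokenizers that GPT-style models or this tool actually use.

```python
import re


def word_level_tokens(text: str) -> list[str]:
    """Toy scheme 1: every word and every punctuation mark is one token."""
    return re.findall(r"\w+|[^\w\s]", text)


def chunked_subword_tokens(text: str, max_piece: int = 4) -> list[str]:
    """Toy scheme 2: split each word into pieces of at most `max_piece` characters.

    This only imitates the idea of sub-word units; real tokenizers learn
    their vocabulary from data rather than cutting at fixed lengths.
    """
    pieces: list[str] = []
    for token in word_level_tokens(text):
        pieces.extend(
            token[i:i + max_piece] for i in range(0, len(token), max_piece)
        )
    return pieces


if __name__ == "__main__":
    sample = "Tokenization matters, doesn't it?"
    print(len(word_level_tokens(sample)), word_level_tokens(sample))
    print(len(chunked_subword_tokens(sample)), chunked_subword_tokens(sample))
```

Under these toy rules the sample sentence comes out as 8 tokens with the first scheme and 12 with the second, which is the same kind of gap a token counter exposes when you switch between models.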

Main Features and Benefits

A robust token counter offers several key features and benefits:

- Accurate Token Calculation: Calculates the number of tokens based on the selected AI model. This supports reliable cost estimates and helps prevent unexpected overruns.

- Model Selection: Allows selection of different AI models (e.g., GPT-3.5-turbo, GPT-4) so that the count reflects the specific model's tokenization scheme.

- Real-time Updates: Provides immediate feedback as you type or paste text, enabling real-time optimization of your prompts.

- Cost Estimation: Helps estimate the cost associated with processing the text using a particular model's API. This is crucial for budget planning and resource allocation.

- Improved Prompt Engineering: By understanding token limits, you can refine your prompts to be more concise and efficient, resulting in better performance and reduced costs.

- Efficient API Usage: Helps you stay within API token limits, avoiding errors and ensuring smooth operation.
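The cost estimation and limit checking described above come down to simple arithmetic once a token count is known. The sketch below assumes a per-1,000-token input price and a per-request token limit; the model names, prices, and limits are placeholders invented for illustration, not real provider figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    context_limit: int        # maximum tokens per request (placeholder value)
    usd_per_1k_input: float   # price per 1,000 input tokens (placeholder value)


# Hypothetical profiles; check your provider's documentation for real numbers.
PROFILES = {
    "model-small": ModelProfile("model-small", 4_000, 0.0005),
    "model-large": ModelProfile("model-large", 8_000, 0.01),
}


def estimate_cost(token_count: int, profile: ModelProfile) -> float:
    """Estimated input cost in USD for a given token count."""
    return token_count / 1000 * profile.usd_per_1k_input


def fits_limit(token_count: int, profile: ModelProfile,
               reserved_for_output: int = 0) -> bool:
    """True if the prompt plus any tokens reserved for the reply fit the limit."""
    return token_count + reserved_for_output <= profile.context_limit


if __name__ == "__main__":
    prompt_tokens = 3_900
    for profile in PROFILES.values():
        cost = estimate_cost(prompt_tokens, profile)
        ok = fits_limit(prompt_tokens, profile, reserved_for_output=200)
        print(f"{profile.name}: ~${cost:.4f}, fits={ok}")
```

Reserving part of the limit for the model's reply is a design choice made here on the assumption that prompt and response share one limit; adjust it if your API counts them separately.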

Use Cases and Applications

Token counters are useful in a range of scenarios:

- Chatbot Development: Optimize chatbot conversations by ensuring prompts remain within the model's token limits.

- Large Language Model Applications: Estimate the costs of processing large volumes of text for tasks like summarization, translation, and question answering.

- API Budget Management: Effectively manage and control spending on API calls by accurately predicting costs beforehand.

- Prompt Engineering: Experiment with different prompt lengths and structures to find the optimal balance between conciseness and effectiveness.

- Content Creation: Optimize the length of text inputs for different AI-powered writing tools.
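As one example of the chatbot use case above, a conversation history can be trimmed so that it stays within a token budget. This is a minimal sketch, not a description of how the tool works: `rough_token_estimate` uses a rule of thumb of about four characters per token for English text, which is an assumption and should be replaced with a real count for the model you target.

```python
import math


def rough_token_estimate(text: str) -> int:
    """Heuristic assumption: roughly 4 characters per token for English text."""
    return math.ceil(len(text) / 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated token total fits the budget.

    Walks backwards from the newest message and stops at the first one that
    would push the running total over the budget.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = rough_token_estimate(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        "Hello, I need help planning a trip to Lisbon next month.",
        "Sure! How many days will you stay?",
        "Five days.",
    ]
    print(trim_history(history, budget=12))
```

Dropping the oldest messages first is only one possible policy; summarizing older turns is another option if earlier context matters.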

Comparison to Similar Tools

While several tools offer token counting capabilities, many are integrated within larger platforms or require paid subscriptions. A standalone, free token counter provides the advantage of accessibility and ease of use without the need for additional accounts or subscriptions. Its simplicity makes it an ideal choice for quick estimations and casual use. Paid alternatives might offer more advanced features like integration with specific APIs or detailed cost analysis reports, but for basic token counting, a free tool is often sufficient.

Pricing Information

The token counter discussed in this article is completely free to use. This makes it an accessible and valuable tool for developers and anyone working with LLMs, regardless of their budget.

In conclusion, a free token counter is a practical tool for developers and anyone interacting with large language models. By estimating token usage, predicting costs, and supporting efficient prompt engineering, it helps you manage resources and optimize the performance of AI applications. Its simplicity and accessibility make it a worthwhile addition to any AI developer's toolkit.