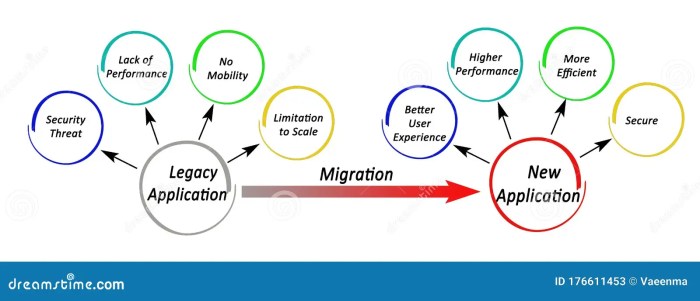

The migration of legacy applications, often burdened by accumulated technical debt, presents a significant challenge in modern software development. These systems, vital to business operations, are frequently characterized by outdated technologies, complex architectures, and a lack of maintainability. This document systematically explores the strategies required to navigate this complex landscape, ensuring a successful transition to more modern and efficient platforms.

This comprehensive analysis delves into the critical stages of legacy application migration, from initial assessment and planning to post-migration monitoring and optimization. It examines the identification and prioritization of technical debt, the selection of appropriate migration strategies (rehosting, replatforming, refactoring), and the crucial role of data migration, infrastructure modernization, and rigorous testing. The document provides practical guidance and actionable insights for IT professionals and business stakeholders seeking to modernize their legacy systems.

Assessment and Planning for Legacy Application Migration

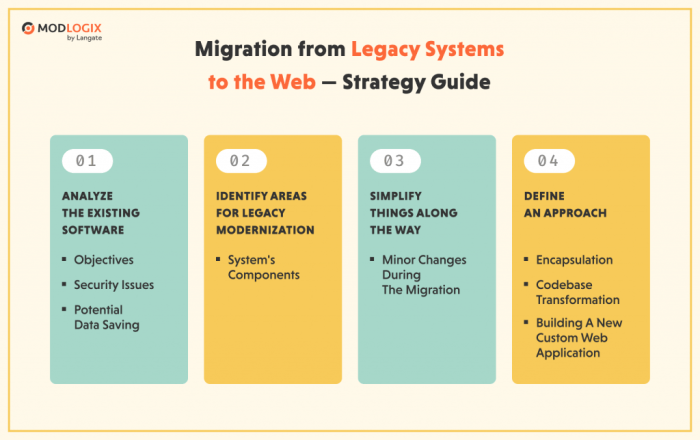

The successful migration of legacy applications hinges on a meticulous assessment and comprehensive planning phase. This involves understanding the existing application landscape, evaluating its technical debt, and selecting the most appropriate migration strategy. A well-defined plan minimizes risks, reduces costs, and ensures a smoother transition to a modern environment.

Inventorying Existing Applications and Dependencies

A comprehensive inventory is the cornerstone of any successful migration project. It provides a clear understanding of the current application portfolio, including its components, functionalities, and interdependencies. This knowledge is essential for informed decision-making throughout the migration process.

- Application Discovery: This initial step identifies all applications within the organization. Automated scanning software, CMDB (Configuration Management Database) queries, and interviews with stakeholders are used to build a comprehensive list that includes both in-house applications and third-party solutions.

- Application Profiling: Once applications are identified, they need to be profiled. This involves gathering detailed information about each application, including its name, version, purpose, business criticality, user base, and current hosting environment (e.g., operating system, database, middleware). Document the business processes supported by each application.

- Dependency Mapping: Understanding dependencies is crucial. This involves identifying all external and internal dependencies of each application. These include:

  - Hardware: Servers, storage, network devices.

  - Software: Operating systems, databases, middleware, libraries, and frameworks.

  - Data: Data sources, data formats, and data volumes.

  - Integration Points: APIs, web services, message queues, and other integration mechanisms.

  Tools such as network scanners, code analysis tools, and dependency mapping software can be used to automate this process. Manual documentation is often necessary, especially for complex or undocumented systems.

- Documentation and Centralization: All collected information should be documented in a centralized repository, such as a spreadsheet, database, or dedicated application portfolio management tool. This repository should be regularly updated and accessible to all relevant stakeholders. Consistent naming conventions and data formats are crucial for maintaining data integrity.
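The inventory and dependency map described above can be captured in a small data structure. The sketch below is only an illustration: the `AppProfile` record, its fields, and the application names are hypothetical. It uses Python's standard `graphlib` module to list applications so that each one appears after the systems it depends on, which is one plausible input to sequencing a migration rather than a prescribed method.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class AppProfile:
    """One inventory record; the fields loosely mirror the profiling step."""
    name: str
    criticality: str  # e.g. "high", "medium", "low"
    hosting: str      # e.g. "on-prem Linux"
    depends_on: list[str] = field(default_factory=list)


def dependency_order(apps: list[AppProfile]) -> list[str]:
    """Return application names so that each appears after its dependencies."""
    graph = {app.name: set(app.depends_on) for app in apps}
    # static_order() raises graphlib.CycleError on circular dependencies,
    # which is itself a useful finding during dependency mapping.
    return list(TopologicalSorter(graph).static_order())


# Hypothetical inventory used only for illustration.
inventory = [
    AppProfile("billing", "high", "on-prem Windows", ["customer-db", "auth"]),
    AppProfile("auth", "high", "on-prem Linux", ["customer-db"]),
    AppProfile("customer-db", "high", "on-prem database server"),
    AppProfile("reporting", "low", "on-prem Linux", ["billing"]),
]

order = dependency_order(inventory)
print(order)
```

Keeping the records in code or in a structured file makes it easier to regenerate the ordering whenever the inventory changes.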

Evaluating the Current State of Technical Debt

Technical debt represents the implied cost of rework caused by choosing an easy solution now instead of a better approach that would take longer. Identifying and quantifying technical debt is crucial for prioritizing migration efforts and selecting the most appropriate migration strategy. Ignoring technical debt can lead to increased migration costs, delays, and post-migration issues.

- Code Quality Assessment: This involves evaluating the quality of the application’s codebase. Metrics to consider include:

  - Code Complexity: Measured by metrics like cyclomatic complexity and lines of code. High complexity can indicate areas that are error-prone and difficult to maintain.

  - Code Duplication: Identifies redundant code blocks that increase maintenance efforts and the risk of introducing errors.

  - Code Coverage: Measures the percentage of code covered by automated tests. Low coverage indicates potential risks of undetected bugs.

  - Coding Standards Compliance: Assesses adherence to established coding standards and best practices.

  Automated code analysis tools, such as SonarQube or other static code analyzers, can be used to automate this assessment.

- Architecture and Design Evaluation: The application’s architecture and design should be evaluated for maintainability, scalability, and security.

  - Modularity: Assess the degree to which the application is broken down into independent, reusable modules.

  - Coupling and Cohesion: Analyze the relationships between modules and their internal consistency. High coupling and low cohesion can make changes difficult and error-prone.

  - Use of Obsolete Technologies: Identify the use of outdated programming languages, frameworks, and libraries.

- Performance and Scalability Analysis: Evaluate the application’s performance and scalability characteristics.

  - Response Times: Measure the time it takes for the application to respond to user requests.

  - Throughput: Measure the number of transactions the application can handle per unit of time.

  - Resource Utilization: Monitor the utilization of CPU, memory, and disk I/O.

  Performance testing tools and monitoring solutions can be employed to gather these metrics.

- Security Vulnerability Assessment: Identify security vulnerabilities within the application.

  - Vulnerability Scanning: Utilize vulnerability scanners to identify known vulnerabilities in the application’s code and dependencies.

  - Penetration Testing: Conduct penetration tests to simulate real-world attacks and assess the application’s security posture.

  - Security Best Practices Compliance: Evaluate the application’s adherence to security best practices, such as secure coding standards and access control mechanisms.

- Documentation Review: Assess the completeness and accuracy of the application’s documentation. Lack of documentation can increase maintenance costs and risks.

Designing a Decision Tree for Migration Strategy

The choice of migration strategy is critical to the success of the project. A decision tree provides a structured way to evaluate application characteristics and select the most suitable migration approach. It helps teams weigh the relevant factors, minimize risks, and optimize resource allocation.

A decision tree is a visual representation of the decision-making process. It starts with an initial question, and each subsequent node represents a further question or decision point. The branches lead to different migration strategies based on the answers to these questions. Example decision tree outline:

1. Initial Question

   Is the application business-critical and does it require minimal downtime?

   - Yes: Is the application’s architecture suitable for cloud deployment?

     - Yes: Rehosting or Refactoring (depending on technical debt)

     - No: Replatforming or Refactoring

   - No: Is the application’s code base well-documented and maintainable?

     - Yes: Rehosting or Replatforming

     - No: Refactoring or Retirement

2. Application Characteristics

   - Business Value: High, Medium, Low. This considers the application’s impact on business operations and revenue generation.

   - Technical Debt: High, Medium, Low. Assessed based on the checklist above.

   - Application Architecture: Monolithic, Microservices, N-Tier. This influences the feasibility of different migration strategies.

   - Dependencies: Number and complexity of dependencies. Complex dependencies may limit migration options.

   - Performance Requirements: High, Medium, Low. Dictates the need for optimized performance post-migration.

   - Security Requirements: High, Medium, Low. Determines the need for enhanced security measures.

3. Decision Points

   - Rehosting (Lift and Shift): The simplest approach, suitable for applications with low technical debt that need minimal architectural changes.

   - Replatforming: Involves making some changes to the application to take advantage of cloud services, such as a managed database or a different operating system.

   - Refactoring: Involves restructuring and optimizing the application’s code to improve its maintainability, scalability, and performance.

   - Re-architecting: Involves a significant overhaul of the application’s architecture, potentially including the adoption of microservices or other modern architectural patterns.

   - Retirement: Decommissioning the application if it is no longer needed or its functionality can be replaced by another solution.
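The example decision tree above translates directly into a small function. The following is a minimal sketch: the `AppAnswers` record and its field names are hypothetical, and the function returns the pair of candidate strategies at each leaf rather than a single final choice, since the tree leaves that choice to further assessment (for example, the level of technical debt).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppAnswers:
    """Yes/no answers to the three questions in the example tree."""
    critical_minimal_downtime: bool    # business-critical, minimal downtime?
    cloud_ready_architecture: bool     # architecture suitable for cloud?
    documented_and_maintainable: bool  # code base documented and maintainable?


def candidate_strategies(answers: AppAnswers) -> tuple[str, str]:
    """Walk the example decision tree and return the candidates at the leaf."""
    if answers.critical_minimal_downtime:
        if answers.cloud_ready_architecture:
            return ("Rehosting", "Refactoring")
        return ("Replatforming", "Refactoring")
    if answers.documented_and_maintainable:
        return ("Rehosting", "Replatforming")
    return ("Refactoring", "Retirement")


# Hypothetical application: not critical, poorly documented.
print(candidate_strategies(AppAnswers(False, True, False)))
```

Encoding the tree this way keeps the decision logic reviewable and lets the same rules be applied consistently across the whole application inventory.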

Comparing Different Migration Approaches

Selecting the appropriate migration approach is crucial for minimizing costs, mitigating risks, and maximizing the benefits of modernization. The following table provides a comparison of the most common migration approaches, including their pros, cons, and suitability for different application types.

| Migration Approach | Description | Pros | Cons | Suitability |
|---|---|---|---|---|
| Rehosting (Lift and Shift) | Migrating the application to a new infrastructure without significant code changes. | | | |
| Replatforming | Migrating the application to a new platform, such as a different operating system or database, with minimal code changes. | | | |
| Refactoring | Restructuring and optimizing the application’s code without changing its functionality. | | | |
| Re-architecting | Redesigning and rebuilding the application to take advantage of modern architectural patterns, such as microservices. | | | |

Identifying and Prioritizing Technical Debt

Identifying and prioritizing technical debt is a crucial step in migrating legacy applications. It allows for a strategic approach to remediation, ensuring that efforts are focused on the most impactful issues. Effective management of technical debt minimizes risks, improves maintainability, and facilitates a smoother migration process. This section will detail methods for identifying, prioritizing, and quantifying technical debt within legacy systems.

Methods for Identifying Different Types of Technical Debt

Identifying the various forms of technical debt requires a multi-faceted approach, incorporating both automated analysis and manual investigation. Different types of technical debt manifest in distinct ways, demanding tailored detection strategies.

- Code Quality Debt: This form of debt arises from suboptimal coding practices, leading to reduced readability, maintainability, and increased bug potential. Identification methods include:

  - Code Reviews: Manual inspection of code by experienced developers can uncover violations of coding standards, design flaws, and areas requiring refactoring. Code reviews are particularly effective at identifying subjective issues such as code style inconsistencies and potential performance bottlenecks.

  - Static Analysis: Tools like SonarQube, ESLint, and PMD automatically scan the codebase for violations of coding rules, potential bugs, and code smells (e.g., long methods, duplicated code, complex conditional statements). These tools provide objective metrics and reports to highlight areas of concern.

  - Automated Testing: Insufficient or poorly written tests are a significant indicator of code quality debt. Low test coverage and frequent test failures suggest areas that are brittle and prone to errors. Test frameworks such as JUnit (for Java), pytest (for Python), and Jest (for JavaScript), combined with coverage tools such as JaCoCo or coverage.py, can be used to assess test coverage and identify testing gaps.

- Architectural Debt: Architectural debt stems from design decisions that compromise the system’s long-term scalability, flexibility, and adaptability. This debt is often more challenging to identify than code quality debt.

  - Architectural Reviews: Expert architects can assess the system’s overall design, identifying potential issues such as tight coupling, single points of failure, and lack of modularity. These reviews involve analyzing diagrams, documentation, and the system’s behavior to evaluate its structural integrity.

  - Performance Testing: Performance bottlenecks can reveal architectural limitations. Load testing and stress testing can identify areas where the system struggles to handle increasing user loads or data volumes, indicating architectural weaknesses. Tools like JMeter and Gatling can be used to simulate user traffic and measure performance metrics.

  - Dependency Analysis: Analyzing the system’s dependencies on external libraries, frameworks, and services can uncover architectural debt related to outdated or unsupported components. Tools like OWASP Dependency-Check can identify vulnerable dependencies.

- Outdated Dependencies: This form of debt arises from the use of outdated libraries, frameworks, and software components. It increases security risks and limits the ability to leverage new features and improvements.

  - Dependency Scanning: Tools such as OWASP Dependency-Check and Snyk can automatically scan the project’s dependencies and identify known vulnerabilities. They compare the project’s dependencies against a database of known vulnerabilities.

  - Version Management: Regular monitoring of dependency versions and updating to the latest stable releases is essential. Version control systems like Git can help track dependency changes and facilitate rollbacks if necessary.

  - Security Audits: Periodic security audits can identify vulnerabilities related to outdated dependencies. Penetration testing can simulate real-world attacks to assess the system’s resilience.
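The version-management step above can be illustrated with a small check for dependencies that lag behind a known latest release. This is a simplified sketch under stated assumptions: the package names and version numbers are made up, versions are assumed to be purely numeric and dot-separated, and the `latest` mapping would in practice come from a package registry. It does not consult a vulnerability database the way scanners such as OWASP Dependency-Check or Snyk do.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Parse a purely numeric dotted version such as '2.10.1'."""
    return tuple(int(part) for part in text.split("."))


def outdated(pinned: dict[str, str], latest: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Map each package that lags behind to a (pinned, latest) pair."""
    report = {}
    for name, current in pinned.items():
        newest = latest.get(name)
        # Packages with no known latest version are skipped, not guessed.
        if newest is not None and parse_version(current) < parse_version(newest):
            report[name] = (current, newest)
    return report


# Hypothetical data for illustration only.
pinned = {"libfoo": "1.2.0", "libbar": "2.10.1", "libbaz": "0.9.3"}
latest = {"libfoo": "1.4.2", "libbar": "2.10.1"}

print(outdated(pinned, latest))
```

Comparing versions as integer tuples avoids the string-comparison pitfall where "2.10.1" would sort before "2.9.0".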

Strategies for Prioritizing Technical Debt Remediation Efforts

Prioritizing technical debt remediation requires a strategic approach that considers both the business impact and the associated risks. This ensures that efforts are focused on the most critical issues first.

- Business Impact Analysis: Assess the potential impact of each technical debt item on the business. Consider factors such as:

  - Feature Delivery: How does the debt affect the ability to deliver new features and enhancements? Delays in feature delivery can negatively impact market competitiveness and revenue.

  - Customer Satisfaction: Does the debt impact the user experience, leading to performance issues, bugs, or security vulnerabilities? Customer dissatisfaction can lead to churn and reputational damage.

  - Operational Costs: Does the debt increase operational costs, such as maintenance, support, or infrastructure costs? High operational costs can reduce profitability.

  - Compliance and Legal Risks: Does the debt expose the system to compliance violations or legal risks? Non-compliance can lead to fines and legal action.

- Risk Assessment: Evaluate the risks associated with each technical debt item. Consider factors such as:

  - Probability of Failure: What is the likelihood that the debt will lead to a failure or outage?

  - Severity of Failure: What is the potential impact of a failure on the business?

  - Security Vulnerabilities: Does the debt introduce security vulnerabilities that could be exploited by attackers?

  - Technical Complexity: How difficult and time-consuming is it to remediate the debt?

- Prioritization Frameworks: Utilize established frameworks to prioritize technical debt remediation. Examples include:

  - Cost of Delay: This framework prioritizes debt items based on the cost of delaying their remediation. It considers the business impact and the time it takes to remediate the debt.

  - Risk-Based Prioritization: This approach prioritizes debt items based on their risk score, which is calculated by considering the probability and severity of failure.

  - Weighted Scoring: Assign weights to different factors, such as business impact, risk, and technical complexity, and use a scoring system to prioritize debt items.
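Risk-based and weighted scoring can be combined in a few lines. The sketch below is illustrative only: the 1 to 5 rating scales, the weights, the choice to treat risk as probability times severity, and the debt items themselves are all assumptions rather than values from any standard framework.

```python
from dataclasses import dataclass

# Assumed weights; an organization would calibrate its own.
WEIGHTS = {"business_impact": 0.5, "risk": 0.3, "ease": 0.2}


@dataclass
class DebtItem:
    name: str
    business_impact: int  # 1 (low) to 5 (high)
    probability: int      # likelihood of failure, 1 to 5
    severity: int         # impact of failure, 1 to 5
    effort: int           # remediation effort, 1 (easy) to 5 (hard)

    @property
    def risk(self) -> int:
        """Risk score as probability times severity (range 1 to 25)."""
        return self.probability * self.severity


def priority(item: DebtItem) -> float:
    """Weighted score; higher means remediate sooner."""
    risk_scaled = item.risk / 25 * 5  # rescale risk to a 0 to 5 range
    ease = 6 - item.effort            # easier fixes score higher
    score = (WEIGHTS["business_impact"] * item.business_impact
             + WEIGHTS["risk"] * risk_scaled
             + WEIGHTS["ease"] * ease)
    return round(score, 2)


# Hypothetical backlog for illustration.
items = [
    DebtItem("Duplicated report code", 2, 2, 2, 1),
    DebtItem("Unpatched auth library", 5, 4, 5, 2),
    DebtItem("Monolithic checkout module", 4, 3, 4, 5),
]

ranked = sorted(items, key=priority, reverse=True)
for item in ranked:
    print(f"{priority(item):.2f}  {item.name}")
```

Making the weights explicit turns prioritization disagreements into a discussion about a handful of numbers rather than about individual items.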

Step-by-Step Procedure for Measuring and Quantifying Technical Debt

Quantifying technical debt provides a basis for objective assessment and tracking of remediation efforts. This involves defining metrics, collecting data, and analyzing trends over time.

1. Define Metrics: Identify relevant metrics to measure technical debt. Examples include:

   - Code Coverage: Percentage of code covered by automated tests. Lower coverage indicates higher debt.

   - Code Complexity: Cyclomatic complexity, which counts the independent paths through the code. Higher complexity indicates higher debt.

   - Code Duplication: Percentage of duplicated code. High duplication indicates higher debt.

   - Number of Code Smells: The number of code smells detected by static analysis tools. More smells indicate higher debt.

   - Number of Open Bugs: The number of open bugs, especially those related to code quality or architectural issues.

   - Outdated Dependencies: The number or proportion of libraries and frameworks that lag behind their current stable release.

2. Collect Data: Gather data using various tools and techniques:

   - Automated Analysis: Utilize static analysis tools (e.g., SonarQube, ESLint, PMD) to collect metrics on code quality, complexity, and code smells.

   - Test Coverage Tools: Use tools like JaCoCo (for Java), coverage.py or pytest-cov (for Python), and Jest’s built-in coverage reporting (for JavaScript) to measure code coverage.

   - Bug Tracking Systems: Use bug tracking systems (e.g., Jira, Bugzilla) to track the number of open bugs and their severity.

   - Dependency Scanners: Employ tools like OWASP Dependency-Check to identify outdated or vulnerable dependencies.

3. Establish a Baseline: Establish a baseline for each metric at a specific point in time. This serves as a reference point for tracking progress.

4. Track Trends: Regularly monitor the metrics over time to identify trends and assess the effectiveness of remediation efforts. Use charts and graphs to visualize the data.

5. Calculate the Cost of Debt: Estimate the cost of technical debt. A simple model is:

   Cost of Debt = Cost of Remediation + Cost of Failure

   - Cost of Remediation: Estimate the time and resources required to fix the debt. This can be based on the number of lines of code, the complexity of the code, and the expertise required.

   - Cost of Failure: Estimate the potential cost of a failure caused by the debt. This can include lost revenue, customer dissatisfaction, and reputational damage.

6. Report and Communicate: Regularly report on the progress of technical debt remediation to stakeholders. Communicate the metrics, trends, and cost of debt to ensure transparency and accountability.
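The baseline, trend, and cost steps above can be worked through with a few numbers. The sketch below is illustrative only: every figure (hours, hourly rate, failure costs, metric values) is invented, and the function names are hypothetical. It applies the formula exactly as stated, Cost of Debt = Cost of Remediation + Cost of Failure.

```python
def cost_of_remediation(hours: float, hourly_rate: float) -> float:
    """Estimated labor cost of fixing a debt item."""
    return hours * hourly_rate


def cost_of_debt(remediation: float, failure: float) -> float:
    """Cost of Debt = Cost of Remediation + Cost of Failure."""
    return remediation + failure


def metric_deltas(baseline: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Change in each metric relative to the baseline snapshot."""
    return {name: current[name] - baseline[name] for name in baseline}


# Invented figures for one debt item.
remediation = cost_of_remediation(hours=120, hourly_rate=85.0)
failure = 25_000.0 + 5_000.0  # assumed lost revenue plus support cost
total = cost_of_debt(remediation, failure)
print(f"Estimated cost of debt: {total:,.2f}")

# Invented metric snapshots: baseline versus the latest measurement.
baseline = {"coverage_pct": 42.0, "duplication_pct": 18.0, "open_bugs": 57}
current = {"coverage_pct": 55.0, "duplication_pct": 12.5, "open_bugs": 49}
deltas = metric_deltas(baseline, current)
print(deltas)
```

A team might additionally weight the failure cost by its estimated probability; that refinement is not part of the formula above and would be a separate modeling choice.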

Using Static Analysis Tools and Code Quality Metrics to Assess Technical Debt

Static analysis tools and code quality metrics are essential for assessing and managing technical debt. These tools automatically analyze source code without executing it, identifying potential issues related to code quality, security, and maintainability.

- Static Analysis Tools: These tools examine the code for violations of coding standards, potential bugs, and code smells. Examples include:

  - SonarQube: A widely used platform, available in an open-source community edition, for continuous inspection of code quality. It supports multiple programming languages, provides detailed reports on code quality metrics, security vulnerabilities, and code smells, and visualizes code quality trends over time.

  - ESLint: A linter for JavaScript and TypeScript code that helps identify and fix stylistic issues and potential errors. It enforces coding style and can automatically fix many issues.

  - PMD: A static analysis tool for Java, Apex, PL/SQL, and other languages. It detects potential problems like unused code, duplicate code, and complex expressions.

- Code Quality Metrics: Static analysis tools generate various code quality metrics to quantify technical debt. Key metrics include:

  - Code Coverage: Measures the percentage of code covered by automated tests. Low code coverage indicates a higher risk of bugs and makes it difficult to refactor code.

  - Cyclomatic Complexity: Measures the complexity of code. High cyclomatic complexity makes code difficult to understand, test, and maintain.

    Cyclomatic Complexity = Number of Decision Points + 1

    For example, if a method has three `if` statements, its cyclomatic complexity would be 4.

  - Code Duplication: Measures the amount of duplicated code in the codebase. High code duplication increases the risk of bugs and makes it difficult to maintain the code.

  - Code Smells: Identifies code patterns that indicate potential problems, such as long methods, complex conditional statements, and duplicated code. Examples of code smells include “Long Method”, “Large Class”, “Duplicated Code”, and “God Class”.

  - Maintainability Index: An aggregate metric that indicates the ease of maintaining the code. A low maintainability index suggests that the code is difficult to maintain.

- Integrating Tools and Metrics into the Workflow:

  - Continuous Integration/Continuous Delivery (CI/CD): Integrate static analysis tools into the CI/CD pipeline to automatically analyze code during the build process.

  - Automated Builds: Configure the build process to fail if the code quality metrics fall below a certain threshold.

  - Regular Reporting: Generate regular reports on code quality metrics and share them with the development team and stakeholders.

  - Code Reviews: Use static analysis tools to support code reviews. The tool can automatically flag potential issues, allowing reviewers to focus on more complex problems.
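The cyclomatic complexity formula and the idea of a build threshold can be demonstrated with Python's standard `ast` module. This is a deliberately simplified sketch: it counts only `if`, `for`, `while`, `except`, and conditional expressions as decision points, whereas production analyzers typically count more constructs (such as boolean operators). The sample function and the `passes_gate` helper with its default threshold are hypothetical.

```python
import ast

# Node types treated as decision points in this simplified sketch.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Cyclomatic Complexity = Number of Decision Points + 1."""
    tree = ast.parse(source)
    decisions = sum(isinstance(node, DECISION_NODES) for node in ast.walk(tree))
    return decisions + 1


def passes_gate(source: str, max_complexity: int = 10) -> bool:
    """Quality gate: True when complexity stays within the threshold."""
    return cyclomatic_complexity(source) <= max_complexity


# A method with three `if` statements, as in the example above.
SAMPLE = '''
def shipping_cost(weight, express, international):
    cost = 5
    if weight > 10:
        cost += 7
    if express:
        cost += 12
    if international:
        cost += 20
    return cost
'''

print(cyclomatic_complexity(SAMPLE))
print(passes_gate(SAMPLE))
```

In a CI pipeline, a script like this could exit with a non-zero status when `passes_gate` returns `False`, which is one simple way to make the build fail on a threshold violation.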

Choosing the Right Migration Strategy

Selecting the optimal migration strategy is a critical decision that significantly impacts the success, cost, and timeline of a legacy application modernization project. This choice hinges on a thorough evaluation of the application’s current state, business requirements, and available resources. Careful consideration of factors like technical debt, application complexity, and desired future state is paramount to making an informed decision.

Factors for Strategy Selection: Rehosting, Replatforming, and Refactoring

The selection of a migration strategy – rehosting, replatforming, or refactoring – is dictated by a combination of technical and business considerations. These strategies represent a spectrum of effort, risk, and potential benefits. Understanding the key factors that influence the choice is essential for effective decision-making.

- Application Architecture and Complexity: The application’s architecture plays a crucial role. Monolithic applications, characterized by tightly coupled components, may be more suitable for rehosting or replatforming initially, whereas applications with a modular design may lend themselves to refactoring. Complex applications with significant technical debt may necessitate a more comprehensive approach like refactoring.

- Technical Debt: The level of technical debt directly influences the choice. Applications with high technical debt, such as outdated code, security vulnerabilities, and performance bottlenecks, often benefit from refactoring. This allows for the elimination of problematic code and improvement of maintainability. Rehosting, while quicker, may simply transfer the debt to the new environment.

- Business Goals and Objectives: Business objectives drive the overall migration strategy. If the primary goal is to minimize disruption and maintain current functionality quickly, rehosting may be preferred. If the goal is to improve scalability, performance, and leverage cloud-native services, replatforming or refactoring might be more suitable.

- Budget and Timeline: The available budget and the desired timeline are critical constraints. Rehosting is generally the fastest and least expensive option, while refactoring is the most time-consuming and potentially costly. Replatforming falls in between, offering a balance of effort and benefit.

- Skills and Expertise: The availability of internal skills and expertise influences the chosen strategy. Rehosting often requires less specialized knowledge, while refactoring demands deep understanding of the application’s codebase and modern development practices. Replatforming requires knowledge of the new platform.

Cost and Effort Comparison of Migration Strategies

The cost and effort associated with each migration strategy vary significantly. These differences are influenced by factors such as the size and complexity of the application, the level of technical debt, and the chosen platform. A clear understanding of these costs is crucial for budgetary planning and resource allocation.

| Migration Strategy | Cost | Effort | Typical Timeline | Key Considerations |
|---|---|---|---|---|
| Rehosting (Lift and Shift) | Lowest | Lowest | Weeks to Months | Focus on minimal code changes; suitable for quick migration; maintains existing infrastructure dependencies. |
| Replatforming | Moderate | Moderate | Months | Requires code changes to adapt to a new platform (e.g., database migration); improves performance and scalability. |
| Refactoring | Highest | Highest | Months to Years | Significant code changes and architectural redesign; addresses technical debt; enables modern features and scalability. |

The cost can be measured in terms of labor hours, infrastructure expenses, and potential downtime. Effort relates to the amount of code modification and the need for testing. Timelines depend on the size and complexity of the application, and the number of resources assigned to the project. These are general estimates, and actual costs may vary.

Application Suitability for Each Migration Approach

Different application types and characteristics lend themselves to specific migration strategies. Choosing the right approach depends on a careful evaluation of the application’s requirements and limitations.

- Rehosting: Best suited for applications that need to be migrated quickly with minimal code changes. This approach fits applications that are functioning well, where the primary goal is to move them to a new infrastructure such as the cloud. Applications with little critical technical debt and few performance issues are also a good fit.

- Replatforming: Appropriate for applications where there is a need to improve performance or take advantage of new features of a different platform. This is a good choice when a change of database is needed, or the application needs to run on a new operating system or framework.

- Refactoring: Best suited for applications with high technical debt, complex functionality, and the need for significant improvements in maintainability, scalability, and performance. This approach is appropriate when modernizing the application to leverage new technologies is a priority.

Example: Refactoring as the Preferred Approach

Refactoring is the most comprehensive of the three approaches and is warranted when technical debt is very high and business needs require significant improvements.

Consider a legacy e-commerce application built on an outdated framework with a monolithic architecture. The application suffers from performance bottlenecks, security vulnerabilities, and frequent downtime. Furthermore, the application lacks scalability to handle peak traffic during promotional events. In this scenario, rehosting would simply transfer the existing problems to the new environment, and replatforming would only address a subset of the issues. Refactoring, in this case, is the preferred approach. This will allow for the redesign of the application’s architecture, the elimination of technical debt, and the implementation of modern technologies, such as microservices, to improve scalability, security, and performance. This allows the business to better respond to market demands and enhance the customer experience.

Refactoring Techniques for Legacy Applications

Refactoring is a crucial process in migrating legacy applications and mitigating technical debt. It involves systematically improving the internal structure of code without altering its external behavior. This iterative process enhances maintainability, readability, and scalability, making the application more adaptable to future changes and migration strategies.

Incremental Refactoring Techniques

Incremental refactoring allows for the gradual improvement of a legacy application, minimizing risk and disruption. This approach breaks down large refactoring tasks into smaller, manageable steps.

- Identify and Address Code Smells: Code smells are indicators of deeper problems in the code. Examples include long methods, large classes, duplicate code, and excessive commenting. Addressing these smells improves code quality. For example, a “long method” can be refactored by extracting smaller, more focused methods, improving readability and maintainability.

- Introduce Tests: Writing unit tests, integration tests, and end-to-end tests provides a safety net for refactoring. Tests ensure that changes do not introduce regressions and that the application’s functionality remains intact. Prioritize testing critical areas first, and progressively increase test coverage.

- Refactor Small Units: Focus on refactoring small, well-defined units of code at a time. This limits the scope of changes and makes it easier to identify and fix any issues that arise. Start with the most frequently used or problematic areas.

- Apply the “Extract Method” Refactoring: This technique involves extracting a block of code into a separate method. This reduces the size of the original method, improving readability and reusability. The extracted method should have a descriptive name that reflects its purpose.

- Use “Rename Method” and “Rename Class” Refactorings: Giving meaningful names to methods and classes improves code clarity. This makes it easier to understand the code’s intent and purpose. Consistent naming conventions are essential.

- Apply “Move Method” and “Move Class” Refactorings: Moving methods and classes to more appropriate locations in the code structure can improve the overall organization and reduce dependencies. This often involves grouping related functionality together.

- Utilize “Replace Conditional with Polymorphism”: Conditional statements can often be replaced with polymorphism to simplify code and make it more extensible. This involves creating subclasses for each condition and overriding a method in each subclass.
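As an illustration of the last technique, the sketch below shows a conditional on a type code replaced by one subclass per branch, with a lookup table standing in for the former `if` chain. The shipping example, the fee values, and all class and function names are hypothetical. Both versions are kept side by side so that a test can confirm the refactoring preserves behavior.

```python
from abc import ABC, abstractmethod


# Before: behavior is selected by a conditional on a type code.
def shipping_fee_before(kind: str, weight_kg: float) -> float:
    if kind == "standard":
        return 4.0 + 0.5 * weight_kg
    elif kind == "express":
        return 10.0 + 1.0 * weight_kg
    elif kind == "pickup":
        return 0.0
    raise ValueError(f"unknown shipping kind: {kind}")


# After: one subclass per former branch, each overriding fee().
class Shipping(ABC):
    @abstractmethod
    def fee(self, weight_kg: float) -> float:
        """Return the fee for a parcel of the given weight."""


class Standard(Shipping):
    def fee(self, weight_kg: float) -> float:
        return 4.0 + 0.5 * weight_kg


class Express(Shipping):
    def fee(self, weight_kg: float) -> float:
        return 10.0 + 1.0 * weight_kg


class Pickup(Shipping):
    def fee(self, weight_kg: float) -> float:
        return 0.0


SHIPPING_KINDS = {"standard": Standard, "express": Express, "pickup": Pickup}


def shipping_fee_after(kind: str, weight_kg: float) -> float:
    try:
        shipping = SHIPPING_KINDS[kind]()
    except KeyError:
        raise ValueError(f"unknown shipping kind: {kind}") from None
    return shipping.fee(weight_kg)


print(shipping_fee_after("express", 2.0))
```

Adding a new shipping kind now means adding a subclass and a table entry, without touching the existing branches.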

Strangler Fig Pattern Application

The Strangler Fig pattern is a migration strategy that involves gradually replacing parts of a legacy application with a new system. This approach minimizes risk by allowing the new system to “strangle” the old one, piece by piece, until the legacy application is entirely replaced.

- Identify Areas for Replacement: Analyze the legacy application and identify functional areas that can be replaced with a new implementation. Prioritize areas with high business value or significant technical debt.

- Create a New Application: Develop a new application or microservice that provides the functionality of the selected area. This new application should be designed with modern architecture and technologies.

- Route Traffic: Gradually route traffic from the legacy application to the new application. This can be done using techniques like API gateways, load balancers, or feature flags.

- Decommission the Legacy Code: Once the new application handles all the traffic for a particular area, the corresponding code in the legacy application can be decommissioned.

- Iterate and Repeat: Repeat the process for other functional areas until the entire legacy application is replaced. This iterative approach allows for continuous improvement and adaptation.

For example, consider a legacy e-commerce platform. The initial focus might be on replacing the product catalog functionality. A new microservice is developed for the product catalog, and traffic is gradually shifted from the legacy system to the new service. Once the new service is stable and handles all product catalog requests, the corresponding code in the legacy system can be removed.

This process is repeated for other functionalities like shopping carts, checkout, and user accounts.
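The traffic-routing step can be sketched as a small router that sends a configurable percentage of users to the new service. This is an illustrative sketch only: `legacy_catalog`, `new_catalog`, and `StranglerRouter` are hypothetical names, and in practice the same decision is usually made in an API gateway or load balancer rather than in application code.

```python
import hashlib
from typing import Callable

Handler = Callable[[str], str]


def legacy_catalog(product_id: str) -> str:
    # Stand-in for a call into the legacy product catalog.
    return f"legacy:{product_id}"


def new_catalog(product_id: str) -> str:
    # Stand-in for a call to the new catalog microservice.
    return f"new:{product_id}"


class StranglerRouter:
    """Route a stable share of users to the new implementation."""

    def __init__(self, legacy: Handler, modern: Handler, rollout_percent: int = 0) -> None:
        self.legacy = legacy
        self.modern = modern
        self.rollout_percent = rollout_percent  # 0 = all legacy, 100 = all new

    def _bucket(self, key: str) -> int:
        # Hash the user id so the same user always lands in the same bucket (0..99).
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def handle(self, user_id: str, product_id: str) -> str:
        if self._bucket(user_id) < self.rollout_percent:
            return self.modern(product_id)
        return self.legacy(product_id)
```

Raising `rollout_percent` in steps shifts traffic gradually. Once it reaches 100 and the new service is stable, the legacy handler for that area can be removed.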

Design Patterns for Architecture Improvement

Design patterns provide reusable solutions to commonly occurring problems in software design. Applying design patterns can improve the architecture of a legacy application, making it more maintainable, extensible, and understandable.

- Factory Pattern: The Factory pattern is used to create objects without specifying their concrete classes. This promotes loose coupling and makes it easier to add new object types without modifying existing code. This is especially useful for creating complex objects with various dependencies.

- Strategy Pattern: The Strategy pattern defines a family of algorithms, encapsulates each one, and makes them interchangeable. This allows the application to select an algorithm at runtime, providing flexibility and adaptability. Consider a payment processing system that supports multiple payment methods (credit card, PayPal, etc.). The Strategy pattern can be used to encapsulate each payment method as a separate strategy.

- Observer Pattern: The Observer pattern defines a one-to-many dependency between objects so that when one object changes state, all its dependents are notified and updated automatically. This is useful for implementing event-driven architectures.

- Adapter Pattern: The Adapter pattern converts the interface of a class into another interface clients expect. Adapter lets classes work together that couldn’t otherwise because of incompatible interfaces. This is helpful when integrating legacy components with modern systems.

- Facade Pattern: The Facade pattern provides a simplified interface to a complex subsystem. It simplifies the interaction with a complex set of classes or APIs, making the system easier to use and understand.

For instance, the Facade pattern can be used to simplify access to a complex legacy database system. A facade class can provide a simplified API that hides the complexities of interacting with the database.
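To make the payment example from the Strategy pattern concrete, here is a minimal sketch. The class names and returned messages are hypothetical; a real strategy would call a payment provider instead of returning a string.

```python
from abc import ABC, abstractmethod
from typing import Dict


class PaymentStrategy(ABC):
    @abstractmethod
    def pay(self, amount: float) -> str:
        """Process a payment and return a human-readable confirmation."""


class CreditCardPayment(PaymentStrategy):
    def pay(self, amount: float) -> str:
        return f"charged {amount:.2f} to credit card"


class PayPalPayment(PaymentStrategy):
    def pay(self, amount: float) -> str:
        return f"charged {amount:.2f} via PayPal"


class Checkout:
    """Selects a payment strategy at runtime by its registered name."""

    def __init__(self, strategies: Dict[str, PaymentStrategy]) -> None:
        self._strategies = strategies

    def pay(self, method: str, amount: float) -> str:
        try:
            strategy = self._strategies[method]
        except KeyError:
            raise ValueError(f"unsupported payment method: {method}") from None
        return strategy.pay(amount)


checkout = Checkout({"card": CreditCardPayment(), "paypal": PayPalPayment()})
```

Supporting another payment method only requires registering one more strategy. `Checkout` itself does not change.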

Best Practices for Code Refactoring

Following best practices ensures that refactoring is performed effectively and safely, leading to improved code quality and reduced technical debt.

- Prioritize Tests: Write comprehensive tests before refactoring and rerun them afterwards. Ensure that all existing functionality is preserved and that no new bugs are introduced.

- Small, Frequent Commits: Commit changes frequently to keep track of progress and make it easier to revert changes if necessary. This also helps with collaboration and code reviews.

- Automated Testing: Utilize automated testing tools to run tests frequently and automatically. This helps identify regressions early and reduces the risk of introducing errors.

- Code Reviews: Have other developers review the refactored code to catch potential issues and ensure that the changes align with coding standards and best practices.

- Continuous Integration/Continuous Deployment (CI/CD): Integrate refactoring into the CI/CD pipeline to ensure that refactored code is automatically tested and deployed.

- Document Changes: Document the refactoring changes, including the rationale, the specific changes made, and any potential impacts. This documentation is crucial for understanding the code and for future maintenance.

- Version Control: Use version control to track changes, allowing for easy rollback to previous versions if necessary. This is a crucial safeguard during refactoring.

- Monitor Performance: Monitor the application’s performance after refactoring to ensure that the changes have not negatively impacted performance. Address any performance bottlenecks that may arise.
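One way to put the "Prioritize Tests" practice into effect is a characterization test, which records what the legacy code currently does so that a refactoring can be checked against it. The sketch below assumes a hypothetical legacy routine, `legacy_discount_cents`, with invented discount rules. The expected values are simply the outputs observed before refactoring.

```python
import unittest


def legacy_discount_cents(total_cents: int, is_member: bool) -> int:
    # Hypothetical legacy routine whose behaviour must be preserved.
    if is_member:
        if total_cents > 10000:
            return total_cents * 85 // 100
        return total_cents * 95 // 100
    if total_cents > 10000:
        return total_cents * 90 // 100
    return total_cents


class DiscountCharacterizationTest(unittest.TestCase):
    # (arguments, output recorded from the current implementation)
    CASES = [
        ((5000, False), 5000),
        ((20000, False), 18000),
        ((5000, True), 4750),
        ((20000, True), 17000),
    ]

    def test_recorded_behaviour(self) -> None:
        for args, expected in self.CASES:
            with self.subTest(args=args):
                self.assertEqual(legacy_discount_cents(*args), expected)
```

Running the suite (for example with `python -m unittest`) before and after each refactoring step shows quickly whether behaviour has changed.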

Data Migration Strategies

Migrating data from legacy applications is a critical, often complex, undertaking in any modernization effort. It’s frequently the most time-consuming and error-prone phase, directly impacting the success of the overall migration. A well-defined data migration strategy is essential to minimize disruptions, ensure data integrity, and optimize the transition to the new system. This section explores the challenges, strategies, and techniques involved in successful data migration.

Challenges of Migrating Data from Legacy Systems

Data migration from legacy systems presents numerous challenges, stemming from the age and design of the original systems. These challenges can significantly impact the migration’s timeline, cost, and overall success.

- Data Volume and Complexity: Legacy systems often contain vast amounts of data, frequently in complex, poorly documented formats. This complexity can make it difficult to understand the data structures, relationships, and dependencies. For example, a mainframe system holding financial records might have hundreds of tables, with intricate foreign key relationships, requiring meticulous analysis and planning to migrate.

- Data Quality Issues: Legacy data often suffers from quality problems, including inconsistencies, inaccuracies, incomplete records, and duplicated entries. These issues can stem from a lack of data validation, inconsistent data entry practices, and the evolution of the system over time. Cleaning and correcting these data quality issues can be a significant undertaking, requiring specialized tools and expertise.

- Data Format and Structure Differences: Legacy systems frequently use outdated data formats and structures that are incompatible with modern systems. This includes differences in database technologies (e.g., flat files, older relational databases), data types, and character encodings. Conversion and transformation are essential to map the data from the source system to the target system.

- Downtime and Business Disruption: Data migration can require downtime for the legacy system, impacting business operations. Minimizing downtime matters most for systems that support core business functions. Careful planning and execution are required to limit the impact on users and business processes.

- Security and Compliance: Data migration must adhere to security and compliance regulations. This involves protecting sensitive data during the migration process and ensuring compliance with data privacy laws such as GDPR or CCPA. Security measures, such as encryption and access controls, are crucial throughout the migration process.

- Lack of Documentation and Expertise: Legacy systems often lack adequate documentation, making it difficult to understand the data structures, business rules, and data dependencies. Finding personnel with the necessary expertise to work with these older systems can also be challenging.

Strategies for Minimizing Data Loss and Downtime During Migration

Effective strategies are necessary to mitigate the risks of data loss and downtime during the migration process. Careful planning, robust tools, and proven methodologies are critical to minimizing disruption.

- Pre-Migration Data Assessment and Profiling: Before the migration begins, a thorough assessment of the source data is crucial. This involves profiling the data to understand its structure, quality, and volume. Data profiling tools can be used to identify data quality issues, such as missing values, inconsistencies, and duplicates. This helps in creating a targeted data cleansing and transformation plan.

- Incremental Migration Approach: Instead of migrating all the data at once, an incremental approach, also known as a phased approach, can be employed. This involves migrating data in smaller batches or subsets, allowing for more controlled testing and validation. For instance, migrating data by customer segment, geographic region, or business function can reduce the risk of widespread data loss and enable faster issue resolution.

- Data Replication and Synchronization: Employing data replication and synchronization techniques can minimize downtime. This involves replicating the data to the target system while the legacy system is still operational, and continuing to synchronize changes made in the meantime. Once the target has caught up, a cutover can be performed, where the target system takes over as the primary system. This approach ensures that the target system has a complete and up-to-date copy of the data at cutover.

- Data Transformation and Cleansing during Migration: Incorporating data transformation and cleansing steps directly into the migration process can reduce downtime and improve data quality. This includes using data integration tools to cleanse, transform, and enrich the data as it is migrated. For example, converting data types, standardizing addresses, and removing duplicates can be done during the migration process.

- Thorough Testing and Validation: Comprehensive testing and validation are critical to ensuring data integrity and minimizing data loss. This includes testing the migration process with sample data, performing data validation checks, and comparing the data in the source and target systems. Rigorous testing allows for identifying and resolving issues before the full migration.

- Use of Data Migration Tools: Leveraging specialized data migration tools can automate and streamline the migration process. These tools offer features such as data extraction, transformation, loading (ETL), data validation, and monitoring. Choosing the right tools based on the specific needs of the migration project is crucial.

- Rollback Plan: Having a well-defined rollback plan is essential. If issues arise during the migration, the rollback plan provides a mechanism to revert to the legacy system quickly. This plan should include detailed steps for restoring the legacy system to its pre-migration state. Regular testing of the rollback plan is important.
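The incremental approach can be sketched as a batched copy in which each batch is committed on its own, so that a failure rolls back only the batch in progress and a rerun resumes where the last one stopped. This sketch uses SQLite from the Python standard library as a stand-in for both systems. The `customers` table and its columns are hypothetical, and ids are assumed to be positive integers.

```python
import sqlite3


def migrate_in_batches(source: sqlite3.Connection,
                       target: sqlite3.Connection,
                       batch_size: int = 1000) -> int:
    """Copy customers from source to target in id order; return rows copied."""
    # Resume after the highest id already present in the target (0 if empty).
    last_id = target.execute(
        "SELECT COALESCE(MAX(id), 0) FROM customers"
    ).fetchone()[0]
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        # The connection context manager commits on success and rolls back
        # the current batch if an exception is raised.
        with target:
            target.executemany(
                "INSERT INTO customers (id, name) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]
        copied += len(rows)
```

Because progress is derived from the target itself, calling the function again after an interruption copies only the rows that are still missing.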

Techniques for Data Transformation and Cleansing

Data transformation and cleansing are critical steps in ensuring data quality and compatibility between legacy and modern systems. These techniques address data inconsistencies, improve data accuracy, and prepare data for use in the new system.

- Data Standardization: This involves standardizing data formats, such as dates, addresses, and phone numbers. This ensures consistency and facilitates data integration. For example, converting all dates to a consistent format (e.g., YYYY-MM-DD) or standardizing address formats (e.g., using postal codes) improves data quality.

- Data Cleansing: This involves identifying and correcting data errors, such as invalid values, missing data, and duplicate records. Techniques include data validation rules, data scrubbing, and data de-duplication. Data cleansing tools and scripts can automate these processes.

- Data Enrichment: This involves adding missing information or enhancing existing data with additional attributes. This can include looking up data from external sources, adding demographic information, or calculating new fields based on existing data.

- Data Transformation: This involves converting data from its legacy format to the format required by the new system. This includes data type conversions, schema mapping, and data aggregation. Data transformation tools and scripts are used to automate these conversions. For example, converting currency values from one format to another, or mapping data from legacy tables to new tables.

- Data Deduplication: This process involves identifying and removing duplicate records. This is often done by comparing records based on key attributes and identifying those that match. De-duplication tools can automate this process and ensure data consistency.

- Data Aggregation: This involves summarizing data from multiple records into a single record. This is often used to create reports or provide a consolidated view of the data. Data aggregation can involve summing values, calculating averages, or counting occurrences.
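Standardization and de-duplication can be combined in a small cleansing step such as the sketch below. The record layout (`email`, `signup_date`) and the list of legacy date formats are assumptions made for the example; a real migration would derive them from data profiling.

```python
from datetime import datetime
from typing import Dict, Iterable, List

# Assumed legacy date formats; extend after profiling the source data.
LEGACY_DATE_FORMATS = ("%m/%d/%Y", "%Y%m%d", "%d.%m.%Y")


def standardize_date(value: str) -> str:
    """Convert a legacy date string to ISO 8601 (YYYY-MM-DD)."""
    for fmt in LEGACY_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def cleanse(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Normalize emails and dates, keeping the first record per email."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()
        if email in seen:
            continue  # duplicate of an earlier record
        seen.add(email)
        cleaned.append({
            "email": email,
            "signup_date": standardize_date(rec["signup_date"]),
        })
    return cleaned
```

Raising an error on unrecognized dates, rather than guessing, keeps bad values visible so they can be handled in the cleansing plan.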

Process for Validating Data Integrity After Migration

Validating data integrity after migration is crucial to confirm the success of the migration and ensure the accuracy and reliability of the data in the new system. A comprehensive validation process should include several key steps.

- Data Profiling and Comparison: After migration, perform data profiling on the target system to understand its structure, quality, and volume. Compare the data profiles of the source and target systems to identify any discrepancies. Data profiling tools can automate this comparison process.

- Data Sampling and Spot Checks: Select a sample of records from the migrated data and perform spot checks to verify data accuracy. This can involve manually reviewing data, comparing data with source system records, and validating data against business rules.

- Data Reconciliation: Reconcile key data elements between the source and target systems to ensure data consistency. This includes comparing totals, counts, and other summary metrics to verify that the data has been migrated correctly.

- Data Testing and Validation Rules: Implement data validation rules in the target system to ensure data integrity. These rules can check for data type consistency, data range constraints, and referential integrity. Data validation rules can be integrated into the application or database.

- User Acceptance Testing (UAT): Involve end-users in the validation process. Users can test the data and functionality of the new system to ensure that it meets their needs. This provides valuable feedback on data accuracy and usability.

- Automated Data Validation Tools: Utilize automated data validation tools to streamline the validation process. These tools can automate data comparisons, data quality checks, and data reconciliation. This reduces the time and effort required for data validation.

- Performance Monitoring: Monitor the performance of the target system to ensure that it handles the migrated data efficiently. This includes monitoring query performance, data loading times, and other performance metrics. Performance monitoring can help identify any performance bottlenecks related to the data.
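Data reconciliation can be approximated by comparing a row count and a content hash per table in both systems. The sketch below again uses SQLite as a stand-in, and the function names are hypothetical. It assumes both tables have the same column order and comparable value types, which often requires normalization when the source and target databases differ.

```python
import hashlib
import sqlite3
from typing import Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> Tuple[int, str]:
    """Return (row count, SHA-256 over all rows read in key order)."""
    digest = hashlib.sha256()
    count = 0
    # table and key are interpolated into SQL, so they must be trusted
    # identifiers from the migration plan, never user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def reconcile(source: sqlite3.Connection, target: sqlite3.Connection,
              table: str, key: str) -> bool:
    """True if both tables have the same number of rows and identical content."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)
```

A mismatch only says that the tables differ. Locating the offending rows still needs a finer-grained comparison, for example per key range.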

Modernization of Infrastructure

Migrating legacy applications often necessitates a parallel transformation of the underlying infrastructure. This modernization effort aims to enhance scalability, improve resource utilization, and reduce operational costs. It frequently involves shifting from on-premises data centers to cloud-based environments, alongside leveraging technologies like containerization and cloud-native services. The goal is to create a more agile and resilient infrastructure that can support the evolving needs of the modernized applications.

Steps Involved in Migrating Infrastructure to the Cloud

Migrating infrastructure to the cloud is a phased process, involving careful planning and execution. This process typically includes several key steps.

- Assessment and Planning: This initial phase involves evaluating the existing infrastructure, identifying dependencies, and defining the migration strategy. This includes assessing the current hardware, software, network configurations, and security requirements. The choice of cloud provider and migration approach (rehosting, replatforming, refactoring, etc.) are determined during this stage.

- Proof of Concept (POC): A POC is often conducted to validate the chosen migration strategy and assess the feasibility of the approach. This involves migrating a small subset of the application or a non-critical component to the cloud to test the process and identify potential issues.

- Migration Execution: This is the actual process of moving the infrastructure components to the cloud. This may involve various techniques such as virtual machine (VM) migration, database migration, and application redeployment. The migration is usually performed in stages to minimize disruption.

- Testing and Validation: Thorough testing is essential after migration. This includes functional testing, performance testing, security testing, and disaster recovery testing to ensure that the migrated infrastructure functions as expected.

- Optimization and Monitoring: After successful migration, ongoing optimization and monitoring are crucial. This involves monitoring performance, optimizing resource utilization, and implementing cost-saving measures. Continuous monitoring and alerting systems are established to proactively identify and address any issues.

Benefits of Using Containerization and Orchestration Technologies

Containerization and orchestration technologies offer significant advantages in modernizing infrastructure. They improve resource utilization, streamline deployments, and enhance application portability.

- Improved Resource Utilization: Containerization allows for efficient use of server resources. Multiple containers, each running a specific application or service, can be deployed on a single server, maximizing the use of CPU, memory, and storage.

- Simplified Deployment and Management: Container orchestration platforms, such as Kubernetes, automate the deployment, scaling, and management of containerized applications. This reduces manual effort and minimizes the risk of human error.

- Enhanced Application Portability: Containers package applications and their dependencies into self-contained units. This ensures that applications run consistently across different environments, including development, testing, and production.

- Increased Scalability and Resilience: Orchestration platforms can automatically scale applications based on demand, ensuring high availability and responsiveness. They also provide mechanisms for self-healing, automatically restarting failed containers and distributing workloads across multiple instances.

Examples of How to Leverage Cloud-Native Services to Improve Application Performance and Scalability

Cloud-native services offer numerous opportunities to improve application performance and scalability. These services are designed to be highly available, scalable, and cost-effective.

- Database Services: Cloud providers offer managed database services (e.g., Amazon RDS, Azure SQL Database, Google Cloud SQL) that automate database management tasks such as patching, backups, and scaling. This reduces the operational burden and allows developers to focus on application development.

- Load Balancing and Auto-Scaling: Cloud providers provide load balancing services that distribute traffic across multiple application instances. Auto-scaling automatically adjusts the number of instances based on demand, ensuring optimal performance and resource utilization.

- Content Delivery Networks (CDNs): CDNs (e.g., Amazon CloudFront, Azure CDN, Google Cloud CDN) cache content at edge locations around the world, reducing latency and improving the user experience. This is particularly beneficial for applications that serve static content, such as images, videos, and JavaScript files.

- Serverless Computing: Serverless computing platforms (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) allow developers to run code without managing servers. This can significantly reduce operational costs and improve scalability, as the platform automatically scales resources based on demand.

- Message Queues: Message queue services (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub) enable asynchronous communication between application components. This improves application responsiveness and scalability by decoupling different parts of the application.
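The decoupling that message queues provide can be illustrated in-process with Python's standard `queue.Queue`, used here purely as a stand-in for a managed queue service. The producer enqueues work and moves on, while a separate worker consumes it. The function names and the invoice example are hypothetical.

```python
import queue
import threading
from typing import Iterable, List


def invoice_worker(q: "queue.Queue", results: List[str]) -> None:
    """Consume order ids until a None sentinel arrives."""
    while True:
        order_id = q.get()
        if order_id is None:
            q.task_done()
            break
        results.append(f"invoice-for-{order_id}")  # stand-in for slow work
        q.task_done()


def place_orders(order_ids: Iterable[int]) -> List[str]:
    q: "queue.Queue" = queue.Queue()
    results: List[str] = []
    worker = threading.Thread(target=invoice_worker, args=(q, results))
    worker.start()
    for order_id in order_ids:
        q.put(order_id)  # returns immediately; the producer does not wait
    q.put(None)          # tell the worker to stop
    q.join()             # wait until every queued item has been processed
    worker.join()
    return results
```

With a hosted queue the producer and consumer would run as separate services, but the contract is the same: the producer depends only on the queue, not on the consumer being available.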

Comparison of Cloud Providers and Their Services

This table compares the services offered by three major cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). This comparison is intended to provide a high-level overview and is not exhaustive. Service offerings and pricing are subject to change.

| Feature | Amazon Web Services (AWS) | Microsoft Azure | Google Cloud Platform (GCP) |
|---|---|---|---|
| Compute | Amazon EC2, AWS Lambda, Amazon ECS, Amazon EKS | Azure Virtual Machines, Azure Functions, Azure Kubernetes Service (AKS), Azure Container Instances | Google Compute Engine, Cloud Functions, Google Kubernetes Engine (GKE), Cloud Run |
| Storage | Amazon S3, Amazon EBS, Amazon EFS, Amazon Glacier | Azure Blob Storage, Azure Disks, Azure Files, Azure Archive Storage | Cloud Storage, Persistent Disk, Cloud Filestore, Cloud Storage Nearline/Coldline |
| Database | Amazon RDS, Amazon DynamoDB, Amazon Aurora, Amazon Redshift | Azure SQL Database, Azure Cosmos DB, Azure Database for PostgreSQL/MySQL/MariaDB, Azure Synapse Analytics | Cloud SQL, Cloud Spanner, Cloud Datastore, BigQuery |
| Networking | Amazon VPC, Amazon CloudFront, AWS Direct Connect | Azure Virtual Network, Azure CDN, Azure ExpressRoute | Virtual Private Cloud (VPC), Cloud CDN, Cloud Interconnect |
| Container Orchestration | Amazon ECS, Amazon EKS | Azure Kubernetes Service (AKS), Azure Container Instances | Google Kubernetes Engine (GKE), Cloud Run |

Testing and Quality Assurance

Testing is a critical component of any legacy application migration project. It ensures that the migrated application functions as expected, meets performance requirements, and provides a seamless user experience. Without rigorous testing, organizations risk introducing new defects, disrupting business operations, and damaging their reputation. The following sections detail strategies and plans for ensuring quality throughout the migration process.

Importance of Testing During Legacy Application Migration

Testing validates the integrity of the migrated application, safeguarding against functional regressions, performance bottlenecks, and data corruption. The complexity inherent in legacy systems, coupled with the potential for unforeseen interactions during migration, amplifies the necessity of a robust testing strategy. Comprehensive testing minimizes downtime, reduces the cost of rework, and mitigates the risk of business disruption. A well-defined testing plan provides confidence in the successful transition to the modernized application.

Strategies for Testing Migrated Applications

Testing migrated applications necessitates a multifaceted approach to encompass functionality, performance, and security. Employing a combination of testing methodologies ensures thorough validation.

- Functional Testing: Verifies that the migrated application’s features operate as designed. This includes testing individual modules, end-to-end workflows, and integration points. Testing should cover all use cases, including positive and negative scenarios, to identify potential defects.

- Performance Testing: Assesses the application’s speed, stability, and scalability under various load conditions. Performance testing identifies bottlenecks, resource constraints, and potential performance degradations. Load testing, stress testing, and endurance testing are crucial components of performance validation.

- Security Testing: Evaluates the application’s resilience against security threats and vulnerabilities. Security testing encompasses vulnerability scanning, penetration testing, and code review to identify and remediate security flaws.

- Usability Testing: Evaluates the ease of use and user experience of the migrated application. Usability testing involves gathering feedback from end-users to identify areas for improvement in the user interface and overall usability.

- Regression Testing: Confirms that the migration process has not introduced new defects or broken existing functionality. Regression testing is conducted after each migration phase and after any code changes to ensure the stability and reliability of the application.
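For regression testing of a migrated component, one option is a parity check that feeds the same inputs to the legacy and migrated implementations and reports any differences. The sketch below uses two hypothetical tax functions. The migrated one deliberately rounds instead of truncating, to show the kind of discrepancy such a check can surface.

```python
from typing import Callable, Iterable, List, Tuple


def legacy_tax_cents(amount_cents: int) -> int:
    # Hypothetical legacy behaviour: 8% tax, truncated to whole cents.
    return amount_cents * 8 // 100


def migrated_tax_cents(amount_cents: int) -> int:
    # Hypothetical migrated version that rounds instead of truncating.
    return round(amount_cents * 0.08)


def find_regressions(legacy: Callable[[int], int],
                     migrated: Callable[[int], int],
                     inputs: Iterable[int]) -> List[Tuple[int, int, int]]:
    """Return (input, legacy result, migrated result) for every mismatch."""
    mismatches = []
    for value in inputs:
        old, new = legacy(value), migrated(value)
        if old != new:
            mismatches.append((value, old, new))
    return mismatches
```

Running such a check over recorded production inputs after each migration phase gives an early signal, although it only covers the inputs supplied.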

Comprehensive Testing Plan

A comprehensive testing plan takes a structured approach to testing, encompassing various test types and levels of detail. The plan should outline the scope of testing, test objectives, testing environment, test data requirements, and expected results.

- Unit Tests: Focus on testing individual components or modules of the application in isolation. Unit tests are typically automated and designed to verify the correctness of individual functions, classes, or methods. Unit tests are usually performed by developers during the development phase.

- Integration Tests: Verify the interactions between different modules or components of the application. Integration tests ensure that the different parts of the application work together correctly. These tests are crucial to ensure the proper exchange of data and coordination of functionality between various modules.

- System Tests: Assess the overall functionality and performance of the complete migrated application. System tests simulate real-world user scenarios and verify that the application meets the specified requirements. System testing ensures that all the integrated components work together as expected in a production-like environment.

- User Acceptance Tests (UAT): Involve end-users in testing the migrated application to ensure that it meets their business requirements. UAT provides valuable feedback on usability, functionality, and overall user experience. User acceptance tests are the final step before the application goes live.

Use of Automated Testing Tools

Automated testing tools significantly improve testing efficiency and effectiveness, especially for complex legacy applications. Automation allows for rapid execution of tests, consistent results, and efficient regression testing.

- Functional Testing Tools: Tools like Selenium and TestComplete automate functional testing, enabling the creation of automated test scripts that simulate user interactions with the application. Test frameworks such as JUnit can be used to structure and run these scripts.

- Performance Testing Tools: Tools such as JMeter, LoadRunner, and Gatling are used for performance testing. These tools simulate multiple users accessing the application simultaneously to measure response times, throughput, and resource utilization.

- Test Management Tools: Tools like Jira, TestRail, and Zephyr provide a centralized platform for managing test cases, test execution, and defect tracking. These tools improve collaboration among team members and facilitate the reporting of test results.

- Continuous Integration and Continuous Delivery (CI/CD): Integrating automated testing into a CI/CD pipeline enables frequent testing and rapid feedback, allowing for early detection and resolution of defects.

Risk Management and Mitigation

Legacy application migration is a complex undertaking, fraught with potential pitfalls that can derail projects, increase costs, and negatively impact business operations. Proactive risk management is essential to ensure a smooth and successful transition. This involves identifying potential threats, assessing their likelihood and impact, and developing strategies to minimize their negative effects. Effective risk management also includes a robust contingency plan to address unforeseen issues that may arise during the migration process.

Identifying Potential Risks Associated with Legacy Application Migration

The migration of legacy applications presents a multitude of risks that can jeopardize project success. These risks span various domains, including technical, organizational, and financial aspects. Understanding these potential challenges is the first step toward developing effective mitigation strategies.

- Technical Risks: These risks relate to the technical aspects of the migration process.

  - Compatibility Issues: Incompatibility between the legacy application and the new platform or environment. This can manifest in data format inconsistencies, API mismatches, or code dependencies.

  - Data Loss or Corruption: Risks associated with data migration, including data integrity issues, data transformation errors, and incomplete data transfer.

  - Performance Degradation: The new environment may not provide the same performance levels as the legacy system, leading to slower response times and reduced user experience.

  - Integration Challenges: Difficulties in integrating the migrated application with other systems, leading to workflow disruptions and data silos.

  - Security Vulnerabilities: Introduction of new security vulnerabilities during the migration process, such as improper access controls or insecure configurations.

- Organizational Risks: These risks pertain to the organizational aspects of the migration project.

  - Lack of Skill and Expertise: Insufficient skills or experience within the project team to handle the complexities of the migration process.

  - Resistance to Change: User resistance to the new system, which can hinder adoption and impact productivity.

  - Poor Communication: Inadequate communication between stakeholders, leading to misunderstandings, delays, and conflicts.

  - Scope Creep: Uncontrolled expansion of project scope, resulting in increased costs, delays, and diluted project objectives.

  - Vendor Lock-in: Dependence on a single vendor for migration services, leading to reduced flexibility and increased costs.

- Financial Risks: These risks relate to the financial aspects of the migration project.

  - Cost Overruns: Exceeding the allocated budget due to unforeseen issues, scope creep, or changes in requirements.

  - Project Delays: Delays in the project timeline, leading to increased costs and missed business opportunities.

  - Return on Investment (ROI) Failure: The migrated application may not deliver the expected ROI due to poor performance, user adoption issues, or other factors.

  - Hidden Costs: Unforeseen costs, such as data conversion expenses, training costs, and ongoing maintenance costs.
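Since risks are assessed by likelihood and impact, a simple risk register can rank them by the product of the two. This is a common convention rather than something prescribed here, and the 1 to 5 scales and example values in the sketch below are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: Iterable[Risk]) -> List[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative entries only; real values come from the project's assessment.
register = [
    Risk("Vendor lock-in", likelihood=2, impact=2),
    Risk("Data loss or corruption", likelihood=3, impact=5),
    Risk("Scope creep", likelihood=4, impact=3),
]
```

Sorting the register this way puts mitigation effort on the highest-scoring risks first. The scores are a coarse guide and should be revisited as the project progresses.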

Strategies for Mitigating Risks

Proactive risk mitigation is critical for minimizing the impact of potential issues during legacy application migration. Effective mitigation strategies should be implemented throughout the project lifecycle, from planning to deployment.

- Technical Risk Mitigation:

- Thorough Assessment and Planning: Conduct a comprehensive assessment of the legacy application, the target environment, and the migration requirements. Develop a detailed migration plan that addresses potential technical challenges.

- Pilot Projects and Prototyping: Conduct pilot projects and prototyping to test migration strategies, identify potential issues, and validate the feasibility of the proposed solutions.

- Data Validation and Testing: Implement rigorous data validation and testing procedures to ensure data integrity and accuracy throughout the migration process.

- Performance Testing and Optimization: Conduct performance testing to identify and address performance bottlenecks in the new environment. Optimize the application and infrastructure to ensure optimal performance.

- Security Hardening: Implement security best practices, such as secure coding practices, access controls, and vulnerability scanning, to protect the migrated application from security threats.

- Organizational Risk Mitigation:

- Skills Development and Training: Provide comprehensive training to the project team and end-users to ensure they have the necessary skills and knowledge to support the migration process.

- Change Management: Implement a robust change management program to address user resistance and promote adoption of the new system.

- Communication Plan: Establish a clear and consistent communication plan to keep stakeholders informed of project progress, issues, and decisions.

- Scope Management: Implement strict scope management controls to prevent scope creep and ensure that the project remains within budget and on schedule.

- Vendor Management: Select vendors with proven experience and expertise in legacy application migration. Establish clear service level agreements (SLAs) to ensure accountability.

- Financial Risk Mitigation:

- Realistic Budgeting: Develop a realistic budget that accounts for potential risks and contingencies.

- Project Management Best Practices: Employ project management best practices to track progress, manage risks, and control costs.

- Phased Approach: Implement a phased migration approach to minimize financial risk and allow for adjustments based on lessons learned.

- Contingency Planning: Develop a contingency plan to address unforeseen issues and mitigate financial impact.

- ROI Analysis: Conduct a thorough ROI analysis to ensure that the migration project is financially viable and will deliver the expected benefits.
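The budgeting and ROI items above reduce to a few lines of arithmetic. The following is a minimal sketch, not a full financial model: the function names, the 25% contingency rate, and all monetary figures are hypothetical values chosen for illustration, and the ROI formula is the common simple form (net benefit divided by cost) without discounting.

```python
def budget_with_contingency(base_estimate: float, contingency_rate: float) -> float:
    """Add a contingency reserve (e.g. 0.25 for 25%) to a base cost estimate."""
    if base_estimate < 0 or contingency_rate < 0:
        raise ValueError("estimate and contingency rate must be non-negative")
    return base_estimate * (1 + contingency_rate)


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost. No discounting applied."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_period_years(total_cost: float, annual_net_saving: float) -> float:
    """Years needed for cumulative savings to cover the migration cost."""
    if annual_net_saving <= 0:
        raise ValueError("annual_net_saving must be positive")
    return total_cost / annual_net_saving


# Hypothetical figures: 400,000 base estimate, 25% contingency,
# 200,000 saved per year over a 5-year horizon.
cost = budget_with_contingency(400_000, 0.25)
benefit = 200_000 * 5
print(f"Budget incl. contingency: {cost:,.0f}")
print(f"5-year ROI: {simple_roi(benefit, cost):.0%}")
print(f"Payback period: {payback_period_years(cost, 200_000):.1f} years")
```

A real analysis would usually discount future savings and include ongoing run costs in the target environment; the sketch only shows how the contingency reserve feeds into the ROI and payback figures.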

Contingency Plan for Handling Unexpected Issues During Migration

A well-defined contingency plan is crucial for addressing unexpected issues that may arise during the migration process. The plan should outline the steps to be taken to identify, assess, and resolve these issues, minimizing their impact on the project.

- Issue Identification and Reporting: Establish a clear process for identifying and reporting issues, including a designated point of contact and a reporting template.

- Issue Assessment and Prioritization: Assess the severity and impact of each issue and prioritize them based on their potential to affect the project.

- Escalation Procedures: Define escalation procedures for issues that require immediate attention or that cannot be resolved within the project team.

- Resolution Strategies: Develop specific resolution strategies for common issues, such as data migration errors, performance bottlenecks, and compatibility problems.

- Communication Plan: Communicate the issue, the resolution plan, and the progress to stakeholders.

- Documentation: Document all issues, resolutions, and lessons learned to improve future migration projects.
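The assessment, prioritization, and escalation steps above can be sketched as a small triage routine. This is an illustrative sketch under stated assumptions: the three-level scale, the severity-times-impact score, the `ESCALATION_THRESHOLD` value, and the sample issues are all hypothetical and would be replaced by whatever scheme the project agrees on.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Issue:
    title: str
    severity: Level  # how badly the affected function is broken
    impact: Level    # how much of the project or business is affected

    @property
    def priority_score(self) -> int:
        # Assumed scoring rule: severity multiplied by impact (range 1 to 9).
        return int(self.severity) * int(self.impact)


# Assumed cut-off: issues scoring at or above this go beyond the project team.
ESCALATION_THRESHOLD = 6


def triage(issues: list[Issue]) -> list[tuple[Issue, bool]]:
    """Return issues sorted by descending priority, each with an escalate flag."""
    ranked = sorted(issues, key=lambda issue: issue.priority_score, reverse=True)
    return [(issue, issue.priority_score >= ESCALATION_THRESHOLD) for issue in ranked]


reported = [
    Issue("Slow report query", Level.LOW, Level.MEDIUM),
    Issue("Row count mismatch in orders table", Level.HIGH, Level.HIGH),
    Issue("Date format incompatibility", Level.MEDIUM, Level.HIGH),
]
for issue, escalate in triage(reported):
    action = "escalate" if escalate else "handle in team"
    print(f"{issue.priority_score}: {issue.title} ({action})")
```

Keeping the score and threshold explicit makes the escalation rule easy to review and to record alongside the issue log.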

Common Migration Risks and Their Mitigation Strategies

The following table summarizes common migration risks, their likely impact, and the recommended mitigation strategies.

| Risk | Description | Impact | Mitigation Strategy |
|---|---|---|---|
| Compatibility Issues | Incompatibility between legacy application and new platform. | Data loss, functionality loss, project delays. | Thorough assessment, pilot projects, compatibility testing. |
| Data Loss or Corruption | Data integrity issues during migration. | Data loss, business disruption, regulatory non-compliance. | Data validation, data transformation, comprehensive testing. |
| Performance Degradation | New environment underperforms legacy system. | Slow response times, user dissatisfaction, reduced productivity. | Performance testing, infrastructure optimization, code optimization. |
| Integration Challenges | Difficulties in integrating migrated application with other systems. | Workflow disruptions, data silos, increased costs. | API integration, interface testing, integrated testing. |
| Lack of Skill and Expertise | Insufficient skills within the project team. | Project delays, increased costs, project failure. | Training, vendor support, knowledge transfer. |
| Resistance to Change | User resistance to the new system. | Reduced adoption, decreased productivity, project failure. | Change management, communication, user training. |
| Cost Overruns | Exceeding the allocated budget. | Financial losses, project cancellation. | Realistic budgeting, scope management, contingency planning. |

Team and Skillset Considerations

Legacy application migration is a complex undertaking that necessitates a specialized team possessing a diverse set of skills. The success of the migration hinges on the availability of individuals with the appropriate expertise to navigate the intricacies of the existing system, select and implement the optimal migration strategy, and ensure a smooth transition to the modernized environment. Failing to assemble a well-rounded team can lead to project delays, increased costs, and ultimately, an unsuccessful migration.

Skills and Expertise Required for Successful Legacy Application Migration

The necessary skills span technical proficiency, project management, and a deep understanding of the business context, because the team must handle both the technical complexity and the organizational change that a migration involves.

- Application Architecture Expertise: Deep understanding of the legacy application’s architecture, including its components, dependencies, and interactions. This includes proficiency in the original programming languages, frameworks, and platforms used.

- Programming Languages and Frameworks Proficiency: Expertise in both the legacy and target programming languages and frameworks. For instance, knowledge of COBOL or Fortran for legacy systems and Java, Python, or .NET for modern platforms is crucial.

- Database Management and Data Migration Skills: Proficiency in database technologies, including SQL, NoSQL, and data migration tools. This involves understanding the legacy database schema, mapping data to the new schema, and ensuring data integrity during the migration process. Data migration often involves Extract, Transform, Load (ETL) processes, which require specialized skills in data manipulation and transformation.

- Cloud Computing and Infrastructure as Code (IaC): Knowledge of cloud platforms (AWS, Azure, GCP) and infrastructure as code principles. This enables the team to provision and manage the target infrastructure efficiently.

- DevOps and CI/CD: Familiarity with DevOps practices, including continuous integration and continuous delivery (CI/CD). This ensures a streamlined and automated deployment process.

- Testing and Quality Assurance: Expertise in various testing methodologies, including unit testing, integration testing, and user acceptance testing (UAT). This is essential for verifying the functionality and performance of the migrated application.

- Project Management and Agile Methodologies: Strong project management skills, including planning, scheduling, risk management, and communication. Experience with Agile methodologies is beneficial for iterative development and adapting to changing requirements.

- Security and Compliance: Understanding of security best practices and compliance requirements. This ensures that the migrated application meets security standards and regulatory obligations.

- Business Domain Knowledge: Understanding of the business domain in which the application operates. This allows the team to make informed decisions about the migration strategy and ensure that the migrated application meets business needs.
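As an illustration of the data-integrity work mentioned under data migration skills, the sketch below compares a source and a target table by row count and an order-independent checksum. It is a minimal example and not tied to any particular migration tool: the function names are hypothetical, rows are assumed to be already fetched as tuples, and values are assumed to be normalized to the same Python types on both sides (for example, `1` and `1.0` would hash differently).

```python
import hashlib
from typing import Iterable

_MODULUS = 2 ** 256  # keeps the running sum the same width as a SHA-256 digest


def table_fingerprint(rows: Iterable[tuple]) -> tuple[int, int]:
    """Return (row_count, checksum) for a table.

    Each row is hashed with SHA-256 and the digests are summed modulo 2**256,
    so the result does not depend on row order and duplicate rows still count.
    """
    count = 0
    checksum = 0
    for row in rows:
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        checksum = (checksum + int.from_bytes(digest, "big")) % _MODULUS
        count += 1
    return count, checksum


def validate_migration(source_rows: Iterable[tuple],
                       target_rows: Iterable[tuple]) -> dict[str, bool]:
    """Compare source and target tables by row count and content checksum."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    return {
        "row_count_match": src_count == tgt_count,
        "checksum_match": src_sum == tgt_sum,
    }


# Hypothetical sample data: same rows in a different order, then a corrupted copy.
legacy = [(1, "Alice"), (2, "Bob")]
migrated_ok = [(2, "Bob"), (1, "Alice")]
migrated_bad = [(1, "Alice"), (2, "Bobb")]
print(validate_migration(legacy, migrated_ok))
print(validate_migration(legacy, migrated_bad))
```

A check like this only reports that two tables differ, not where; finding the offending rows would need a key-by-key comparison on top of it.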

Strategies for Building a Skilled Migration Team

Building a skilled migration team involves a multi-faceted approach, combining internal resources, external expertise, and ongoing training. The team structure should be flexible and adaptable to the project’s evolving needs.

- Assess Existing Skills: Conduct a thorough assessment of the existing team’s skills and identify any gaps. This will help determine the areas where training or external expertise is needed.

- Assemble a Core Team: Form a core team with a combination of experienced developers, architects, and project managers. This team will be responsible for leading the migration effort.

- Leverage Internal Resources: Utilize existing team members with relevant skills, such as those familiar with the legacy application or the target platform.

- Engage External Experts: Bring in external consultants or contractors with specialized expertise in areas where the internal team lacks skills, such as cloud migration, data migration, or specific programming languages.

- Establish a Center of Excellence (CoE): Create a CoE dedicated to legacy application migration. This center can provide guidance, best practices, and reusable assets.

- Foster Collaboration: Promote collaboration and knowledge sharing among team members, both internal and external. This can be facilitated through regular meetings, code reviews, and knowledge-sharing platforms.

Guidance on Training and Upskilling Existing Team Members

Upskilling existing team members is a crucial aspect of a successful migration project. It not only ensures that the team possesses the necessary skills but also fosters employee engagement and reduces the reliance on external resources.

- Identify Training Needs: Based on the skills assessment, identify specific training needs for each team member.

- Provide Targeted Training: Offer training programs tailored to the identified needs. This can include online courses, workshops, certifications, and on-the-job training.

- Encourage Hands-on Experience: Provide opportunities for team members to gain hands-on experience with the new technologies and platforms. This can be achieved through pilot projects, proof-of-concepts, or shadowing experienced team members.

- Foster a Learning Culture: Create a culture of continuous learning and knowledge sharing. This can be facilitated through internal training sessions, knowledge-sharing platforms, and mentorship programs.

- Offer Certification Programs: Encourage team members to pursue relevant certifications, such as cloud certifications or specific technology certifications.

- Provide Mentorship Opportunities: Pair experienced team members with less experienced ones to provide guidance and support.

Essential Skills Needed, Including Examples

The following table provides a detailed overview of the essential skills required for legacy application migration, along with specific examples of tools and technologies.

| Skill Category | Skill | Examples |
|---|---|---|
| Programming Languages | Legacy Languages | COBOL, Fortran, RPG, PL/I |
| Programming Languages | Modern Languages | Java, Python, C#, JavaScript |
| Databases | Legacy Databases | IMS, VSAM, DB2 (Mainframe), Oracle (older versions) |
| Databases | Modern Databases | MySQL, PostgreSQL, SQL Server, MongoDB, Cassandra |
| Cloud Computing | Cloud Platforms | AWS, Azure, Google Cloud Platform |
| Cloud Computing | Cloud Services | AWS EC2, Azure Virtual Machines, Google Compute Engine, AWS S3, Azure Blob Storage, Google Cloud Storage |
| DevOps | CI/CD Tools | Jenkins, GitLab CI, Azure DevOps, AWS CodePipeline |
| DevOps | Infrastructure as Code (IaC) | Terraform, AWS CloudFormation, Azure Resource Manager |
| Testing | Testing Frameworks | JUnit, TestNG, Selenium, Cypress |
| Testing | Testing Methodologies | Unit testing, integration testing, system testing, UAT |
| Data Migration | Data Migration Tools | Informatica, Talend, AWS Database Migration Service (DMS), Azure Database Migration Service |
| Data Migration | ETL Processes | Extract, Transform, Load |
| Project Management | Project Management Methodologies | Agile, Scrum, Waterfall |
| Project Management | Project Management Tools | Jira, Asana, Microsoft Project |

Monitoring and Optimization Post-Migration

Post-migration, continuous monitoring and optimization are crucial for ensuring the migrated application functions correctly, performs efficiently, and meets business requirements. This phase is not merely about verifying functionality; it’s about proactively managing the application’s health, identifying bottlenecks, and adapting to changes in the new environment. Neglecting this aspect can lead to performance degradation, increased costs, and ultimately, a failure to realize the benefits of the migration.

Importance of Post-Migration Monitoring

Effective monitoring post-migration is paramount for several reasons. It provides insights into the application’s behavior, allowing for proactive issue resolution and performance tuning.

- Performance Baseline Establishment: Monitoring establishes a baseline of performance metrics in the new environment. This baseline serves as a reference point for identifying deviations and potential problems. For example, a significant increase in response times after a database migration would immediately trigger investigation.

- Early Issue Detection: Monitoring tools enable the early detection of issues that might not be immediately apparent to end-users. This includes identifying slow queries, resource contention, or unexpected errors, enabling swift intervention before they impact business operations.

- Resource Optimization: Monitoring data helps identify areas where resources are underutilized or over-provisioned. This information is crucial for optimizing infrastructure costs and ensuring efficient resource allocation. For instance, if CPU utilization consistently remains low, the infrastructure can be scaled down, saving costs.

- Validation of Migration Success: Monitoring confirms that the migration was successful in terms of performance and stability. It provides tangible evidence of the improvements achieved or areas that require further refinement.

- Compliance and Security: Monitoring helps ensure compliance with security policies and regulatory requirements. It can detect unusual activity, potential security breaches, and unauthorized access attempts.
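The baseline idea described above can be sketched as a simple statistical check: record response times during a known-good period, then flag later measurements that fall far outside that range. This is a minimal sketch under assumptions, not a replacement for a monitoring product: the function names, the sample response times, and the three-standard-deviation threshold are hypothetical choices.

```python
from statistics import mean, stdev


def build_baseline(samples: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) of known-good measurements."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to build a baseline")
    return mean(samples), stdev(samples)


def is_deviation(value: float, baseline: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the mean."""
    average, spread = baseline
    if spread == 0:
        # All baseline samples were identical, so any change is a deviation.
        return value != average
    return abs(value - average) / spread > threshold


# Hypothetical response times in milliseconds, collected right after cut-over.
baseline = build_baseline([100, 110, 90, 105, 95])
for observed in (104, 180):
    status = "investigate" if is_deviation(observed, baseline) else "ok"
    print(f"{observed} ms: {status}")
```

The same check applies to other metrics such as CPU utilization or error rates; a consistently low CPU reading relative to capacity would be handled by a separate lower-bound rule rather than by this deviation test.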

Strategies for Optimizing Application Performance

Optimizing application performance in the new environment requires a multi-faceted approach, encompassing various aspects of the application and its infrastructure.

- Performance Testing: Conduct thorough performance testing, including load testing and stress testing, to simulate real-world user traffic and identify performance bottlenecks. This helps determine the application’s capacity and identify areas for improvement. For example, simulating peak load during a Black Friday sale can expose weaknesses in the system’s ability to handle the surge in transactions.