Workload discovery and dependency mapping are critical processes in IT infrastructure management. Understanding the operational landscape of an IT environment is a prerequisite for effective resource allocation, performance optimization, and strategic decision-making. This analysis examines the methodologies, tools, and challenges involved in uncovering and visualizing the interconnections among applications, services, and underlying infrastructure components.

This document explores the fundamental goals of workload discovery, covering data gathering techniques, application and service identification, workload profiling, and dependency mapping approaches. It then analyzes direct and indirect relationships, examines visualization methods, and discusses tools and technologies. Finally, it presents solutions to common challenges and explains how dependency mapping supports migration, optimization, and the continuous maintenance of accurate infrastructure representations.

Defining Workload Discovery

Workload discovery is a critical process in IT infrastructure management, providing the foundational intelligence necessary for effective resource allocation, performance optimization, and risk mitigation. It involves systematically identifying and characterizing the computational tasks, processes, and data flows that constitute an organization’s IT operations. This understanding is essential for making informed decisions about infrastructure design, capacity planning, and application modernization.

Fundamental Goals of Workload Discovery

The primary objectives of workload discovery are multifaceted, aiming to provide a comprehensive understanding of the IT environment’s operational characteristics. These goals include:

- Identifying and cataloging all active workloads: This involves a complete inventory of applications, services, and processes running within the IT infrastructure. This includes both on-premise and cloud-based resources.

- Characterizing workload resource consumption: Understanding how each workload utilizes system resources, such as CPU, memory, storage, and network bandwidth. This helps to identify resource bottlenecks and optimize performance.

- Mapping dependencies between workloads: Determining the relationships between different applications and services, including which components rely on others. This is crucial for understanding the impact of changes or failures.

- Establishing baseline performance metrics: Capturing historical data on workload performance to enable trend analysis, capacity planning, and proactive issue resolution.

- Facilitating informed decision-making: Providing the necessary data and insights to support strategic initiatives such as cloud migration, application modernization, and infrastructure optimization.

Scope of Workload Discovery

The scope of workload discovery encompasses a wide range of IT components and their interactions. A thorough discovery process considers the following elements:

- Servers: This includes both physical and virtual servers, encompassing operating systems, hardware specifications, and software installed. Data collection involves CPU utilization, memory consumption, disk I/O, and network traffic.

- Applications: Identifying all applications running within the environment, including their versions, configurations, and associated processes. Understanding application dependencies is key. Consider a financial institution; workload discovery would reveal the core banking application, its associated middleware, and databases.

- Databases: Mapping database instances, schemas, and data volumes. This involves understanding database server resources, query performance, and data storage requirements. For instance, the discovery process might reveal a large Oracle database supporting the core banking application, along with its associated indexes and storage allocation.

- Network Infrastructure: Assessing network devices (routers, switches, firewalls), network topology, and network traffic patterns. This includes understanding network latency, bandwidth utilization, and communication pathways between applications and servers.

- Storage Systems: Identifying storage devices, storage capacity, and data access patterns. This involves analyzing storage performance metrics, such as I/O operations per second (IOPS) and latency. For example, discovery might reveal a high-performance storage array serving the database.

- Operating Systems: Detailed information on the operating systems used, including their versions, patches, and configurations. This is crucial for identifying potential security vulnerabilities and compatibility issues.

- Middleware: Understanding the middleware components, such as application servers, message queues, and integration platforms, which are crucial for application connectivity. For example, the discovery might identify a WebLogic application server hosting the web front-end for the core banking application.

- Cloud Resources: If the infrastructure includes cloud resources (e.g., AWS, Azure, GCP), workload discovery also needs to include the assessment of cloud-based virtual machines, storage, and networking configurations.

- User Activity: Monitoring user activity and application usage patterns to understand how workloads are utilized by end-users. This provides context for performance analysis and capacity planning.

Benefits of Understanding the Workloads

A thorough understanding of workloads provides significant benefits, leading to more efficient and resilient IT operations. These benefits include:

- Improved Resource Optimization: By identifying resource bottlenecks and underutilized resources, organizations can optimize resource allocation, reducing costs and improving performance. For example, identifying that a particular server is consistently over-provisioned allows for re-allocation of resources.

- Enhanced Performance: Understanding workload behavior allows for proactive performance tuning and optimization. This leads to improved application responsiveness and user experience.

- Reduced Downtime: By mapping dependencies and identifying critical components, organizations can mitigate the impact of failures and reduce downtime.

- Simplified Migration and Modernization: Workload discovery provides the necessary information to plan and execute cloud migrations or application modernization projects.

- Better Capacity Planning: Understanding workload growth and resource consumption patterns allows for accurate capacity planning, preventing performance degradation and ensuring scalability.

- Improved Security Posture: Identifying and tracking all software and configurations improves security posture, enabling faster identification and mitigation of vulnerabilities.

- Cost Savings: By optimizing resource utilization and reducing downtime, organizations can achieve significant cost savings.

- Risk Mitigation: By identifying dependencies and potential points of failure, organizations can mitigate risks associated with infrastructure changes and outages. For example, understanding that a specific application relies on a particular database server helps to prioritize its protection.

Gathering Data

The accuracy and completeness of workload discovery and dependency mapping depend directly on the quality of the data gathered. A clear understanding of data sources and collection methods is therefore crucial for a reliable assessment of the IT infrastructure. This section details the primary information sources and the methods used to extract pertinent data for workload analysis.

Information Sources

Workload information resides in diverse locations within an IT environment. Identifying and accessing these sources is the first step in data gathering.

- Logs: System logs, application logs, and security logs provide valuable insights into resource utilization, application behavior, and inter-component communication. Log data includes timestamps, event details, error messages, and performance metrics. For example, web server logs often contain information on HTTP requests, response times, and client IP addresses, which can be used to identify dependencies and traffic patterns.

- Monitoring Tools: Systems monitoring tools collect real-time performance data, including CPU utilization, memory usage, disk I/O, network bandwidth, and application response times. These tools often provide historical data and dashboards for visualizing performance trends. Examples include tools like Prometheus, Nagios, and Datadog.

- Configuration Files: Configuration files define how applications and systems are set up. Analyzing these files can reveal dependencies, network configurations, and resource allocations. Configuration management tools like Ansible or Chef can be used to extract and analyze configuration data systematically.

- Network Traffic Analysis: Network traffic analysis tools capture and analyze network packets to identify communication patterns between applications and systems. This method can reveal dependencies that are not apparent from logs or monitoring tools. Tools like Wireshark or tcpdump can be used for packet capture and analysis.

- Metadata Repositories: Databases and other metadata repositories store information about IT assets, including hardware specifications, software versions, and application owners. This data can be used to enrich the workload discovery process. Examples include CMDBs (Configuration Management Databases) and asset management systems.
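As a minimal sketch of how log data can expose callers and traffic patterns, the following example counts requests per client and path. It assumes access logs in the Common Log Format; the sample lines, IP addresses, and paths are made up for illustration, and other log formats would need a different pattern.

```python
import re
from collections import Counter

# Matches the start of a Common Log Format line: client IP, timestamp,
# request line, and status code. This format is an assumption.
LOG_PATTERN = re.compile(
    r'^(?P<client>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)

def count_callers(lines):
    """Count requests per (client IP, request path) pair."""
    callers = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        callers[(match["client"], match["path"])] += 1
    return callers

# Hypothetical sample lines, not taken from a real system.
SAMPLE_LOG = [
    '10.0.0.5 - - [12/Mar/2024:10:00:01 +0000] "GET /api/stock HTTP/1.1" 200 512',
    '10.0.0.5 - - [12/Mar/2024:10:00:02 +0000] "GET /api/stock HTTP/1.1" 200 498',
    '10.0.0.9 - - [12/Mar/2024:10:00:03 +0000] "POST /api/orders HTTP/1.1" 201 87',
    'malformed line',
]

callers = count_callers(SAMPLE_LOG)
for (client, path), hits in callers.most_common():
    print(f"{client} -> {path}: {hits} requests")
```

Each (client, path) pair with a non-trivial request count is a candidate dependency of that client on the web server, to be confirmed against other sources.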

Data Collection Methods

Data collection methods can be broadly categorized into agent-based and agentless approaches. Each method has its own advantages and disadvantages, impacting the trade-off between data granularity, deployment complexity, and resource consumption.

- Agent-Based Approach: This method involves installing software agents on the target systems. These agents collect data locally and transmit it to a central collection point. Agent-based approaches generally provide more detailed and granular data, as the agents can directly access system resources and monitor application-specific metrics.

- Agentless Approach: Agentless data collection does not require the installation of software agents on the target systems. Instead, data is collected remotely using protocols such as SNMP, SSH, or WMI. This approach is often easier to deploy, especially in large environments, as it minimizes the need for software installation and maintenance. However, it may provide less detailed data and may be limited by network connectivity and access permissions.
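To illustrate what agentless collection can yield, the sketch below parses socket listings of the kind produced by `ss -tn`, which could be gathered remotely (for example over SSH). The sample output is hypothetical and the remote collection step is left out. Treating the local address as the source of each connection is a simplifying assumption.

```python
from collections import Counter

# Hypothetical output of `ss -tn`, as it might be captured from a host
# over SSH. Real output varies by iproute2 version and options.
SAMPLE_SS_OUTPUT = """\
State  Recv-Q  Send-Q  Local Address:Port   Peer Address:Port
ESTAB  0       0       10.0.0.5:44321       10.0.0.20:5432
ESTAB  0       0       10.0.0.5:44322       10.0.0.20:5432
ESTAB  0       0       10.0.0.5:51000       10.0.0.30:6379
"""

def parse_connections(ss_output):
    """Return a Counter of (local_ip, peer_ip, peer_port) for ESTAB sockets."""
    edges = Counter()
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 5 or fields[0] != "ESTAB":
            continue  # skip the header and non-established sockets
        local_ip, _local_port = fields[3].rsplit(":", 1)
        peer_ip, peer_port = fields[4].rsplit(":", 1)
        edges[(local_ip, peer_ip, int(peer_port))] += 1
    return edges

connections = parse_connections(SAMPLE_SS_OUTPUT)
for (src, dst, port), count in sorted(connections.items()):
    print(f"{src} -> {dst}:{port} ({count} connections)")
```

A single snapshot only shows connections open at that moment, so repeated sampling over time is usually needed before drawing conclusions.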

Comparison of Data Collection Methods

The following table provides a comparison of the pros and cons of agent-based and agentless data collection methods.

| Feature | Agent-Based | Agentless |
|---|---|---|
| Data Granularity | High: Provides detailed, real-time data from local system resources and applications. | Lower: Data collection relies on remote access protocols, potentially limiting the depth of data collected. |
| Deployment Complexity | Higher: Requires software installation, configuration, and maintenance on each target system. | Lower: Easier to deploy, as it does not require software installation on the target systems. |
| Resource Consumption | Moderate: Agents consume system resources (CPU, memory, disk I/O) for data collection and transmission. | Lower: Typically consumes fewer resources on target systems, as data is collected remotely. |
| Security Implications | Increased: Requires careful management of agent security and access controls. | Potentially Lower: Depends on the security of the remote access protocols used. |
| Scalability | Can be challenging in large environments due to the overhead of managing agents. | Generally more scalable, as it reduces the need for individual agent installations. |
| Maintenance | Requires ongoing agent updates, configuration changes, and troubleshooting. | Reduced maintenance overhead, as there are no agents to manage. |

Application and Service Identification

Identifying applications and services is a critical step in workload discovery and dependency mapping. This phase involves systematically cataloging the software components that comprise the IT infrastructure, understanding their functionalities, and pinpointing their interactions. This process provides a foundational understanding of the digital ecosystem, allowing for informed decisions about resource allocation, performance optimization, and risk mitigation.

Application and Service Identification Process

The identification process leverages data collected during the gathering phase, including network traffic analysis, configuration data, and performance metrics. It involves analyzing this data to distinguish individual applications and services from the raw data. The process often employs a combination of automated tools and manual validation to ensure accuracy. This includes methods such as pattern matching, signature analysis, and behavioral analysis.
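The pattern-matching step can be sketched as follows. This is a deliberately simple illustration: the signature table, the service labels, and the sample command lines are hypothetical, and real tools combine many more signals. Anything left as "unknown" is a candidate for manual validation.

```python
# Hypothetical signature table: substrings of a process command line mapped
# to a service label. Real tools use far richer signatures.
SIGNATURES = [
    ("nginx", "web server"),
    ("postgres", "database server"),
    ("redis-server", "caching server"),
    ("rabbitmq", "message queue"),
]

def identify_service(command_line):
    """Return the first matching service label, or 'unknown'."""
    lowered = command_line.lower()
    for needle, label in SIGNATURES:
        if needle in lowered:
            return label
    return "unknown"

# Hypothetical process command lines gathered during data collection.
PROCESSES = [
    "nginx: master process /usr/sbin/nginx",
    "/usr/lib/postgresql/15/bin/postgres -D /var/lib/postgresql/15/main",
    "/usr/bin/redis-server 127.0.0.1:6379",
    "/opt/custom/billing-daemon --config /etc/billing.conf",
]

identified = {cmd: identify_service(cmd) for cmd in PROCESSES}
for cmd, label in identified.items():
    print(f"{label:16} {cmd}")
```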

Methods for Recognizing Service Dependencies and Relationships

Determining service dependencies and relationships is crucial for understanding how applications interact and how failures in one service can impact others. This involves tracing communication patterns, analyzing configuration files, and examining code.

- Network Traffic Analysis: Analyzing network traffic to identify communication patterns between services. Tools like Wireshark or tcpdump can be used to capture and analyze network packets. The analysis reveals which services are communicating with each other and the volume of data exchanged. This can be visualized using network diagrams that illustrate dependencies.

- Configuration File Analysis: Examining configuration files (e.g., .ini, .xml, .yaml files) to identify dependencies. These files often contain information about the services a particular application relies on, such as database connection strings, API endpoints, and other service configurations. Automated scripts or tools can parse these files and extract dependency information.

- Code Analysis: Examining the source code of applications to identify dependencies. This is particularly useful for identifying internal dependencies that are not visible through network traffic analysis. Static code analysis tools can identify function calls, class instantiations, and other code constructs that indicate dependencies. For example, a function call within one application to a function in a library indicates a dependency.

- Log Analysis: Examining application logs to identify interactions between services and error messages that can reveal dependencies. Centralized logging systems aggregate logs from various sources, facilitating dependency identification. Analyzing log entries can reveal the sequence of events, errors, and service calls that occur during application execution. For instance, if a service repeatedly logs connection errors to another service, it suggests a dependency.

- Service Discovery Mechanisms: Utilizing service discovery mechanisms, such as DNS, Consul, or Kubernetes, to identify and map services. These mechanisms maintain a registry of available services and their locations. This allows applications to dynamically discover and connect to other services.

- Dependency Mapping Tools: Employing specialized dependency mapping tools that automate the process of identifying and visualizing dependencies. These tools typically integrate with various data sources, such as network traffic, configuration files, and performance metrics. They provide interactive visualizations that illustrate the relationships between services.
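Configuration file analysis, one of the methods listed above, can be sketched with the standard library alone. The example assumes an INI file whose values include URL-style connection strings; the section names, hostnames, and ports are invented for illustration.

```python
import configparser
from urllib.parse import urlsplit

# Hypothetical application configuration; hostnames and ports are made up.
SAMPLE_INI = """\
[database]
url = postgresql://app_user@db01.internal:5432/orders

[cache]
url = redis://cache01.internal:6379/0

[logging]
level = INFO
"""

def extract_dependencies(ini_text):
    """Return (section, scheme, host, port) for every URL-like value."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    found = []
    for section in parser.sections():
        for _key, value in parser.items(section):
            parts = urlsplit(value)
            # Only values with both a scheme and a host look like endpoints.
            if parts.scheme and parts.hostname:
                found.append((section, parts.scheme, parts.hostname, parts.port))
    return found

dependencies = extract_dependencies(SAMPLE_INI)
for section, scheme, host, port in dependencies:
    print(f"[{section}] depends on {scheme} service at {host}:{port}")
```

Values that are not URLs, such as the logging level here, are ignored. Connection strings in other formats would need their own parsing rules.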

Common Application and Service Types

Identifying the different types of applications and services present in an infrastructure is a key part of workload discovery. Each type has unique characteristics and dependencies. The following list provides examples of common application and service types.

- Web Servers: Applications that serve web content. Examples include Apache, Nginx, and Microsoft IIS. These servers typically depend on other services such as databases and application servers.

- Application Servers: Servers that host and execute application logic. Examples include Tomcat, JBoss, and WebLogic. These servers often depend on databases, message queues, and other backend services.

- Database Servers: Servers that store and manage data. Examples include MySQL, PostgreSQL, Oracle, and Microsoft SQL Server. These servers are often critical infrastructure components, with many applications depending on them.

- Message Queues: Systems that facilitate asynchronous communication between applications. Examples include RabbitMQ, Kafka, and ActiveMQ. These services enable decoupling of applications and improve scalability.

- Caching Servers: Systems that store frequently accessed data in memory to improve performance. Examples include Redis and Memcached. These servers reduce the load on other services, such as databases.

- Load Balancers: Systems that distribute network traffic across multiple servers to improve availability and performance. Examples include HAProxy and AWS Elastic Load Balancer. Load balancers depend on the services they are balancing, such as web servers and application servers.

- Container Orchestration: Platforms that manage and orchestrate containerized applications. Examples include Kubernetes and Docker Swarm. These platforms manage the lifecycle of containerized services and their dependencies.

- Monitoring and Logging Services: Systems that collect and analyze data about the performance and health of applications and infrastructure. Examples include Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana). These services depend on the applications and infrastructure they monitor.

- Authentication and Authorization Services: Services that handle user authentication and authorization. Examples include Active Directory, LDAP, and OAuth providers. These services are critical for security and access control.

- API Gateways: Systems that manage and control access to APIs. Examples include Apigee and Kong. API gateways depend on the APIs they manage and may also depend on authentication and authorization services.

Workload Profiling and Characterization

Workload profiling and characterization are crucial steps in understanding the behavior of identified applications and services. This process allows for a detailed analysis of resource consumption patterns, enabling informed decisions regarding infrastructure sizing, performance optimization, and capacity planning. The goal is to create a comprehensive profile that captures the dynamic nature of each workload, providing insights into its operational characteristics.

Process Design for Workload Profiling and Characterization

The design of a workload profiling and characterization process requires a structured approach, involving data collection, analysis, and categorization. This process should be automated where possible to ensure consistent and reliable data capture.

- Data Collection Strategy: Establish a robust data collection mechanism. This includes identifying the necessary monitoring tools and the frequency of data sampling. The chosen tools should have minimal impact on the workload’s performance.

- Data Storage and Management: Implement a system for storing the collected data, ensuring scalability and efficient retrieval. Time-series databases are often employed due to their suitability for storing performance metrics.

- Data Analysis and Aggregation: Analyze the collected data to identify trends, patterns, and anomalies. This may involve calculating averages, percentiles, and other statistical measures. Data aggregation over time (e.g., hourly, daily) is often necessary to identify long-term trends.

- Workload Categorization: Define a categorization scheme based on the observed resource consumption patterns. This could involve grouping workloads based on their resource intensity (e.g., CPU-bound, I/O-bound).

- Reporting and Visualization: Generate reports and visualizations to communicate the findings effectively. Dashboards can be used to monitor workload performance in real-time.
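The analysis and aggregation step might look like the sketch below, which groups raw samples into hourly buckets and computes an average and a 95th percentile for each. The sample values are invented, and the nearest-rank percentile is one of several common definitions, chosen here for simplicity.

```python
import math
from collections import defaultdict
from datetime import datetime
from statistics import mean

def percentile(values, pct):
    """Nearest-rank percentile (an assumed definition, one of several)."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def hourly_profile(samples):
    """Aggregate (ISO timestamp, value) samples into per-hour statistics."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        hour = datetime.fromisoformat(timestamp).replace(
            minute=0, second=0, microsecond=0
        )
        buckets[hour].append(value)
    return {
        hour.isoformat(): {
            "avg": mean(values),
            "p95": percentile(values, 95),
            "count": len(values),
        }
        for hour, values in sorted(buckets.items())
    }

# Hypothetical CPU utilization samples in percent.
SAMPLES = [
    ("2024-03-12T10:05:00", 20.0),
    ("2024-03-12T10:20:00", 40.0),
    ("2024-03-12T10:35:00", 60.0),
    ("2024-03-12T10:50:00", 80.0),
    ("2024-03-12T11:05:00", 10.0),
    ("2024-03-12T11:20:00", 30.0),
]

profile = hourly_profile(SAMPLES)
for hour, stats in profile.items():
    print(hour, stats)
```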

Key Metrics for Workload Characterization

A comprehensive workload profile requires the monitoring of several key metrics. These metrics provide a detailed view of resource utilization and can be used to identify bottlenecks and areas for optimization. The metrics should be measured at various levels, including the operating system, hypervisor (if applicable), and application level.

- CPU Usage: This metric measures the amount of time the CPU is actively processing instructions. High CPU usage can indicate a CPU-bound workload. Monitoring CPU usage is crucial for understanding the computational demands of each workload.

  - Metric Example: Percentage of CPU utilization, average CPU load, and CPU wait time.

- Memory Usage: This metric tracks the amount of physical memory used by a workload. High memory usage can lead to swapping, which can significantly degrade performance. Understanding memory consumption is essential for ensuring sufficient memory allocation.

  - Metric Example: Total memory used, memory available, memory used by specific processes, and swap usage.

- Network I/O: This metric measures the amount of data transmitted and received over the network. High network I/O can indicate a network-bound workload. Monitoring network traffic is important for identifying potential network congestion.

  - Metric Example: Bytes sent/received, packets sent/received, network latency, and error rates.

- Disk I/O: This metric measures the rate at which data is read from and written to disk. High disk I/O can indicate a disk-bound workload. Analyzing disk I/O is crucial for identifying storage bottlenecks.

  - Metric Example: Disk read/write operations per second (IOPS), read/write throughput (MB/s), and disk latency.
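Rate metrics such as IOPS and throughput are typically derived from cumulative counters sampled at two points in time. The sketch below shows that arithmetic under assumed inputs: the `DiskCounters` structure and the sample numbers are hypothetical, and throughput uses decimal megabytes (1 MB = 1,000,000 bytes).

```python
from dataclasses import dataclass

@dataclass
class DiskCounters:
    """Cumulative disk counters at one point in time (hypothetical shape)."""
    timestamp: float  # seconds
    read_ops: int
    write_ops: int
    read_bytes: int
    write_bytes: int

def disk_rates(earlier, later):
    """Derive IOPS and throughput (MB/s) from two counter samples."""
    interval = later.timestamp - earlier.timestamp
    if interval <= 0:
        raise ValueError("samples must be in chronological order")
    ops = (later.read_ops - earlier.read_ops) + (later.write_ops - earlier.write_ops)
    transferred = (later.read_bytes - earlier.read_bytes) + (
        later.write_bytes - earlier.write_bytes
    )
    return {
        "iops": ops / interval,
        "throughput_mb_s": transferred / interval / 1_000_000,
    }

# Two hypothetical samples taken 10 seconds apart.
first = DiskCounters(0.0, 1_000, 500, 10_000_000, 5_000_000)
second = DiskCounters(10.0, 3_000, 1_500, 50_000_000, 25_000_000)

rates = disk_rates(first, second)
print(rates)
```

With these numbers, 3,000 operations and 60,000,000 bytes over 10 seconds give 300 IOPS and 6 MB/s.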

Categorization of Workloads Based on Behavior

Categorizing workloads based on their behavior provides a structured approach to understanding their characteristics and resource requirements. Workloads can be grouped into different categories based on their dominant resource consumption patterns. This categorization enables targeted optimization efforts and facilitates resource allocation decisions.

- CPU-Bound Workloads: These workloads primarily consume CPU resources. Their performance is limited by the processing power of the CPU.

  - Example: Scientific simulations, video encoding, and computationally intensive applications.

  - Characterization: High CPU utilization, relatively low memory and I/O usage.

- Memory-Bound Workloads: These workloads primarily consume memory resources. Their performance is limited by the available memory.

  - Example: Database servers with large datasets, in-memory data grids, and applications with large working sets.

  - Characterization: High memory utilization, potential for swapping if the available memory is insufficient.

- I/O-Bound Workloads: These workloads primarily consume disk or network I/O resources. Their performance is limited by the speed of the I/O devices or network bandwidth.

  - Example: Database servers with frequent disk reads/writes, file servers, and applications that heavily access network resources.

  - Characterization: High disk or network I/O, potentially lower CPU and memory utilization.

- Network-Bound Workloads: These workloads are primarily constrained by network bandwidth or latency.

  - Example: Web servers serving large files, applications that transfer large amounts of data over the network.

  - Characterization: High network utilization, potentially lower CPU, memory, and disk utilization.

- Mixed Workloads: Many workloads exhibit a combination of the above characteristics.

  - Example: Web applications that perform both CPU-intensive calculations and database queries.

  - Characterization: A combination of resource consumption patterns, requiring a holistic analysis.
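A rule-based version of this categorization can be sketched as below. The 70% threshold, the resource keys, the "unconstrained" label, and the sample profiles are all assumptions made for illustration. A real scheme would be tuned to the environment and would likely look at percentiles rather than a single average.

```python
# Maps a resource key to the category used when that resource dominates.
CATEGORY_LABELS = {
    "cpu": "CPU-bound",
    "memory": "memory-bound",
    "disk_io": "I/O-bound",
    "network": "network-bound",
}

def categorize(profile, threshold=70.0):
    """Classify a workload from average utilization percentages per resource.

    The threshold is a hypothetical cut-off, not an established standard.
    """
    saturated = [
        resource
        for resource in CATEGORY_LABELS
        if profile.get(resource, 0.0) >= threshold
    ]
    if len(saturated) == 1:
        return CATEGORY_LABELS[saturated[0]]
    if len(saturated) > 1:
        return "mixed"
    return "unconstrained"  # hypothetical label for lightly loaded workloads

# Hypothetical workload profiles (average utilization in percent).
WORKLOADS = {
    "video-encoder": {"cpu": 92.0, "memory": 30.0, "disk_io": 10.0, "network": 5.0},
    "in-memory-grid": {"cpu": 25.0, "memory": 88.0, "disk_io": 5.0, "network": 20.0},
    "web-app": {"cpu": 75.0, "memory": 40.0, "disk_io": 80.0, "network": 30.0},
    "idle-batch-host": {"cpu": 5.0, "memory": 15.0, "disk_io": 2.0, "network": 1.0},
}

categories = {name: categorize(profile) for name, profile in WORKLOADS.items()}
for name, category in categories.items():
    print(f"{name}: {category}")
```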

Dependency Mapping Techniques

Dependency mapping is a crucial process in workload discovery, providing a comprehensive understanding of the relationships between applications, services, and their underlying infrastructure. Accurately identifying these dependencies is essential for effective migration planning, performance optimization, and incident response. This section explores the methodologies employed in dependency mapping, specifically focusing on the contrasting approaches of automation and manual techniques.

Automated vs. Manual Dependency Mapping

The choice between automated and manual dependency mapping significantly impacts the accuracy, efficiency, and scalability of the process. Automated methods leverage specialized tools to discover and visualize dependencies, while manual approaches rely on human expertise and investigation. Both methodologies have distinct advantages and disadvantages, making the selection dependent on the specific project requirements and constraints.

Comparison of Automated and Manual Dependency Mapping

The following table provides a comparative analysis of automated and manual dependency mapping techniques, highlighting their key attributes.

| Feature | Automated Dependency Mapping | Manual Dependency Mapping | Notes |
|---|---|---|---|
| Speed and Efficiency | Significantly faster; can process large, complex environments quickly. | Slower; time-consuming, especially for large-scale environments. | Automated tools reduce the time required for discovery by automating data collection and analysis. |
| Accuracy | Can be highly accurate, depending on the tool and configuration; prone to errors if the tool is not configured correctly or if the environment is complex. | Accuracy is highly dependent on the expertise and thoroughness of the individuals involved; can be prone to human error and incomplete information. | Accuracy depends on the quality of data collected and the ability to interpret that data correctly. |
| Scalability | Highly scalable; can handle large and complex environments with ease. | Scalability is limited; becomes increasingly challenging and resource-intensive as the environment grows. | Automated solutions are designed to scale to meet the demands of large and evolving IT landscapes. |
| Cost | Requires investment in tools and licensing; ongoing maintenance costs. | Lower initial cost, but can become expensive because of the staff time required. | The overall cost should consider the time, resources, and potential for errors. |
| Granularity | Provides detailed dependency information at various levels, including application, service, and infrastructure. | Granularity can vary, depending on the skill of the team and the tools available. | Automated tools offer a more comprehensive view of dependencies. |
| Maintenance | Requires ongoing maintenance of the tools, including updates and configuration changes. | Requires ongoing documentation and updates as the environment changes. | Both methods need maintenance to reflect changes in the environment. |

Tools for Automating Dependency Mapping

Several types of tools are available to automate the dependency mapping process, each employing different techniques to discover and visualize dependencies. These tools typically combine data collection, analysis, and visualization capabilities.

- Network Monitoring Tools: These tools analyze network traffic to identify communication patterns between applications and services. They often inspect TCP/IP and HTTP traffic to track data flows and infer dependencies. Examples: SolarWinds Network Performance Monitor, Dynatrace. These tools excel at identifying network-level dependencies, such as which servers are communicating with each other and the volume of data exchanged.

- Application Performance Monitoring (APM) Tools: APM tools provide insights into application behavior and dependencies by monitoring code execution and interactions between application components. They can identify dependencies at the code level, including calls to databases, APIs, and other services. Example: AppDynamics, New Relic. These tools can automatically map the application architecture and identify dependencies between the various application components.

- Configuration Management Databases (CMDBs): CMDBs store information about IT assets and their relationships, providing a centralized repository for dependency data. CMDBs can be populated through automated discovery processes or manual input. Example: ServiceNow CMDB, BMC Helix CMDB. A well-maintained CMDB can serve as a central source of truth for dependency information, but its accuracy depends on the quality of the data stored within it.

- Discovery and Mapping Tools: Specialized tools designed specifically for dependency mapping use a combination of techniques, including agent-based discovery, agentless scanning, and network analysis, to automatically discover and map dependencies. Example: Micro Focus Universal Discovery, Device42. These tools provide a comprehensive view of dependencies across various layers of the IT infrastructure, from the application to the underlying hardware.

- Agent-based vs. Agentless Discovery:

  - Agent-based Discovery: Involves installing agents on servers and other devices to collect detailed information about the system’s configuration and dependencies. This approach provides a high level of accuracy but requires the installation and management of agents.

  - Agentless Discovery: Relies on network scanning and other techniques to gather information without requiring the installation of agents. This approach is easier to deploy but may provide less detailed information than agent-based discovery.

Analyzing Dependencies

Understanding workload dependencies is crucial for effective migration planning, performance optimization, and failure mitigation. Accurately mapping these relationships allows for a comprehensive view of how components interact, facilitating informed decision-making throughout the workload lifecycle. This analysis distinguishes between direct and indirect dependencies, each playing a significant role in the overall system architecture.

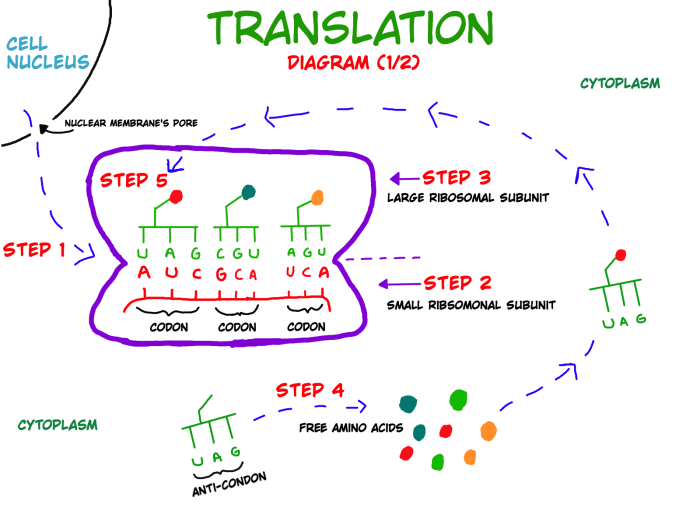

Direct and Indirect Dependency Concepts

Dependencies in a workload represent the relationships between different components, services, or applications. These relationships dictate how changes in one component can impact others. Analyzing these relationships involves differentiating between direct and indirect dependencies.

- Direct dependencies involve a direct interaction between two components. Component A directly calls or relies on Component B for its functionality. Changes to Component B will directly affect Component A.

- Indirect dependencies are more complex, involving a chain of relationships. Component A depends on Component B, which in turn depends on Component C. A change in Component C, therefore, indirectly affects Component A.
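The distinction can be made concrete with a small graph traversal. In this sketch, the adjacency-list representation and the function names are illustrative choices. An indirect dependency is taken to be any component reachable through a chain that is not also a direct dependency.

```python
def direct_dependencies(graph, component):
    """Components that `component` calls or relies on directly."""
    return set(graph.get(component, ()))

def indirect_dependencies(graph, component):
    """Components reachable only through a chain of dependencies."""
    direct = direct_dependencies(graph, component)
    reached = set()
    stack = list(direct)
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            # Guard against cycles and against listing the component itself.
            if nxt not in reached and nxt != component:
                reached.add(nxt)
                stack.append(nxt)
    return reached - direct

# Component A depends on B, which in turn depends on C.
GRAPH = {"A": ["B"], "B": ["C"], "C": []}

direct_a = direct_dependencies(GRAPH, "A")
indirect_a = indirect_dependencies(GRAPH, "A")
print("direct:", direct_a)
print("indirect:", indirect_a)
```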

Examples of Direct and Indirect Dependencies with Diagrams

To illustrate these concepts, consider a simplified e-commerce application.

Direct Dependency Example:

Imagine a “Product Listing Service” directly accessing a “Database Service” to retrieve product information. The “Product Listing Service” is directly dependent on the “Database Service”. If the database is unavailable, the product listing service will fail.

Diagram Description: A simple block diagram. A rectangle labeled “Product Listing Service” has a directed arrow pointing to another rectangle labeled “Database Service”. The arrow is labeled “Data Retrieval”.

Indirect Dependency Example:

Now, consider the “Product Listing Service” that calls the “Inventory Service” to check the stock availability. The “Inventory Service” then accesses the “Database Service”. In this scenario, the “Product Listing Service” has an indirect dependency on the “Database Service” via the “Inventory Service”. A performance issue in the “Database Service” would impact the “Inventory Service,” which, in turn, affects the “Product Listing Service”.

Diagram Description: A block diagram showing three rectangles. The first rectangle, labeled “Product Listing Service,” has a directed arrow to the second rectangle, labeled “Inventory Service.” The “Inventory Service” has a directed arrow to the third rectangle, labeled “Database Service.” The arrow between “Product Listing Service” and “Inventory Service” is labeled “Stock Check.” The arrow between “Inventory Service” and “Database Service” is labeled “Stock Information”.
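The distinction can also be expressed programmatically. The following is a minimal sketch, assuming the dependencies from the e-commerce example are held in a plain dictionary; the `DIRECT` mapping and the `indirect_dependencies` helper are illustrative names, not part of any discovery tool.

```python
from collections import deque

# Hypothetical edge list mirroring the e-commerce example: each service maps
# to the set of services it calls directly.
DIRECT = {
    "Product Listing Service": {"Inventory Service"},
    "Inventory Service": {"Database Service"},
    "Database Service": set(),
}


def indirect_dependencies(graph, start):
    """Return services that start reaches through a chain but does not call directly."""
    direct = graph.get(start, set())
    seen = set()
    queue = deque(direct)
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, set()):
            # Skip nodes already recorded so cyclic graphs still terminate.
            if nxt not in seen and nxt != start:
                seen.add(nxt)
                queue.append(nxt)
    return seen - direct


direct = DIRECT["Product Listing Service"]
indirect = indirect_dependencies(DIRECT, "Product Listing Service")
print("direct:", sorted(direct))
print("indirect:", sorted(indirect))
```

A breadth-first walk is used here only because it is easy to follow; any graph traversal that records visited nodes would serve the same purpose.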

Effective Visualization of Dependency Relationships

Visualizing dependency relationships is essential for comprehending the complexity of a workload. Effective visualizations can highlight critical paths, potential bottlenecks, and areas vulnerable to failure.

- Dependency Graphs: These are the most common and effective method. Dependency graphs use nodes to represent components and edges to represent dependencies. The direction of the edge indicates the direction of the dependency. Various graph layouts, such as hierarchical or force-directed, can be employed to enhance readability.

- Matrices: Dependency matrices offer an alternative representation. Each row and column represents a component, and a cell indicates the dependency relationship. This representation is particularly useful for identifying cyclic dependencies.

- Heatmaps: Heatmaps can visualize the intensity of dependencies. The color intensity of a cell in a matrix can represent the volume of traffic or the frequency of interaction between two components. This can help identify high-impact dependencies.

- Interactive Dashboards: Dynamic dashboards that allow users to explore dependencies are beneficial. These dashboards can incorporate real-time data, allowing users to drill down into specific dependencies and observe their impact.

Consider a large financial institution. Analyzing the dependencies within its core banking system might involve hundreds of services. Using a dependency graph, one could identify that the “Transaction Processing Service” directly depends on the “Account Balance Service” and indirectly on the “Database Service.” A heatmap might then color the path from the “Transaction Processing Service” to the “Database Service” intensely to show that it is a high-volume, critical dependency. This level of visualization helps in prioritizing performance tuning and ensuring high availability.
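As a rough illustration of the matrix representation and its use for spotting cyclic dependencies, the sketch below builds a 0/1 dependency matrix and runs a depth-first search over it. The component names reuse the banking example; the `build_matrix` and `has_cycle` helpers are hypothetical and written only for this illustration.

```python
def build_matrix(components, edges):
    """Return a square 0/1 matrix; a 1 in row i, column j means i depends on j."""
    index = {name: i for i, name in enumerate(components)}
    matrix = [[0] * len(components) for _ in components]
    for src, dst in edges:
        matrix[index[src]][index[dst]] = 1
    return matrix


def has_cycle(matrix):
    """Depth-first search with three states: 0 unvisited, 1 in progress, 2 done."""
    state = [0] * len(matrix)

    def visit(i):
        state[i] = 1
        for j, cell in enumerate(matrix[i]):
            if cell:
                if state[j] == 1:  # reached a node still on the current path
                    return True
                if state[j] == 0 and visit(j):
                    return True
        state[i] = 2
        return False

    return any(state[i] == 0 and visit(i) for i in range(len(matrix)))


components = [
    "Transaction Processing Service",
    "Account Balance Service",
    "Database Service",
]
edges = [
    ("Transaction Processing Service", "Account Balance Service"),
    ("Account Balance Service", "Database Service"),
]
matrix = build_matrix(components, edges)
for name, row in zip(components, matrix):
    print(row, name)
print("cyclic:", has_cycle(matrix))
```

Replacing the 0/1 cells with call counts would turn the same structure into the input for a heatmap.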

Tools and Technologies for Workload Discovery

The effective identification and mapping of workloads necessitate the utilization of specialized tools and technologies. These solutions automate and streamline the data collection, analysis, and visualization processes, enabling a more comprehensive understanding of application dependencies and resource utilization. The selection of the appropriate tool depends on factors such as the complexity of the IT environment, the specific goals of the discovery process, and the available budget.

Network Packet Capture and Analysis Tools

Network packet capture and analysis tools are essential for identifying communication patterns and dependencies between applications and services. By examining network traffic, these tools can reveal which services are interacting, the frequency of these interactions, and the data exchanged.

- Wireshark: A widely used, open-source packet analyzer. It captures network traffic and provides detailed analysis capabilities. Wireshark supports a vast array of protocols and offers features such as filtering, coloring rules, and statistics generation. It’s a powerful tool for investigating network performance issues and understanding application communication flows.

- tcpdump: A command-line packet analyzer available on most Unix-like operating systems. It captures network traffic and allows users to filter packets based on various criteria. While less user-friendly than Wireshark, tcpdump is valuable for scripting and automating network analysis tasks.

- SolarWinds Network Performance Monitor (NPM): A commercial network monitoring tool that includes packet analysis capabilities. NPM offers features such as real-time network monitoring, bandwidth analysis, and application dependency mapping. It integrates with other SolarWinds products to provide a comprehensive view of the IT infrastructure.

Agent-Based Discovery Tools

Agent-based discovery tools rely on software agents installed on servers and endpoints to collect detailed information about the running applications, processes, and resource utilization. This approach provides a deep level of visibility into the internal workings of each system.

- Dynatrace: A full-stack monitoring platform that utilizes agent-based instrumentation to automatically discover and map application dependencies. Dynatrace provides real-time performance monitoring, user experience analysis, and AI-powered insights. It supports various programming languages and frameworks, offering broad applicability.

- AppDynamics: Another prominent application performance management (APM) tool that employs agent-based monitoring. AppDynamics automatically discovers application dependencies, tracks transactions, and provides performance metrics. It offers features such as code-level diagnostics and business transaction monitoring.

- New Relic: A cloud-based APM platform that uses agents to collect data on application performance, infrastructure, and user experience. New Relic provides features such as real-time dashboards, alerting, and anomaly detection. It supports a wide range of programming languages and frameworks.

Agentless Discovery Tools

Agentless discovery tools gather information without requiring the installation of software agents on target systems. They typically leverage protocols such as SNMP, SSH, and WMI to collect data. This approach simplifies deployment and reduces the impact on the monitored systems.

- ServiceNow Discovery: A module within the ServiceNow platform that automates the discovery of IT infrastructure and applications. It uses a combination of probes and sensors to gather data about devices, services, and their relationships. ServiceNow Discovery helps organizations build a comprehensive CMDB (Configuration Management Database).

- VMware vRealize Network Insight: A network monitoring and analytics platform that provides visibility into the virtualized and cloud environments. vRealize Network Insight uses agentless discovery to map application dependencies, monitor network performance, and troubleshoot issues.

- Microsoft System Center Configuration Manager (SCCM): Primarily used for software deployment and configuration management, SCCM also includes discovery capabilities that can identify hardware and software assets within an organization. It relies on an installed client agent for in-depth inventory, but its network-based discovery methods can locate assets without one, which is why it appears in this category.

Cloud-Native Tools

Cloud-native environments require specialized tools that can integrate with cloud platforms and leverage their APIs to discover and map workloads.

- AWS CloudWatch: A monitoring service provided by Amazon Web Services (AWS) that collects metrics, logs, and events from AWS resources. CloudWatch can be used to monitor application performance, identify dependencies, and trigger alerts.

- Azure Monitor: A monitoring service provided by Microsoft Azure that collects telemetry data from Azure resources. Azure Monitor provides features such as application performance monitoring, log analytics, and alerting.

- Google Cloud Monitoring: A monitoring service provided by Google Cloud Platform (GCP) that collects metrics, logs, and events from GCP resources. Google Cloud Monitoring offers features such as dashboards, alerting, and log analysis.

Example: Using Wireshark for Dependency Mapping

Wireshark can be used to identify application dependencies by capturing and analyzing network traffic. The following steps illustrate a basic example:

- Capture Network Traffic: Launch Wireshark and select the network interface connected to the network segment where the applications reside. Start the capture.

- Filter Traffic (Optional): Use filters to focus on traffic relevant to the target applications. For example, to filter traffic to and from a specific IP address, use the display filter ip.addr == followed by that address.

- Identify Protocols and Ports: Examine the captured packets to identify the protocols (e.g., HTTP, TCP, UDP) and ports used by the applications.

- Analyze Communication Patterns: Observe the communication flows between different IP addresses and ports to identify dependencies. Look for patterns such as client-server interactions, service discovery protocols, and data exchange.

- Generate Statistics: Utilize Wireshark’s statistics features (e.g., “Conversations” or “Endpoints”) to generate summaries of network traffic, which can help visualize communication patterns and identify key dependencies.

For instance, if the capture shows a web server that accepts HTTP requests on port 80 repeatedly opening connections to a database server on port 3306 (the MySQL default), this suggests that the web application depends on the database.
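Once conversations have been identified, turning them into dependency edges is mostly a counting exercise. The sketch below assumes the flows have already been transcribed into (source IP, destination IP, destination port) tuples; the addresses, the `FLOWS` list, and the `PORT_LABELS` mapping are made up for illustration and do not reflect any particular Wireshark export format.

```python
from collections import Counter

# Hypothetical flow records: (source IP, destination IP, destination port).
FLOWS = [
    ("10.0.0.5", "10.0.0.9", 3306),
    ("10.0.0.5", "10.0.0.9", 3306),
    ("10.0.0.7", "10.0.0.5", 80),
    ("10.0.0.5", "10.0.0.9", 3306),
]

# Assumed mapping from well-known destination ports to service labels.
PORT_LABELS = {80: "HTTP", 3306: "MySQL"}


def summarize(flows):
    """Collapse repeated flows into (source, destination, service, count) edges."""
    counts = Counter(flows)
    return [
        (src, dst, PORT_LABELS.get(port, str(port)), n)
        for (src, dst, port), n in counts.most_common()
    ]


summary = summarize(FLOWS)
for src, dst, service, n in summary:
    print(f"{src} -> {dst} ({service}): {n} flows")
```

The edge with the highest count is listed first, which gives a quick indication of which dependency carries the most traffic in the capture window.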

Visualizing Workload Dependencies

Visualizing workload dependencies is a crucial step in understanding and managing complex IT environments. Effective visualizations transform raw dependency data into readily interpretable formats, enabling informed decision-making regarding application performance, infrastructure optimization, and risk mitigation. These visual aids facilitate communication among stakeholders, from IT operations to application developers, promoting a shared understanding of the system’s architecture and its interdependencies.

Design Methods for Visualizing Workload Dependencies

Designing effective visualizations requires careful consideration of the audience, the complexity of the dependencies, and the goals of the analysis. A well-designed visualization should be clear, concise, and provide actionable insights.

- Data Selection and Filtering: Before creating a visualization, it’s crucial to select and filter the relevant data. This involves identifying the key dependencies to display and excluding unnecessary information that could clutter the visual. For instance, focusing on critical path dependencies or dependencies impacting performance metrics like latency or throughput can improve clarity.

- Choosing the Right Diagram Type: The choice of diagram type depends on the nature of the dependencies and the desired insights. Common diagram types include:

  - Network Diagrams: These diagrams are excellent for visualizing the infrastructure dependencies, showing how different components connect and communicate.

  - Application Dependency Maps: These maps focus on the relationships between applications and services, revealing how different applications rely on each other.

  - Timeline Diagrams: Timeline diagrams are used to visualize the evolution of dependencies over time, showing when new dependencies were introduced or existing ones were modified.

  - Hierarchical Diagrams: Useful for representing the organization of components, such as a service hierarchy or a layered architecture.

- Layout and Arrangement: The layout of the diagram is critical for readability. Consider the following:

  - Node Placement: Nodes (representing applications, services, or infrastructure components) should be positioned logically, with related components clustered together.

  - Edge Routing: Edges (representing dependencies) should be routed clearly, avoiding overlaps and crossings as much as possible.

- Color Coding and Annotation: Use color coding to highlight different types of dependencies, criticality levels, or performance characteristics. Annotations should provide context and explain the meaning of the different elements in the diagram.

- Interactive Features: Incorporating interactive features can significantly enhance the usability of the visualization. Users should be able to:

  - Zoom and Pan: Allow users to zoom in on specific areas of the diagram and pan to explore different parts.

  - Filtering and Highlighting: Enable users to filter dependencies based on criteria (e.g., service type, criticality) and highlight specific paths.

  - Tooltips and Pop-ups: Provide tooltips or pop-ups that display detailed information about each component or dependency when the user hovers over it.
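Several of these design choices (filtering, color coding, annotation) can be prototyped by generating Graphviz DOT text, which many diagramming tools accept. This is a minimal sketch under assumed inputs: the `EDGES` list, the criticality levels, and the color mapping are hypothetical.

```python
# Assumed color scheme for criticality levels.
CRITICALITY_COLORS = {"high": "red", "medium": "orange", "low": "gray"}

# Hypothetical dependencies: (source, destination, criticality).
EDGES = [
    ("Web App", "Database", "high"),
    ("Web App", "Cache", "medium"),
    ("Web App", "Email Service", "low"),
]


def to_dot(edges, min_level="low"):
    """Render edges at or above min_level as a left-to-right DOT digraph."""
    order = ["low", "medium", "high"]
    threshold = order.index(min_level)
    lines = ["digraph dependencies {", "  rankdir=LR;"]
    for src, dst, level in edges:
        if order.index(level) < threshold:
            continue  # data selection: drop edges below the chosen criticality
        color = CRITICALITY_COLORS[level]
        lines.append(f'  "{src}" -> "{dst}" [color={color}, label="{level}"];')
    lines.append("}")
    return "\n".join(lines)


dot = to_dot(EDGES, min_level="medium")
print(dot)
```

Saving the output to a file and rendering it with the Graphviz `dot` command produces a diagram in which low-criticality edges are omitted and the remaining edges are colored by criticality.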

Guide on Interpreting Dependency Maps Effectively

Interpreting dependency maps requires understanding the diagram’s elements and the relationships they represent. The following guide provides a framework for effectively reading and analyzing dependency maps.

- Understand the Diagram’s Legend: Begin by familiarizing yourself with the legend, which explains the meaning of different symbols, colors, and line styles. This will help you quickly identify the types of components, the nature of the dependencies, and the criticality levels.

- Identify Key Components: Locate the critical components or services within the map. These are typically the core applications or services that support a large number of other components. They are often highlighted or emphasized in the diagram.

- Trace Dependencies: Follow the connections (edges) to understand how components depend on each other. Start with a critical component and trace the dependencies to identify all the services and applications that rely on it. This reveals the potential impact of an outage or performance issue.

- Analyze Dependency Types: Pay attention to the type of dependency (e.g., direct call, data transfer, shared resource). Different dependency types have different implications for performance and resilience. For example, a failed synchronous call surfaces immediately in the caller, whereas contention on a shared database may degrade several services more gradually.

- Assess the Impact of Changes: Use the dependency map to evaluate the potential impact of changes to the infrastructure or applications. For instance, if a service is being updated, identify all the components that depend on it to understand the potential risks and plan accordingly.

- Identify Single Points of Failure: Look for components that have a large number of dependencies or that are critical to multiple other components. These are potential single points of failure, meaning that if they fail, they could disrupt a significant portion of the system.

- Monitor Performance Metrics: Correlate the dependency map with performance metrics (e.g., latency, error rates, throughput). This can help identify bottlenecks and areas of the system that are underperforming.

- Iterative Refinement: Dependency maps are not static; they evolve over time as the system changes. Regularly update and refine the maps to reflect these changes and ensure that they remain accurate and useful.
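Tracing dependencies and looking for potential single points of failure both amount to asking which components sit upstream of a given node. The sketch below, using a hypothetical `GRAPH` of services, walks the reversed edges to collect every component that would be affected if a target failed, then ranks components by that count.

```python
def dependents_of(graph, target):
    """Return all components that reach target directly or transitively."""
    # Invert the edges so each component maps to the components that call it.
    reverse = {}
    for src, dsts in graph.items():
        for dst in dsts:
            reverse.setdefault(dst, set()).add(src)
    affected, stack = set(), [target]
    while stack:
        node = stack.pop()
        for parent in reverse.get(node, ()):
            if parent not in affected:
                affected.add(parent)
                stack.append(parent)
    affected.discard(target)
    return affected


# Hypothetical dependency map: each service lists what it depends on.
GRAPH = {
    "Checkout": {"Payments", "Inventory"},
    "Product Listing": {"Inventory"},
    "Payments": {"Database"},
    "Inventory": {"Database"},
    "Database": set(),
}

# Components with the most dependents come first; ties are broken by name.
ranking = sorted(GRAPH, key=lambda n: (-len(dependents_of(GRAPH, n)), n))
for name in ranking:
    print(name, len(dependents_of(GRAPH, name)))
```

A high dependent count alone does not prove a single point of failure, since redundancy is not modeled here, but it indicates where to look first.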

Examples of Different Diagram Types

Different diagram types serve different purposes in visualizing workload dependencies. The choice of diagram depends on the specific needs of the analysis.

- Network Diagrams: Network diagrams visualize the physical and logical connections between infrastructure components.

- Example: A network diagram might show servers, routers, switches, and firewalls, with lines representing network connections and data flow. Color-coding can be used to indicate the type of network (e.g., internal, external) or the status of the connection (e.g., up, down). Nodes can be labeled with IP addresses, hostnames, or other relevant information.

Description: The diagram depicts a simplified network infrastructure. Servers are represented as rectangular boxes, routers as cylindrical shapes, and switches as squares. Lines with arrows indicate the direction of data flow. Color-coding is used to distinguish different types of network connections, such as internal and external. The labels include IP addresses and hostnames, providing details about each component.

- Application Dependency Maps: Application dependency maps focus on the relationships between applications and services.

- Example: An application dependency map might show a web application interacting with a database, a caching service, and various backend services. The diagram illustrates the flow of requests and data between these components. The dependencies are represented by arrows, with different line styles indicating the type of interaction (e.g., synchronous, asynchronous).

Description: The diagram displays the dependencies of a web application. The web application is at the center, with arrows pointing towards the database, caching service, and backend services. Different arrow styles are used to indicate different types of interactions, such as synchronous or asynchronous calls. Each component is labeled with its name and the type of service it provides.

- Timeline Diagrams: Timeline diagrams are useful for visualizing the evolution of dependencies over time.

- Example: A timeline diagram could show the introduction of new dependencies over a period of months or years. The diagram might illustrate when a new service was added, when an existing dependency was modified, or when a component was deprecated. This type of visualization is useful for understanding the impact of changes and planning for future developments.

Description: The diagram shows a timeline with horizontal lines representing the lifecycle of different services. Each line has markers indicating the start, end, and any modifications or dependencies added over time. The timeline provides a historical view of the dependencies and their evolution, allowing analysis of changes and their impacts.

- Hierarchical Diagrams: Hierarchical diagrams represent the structure of a system in a hierarchical manner.

- Example: A hierarchical diagram could show a service hierarchy, with parent services at the top and child services below. This type of diagram is useful for understanding the organization of services and the relationships between them.

Description: The diagram illustrates a hierarchical structure, with parent services at the top and child services branching out below. The diagram shows the dependencies and the relationships between different levels of the service hierarchy. The layout helps to visualize the overall architecture and the way the services are organized.

Addressing Challenges in Workload Discovery

Workload discovery and dependency mapping, while crucial for effective IT management, often face significant hurdles. These challenges stem from the complexity of modern IT environments, the dynamic nature of applications, and the limitations of available tools and techniques. Overcoming these obstacles requires a systematic approach, a clear understanding of the underlying issues, and the application of appropriate solutions.

Data Silos and Incomplete Information

Data silos, where information is fragmented across different systems and teams, represent a significant challenge. This fragmentation hinders the ability to gain a comprehensive view of the IT landscape. Incomplete data leads to inaccurate dependency mapping and can result in significant operational risks.

To address data silos:

- Establish Centralized Data Repositories: Consolidate data from various sources into a central repository, such as a Configuration Management Database (CMDB) or a data lake. This requires establishing data integration pipelines and data governance policies.

- Implement Data Quality Checks: Regularly validate data accuracy and completeness using automated tools and manual reviews. Define data quality metrics and monitor them over time.

- Promote Cross-Team Collaboration: Foster communication and collaboration between different IT teams to share information and ensure data consistency. This can be facilitated through shared documentation, regular meetings, and collaborative tools.
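A data quality check of the kind described above can be as simple as comparing what a central repository records against what monitoring actually observes. The sketch below assumes both sources can be reduced to a mapping from host to a set of dependencies; the host names and the `reconcile` helper are hypothetical.

```python
def reconcile(cmdb, observed):
    """Compare two {host: set_of_dependencies} inventories and report differences."""
    report = {}
    for host in sorted(set(cmdb) | set(observed)):
        recorded = cmdb.get(host, set())
        seen = observed.get(host, set())
        missing = seen - recorded  # observed in traffic but not documented
        stale = recorded - seen    # documented but not observed
        if missing or stale:
            report[host] = {
                "missing_from_cmdb": sorted(missing),
                "not_observed": sorted(stale),
            }
    return report


# Hypothetical inventories from two silos.
cmdb = {"web-01": {"db-01"}, "batch-01": {"db-01", "ftp-01"}}
observed = {"web-01": {"db-01", "cache-01"}, "batch-01": {"db-01"}}

report = reconcile(cmdb, observed)
for host, issues in report.items():
    print(host, issues)
```

An entry under "not_observed" is not necessarily wrong, since the dependency may simply be infrequent; it marks a record worth reviewing with the owning team.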

Complexity and Scale of IT Environments

Modern IT environments are inherently complex, comprising numerous applications, services, and infrastructure components. The sheer scale of these environments makes manual discovery and dependency mapping impractical and error-prone. The dynamic nature of these environments, with frequent changes and updates, further exacerbates the challenge.

Addressing the complexity and scale involves:

- Automate Discovery Processes: Utilize automated tools and scripts to discover workloads and map dependencies. Automation reduces manual effort and improves accuracy.

- Employ Advanced Dependency Mapping Techniques: Leverage techniques such as graph databases and machine learning to handle complex relationships and identify hidden dependencies.

- Implement a Phased Approach: Break down the discovery process into manageable phases, starting with critical applications and gradually expanding to the entire environment. This allows for iterative refinement and reduces the risk of overwhelming the process.

Dynamic and Changing Environments

IT environments are constantly evolving, with applications and infrastructure components being added, removed, and modified. This dynamic nature requires a continuous and automated approach to workload discovery and dependency mapping.

To cope with dynamic environments:

- Implement Continuous Monitoring: Establish real-time monitoring of the IT environment to detect changes and updates. This involves using monitoring tools that can automatically discover new resources and track changes to existing ones.

- Automate Dependency Updates: Configure automated processes to update dependency maps whenever changes are detected. This ensures that the maps remain accurate and up-to-date.

- Use Infrastructure-as-Code (IaC): Adopt IaC practices to manage infrastructure configurations and dependencies. IaC allows for automated provisioning and configuration management, reducing manual errors and improving the speed of change management.
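Automated dependency updates usually start with detecting what changed between two discovery runs. The following is a minimal sketch, assuming each run yields a collection of (source, destination) edges; the snapshot contents and the `diff_snapshots` helper are hypothetical.

```python
def diff_snapshots(previous, current):
    """Return the dependency edges added and removed between two discovery runs."""
    prev, curr = set(previous), set(current)
    return {"added": sorted(curr - prev), "removed": sorted(prev - curr)}


# Hypothetical edge lists from two consecutive discovery runs.
yesterday = [("web", "db"), ("web", "cache"), ("batch", "ftp")]
today = [("web", "db"), ("web", "cache"), ("web", "search")]

changes = diff_snapshots(yesterday, today)
print("added:", changes["added"])
print("removed:", changes["removed"])
```

The resulting change set could then drive a map update or an alert, depending on how the surrounding tooling is set up.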

Lack of Expertise and Skills

Effective workload discovery and dependency mapping require specialized skills and expertise. A lack of skilled personnel can hinder the ability to implement and maintain these processes.

Addressing the skills gap:

- Invest in Training and Development: Provide training and development opportunities for IT staff to acquire the necessary skills. This includes training on relevant tools, techniques, and best practices.

- Outsource or Partner with Experts: Consider outsourcing the workload discovery and dependency mapping process to a third-party provider with specialized expertise. Alternatively, partner with consultants who can provide guidance and support.

- Leverage Community Resources: Utilize online resources, such as forums, blogs, and open-source projects, to access information, share knowledge, and learn from others.

Vendor-Specific Dependencies and Lack of Standardization

Proprietary technologies and vendor-specific dependencies can complicate workload discovery and dependency mapping. Lack of standardization across different systems and platforms further adds to the challenge.

Addressing vendor-specific dependencies:

- Prioritize Open Standards: Adopt open standards and technologies to minimize vendor lock-in and improve interoperability. This facilitates the integration of different systems and platforms.

- Understand Vendor Documentation: Thoroughly review vendor documentation to understand the specific dependencies and configurations of their products. This is crucial for accurately mapping dependencies.

- Use Vendor-Specific Tools: Utilize vendor-provided tools and APIs to discover and map dependencies within their specific environments. These tools are often designed to provide detailed information about their products.

Dependency Mapping for Migration and Optimization

Dependency mapping is a critical practice that enables informed decision-making in workload migration and optimization endeavors. By providing a comprehensive understanding of application interdependencies, dependency maps serve as the foundation for strategic planning, risk mitigation, and efficient resource allocation. They allow organizations to move workloads seamlessly and identify areas for performance enhancement and cost reduction.

Supporting Workload Migration and Optimization Initiatives

Dependency mapping fundamentally supports workload migration and optimization initiatives by providing a clear, visual representation of application architectures and their interactions. This clarity is crucial for planning migrations, identifying potential bottlenecks, and optimizing resource utilization. The insights derived from dependency maps facilitate informed decisions throughout the entire lifecycle of a workload, from initial assessment to post-migration monitoring. This proactive approach minimizes disruptions, reduces risks, and maximizes the benefits of both migration and optimization projects.

Steps for Planning Migration Projects with Dependency Maps

Planning migration projects necessitates a structured approach leveraging the insights derived from dependency maps. The following steps outline the process:

- Assessment and Discovery: This initial phase involves a thorough assessment of the existing environment. This includes the use of dependency mapping tools to discover and map all applications, services, and their interdependencies. The goal is to create a comprehensive inventory of all components and their relationships.

- Dependency Analysis: Once the dependencies are mapped, the next step involves analyzing these relationships. This analysis aims to identify critical dependencies, potential migration blockers, and the impact of migrating individual components. This often involves categorizing dependencies based on their type (e.g., synchronous, asynchronous), criticality, and performance characteristics.

- Migration Grouping: Based on the dependency analysis, workloads are grouped into logical migration units. These groups are typically based on shared dependencies, business criticality, or technical compatibility. The objective is to minimize disruption and ensure the smooth operation of dependent applications.

- Migration Planning and Sequencing: A detailed migration plan is developed, including the sequence in which the migration units will be moved. This plan considers the order of migration to minimize downtime and ensure that dependencies are satisfied. It includes a rollback strategy in case of failures.

- Execution and Validation: The migration plan is executed, and the migrated workloads are thoroughly validated to ensure that all dependencies are functioning correctly. This involves testing the performance, functionality, and security of the migrated applications.

- Post-Migration Optimization: After the migration, the performance of the migrated workloads is monitored, and the environment is optimized to improve efficiency and reduce costs. This may involve adjusting resource allocation, optimizing application configurations, or implementing new technologies.
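The grouping and sequencing steps can be approximated with a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to split a hypothetical dependency map into migration waves in which every workload's dependencies are moved before it. Migrating dependencies first is only one possible strategy and is assumed here for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical map: each workload lists the workloads it depends on.
DEPENDS_ON = {
    "Web Frontend": {"Product Listing Service"},
    "Product Listing Service": {"Inventory Service"},
    "Inventory Service": {"Database Service"},
    "Reporting": {"Database Service"},
    "Database Service": set(),
}


def migration_waves(depends_on):
    """Group workloads into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = migration_waves(DEPENDS_ON)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Workloads in the same wave have no dependencies on each other, so they are candidates for parallel migration. A circular dependency raises an error, which signals that the workloads involved should be moved together as one migration unit.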

Identifying Optimization Opportunities with Dependency Mapping

Dependency mapping reveals optimization opportunities by providing insights into application behavior, resource utilization, and potential bottlenecks. This allows for targeted interventions to improve performance, reduce costs, and enhance overall efficiency. Several optimization strategies can be identified:

- Resource Allocation Optimization: Dependency maps can reveal underutilized resources or over-provisioned components. By analyzing resource consumption patterns, organizations can right-size resources, leading to cost savings. For example, if a dependency map reveals that a database server is consistently underutilized, the organization can reduce its resources or consolidate it with other databases.

- Performance Bottleneck Identification: Dependency maps can pinpoint performance bottlenecks by highlighting slow-performing services or dependencies. For example, if a dependency map reveals that a particular service is experiencing high latency, the organization can investigate the root cause of the latency, such as network congestion or inefficient code.

- Code Optimization and Refactoring: Analyzing dependencies can reveal opportunities to optimize code or refactor applications. For instance, if a dependency map reveals that an application has complex dependencies, the organization can refactor the application to simplify the dependencies and improve performance.

- Service Consolidation: Dependency mapping can identify opportunities to consolidate services, reducing operational overhead and improving efficiency. If multiple applications rely on similar services, these services can be consolidated into a single, optimized service.

- Data Tier Optimization: By understanding the data access patterns through dependency maps, organizations can optimize their data tiers. This includes optimizing database queries, caching frequently accessed data, and choosing the right database technology for the workload.

For example, consider a large e-commerce platform. Using dependency mapping, it is discovered that the product catalog service is heavily reliant on the inventory management service. Further analysis reveals that the inventory service frequently experiences performance issues during peak hours. The organization can then investigate the root cause, perhaps the database queries or the underlying infrastructure, and optimize the service to handle the peak load more efficiently. This will reduce the impact on the product catalog service and, consequently, improve the user experience.
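Bottleneck identification of this kind can be sketched by attaching latency samples to each dependency edge and flagging the edges whose average exceeds a threshold. The service names, the sample values, and the 200 ms threshold below are invented for illustration.

```python
from statistics import mean

# Hypothetical latency samples in milliseconds, keyed by (caller, callee).
LATENCY_MS = {
    ("Product Catalog", "Inventory"): [40, 55, 420, 610, 580],
    ("Product Catalog", "Search"): [12, 15, 11, 14, 13],
    ("Inventory", "Database"): [300, 350, 410, 390, 405],
}


def slow_edges(samples, threshold_ms):
    """Return (edge, average latency) pairs above the threshold, slowest first."""
    averages = {edge: mean(values) for edge, values in samples.items()}
    flagged = [(edge, avg) for edge, avg in averages.items() if avg > threshold_ms]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


flagged = slow_edges(LATENCY_MS, threshold_ms=200)
for (caller, callee), avg in flagged:
    print(f"{caller} -> {callee}: {avg:.0f} ms average")
```

In this made-up data, the slowest edge sits downstream of the inventory service, which would point the investigation toward the database tier rather than the catalog itself. A percentile such as p95 would often be a better signal than the mean; the mean is used here only to keep the sketch short.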

Maintenance and Updates of Dependency Maps

Dependency maps are not static documents; they are living representations of a system’s architecture and behavior. Their value diminishes rapidly if they are not consistently maintained and updated to reflect changes in the environment. Regular maintenance is critical for leveraging the dependency map’s benefits throughout the lifecycle of a system, including migration, optimization, and incident response. Failure to maintain these maps can lead to inaccurate insights, inefficient resource allocation, and increased risk during critical operations.

Importance of Maintaining and Updating Dependency Maps

The dynamic nature of IT infrastructure, encompassing frequent deployments, upgrades, and changes in service configurations, necessitates continuous maintenance of dependency maps. This process ensures that the maps remain a reliable source of truth for understanding the relationships between components.

Maintaining accurate dependency maps offers several key advantages:

- Improved Decision-Making: Accurate maps provide a clear understanding of the impact of changes. For instance, before migrating a service, the map highlights all dependent components, enabling informed decisions regarding the migration strategy, minimizing disruption.

- Reduced Risk: Dependency maps aid in identifying potential risks associated with system changes. For example, if a critical service is scheduled for an update, the map reveals all applications relying on it, helping teams assess the potential impact and prepare mitigation strategies in advance, such as a tested rollback plan.

- Enhanced Troubleshooting: When an incident occurs, dependency maps facilitate rapid root cause analysis. They enable teams to quickly pinpoint affected services and their dependencies, accelerating the resolution process.

- Optimized Resource Allocation: By visualizing resource utilization and dependencies, organizations can identify opportunities to optimize resource allocation. For example, underutilized servers that support a particular application can be identified and consolidated to reduce operational costs.

- Improved Compliance: Dependency maps can aid in regulatory compliance by demonstrating a clear understanding of data flows and system interdependencies, which is essential for data security and privacy regulations like GDPR.
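The impact analysis described in the first two advantages can be sketched as a graph traversal. The following is a minimal illustration, assuming the dependency map is available as a list of (consumer, provider) pairs. The service names and the `transitive_dependents` helper are hypothetical and not taken from any particular tool.

```python
from collections import defaultdict, deque

def transitive_dependents(edges, target):
    """Return every component that directly or indirectly depends on target.

    edges is an iterable of (consumer, provider) pairs, read as
    "consumer depends on provider".
    """
    # Reverse adjacency: provider -> set of components that depend on it.
    dependents = defaultdict(set)
    for consumer, provider in edges:
        dependents[provider].add(consumer)

    seen = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for consumer in dependents.get(node, ()):
            # Skip nodes already visited so cycles in the map terminate.
            if consumer not in seen and consumer != target:
                seen.add(consumer)
                queue.append(consumer)
    return seen

# Hypothetical dependency map for illustration only.
EDGES = [
    ("web-frontend", "product-catalog"),
    ("product-catalog", "inventory"),
    ("inventory", "inventory-db"),
    ("checkout", "inventory"),
    ("reporting", "orders-db"),
]

impacted = transitive_dependents(EDGES, "inventory-db")
print(sorted(impacted))
# ['checkout', 'inventory', 'product-catalog', 'web-frontend']
```

A breadth-first walk over reversed edges is used here because the question before a change is "who depends on this component", which is the opposite direction from how dependencies are usually recorded.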

Process for Regularly Updating Dependency Maps

Establishing a well-defined process for regularly updating dependency maps is crucial for maintaining their accuracy and relevance. This process should be automated to the greatest extent possible to reduce manual effort and human error.

The process typically involves the following steps:

- Establish a Change Management Process: Integrate dependency map updates into the existing change management workflow. Every change to the infrastructure, whether it is a software update, hardware replacement, or configuration modification, should trigger a dependency map update. This ensures that changes are reflected in the map in a timely manner.

- Automated Data Collection: Utilize automated tools to gather data from various sources. These tools can include:

  - Configuration Management Databases (CMDBs): CMDBs store information about hardware, software, and their relationships.

  - Monitoring Systems: Monitoring tools provide real-time data on service dependencies and communication patterns.

  - Network Scanners: Network scanners can discover network devices and their connections.

  - Application Performance Monitoring (APM) Tools: APM tools can identify application dependencies and communication flows.

- Data Analysis and Processing: The collected data needs to be processed and analyzed to identify dependencies. This can involve:

  - Data Aggregation: Combining data from multiple sources to create a comprehensive view of the system.

  - Dependency Inference: Using algorithms and rules to infer dependencies based on data patterns, such as network traffic analysis or log file analysis.

  - Data Validation: Verifying the accuracy of the data and identifying any inconsistencies or errors.

- Map Updates and Visualization: Update the dependency map with the new information. Automated tools should be used to visualize the updated dependencies. This allows for a clear and intuitive understanding of the system’s architecture.

- Regular Audits and Validation: Periodically audit the dependency maps to ensure their accuracy. This involves manually verifying the information and identifying any discrepancies.

- Feedback Loop and Continuous Improvement: Establish a feedback loop to continuously improve the dependency mapping process. This involves collecting feedback from stakeholders, identifying areas for improvement, and implementing changes to optimize the process.
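The aggregation, inference, and map update steps above can be sketched in a few lines. This is a simplified illustration, assuming connection records have already been reduced to (source, destination) pairs. The function names, the `min_observations` threshold, and the sample data are all hypothetical, and real tools apply far richer inference rules.

```python
from collections import Counter

def infer_edges(connections, min_observations=3):
    """Infer dependency edges from observed (source, destination) records.

    An edge is kept only if it was seen at least min_observations times,
    a simple rule for filtering out one-off or accidental connections.
    """
    counts = Counter(connections)
    return {edge for edge, n in counts.items() if n >= min_observations}

def aggregate(*sources):
    """Combine edge sets from several data sources into one view."""
    merged = set()
    for source in sources:
        merged |= set(source)
    return merged

def diff_maps(current, updated):
    """Report which edges an update would add to or remove from the map."""
    return {"added": updated - current, "removed": current - updated}

# Hypothetical inputs for illustration only.
OBSERVED = (
    [("checkout", "inventory")] * 5
    + [("checkout", "payments")] * 4
    + [("batch-job", "inventory")] * 1   # seen once, below the threshold
)
CMDB_EDGES = {("inventory", "inventory-db")}
CURRENT_MAP = {
    ("checkout", "inventory"),
    ("inventory", "inventory-db"),
    ("checkout", "legacy-tax"),
}

updated = aggregate(CMDB_EDGES, infer_edges(OBSERVED))
changes = diff_maps(CURRENT_MAP, updated)
print(sorted(changes["added"]))    # [('checkout', 'payments')]
print(sorted(changes["removed"]))  # [('checkout', 'legacy-tax')]
```

Producing a diff instead of overwriting the map gives the audit and feedback steps something concrete to review. An edge flagged for removal may simply not have been exercised during the observation window.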

Checklist for Verifying the Accuracy of Dependency Information

A checklist helps ensure the reliability of the dependency maps. Regularly performing these checks helps maintain the integrity of the information and facilitates the detection of errors.

The checklist should include the following elements:

- Data Source Verification: Verify the accuracy and completeness of the data sources used for dependency mapping. Check that CMDBs, monitoring systems, and other data sources are up-to-date and reflect the current state of the infrastructure.

- Dependency Relationship Validation: Manually validate the relationships between components to ensure they are accurate. This includes verifying the direction of dependencies and the type of communication.

- Performance Testing: Run performance tests to confirm that mapped dependencies behave as expected under load. For instance, if the map marks a database server as critical for an application, conduct load tests to verify that the application functions correctly under stress and that the database is exercised as the map predicts.

- Network Traffic Analysis: Analyze network traffic to verify communication patterns and identify any unexpected dependencies. Tools like Wireshark can be utilized to analyze network packets and identify communication flows.

- Log Analysis: Review application logs to identify any errors or warnings that might indicate incorrect dependencies. Log files often contain information about service interactions and dependencies.

- User Acceptance Testing (UAT): Conduct UAT to validate the functionality of the system and verify the dependencies. This involves testing the system from an end-user perspective to ensure that all components are working correctly.

- Regular Audits: Conduct regular audits of the dependency maps to ensure they are accurate and up-to-date. Audits should involve both automated and manual checks.

- Documentation Review: Review documentation related to the system to verify the accuracy of the dependency information. Documentation can include system diagrams, configuration files, and operational procedures.
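Parts of this checklist, in particular relationship validation and traffic analysis, can be partially automated by comparing the documented map against observed communication. The sketch below assumes both are available as sets of (consumer, provider) pairs. The `validate_map` function, its result keys, and the sample data are hypothetical.

```python
def validate_map(documented, observed):
    """Compare documented dependency edges with observed ones.

    Both arguments are iterables of (consumer, provider) pairs.
    Returns three sets of edges that need manual review.
    """
    documented, observed = set(documented), set(observed)

    # Documented edges seen only in the opposite direction.
    wrong_direction = {
        (a, b) for (a, b) in documented
        if (b, a) in observed and (a, b) not in observed
    }
    # Documented edges with no supporting observation at all.
    unconfirmed = documented - observed - wrong_direction
    # Observed edges missing from the map, excluding the reversed ones
    # already reported above.
    flipped = {(b, a) for (a, b) in wrong_direction}
    undocumented = observed - documented - flipped

    return {
        "wrong_direction": wrong_direction,
        "unconfirmed": unconfirmed,
        "undocumented": undocumented,
    }

# Hypothetical data for illustration only.
DOCUMENTED = {("web", "api"), ("api", "db"), ("cache", "api"), ("api", "legacy")}
OBSERVED = {("web", "api"), ("api", "db"), ("api", "cache"), ("api", "queue")}

report = validate_map(DOCUMENTED, OBSERVED)
print(report["wrong_direction"])  # {('cache', 'api')}
print(report["unconfirmed"])      # {('api', 'legacy')}
print(report["undocumented"])     # {('api', 'queue')}
```

The output is a review list and not a verdict. An unconfirmed edge may still be valid if the dependency is exercised rarely, which is why the manual checks in the list above remain necessary.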

End of Discussion

In conclusion, careful workload discovery and dependency mapping is a foundational practice for modern IT management. From data acquisition to visualization and ongoing maintenance, this process provides a holistic understanding of IT infrastructure. By adopting the strategies discussed here, organizations can enhance operational efficiency, streamline migrations, and support the long-term health and performance of their IT ecosystems, enabling informed decision-making and proactive problem resolution.

FAQ Section

What is the primary benefit of workload discovery?

The primary benefit is a clear understanding of the IT environment, leading to improved resource allocation, enhanced performance, reduced downtime, and more informed decision-making.

How frequently should dependency maps be updated?

The frequency of updates depends on the dynamism of the environment. However, regular updates (e.g., weekly or monthly) are recommended, with more frequent updates during periods of significant change.

Are agent-based or agentless approaches always superior?

Neither approach is inherently superior. The optimal choice depends on the specific environment, security considerations, and the level of detail required. Agent-based methods often provide richer data, while agentless methods may be simpler to deploy.

What are some common challenges in dependency mapping?

Common challenges include dealing with complex and dynamic environments, accurately identifying indirect dependencies, managing large datasets, and maintaining up-to-date information.