Sanctum AI

An AI tool designed specifically for macOS to run open-source LLMs locally. It features an advanced encryption system.

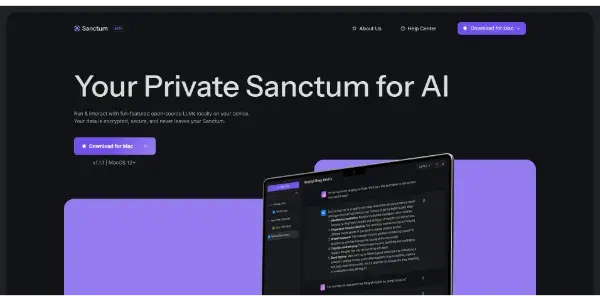

Sanctum AI: Secure Local Execution of Open-Source LLMs on macOS

Sanctum AI is an AI tool designed for macOS users who want to run open-source Large Language Models (LLMs) locally. Unlike cloud-based solutions, Sanctum AI runs these models directly on your machine and protects them with an advanced encryption system, putting user privacy and security first. This article covers its functionality, benefits, applications, and how it compares with similar tools.

What Sanctum AI Does

Sanctum AI simplifies the often complex process of deploying and using open-source LLMs on macOS. It provides a user-friendly interface for managing models, prompting them, and receiving outputs, without requiring extensive technical expertise. The tool handles the technical details so that users can focus on applying the LLMs to their specific needs. Its core function is to provide a secure and accessible local environment for running these models, which addresses data privacy concerns and avoids the network round trips of a cloud service.

Main Features and Benefits

- Local Execution: The most significant benefit is that the LLMs run on your own machine. Your data does not need to be sent to external servers, which mitigates the privacy risks of sharing sensitive information with third-party providers.

- Advanced Encryption: Sanctum AI uses encryption to protect both the models themselves and the data they process.

- User-Friendly Interface: The interface simplifies managing and interacting with LLMs, making the tool accessible to users without a deep technical background.

- Open-Source Model Support: Sanctum AI supports a range of open-source LLMs, offering users flexibility in choosing the model best suited to their requirements. This allows for experimentation with different models and architectures.

- macOS Native: Sanctum AI is optimized specifically for macOS, with the aim of smooth integration and good performance on that platform.

Use Cases and Applications

Sanctum AI's capabilities support a range of applications:

- Creative Writing: Generate stories, poems, scripts, and other creative text in a variety of styles.

- Code Generation: Assist in coding tasks by generating code snippets, translating between programming languages, or offering suggestions for improvements.

- Content Summarization: Condense lengthy documents or articles into concise summaries.

- Translation: Translate text between different languages.

- Data Analysis: Extract insights from textual data through tasks like sentiment analysis or topic extraction.

- Personalized Learning: Create tailored educational materials or interactive learning experiences.

Comparison to Similar Tools

While several tools enable local LLM execution, Sanctum AI differentiates itself through its focus on macOS, its advanced encryption, and its user-friendly interface. Many open-source LLM runners require significant technical expertise to set up and manage. Cloud-based solutions, while convenient, require sending data to third-party servers, which can compromise user privacy. Sanctum AI aims to bridge the gap between accessibility and security.

Pricing Information

Sanctum AI is currently offered free of charge.

Conclusion

Sanctum AI gives macOS users a way to run open-source LLMs locally while maintaining a high level of privacy and security. Its user-friendly interface and focus on security make it useful for a range of applications, from creative writing to data analysis. Because it is currently free, it is also an accessible option for both individual users and organizations concerned about data privacy.