OpenAGI

An autonomous multimodal AI agent that can manipulate images. Experimental project.

OpenAGI: An Experimental Autonomous Multimodal AI Agent

OpenAGI is an experimental, open-source project focused on developing an autonomous multimodal AI agent. Many AI tools handle a single modality (such as text or images). OpenAGI instead can process and manipulate more than one data type, primarily images guided by text instructions. This makes it suited to automating tasks that cross data domains, such as editing an image according to a written request. The project is available on GitHub free of charge.

What OpenAGI Does

OpenAGI aims to create an AI agent that can autonomously interpret and execute instructions involving both textual descriptions and images. This involves several key processes:

- Instruction Understanding: The agent processes natural language instructions, understanding the goal and the necessary steps involved.

- Multimodal Processing: It can analyze images, extracting relevant features and information.

- Action Planning and Execution: Based on the instruction and image analysis, it formulates a plan of action and executes the necessary steps, potentially involving image manipulation or interaction with other external tools.

- Feedback Loop: OpenAGI is designed to learn from its actions, improving its performance over time.
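The four processes above can be pictured as a single agent loop. The following is a minimal, illustrative Python sketch built on heavy assumptions. It is not OpenAGI's code or API. The `ToyAgent` class, its methods, and the `TOOLS` registry are hypothetical. "Understanding" is plain keyword matching rather than a language model, and the "image" is a small grid of grayscale values.

```python
from dataclasses import dataclass, field
from typing import Callable

# Toy grayscale "image": rows of pixel intensities in the range 0..255.
Image = list[list[int]]


def invert(img: Image) -> Image:
    """Replace every pixel p with 255 - p."""
    return [[255 - p for p in row] for row in img]


def flip_horizontal(img: Image) -> Image:
    """Mirror each row from left to right."""
    return [list(reversed(row)) for row in img]


# Hypothetical tool registry: instruction keyword -> image operation.
TOOLS: dict[str, Callable[[Image], Image]] = {
    "invert": invert,
    "flip": flip_horizontal,
}


@dataclass
class ToyAgent:
    # Feedback memory: how often each tool was part of an accepted result.
    successes: dict[str, int] = field(default_factory=dict)

    def understand(self, instruction: str) -> list[str]:
        """Instruction understanding: keep known tool keywords, in order."""
        words = [w.strip(".,;!?") for w in instruction.lower().split()]
        return [w for w in words if w in TOOLS]

    def analyze(self, img: Image) -> dict[str, float]:
        """Multimodal processing: extract simple features from the image."""
        pixels = [p for row in img for p in row]
        return {
            "height": len(img),
            "width": len(img[0]) if img else 0,
            "mean": sum(pixels) / len(pixels) if pixels else 0.0,
        }

    def plan(self, goals: list[str], features: dict[str, float]) -> list[str]:
        """Action planning: run the requested tools, or nothing for an empty image."""
        return goals if features["width"] > 0 else []

    def execute(self, plan: list[str], img: Image) -> Image:
        """Action execution: apply each planned tool in sequence."""
        for step in plan:
            img = TOOLS[step](img)
        return img

    def feedback(self, plan: list[str], accepted: bool) -> None:
        """Feedback loop: count the tools used in accepted results."""
        if accepted:
            for step in plan:
                self.successes[step] = self.successes.get(step, 0) + 1

    def run(self, instruction: str, img: Image,
            check: Callable[[Image], bool]) -> Image:
        goals = self.understand(instruction)
        plan = self.plan(goals, self.analyze(img))
        result = self.execute(plan, img)
        self.feedback(plan, check(result))
        return result


agent = ToyAgent()
out = agent.run("Flip the image, then invert it.", [[0, 100], [200, 255]],
                check=lambda r: len(r) == 2)
print(out)              # [[155, 255], [0, 55]]
print(agent.successes)  # {'flip': 1, 'invert': 1}
```

The feedback step here only counts which tools contributed to accepted results. A real agent would also need some way to turn such signals into better plans, and that is left out of this sketch.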

Main Features and Benefits

- Multimodality: Its key strength is combining image processing with textual instructions in one workflow. This lets it take on tasks that single-modality AI agents cannot handle on their own.

- Autonomy: OpenAGI aims for autonomous operation, minimizing the need for constant human intervention.

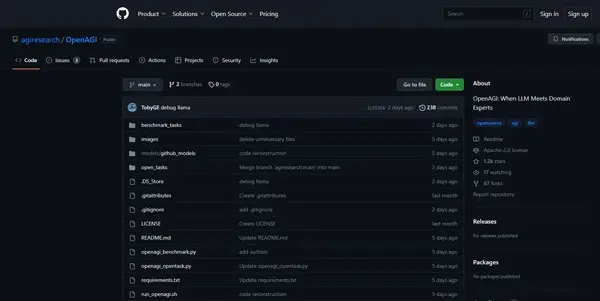

- Open-Source: The project is available on GitHub, fostering community contributions and transparency.

- Experimental Nature: The project may develop and change quickly, but it is also likely to be less stable and reliable than established commercial products.

Use Cases and Applications

While still experimental, OpenAGI's capabilities suggest a range of potential applications:

- Image Editing and Manipulation: Automating tasks like image cropping, resizing, object removal, or applying specific filters based on textual descriptions.

- Visual Question Answering: Answering complex questions that require both textual understanding and image analysis.

- Robotics and Automation: Potentially controlling robots to perform tasks based on visual feedback and textual commands.

- Content Creation: Assisting in the creation of visual content by automatically generating or modifying images according to given instructions.

- Data Annotation: Automating parts of the data annotation process by analyzing images and assigning labels based on textual definitions.
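The image-editing use case can be made concrete with a small sketch. It assumes the instruction has already been reduced to a tiny, hypothetical command syntax (`crop x y w h`, `resize w h`, `threshold level`) rather than free-form language. Turning natural language into such commands is the part an agent like OpenAGI would have to handle, and this code does not attempt it. The function names are illustrative and are not part of OpenAGI.

```python
# Toy grayscale "image": rows of pixel intensities in the range 0..255.
Image = list[list[int]]


def crop(img: Image, x: int, y: int, w: int, h: int) -> Image:
    """Keep the w-by-h region whose top-left corner is column x, row y."""
    return [row[x:x + w] for row in img[y:y + h]]


def resize(img: Image, w: int, h: int) -> Image:
    """Nearest-neighbour resize of a non-empty image to w columns and h rows."""
    src_h, src_w = len(img), len(img[0])
    return [[img[r * src_h // h][c * src_w // w] for c in range(w)]
            for r in range(h)]


def threshold(img: Image, level: int) -> Image:
    """Simple filter: pixels >= level become 255, all others become 0."""
    return [[255 if p >= level else 0 for p in row] for row in img]


# Hypothetical command names mapped to the operations above.
OPERATIONS = {"crop": crop, "resize": resize, "threshold": threshold}


def apply_instructions(img: Image, text: str) -> Image:
    """Apply ';'-separated commands such as 'crop 1 1 2 2; resize 4 4'."""
    for command in text.split(";"):
        parts = command.split()
        if not parts:
            continue  # tolerate empty commands, e.g. a trailing ';'
        name, args = parts[0].lower(), [int(a) for a in parts[1:]]
        if name not in OPERATIONS:
            raise ValueError(f"unknown command: {name!r}")
        img = OPERATIONS[name](img, *args)
    return img


photo = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
print(apply_instructions(photo, "crop 1 1 2 2; resize 4 4"))
# [[50, 50, 60, 60], [50, 50, 60, 60], [80, 80, 90, 90], [80, 80, 90, 90]]
print(apply_instructions(photo, "crop 1 1 2 2; threshold 60"))
# [[0, 255], [255, 255]]
```

Nearest-neighbour sampling keeps the example dependency-free. An image library would normally provide higher-quality resampling.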

Comparison to Similar Tools

OpenAGI stands out from many other AI tools because of its emphasis on multimodal autonomy. Several tools excel at image generation or at text-based tasks, and OpenAGI attempts to bridge the two. A precise comparison depends on which tool is chosen. In general, though, OpenAGI differs from large language models (LLMs), which primarily operate on text. It also differs from image-generation models, which lack comparable instruction comprehension and task execution.

Pricing

OpenAGI is currently available free of charge. The open-source nature of the project means there are no licensing fees or subscription costs associated with its use.

Conclusion

OpenAGI represents a significant step toward autonomous multimodal AI agents. Although it is still experimental, it has the potential to substantially change fields that involve image processing and task automation. Its open-source nature encourages community involvement and rapid progress, which makes it a project worth watching closely in the field of artificial intelligence. Further development and testing will be needed to assess its long-term viability and impact.