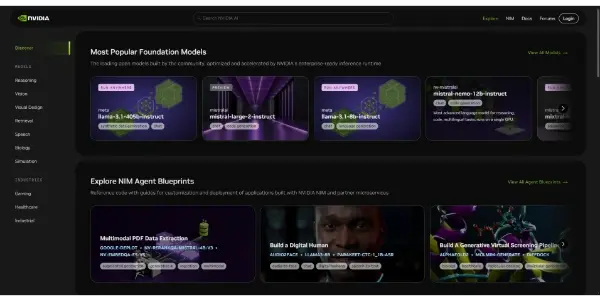

NVIDIA NIM APIs

Explore and integrate advanced AI models into your applications easily. Benefit from optimized performance and a wide range of capabilities for your projects.

NVIDIA NIM APIs: Simplifying AI Model Integration

NVIDIA NIM (NVIDIA Inference Microservices) APIs offer developers a streamlined way to integrate advanced AI models, particularly Large Language Models (LLMs), into their applications. Developers can use sophisticated models without deep expertise in model deployment and optimization: NIM APIs abstract away the complexities of infrastructure and model management, leaving them free to focus on building applications.

What NIM APIs Do

NIM APIs provide a bridge between powerful AI models and application developers. They handle the heavy lifting of:

- Model Deployment: Easily deploy pre-trained AI models, including LLMs, on NVIDIA's robust infrastructure, eliminating the need for complex setup and configuration.

- Optimization: The APIs leverage NVIDIA's hardware and software optimizations to ensure maximum performance and efficiency for your AI workloads. This translates to faster inference times and reduced resource consumption.

- Access to Diverse Models: Gain access to a growing catalog of pre-trained models, providing a wide range of capabilities readily available for integration.

- Simplified Integration: The APIs offer a user-friendly interface and well-documented SDKs, reducing the development time and complexity associated with incorporating AI models into existing applications or creating new ones.
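To make the integration step above more concrete, here is a minimal sketch of building a chat request using only the Python standard library. It assumes the service exposes an OpenAI-style `/chat/completions` route that accepts a bearer token; the base URL, the model identifier, and the function names are illustrative assumptions that do not come from this article, so check NVIDIA's documentation for the real values before relying on them.

```python
import json
import urllib.request

# Assumption: base URL of an OpenAI-style chat completions service.
# Confirm the real endpoint in NVIDIA's official documentation.
BASE_URL = "https://integrate.api.nvidia.com/v1"
# Placeholder model identifier; pick one from the model catalog you have access to.
MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request over the network and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `send(build_chat_request("Hello", api_key))` would perform the live request. If the service follows the OpenAI response shape, the generated text would be found under `choices[0]["message"]["content"]`, but that is also an assumption to verify. Keeping request construction separate from sending makes the payload easy to inspect and unit-test without network access.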

Main Features and Benefits

- High Performance: NVIDIA's optimized hardware and software stacks can deliver faster inference than deploying the same models without those optimizations.

- Ease of Use: Intuitive APIs and comprehensive documentation make integration straightforward, even for developers with limited AI expertise.

- Scalability: Easily scale your AI applications to handle increasing workloads and growing data volumes.

- Cost-Effectiveness: By optimizing performance and reducing infrastructure management overhead, NIM APIs can lead to significant cost savings.

- Access to Cutting-Edge Models: Stay up-to-date with the latest AI advancements through access to a constantly evolving library of pre-trained models.

Use Cases and Applications

NIM APIs have wide-ranging applications across various industries:

- Conversational AI: Build sophisticated chatbots and virtual assistants with natural language understanding capabilities powered by LLMs.

- Content Creation: Generate text, images, and other media types using AI models, enabling creative applications in marketing, design, and entertainment.

- Data Analysis: Utilize AI models for complex data analysis tasks, such as sentiment analysis, topic modeling, and anomaly detection.

- Healthcare: Integrate AI models for medical image analysis, diagnosis support, and personalized medicine.

- Finance: Develop AI-powered solutions for fraud detection, risk assessment, and algorithmic trading.
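As an illustration of the conversational AI use case, a chatbot typically has to resend recent conversation history with every request. The sketch below keeps that history in the role/content message format used by OpenAI-style chat APIs and trims it to a fixed window. The `Conversation` class, its field names, and the `max_messages` limit are hypothetical helpers introduced for this example, not part of any NVIDIA SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Chat history in the role/content format used by OpenAI-style chat APIs."""

    system_prompt: str
    max_messages: int = 10  # hypothetical cap on retained user/assistant messages
    turns: list = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.max_messages < 1:
            raise ValueError("max_messages must be at least 1")

    def add_user(self, text: str) -> None:
        self.turns.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.turns.append({"role": "assistant", "content": text})

    def messages(self) -> list:
        """Return the system prompt followed by the most recent messages.

        Leading assistant messages are dropped so the retained window
        always starts with a user message.
        """
        recent = self.turns[-self.max_messages:]
        while recent and recent[0]["role"] != "user":
            recent = recent[1:]
        return [{"role": "system", "content": self.system_prompt}] + recent


# Usage: record each exchange, then pass conv.messages() as the "messages"
# field of the next chat request.
conv = Conversation("You are a concise support assistant.", max_messages=4)
conv.add_user("How do I reset my password?")
conv.add_assistant("Open Settings and choose Reset Password.")
conv.add_user("And if I no longer have access to my email?")
```

A fixed message window is the simplest trimming policy. A production assistant would more likely trim by token count or summarize older turns, since context limits are expressed in tokens rather than messages.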

Comparison to Similar Tools

While several other platforms offer AI model deployment capabilities, NVIDIA NIM APIs differentiate themselves through:

- Superior Performance: Running on NVIDIA GPUs with matching software optimizations can provide a significant performance advantage over CPU-based solutions and over cloud platforms that lack comparable hardware-level tuning.

- Tight Integration with NVIDIA Ecosystem: Seamless integration with other NVIDIA tools and technologies simplifies workflow and enables end-to-end optimization.

- Focus on LLM Support: A strong focus on Large Language Model deployment and optimization sets NIM apart, which matters given the growing prominence of LLMs. A detailed, feature-by-feature comparison with competitors such as AWS SageMaker, Google AI Platform, and Azure Machine Learning is beyond the scope of this overview.

Pricing Information

NVIDIA NIM APIs operate on a freemium model: basic usage and access to certain models may be free, while more advanced features, higher throughput, and specific high-performance models may require a paid subscription. For exact pricing, consult NVIDIA's official documentation and pricing pages.

Conclusion

NVIDIA NIM APIs offer a compelling solution for developers seeking to seamlessly integrate advanced AI models into their applications. The platform's emphasis on performance, ease of use, and access to cutting-edge models positions it as a valuable tool for accelerating AI development across a wide range of industries. However, a thorough review of the pricing structure and specific model availability is recommended before integrating NIM APIs into production applications.