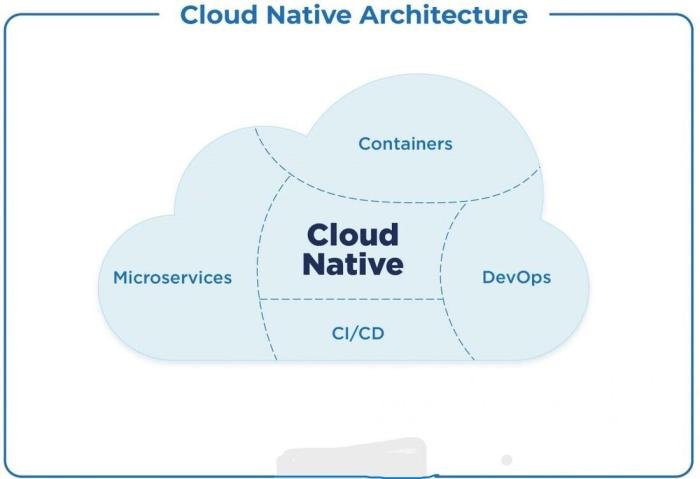

Cloud native architecture is a transformative approach to building and deploying applications. It moves away from traditional monolithic structures toward a dynamic, scalable, and resilient model that takes full advantage of the cloud. Understanding its key principles is essential for organizations seeking to modernize their IT infrastructure and achieve greater agility.

This exploration examines the core tenets of cloud native architecture, including microservices, containerization, automation, Infrastructure as Code (IaC), and observability. We then look at API-first design, service meshes, statelessness, and security considerations, and finally turn to cloud native databases, resilience, and fault tolerance to round out the picture.

Microservices Architecture

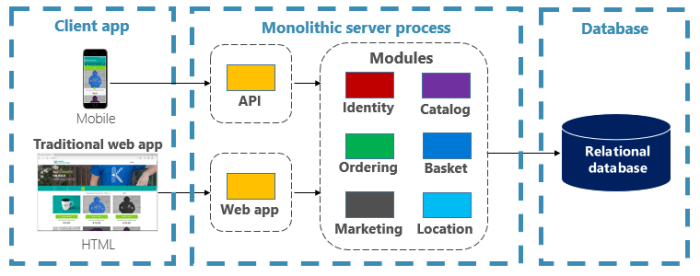

Microservices architecture is a crucial element of cloud native applications: it builds complex systems from a collection of independently deployable services. This approach contrasts sharply with traditional monolithic designs and offers significant advantages in scalability, resilience, and development agility, so understanding it is essential to realizing the full potential of cloud native technologies.

Microservices Concept and Role

Microservices represent a software development approach where an application is structured as a collection of loosely coupled services. Each service is a small, self-contained unit that focuses on a specific business capability. These services communicate with each other, typically over a network using lightweight mechanisms such as REST APIs. This architecture allows for independent development, deployment, and scaling of each service.

Microservices play a pivotal role in cloud native architecture by:

- Enabling Agility: Microservices promote faster development cycles and easier updates. Small teams can independently develop, deploy, and maintain individual services without affecting the entire application.

- Improving Scalability: Individual services can be scaled independently based on their specific needs. This allows for efficient resource allocation and optimized performance.

- Enhancing Resilience: The failure of one microservice does not necessarily bring down the entire application. Other services can continue to function, ensuring high availability.

- Supporting Technology Diversity: Different microservices can be built using different technologies and programming languages, allowing teams to choose the best tools for the job.

Monolithic vs. Microservices Architectures

The shift from monolithic to microservices architectures offers significant advantages. A comparison of the two approaches highlights these differences:

| Feature | Monolithic | Microservices |
|---|---|---|
| Deployment | Single unit; all components are deployed together. | Each service is deployed independently. |
| Scalability | The entire application is scaled, even if only a small part needs more resources. | Individual services are scaled independently based on demand. |
| Technology Stack | Typically uses a single technology stack. | Different services can use different technology stacks. |
| Development Speed | Slower release cycles, because every change requires redeploying the entire application. | Faster release cycles, with independent deployments and updates. |
| Fault Isolation | A failure in one component can bring down the entire application. | Faults are largely contained within individual services, limiting their impact. |
| Complexity | Becomes complex and difficult to manage as the application grows. | Each service is simpler, but the system as a whole adds operational complexity (service-to-service networking, distributed data, monitoring). |

Scalability and Resilience through Microservices

Microservices contribute significantly to scalability and resilience in cloud native applications.

- Scalability: Microservices enable horizontal scaling, allowing you to add more instances of a service as needed. For example, if a specific service experiences high traffic, you can deploy additional instances of that service without affecting other parts of the application. This is particularly beneficial for handling peak loads. Amazon, for instance, leverages microservices to scale its various functionalities during events like Prime Day, ensuring a seamless user experience even with massive traffic surges.

- Resilience: The independent nature of microservices enhances resilience. If one service fails, it does not necessarily bring down the entire application. A common safeguard is the circuit breaker: after repeated failures, it stops sending calls to the failing service for a cool-down period and either fails fast or returns a fallback response, which keeps the failure from cascading to the services that depend on it. Netflix is a prime example of a company that relies heavily on microservices and circuit breakers to maintain high availability and prevent outages, even when individual services experience issues.

- Fault Tolerance: Microservices can be designed to be fault-tolerant. This means they can continue to function even when parts of the system fail. This is achieved through redundancy, where multiple instances of a service are running. If one instance fails, the others can continue to handle requests.
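To make the circuit-breaker idea concrete, here is a minimal Python sketch of the pattern. It is not Netflix's implementation, and the class name, thresholds, and `fallback` parameter are illustrative assumptions. After `failure_threshold` consecutive failures the breaker opens and fails fast; once `reset_timeout` seconds have passed it lets one trial call through (half-open) and closes again if that call succeeds.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """CLOSED -> OPEN after `failure_threshold` consecutive failures;
    OPEN -> HALF_OPEN once `reset_timeout` seconds have passed;
    HALF_OPEN -> CLOSED on a successful trial call (or back to OPEN on failure)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so the sketch can be tested without sleeping
        self.state = "CLOSED"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T], fallback: Optional[Callable[[], T]] = None) -> T:
        if self.state == "OPEN":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "HALF_OPEN"  # let one trial request through
            elif fallback is not None:
                return fallback()  # fail fast: the dependency is not called
            else:
                raise CircuitOpenError("circuit open; call skipped")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
                self.state = "OPEN"
                self.opened_at = self.clock()
            if fallback is not None:
                return fallback()
            raise
        self.state = "CLOSED"  # success closes the circuit and resets the count
        self.failures = 0
        return result


# Usage: a recommendations call that degrades to an empty list while the service is down.
breaker = CircuitBreaker(failure_threshold=3, reset_timeout=30.0)
recommendations = breaker.call(lambda: ["item-1", "item-2"], fallback=lambda: [])
```

Injecting the clock keeps the sketch deterministic to test; production libraries typically add per-call timeouts and concurrency limits on the half-open trial.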

Containerization and Orchestration

Containerization and orchestration are foundational to cloud native architectures. They enable the efficient packaging, deployment, and management of applications, offering significant advantages in terms of scalability, portability, and resource utilization. This section delves into the crucial roles these technologies play.

Importance of Containerization

Containerization, primarily utilizing technologies like Docker, is vital for cloud native applications. It provides a consistent and isolated environment for applications and their dependencies.

“Containers package code and all its dependencies so the application runs quickly and reliably from one computing environment to another.”

Docker Documentation

This consistency is achieved through the following:

- Isolation: Containers isolate applications from the underlying infrastructure and other applications, preventing conflicts and ensuring predictable behavior.

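- Portability: Containers package everything an application needs to run, so the same image runs consistently across environments (development, testing, production). Because containers share the host’s kernel, this “write once, run anywhere” portability applies across hosts with a compatible kernel and CPU architecture (for example, a Linux x86-64 image runs on any Linux x86-64 host), and it significantly simplifies deployment.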

- Efficiency: Containers share the host operating system’s kernel, making them lightweight and resource-efficient compared to virtual machines, which require a full operating system per instance.

- Versioning: Container images are immutable and identified by tags and content digests, which makes it easy to roll back to a previous version and fits naturally into continuous integration and continuous deployment (CI/CD) pipelines.

A practical example is a web application. Without containerization, deploying this application might involve configuring a specific operating system, installing dependencies (like Python, Node.js, or Java runtime environments), and managing configuration files on each server. With Docker, the application and all its dependencies are packaged into a container image. This image can then be deployed consistently across any environment that supports Docker, streamlining the deployment process and minimizing the risk of configuration drift.

Benefits of Using Container Orchestration Tools

Container orchestration tools, such as Kubernetes, are essential for managing containerized applications at scale. They automate and streamline various aspects of container lifecycle management. The key benefits include:

- Automated Deployment: Orchestration tools automate the deployment of containerized applications, including the creation of pods (the smallest deployable unit in Kubernetes), replication, and rolling updates.

- Scaling: These tools automatically scale applications up or down based on demand, ensuring optimal resource utilization and responsiveness. They can monitor resource usage (CPU, memory) and automatically adjust the number of running container instances.

- Self-Healing: Orchestration platforms monitor the health of containers and automatically restart or replace unhealthy containers, ensuring high availability and resilience.

- Service Discovery: They provide mechanisms for containers to discover and communicate with each other, simplifying service-to-service communication within the application.

- Resource Management: Orchestration tools efficiently manage the allocation of resources (CPU, memory, storage) to containers, optimizing resource utilization and preventing resource contention.

For example, imagine a popular e-commerce website. During peak shopping seasons, the website experiences a surge in traffic. Kubernetes can automatically scale the application’s containerized components (e.g., web servers and API services) to handle the increased load. When the traffic subsides, Kubernetes can scale down the resources, optimizing resource consumption and reducing costs.

Kubernetes Automation of Deployment, Scaling, and Management

Kubernetes automates various aspects of containerized application management, significantly simplifying the operational burden. It provides a declarative approach to managing applications, where users define the desired state of the application, and Kubernetes works to achieve that state. The automation features include:

- Automated Deployment: Kubernetes uses Deployment objects to manage the rollout of applications. Users define the desired state (e.g., the number of replicas and the container image), and Kubernetes handles the creation, updating, and scaling of pods. Rolling updates replace pods gradually, so with readiness probes in place, a new version can be rolled out without interrupting service.

- Automated Scaling: Kubernetes supports horizontal pod autoscaling (HPA), which automatically scales the number of pods based on resource utilization (e.g., CPU usage) or custom metrics. This ensures that the application can handle fluctuating workloads. For instance, an HPA can be configured to increase the number of web server pods if the average CPU utilization exceeds a certain threshold.

- Automated Management: Kubernetes provides health checks and self-healing capabilities. If a container fails, Kubernetes restarts it automatically, and if a node fails, pods managed by controllers such as Deployments are rescheduled onto healthy nodes. Kubernetes also exposes container logs and basic resource metrics, which dedicated monitoring and logging tools build on to help manage and troubleshoot applications.

- Service Discovery and Load Balancing: Kubernetes automatically provides service discovery and load balancing. Services provide a stable endpoint for accessing a set of pods, and Kubernetes automatically distributes traffic across the pods. This ensures high availability and simplifies service-to-service communication.
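The Kubernetes documentation describes the core HPA calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a simplified model of that rule for illustration only: it ignores stabilization windows, pod readiness, and missing metrics, and the function and parameter names are our own.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale in proportion to how far the metric is from target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave replicas alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect the configured bounds


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

Rounding up with `ceil` errs on the side of extra capacity, which is why a small overshoot of the target still adds a pod once it exceeds the tolerance.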

A practical illustration is a microservices-based application deployed on Kubernetes. The application comprises several microservices, each packaged in its own container. Kubernetes manages the deployment, scaling, and health of these microservices. If a microservice’s container crashes or fails its liveness probe, Kubernetes restarts it automatically. If the traffic to a specific microservice increases, Kubernetes can automatically scale up the number of pods for that microservice to handle the increased load, maintaining optimal performance and user experience.
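Kubernetes controllers implement this declarative model as control loops: they repeatedly compare the desired state with what is actually running and act to close the gap. The toy, in-memory Python sketch below shows one reconcile pass for the scenario just described. `Pod`, `Cluster`, and `reconcile` are hypothetical names, not Kubernetes APIs, and the sketch simply replaces unhealthy pods, whereas the kubelet first restarts failed containers in place.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    service: str
    name: str
    healthy: bool = True  # stands in for the result of a liveness probe


@dataclass
class Cluster:
    pods: List[Pod] = field(default_factory=list)
    next_id: int = 1

    def start_pod(self, service: str) -> Pod:
        pod = Pod(service, f"{service}-{self.next_id}")
        self.next_id += 1
        self.pods.append(pod)
        return pod


def reconcile(desired: Dict[str, int], cluster: Cluster) -> List[str]:
    """One pass of a control loop: remove unhealthy pods, then start or stop
    pods until every service runs its desired number of replicas."""
    actions: List[str] = []
    for pod in [p for p in cluster.pods if not p.healthy]:
        cluster.pods.remove(pod)
        actions.append(f"delete {pod.name} (unhealthy)")
    for service, want in desired.items():
        running = [p for p in cluster.pods if p.service == service]
        for _ in range(want - len(running)):  # scale up (no-op if enough are running)
            actions.append(f"create {cluster.start_pod(service).name}")
        for pod in running[want:]:  # scale down any surplus replicas
            cluster.pods.remove(pod)
            actions.append(f"delete {pod.name} (scale down)")
    return actions


cluster = Cluster()
desired = {"cart": 3}
reconcile(desired, cluster)         # creates cart-1, cart-2, cart-3
cluster.pods[0].healthy = False     # simulate a crashed container
print(reconcile(desired, cluster))  # ['delete cart-1 (unhealthy)', 'create cart-4']
print(reconcile(desired, cluster))  # [] (already converged)
```

Because each pass only reacts to the difference between desired and observed state, running it repeatedly is safe: once the cluster has converged, it does nothing.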

Automation and DevOps Practices

Cloud native architecture thrives on speed, agility, and scalability. Automation and DevOps practices are crucial enablers of these characteristics. They streamline the software development lifecycle, reduce manual intervention, and facilitate continuous delivery. This section will explore the pivotal role of automation and the implementation of DevOps practices within a cloud native context.

Automation in Cloud Native Environments

Automation is fundamental to cloud native development. It encompasses automating various aspects of the software lifecycle, from infrastructure provisioning and application deployment to testing and monitoring. This automation eliminates repetitive manual tasks, reduces the potential for human error, and allows developers to focus on building and improving applications. Benefits of automation include:

- Increased Efficiency: Automated processes are significantly faster than manual processes, leading to quicker deployments and faster feedback loops.

- Reduced Errors: Automation minimizes human error, ensuring consistency and reliability across the environment.

- Improved Scalability: Automation allows for the rapid scaling of resources to meet changing demands.

- Faster Time-to-Market: Automated processes accelerate the development and deployment cycles, enabling faster releases and quicker responses to market needs.

- Enhanced Resource Utilization: Automation optimizes resource allocation, reducing waste and improving cost efficiency.

Tools commonly used for automation in cloud native environments include infrastructure-as-code (IaC) tools like Terraform and Ansible for provisioning and managing infrastructure, configuration management tools like Chef and Puppet for automating server configuration, and CI/CD pipelines for automating the build, test, and deployment processes.

DevOps Practices (CI/CD) for Streamlined Application Delivery

DevOps is a set of practices that combines software development (Dev) and IT operations (Ops) to shorten the systems development life cycle and provide continuous delivery with high software quality. Continuous Integration and Continuous Delivery (CI/CD) are core components of DevOps, enabling frequent and reliable software releases. CI/CD pipelines automate the build, test, and deployment phases, facilitating a faster and more efficient release cycle. The CI/CD pipeline generally follows these steps:

- Continuous Integration (CI): Developers frequently merge their code changes into a central repository. Automated builds and tests are triggered with each commit. This ensures that code changes are integrated and tested frequently, identifying and resolving integration issues early in the development process.

- Continuous Delivery (CD): After successful integration and testing, the software is automatically prepared for release. This often involves packaging the application, creating deployment artifacts, and configuring the deployment environment. The software is then always in a deployable state, and the release to production is triggered by a manual approval.

- Continuous Deployment (CD): In a continuous deployment model, the software is automatically deployed to production after passing all tests and checks. This is the most automated form of CD.

The implementation of CI/CD offers significant advantages:

- Faster Releases: Frequent and automated releases enable faster delivery of new features and bug fixes.

- Reduced Risk: Small, incremental changes are easier to manage and troubleshoot than large, infrequent releases.

- Improved Quality: Automated testing ensures that code changes meet quality standards.

- Increased Collaboration: CI/CD promotes collaboration between development and operations teams.

- Faster Feedback: Developers receive rapid feedback on their code changes, allowing for quick iterations and improvements.

Designing a CI/CD Pipeline for a Sample Cloud Native Application

Let’s consider a sample cloud native application: a simple web application built using microservices, containerized with Docker, and orchestrated using Kubernetes. We will design a CI/CD pipeline for this application using a tool like Jenkins, GitLab CI, or CircleCI. Here’s a sample CI/CD pipeline breakdown:

- Code Commit: Developers commit code changes to a Git repository (e.g., GitHub, GitLab).

- Trigger: The CI/CD pipeline is triggered automatically upon a code commit or a scheduled event.

- Build: The build stage installs dependencies, compiles the source code, and produces build artifacts (e.g., binaries or packages) for each microservice.

- Unit Tests: Automated unit tests are executed to verify the functionality of individual components.

- Integration Tests: Integration tests verify the interaction between different components or microservices.

- Code Analysis: Static code analysis tools (e.g., SonarQube) analyze the code for quality, security vulnerabilities, and coding standards compliance.

- Containerization: Docker images are built for each microservice, containing the application code and its dependencies.

- Image Registry: The Docker images are pushed to a container registry (e.g., Docker Hub, AWS ECR, Google Container Registry).

- Deployment to Staging Environment: The application is deployed to a staging environment (Kubernetes cluster) for further testing and validation.

- User Acceptance Testing (UAT): User acceptance tests are performed in the staging environment.

- Automated Security Testing: Security scans and vulnerability assessments are conducted to ensure the application meets security requirements.

- Deployment to Production: If all tests pass, the application is automatically deployed to the production environment (Kubernetes cluster). This may involve blue/green deployments or rolling updates.

- Monitoring and Alerting: Monitoring tools track application performance, resource utilization, and potential issues. Alerts are triggered based on predefined thresholds.

- Rollback: In case of issues during deployment, the pipeline includes mechanisms for automated rollback to the previous stable version.

This pipeline demonstrates the automation of the software delivery process, ensuring rapid, reliable, and efficient releases. The choice of specific tools and the exact steps may vary depending on the application’s complexity and the organization’s specific needs.
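The stage ordering, and the difference between continuous delivery and continuous deployment, can be modeled in a few lines of tool-agnostic Python. This is a sketch under simplifying assumptions, not a Jenkins, GitLab CI, or CircleCI configuration: stages are plain callables that return `True` on success, and `deploy`, `rollback`, and `approved` are hypothetical hooks.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True if the stage succeeded


def run_pipeline(stages: List[Stage], deploy: Callable[[], bool],
                 rollback: Callable[[], None], continuous_deployment: bool = True,
                 approved: Optional[Callable[[], bool]] = None) -> str:
    """Run stages in order, stopping at the first failure.

    continuous_deployment=True: a green pipeline deploys to production automatically.
    continuous_deployment=False (continuous delivery): production deploys wait for approval.
    """
    for stage in stages:
        if not stage.run():
            return f"failed at {stage.name}"
    if not continuous_deployment and not (approved is not None and approved()):
        return "ready for release (awaiting approval)"
    if not deploy():
        rollback()  # restore the previous stable version
        return "deploy failed; rolled back"
    return "deployed"


def ok(name: str) -> Stage:
    """A stage that always passes (stand-in for a real build or test step)."""
    return Stage(name, lambda: True)


stages = [ok(n) for n in ["build", "unit-tests", "integration-tests", "code-analysis",
                          "container-image", "push-image", "deploy-staging", "uat",
                          "security-scan"]]
print(run_pipeline(stages, deploy=lambda: True, rollback=lambda: None))  # deployed
```

Failing fast at the first red stage keeps feedback quick and guarantees that nothing reaches production without passing every earlier check.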

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is a cornerstone of cloud native architecture, representing a shift from manual infrastructure management to automated, code-driven provisioning and configuration. This approach treats infrastructure, such as servers, networks, and storage, as code, enabling version control, automated testing, and streamlined deployments. IaC empowers organizations to manage their infrastructure more efficiently, reliably, and consistently.

IaC Tools and Management

IaC tools are used to define and manage infrastructure through code. These tools allow engineers to describe the desired state of their infrastructure in a declarative manner, enabling automation of infrastructure provisioning and management tasks. IaC tools typically offer the following capabilities:

- Declarative Configuration: IaC tools allow users to define the desired state of the infrastructure, rather than specifying the steps required to achieve that state. This approach simplifies configuration management and reduces the risk of errors.

- Automation: IaC tools automate the provisioning and configuration of infrastructure resources, reducing manual effort and human error.

- Version Control: Infrastructure configurations are stored as code, allowing for version control, tracking changes, and easy rollback to previous states.

- Collaboration: IaC tools facilitate collaboration among team members, as infrastructure configurations can be shared and reviewed like any other code.

- Idempotency: IaC tools are designed to be idempotent, meaning that applying the same configuration multiple times produces the same result; once the infrastructure matches the configuration, re-applying it changes nothing.
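Declarative tools get this idempotency by diffing the desired configuration against the recorded current state and acting only on the differences. The Python sketch below illustrates that plan-then-apply idea with dictionaries standing in for real resources; it is not how Terraform or CloudFormation are implemented, and the resource names are hypothetical.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]  # resource name -> attributes


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Compute the changes needed to turn `current` into `desired`."""
    changes: List[Tuple[str, str]] = []
    for name, attrs in desired.items():
        if name not in current:
            changes.append(("create", name))
        elif current[name] != attrs:
            changes.append(("update", name))
    for name in current:
        if name not in desired:
            changes.append(("delete", name))
    return changes


def apply(desired: Resources, current: Resources) -> Resources:
    """Converge the (simulated) infrastructure on the desired state."""
    for action, name in plan(desired, current):
        if action == "delete":
            del current[name]
        else:  # create or update
            current[name] = dict(desired[name])
    return current


desired = {"vm-web": {"size": "small"}, "bucket-logs": {"versioning": "on"}}
current = {"vm-web": {"size": "micro"}, "vm-old": {"size": "small"}}
print(plan(desired, current))  # [('update', 'vm-web'), ('create', 'bucket-logs'), ('delete', 'vm-old')]
apply(desired, current)
print(plan(desired, current))  # [] -> re-applying would change nothing
```

The empty second plan is the idempotency property in action: the configuration describes an end state, so applying it again has nothing left to do.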

Several popular IaC tools are available, including:

- Terraform: Terraform is a widely adopted tool for infrastructure provisioning. It supports many cloud providers through provider plugins and lets users define infrastructure in a declarative configuration language (HCL). Terraform records the current state of the infrastructure in a state file, which it uses to work out what must change on each update. A key advantage is that a single tool and workflow can manage infrastructure across multiple cloud providers, although each resource definition remains specific to its provider.

- AWS CloudFormation: CloudFormation is a service provided by Amazon Web Services (AWS) for provisioning and managing infrastructure resources on AWS. It uses templates, written in YAML or JSON, to define infrastructure configurations. CloudFormation handles the creation, modification, and deletion of resources, ensuring that the infrastructure remains in the desired state. CloudFormation’s tight integration with AWS services makes it a powerful tool for managing AWS-specific infrastructure.

- Ansible: Ansible is an open-source automation engine that can be used for IaC. It describes configurations in YAML playbooks whose tasks run in order, and most of its modules are idempotent, so playbooks can be re-run safely across multiple servers. Ansible is agentless: it connects to managed hosts over standard protocols such as SSH, which simplifies deployment and management.

Benefits of IaC

IaC offers significant benefits for cloud native environments, improving efficiency, reliability, and agility.

- Version Control: IaC enables version control of infrastructure configurations, similar to application code. This allows for tracking changes, reverting to previous configurations, and collaborating effectively. Using tools like Git for version control provides a complete history of infrastructure changes, enabling easy rollback and auditing.

- Reproducibility: IaC ensures that infrastructure can be consistently reproduced across different environments (e.g., development, testing, production). This consistency reduces the risk of environment-specific issues and improves the reliability of deployments. The same IaC code can be used to provision identical infrastructure in multiple regions or for disaster recovery purposes.

- Automation: IaC automates infrastructure provisioning and configuration, reducing manual effort and the potential for human error. Automation speeds up deployment cycles and allows for faster scaling of infrastructure to meet changing demands. Automated deployments can be integrated into CI/CD pipelines, enabling continuous delivery of infrastructure changes.

Observability and Monitoring

In cloud native architectures, understanding the internal workings of your applications and infrastructure is crucial. This understanding, achieved through observability and monitoring, allows you to proactively identify and resolve issues, optimize performance, and ensure the overall health and reliability of your systems. Without robust observability, debugging and troubleshooting become significantly more challenging, potentially leading to costly downtime and a poor user experience.

Key Components of Observability

Observability is built upon three core pillars: logging, monitoring, and tracing. Each provides a different perspective on the system’s behavior, and when combined, they offer a comprehensive view.

- Logging: Logging involves capturing discrete events within the system. These events are typically recorded as text messages with timestamps, severity levels (e.g., INFO, WARNING, ERROR), and contextual information (e.g., user ID, request ID). Effective logging helps to reconstruct the sequence of events that led to a specific outcome, whether it’s a successful transaction or an error. Logs are essential for debugging, auditing, and understanding the application’s internal state.

- Monitoring: Monitoring involves collecting and analyzing metrics that reflect the system’s performance and health. Metrics can include CPU utilization, memory usage, network latency, request rates, and error rates. Monitoring tools typically visualize these metrics in dashboards, allowing operators to quickly identify anomalies and trends. Alerting systems can be configured to automatically notify operators when predefined thresholds are breached, enabling proactive intervention.

- Tracing: Tracing provides a way to follow a request as it traverses a distributed system. It involves assigning a unique identifier (trace ID) to each request and propagating this ID across all services involved in handling the request. Each service then emits spans, which represent individual operations performed as part of the request’s processing. By correlating spans across services, tracing tools allow developers to understand the end-to-end flow of a request, identify performance bottlenecks, and diagnose issues that span multiple components.
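The sketch below shows, in plain Python, how a trace ID ties logs and spans together: child spans inherit the trace ID of their parent, and every structured (JSON) log line records the active trace ID. It is an illustrative in-process model with hypothetical names (`span`, `log_event`, `finished_spans`); real systems typically use a tracing library such as OpenTelemetry and propagate context between services in request headers (for example, the W3C `traceparent` header).

```python
import json
import logging
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from typing import Dict, Iterator, List, Optional

# Span active in the current execution context (hypothetical in-process tracer).
_current_span = ContextVar("current_span", default=None)
finished_spans: List[Dict[str, str]] = []


@contextmanager
def span(name: str) -> Iterator[Dict[str, str]]:
    """Open a span; a child span inherits its parent's trace ID."""
    parent: Optional[Dict[str, str]] = _current_span.get()
    current = {
        "name": name,
        "trace_id": parent["trace_id"] if parent else uuid.uuid4().hex,  # new trace at the entry point
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": parent["span_id"] if parent else "",
    }
    token = _current_span.set(current)
    try:
        yield current
    finally:
        _current_span.reset(token)
        finished_spans.append(current)  # a real tracer would export this to a backend


def log_event(level: int, message: str, **fields: str) -> str:
    """Emit a structured JSON log line tagged with the active trace ID, if any."""
    record = {"level": logging.getLevelName(level), "msg": message, **fields}
    active = _current_span.get()
    if active is not None:
        record["trace_id"] = active["trace_id"]
    line = json.dumps(record)
    logging.getLogger("app").log(level, line)
    return line


with span("checkout") as root:
    log_event(logging.INFO, "order received", order_id="o-1")
    with span("charge-card") as child:
        log_event(logging.INFO, "charging card", order_id="o-1")
```

Searching the logs for one `trace_id` then returns every line written while handling that request, which is the correlation the three pillars rely on.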

Monitoring Strategy for a Cloud Native Application

Developing a robust monitoring strategy is crucial for the operational success of any cloud native application. This strategy should cover various aspects of the application and infrastructure, providing insights into performance, health, and resource utilization. A well-defined strategy will allow you to proactively identify and resolve issues before they impact users.

Here is a sample monitoring strategy, presented in a table format:

| Metric | Tool | Threshold | Action |
|---|---|---|---|
| CPU Utilization (per pod) | Prometheus, Grafana | > 80% for 5 minutes | Scale the workload horizontally (add pod replicas). |
| Memory Usage (per pod) | Prometheus, Grafana | > 90% for 5 minutes | Scale horizontally (add replicas) or vertically (increase resource requests/limits). |
| Request Latency (95th percentile) | Prometheus, Grafana | > 1 second | Investigate performance bottlenecks in the application code, database queries, or network infrastructure. Consider caching or code optimization. |
| Error Rate (per service) | Prometheus, Grafana | > 1% of requests | Investigate application logs for error messages. Deploy a fix or roll back to the previous version. |
| Database Connection Pool Utilization | Prometheus, Grafana | > 90% of connections in use | Increase the maximum number of database connections. Optimize database queries. |
| Disk I/O Utilization (per node) | Prometheus, Grafana | > 80% | Identify and optimize I/O-intensive operations, or move to higher-throughput storage. |
| Service Availability | Prometheus, Grafana | < 99.9% | Review application health checks and dependencies. Investigate the underlying infrastructure. |
| Number of Active Users | Custom metrics, Grafana | Sudden decrease or increase beyond expected bounds | Investigate the cause of the change and review marketing and sales data. Check for issues with authentication and authorization. |
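Several rows of the table above rely on small calculations: a threshold must be breached continuously for a duration before acting, latency is judged at the 95th percentile, and an availability target implies a downtime budget. The Python helpers below sketch all three. They are illustrative approximations (Prometheus evaluates alert rules with its own `for` semantics), and the function names and sample data are our own.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, metric value)


def sustained_breach(samples: Sequence[Sample], threshold: float, duration_s: float) -> bool:
    """True if the value has stayed above `threshold` for at least `duration_s`
    seconds up to the newest sample; any dip below the threshold resets the clock."""
    breach_start = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts
        else:
            breach_start = None
    return breach_start is not None and samples[-1][0] - breach_start >= duration_s


def percentile_95(values: List[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def error_budget_minutes(availability_target: float, window_days: int = 30) -> float:
    """Downtime allowed by an availability target over a window."""
    return (1 - availability_target) * window_days * 24 * 60


# CPU samples for one pod, one per minute: above 80% from t=60s onward.
cpu = [(0, 70.0), (60, 85.0), (120, 86.0), (180, 90.0), (240, 88.0), (300, 91.0), (360, 92.0)]
print(sustained_breach(cpu, threshold=80.0, duration_s=300))  # True: above 80% for 300 s
print(error_budget_minutes(0.999))  # about 43.2 minutes per 30 days
```

The budget arithmetic is worth internalizing: 0.1% of a 30-day window (43,200 minutes) is 43.2 minutes, so a 99.9% target leaves well under an hour of downtime per month.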

API-First Design

API-First Design is a fundamental principle in cloud-native development, emphasizing the creation and design of APIs as the primary building blocks of an application. This approach prioritizes the definition of API contracts before the implementation of the underlying services, ensuring a clear and consistent interface for all interactions within the system. This methodology fosters modularity, scalability, and reusability, crucial characteristics for successful cloud-native architectures.

Principles of API-First Design

API-First Design is guided by several core principles. These principles shape how APIs are designed, developed, and deployed, promoting interoperability and efficiency.

- Contract-Driven Development: The API contract, often defined using specifications like OpenAPI (formerly Swagger), is the single source of truth. Development begins with defining the API contract, specifying the endpoints, data formats (e.g., JSON, XML), and authentication methods.

- Modularity and Loose Coupling: APIs enable a modular architecture where services are independent and loosely coupled. Each service exposes its functionality through well-defined APIs, allowing changes to one service without affecting others, as long as the API contract remains compatible.

- Reusability: APIs promote the reuse of services across different applications and platforms. Services designed with a well-defined API can be easily integrated into new projects, reducing development time and effort.

- Versioning: APIs are versioned to manage changes and ensure backward compatibility. Versioning allows developers to update services without breaking existing integrations. This can be achieved through URL versioning (e.g., `/v1/users`) or header-based versioning.

- Documentation: Comprehensive and up-to-date documentation is crucial for API-First Design. Documentation, often generated from the API contract, enables developers to understand and use the API effectively. Tools like Swagger UI and Postman are often used to explore and test APIs.

- Security: Security is a critical consideration. APIs must be secured using appropriate authentication and authorization mechanisms. This includes using techniques such as OAuth 2.0, API keys, and rate limiting to protect against unauthorized access and abuse.
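URL versioning lets a breaking change ship as a new version while existing clients keep using the old contract. The Python sketch below routes `/v1/users/{id}` and `/v2/users/{id}` to separate handlers over the same data; the handlers, field names, and in-memory `USERS` store are hypothetical, and in practice a web framework and an OpenAPI document would define these routes.

```python
from typing import Any, Callable, Dict, Tuple

Handler = Callable[[str], Dict[str, Any]]

# Hypothetical in-memory store shared by both API versions.
USERS: Dict[str, Dict[str, str]] = {"42": {"given_name": "Ada", "family_name": "Lovelace"}}


def get_user_v1(user_id: str) -> Dict[str, Any]:
    """v1 contract: {id, name}. Left unchanged so existing clients keep working."""
    user = USERS[user_id]
    return {"id": user_id, "name": f"{user['given_name']} {user['family_name']}"}


def get_user_v2(user_id: str) -> Dict[str, Any]:
    """v2 contract: the name is split into two fields, a breaking change for v1 clients."""
    user = USERS[user_id]
    return {"id": user_id, "givenName": user["given_name"], "familyName": user["family_name"]}


ROUTES: Dict[Tuple[str, str], Handler] = {
    ("v1", "users"): get_user_v1,
    ("v2", "users"): get_user_v2,
}


def dispatch(path: str) -> Tuple[int, Dict[str, Any]]:
    """Route paths like '/v1/users/42' to the handler for that version and resource."""
    parts = path.strip("/").split("/")
    if len(parts) != 3 or (parts[0], parts[1]) not in ROUTES:
        return 404, {"error": "unknown route"}
    version, resource, item_id = parts
    try:
        return 200, ROUTES[(version, resource)](item_id)
    except KeyError:  # the requested item does not exist
        return 404, {"error": "not found"}


print(dispatch("/v1/users/42"))  # (200, {'id': '42', 'name': 'Ada Lovelace'})
print(dispatch("/v2/users/42"))  # (200, {'id': '42', 'givenName': 'Ada', 'familyName': 'Lovelace'})
```

Both versions read the same underlying data; only the contract differs, so v1 can be retired on its own schedule once its clients have migrated.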

Facilitating Communication Between Microservices Through APIs

APIs are the primary means of communication between microservices in a cloud-native application. They act as the bridges that enable services to interact with each other, allowing them to exchange data and trigger actions.

- Data Exchange: Microservices use APIs to exchange data. For example, a “User Service” might expose an API endpoint to retrieve user profile information, which can be accessed by other services like an “Order Service” to display user details related to an order. Data formats like JSON are commonly used for data exchange.

- Service Orchestration: APIs facilitate the orchestration of multiple microservices to perform a complex task. For example, an “Order Service” might call the “Payment Service” to charge the customer and then call a “Shipping Service” to initiate the shipment.

- Event-Driven Communication: Services can also communicate through events instead of direct calls. When a user places an order, the “Order Service” might publish an “order placed” event to a message broker, and other services subscribe to it, such as the “Inventory Service”, which updates stock levels.

- Decoupling of Services: APIs decouple services, enabling them to evolve independently. Changes to one service’s internal implementation do not affect other services as long as the API contract remains consistent.
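The event-driven style in the list above can be sketched with an in-memory publish/subscribe bus. In this illustrative Python example, the Order Service publishes an `order.placed` event and the Inventory Service updates stock in response; the `EventBus` class, topic name, and SKU are hypothetical. A real broker such as Kafka or RabbitMQ delivers events asynchronously and durably, which this synchronous sketch does not attempt.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Synchronous, in-memory stand-in for a message broker (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # The publisher does not know (or care) which services are listening.
        for handler in self._subscribers[topic]:
            handler(event)


# Inventory Service (hypothetical): keeps stock levels up to date.
stock: Dict[str, int] = {"sku-123": 10}


def on_order_placed(event: Event) -> None:
    for sku, quantity in event["items"].items():
        stock[sku] -= quantity


bus = EventBus()
bus.subscribe("order.placed", on_order_placed)

# Order Service (hypothetical): announces the order and moves on.
bus.publish("order.placed", {"order_id": "o-1", "items": {"sku-123": 2}})
print(stock)  # {'sku-123': 8}
```

Adding another consumer, such as a notification service, only requires a new `subscribe` call; the Order Service code does not change, which is the decoupling the list describes.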

Diagram Illustrating API Interactions Within a Cloud Native Application

The following diagram illustrates the interaction between several microservices within a hypothetical e-commerce application. The diagram demonstrates how different microservices communicate with each other via APIs to fulfill a user’s request.

The diagram depicts a user interacting with a “Web Application” (Frontend) that utilizes several microservices. The “Web Application” acts as the entry point for user requests. It interacts with various backend services through APIs.

The following microservices are included in the diagram:

- User Service: Manages user accounts and profiles.

- Product Catalog Service: Provides information about available products.

- Order Service: Handles order creation, management, and processing.

- Payment Service: Processes payments.

- Shipping Service: Manages shipping and delivery.

The arrows in the diagram represent API calls:

- The “Web Application” calls the “User Service” to authenticate a user.

- The “Web Application” calls the “Product Catalog Service” to display product information.

- When a user places an order, the “Web Application” calls the “Order Service” to create the order.

- The “Order Service” then calls the “Payment Service” to process the payment.

- Upon successful payment, the “Order Service” calls the “Shipping Service” to initiate shipping.

- The “Shipping Service” updates the order status in the “Order Service” upon shipping confirmation.

This structure clearly demonstrates how APIs enable a distributed system, where each microservice has a specific responsibility, and they coordinate through well-defined API contracts. This API-First approach allows for scalability, maintainability, and the independent evolution of each microservice.
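The call sequence described above can be sketched in Python with in-process stubs standing in for the services. Method calls replace the HTTP or gRPC requests a real deployment would make, and all class names, statuses, and balances are hypothetical; the point is the ordering: shipping starts only after payment succeeds, and the Shipping Service reports back to the Order Service.

```python
from typing import Callable, Dict, Optional


class PaymentDeclined(Exception):
    """Raised by the stub Payment Service when a charge cannot be made."""


class PaymentService:
    def __init__(self, balances_cents: Dict[str, int]) -> None:
        self.balances_cents = balances_cents  # hypothetical customer balances

    def charge(self, customer: str, amount_cents: int) -> None:
        if self.balances_cents.get(customer, 0) < amount_cents:
            raise PaymentDeclined(customer)
        self.balances_cents[customer] -= amount_cents


class ShippingService:
    def __init__(self) -> None:
        # Stands in for an API call back to the Order Service.
        self.on_shipped: Optional[Callable[[str, str], None]] = None

    def ship(self, order_id: str) -> None:
        # Carrier booking is omitted; report the shipment to the Order Service.
        if self.on_shipped is not None:
            self.on_shipped(order_id, "SHIPPED")


class OrderService:
    def __init__(self, payments: PaymentService, shipping: ShippingService) -> None:
        self.payments = payments
        self.shipping = shipping
        self.status: Dict[str, str] = {}

    def place_order(self, order_id: str, customer: str, amount_cents: int) -> str:
        self.status[order_id] = "CREATED"
        try:
            self.payments.charge(customer, amount_cents)
        except PaymentDeclined:
            self.status[order_id] = "PAYMENT_FAILED"
            return self.status[order_id]
        self.status[order_id] = "PAID"
        self.shipping.ship(order_id)  # shipping starts only after payment succeeds
        return self.status[order_id]

    def update_status(self, order_id: str, status: str) -> None:
        self.status[order_id] = status


shipping = ShippingService()
orders = OrderService(PaymentService({"alice": 5_000}), shipping)
shipping.on_shipped = orders.update_status  # Shipping Service -> Order Service callback
print(orders.place_order("o-1", "alice", 1_999))  # SHIPPED
print(orders.place_order("o-2", "bob", 1_999))    # PAYMENT_FAILED
```

Each class owns its own data and exposes it only through methods, mirroring how each microservice owns its data and exposes it only through its API contract.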

Service Mesh

Service meshes are a critical component of cloud-native architectures, providing a dedicated infrastructure layer for managing service-to-service communication. They offer a powerful way to control, observe, and secure the interactions between microservices, simplifying complex operational tasks and improving overall system reliability. In practice, a mesh is a network of proxies, typically deployed as a sidecar alongside each service instance, that intercept and manage all network traffic between services.

Role of Service Meshes in Microservices Management

Service meshes, like Istio and Linkerd, play a central role in managing how microservices communicate. They provide a standardized way to manage communication between services, decoupling application logic from network concerns. This separation of concerns allows developers to focus on building business logic, while the service mesh handles the complexities of service discovery, traffic routing, security, and observability.

- Traffic Management: Service meshes offer sophisticated traffic management capabilities. This includes:

  - Routing: Directing traffic to specific versions of a service based on rules (e.g., header-based routing, weighted routing for A/B testing, canary deployments).

  - Load Balancing: Distributing traffic across multiple instances of a service to ensure high availability and performance.

  - Rate Limiting: Controlling the rate of incoming requests to prevent service overload and to blunt abusive or malicious traffic.

  - Circuit Breaking: Automatically detecting and preventing cascading failures by isolating unhealthy services.

- Security: They enhance the security posture of microservices by providing features like:

  - Mutual TLS (mTLS): Encrypting all service-to-service communication and authenticating service identities.

  - Authentication and Authorization: Enforcing access control policies to restrict which services can communicate with each other.

  - Security Policies: Implementing fine-grained security policies based on service identity, request attributes, and other criteria.

- Observability: Service meshes provide rich observability data to monitor and troubleshoot microservices. This data includes:

  - Metrics: Collecting metrics on request latency, error rates, traffic volume, and other performance indicators.

  - Tracing: Tracking requests as they flow through the system, enabling end-to-end monitoring and debugging.

  - Logging: Generating consistent access logs for every service-to-service request, which can be shipped to a central log store for a unified view of system activity.
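In a mesh, weighted routing is configured declaratively rather than written by hand, but the decision each proxy makes is simple. The Python sketch below illustrates it under stated assumptions: the `route` and `bucket_for` helpers, the version labels, and the 10% canary weight are all hypothetical, not Istio or Linkerd configuration. Hashing a stable user ID, instead of choosing randomly per request, keeps each user on the same version.

```python
import hashlib


def bucket_for(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of users to v2 and the rest to v1."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "v2" if bucket_for(user_id) < canary_percent else "v1"


users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u, 10) == "v2" for u in users) / len(users)
print(f"{share:.1%} of users routed to v2")
```

Raising the weight step by step (10, then 50, then 100) shifts traffic gradually, and because buckets are stable, users already on v2 stay there as the weight grows.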

Benefits of Service Meshes

Service meshes offer several key benefits that improve the operational efficiency, security, and performance of cloud-native applications. These benefits stem from the centralized control plane and the sidecar proxy architecture that characterizes service meshes.

- Improved Reliability: By providing features like circuit breaking, retry policies, and fault injection, service meshes enhance the resilience of microservices. Circuit breakers automatically stop traffic to failing services, preventing cascading failures. Retry policies automatically resend failed requests, and fault injection helps to test the resilience of the system under adverse conditions.

- Enhanced Security: mTLS encryption and identity-based access control are key features that secure service-to-service communication. They provide a robust security foundation for microservices architectures.

- Simplified Operations: Service meshes centralize operational tasks such as traffic management, security, and observability. This simplifies deployment, monitoring, and troubleshooting.

- Increased Agility: Because traffic can be shifted through configuration, teams can roll out updates gradually through canary deployments and run A/B tests without changing application code.

- Less Networking Code in Applications: The service mesh moves network-related responsibilities such as retries, encryption, and telemetry out of the application code. This reduces the burden on development teams and allows them to focus on building business logic, although the mesh itself is additional infrastructure that operators must run.

Enhancing Observability and Control with Service Meshes

Service meshes significantly enhance observability and control over microservices. Because every request passes through a mesh proxy, the mesh can produce uniform telemetry for all service interactions without changes to application code, and tools such as Prometheus, Grafana, and Jaeger store, analyze, and visualize it. This data is invaluable for monitoring system health, identifying performance bottlenecks, and troubleshooting issues.

- Comprehensive Metrics: Service meshes automatically collect detailed metrics on request latency, error rates, and traffic volume. These metrics provide insights into the performance of individual services and the overall system. Istio, for example, exposes its metrics in a format that Prometheus can scrape, and Grafana can visualize them. Such dashboards typically display the RED metrics (Rate, Errors, Duration), that is, request rate, error rate, and latency, allowing operators to quickly identify issues.

- Distributed Tracing: Service meshes integrate with distributed tracing systems (e.g., Jaeger, Zipkin) to track requests as they flow through the system. Distributed tracing provides a detailed view of the path a request takes, the time spent in each service, and any errors encountered. The proxies can generate spans automatically, but the application must still propagate trace context headers on its outgoing calls so that those spans join into a single trace. This makes it easier to diagnose performance problems and pinpoint the root cause of failures.

- Centralized Logging: Mesh proxies emit consistent access logs for every request they handle. Shipped to a central log store alongside application logs, these provide a single pane of glass for viewing system activity and troubleshooting issues. Logging can be integrated with tools like Elasticsearch and Kibana (ELK stack) to provide advanced search and analysis capabilities.

- Fine-grained Control: Service meshes offer fine-grained control over traffic management, security policies, and other operational aspects. This control allows operators to implement complex deployment strategies, enforce security policies, and optimize system performance. For instance, using Istio, traffic can be gradually shifted from one version of a service to another (canary deployments), allowing for testing in production with minimal risk.
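The RED metrics reduce to simple arithmetic over request records. The sketch below computes them for one service over one time window. The `RequestRecord` shape is a hypothetical stand-in for what a mesh proxy reports, counting only 5xx responses as errors is an assumption, and in practice Prometheus queries would do this aggregation rather than application code.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    status_code: int
    duration_ms: float


def red_metrics(records: list[RequestRecord], window_seconds: float) -> dict[str, float]:
    """Rate, Errors, Duration for one service over one time window."""
    if not records:
        return {"rate_per_s": 0.0, "error_ratio": 0.0, "p95_ms": 0.0}
    errors = sum(1 for r in records if r.status_code >= 500)  # assumption: only 5xx count as errors
    durations = sorted(r.duration_ms for r in records)
    rank = (95 * len(durations) + 99) // 100  # nearest-rank p95: ceil(0.95 * n) in integer math
    return {
        "rate_per_s": len(records) / window_seconds,
        "error_ratio": errors / len(records),
        "p95_ms": durations[rank - 1],
    }


# 19 successful requests (10 ms, 20 ms, ..., 190 ms) and one 503, observed over 10 seconds.
records = [RequestRecord(200, 10.0 * (i + 1)) for i in range(19)] + [RequestRecord(503, 200.0)]
print(red_metrics(records, window_seconds=10.0))
# {'rate_per_s': 2.0, 'error_ratio': 0.05, 'p95_ms': 190.0}
```

A percentile is used for Duration rather than an average because a handful of very slow requests can hide behind a healthy-looking mean.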

Statelessness and Immutability

Cloud native architecture prioritizes building applications that are resilient, scalable, and easily manageable. Two crucial principles that contribute significantly to these characteristics are statelessness and immutability. These concepts fundamentally change how applications are designed, deployed, and operated, leading to significant advantages in cloud environments.

Statelessness in Cloud Native Applications

Statelessness in cloud native applications means that a service instance does not keep client session data in its own memory or on its local disk. Each request carries the information needed to process it, and any state that must outlive a request is kept in an external store such as a database or distributed cache. Because no instance holds anything the others lack, any instance of the service can handle any request, which makes stateless services behave predictably.

Stateful applications, by contrast, keep a client's session on a specific server instance, so that client may be tied to the instance holding its session data.
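One common way to keep a service stateless is to let the client carry its session state in a signed token, so any instance holding the shared key can verify and use it. The sketch below uses only the Python standard library. The token format, the `issue_token` and `handle_request` helpers, and the hard-coded `SECRET_KEY` are illustrative assumptions; a real deployment would use an established format such as JWT and load the key from a secrets manager. The payload is signed but not encrypted, so it must not contain secrets.

```python
import base64
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-shared-by-all-instances"  # illustrative only; load from a secrets store in practice


def issue_token(claims: dict) -> str:
    """Encode the claims and append an HMAC-SHA256 signature."""
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(SECRET_KEY, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def handle_request(token: str, instance: str) -> str:
    """Any instance can serve the request: everything it needs is in the token."""
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SECRET_KEY, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        raise PermissionError("invalid signature")
    claims = json.loads(base64.urlsafe_b64decode(body))
    return f"{instance} served cart for {claims['user']} with {len(claims['cart'])} items"


token = issue_token({"user": "alice", "cart": ["sku-1", "sku-2"]})
print(handle_request(token, "instance-a"))  # instance-a served cart for alice with 2 items
print(handle_request(token, "instance-b"))  # instance-b served cart for alice with 2 items
```

Neither instance stored anything between requests, so a load balancer is free to send the next request anywhere.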

Benefits of Statelessness for Scalability and Fault Tolerance

Statelessness is a cornerstone of scalability and fault tolerance in cloud native applications. Several key advantages are realized:

- Enhanced Scalability: Stateless applications can be scaled horizontally with ease. Multiple instances of the service can be deployed, and a load balancer can distribute incoming requests among them. Because no instance stores session data, any instance can handle any request. This enables rapid scaling to accommodate increased traffic demands. For example, a popular e-commerce website can quickly deploy more instances of its product catalog service during peak shopping seasons without the need for complex session management.

- Improved Fault Tolerance: If an instance of a stateless service fails, the load balancer can automatically route requests to healthy instances. There is no need to recover session data, as each request is self-contained. This leads to minimal downtime and a more resilient system. Imagine a social media platform: if one server handling user profile requests goes down, no session data is lost, because each request carries what it needs or reads it from a shared store, so the next request simply goes to a healthy instance.

- Simplified Deployment and Management: Stateless applications are easier to deploy, update, and manage. Deployments can be rolled out incrementally, and updates can be applied without impacting existing user sessions. The absence of session affinity simplifies operations and reduces the risk of data loss or inconsistencies.

Immutability: Principles and Advantages

Immutability in cloud native infrastructure means that components are never changed after they are deployed. Once a component (e.g., a server, a container image) is running, it is not patched or reconfigured in place; instead, any change means building a new version of the component and deploying it to replace the old one. This approach provides significant benefits.

- Predictable and Consistent Environments: Immutability ensures that the infrastructure remains consistent and predictable. Every deployment starts from a known, reproducible state. This eliminates configuration drift and reduces the risk of unexpected behavior due to changes in the underlying infrastructure. For instance, imagine a team uses immutable container images for their microservices. Every time a new version of a service is released, a new image is built and deployed, guaranteeing that all instances run the same code and configurations.

- Simplified Rollbacks: If a deployment introduces issues, it’s straightforward to roll back to a previous, known-good version. Because the old version is still available, the system can quickly revert to a stable state. This contrasts with mutable infrastructure, where rollbacks can be complex and error-prone. A practical example is a web application. If a new version of the application causes performance problems, the team can quickly switch back to the previous, tested version without significant downtime or data loss.

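- Enhanced Security: Immutability narrows the window for tampering. Because components are never modified in place, any unexpected change on a running instance stands out as drift, and a suspect instance can simply be replaced with a fresh one built from a known-good image. Furthermore, every change arrives as a new, versioned artifact, so changes are tracked and auditable, making it easier to identify and respond to security incidents.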

- Improved Automation and Efficiency: Immutable infrastructure lends itself well to automation. Infrastructure as Code (IaC) tools can be used to define and provision infrastructure in a declarative manner, ensuring consistency and repeatability. This accelerates deployments and reduces operational overhead. For example, using tools like Terraform or CloudFormation, a team can define the infrastructure required for their application, and every deployment is automatically created based on that definition, ensuring consistency and efficiency.
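The immutable-release workflow can be modeled in a few lines: a release is a frozen record pointing at an image digest, a deploy appends a new release instead of editing the current one, and a rollback points back at an earlier record. The `Release` and `Deployment` classes and the placeholder digests below are hypothetical; this sketch models the idea, not the behavior of any particular tool.

```python
from dataclasses import FrozenInstanceError, dataclass


@dataclass(frozen=True)
class Release:
    version: str
    image_digest: str  # pin images by digest, not by a mutable tag such as "latest"


class Deployment:
    def __init__(self) -> None:
        self.history: list[Release] = []
        self.active_index = -1

    @property
    def active(self) -> Release:
        return self.history[self.active_index]

    def deploy(self, release: Release) -> None:
        # A change always means a new release; earlier releases stay available.
        self.history.append(release)
        self.active_index = len(self.history) - 1

    def rollback(self) -> Release:
        if self.active_index <= 0:
            raise RuntimeError("no earlier release to roll back to")
        self.active_index -= 1
        return self.active


deployment = Deployment()
deployment.deploy(Release("1.4.0", "sha256:placeholder-1"))
deployment.deploy(Release("1.5.0", "sha256:placeholder-2"))
print(deployment.rollback().version)  # 1.4.0

try:
    deployment.active.version = "1.4.1"  # in-place edits are rejected
except FrozenInstanceError:
    print("releases are immutable; deploy a new one instead")
```

Rollback here is just a pointer move, which is why it is fast and low-risk: the known-good release was never altered.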

Security Considerations

Cloud native architectures, while offering significant benefits in terms of scalability and agility, introduce new security challenges. Protecting applications and data in these dynamic environments requires a proactive and comprehensive approach. This involves securing the infrastructure, the applications themselves, and the interactions between them. Understanding and implementing robust security measures is crucial to mitigate risks and maintain the integrity and confidentiality of information.

Security Best Practices for Cloud Native Environments

Implementing security best practices is paramount in cloud native environments. This encompasses a multi-layered approach, integrating security throughout the entire software development lifecycle (SDLC). This means addressing security concerns from the initial design phase through deployment and ongoing operations.

- Shift-Left Security: Integrating security early in the development process. This includes using security scanners in the CI/CD pipeline to identify vulnerabilities in code and dependencies. For example, tools like SonarQube can analyze code for security flaws, and container image scanners like Clair or Trivy can identify vulnerabilities in container images before they are deployed.

- Least Privilege Principle: Granting users and services only the minimum necessary access rights. This limits the potential impact of a security breach. Role-Based Access Control (RBAC) is a common implementation of this principle, allowing administrators to define roles with specific permissions.

- Automated Security Testing: Regularly testing security controls through automated processes. This includes penetration testing, vulnerability scanning, and fuzzing. Automating these tests ensures that security measures are consistently applied and vulnerabilities are identified promptly.

- Immutable Infrastructure: Deploying infrastructure components as immutable units. Changes are made by replacing existing components rather than modifying them in place. This reduces the attack surface and simplifies security audits. Tools like Terraform and Kubernetes facilitate the management of immutable infrastructure.

- Defense in Depth: Employing multiple layers of security controls. This includes network segmentation, firewalls, intrusion detection systems, and data encryption. If one layer fails, other layers provide protection.

- Continuous Monitoring and Logging: Implementing robust monitoring and logging to detect and respond to security threats. Centralized logging solutions like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk collect and analyze logs from various sources, enabling security teams to identify and investigate suspicious activities.

- Regular Security Audits and Assessments: Conducting periodic security audits and assessments to evaluate the effectiveness of security controls and identify areas for improvement. These audits should be performed by both internal teams and external security experts.
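A least-privilege RBAC check comes down to deny-by-default: a request is allowed only if one of the caller's roles explicitly grants that verb on that resource. The roles, subjects, and permission pairs below are hypothetical. In Kubernetes the same idea is expressed declaratively with Role and RoleBinding objects rather than in application code; this Python sketch only shows the decision logic.

```python
ROLES: dict[str, set[tuple[str, str]]] = {
    # role -> (verb, resource) pairs it grants
    "orders-reader": {("get", "orders"), ("list", "orders")},
    "orders-writer": {("get", "orders"), ("create", "orders"), ("update", "orders")},
}

BINDINGS: dict[str, set[str]] = {
    # subject (user or service account) -> roles bound to it
    "shipping-service": {"orders-reader"},
    "order-service": {"orders-writer"},
}


def is_allowed(subject: str, verb: str, resource: str) -> bool:
    """Deny by default; allow only what a bound role explicitly grants."""
    for role in BINDINGS.get(subject, set()):
        if (verb, resource) in ROLES.get(role, set()):
            return True
    return False


print(is_allowed("shipping-service", "get", "orders"))     # True
print(is_allowed("shipping-service", "update", "orders"))  # False: not granted
print(is_allowed("unknown-service", "get", "orders"))      # False: no bindings
```

Granting the Shipping Service only read access means that a compromise of that service cannot be used to modify orders.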

Examples of Security Measures

Various security measures are essential for cloud native applications. These measures protect data and access to resources, ensuring the confidentiality, integrity, and availability of the system.

- Authentication: Verifying the identity of users or services attempting to access a resource. This can involve the use of usernames and passwords, multi-factor authentication (MFA), or service accounts. For example, Kubernetes uses service accounts to authenticate pods when accessing the Kubernetes API.

- Authorization: Determining the permissions of an authenticated user or service. This ensures that users can only access the resources they are authorized to use. RBAC is a common mechanism for managing authorization in cloud native environments.

- Encryption: Protecting data by converting it into an unreadable format. Encryption can be used for data at rest (e.g., encrypting data stored in a database) and data in transit (e.g., using TLS/SSL for secure communication). For instance, encrypting data stored in cloud storage services like Amazon S3 or Google Cloud Storage protects data from unauthorized access.

- Network Segmentation: Dividing a network into isolated segments to limit the impact of a security breach. This prevents attackers from easily moving laterally within the network. Microsegmentation, using technologies like Kubernetes Network Policies, allows for fine-grained control over network traffic.

- Vulnerability Scanning: Identifying vulnerabilities in software and infrastructure components. This can be done using automated scanning tools that analyze code, container images, and operating systems. Tools like OpenVAS or Nessus can scan for known vulnerabilities.

- Web Application Firewall (WAF): Protecting web applications from common attacks, such as SQL injection and cross-site scripting (XSS). WAFs analyze incoming traffic and block malicious requests. Cloud providers like AWS and Google Cloud offer WAF services.

- Container Image Scanning: Analyzing container images for vulnerabilities and misconfigurations. This helps ensure that container images are secure before deployment. Tools like Trivy and Clair can scan container images for known vulnerabilities.

Checklist for Securing a Cloud Native Application

Securing a cloud native application requires a systematic approach. This checklist provides a structured way to ensure that security considerations are addressed throughout the application lifecycle.

- Implement Secure Coding Practices:

  - Follow secure coding guidelines.

  - Use secure libraries and frameworks.

  - Perform regular code reviews.

- Secure Containerization:

  - Use minimal base images.

  - Scan container images for vulnerabilities.

  - Apply security policies to container deployments.

- Implement Network Security:

  - Use network segmentation.

  - Implement firewalls and intrusion detection systems.

  - Use TLS/SSL for secure communication.

- Manage Secrets Securely:

  - Store secrets securely (e.g., using a secrets management tool).

  - Rotate secrets regularly.

  - Restrict access to secrets.

- Implement Authentication and Authorization:

  - Use strong authentication mechanisms.

  - Implement RBAC.

  - Follow the principle of least privilege.

- Monitor and Log Security Events:

  - Collect and analyze logs from all components.

  - Implement security monitoring and alerting.

  - Regularly review security logs.

- Automate Security Testing:

  - Integrate security testing into the CI/CD pipeline.

  - Perform vulnerability scanning and penetration testing.

- Maintain Compliance:

  - Adhere to relevant industry standards and regulations (e.g., PCI DSS, HIPAA).

  - Conduct regular security audits.

Cloud Native Databases

Cloud native databases are designed specifically to leverage the benefits of cloud computing, offering scalability, elasticity, and automation. Unlike traditional databases, they are built to operate in distributed environments and are often optimized for specific workloads. They are a crucial component of cloud native applications, providing the data storage and retrieval capabilities necessary for modern, scalable systems.

Characteristics of Cloud Native Databases

Cloud native databases possess several key characteristics that distinguish them from their traditional counterparts. These characteristics are essential for enabling the agility and efficiency required in cloud environments.

- Scalability and Elasticity: Cloud native databases are designed to scale horizontally, meaning they can add or remove nodes (and with them compute and storage) based on demand. This elasticity allows them to handle fluctuating workloads without requiring significant manual intervention. This is often achieved through techniques like sharding and replication.

- Automation: Automation is a core principle. They automate tasks such as provisioning, patching, backups, and recovery. This reduces operational overhead and allows developers to focus on building applications rather than managing infrastructure.

- High Availability: Built-in mechanisms for high availability are common. This includes features like automatic failover, data replication across multiple availability zones or regions, and self-healing capabilities. These features ensure that the database remains operational even in the event of hardware or software failures.

- API-Driven: Cloud native databases often expose their functionality through APIs, enabling programmatic access and management. This allows for integration with other cloud services and facilitates automation.

- Containerization and Orchestration Compatibility: Designed to be deployed and managed using containerization technologies (e.g., Docker) and orchestration platforms (e.g., Kubernetes). This facilitates portability, simplifies deployment, and improves resource utilization.

- Pay-as-you-go Pricing: Many cloud native databases offer pay-as-you-go pricing models, allowing users to pay only for the resources they consume. This can significantly reduce costs compared to traditional database deployments.
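Sharding is what lets these databases add nodes without reshuffling all of their data. Cassandra, for example, places data on nodes by hashing partition keys onto a token ring. The sketch below is a simplified illustration of consistent hashing with virtual nodes; the `HashRing` class, node names, and virtual-node count are hypothetical, and it is not the exact scheme of any specific product.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Used only for placement, not for security.
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent hash ring with virtual nodes (simplified)."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._points: list[int] = []       # sorted hash positions on the ring
        self._owners: dict[int, str] = {}  # hash position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node claims many small arcs of the ring for a more even spread.
        for i in range(self.vnodes):
            point = _hash(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owners[point] = node

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first virtual node at or after the key's hash.
        idx = bisect.bisect_left(self._points, _hash(key)) % len(self._points)
        return self._owners[self._points[idx]]


ring = HashRing(["db-1", "db-2", "db-3"])
keys = [f"customer-{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("db-4")
moved = [k for k in keys if ring.node_for(k) != before[k]]
print(f"{len(moved)} of {len(keys)} keys moved, all of them to db-4")
```

With four nodes, roughly a quarter of the keys should move to the new node while the rest stay put; naive `hash(key) % node_count` placement would instead move most keys whenever the node count changes.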

Comparison of Cloud Native Database Types

Cloud native databases come in various types, each optimized for different use cases. The choice of database depends on the specific requirements of the application, including data model, query patterns, and performance needs.

- NoSQL Databases: NoSQL (Not Only SQL) databases are designed to handle unstructured or semi-structured data. They offer flexibility in data modeling and are often preferred for applications with evolving data requirements. There are several sub-types:

  - Key-Value Stores: Simple data models where data is stored as key-value pairs. Examples include Redis and Memcached. These are ideal for caching and session management.

  - Document Databases: Store data in document-oriented formats like JSON. Examples include MongoDB and Couchbase. Well-suited for content management systems and e-commerce applications.

  - Wide-Column Stores: Organize data into rows grouped by column families, where each row can have its own set of columns. Examples include Cassandra and HBase. Well-suited for write-heavy workloads and very large datasets, such as time-series or event data. They are sometimes loosely called columnar databases, but they differ from column-oriented analytical stores, which lay out each column contiguously for fast aggregation.

  - Graph Databases: Focus on relationships between data points. Examples include Neo4j and Amazon Neptune. Used for social networks, recommendation engines, and fraud detection.

- Relational Databases: While traditionally associated with on-premises deployments, relational databases are also available as cloud native solutions. These databases use a structured data model with tables, rows, and columns, and support SQL (Structured Query Language) for querying data.

  - Examples include Amazon RDS (for PostgreSQL, MySQL, etc.), Google Cloud SQL, and Azure SQL Database.

  - Cloud-native relational databases offer scalability, high availability, and often integrate well with existing relational database skills.

- NewSQL Databases: NewSQL databases aim to combine the scalability of NoSQL databases with the ACID (Atomicity, Consistency, Isolation, Durability) properties of relational databases. They are designed to handle high-volume, high-concurrency workloads.

  - Examples include CockroachDB and Google Cloud Spanner.

  - They offer strong consistency and are suitable for financial applications and other use cases requiring strict data integrity.

Benefits of Using Cloud Native Databases

Cloud native databases offer significant advantages over traditional database systems, enabling organizations to build and deploy applications more efficiently and effectively in the cloud.

- Improved Scalability: Easily scale up or down based on demand, ensuring optimal performance and cost efficiency. For example, a retail company might see a surge in traffic during a holiday sale and can automatically scale their database resources to handle the increased load.

- Enhanced Agility: Rapidly deploy and update applications with the flexibility to choose the right database for each workload.

- Reduced Operational Overhead: Automation of tasks such as provisioning, patching, and backups frees up IT staff to focus on more strategic initiatives.

- Cost Optimization: Pay-as-you-go pricing models and efficient resource utilization can lead to significant cost savings.

- Increased Resilience: Built-in high availability features minimize downtime and ensure business continuity. For example, a cloud native database might automatically failover to a replica in a different availability zone if the primary database instance fails, ensuring that the application remains available.

- Better Integration: Seamless integration with other cloud services, such as compute, storage, and networking, simplifies application development and deployment.

Resilience and Fault Tolerance

In cloud native architectures, applications must be designed to withstand failures gracefully and maintain availability. This is paramount because cloud environments are inherently dynamic, with potential issues arising from network outages, hardware failures, and software bugs. Resilience ensures that the system remains operational even when individual components experience problems, providing a consistent user experience.

Importance of Building Resilient Applications

Building resilient applications is a critical aspect of cloud native design. Resilience ensures that the application can continue to function even when parts of the system fail. This leads to improved availability, reduced downtime, and enhanced user satisfaction. A resilient application is designed to recover from failures automatically, minimizing the impact on users.

Strategies for Handling Failures in a Cloud Native Environment

Several strategies are employed to handle failures in a cloud native environment. These techniques are crucial for building applications that can withstand unexpected events and maintain operational continuity.

- Redundancy: Implementing redundancy involves having multiple instances of services or components. If one instance fails, another can take over, ensuring continuous operation. For example, multiple database replicas can provide high availability.

- Load Balancing: Distributing traffic across multiple instances of an application helps prevent overload and ensures that no single instance becomes a bottleneck. Load balancers automatically redirect traffic away from unhealthy instances.

- Health Checks: Regularly monitoring the health of application components is essential. Health checks involve automated processes that verify the status of services. If a service fails a health check, it can be automatically removed from the load balancer or restarted.

- Automatic Scaling: Automatically adjusting the number of application instances based on demand is another critical strategy. This ensures that the application can handle fluctuating workloads and maintain performance during peak times.

- Chaos Engineering: Intentionally introducing failures into the system to test its resilience is a proactive approach. Chaos engineering helps identify weaknesses and improve the application’s ability to withstand real-world failures.

- Disaster Recovery: Planning for disaster recovery involves creating strategies to restore application functionality in the event of a major outage or disaster. This includes data backups, replication, and procedures for quickly restoring services.
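Redundancy, load balancing, and health checks work together: the balancer rotates across replicas but skips any replica whose most recent health check failed. The Python sketch below shows that interaction with a hypothetical `Replica` type and a simulated health check result. In Kubernetes, readiness probes and Services play these roles through configuration rather than code.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Replica:
    name: str
    healthy: bool = True  # result of the most recent health check


class HealthAwareBalancer:
    """Round-robin over replicas, skipping ones that failed their health check."""

    def __init__(self, replicas: list[Replica]) -> None:
        self.replicas = replicas
        self._counter = count()

    def pick(self) -> Replica:
        n = len(self.replicas)
        start = next(self._counter)
        for offset in range(n):
            replica = self.replicas[(start + offset) % n]
            if replica.healthy:
                return replica
        raise RuntimeError("no healthy replicas available")


replicas = [Replica("a"), Replica("b"), Replica("c")]
lb = HealthAwareBalancer(replicas)
print([lb.pick().name for _ in range(3)])  # ['a', 'b', 'c']
replicas[1].healthy = False                # "b" fails its health check
print([lb.pick().name for _ in range(3)])  # ['a', 'c', 'c']
```

This simplified rotation sends an unhealthy replica's share to its next healthy neighbor; production balancers spread that load more evenly, but the key behavior is the same: no traffic reaches a replica that failed its check.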

Implementing Fault Tolerance Mechanisms

Fault tolerance mechanisms are designed to detect and handle failures automatically, preventing them from cascading and impacting the overall system.

- Circuit Breakers: Circuit breakers are a pattern that prevents cascading failures by stopping requests to a failing service. They monitor the number of failures and, when a threshold is reached, “open” the circuit so that further requests fail fast instead of reaching the failing service. After a cool-down period, the breaker lets a limited number of trial requests through; if they succeed, the circuit “closes” and normal traffic resumes, and if they fail, it opens again.

A circuit breaker therefore has three states: closed (normal operation), open (rejecting requests), and half-open (testing whether the service has recovered).

- Retries: Retrying failed requests is a common technique to handle transient failures, such as temporary network issues. Implement retry logic with exponential backoff to avoid overwhelming the failing service, and retry only operations that are safe to repeat (see idempotency below).

Exponential backoff doubles the delay between successive retries, preventing a flood of requests. For example, the first retry might occur after 1 second, the second after 2 seconds, and the third after 4 seconds. Adding random jitter to each delay also keeps many clients from retrying in lockstep.

- Timeouts: Setting timeouts on network requests and service calls prevents indefinite waiting and potential resource exhaustion. If a request takes longer than the specified timeout, it is terminated, and the application can handle the failure gracefully.

- Bulkheads: Bulkheads isolate different parts of an application to prevent a failure in one part from affecting others. This can be achieved by limiting the number of concurrent requests to a service or by using separate thread pools.

Imagine a ship with watertight compartments (bulkheads). If one compartment is breached, the others remain protected, preventing the ship from sinking.

- Idempotency: Ensuring that operations can be safely executed multiple times without unintended side effects is crucial for fault tolerance. This is particularly important when dealing with retries, as the same request might be executed multiple times.
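The circuit breaker and retry patterns described above can be sketched together in plain Python. The thresholds, the cool-down, and the injected `clock` and `sleep` functions are illustrative assumptions (injecting them makes the behavior easy to test), and the sketch is single-threaded. Production code would normally rely on an established resilience library or the service mesh's built-in policies, and retries should only wrap idempotent operations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the service while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("failing fast while the circuit is open")
            self.state = "half-open"  # cool-down over: allow a trial request
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.state = "open"
                self.opened_at = self.clock()
            raise
        self.state = "closed"  # success closes the circuit and resets the count
        self.failures = 0
        return result


def backoff_delays(retries: int, base: float = 1.0, jitter: bool = False) -> list[float]:
    """Exponential backoff: base, 2*base, 4*base, ..., optionally with full jitter."""
    delays = [base * 2 ** attempt for attempt in range(retries)]
    return [random.uniform(0, d) for d in delays] if jitter else delays


def retry(fn: Callable[[], T], retries: int = 3, jitter: bool = False,
          sleep: Callable[[float], None] = time.sleep) -> T:
    for delay in backoff_delays(retries, jitter=jitter):
        try:
            return fn()
        except CircuitOpenError:
            raise  # the breaker has already decided; do not keep hammering it
        except Exception:
            sleep(delay)
    return fn()  # final attempt; its exception propagates to the caller
```

A caller might compose the two as `retry(lambda: breaker.call(fetch_inventory))`, where `fetch_inventory` is a hypothetical remote call: transient errors are retried with growing delays, but once the breaker opens, retrying stops immediately instead of adding load to a service that is already failing.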

Closing Notes

The key principles of cloud native architecture represent a significant evolution in software development and deployment. By embracing microservices, containerization, automation, and a range of other innovative practices, organizations can build applications that are more scalable, resilient, and adaptable to the ever-changing demands of the digital world. This approach not only streamlines operations but also fosters innovation, allowing businesses to respond more quickly to market opportunities and deliver exceptional value to their customers.

Commonly Asked Questions

What is the primary advantage of a microservices architecture?

The primary advantage is increased agility and faster time to market. Microservices enable independent development, deployment, and scaling of individual components, leading to quicker iteration cycles and reduced risk.

How does containerization benefit cloud native applications?

Containerization, using technologies like Docker, provides a consistent environment for applications, ensuring they run the same way regardless of the underlying infrastructure. This simplifies deployment, improves portability, and enhances resource utilization.

What role does Infrastructure as Code (IaC) play in cloud native environments?

IaC allows you to manage and provision infrastructure through code, automating the process and ensuring consistency. It promotes version control, enables reproducibility, and simplifies the management of complex cloud environments.

What is the significance of statelessness in cloud native applications?

Statelessness enhances scalability and fault tolerance. Stateless application instances do not keep client session data in local memory or storage, so you can scale by simply adding instances, and the failure of one instance does not lose data that other instances need.