Chaos Monkey sounds mischievous, but it serves a serious purpose: hardening systems against the unpredictable failures of real-world operation. It is rooted in “failure injection,” the practice of deliberately introducing disruptions to uncover vulnerabilities and improve overall system resilience. This guide covers the core concepts, practical implementations, and strategic considerations needed to use Chaos Monkey effectively.

This exploration will cover everything from setting up your environment and simulating various attack scenarios to analyzing results and integrating Chaos Monkey into your existing systems. You’ll learn how to design and execute experiments, monitor system behavior, and interpret findings to identify areas for improvement. We’ll also explore best practices, real-world case studies, and the tools and technologies that can help you on your journey to build more robust and reliable systems.

Introduction to Chaos Monkey and System Resilience

Modern software systems must be stable and reliable, yet as applications become more complex and distributed, the potential for failures increases. Chaos Engineering emerged to address this challenge proactively, with Chaos Monkey as one of its key tools. This section covers the core concepts of Chaos Monkey and system resilience, and explains why they matter.

Core Concept of Chaos Monkey and its Role

Chaos Monkey is a software tool, originally developed by Netflix, designed to simulate failures in a production environment. Its primary role is to randomly terminate instances of running services and infrastructure components. By injecting these failures, Chaos Monkey aims to uncover weaknesses in a system’s architecture and operational practices. This proactive approach allows development teams to identify and address potential vulnerabilities before they can impact real users.

System Resilience and Its Importance

System resilience refers to the ability of a system to withstand failures and continue operating effectively. A resilient system can adapt to unexpected events, recover quickly from disruptions, and maintain a consistent level of service. In today’s interconnected world, where applications often serve millions of users, system resilience is not just a desirable feature; it is a critical requirement. Its importance stems from the following:

- Reduced Downtime: Resilient systems minimize downtime, ensuring that services remain available even during failures. This translates to increased user satisfaction and business continuity.

- Improved User Experience: A resilient system provides a more consistent and reliable user experience, as users are less likely to encounter service disruptions or errors.

- Enhanced Business Reputation: Systems with high resilience build trust and confidence among users, leading to a stronger brand reputation and increased customer loyalty.

- Cost Savings: By proactively identifying and addressing vulnerabilities, resilient systems reduce the costs associated with outages, such as lost revenue, support costs, and damage to reputation.

Benefits of Using Chaos Monkey for Enhancing System Resilience

Employing Chaos Monkey offers several advantages in enhancing system resilience:

- Proactive Failure Detection: Chaos Monkey actively seeks out vulnerabilities by simulating real-world failures. This allows teams to identify and fix issues before they impact users.

- Validation of Recovery Mechanisms: By introducing failures, Chaos Monkey validates the effectiveness of automated recovery mechanisms, such as auto-scaling and failover processes.

- Improved System Design: The insights gained from Chaos Monkey experiments can inform system design decisions, leading to more resilient and fault-tolerant architectures.

- Enhanced Team Awareness: Chaos Monkey promotes a culture of resilience by encouraging teams to think about failure scenarios and how to mitigate them.

- Continuous Improvement: Chaos Monkey facilitates continuous improvement by providing a feedback loop that drives ongoing refinement of system resilience.

Relationship Between Chaos Monkey and the Concept of “Failure Injection”

Chaos Monkey is a specific implementation of “failure injection,” the practice of intentionally introducing failures into a system to test its resilience. Failure injection is the broader concept; Chaos Monkey automates one part of it. The current open-source release of Chaos Monkey focuses on terminating instances, so the other failure types listed below generally call for additional tooling.

Failure injection involves simulating various types of failures, such as:

- Service Outages: Terminating or isolating specific services.

- Network Disruptions: Introducing latency, packet loss, or network partitions.

- Resource Exhaustion: Simulating high CPU usage, memory leaks, or disk I/O bottlenecks.

- Data Corruption: Injecting errors into data storage systems.

By systematically injecting these failures, Chaos Monkey and other failure injection techniques help identify weaknesses and ensure that the system can gracefully handle unexpected events. This proactive approach is crucial for building and maintaining highly resilient systems.

Setting Up Your Environment for Chaos Monkey

To effectively test system resilience with Chaos Monkey, a well-prepared environment is crucial. This involves ensuring the necessary prerequisites are met, installing and configuring the tools, and integrating them seamlessly within your existing infrastructure. This section provides a comprehensive guide to setting up your environment, ensuring a smooth and effective Chaos Monkey implementation.

Prerequisites for Implementing Chaos Monkey

Before deploying Chaos Monkey, several prerequisites must be addressed to ensure a successful and safe implementation. These elements lay the foundation for a stable testing environment and mitigate potential risks.

- A Defined Testing Scope: Clearly identify the systems, services, and components to be targeted by Chaos Monkey. This scope should align with your resilience goals and business priorities.

- A Stable Production Environment or a Realistic Testing Replica: It’s crucial to have a production environment or a testing environment that closely mirrors production. This ensures that the chaos injected simulates real-world conditions and provides meaningful results. If using a testing replica, ensure data is synchronized regularly.

- Monitoring and Alerting Systems: Implement robust monitoring and alerting systems. These systems will be critical for observing the impact of Chaos Monkey experiments, detecting anomalies, and responding to incidents. Consider tools like Prometheus, Grafana, or the monitoring tools offered by your cloud provider.

- Automated Deployment and Configuration Management: Use automation tools such as Ansible, Chef, or Puppet to manage the deployment and configuration of your infrastructure. This ensures consistency and repeatability in your environment.

- Backup and Recovery Procedures: Establish comprehensive backup and recovery procedures. In case of unexpected issues during a Chaos Monkey experiment, these procedures will allow you to restore the system to a known good state quickly.

- Authorization and Access Control: Implement strong authorization and access control mechanisms to restrict access to Chaos Monkey tools and experiments. This helps prevent unauthorized modifications and protects the system from malicious activities.

- Clear Communication and Rollback Plan: Define a clear communication plan for notifying stakeholders about the experiments and a rollback plan to revert to a stable state if necessary. This plan should include specific steps and roles to be taken in case of failures.

Installing and Configuring Chaos Monkey Tools

The installation and configuration process for Chaos Monkey tools varies depending on the chosen tool and the environment. The following steps provide a general guide; consult the specific documentation for your selected tool.

- Choosing a Chaos Engineering Tool: Select a tool based on your needs and infrastructure. Popular options include:

  - Chaos Monkey (Netflix): Originally developed by Netflix, this tool randomly terminates instances in your AWS environment to test the resilience of your services.

  - Gremlin: A commercial platform that offers a wide range of chaos engineering experiments, including host, network, and application attacks.

  - LitmusChaos: An open-source tool specifically designed for Kubernetes environments, offering a variety of chaos experiments.

  - Chaos Mesh: Another open-source option for Kubernetes, offering extensive features for injecting different types of failures.

- Installation: Follow the tool’s installation instructions. This typically involves:

  - Downloading the necessary packages or binaries.

  - Configuring environment variables.

  - Installing dependencies.

For example, Chaos Monkey (Netflix) runs as a service within your infrastructure; its current release integrates with Spinnaker and stores its state in a MySQL database. For Gremlin, you will need to create an account and install the agent on your hosts. LitmusChaos and Chaos Mesh are installed using Helm charts or kubectl.

- Configuration: Configure the tool to target the specific services and components you want to test. This includes:

  - Specifying the target environment (e.g., AWS region, Kubernetes cluster).

  - Defining the types of failures to inject (e.g., instance termination, network latency, CPU stress).

  - Setting the experiment frequency and duration.

  - Configuring any necessary authentication and authorization settings.

Configuration often involves creating configuration files (e.g., JSON or YAML) or using a web-based interface.

- Testing the Configuration: Before running experiments in production, test the configuration in a staging or development environment. Verify that the tool can successfully inject failures and that your monitoring systems capture the events.

- Integration with Monitoring and Alerting: Configure the Chaos Monkey tool to integrate with your monitoring and alerting systems. This will allow you to monitor the impact of experiments in real-time and receive alerts when issues arise.

Essential System Components Checklist

A successful Chaos Monkey implementation depends on several key system components. This checklist ensures all necessary elements are in place.

- Compute Resources: Adequate compute resources (virtual machines, containers, etc.) to host the services being tested and the Chaos Monkey tool itself.

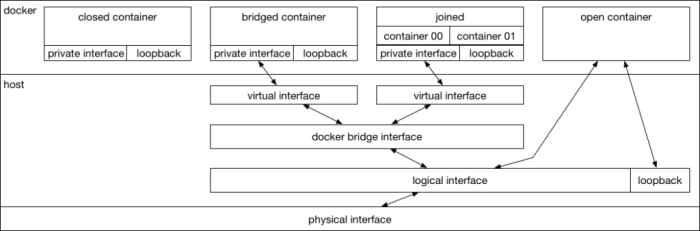

- Networking Infrastructure: A stable and well-configured network infrastructure that allows the Chaos Monkey tool to communicate with the target services.

- Storage Systems: Reliable storage systems for data persistence and backup.

- Monitoring and Logging Systems: Comprehensive monitoring and logging systems to track the impact of the experiments.

- Alerting Systems: Real-time alerting systems to notify the team of any issues detected during experiments.

- Configuration Management Tools: Tools like Ansible, Chef, or Puppet for automated configuration and deployment.

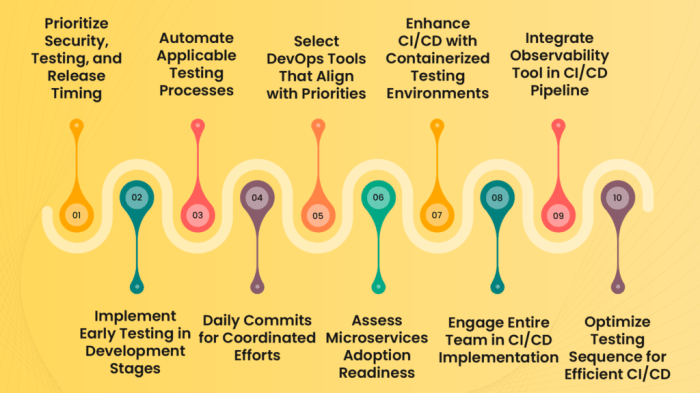

- CI/CD Pipeline: An established CI/CD pipeline for automating the deployment of code and infrastructure changes.

- Authentication and Authorization Mechanisms: Robust security measures to protect the system from unauthorized access.

- Backup and Recovery Systems: Reliable backup and recovery mechanisms to ensure data protection.

Integrating Chaos Monkey with CI/CD Pipelines

Integrating Chaos Monkey into CI/CD pipelines automates resilience testing and provides continuous feedback. This approach allows you to identify and address potential vulnerabilities early in the development lifecycle.

- Automated Experiment Execution: Configure the CI/CD pipeline to automatically trigger Chaos Monkey experiments after code deployments or infrastructure changes.

- Defining Experiment Scope: Specify which services and components to target during each experiment run.

- Analyzing Results: Automate the analysis of experiment results, including monitoring metrics, error logs, and system behavior.

- Feedback Loop: Integrate the results of the experiments into the development workflow. This can involve automatically creating bug reports, notifying developers of issues, or failing the build if critical problems are detected.

- Example Scenario: After a new version of a service is deployed, the CI/CD pipeline triggers a Chaos Monkey experiment to terminate instances randomly. If the service continues to function without significant impact (e.g., no downtime, minimal performance degradation), the build passes. If the service experiences issues (e.g., high error rates, extended downtime), the build fails, and the development team is notified.

- Choosing the Right Frequency: Determine the appropriate frequency for running experiments based on the rate of code changes and the criticality of the services. This might range from running experiments after every deployment to running them on a more scheduled basis.

- Automated Rollback: If a failure occurs during an experiment, configure the CI/CD pipeline to automatically roll back to the previous stable version of the system to minimize the impact.
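The pass/fail decision in the example scenario above can be sketched as a small gate function. This is a minimal illustration, not part of any chaos tool: the `ExperimentMetrics` type, the `gate_violations` name, and the default thresholds are all assumptions chosen for the example, and the metrics would in practice come from your monitoring system.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ExperimentMetrics:
    """Metrics sampled while the chaos experiment was running (hypothetical shape)."""
    error_rate: float         # fraction of failed requests, 0.0 to 1.0
    p99_latency_ms: float     # 99th percentile response time
    downtime_seconds: float   # total time the health check was failing


def gate_violations(metrics: ExperimentMetrics,
                    max_error_rate: float = 0.01,
                    max_p99_latency_ms: float = 500.0,
                    max_downtime_seconds: float = 0.0) -> List[str]:
    """Return the violated thresholds; an empty list means the build may pass."""
    violations = []
    if metrics.error_rate > max_error_rate:
        violations.append(
            f"error rate {metrics.error_rate:.1%} exceeds {max_error_rate:.1%}")
    if metrics.p99_latency_ms > max_p99_latency_ms:
        violations.append(
            f"p99 latency {metrics.p99_latency_ms:.0f} ms exceeds {max_p99_latency_ms:.0f} ms")
    if metrics.downtime_seconds > max_downtime_seconds:
        violations.append(
            f"downtime {metrics.downtime_seconds:.0f} s exceeds {max_downtime_seconds:.0f} s")
    return violations
```

A pipeline step could print each violation and exit with a non-zero status when the list is non-empty, which is how most CI systems mark a stage as failed.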

Types of Chaos Monkey Attacks and Their Impact

Chaos Monkey’s power lies in its ability to simulate real-world failures, allowing teams to proactively identify and address vulnerabilities in their systems. Understanding the different types of attacks and their potential impact is crucial for designing effective resilience strategies. This section explores various attack types, their effects, and practical simulation methods.

Instance Termination Attacks

Instance termination attacks simulate the sudden failure of individual server instances. These attacks are designed to assess how a system responds when a critical component disappears unexpectedly.

- Impact: The primary impact is service disruption. If an instance hosting a vital service is terminated, users may experience errors, slowdowns, or complete service unavailability. The severity depends on the system’s architecture and redundancy. For example, in a system with no redundancy, terminating the only instance of a database server causes a service outage and, if the data lived only on that instance, data loss. In contrast, a system with multiple database replicas and automated failover mechanisms will experience a brief interruption as the system automatically switches to a healthy replica.

- Simulation: Simulating instance termination typically involves identifying instances to target, then using the Chaos Monkey tool to issue a command to shut down or terminate the instance. This can be achieved through API calls to the cloud provider (e.g., AWS EC2, Google Compute Engine, Azure Virtual Machines). For example, a Chaos Monkey configuration might specify a probability (e.g., 10%) for terminating a randomly selected instance within a specific service group (e.g., web servers) during a defined time window (e.g., business hours).

- Example: Netflix famously used Chaos Monkey to test its AWS infrastructure. They would randomly terminate instances, forcing their systems to adapt and reroute traffic. This led to the development of more resilient systems and automated recovery processes. This proactive approach enabled Netflix to maintain service availability even during major AWS outages.
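The selection logic described under Simulation (a termination probability, a service group, and a time window) might look like the sketch below. The function name `pick_victim`, the weekday rule, and the 09:00 to 17:00 window are assumptions for illustration; the actual termination call to the cloud provider is deliberately left out.

```python
import random
from datetime import datetime, time
from typing import Optional, Sequence


def pick_victim(instances: Sequence[str],
                now: datetime,
                probability: float = 0.10,
                window_start: time = time(9, 0),
                window_end: time = time(17, 0),
                rng: Optional[random.Random] = None) -> Optional[str]:
    """Return the instance ID to terminate, or None if nothing should happen."""
    rng = rng or random.Random()
    # Only act on weekdays (Monday=0 .. Friday=4), when engineers are around.
    if now.weekday() >= 5:
        return None
    # Only act inside the configured time window.
    if not (window_start <= now.time() < window_end):
        return None
    # Roll the dice: terminate with the configured probability.
    if not instances or rng.random() >= probability:
        return None
    return rng.choice(list(instances))
```

Passing `rng` explicitly keeps the decision reproducible in tests, while production runs can rely on the default random source.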

Network Latency Attacks

Network latency attacks simulate delays in network communication between different parts of a system. This helps to reveal performance bottlenecks and identify services that are overly sensitive to network issues.

- Impact: Increased latency can significantly degrade the user experience. Slow response times, timeouts, and intermittent errors are common symptoms. Systems reliant on real-time communication, such as online games or financial trading platforms, are particularly vulnerable. For example, a slow network connection between a web server and a database server can lead to slow page load times and ultimately frustrate users.

- Simulation: Simulating network latency involves introducing artificial delays into network traffic. This can be achieved using tools like `tc` (traffic control) on Linux systems, or cloud-provider-specific network policies. For example, Chaos Monkey might be configured to add a delay of 100ms to all network traffic between a web server and a database server. This would simulate a network issue, revealing how the system handles the added latency.

- Example: Consider an e-commerce platform. By simulating network latency between the frontend and the payment processing service, engineers can assess how the system handles slow payment confirmations. If the system is poorly designed, users might see errors, or orders might not be processed correctly, leading to revenue loss. Properly designed systems will include timeouts, retries, and other mechanisms to handle network delays gracefully.
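As a rough sketch of the `tc` approach mentioned above, the helper below builds the `netem` commands that add and later remove a fixed delay on a network interface. The function name and the interface name used in the usage note are assumptions. The commands require root privileges and affect all outgoing traffic on the interface; limiting the delay to a single destination such as the database server needs additional `tc` filter rules that are not shown here.

```python
from typing import List, Tuple


def netem_delay_commands(interface: str,
                         delay_ms: int,
                         jitter_ms: int = 0) -> Tuple[List[str], List[str]]:
    """Build (add, remove) argument lists for a tc netem delay on `interface`."""
    if delay_ms <= 0:
        raise ValueError("delay_ms must be positive")
    add = ["tc", "qdisc", "add", "dev", interface, "root",
           "netem", "delay", f"{delay_ms}ms"]
    if jitter_ms > 0:
        # An optional second value adds random variation around the delay.
        add.append(f"{jitter_ms}ms")
    remove = ["tc", "qdisc", "del", "dev", interface, "root", "netem"]
    return add, remove
```

The lists can be handed to `subprocess.run(add, check=True)` on the target host, for example with `netem_delay_commands("eth0", 100)`. Running the `remove` command in a `finally` block helps ensure the delay is cleaned up even if the experiment aborts.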

DNS Resolution Failure Attacks

DNS resolution failure attacks simulate situations where the system is unable to resolve domain names to IP addresses. This can happen due to DNS server outages, configuration errors, or malicious attacks.

- Impact: DNS resolution failures prevent the system from connecting to other services. This can cause widespread service outages. If a service cannot resolve the DNS name of its database server, it cannot connect to the database, and all requests to that service will fail.

- Simulation: Simulating DNS resolution failures involves manipulating DNS records or blocking DNS queries. Chaos Monkey can be configured to change DNS records to point to incorrect IP addresses, or to block DNS requests entirely.

- Example: Imagine a microservices architecture where each service communicates with others using DNS names. If a Chaos Monkey attack corrupts the DNS records for the authentication service, all other services will be unable to authenticate users, resulting in a complete system outage. This highlights the importance of having redundant DNS servers and implementing service discovery mechanisms that can handle DNS failures.
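One way a service can tolerate the DNS failures described above is to remember the last address it resolved successfully. The sketch below is an illustration under that assumption, not a full service discovery mechanism: `resolve_with_fallback` is a hypothetical helper, the cache is a plain dictionary with no expiry, and the resolver is injectable so that a failing one can be substituted during an experiment.

```python
import socket
from typing import Callable, Dict


def resolve_with_fallback(hostname: str,
                          cache: Dict[str, str],
                          resolver: Callable[[str], str] = socket.gethostbyname) -> str:
    """Resolve `hostname`, falling back to the last known address on DNS failure."""
    try:
        address = resolver(hostname)
    except OSError:
        # socket.gaierror (raised on resolution failure) is a subclass of OSError.
        if hostname in cache:
            return cache[hostname]
        raise  # no cached address: surface the failure to the caller
    cache[hostname] = address  # remember the last good answer
    return address
```

A stale address is not always safe to use, so a real implementation would likely bound how long cached entries remain valid.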

Disk I/O Attacks

Disk I/O attacks simulate problems with disk input/output operations. This can include slow disk speeds, disk errors, or disk failures.

- Impact: Disk I/O problems can lead to slow application performance, increased latency, and data corruption. If a database server experiences slow disk I/O, queries will take longer to execute, and the entire application may become sluggish. In extreme cases, disk failures can result in data loss.

- Simulation: Simulating disk I/O problems involves introducing artificial delays or errors into disk operations. Tools like `stress-ng` can be used to simulate heavy disk I/O load, or Chaos Monkey can inject errors into disk read/write operations.

- Example: An online file storage service relies heavily on disk I/O. If a Chaos Monkey attack introduces slow disk I/O on the storage servers, users will experience slow file uploads and downloads. This can lead to user frustration and a loss of business. Therefore, it is critical to use RAID configurations, monitor disk performance, and implement failover mechanisms to mitigate disk I/O issues.

CPU Exhaustion Attacks

CPU exhaustion attacks simulate scenarios where the CPU resources of a server are fully utilized. This can happen due to heavy workloads, resource leaks, or malicious attacks.

- Impact: CPU exhaustion can cause slow application performance, unresponsive services, and ultimately, service outages. When a CPU is fully utilized, it cannot process new requests, leading to increased latency and errors.

- Simulation: Simulating CPU exhaustion involves creating processes that consume a large amount of CPU resources. This can be done using tools like `stress-ng` or by running computationally intensive tasks. Chaos Monkey can be configured to launch these processes on target servers.

- Example: A web server with a high volume of traffic can be vulnerable to CPU exhaustion attacks. If a Chaos Monkey attack simulates a sudden spike in traffic, the web server’s CPU might become fully utilized, leading to slow response times and potentially causing the server to become unresponsive. This illustrates the importance of autoscaling and resource monitoring.

Memory Exhaustion Attacks

Memory exhaustion attacks simulate scenarios where the available memory on a server is fully utilized. This can happen due to memory leaks, excessive memory usage by applications, or malicious attacks.

- Impact: Memory exhaustion can cause slow application performance, crashes, and system instability. When a server runs out of memory, the operating system may start swapping data to disk, which is significantly slower than accessing memory directly. This can lead to a dramatic decrease in performance. In extreme cases, the system may crash.

- Simulation: Simulating memory exhaustion involves creating processes that allocate a large amount of memory. This can be done using tools like `stress-ng` or by writing programs that allocate memory without releasing it (memory leaks). Chaos Monkey can be configured to launch these processes on target servers.

- Example: A Java application with a memory leak can gradually consume all available memory. A Chaos Monkey attack can be used to simulate this leak, allowing developers to identify and fix the issue before it causes a production outage. Monitoring memory usage, setting sensible heap limits, and fixing leaks at their source are crucial to preventing memory exhaustion; garbage collection alone cannot reclaim objects that are still referenced.
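The disk, CPU, and memory sections above all mention `stress-ng`. As a hedged sketch, the helper below builds a time-limited `stress-ng` command for each of the three resource types. The function name, the `kind` labels, and the default memory size are assumptions made for this example; the flags used (`--cpu`, `--vm`, `--vm-bytes`, `--hdd`, `--timeout`) are standard `stress-ng` options.

```python
from typing import List

# Maps the resource type used in this guide to the stress-ng stressor flag.
STRESSOR_FLAGS = {"cpu": "--cpu", "memory": "--vm", "disk": "--hdd"}


def stress_ng_command(kind: str,
                      workers: int,
                      timeout_s: int,
                      vm_bytes: str = "512M") -> List[str]:
    """Build a stress-ng argument list for a CPU, memory, or disk experiment."""
    if kind not in STRESSOR_FLAGS:
        raise ValueError(f"unknown stress kind: {kind!r}")
    if workers < 1 or timeout_s < 1:
        raise ValueError("workers and timeout_s must be at least 1")
    command = ["stress-ng", STRESSOR_FLAGS[kind], str(workers)]
    if kind == "memory":
        # Amount of memory each vm worker allocates.
        command += ["--vm-bytes", vm_bytes]
    # Always bound the run so the load stops on its own.
    command += ["--timeout", f"{timeout_s}s"]
    return command
```

Always passing a timeout is a deliberate choice: if the process that launched the experiment dies, the load still ends without manual cleanup.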

Dependency Failure Attacks

Dependency failure attacks simulate the failure of external dependencies, such as databases, message queues, or external APIs.

- Impact: The impact of dependency failures depends on the nature of the dependency and how the system handles the failure. If a system relies on a database for all its data, a database failure will cause a complete outage. However, if the system has redundant databases and automated failover mechanisms, the impact will be minimized.

- Simulation: Simulating dependency failures involves either making the dependency unavailable (e.g., by shutting down a database server) or by introducing errors in the communication with the dependency (e.g., by injecting errors into API calls). Chaos Monkey can be configured to perform these actions.

- Example: An e-commerce platform depends on a payment gateway for processing transactions. If the payment gateway fails, the platform will be unable to process orders, leading to lost revenue. A Chaos Monkey attack can be used to simulate this failure, allowing engineers to test the platform’s ability to handle payment gateway outages. This may involve implementing fallback payment methods or displaying an appropriate error message to the user.
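A common way to handle the payment gateway scenario above is a circuit breaker that switches to a fallback after repeated failures. The class below is a simplified sketch of that pattern, with hypothetical names and thresholds; it is not taken from any particular library and omits concerns such as thread safety.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Skip a failing dependency for a cool-down period and use a fallback instead."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                return fallback()  # circuit open: do not touch the dependency
            # Cool-down elapsed: allow one trial call; one more failure reopens.
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = primary()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0  # success closes the circuit fully
        return result
```

In the e-commerce example, `primary` would call the payment gateway and `fallback` might queue the order for later processing or return a clear error message to the user.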

Categorizing and Grouping Attacks

Organizing Chaos Monkey attacks into categories is crucial for effective management and analysis. Grouping attacks by type (e.g., instance termination, network latency) allows for easier tracking, reporting, and analysis of system vulnerabilities. Further, attacks can be grouped by the service or component they target. This provides a clear picture of the areas most vulnerable to failure.

- Importance: Categorization simplifies the process of identifying the root causes of issues and allows for better prioritization of remediation efforts.

- Implementation: Implementing a categorization scheme involves assigning tags or labels to each attack. These tags can be used to filter and sort attack results, making it easier to understand the overall impact of Chaos Monkey on the system. For example, an attack that terminates a database instance could be tagged with “instance_termination” and “database”. A dashboard could then be built to show the number of attacks of each type, the services affected, and the overall impact on system performance.

- Example: Consider a system with several microservices. Chaos Monkey attacks could be categorized as follows:

- Category: Instance Termination

  - Service: Web Server

  - Tag: instance_termination, web_server

- Category: Network Latency

  - Service: Database

  - Tag: network_latency, database

This allows engineers to quickly identify that web servers are susceptible to instance termination and the database is affected by network latency.
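The tagging scheme described above lends itself to simple aggregation. The following sketch assumes a hypothetical `AttackRecord` structure and counts attacks per category and incidents per service, which is roughly the data a dashboard would display.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class AttackRecord:
    """One executed attack and whether it caused a user-visible incident."""
    category: str
    service: str
    tags: Tuple[str, ...]
    caused_incident: bool


def summarize(records: Iterable[AttackRecord]) -> Tuple[Counter, Counter]:
    """Return (attacks per category, incidents per service)."""
    records = list(records)
    attacks_by_category = Counter(r.category for r in records)
    incidents_by_service = Counter(r.service for r in records if r.caused_incident)
    return attacks_by_category, incidents_by_service
```

Sorting `incidents_by_service.most_common()` gives a quick ranking of the services that most often fail under attack.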


Designing and Implementing Chaos Monkey Experiments

Implementing Chaos Monkey effectively requires a structured approach. This involves careful planning, meticulous execution, and thorough documentation to ensure meaningful results and actionable insights into system resilience. A well-defined methodology maximizes the value derived from these experiments.

A Methodology for Planning and Executing Chaos Monkey Experiments

A robust methodology ensures Chaos Monkey experiments are well-defined, repeatable, and provide valuable insights. This process involves several key stages, each crucial for success.

- Define Objectives: Clearly state the goals of the experiment. Determine what aspects of the system’s resilience are being tested. For example, are you testing the ability to handle service failures, increased load, or network latency? Define success metrics. These metrics should be measurable and aligned with the overall system goals.

- Scope Definition: Identify the system components and services to be targeted. Consider the dependencies between these components to understand the potential impact of failures. Define the blast radius: the set of components and users that a failure could affect. Start with a limited scope and gradually expand.

- Scenario Design: Develop specific scenarios that simulate real-world failures. Examples include terminating instances, introducing network latency, or injecting errors into API calls. Consider different attack types and their potential impact. Design scenarios that reflect the types of failures that are most likely to occur in production.

- Environment Setup: Prepare the testing environment. This could be a staging environment that mirrors the production environment. Ensure the environment is isolated from production to prevent unintended consequences. Consider using tools like infrastructure-as-code to automate the environment setup.

- Execution: Run the Chaos Monkey attacks according to the defined scenarios. Monitor the system’s behavior and collect data on performance, error rates, and other relevant metrics. Use monitoring tools to track the impact of the attacks in real time.

- Analysis: Analyze the collected data to identify weaknesses and areas for improvement. Evaluate the system’s response to the attacks and assess whether the predefined success metrics were met. Identify the root causes of any failures or performance degradation.

- Remediation: Implement fixes and improvements based on the analysis. This may involve changes to the code, infrastructure, or monitoring systems. Prioritize the issues based on their impact and the ease of remediation.

- Iteration: Repeat the process with improved scenarios and targets. Continuously refine the Chaos Monkey experiments based on the results of previous runs. Adapt the experiments to reflect changes in the system architecture or infrastructure.

A Template for Documenting Experiment Goals, Scenarios, and Expected Outcomes

Documentation is essential for tracking progress, communicating findings, and ensuring the repeatability of Chaos Monkey experiments. A well-structured template facilitates this process.

| Section | Description | Example |
|---|---|---|
| Experiment Name | A descriptive name for the experiment. | “Service A Instance Termination Test” |
| Objective | The goal of the experiment. What is being tested? | “Verify Service A’s ability to recover from instance failures within 60 seconds.” |
| Scope | The specific system components targeted. | “Service A, including its database and dependent services.” |
| Scenario | The specific attack being performed. | “Terminate one instance of Service A every 5 minutes for 1 hour.” |
| Attack Type | The type of attack used. | “Instance Termination” |
| Frequency | How often the attack is executed. | “Every 5 minutes” |
| Duration | How long the attack runs. | “1 hour” |
| Expected Outcome | The expected behavior of the system. | “Service A should automatically recover by re-provisioning a new instance, and user requests should not be significantly impacted (e.g., no more than 1% error rate).” |
| Metrics | Key performance indicators (KPIs) to measure. | “Error rate, response time, resource utilization (CPU, memory).” |
| Actual Results | The actual behavior of the system during the experiment. | “The error rate increased to 3% for 2 minutes during instance recovery.” |
| Observations | Any notable observations during the experiment. | “The database connection pool was exhausted during the recovery process.” |
| Recommendations | Suggestions for improvement. | “Increase the database connection pool size.” |
| Remediation Actions | Steps taken to address the identified issues. | “Increased the database connection pool size from 100 to 200.” |
| Date | Date of the experiment. | “2024-10-27” |
| Experimenter | Name of the person who ran the experiment. | “John Doe” |
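Teams that prefer to keep experiment records in code could mirror part of the template in a small data structure. The sketch below is one possible shape, with hypothetical field and method names; it captures the plan and the expected outcome as numbers so that the comparison with actual results can be automated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    """A subset of the documentation template, expressed as data."""
    name: str
    objective: str
    attack_type: str
    interval_minutes: int        # Frequency: minutes between attacks
    duration_minutes: int        # Duration: total length of the experiment
    max_error_rate: float        # Expected Outcome: tolerated error rate (0.0 to 1.0)
    max_recovery_seconds: float  # Expected Outcome: tolerated recovery time

    def planned_attacks(self) -> int:
        """Number of attacks that fit into the experiment duration."""
        return self.duration_minutes // self.interval_minutes

    def met_expectations(self, observed_error_rate: float,
                         observed_recovery_seconds: float) -> bool:
        """Compare actual results against the expected outcome."""
        return (observed_error_rate <= self.max_error_rate
                and observed_recovery_seconds <= self.max_recovery_seconds)
```

With the table's example values (an attack every 5 minutes for 1 hour, a 1% error budget), `planned_attacks()` yields 12, and an observed error rate of 3% would fail `met_expectations` regardless of recovery time.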

A Process for Selecting System Components to Target with Chaos Monkey Attacks

Selecting the right components to target is crucial for maximizing the effectiveness of Chaos Monkey. This process should be systematic and risk-aware.

- Identify Critical Components: Determine the components that are most critical to the system’s functionality and user experience. This includes services that handle user authentication, data storage, and core business logic. Prioritize these components for initial testing.

- Analyze Dependencies: Understand the dependencies between different components. Identify which components are critical for the functioning of other components. Consider the impact of a failure in one component on the dependent components.

- Assess Risk: Evaluate the potential impact of a failure in each component. Consider the business impact, the number of users affected, and the potential for data loss. Prioritize components with a higher risk profile.

- Start Small: Begin with less critical components or isolated services. This allows you to gain experience with Chaos Monkey and refine your testing methodology before targeting more critical components.

- Iterate and Expand: Gradually expand the scope of the experiments to include more critical components and complex scenarios. Continuously evaluate the results and adjust the testing strategy based on the findings.

- Use a Risk Matrix: Create a risk matrix to prioritize components for testing. The matrix should consider the likelihood of failure and the impact of failure. Components with a high likelihood and high impact should be prioritized.

- Monitor and Alert: Implement robust monitoring and alerting systems to detect failures and performance degradation. This allows you to quickly identify and respond to any issues that arise during Chaos Monkey experiments.
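The risk matrix mentioned above can be reduced to a simple score for ranking. The sketch below assumes a 1 to 5 scale for both likelihood and impact and multiplies them; the scale, the function names, and the component names in the usage note are illustrative assumptions rather than a standard.

```python
from typing import Dict, List, Tuple


def risk_score(likelihood: int, impact: int) -> int:
    """Score a component on a 1-5 likelihood and 1-5 impact scale."""
    for value in (likelihood, impact):
        if not 1 <= value <= 5:
            raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


def prioritize(components: Dict[str, Tuple[int, int]]) -> List[str]:
    """Return component names ordered from highest to lowest risk score."""
    return sorted(components,
                  key=lambda name: risk_score(*components[name]),
                  reverse=True)
```

For instance, `prioritize({"auth": (2, 5), "search": (3, 2), "payments": (4, 5)})` ranks payments first, then auth, then search. Note that this ranking reflects risk only; following the "Start Small" advice, the first experiments may still target lower-ranked components.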

Setting the Frequency and Duration of Chaos Monkey Attacks

The frequency and duration of Chaos Monkey attacks must be carefully chosen to balance the need for thorough testing with the risk of disrupting normal operations. The ideal settings depend on the system’s characteristics and the goals of the experiment.

- Start with Low Frequency and Short Duration: Begin with a low frequency (e.g., once per day or week) and a short duration (e.g., a few minutes). This minimizes the risk of causing significant disruptions.

- Consider the System’s Recovery Time: The duration of the attacks should be long enough to allow the system to recover from the simulated failures. However, it should not be so long that it causes prolonged disruptions.

- Gradually Increase Frequency and Duration: As you gain confidence in the system’s resilience, gradually increase the frequency and duration of the attacks. This allows you to test the system’s ability to handle more frequent and sustained failures.

- Automate Attack Scheduling: Use automation to schedule and execute the Chaos Monkey attacks. This ensures that the experiments are run consistently and on a regular basis.

- Use a Ramp-Up Period: When increasing the frequency or duration of attacks, use a ramp-up period. This involves gradually increasing the intensity of the attacks over time, allowing the system to adapt to the changes.

- Monitor Performance Closely: During the experiments, closely monitor the system’s performance. If you observe any significant performance degradation or failures, immediately stop the attacks and investigate the root cause.

- Analyze the Results: After each experiment, analyze the results to identify any weaknesses or areas for improvement. Use the findings to refine the attack frequency and duration for future experiments.

- Examples:

- Example 1: For a web application, you might start by terminating one instance of a web server every hour and observing the system for 5 minutes afterward while it recovers.

- Example 2: For a database cluster, you might start by introducing network latency of 100ms for 10 minutes every 6 hours.
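
The ramp-up advice above can be made concrete with a small planning helper. The sketch below assumes a simple linear ramp measured in weeks; the `AttackWindow` type, the `ramp_up` function, and the numbers in the usage example are hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackWindow:
    week: int
    attacks_per_week: int
    duration_minutes: int


def ramp_up(weeks, start_attacks, max_attacks, start_minutes, max_minutes):
    """Linearly increase attack frequency and duration over `weeks` weeks."""
    if weeks < 2:
        raise ValueError("a ramp needs at least two weeks")
    plan = []
    for i in range(weeks):
        fraction = i / (weeks - 1)  # 0.0 in the first week, 1.0 in the last
        attacks = round(start_attacks + fraction * (max_attacks - start_attacks))
        minutes = round(start_minutes + fraction * (max_minutes - start_minutes))
        plan.append(AttackWindow(i + 1, attacks, minutes))
    return plan


# Hypothetical plan: from 1 attack of 5 minutes to 5 attacks of 25 minutes.
for window in ramp_up(weeks=5, start_attacks=1, max_attacks=5,
                      start_minutes=5, max_minutes=25):
    print(window)
```

A plan like this is only a starting point. If monitoring shows significant degradation at any step, the sensible move is to hold or step back rather than continue the ramp.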

Monitoring and Logging Chaos Monkey Activities

Monitoring and logging are crucial components of a successful Chaos Monkey implementation. They provide valuable insights into system behavior during attacks, enabling you to understand the impact of failures, identify vulnerabilities, and refine your resilience strategies. Without effective monitoring and logging, the benefits of Chaos Monkey are significantly diminished, as you lose the ability to interpret results and make informed decisions.

Implementing Monitoring Tools for Tracking System Behavior

To effectively monitor system behavior during Chaos Monkey attacks, you need to integrate appropriate monitoring tools. The selection of tools depends on your existing infrastructure and specific needs.

- Selecting Monitoring Tools: Consider tools that align with your existing infrastructure and support the technologies your system utilizes. Popular choices include Prometheus, Grafana, Datadog, New Relic, and the monitoring solutions provided by your cloud provider (e.g., Amazon CloudWatch, Azure Monitor, Google Cloud Monitoring).

- Tool Integration: Ensure seamless integration of the chosen tools with your applications and infrastructure. This typically involves installing agents, configuring data collection, and setting up alerts.

- Real-time Monitoring: Implement real-time monitoring to observe system behavior during Chaos Monkey attacks. Real-time data provides instant feedback on the impact of injected failures.

- Alerting and Notifications: Configure alerts to notify you of critical events or deviations from expected behavior. Alerts should be specific, actionable, and routed to the appropriate teams.

Essential Metrics to Monitor During a Chaos Monkey Experiment

Monitoring the right metrics is essential for understanding the impact of Chaos Monkey experiments. Focus on metrics that reflect system health, performance, and user experience.

- Service Availability: Track the availability of your services. Monitor uptime, downtime, and the percentage of successful requests.

- Error Rates: Monitor the rate of errors, including HTTP error codes (e.g., 500, 503) and application-specific errors. High error rates indicate potential issues.

- Response Times: Measure the time it takes for your services to respond to requests. Monitor average, median, and percentile response times.

- Throughput: Measure the volume of requests processed by your services. Monitor requests per second (RPS) or transactions per second (TPS).

- Resource Utilization: Monitor resource usage, including CPU utilization, memory usage, disk I/O, and network traffic. Identify bottlenecks and resource exhaustion.

- Dependencies: Monitor the health of external dependencies that your system relies on.

- User Experience: Track metrics that reflect the user experience, such as the number of active users, the time it takes to complete key tasks, and any reported errors or issues.

- Business Metrics: Monitor key business metrics such as conversion rates, revenue, and customer satisfaction scores. These metrics help assess the impact of failures on your business.
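
As a rough illustration of how several of these metrics relate to raw request data, the sketch below derives availability, error rate, response-time percentiles, and throughput from a list of request records. In practice a monitoring system computes these for you; the `Request` type, the choice to count only 5xx statuses as errors, and the nearest-rank percentile method are assumptions of this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    status: int        # HTTP status code
    latency_ms: float  # time taken to respond


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(requests, window_seconds):
    """Summarize the requests observed during one measurement window."""
    if not requests:
        raise ValueError("no requests recorded in this window")
    total = len(requests)
    errors = sum(1 for r in requests if r.status >= 500)  # assumption: 5xx only
    latencies = [r.latency_ms for r in requests]
    return {
        "availability_pct": 100.0 * (total - errors) / total,
        "error_rate_pct": 100.0 * errors / total,
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": total / window_seconds,
    }


# Hypothetical sample: ten requests in a five-second window, two of them failing.
sample = [Request(200, 10.0 * i) for i in range(1, 9)]
sample += [Request(503, 90.0), Request(503, 100.0)]
print(summarize(sample, window_seconds=5))
```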

Creating Effective Dashboards for Visualizing Chaos Monkey Experiment Results

Visualizing the results of your Chaos Monkey experiments through dashboards is crucial for quickly understanding the impact of attacks and identifying areas for improvement. Effective dashboards present key metrics in an easy-to-understand format.

- Dashboard Design Principles: Design dashboards that are clear, concise, and easy to interpret. Use appropriate chart types (e.g., line charts for trends, bar charts for comparisons, and gauges for key indicators).

- Key Metrics Display: Include the essential metrics mentioned earlier, such as service availability, error rates, response times, and resource utilization.

- Real-time Updates: Ensure dashboards are updated in real-time to reflect the current state of the system during the experiment.

- Comparison and Analysis: Enable the ability to compare metrics before, during, and after the Chaos Monkey attack to assess the impact.

- Annotations and Events: Annotate dashboards with the start and end times of Chaos Monkey attacks, and any significant events that occurred during the experiment.

- Example: A dashboard might display a line chart of service availability over time, with annotations indicating the start and end times of a Chaos Monkey experiment that randomly terminates instances. Alongside, a gauge could display the current error rate.

- Tool-Specific Examples:

- Grafana: Grafana dashboards can be configured to display metrics from various data sources (e.g., Prometheus, InfluxDB). You can create panels for each metric, using different chart types, and set up alerts based on threshold values.

- Datadog: Datadog dashboards allow you to create visualizations from various sources, including application metrics, infrastructure metrics, and logs. Datadog also provides pre-built dashboards for common use cases.

Setting Up Logging to Capture Detailed Information About Chaos Monkey Activities

Comprehensive logging is essential for capturing detailed information about Chaos Monkey activities, providing valuable insights into the root causes of failures and enabling effective debugging.

- Logging Levels: Use appropriate logging levels (e.g., DEBUG, INFO, WARN, ERROR) to capture different levels of detail. Log DEBUG information for in-depth troubleshooting, INFO for general events, WARN for potential issues, and ERROR for critical failures.

- Log Structure: Structure your logs to include relevant information, such as timestamps, log levels, service names, request IDs, and error messages. Consider using a standardized logging format like JSON for easier parsing and analysis.

- Chaos Monkey-Specific Logging: Log all Chaos Monkey activities, including the type of attack, the target resources, the start and end times of the attack, and any relevant configuration parameters.

- Application Logging: Enhance your application’s logging to capture events related to Chaos Monkey attacks. Log events such as failed requests, error messages, and service unavailability.

- Infrastructure Logging: Integrate infrastructure logging, such as server logs, network logs, and container logs, to capture system-level events.

- Centralized Logging: Implement centralized logging using a log aggregation tool like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk. This enables you to search, analyze, and visualize logs from multiple sources.

- Example: A log entry might include:

{"timestamp": "2024-01-20T10:00:00Z", "level": "ERROR", "service": "payment-service", "request_id": "12345", "message": "Failed to process payment", "chaos_monkey_attack": "instance-termination", "target_instance": "i-0abcdef1234567890"}

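One way to produce structured entries like the example above is a custom formatter for Python's standard `logging` module. This is a minimal sketch under stated assumptions: the `JsonFormatter` class and the set of extra field names are illustrative choices, not something Chaos Monkey provides.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    # Optional fields copied from the record when present (names are assumptions).
    EXTRA_FIELDS = ("service", "request_id", "chaos_monkey_attack", "target_instance")

    def format(self, record):
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        entry = {
            "timestamp": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        for field in self.EXTRA_FIELDS:
            if hasattr(record, field):
                entry[field] = getattr(record, field)
        return json.dumps(entry)


logger = logging.getLogger("chaos-demo")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# Values passed via `extra` become attributes on the log record.
logger.error(
    "Failed to process payment",
    extra={
        "service": "payment-service",
        "request_id": "12345",
        "chaos_monkey_attack": "instance-termination",
        "target_instance": "i-0abcdef1234567890",
    },
)
```

Emitting one JSON object per line keeps the output easy to ingest for log aggregation tools, which can then filter on fields such as `chaos_monkey_attack`.
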
Analyzing Results and Interpreting Findings

Analyzing the data collected during Chaos Monkey experiments is crucial for understanding your system’s resilience. This analysis allows you to identify weaknesses, validate improvements, and refine your testing strategies. The goal is to move beyond simply observing failures and to gain actionable insights into system behavior under stress.

Data Analysis Techniques

Effective data analysis involves several techniques to extract meaningful insights from the experiment results.

- Data Aggregation: Aggregate data across multiple experiments to identify trends. For example, calculate the average impact of a CPU starvation attack on response times. This can involve using tools like Grafana or Prometheus to visualize the data over time.

- Correlation Analysis: Identify correlations between different metrics. For instance, determine if increased latency in one service correlates with increased error rates in another. This can highlight dependencies and cascading failures within the system. Statistical tools or scripting languages like Python with libraries such as Pandas and NumPy are often used for correlation analysis.

- Time-Series Analysis: Analyze data over time to identify patterns and anomalies. This can reveal performance degradations, intermittent failures, or other time-dependent behaviors. Techniques like moving averages or anomaly detection algorithms can be applied.

- Root Cause Analysis: Use the collected data to trace the source of failures. This often involves examining logs, monitoring metrics, and reproducing the failure in a controlled environment. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk are valuable for log analysis.
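
Two of the techniques above, correlation analysis and moving averages, are simple enough to sketch with the Python standard library. Libraries such as Pandas and NumPy offer equivalent, more capable functions; the sample numbers below are invented purely for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


def moving_average(values, window):
    """Simple moving average; returns len(values) - window + 1 points."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Hypothetical per-minute samples taken during one experiment.
upstream_latency_ms = [120, 125, 180, 340, 410, 390, 150, 130]
downstream_error_pct = [0.1, 0.1, 0.4, 2.3, 3.1, 2.8, 0.3, 0.1]

print("correlation:", round(pearson(upstream_latency_ms, downstream_error_pct), 3))
print("smoothed latency:", moving_average(upstream_latency_ms, window=3))
```

A coefficient close to 1 would suggest that the two services degrade together, which is a hint of a dependency worth investigating rather than proof of causation.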

Key Indicators of System Resilience

Several key indicators help assess system resilience based on the experiment results.

- Mean Time To Recover (MTTR): This measures the average time it takes for the system to recover from a failure. A lower MTTR indicates a more resilient system.

- Error Rate: The percentage of requests that result in errors. A lower error rate during attacks indicates a more robust system.

- Latency: The time it takes for the system to respond to a request. Increased latency during attacks can indicate performance bottlenecks.

- Throughput: The number of requests processed per unit of time. A drop in throughput during attacks indicates that the failure has reduced the system’s capacity to handle load.

- Resource Utilization: Monitor CPU, memory, disk I/O, and network utilization. High resource utilization during attacks may indicate resource exhaustion.

- Impact on Dependent Services: Measure the effect of failures in one service on other services. A resilient system should limit the impact of failures to the affected service.
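
MTTR is straightforward to compute once each experiment records when a failure was detected and when service was restored. The sketch below assumes incidents are available as pairs of timestamps; the function name and sample data are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_recover(incidents):
    """Average recovery time over (detected_at, restored_at) datetime pairs."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum(
        (restored - detected for detected, restored in incidents),
        timedelta(),
    )
    return total / len(incidents)


# Hypothetical incidents from two experiments.
incidents = [
    (datetime(2024, 1, 20, 10, 0), datetime(2024, 1, 20, 10, 4)),
    (datetime(2024, 1, 21, 10, 0), datetime(2024, 1, 21, 10, 6)),
]
print(mean_time_to_recover(incidents))
```

Tracking this value across successive experiments shows whether fixes and automation are actually shortening recovery.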

Interpreting Experiment Findings and Identifying Areas for Improvement

Interpreting the findings requires a structured approach to translate raw data into actionable insights.

- Establish Baselines: Before running Chaos Monkey experiments, establish baseline performance metrics under normal operating conditions. This provides a reference point for comparison.

- Categorize Failures: Classify failures based on their impact and root cause. For example, categorize failures as “service unavailable,” “data corruption,” or “performance degradation.”

- Prioritize Improvements: Prioritize areas for improvement based on the severity and frequency of failures. Focus on addressing the most critical issues first.

- Implement Fixes and Re-test: After implementing fixes, re-run the Chaos Monkey experiments to validate the effectiveness of the changes. This iterative process helps to ensure continuous improvement.
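
Comparing experiment measurements against the baseline can be as simple as computing the percent change per metric and flagging anything outside a tolerance. The metric names and the 10 percent tolerance below are assumptions for the sake of the example.

```python
def compare_to_baseline(baseline, experiment):
    """Percent change for every metric present in both measurements."""
    changes = {}
    for name, base_value in baseline.items():
        if name in experiment and base_value != 0:
            changes[name] = 100.0 * (experiment[name] - base_value) / base_value
    return changes


def outside_tolerance(changes, tolerance_pct):
    """Keep only the metrics that moved by more than the tolerance."""
    return {
        name: change
        for name, change in changes.items()
        if abs(change) > tolerance_pct
    }


# Hypothetical measurements taken before and during an experiment.
baseline = {"p95_ms": 200.0, "throughput_rps": 100.0}
during_attack = {"p95_ms": 250.0, "throughput_rps": 95.0}

changes = compare_to_baseline(baseline, during_attack)
print(outside_tolerance(changes, tolerance_pct=10.0))
```

Re-running the same comparison after a fix gives a direct check on whether the change reduced the deviation.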

Distinguishing Expected and Unexpected System Behavior

Understanding the difference between expected and unexpected behavior is crucial for effective analysis.

- Expected Behavior: Expected behavior is what the system is designed to handle gracefully. This includes things like temporary service unavailability, reduced performance during high load, or automatic failover to a backup instance. The expected behavior should be clearly defined in the system’s design and documented in runbooks.

- Unexpected Behavior: Unexpected behavior indicates a weakness in the system. This can include cascading failures, data corruption, or unexpected downtime. This can be caused by a variety of factors, such as:

- Unforeseen Dependencies: A failure in one service unexpectedly impacts another service.

- Resource Exhaustion: A resource (e.g., CPU, memory, network) becomes fully utilized, leading to performance degradation or failures.

- Logic Errors: Bugs in the code cause unexpected behavior under stress.

- Example: Consider a system that uses a database. If a Chaos Monkey experiment simulates a database outage, the expected behavior might be for the system to automatically failover to a replica database. Unexpected behavior could be data loss or widespread service downtime.

Best Practices for Chaos Monkey Implementation

Implementing Chaos Monkey effectively requires careful planning and execution to minimize risks and maximize the benefits of system resilience testing. Following best practices ensures a safe and controlled environment for these experiments, preventing unintended consequences and allowing for meaningful insights into system behavior. This section provides a comprehensive guide to safely integrating Chaos Monkey into production environments.

Defining the Scope and Limitations of Chaos Monkey Experiments

Carefully defining the scope and limitations of Chaos Monkey experiments is paramount to ensure that the tests remain within acceptable bounds and do not jeopardize the stability of the production environment. This involves establishing clear boundaries for the types of attacks, the target systems, and the duration of the tests.

- Start Small and Iterate: Begin with focused experiments on non-critical services or components. Gradually increase the scope and complexity of the attacks as confidence grows and the team gains experience. This incremental approach allows for continuous learning and adaptation. For instance, start by terminating a single instance of a web server and observe the impact. Then, you can progress to more complex scenarios, such as injecting latency or simulating network partitions.

- Identify Critical Systems and Services: Determine which systems and services are essential and should be excluded from initial Chaos Monkey experiments. Prioritize testing less critical components first to mitigate potential risks. A good approach is to create a “Tier 1” and “Tier 2” classification, where Tier 1 services are considered essential and Tier 2 services are less critical.

- Establish Clear Objectives: Define specific goals for each experiment. What are you trying to learn or validate? Are you testing the effectiveness of your failover mechanisms, the resilience of your database, or the performance impact of increased latency? Clear objectives provide a framework for designing the experiments and evaluating the results.

- Define Experiment Duration: Set a reasonable time limit for each experiment. This prevents attacks from running indefinitely and causing prolonged disruption. Short experiments also make it easier to analyze the results and identify the root causes of any issues. A typical experiment might last from a few minutes to an hour, depending on the type of attack and the system being tested.

- Implement Rollback Strategies: Have pre-defined rollback plans in place to quickly revert any changes made by Chaos Monkey. This is crucial in case an experiment has unintended consequences. For example, if a database server is terminated, a rollback strategy might involve automatically restoring the server from a backup.

- Document Experiment Parameters: Maintain detailed documentation of each experiment, including the attack type, target systems, duration, and expected outcomes. This documentation is invaluable for future reference and analysis.

Managing and Controlling the Blast Radius of Chaos Monkey Attacks

Controlling the “blast radius” of Chaos Monkey attacks is critical to prevent widespread system outages and minimize the impact on users. This involves implementing safeguards to contain the effects of the attacks and limit the scope of potential damage.

- Use Canary Deployments: Deploy Chaos Monkey to a subset of your infrastructure (e.g., a canary deployment) before rolling it out to the entire production environment. This allows you to test the experiments on a small scale and identify any unforeseen issues before they affect a large number of users.

- Implement Circuit Breakers: Integrate circuit breakers into your services to automatically stop traffic to a failing service. Circuit breakers prevent cascading failures by isolating unhealthy components and preventing them from affecting other parts of the system.

- Rate Limiting: Apply rate limiting to the Chaos Monkey attacks to prevent them from overwhelming the system. For example, limit the number of instances that can be terminated per minute. This caps how much capacity can be lost at once and gives recovery mechanisms time to replace terminated instances.

- Define Recovery Mechanisms: Implement automated recovery mechanisms to quickly restore services to a healthy state if they are impacted by an attack. This might involve automatically restarting failed instances or rerouting traffic to healthy instances.

- Monitoring and Alerting: Set up comprehensive monitoring and alerting to detect any anomalies or performance degradations caused by the Chaos Monkey attacks. Alerting should be configured to notify the appropriate teams immediately if any issues arise.

- Permissions and Access Control: Restrict access to Chaos Monkey tools and configurations to a limited number of authorized individuals. This helps prevent unauthorized or accidental use of the tools.
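
To make the circuit breaker safeguard more concrete, here is a deliberately small sketch of the pattern. It is an illustration only: production systems would normally rely on an established resilience library or service mesh feature, and the class name, thresholds, and injectable clock are assumptions of this example.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after_seconds=30.0,
                 clock=time.monotonic):
        self._threshold = failure_threshold
        self._reset_after = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Reset period elapsed: allow one trial call (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would wrap each request to a dependency, for example `breaker.call(fetch_inventory, item_id)` with a hypothetical `fetch_inventory` function, and treat `CircuitOpenError` as a signal to serve a fallback instead of waiting on a failing service.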

Communicating Experiment Results and Findings to Stakeholders

Effective communication of Chaos Monkey experiment results is crucial for fostering a culture of resilience and ensuring that stakeholders understand the value of these tests. This involves sharing findings in a clear, concise, and actionable manner.

- Create Detailed Reports: Generate comprehensive reports that summarize the experiment objectives, the attacks performed, the results observed, and any lessons learned. These reports should be easily understandable by both technical and non-technical stakeholders.

- Visualize Data: Use graphs, charts, and dashboards to visualize the experiment results. This makes it easier to identify trends and patterns in the data.

- Provide Actionable Recommendations: Translate the findings into concrete recommendations for improving system resilience. This might involve fixing bugs, improving monitoring, or refining failover mechanisms.

- Share Results with Relevant Teams: Disseminate the experiment results to all relevant teams, including engineering, operations, and product management. This ensures that everyone is aware of the system’s strengths and weaknesses.

- Regular Feedback Loops: Establish regular feedback loops to discuss the experiment results and gather input from stakeholders. This helps to refine the experiments and ensure that they are aligned with the business goals.

- Track Key Metrics: Monitor key performance indicators (KPIs) related to system resilience, such as mean time to recovery (MTTR) and the number of incidents. Track these metrics over time to measure the impact of Chaos Monkey experiments.

Integrating Chaos Monkey with Existing Systems

Integrating Chaos Monkey effectively is crucial for maximizing its benefits and ensuring a smooth workflow. This involves adapting Chaos Monkey to your specific infrastructure, incorporating it into your monitoring and alerting systems, and automating its execution within your development pipeline. The goal is to create a system where resilience testing becomes an integral, non-disruptive part of your operations.

Integrating Chaos Monkey with Different Cloud Platforms

Cloud platforms offer various tools and APIs that enable seamless integration with Chaos Monkey. The specific integration methods vary depending on the platform (AWS, Azure, GCP), but the underlying principles remain consistent: leveraging platform-specific services to manage resources, trigger attacks, and monitor results.

- AWS Integration: AWS provides a robust ecosystem for integrating Chaos Monkey. You can utilize services like:

- IAM (Identity and Access Management): Configure IAM roles to grant Chaos Monkey the necessary permissions to access and modify resources (e.g., describing and terminating instances). It’s essential to follow the principle of least privilege, granting only the minimum permissions required.

- EC2 (Elastic Compute Cloud): Use the EC2 API to identify and terminate instances. Chaos Monkey can target specific instances based on tags or other criteria, simulating failures.

- Lambda: Deploy Chaos Monkey logic as serverless functions using Lambda. This approach offers scalability and simplifies deployment.

- CloudWatch: Monitor the impact of Chaos Monkey attacks using CloudWatch metrics and logs. Create dashboards to visualize the results and set up alerts for unexpected behavior.

- Azure Integration: Azure offers similar integration capabilities, using services like:

- Microsoft Entra ID (formerly Azure Active Directory): Manage access control and permissions for Chaos Monkey using Microsoft Entra ID.

- Virtual Machines (VMs): Use Azure Resource Manager (ARM) and the Azure APIs to target and manage VMs. Chaos Monkey can simulate VM failures or inject network issues.

- Azure Functions: Deploy Chaos Monkey as serverless functions using Azure Functions, similar to AWS Lambda.

- Azure Monitor: Utilize Azure Monitor to track metrics, logs, and alerts related to Chaos Monkey activities.

- GCP Integration: Google Cloud Platform (GCP) provides a powerful set of tools for integration:

- Cloud IAM: Manage access control and permissions using Cloud IAM.

- Compute Engine: Use the Compute Engine API to target and manipulate virtual machines. Chaos Monkey can be used to simulate various failure scenarios.

- Cloud Functions: Deploy Chaos Monkey as serverless functions using Cloud Functions.

- Cloud Monitoring: Use Cloud Monitoring to track metrics, logs, and create alerts.
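
As an example of the tag-based targeting described in the AWS bullets, the sketch below selects one running EC2 instance that carries an opt-in tag and terminates it. It is a hand-rolled illustration, not Chaos Monkey's own implementation. The function names and the tag are hypothetical; `describe_instances` and `terminate_instances` are the EC2 client calls as exposed by boto3, and pagination of large result sets is ignored here for brevity.

```python
import random


def running_instance_ids(ec2_client, tag_key, tag_value):
    """List IDs of running instances that carry the given tag."""
    response = ec2_client.describe_instances(
        Filters=[
            {"Name": f"tag:{tag_key}", "Values": [tag_value]},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )
    return [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]


def terminate_random_instance(ec2_client, tag_key, tag_value,
                              dry_run=True, rng=random):
    """Pick one tagged instance at random; terminate it unless dry_run is set."""
    candidates = running_instance_ids(ec2_client, tag_key, tag_value)
    if not candidates:
        return None
    victim = rng.choice(candidates)
    if not dry_run:
        ec2_client.terminate_instances(InstanceIds=[victim])
    return victim
```

With boto3 installed and credentials configured, the client would typically be created with `boto3.client("ec2")` and passed in, for example `terminate_random_instance(client, "chaos-opt-in", "true")`, where the tag name is an assumption. Defaulting to `dry_run=True` and requiring an explicit opt-in tag are two small ways to keep the blast radius under control.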

Integrating Chaos Monkey with Various Monitoring and Alerting Systems

Integrating Chaos Monkey with your existing monitoring and alerting systems is crucial for providing real-time insights into the impact of attacks and for automating responses. This allows you to quickly identify and address vulnerabilities in your system.

- Integration with Monitoring Systems:

- Prometheus and Grafana: Configure Prometheus to scrape metrics from your application and infrastructure. Create Grafana dashboards to visualize the impact of Chaos Monkey attacks on key performance indicators (KPIs) such as latency, error rates, and resource utilization.

- Datadog: Utilize Datadog’s integrations to monitor metrics, logs, and traces. Create monitors to alert you when specific metrics deviate from expected values during Chaos Monkey experiments. Datadog allows for flexible dashboards and alerts.

- New Relic: Integrate with New Relic to monitor application performance, identify anomalies, and correlate them with Chaos Monkey events. Set up alerts to notify you of issues detected during testing.

- Integration with Alerting Systems:

- PagerDuty: Configure PagerDuty to receive alerts from your monitoring systems when Chaos Monkey experiments reveal issues. Use PagerDuty to trigger incident response workflows and notify the appropriate teams.

- Slack/Microsoft Teams: Integrate your monitoring and alerting systems with communication platforms like Slack or Microsoft Teams. Receive real-time notifications about Chaos Monkey events and their impact, allowing for quick team communication.

- Custom Alerting: Develop custom alerting mechanisms using webhooks or APIs to integrate with your existing systems. This allows you to tailor alerts to your specific needs and workflows.
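
A custom webhook alert can be sketched with the standard library alone. The example below assumes a receiver that accepts a JSON body with a `text` field, which is the shape Slack incoming webhooks expect; other systems will need a different payload. The function names and the message wording are illustrative.

```python
import json
import urllib.request


def build_alert(experiment, metric, observed, threshold):
    """Build a JSON-serializable alert payload (assumed {"text": ...} shape)."""
    return {
        "text": (
            f"Chaos experiment '{experiment}': {metric} is {observed}, "
            f"above the threshold of {threshold}"
        )
    }


def send_alert(webhook_url, payload, timeout=5.0):
    """POST the payload to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


payload = build_alert("instance-termination", "error_rate_pct", 4.2, 1.0)
print(json.dumps(payload))
```

Calling `send_alert(url, payload)` with the webhook URL issued by your chat or incident tool would deliver the message; the URL is deliberately left out here because it is specific to each workspace.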

Automating Chaos Monkey Tests as Part of a CI/CD Pipeline

Automating Chaos Monkey tests within your Continuous Integration/Continuous Deployment (CI/CD) pipeline ensures that resilience testing becomes a regular part of your development process. This helps to catch issues early and prevent them from reaching production.

- Define Test Scenarios: Identify the specific failure scenarios you want to test (e.g., instance termination, network latency, database failures). Create well-defined test cases that simulate these scenarios.

- Choose a CI/CD Tool: Select a CI/CD tool like Jenkins, GitLab CI, CircleCI, or GitHub Actions.

- Integrate Chaos Monkey with the CI/CD Tool:

- Scripting: Write scripts (e.g., Bash, Python) to execute Chaos Monkey experiments. These scripts will trigger the attacks and monitor the results.

- API Calls: Utilize the Chaos Monkey API or command-line interface (CLI) to control the experiments.

- Configuration Management: Store Chaos Monkey configuration files (e.g., experiment definitions, target selection criteria) in your version control system (e.g., Git).

- Define Test Stages:

- Build Stage: Compile and build your application code.

- Test Stage: Run unit tests, integration tests, and Chaos Monkey tests.

- Deployment Stage: Deploy the application to a staging or production environment (after successful testing).

- Configure Test Execution:

- Triggering Chaos Monkey: Configure the CI/CD pipeline to automatically trigger Chaos Monkey tests once the build succeeds and the unit and integration tests pass. This could be after every code commit or on a scheduled basis.

- Target Selection: Define the targets for the Chaos Monkey attacks. This might include specific instances, services, or regions.

- Experiment Duration: Specify the duration of the Chaos Monkey experiments.

- Monitor Results and Reporting:

- Capture Results: Capture the results of the Chaos Monkey tests (e.g., metrics, logs, error messages).

- Generate Reports: Generate reports that summarize the findings of the tests.

- Fail the Build: Configure the CI/CD pipeline to fail the build if any critical issues are detected during the Chaos Monkey tests.

- Continuous Improvement:

- Analyze Results: Regularly analyze the results of the Chaos Monkey tests to identify areas for improvement.

- Refine Test Scenarios: Refine your test scenarios based on the findings of the Chaos Monkey experiments.

- Iterate: Continuously iterate on your Chaos Monkey implementation to improve its effectiveness.
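
The "Fail the Build" step usually comes down to a script that inspects the captured results and returns a non-zero exit code when a limit is breached. The following sketch assumes the results arrive as a dictionary of metric values; the metric names and limits in `THRESHOLDS` are hypothetical.

```python
import sys

# Hypothetical limits a chaos test run must stay within.
THRESHOLDS = {"error_rate_pct": 1.0, "p95_ms": 500.0}


def find_violations(results, thresholds):
    """Describe every metric that is missing or above its limit."""
    problems = []
    for name, limit in thresholds.items():
        if name not in results:
            problems.append(f"{name}: no measurement recorded")
        elif results[name] > limit:
            problems.append(f"{name}: {results[name]} exceeds limit {limit}")
    return problems


def gate(results, thresholds):
    """Return a process exit code: 0 to pass the build, 1 to fail it."""
    problems = find_violations(results, thresholds)
    for problem in problems:
        print(problem, file=sys.stderr)
    return 1 if problems else 0
```

In a pipeline step, the script would end with something like `sys.exit(gate(results, THRESHOLDS))`, since Jenkins, GitLab CI, CircleCI, and GitHub Actions all treat a non-zero exit code as a failed step. Treating a missing measurement as a violation avoids passing a build simply because monitoring was down.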

Configuring Chaos Monkey for Specific Application Architectures

The configuration of Chaos Monkey should be tailored to the specific architecture of your application. This involves identifying the key components, understanding their dependencies, and targeting the appropriate resources for testing.

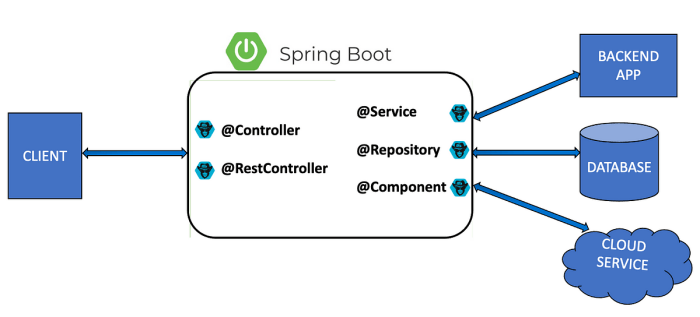

- Microservices Architecture:

- Identify Services: Map out all the microservices that make up your application.

- Define Dependencies: Understand the dependencies between the services.

- Target Specific Services: Use Chaos Monkey to target individual services. For example, you can terminate an instance of a specific service or introduce latency in its network communication.

- Test Communication: Test the communication between services. Simulate network failures or service unavailability to see how the system handles cascading failures.

- Example: A microservices-based e-commerce application. Chaos Monkey could terminate the “Order Processing” service to see how the system handles failed order submissions and retries.

- Monolithic Architecture:

- Identify Key Components: Identify the critical components of your monolithic application (e.g., database, web server, application server).

- Target Infrastructure: Use Chaos Monkey to target the infrastructure supporting the application. This could include terminating virtual machines or injecting network latency.

- Test Resource Consumption: Simulate high load conditions by injecting CPU or memory stress to see how the application handles increased resource consumption.

- Example: A monolithic web application. Chaos Monkey could be used to simulate a database outage or introduce network latency to the database connection.

- Database-Driven Applications:

- Identify Database Servers: Identify the database servers used by your application.

- Target Database Operations: Use Chaos Monkey to simulate database failures, such as:

- Terminating database instances.

- Introducing network latency to database connections.

- Simulating database read/write errors.

- Test Data Consistency: Verify that data consistency is maintained during failures.

- Example: An application that relies on a relational database. Chaos Monkey could be used to simulate a database failover to test the application’s ability to handle the switch.

- Message Queue-Based Systems:

- Identify Message Brokers: Identify the message brokers (e.g., Kafka, RabbitMQ) used by your application.

- Target Message Queue Components: Use Chaos Monkey to target the message queue components, such as:

- Terminating message broker instances.

- Introducing network latency to message queue connections.

- Simulating message loss or delays.

- Test Message Processing: Verify that messages are processed correctly even during failures.

- Example: An application that uses Kafka for event processing. Chaos Monkey could be used to simulate a Kafka broker outage to test the application’s ability to handle message retries and failover.
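
For the microservices case, the "Define Dependencies" step can feed directly into experiment planning: given a dependency map, it is possible to estimate which services could be affected when one of them is terminated. The sketch below uses a breadth-first search over a hypothetical e-commerce dependency map; the service names are invented for the example.

```python
from collections import deque


def affected_services(depends_on, failed):
    """Return every service that depends, directly or transitively, on `failed`."""
    # Invert the map: for each service, who depends on it?
    dependents = {}
    for service, dependencies in depends_on.items():
        for dependency in dependencies:
            dependents.setdefault(dependency, set()).add(service)

    affected = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service != failed and service not in affected:
                affected.add(service)
                queue.append(service)
    return affected


# Hypothetical dependency map: each service lists what it calls.
depends_on = {
    "storefront": ["order-processing", "catalog"],
    "order-processing": ["payments", "inventory"],
    "payments": [],
    "inventory": [],
    "catalog": [],
}

print(sorted(affected_services(depends_on, "payments")))
```

The result is an upper bound on the expected blast radius. If an experiment shows impact on a service outside this set, that points to an unforeseen dependency worth documenting.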

Tools and Technologies for Chaos Engineering

The effective implementation of Chaos Engineering hinges on the availability and utilization of appropriate tools and technologies. These tools automate and streamline the process of injecting failures, monitoring system behavior, and analyzing the impact of these disruptions. Choosing the right tools is crucial for successful Chaos Engineering, as it allows for the efficient identification and mitigation of vulnerabilities in a system.

Popular Chaos Engineering Tools and Their Respective Features

Several tools have emerged to facilitate Chaos Engineering practices. These tools vary in their features, capabilities, and the environments they support. Understanding these differences is vital for selecting the most appropriate tool for a given project.

- Chaos Monkey (Netflix): The original Chaos Engineering tool. It randomly terminates instances in production to test the resilience of services. Features include automated instance termination, integration with cloud platforms, and configurable termination schedules. It was originally built for Netflix’s AWS environment, and the current open-source release is deployed and configured through Spinnaker.

- Gremlin: A commercial platform offering a wide range of attack types, including CPU, memory, disk I/O, and network attacks. It supports multiple cloud providers and offers advanced features like scheduled attacks, attack orchestration, and detailed reporting. Gremlin provides a user-friendly interface and is suitable for teams of all sizes.

- Chaos Mesh: An open-source cloud-native Chaos Engineering platform built on Kubernetes. It allows for the injection of various faults, such as network delays, pod failures, and resource exhaustion. Chaos Mesh offers a declarative approach, making it easy to define and manage experiments. It is specifically designed for Kubernetes environments.

- LitmusChaos: Another open-source Chaos Engineering platform focused on Kubernetes. LitmusChaos provides a library of pre-built Chaos experiments, supports various Kubernetes operators, and integrates with monitoring and alerting systems. It simplifies the process of running Chaos experiments in Kubernetes.

- PowerfulSeal: An open-source tool from Bloomberg. It automatically finds and deletes pods in Kubernetes clusters, testing the resilience of applications. It is designed to run continuously, providing a constant stream of failures to identify vulnerabilities.

- Kube-monkey: An open-source tool inspired by Netflix’s Chaos Monkey, specifically designed for Kubernetes. Kube-monkey randomly deletes pods in a Kubernetes cluster to test the resilience of applications. It provides a simplified approach to Chaos Engineering within Kubernetes.
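Several of the tools above share the same core idea: pick a random victim from each service that has opted in. The following is a minimal sketch of that selection step only, not the implementation of any listed tool. The names `Instance`, `pick_victims`, and `kill_probability` are hypothetical, and nothing is actually terminated.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """A running instance or pod; `group` is the service it belongs to."""
    name: str
    group: str


def pick_victims(instances, opted_in_groups, kill_probability, rng):
    """Return at most one randomly chosen victim per opted-in group.

    Each opted-in group is hit with probability `kill_probability`;
    groups that have not opted in are never touched.
    """
    by_group = {}
    for inst in instances:
        if inst.group in opted_in_groups:
            by_group.setdefault(inst.group, []).append(inst)

    victims = []
    for group in sorted(by_group):  # sorted for a reproducible iteration order
        if rng.random() < kill_probability:
            victims.append(rng.choice(by_group[group]))
    return victims


if __name__ == "__main__":
    fleet = [
        Instance("api-1", "api"), Instance("api-2", "api"),
        Instance("cart-1", "cart"), Instance("cart-2", "cart"),
        Instance("billing-1", "billing"),  # not opted in, never selected
    ]
    rng = random.Random(42)  # seeded so a dry run can be replayed
    for victim in pick_victims(fleet, {"api", "cart"}, 0.5, rng):
        print(f"would terminate {victim.name} (group {victim.group})")
```

Passing the random generator in as a parameter keeps dry runs repeatable, which helps when reviewing what an experiment would have done before enabling real terminations.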

Comparative Analysis of Different Chaos Monkey Tools

Choosing the right Chaos Engineering tool requires careful consideration of various factors, including the target environment, the types of failures to be injected, the level of automation required, and the available resources. The following table provides a comparative analysis of several popular tools.

| Tool | Primary Focus | Supported Environments | Key Features | Licensing |
|---|---|---|---|---|
| Chaos Monkey (Netflix) | Instance termination and AWS resilience | AWS | Automated instance termination, customizable failure injection rules, integration with cloud platforms. | Open Source (Apache 2.0) |
| Gremlin | Comprehensive fault injection and resilience testing | Multi-cloud (AWS, GCP, Azure) and On-Premise | Various attack types (CPU, memory, network), scheduled attacks, attack orchestration, detailed reporting, user-friendly interface. | Commercial |
| Chaos Mesh | Cloud-native Chaos Engineering for Kubernetes | Kubernetes | Network delays, pod failures, resource exhaustion, declarative approach, easy to define and manage experiments. | Open Source (Apache 2.0) |
| LitmusChaos | Kubernetes-focused Chaos Engineering with pre-built experiments | Kubernetes | Library of pre-built Chaos experiments, Kubernetes operator support, integration with monitoring and alerting systems. | Open Source (Apache 2.0) |
| PowerfulSeal | Automated pod deletion in Kubernetes | Kubernetes | Continuous pod deletion, focuses on application resilience in Kubernetes. | Open Source (Apache 2.0) |
| Kube-monkey | Simplified Chaos Engineering for Kubernetes | Kubernetes | Random pod deletion, straightforward implementation within Kubernetes. | Open Source (Apache 2.0) |

Resources and Tutorials for Getting Started with Different Chaos Engineering Tools

Getting started with Chaos Engineering tools often requires some initial setup and understanding of the tool’s features. Numerous resources are available to help users get started, including official documentation, tutorials, and community forums.

- Chaos Monkey (Netflix):

  - Documentation: Consult the original Netflix Chaos Monkey documentation on GitHub.

  - Tutorials: Search for tutorials on setting up Chaos Monkey in your AWS environment.

- Gremlin:

  - Documentation: Refer to the Gremlin documentation for detailed information on features and usage.

  - Tutorials: Access the Gremlin tutorials and examples to learn about various attack types and experiment design.

  - Example: Gremlin provides a step-by-step guide on their website that helps users perform a simple CPU attack, demonstrating how to identify and resolve performance issues.

- Chaos Mesh:

  - Documentation: Review the Chaos Mesh documentation for installation, configuration, and experiment creation.

  - Tutorials: Follow the tutorials available on the Chaos Mesh website for hands-on experience.

  - Example: The Chaos Mesh documentation offers a tutorial on simulating network latency to test application resilience.

- LitmusChaos:

  - Documentation: Explore the LitmusChaos documentation to learn about the tool’s features and setup.

  - Tutorials: Follow the tutorials and examples provided to run Chaos experiments.

  - Example: LitmusChaos offers a tutorial on using pre-built experiments to test the resilience of a deployment.

- PowerfulSeal:

  - Documentation: Consult the PowerfulSeal documentation on GitHub for setup and usage instructions.

  - Tutorials: Look for tutorials on how to integrate PowerfulSeal into your Kubernetes environment.

  - Example: A tutorial might guide users on how to configure PowerfulSeal to automatically delete pods based on specific criteria.

- Kube-monkey:

  - Documentation: Refer to the Kube-monkey documentation for installation and configuration instructions.

  - Tutorials: Search for tutorials that explain how to deploy and configure Kube-monkey in a Kubernetes cluster.

  - Example: A tutorial may walk through the steps of setting up Kube-monkey to randomly delete pods in a test environment to assess application resilience.

Real-World Case Studies and Examples

Understanding how Chaos Monkey has been implemented in real-world scenarios provides invaluable insights into its practical application and effectiveness. Examining specific examples of successful implementations allows us to learn from the strategies employed and the outcomes achieved. Furthermore, studying instances where Chaos Monkey didn’t perform as expected highlights crucial lessons about planning, execution, and the importance of continuous improvement.

Netflix: Pioneering Chaos Engineering

Netflix is arguably the best-known pioneer of Chaos Engineering, and Chaos Monkey was born out of their need to ensure the resilience of their streaming service. Their architecture, built on Amazon Web Services (AWS), is inherently distributed, making it susceptible to various failures. Netflix’s early adoption of Chaos Monkey was driven by a desire to proactively identify and address potential vulnerabilities in their system. They aimed to build a system that could withstand failures without impacting the user experience.

- Initial Goals: The primary objective was to simulate failures to uncover weaknesses in their distributed architecture, particularly in the face of AWS outages or component failures.

- Implementation: They initially created Chaos Monkey to randomly terminate instances in their production environment. This forced engineers to build systems that were resilient to individual instance failures. Over time, they expanded this into a broader suite of monkeys, known as the Simian Army, that covers other failure modes, such as artificially injected network latency (Latency Monkey).

- Impact: Chaos Monkey significantly improved Netflix’s system resilience. They were able to identify and fix vulnerabilities that would have otherwise gone unnoticed until a real outage occurred. This proactive approach allowed them to maintain a high level of service availability, even during periods of high traffic.

- Evolution: The initial Chaos Monkey evolved into a more sophisticated suite of tools, including Chaos Gorilla (which simulates the failure of an entire availability zone) and Janitor Monkey (which cleans up unused resources).

Amazon: Ensuring E-commerce Resilience

Amazon, another major player in cloud computing and e-commerce, also utilizes Chaos Engineering to maintain the reliability of its vast infrastructure. They apply Chaos Monkey-style failure injection and related tools to test the resilience of their services, especially during peak shopping seasons like Prime Day. Amazon’s use of Chaos Engineering is driven by the need to ensure the availability of their e-commerce platform and associated services. The potential impact of a service outage during peak shopping times is substantial, making resilience a top priority.

- Implementation Focus: Amazon’s Chaos Engineering efforts focus on simulating various failure scenarios, including instance failures, network disruptions, and database issues. They aim to identify and mitigate potential vulnerabilities that could lead to service disruptions.

- Testing Scenarios: They regularly simulate the failure of entire availability zones and test the ability of their systems to automatically failover to other zones. They also test the impact of high traffic loads and the performance of their systems under stress.

- Benefits: Chaos Engineering helps Amazon to proactively identify and fix vulnerabilities, improve the resilience of their systems, and minimize the impact of outages. This results in a more reliable e-commerce platform and a better customer experience.
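The availability-zone test described above comes down to a capacity question: if any one zone disappears, can the remaining instances still carry peak load? The sketch below works through that arithmetic under assumed numbers. The zone names, instance counts, and request rates are hypothetical and are not taken from Amazon.

```python
def surviving_capacity(instances_by_zone, failed_zone, per_instance_rps):
    """Requests per second the fleet can serve after `failed_zone` is lost."""
    remaining = sum(
        count for zone, count in instances_by_zone.items() if zone != failed_zone
    )
    return remaining * per_instance_rps


def zone_failure_report(instances_by_zone, per_instance_rps, peak_rps):
    """For each zone, report whether peak load is still served if it fails."""
    report = {}
    for zone in instances_by_zone:
        capacity = surviving_capacity(instances_by_zone, zone, per_instance_rps)
        report[zone] = capacity >= peak_rps
    return report


if __name__ == "__main__":
    # Hypothetical fleet: instance counts per availability zone.
    fleet = {"zone-a": 6, "zone-b": 6, "zone-c": 3}
    # Assumed figures: each instance serves 100 rps, peak load is 1000 rps.
    for zone, ok in zone_failure_report(fleet, 100, 1000).items():
        status = "survives" if ok else "OVERLOADED"
        print(f"lose {zone}: {status}")
```

With these numbers, losing either of the two larger zones leaves 900 rps of capacity against a 1000 rps peak, so the fleet would need more headroom before a real zone-failure experiment is likely to pass.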

A Detailed Scenario: Simulating a Database Outage

The following scenario walks through a Chaos Monkey experiment focused on database resilience:

Scenario: A retail company, “ExampleCorp,” relies on a relational database (PostgreSQL) to store customer data, product information, and order details. Their application is deployed across multiple servers in a cloud environment.

Experiment Goal: To verify the application’s ability to withstand a database outage and maintain functionality, including processing new orders and allowing customers to view their order history.

Chaos Monkey Action: The Chaos Monkey tool is configured to terminate the primary database instance and keep it unavailable for 10 minutes.

Pre-Experiment Setup: The application uses a database connection pool and has a failover mechanism configured to automatically switch to a standby database instance when the primary instance becomes unavailable.

Execution: Chaos Monkey terminates the primary database instance. The application’s monitoring system detects the outage and triggers the failover mechanism. The application then starts using the standby database.

Monitoring and Logging: During the experiment, the following metrics are monitored:

- Application response times.

- Number of successful and failed transactions.

- Database connection pool usage.

- Error logs, focusing on database connection errors and transaction failures.

Results:

- Expected Outcome: The application continues to function with minimal impact. Users can still browse products, place orders, and view their order history. There might be a slight delay in some operations due to the failover process, but the overall user experience remains acceptable.

- Unexpected Outcome (Potential): If the failover mechanism is not properly configured or the standby database is not up-to-date, the application might experience significant downtime or data loss.

Analysis:

- Successful Implementation: The experiment demonstrates that the failover mechanism works as expected, and the application can tolerate a database outage. The monitoring data confirms that the application continues to process transactions and serve users.

- Unsuccessful Implementation (If Applicable): If the application fails during the outage, the logs are examined to identify the root cause. This might involve investigating the database connection pool configuration, the failover mechanism, or the standby database synchronization process. Based on the findings, the application is updated to address the identified vulnerabilities.

Lessons Learned:

- Regular testing of failover mechanisms is crucial to ensure they work as expected.

- Proper monitoring and logging are essential for identifying and diagnosing issues during experiments.

- The database standby instance must be synchronized with the primary instance to avoid data loss.

- Database connection pool configurations need to be carefully tuned to handle failover scenarios.
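The scenario above can be rehearsed as a small in-memory simulation before touching a real database. The sketch below is illustrative only. `Database`, `FailoverClient`, and `run_experiment` are hypothetical stand-ins, not PostgreSQL or Chaos Monkey APIs, and the outage is modeled by flipping a flag.

```python
class Database:
    """In-memory stand-in for a database instance."""

    def __init__(self, name):
        self.name = name
        self.available = True
        self.rows = []

    def write(self, row):
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        self.rows.append(row)


class FailoverClient:
    """Writes to the active database and promotes the standby on failure."""

    def __init__(self, primary, standby, failover_enabled=True):
        self.active = primary
        self.standby = standby
        self.failover_enabled = failover_enabled
        self.succeeded = 0
        self.failed = 0

    def place_order(self, order_id):
        try:
            self.active.write(order_id)
        except ConnectionError:
            if not (self.failover_enabled and self.standby.available):
                self.failed += 1
                return False
            # Promote the standby and retry the write once.
            self.active, self.standby = self.standby, self.active
            self.active.write(order_id)
        self.succeeded += 1
        return True


def run_experiment(failover_enabled, orders=10, outage_at=4):
    """Place `orders` orders, terminating the primary before order `outage_at`."""
    primary, standby = Database("primary"), Database("standby")
    client = FailoverClient(primary, standby, failover_enabled)
    for order_id in range(orders):
        if order_id == outage_at:
            primary.available = False  # the injected failure
        client.place_order(order_id)
    return client.succeeded, client.failed


if __name__ == "__main__":
    print("with failover:   ", run_experiment(True))
    print("without failover:", run_experiment(False))
```

The sketch deliberately omits replication, so the promoted standby starts without the orders written before the outage. That gap is the data-loss risk named in the lessons above, and a fuller model would need to account for standby synchronization.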

Lessons Learned from Chaos Monkey Implementations

Successful and unsuccessful Chaos Monkey implementations provide valuable lessons for organizations considering or already using this approach.

- Planning is Critical: Before running any Chaos Monkey experiments, organizations must clearly define the goals, scope, and expected outcomes. This includes identifying the systems to be tested, the types of failures to simulate, and the metrics to be monitored.

- Start Small and Iterate: Begin with simple experiments and gradually increase the complexity. This allows engineers to build confidence and learn from each experiment.

- Automate and Integrate: Automate the execution of Chaos Monkey experiments and integrate them into the CI/CD pipeline. This allows for continuous testing and ensures that resilience is a constant focus.

- Communication is Key: Communicate the results of Chaos Monkey experiments to all stakeholders. This includes sharing the findings, the lessons learned, and the actions taken to improve system resilience.

- Monitor and Measure: Implement robust monitoring and logging to track the impact of Chaos Monkey experiments. This data is essential for analyzing the results and identifying areas for improvement.

- Document Everything: Thoroughly document all aspects of Chaos Monkey implementations, including the experiment setup, the results, and the actions taken. This documentation helps to ensure that the knowledge gained is preserved and can be shared with others.

- Unsuccessful Implementations: Unsuccessful implementations can provide valuable insights. Analyze the failures to identify the root causes, and then use this information to improve the design, implementation, and monitoring of future experiments. In some cases, it might be necessary to adjust the scope or approach of Chaos Engineering.
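Several of these lessons (define goals up front, monitor and measure, automate in CI/CD) can be captured in a small experiment definition with an explicit pass criterion. The following is one possible sketch, assuming the monitored metric is a transaction error rate. `ExperimentPlan`, `evaluate`, and `exit_code` are hypothetical names, and the threshold values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExperimentPlan:
    """Goals, scope, and pass criterion agreed before the experiment runs."""
    name: str
    target: str            # system under test
    failure: str           # failure to simulate
    max_error_rate: float  # pass/fail threshold on the monitored metric


def error_rate(succeeded, failed):
    """Fraction of failed transactions; 0.0 when nothing was attempted."""
    total = succeeded + failed
    return failed / total if total else 0.0


def evaluate(plan, baseline, during):
    """Compare metrics observed during the experiment with the baseline.

    `baseline` and `during` are (succeeded, failed) transaction counts.
    Returns a dict suitable for logging or attaching to a CI job.
    """
    observed = error_rate(*during)
    return {
        "experiment": plan.name,
        "baseline_error_rate": error_rate(*baseline),
        "observed_error_rate": observed,
        "passed": observed <= plan.max_error_rate,
    }


def exit_code(result):
    """Map a result to a process exit code a CI pipeline could act on."""
    return 0 if result["passed"] else 1


if __name__ == "__main__":
    plan = ExperimentPlan(
        name="db-primary-termination",
        target="order-service",
        failure="terminate primary database instance",
        max_error_rate=0.05,
    )
    result = evaluate(plan, baseline=(1000, 2), during=(940, 60))
    print(result)
    print("CI exit code:", exit_code(result))
```

Writing the threshold into the plan before the run keeps the pass/fail decision from being adjusted after the results are in, and the returned dict doubles as a record for the documentation step.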

Closing Notes

In conclusion, mastering Chaos Monkey is more than just injecting failures; it’s about cultivating a culture of resilience and proactive problem-solving. By embracing this approach, you can transform your systems into robust, self-healing entities capable of withstanding the unexpected. Remember, the journey to resilience is ongoing, and Chaos Monkey provides a powerful mechanism for continuous improvement, ensuring your systems remain stable and reliable even in the face of adversity.

Embrace the chaos, and build a more resilient future for your applications.

User Queries

What is the primary goal of Chaos Monkey?