Website migrations, while necessary for growth and modernization, often introduce performance challenges. The shift to a new hosting environment, codebase, or infrastructure can expose hidden bottlenecks, leading to slower page load times, increased server resource consumption, and a degraded user experience. Addressing these issues proactively is crucial to maintain user satisfaction, search engine rankings, and ultimately, business success. This guide provides a structured approach to diagnosing and resolving performance problems encountered after a website migration, ensuring a smooth transition and optimal performance.

The following sections detail a methodical process, from pre-migration planning and assessment to post-migration optimization strategies. This includes pre-emptive measures such as performance audits and KPI monitoring, as well as reactive approaches like database optimization, code profiling, and server configuration tuning. Furthermore, the guide delves into network and infrastructure considerations, load balancing, caching strategies, and the importance of robust monitoring and alerting systems.

By following these guidelines, administrators and developers can effectively identify, analyze, and rectify performance issues, ensuring a seamless and efficient user experience post-migration.

Pre-Migration Planning & Assessment

A comprehensive pre-migration performance assessment is crucial for a successful migration. This phase allows for the identification of performance bottlenecks in the existing environment and provides a baseline against which to measure performance after the migration. Without a thorough assessment, performance issues post-migration can be difficult to diagnose and remediate, leading to downtime, user dissatisfaction, and increased costs. This proactive approach minimizes risks and ensures a smooth transition to the new environment.

Importance of a Pre-Migration Performance Audit

A pre-migration performance audit serves as the cornerstone of a well-executed migration strategy. It systematically evaluates the existing system’s performance characteristics under real-world conditions. This understanding forms the foundation for informed decision-making throughout the migration process. The audit identifies areas of potential risk and provides the data necessary to optimize the target environment. A detailed audit also allows for the establishment of Service Level Agreements (SLAs) that can be measured and compared post-migration.

The audit should encompass several key areas:

- Application Performance: Evaluate the response times, throughput, and resource utilization of critical applications. This involves analyzing database queries, application code, and external service dependencies.

- Infrastructure Performance: Assess the performance of the underlying infrastructure, including servers, storage, and network components. This involves monitoring CPU usage, memory consumption, disk I/O, and network latency.

- Database Performance: Analyze database query performance, indexing strategies, and data storage efficiency. Database performance is often a critical factor in overall application performance.

- User Experience: Measure user-perceived performance metrics, such as page load times and transaction completion times. This provides insights into the overall user experience.

Key Performance Indicators (KPIs) to Monitor Before Migration

Establishing a robust set of KPIs is essential for tracking performance before and after the migration. These KPIs should be selected to reflect the critical performance aspects of the system. The pre-migration monitoring provides a baseline for comparison after the migration. This baseline facilitates the identification of performance regressions.

Here is a checklist of key performance indicators to monitor:

- Response Time: Measure the time it takes for the system to respond to user requests. A longer response time often indicates performance issues. It is typically measured in milliseconds or seconds.

- Throughput: Measure the amount of work the system can handle over a specific period. This is often expressed in transactions per second (TPS) or requests per second (RPS).

- Error Rate: Monitor the percentage of requests that result in errors. A high error rate can indicate application or infrastructure problems.

- CPU Utilization: Measure the percentage of CPU resources being used. High CPU utilization can indicate a CPU bottleneck.

- Memory Utilization: Measure the percentage of memory being used. Insufficient memory can lead to performance degradation.

- Disk I/O: Monitor the rate at which data is read from and written to disk. High disk I/O can indicate a storage bottleneck.

- Network Latency: Measure the delay in data transfer over the network. High latency can impact application performance.

- Database Query Performance: Monitor the execution time of critical database queries. Slow queries can significantly impact application performance.
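As a rough illustration of how the first three KPIs in this checklist might be derived from raw request data, the sketch below summarizes one measurement window. The `RequestSample` record shape and the function names are assumptions made for this example, and the input is assumed to be non-empty; a real monitoring stack would normally compute these figures for you.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestSample:
    """One observed request (hypothetical record shape for this sketch)."""
    duration_ms: float
    ok: bool


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples, window_seconds):
    """Compute response-time, throughput, and error-rate KPIs for one window."""
    durations = [s.duration_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "p50_ms": percentile(durations, 50),
        "p95_ms": percentile(durations, 95),
        "throughput_rps": len(samples) / window_seconds,
        "error_rate_pct": 100 * errors / len(samples),
    }
```

Percentiles are used here rather than averages because a small number of very slow requests can hide behind a healthy-looking mean.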

Strategies for Simulating Production Load During the Pre-Migration Phase

Simulating production load during the pre-migration phase provides a realistic assessment of system performance under stress. This allows for the identification of performance bottlenecks that might not be apparent under normal operating conditions. Load testing should mimic user behavior to provide meaningful insights.

Several strategies can be employed:

- Load Testing Tools: Utilize load testing tools, such as JMeter, LoadRunner, or Gatling, to simulate a large number of concurrent users accessing the system. These tools can generate realistic traffic patterns and measure performance metrics.

- Production Data Subsets: Use a subset of production data for testing. This ensures the tests are representative of the actual data volume and complexity. Data masking techniques can be used to protect sensitive information.

- User Behavior Modeling: Model user behavior to simulate realistic traffic patterns. This involves analyzing user access logs and identifying common user workflows. The load testing tools can then be configured to mimic these workflows.

- Ramp-Up and Ramp-Down: Implement ramp-up and ramp-down periods during load testing to simulate the gradual increase and decrease in user traffic. This helps identify performance bottlenecks that might occur under peak loads.

- Monitoring and Analysis: Continuously monitor key performance indicators (KPIs) during load testing. Analyze the results to identify performance bottlenecks and areas for optimization. Tools like Prometheus and Grafana can be used for monitoring and visualization.
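To make the ramp-up and ramp-down idea above more concrete, here is a minimal sketch of a staged load run using only the Python standard library. The `send_request` callable is a placeholder supplied by the caller (for example, a function wrapping one HTTP call), and the stage sizes are arbitrary; it is not a substitute for a dedicated tool such as JMeter or Gatling.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_stage(send_request, concurrency, requests_per_worker):
    """Run one load stage and return a list of (duration_seconds, ok) tuples."""
    def worker():
        results = []
        for _ in range(requests_per_worker):
            start = time.perf_counter()
            try:
                send_request()
                ok = True
            except Exception:
                ok = False  # any exception counts as a failed request
            results.append((time.perf_counter() - start, ok))
        return results

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(worker) for _ in range(concurrency)]
        return [r for f in futures for r in f.result()]


def ramp(send_request, stages, requests_per_worker=5):
    """Step through concurrency levels, e.g. [1, 5, 10, 5, 1] for ramp-up and ramp-down."""
    report = []
    for concurrency in stages:
        results = run_stage(send_request, concurrency, requests_per_worker)
        report.append({
            "concurrency": concurrency,
            "requests": len(results),
            "errors": sum(1 for _, ok in results if not ok),
            "max_seconds": max(d for d, _ in results),
        })
    return report
```

Comparing the per-stage figures shows at which concurrency level latency or errors start to climb, which is usually more informative than a single peak-load number.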

Consider the example of a large e-commerce website preparing for a database migration. Before the migration, the team uses JMeter to simulate thousands of concurrent users accessing product pages, adding items to the cart, and completing transactions. They monitor response times, throughput, and error rates. The tests reveal a bottleneck in the database due to inefficient query performance. The team optimizes the queries and indexing strategies before the migration.

After the migration, they repeat the tests, comparing the results to the pre-migration baseline. This process helps ensure the new database environment can handle the expected load.
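The before-and-after comparison described in this example could be automated along the following lines. This is only a sketch: the metric names, the 10% tolerance, and the assumption that every metric is "lower is better" are illustrative choices, not values taken from any particular tool.

```python
def find_regressions(baseline, current, tolerance_pct=10.0):
    """Return metrics whose value worsened by more than tolerance_pct.

    Assumes every metric is "lower is better" (e.g. latency, error rate);
    invert throughput-style metrics before passing them in.
    """
    regressions = {}
    for name, before in baseline.items():
        after = current.get(name)
        if after is None or before == 0:
            continue  # no comparable value for this metric
        change_pct = 100 * (after - before) / before
        if change_pct > tolerance_pct:
            regressions[name] = round(change_pct, 1)
    return regressions
```

For example, a baseline p95 of 800 ms and a post-migration p95 of 1000 ms would be reported as a 25% regression, while a 5% drift in error rate would stay below the tolerance.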

Identifying Post-Migration Performance Bottlenecks

Performance degradation after a migration can stem from various factors, making it crucial to identify and address these bottlenecks promptly. This section outlines common performance issues, provides methods for root cause analysis, and details a procedure for effective resource utilization monitoring post-migration.

Common Post-Migration Performance Issues

Several performance issues frequently surface after a migration, often due to differences between the source and target environments or changes in application behavior. Understanding these issues is the first step toward effective troubleshooting.

- Slow Database Queries: Database performance often suffers due to inefficient query plans, inadequate indexing, or resource contention. This is particularly common if the target database system is different from the source or if the migration process did not fully account for schema differences. For example, a query that performed well on an older version of a database might exhibit significant slowdowns on a newer version if its execution plan changes.

- Network Latency: Increased network latency can impact application responsiveness, especially if the target environment is geographically distant from the users or if network configurations are not optimized. Migrations involving cloud environments often introduce latency due to the physical distance between data centers and users. This latency can be exacerbated by poorly configured network devices or inefficient routing.

- Insufficient Server Resources: The target environment might lack sufficient CPU, memory, or disk I/O to handle the application’s workload. This can be due to incorrect sizing during the migration planning phase or an increase in user traffic after the migration. For instance, a web server that functioned adequately in the source environment might become overloaded in the target environment if the target server has fewer resources or if the application’s resource consumption increases.

- Application Code Inefficiencies: The migration process itself, or subsequent code changes, may introduce inefficiencies in the application code. These inefficiencies could include poorly written SQL queries, memory leaks, or inefficient algorithms. For example, a change in the application code to accommodate new features post-migration could inadvertently introduce performance bottlenecks.

- Storage I/O Bottlenecks: Slow disk I/O can significantly impact database performance and overall application responsiveness. This is particularly relevant if the target storage system is slower than the source or if the migration process did not properly address storage performance requirements. Consider a scenario where the target environment utilizes a slower storage tier compared to the source environment, which can directly translate into performance degradation.

- Configuration Issues: Incorrect configuration of the application, database, or operating system can lead to performance problems. This might include incorrect connection pool settings, inadequate caching configurations, or improper network settings. For example, a database server with an inappropriately configured connection pool can experience significant performance degradation due to excessive overhead in establishing and tearing down connections.

Methods for Identifying the Root Cause of Performance Problems

Pinpointing the root cause of performance problems requires a systematic approach, combining profiling, log analysis, and monitoring. Employing these techniques allows for targeted troubleshooting.

- Profiling Tools: Profiling tools provide detailed insights into the application’s execution, identifying the functions or code sections that consume the most resources (CPU, memory, I/O). These tools often offer call graphs and execution time breakdowns. Examples include:

    - Application Profilers: Tools like Java profilers (e.g., JProfiler, YourKit) or .NET profilers (e.g., ANTS Performance Profiler) analyze the application’s code to identify bottlenecks in method calls, object creation, and memory usage.

    - Database Profilers: Database-specific profilers (e.g., SQL Server Profiler, MySQL Performance Schema) track the execution of SQL queries, providing information about query execution plans, CPU usage, and I/O operations.

- Log Analysis: Analyzing application, database, and system logs is crucial for identifying errors, performance warnings, and resource consumption patterns. Tools for log analysis include:

    - Log Aggregation Tools: Centralized log management systems (e.g., ELK Stack, Splunk) collect and analyze logs from multiple sources, enabling efficient search, filtering, and correlation of events.

    - Log Parsing and Analysis: Scripting tools (e.g., grep, awk, sed) and specialized log analysis tools can parse log files to identify patterns, anomalies, and performance metrics.

- Resource Monitoring: Monitoring resource utilization (CPU, memory, disk I/O, network) helps identify resource bottlenecks and track performance trends over time.

    - System Monitoring Tools: Tools like top, vmstat, iostat (Linux/Unix) and Task Manager, Performance Monitor (Windows) provide real-time and historical data on resource usage.

    - Application Performance Monitoring (APM) Tools: APM tools (e.g., Dynatrace, New Relic, AppDynamics) provide comprehensive monitoring of application performance, including transaction tracing, error tracking, and resource utilization.

- Database Performance Analysis: Database performance analysis involves examining query execution plans, monitoring database server metrics, and analyzing slow queries.

    - Query Optimization: Tools and techniques for optimizing SQL queries, such as analyzing query execution plans, adding indexes, and rewriting inefficient queries.

    - Database Server Metrics: Monitoring database server metrics (e.g., CPU usage, memory usage, disk I/O, connection pool utilization) to identify bottlenecks and performance issues.
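As a small illustration of the log parsing approach listed above, the following sketch counts slow requests per path. The log line shape (`<timestamp> <METHOD> <path> <status> <duration>ms`) is an assumption invented for this example; real access logs differ by server and configuration, so the pattern would need adjusting.

```python
import re
from collections import defaultdict

# Assumed line shape: "<timestamp> <METHOD> <path> <status> <duration>ms"
LINE_RE = re.compile(
    r"^\S+ (?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3}) (?P<ms>\d+)ms$"
)


def slow_endpoints(lines, threshold_ms=500):
    """Count requests per path that took longer than threshold_ms."""
    counts = defaultdict(int)
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match and int(match["ms"]) > threshold_ms:
            counts[match["path"]] += 1
    # Paths with the most slow requests come first; unparseable lines are skipped.
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)
```

Grouping by path is a deliberate choice: it points directly at the endpoints worth profiling next.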

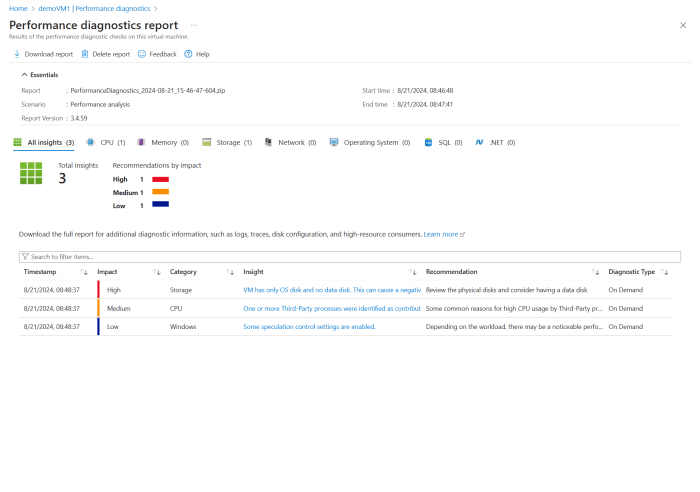

Procedure for Using Monitoring Tools to Track Resource Utilization After Migration

Implementing a robust monitoring strategy is crucial for proactively identifying and addressing performance issues after a migration. This involves selecting appropriate monitoring tools, establishing baselines, and setting up alerts.

- Select Monitoring Tools: Choose monitoring tools based on the application’s architecture, the target environment, and the specific performance metrics to be tracked. Consider tools that provide real-time monitoring, historical data analysis, and alerting capabilities. For example, a cloud-based application might benefit from using the monitoring tools provided by the cloud provider (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring).

- Establish Baselines: Before the migration, establish performance baselines in the source environment. Collect data on key performance indicators (KPIs) such as response times, transaction throughput, CPU utilization, memory usage, and disk I/O. After the migration, compare the target environment’s performance against these baselines to identify performance degradations. For instance, if the average response time of a web application was 1 second before the migration, a significant increase in response time after the migration would indicate a potential performance issue.

- Define Key Performance Indicators (KPIs): Define a set of KPIs that are critical for monitoring application performance. These KPIs should align with business goals and user experience requirements. Examples of KPIs include:

    - Response Time: The time it takes for the application to respond to user requests.

    - Transaction Throughput: The number of transactions processed per unit of time.

    - Error Rate: The percentage of requests that result in errors.

    - CPU Utilization: The percentage of CPU resources used by the application.

    - Memory Usage: The amount of memory used by the application.

    - Disk I/O: The rate of disk input/output operations.

- Configure Monitoring Agents: Install and configure monitoring agents on the servers and application components in the target environment. These agents collect performance data and send it to the monitoring system. Ensure that the agents are configured to collect the relevant KPIs and that they have sufficient access permissions to collect the required data.

- Set Up Alerts: Configure alerts to notify administrators when performance metrics exceed predefined thresholds. These alerts should be based on the KPIs defined earlier. For example, an alert could be triggered if the CPU utilization of a server exceeds 90% for more than 5 minutes, or if the error rate of a web application exceeds 5%.

- Monitor Resource Utilization: Continuously monitor resource utilization in the target environment using the selected monitoring tools. Regularly review the performance data, identify any anomalies or trends, and investigate any alerts that are triggered. For example, if the monitoring system reports a sudden increase in database query execution times, investigate the queries and optimize them.

- Analyze Performance Data: Analyze performance data to identify the root causes of performance problems. Correlate performance metrics with application logs, database logs, and system logs to gain a comprehensive understanding of the issues. Use profiling tools to identify code bottlenecks and optimize the application. For instance, correlate high CPU utilization with specific application functions or database queries.

- Optimize Performance: Based on the analysis of performance data, implement performance optimization strategies. This may involve optimizing database queries, adding indexes, increasing server resources, optimizing application code, or adjusting network configurations. Continuously monitor the performance of the target environment after making changes to ensure that the optimizations are effective. For example, if database query optimization improves response times, verify the improvement by comparing the performance data before and after the optimization.

- Document Findings and Actions: Document all findings, analyses, and actions taken to address performance problems. This documentation should include the performance metrics, the root causes of the problems, the optimization strategies implemented, and the results of the optimizations. This documentation will be invaluable for future troubleshooting and performance tuning efforts.
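The alerting step above gives the example of CPU utilization exceeding 90% for more than 5 minutes. A sustained-threshold check of that kind might look like the sketch below; the sample format (chronological `(timestamp_seconds, value)` tuples) is an assumption for illustration, and production monitoring systems typically provide this logic as built-in alert rules.

```python
def sustained_breach(samples, threshold, min_duration_s):
    """Return True if values stay above threshold for at least min_duration_s.

    samples: list of (timestamp_seconds, value) tuples in chronological order.
    """
    breach_start = None
    for timestamp, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = timestamp  # first sample of a new streak
            if timestamp - breach_start >= min_duration_s:
                return True
        else:
            breach_start = None  # streak broken, start over
    return False
```

Requiring a sustained breach rather than a single high reading helps avoid alerts on short, harmless spikes.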

Database Performance Optimization

Optimizing database performance post-migration is crucial for ensuring application responsiveness and data integrity. Migrations can expose performance bottlenecks previously masked by the old infrastructure or highlight inefficiencies in existing database design. This section delves into techniques for enhancing database performance, addressing common issues, and providing actionable solutions.

Index Tuning

Indexes significantly improve query performance by enabling rapid data retrieval. However, poorly designed or missing indexes can lead to slow queries, while excessive indexing can degrade write performance.

The following points outline key aspects of index tuning:

- Index Selection: Choosing the right columns to index is paramount. Consider the following guidelines:

    - Index columns frequently used in `WHERE` clauses, `JOIN` conditions, and `ORDER BY` and `GROUP BY` clauses.

    - Avoid indexing columns with low cardinality (few unique values), as this offers little benefit.

    - Favor composite indexes (indexes on multiple columns) for queries that filter on multiple columns or use `JOIN` conditions involving multiple columns. The order of columns in a composite index matters: most engines can use the index only when the query constrains its leading column(s), so as a rule of thumb place columns used in equality filters first, with the most selective among them leading.

- Index Maintenance: Regularly update index statistics to ensure the query optimizer has accurate information about data distribution. Outdated statistics can lead to suboptimal query plans.

- Index Fragmentation: In some database systems (e.g., SQL Server), indexes can become fragmented over time, reducing performance. Regular index defragmentation or rebuilding is necessary. The level of fragmentation can be assessed using system views or stored procedures provided by the database system.

- Index Monitoring: Monitor index usage to identify unused indexes that can be removed to reduce overhead. Most database systems provide tools to track index usage.
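The sketch below illustrates two of the points above, a composite index on the filter columns and a statistics refresh, using Python's built-in `sqlite3` module so that it runs without a database server. The `orders` table and its data are hypothetical, and the commands differ in other engines (for example, statistics maintenance is not always called `ANALYZE`).

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return the detail strings from SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, total REAL)"
)
statuses = ("new", "shipped", "returned")
conn.executemany(
    "INSERT INTO orders (customer_id, status, total) VALUES (?, ?, ?)",
    [(i % 50, statuses[i % 3], float(i)) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ? AND status = ?"
before = plan(conn, QUERY, (7, "shipped"))

# Composite index on both filter columns, then refresh the optimizer statistics.
conn.execute("CREATE INDEX idx_orders_customer_status ON orders (customer_id, status)")
conn.execute("ANALYZE")
after = plan(conn, QUERY, (7, "shipped"))

# ANALYZE records per-index statistics in the sqlite_stat1 table.
stats = conn.execute("SELECT idx, stat FROM sqlite_stat1 WHERE tbl = 'orders'").fetchall()
```

Printing `before` and `after` shows the plan changing from a table scan to an index search; the exact wording of the plan text varies between SQLite versions.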

Query Optimization

Query optimization involves rewriting queries to execute more efficiently. This often entails analyzing query execution plans, rewriting complex queries, and utilizing database-specific features.

The following are key considerations in query optimization:

- Query Rewriting:

    - Simplify complex queries by breaking them down into smaller, more manageable queries.

    - Avoid the use of `SELECT *` whenever possible; explicitly list the columns needed.

    - Rewrite subqueries, especially correlated subqueries, as joins whenever feasible. Joins are often more efficient.

    - Use `EXISTS` instead of `COUNT(*)` when checking for the existence of rows.

- Query Execution Plans: Understanding and interpreting query execution plans is essential. Most database systems provide tools to visualize the execution plan, showing the steps the database engine takes to execute a query. Analyze the plan to identify bottlenecks, such as full table scans, inefficient join strategies, or missing indexes.

- Database-Specific Features: Leverage database-specific features, such as:

    - Partitioning: Divide large tables into smaller, more manageable partitions.

    - Materialized Views: Precompute and store the results of complex queries.

    - Hints: Use query hints (with caution) to guide the query optimizer.
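To show the query rewriting guidelines above side by side, the following sketch runs three equivalent queries against a tiny in-memory SQLite database. The `customers` and `orders` tables are hypothetical; whether the rewritten forms are actually faster depends on the engine and data, so the point here is only that they return the same rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers (id, name) VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders (customer_id, total) VALUES (1, 20.0), (1, 35.5), (3, 12.0);
""")

# Counting approach: aggregates every matching row just to test for presence.
count_style = """
    SELECT c.id, c.name FROM customers AS c
    WHERE (SELECT COUNT(*) FROM orders AS o WHERE o.customer_id = c.id) > 0
    ORDER BY c.id
"""

# EXISTS approach: the engine may stop at the first matching row.
exists_style = """
    SELECT c.id, c.name FROM customers AS c
    WHERE EXISTS (SELECT 1 FROM orders AS o WHERE o.customer_id = c.id)
    ORDER BY c.id
"""

# Join approach: the correlated subquery rewritten as a join, with explicit columns.
join_style = """
    SELECT DISTINCT c.id, c.name FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id
"""

results = [conn.execute(sql).fetchall() for sql in (count_style, exists_style, join_style)]
```

When rewriting a query in a real system, checking that the old and new forms return identical results on representative data, as done here, is a cheap safeguard before comparing their execution plans.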

Common Database Performance Issues and Solutions

Several common database performance issues can arise post-migration. Addressing these requires careful analysis and the application of appropriate solutions. The following table provides examples of common issues and their solutions:

| Issue | Cause | Solution |
|---|---|---|
| Slow Queries | Missing or inefficient indexes, poorly written queries, outdated statistics, or excessive locking. | Create or optimize indexes, rewrite queries, update statistics, and review locking strategies. Analyze query execution plans to pinpoint bottlenecks. |
| High CPU Utilization | Inefficient queries, full table scans, or poorly designed indexes. | Optimize queries, add appropriate indexes, and review the overall database design. Consider upgrading hardware resources if the problem persists. |
| High I/O Activity | Inefficient queries, missing indexes, or large table scans. | Optimize queries, add appropriate indexes, and ensure sufficient disk I/O capacity. Consider optimizing the data layout or using solid-state drives (SSDs). |
| Database Locking Contention | Transactions holding locks for extended periods, or poorly designed concurrency control. | Optimize transactions to minimize lock hold times, review transaction isolation levels, and identify and address any long-running queries that might be blocking other processes. |
| Slow Data Loading | Inefficient bulk loading processes, missing indexes, or inadequate resources. | Optimize bulk loading scripts, disable indexes during loading (re-enable them after), and ensure sufficient resources are allocated. |

Analyzing Slow Database Queries Using Query Execution Plans

Query execution plans provide a detailed view of how a database engine executes a query. Analyzing these plans is crucial for identifying performance bottlenecks and optimizing query performance.

The following steps are generally involved in analyzing query execution plans:

- Generate the Execution Plan: Use the database system’s tools to generate the execution plan for a slow query. Most database systems provide commands or graphical interfaces for this purpose (e.g., `EXPLAIN` in MySQL, `EXPLAIN` or `EXPLAIN ANALYZE` in PostgreSQL, or the graphical execution plan in SQL Server Management Studio).

- Understand the Plan Structure: Execution plans are typically represented as a tree structure, where each node represents an operation performed by the database engine. Common operations include table scans, index seeks, joins, sorts, and aggregations.

- Identify Bottlenecks: Look for the following indicators of performance problems:

    - Full Table Scans: These indicate that the database engine is scanning the entire table, which can be slow, especially for large tables. Consider adding indexes to columns used in the `WHERE` clause.

    - Inefficient Join Strategies: Examine the join strategies used (e.g., nested loops, hash joins, merge joins). Poor join strategies can significantly impact performance. Analyze the join conditions and the cardinality of the tables involved.

    - High Costs: Each operation in the execution plan has a cost associated with it, representing the estimated resources (CPU, I/O) required to execute that operation. Identify operations with high costs.

    - Missing Indexes: The execution plan may indicate missing indexes by suggesting index creation or showing operations that could benefit from indexes.

- Interpret Plan Details: Examine the details of each operation, such as the estimated number of rows processed, the actual number of rows processed, and the estimated cost. Compare the estimated and actual values to identify discrepancies.

- Optimize the Query: Based on the analysis, rewrite the query, add indexes, or adjust database settings to improve performance. After making changes, regenerate and re-analyze the execution plan to verify the improvements.

For example, if a query execution plan shows a full table scan on a large table, an index may be missing on a column used in the `WHERE` clause; creating one will often improve query performance significantly. Another example involves a slow join operation. The execution plan may show a nested loops join over two large inputs, which is often inefficient at that scale. Adding indexes to the join columns, updating statistics so the optimizer can choose a hash join or merge join, or (with caution) applying a join hint can improve performance.
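The generate, inspect, fix, and re-check loop described above can be sketched with SQLite, whose `EXPLAIN QUERY PLAN` output labels full scans with the word `SCAN`. The `events` table and the `full_scans` helper are made up for this example; other engines report plans in different formats, so the string check would not carry over unchanged.

```python
import sqlite3


def full_scans(conn, sql, params=()):
    """Return the plan steps that scan a whole table for the given query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows if row[-1].startswith("SCAN")]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, payload TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, payload) VALUES (?, ?)",
    [(i % 100, f"event-{i}") for i in range(2000)],
)

query = "SELECT payload FROM events WHERE user_id = ?"

# Step 1: generate the plan and look for full table scans.
scans_before = full_scans(conn, query, (42,))

# Step 2: add an index on the filtered column, then regenerate the plan to verify.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
scans_after = full_scans(conn, query, (42,))
```

Here `scans_before` contains one scan step and `scans_after` is empty, which is the kind of before-and-after evidence worth recording for each optimization.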

Application Code Optimization

Optimizing application code is crucial after a migration, as the new environment may expose performance bottlenecks that were previously masked or non-existent. This involves identifying and rectifying inefficiencies within the application’s codebase to ensure optimal performance, resource utilization, and scalability in the post-migration state. Careful code analysis and targeted optimization strategies are vital to achieve the desired performance gains and maintain a positive user experience.

Strategies for Code Optimization

Several strategies can be employed to optimize application code after migration. These techniques focus on identifying and addressing performance bottlenecks, improving code efficiency, and ensuring the application runs smoothly in the new environment.

- Code Profiling: Code profiling involves analyzing the application’s runtime behavior to identify performance bottlenecks, such as slow-running functions, inefficient database queries, and excessive memory allocation. Profiling tools, like those available in languages such as Python (e.g., `cProfile`, `line_profiler`) or Java (e.g., Java VisualVM, JProfiler), provide detailed insights into where the application spends its time. The process involves running the application with the profiler enabled, collecting performance data, and then analyzing the data to pinpoint areas for optimization.

For instance, a profiler might reveal that a specific function is called frequently and consumes a significant amount of CPU time. Addressing this function through algorithmic improvements or caching could lead to substantial performance gains.
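A minimal profiling session with the standard-library `cProfile` and `pstats` modules mentioned above might look like this. The `handle_request` and `slow_sum_of_squares` functions are stand-ins invented for the example; in practice the profiled callable would be a real entry point of the application.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately wasteful helper used as the profiling target."""
    return sum([i * i for i in range(n)])


def handle_request():
    """Hypothetical request handler that calls the helper repeatedly."""
    return [slow_sum_of_squares(2000) for _ in range(50)]


def profile_report(func, top=10):
    """Run func under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()
```

Calling `print(profile_report(handle_request))` lists the functions with the highest cumulative time, and the frequently called helper shows up near the top with its call count.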

- Caching: Implementing caching mechanisms can significantly improve performance by reducing the need to repeatedly compute or retrieve data. Caching involves storing frequently accessed data in a faster storage medium, such as memory (e.g., using libraries like Redis or Memcached) or the browser’s cache. Different caching strategies can be used, including:

    - Output Caching: Caching the entire output of a function or page.

    - Data Caching: Caching frequently accessed data from databases or external sources.

    - Object Caching: Caching objects that are expensive to create.

Consider an e-commerce application. Caching product catalogs and user session data in memory can drastically reduce database load and improve page load times. The choice of caching strategy and the duration for which data is cached depend on the specific application requirements and the nature of the data.
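The data caching idea, including the point about how long data stays cached, can be sketched with a small in-process cache that expires entries after a fixed time. This `TTLCache` class is a simplified stand-in written for this example, not the API of Redis or Memcached; the `loader` callable represents whatever slow lookup (such as a database query) is being avoided.

```python
import time


class TTLCache:
    """Tiny in-process cache with per-entry expiry (stand-in for Redis or Memcached)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}

    def get_or_load(self, key, loader):
        """Return the cached value for key, calling loader(key) on a miss or expiry."""
        entry = self._entries.get(key)
        now = self.clock()
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader(key)
        self._entries[key] = (now + self.ttl_seconds, value)
        return value
```

A short expiry suits data that changes often, such as stock levels, while a longer one suits mostly static data, such as product descriptions.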

- Algorithm Optimization: Optimizing the algorithms used in the application can significantly improve performance. This involves selecting the most efficient algorithms for specific tasks and avoiding inefficient operations. For example, replacing a nested loop with a more efficient data structure like a hash map can drastically reduce the time complexity of a search operation. The Big O notation is useful for evaluating the efficiency of algorithms.

Big O Notation: A mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. It is commonly used to classify algorithms according to how their runtime or space requirements grow as the input size grows.

Algorithms with lower time complexity are generally preferred.
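As a small worked example of the nested-loop replacement mentioned above, the two functions below find the items of one list that also appear in another. Both function names are invented for this sketch; the second trades a little extra memory for a much lower time complexity.

```python
def common_ids_nested(left, right):
    """O(len(left) * len(right)): scans right once for every item in left."""
    return [item for item in left if any(item == other for other in right)]


def common_ids_hashed(left, right):
    """O(len(left) + len(right)) on average: one pass to build the set, one to probe it."""
    right_set = set(right)
    return [item for item in left if item in right_set]
```

With 10,000 items on each side, the nested version performs up to 100 million comparisons, while the hashed version performs roughly 20,000 set operations.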

- Code Refactoring: Refactoring involves restructuring existing code without changing its external behavior to improve its readability, maintainability, and performance. This includes techniques like:

    - Removing Code Duplication: Identifying and eliminating redundant code blocks.

    - Improving Code Readability: Using meaningful variable names, adding comments, and structuring the code logically.

    - Simplifying Complex Logic: Breaking down complex functions into smaller, more manageable units.

Refactoring can reveal hidden performance bottlenecks and pave the way for further optimization.

- Asynchronous Operations: Implementing asynchronous operations can improve responsiveness and throughput, particularly in applications that perform I/O-bound tasks such as database queries or network requests. Asynchronous operations allow the application to continue processing other tasks while waiting for these operations to complete. This can be achieved through techniques like:

    - Multithreading: Using multiple threads to execute tasks concurrently.

    - Asynchronous Programming: Utilizing asynchronous programming models, such as `async/await` in languages like C# or Python, to handle I/O operations without blocking the main thread.

For instance, when an application needs to fetch data from an external API, it can initiate the request asynchronously and continue processing other tasks while waiting for the API response.
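The API example above can be sketched with Python's `asyncio`. Here `asyncio.sleep` stands in for the network wait, and `fetch`, `fetch_all`, and `timed_run` are names made up for this illustration; a real application would use an asynchronous HTTP or database client in place of the sleep.

```python
import asyncio
import time


async def fetch(name, delay_s):
    """Stand-in for an I/O-bound call such as an HTTP request."""
    await asyncio.sleep(delay_s)
    return f"{name}: done"


async def fetch_all(jobs):
    """Start every job at once and wait for all of them to finish."""
    return await asyncio.gather(*(fetch(name, delay) for name, delay in jobs))


def timed_run(jobs):
    """Run fetch_all and return (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all(jobs))
    return results, time.perf_counter() - start
```

Three jobs that each wait 0.2 seconds complete in roughly 0.2 seconds in total rather than 0.6, because the waits overlap instead of running back to back.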

Common Code Performance Pitfalls and Avoidance

Identifying and avoiding common code performance pitfalls is essential for building efficient and maintainable applications. Addressing these pitfalls early in the development process can prevent performance degradation and reduce the need for extensive optimization efforts later on.

- Inefficient Database Queries: Inefficient database queries can significantly impact application performance. These can arise from:

    - Lack of Indexing: Without proper indexing, database queries may require a full table scan, which is slow.

    - Unnecessary Data Retrieval: Retrieving more data than needed can increase network traffic and processing time.

    - Poorly Written SQL: Inefficient SQL queries can lead to slow execution times.

    To avoid these pitfalls:

    - Index frequently queried columns: Create indexes on columns used in `WHERE` clauses and `JOIN` conditions.

    - Select only the necessary columns: Use `SELECT` statements that specify only the required columns.

    - Optimize SQL queries: Use query optimization tools and techniques to identify and improve slow queries.

- Memory Leaks: Memory leaks occur when the application fails to release allocated memory, leading to increased memory consumption and potential crashes. Memory leaks can be caused by:

    - Unreleased Objects: Objects that are no longer needed but are still referenced.

    - Circular References: Objects that reference each other, which can prevent reclamation in runtimes that rely on reference counting. Tracing garbage collectors, such as those in Java and C#, reclaim unreachable cycles.

    To avoid memory leaks:

    - Use garbage collection: Leverage garbage collection mechanisms in languages like Java and C#.

    - Release resources properly: Ensure that resources such as files and database connections are closed when no longer needed.

    - Use memory profiling tools: Utilize memory profiling tools to identify and diagnose memory leaks.

- Inefficient Data Structures: Choosing the wrong data structures can lead to performance bottlenecks. For example, using a list to store data that requires frequent lookups can be inefficient compared to using a hash map. To avoid this:

- Choose the appropriate data structure: Select the data structure that best suits the application’s requirements, considering factors such as the frequency of read and write operations, the size of the data, and the need for ordering.

- Understand the time complexity of different data structures: Consider the time complexity of operations like search, insertion, and deletion when selecting a data structure.

- Excessive Object Creation: Creating numerous objects can consume significant resources, especially in memory-intensive applications. This can lead to performance degradation. To avoid this:

- Reuse objects: Reuse existing objects instead of creating new ones whenever possible.

- Use object pooling: Implement object pooling to reduce the overhead of object creation and destruction.

- Minimize object creation in loops: Avoid creating objects within loops, as this can lead to a significant number of object creations.

- Lack of Caching: Not implementing caching can lead to repeated computations or data retrieval from external sources, which can be time-consuming. To avoid this:

- Identify cacheable data: Determine which data is frequently accessed and relatively static.

- Implement caching mechanisms: Use caching libraries or frameworks to store frequently accessed data in a faster storage medium.

- Choose the appropriate caching strategy: Select the caching strategy that best suits the application’s requirements, such as output caching, data caching, or object caching.
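The indexing advice in the list above can be checked empirically. The sketch below uses Python's built-in `sqlite3` module and an in-memory table to show how the query plan changes once an index exists. The table, column, and index names are hypothetical, and other database engines expose their plans through different commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

# Select only the columns that are needed, and filter on customer_id.
QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def query_plan(connection: sqlite3.Connection) -> str:
    """Return SQLite's plan description for QUERY as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + QUERY, (42,)).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


plan_before = query_plan(conn)
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn)

print("before:", plan_before)
print("after: ", plan_after)
```

Before the index is created the plan reports a scan of the whole table; afterwards it reports a search that uses `idx_orders_customer`.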

Process for Implementing and Testing Code Optimizations

A structured process is essential for implementing and testing code optimizations effectively. This process ensures that optimizations are implemented correctly, do not introduce new issues, and provide the expected performance improvements.

- Identify Performance Bottlenecks: Begin by identifying the areas of the application that are experiencing performance issues. Use profiling tools, monitoring tools, and user feedback to pinpoint the specific code sections or functionalities that are causing delays or consuming excessive resources.

- Prioritize Optimization Efforts: Prioritize optimization efforts based on the severity of the performance issues and the potential impact of the optimizations. Focus on the areas that have the most significant impact on overall application performance.

- Implement Optimizations: Implement the chosen optimization strategies, such as code refactoring, algorithm optimization, caching, or asynchronous operations. Ensure that the changes are well-documented and follow coding best practices.

- Unit Testing: Perform unit tests to ensure that the optimized code functions correctly and does not introduce any regressions. Unit tests should cover all critical functionalities and edge cases.

- Performance Testing: Conduct performance testing to measure the impact of the optimizations on key performance indicators (KPIs), such as response time, throughput, and resource utilization. Performance testing can involve load testing, stress testing, and soak testing. For example, a load test might simulate a specific number of concurrent users accessing the application, while a stress test might push the application beyond its normal operating limits.

- Monitor and Evaluate: Continuously monitor the application’s performance after implementing the optimizations. Use monitoring tools to track KPIs and identify any unexpected issues or regressions. Evaluate the effectiveness of the optimizations and make adjustments as needed.

- Iterate and Refine: Code optimization is an iterative process. Based on the results of the performance testing and monitoring, iterate on the optimizations, refining the code and making further improvements.
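The implement, verify, and measure steps above can be sketched with the standard `timeit` module. The two functions are hypothetical examples of a "before" and "after" version, and the optimization shown (a set instead of a list for membership tests) is only one illustrative case.

```python
import timeit


def find_common_slow(a: list[int], b: list[int]) -> list[int]:
    """Original version: each list membership test is O(len(b))."""
    return [x for x in a if x in b]


def find_common_fast(a: list[int], b: list[int]) -> list[int]:
    """Optimized version: set membership tests are O(1) on average."""
    b_set = set(b)
    return [x for x in a if x in b_set]


a = list(range(0, 2000, 2))
b = list(range(0, 2000, 3))

# Step 1: correctness check; the optimized code must return the same result.
assert find_common_fast(a, b) == find_common_slow(a, b)

# Step 2: measure both versions under identical conditions and keep the
# best of several runs to reduce noise from other processes.
slow_time = min(timeit.repeat(lambda: find_common_slow(a, b), number=5, repeat=3))
fast_time = min(timeit.repeat(lambda: find_common_fast(a, b), number=5, repeat=3))
print(f"slow: {slow_time:.4f}s  fast: {fast_time:.4f}s  speedup: {slow_time / fast_time:.1f}x")
```

A micro-benchmark like this complements, but does not replace, load testing of the whole application.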

Server-Side Configuration Tuning

Server-side configuration is a critical aspect of post-migration performance optimization, directly influencing application responsiveness and resource utilization. After a migration, the server’s existing configuration might not be optimal for the new environment or the increased load. Fine-tuning these settings can unlock significant performance gains, ensuring a smooth user experience and efficient resource management.

Role of Server Configuration in Performance

Server configuration defines how the web server and application server handle incoming requests, manage resources, and interact with the underlying operating system. Incorrectly configured settings can lead to bottlenecks, slow response times, and even server instability. The performance impact stems from how efficiently the server processes requests, manages memory, utilizes CPU resources, and handles concurrent connections.

Server Configuration Parameters Impacting Performance

Various configuration parameters influence server performance, varying based on the specific server software used (e.g., Apache, Nginx, Tomcat, Node.js). Understanding and adjusting these parameters is essential for optimization.

- Web Server Parameters: These parameters govern how the web server handles incoming HTTP requests.

- Connection Limits: This limit, set by `MaxRequestWorkers` in Apache 2.4 (named `MaxClients` in earlier versions) or by the product of `worker_processes` and `worker_connections` in Nginx, determines the maximum number of concurrent connections the server can handle. Setting this value too low can lead to connection timeouts and refused connections during peak load. Conversely, setting it too high can exhaust server resources, leading to performance degradation.

For example, if a server returns frequent 503 Service Unavailable errors while CPU and memory still have headroom, increasing this value might be necessary.

- Keep-Alive Settings: Parameters like `KeepAliveTimeout` and `KeepAlive` (Apache) control persistent HTTP connections. Enabling keep-alive allows the client to reuse the same connection for multiple requests, reducing overhead. However, an excessively long timeout can tie up server resources, especially under heavy load.

- Caching Mechanisms: Web servers often employ caching to store frequently accessed content. Configuration parameters define the cache size, expiration times, and caching strategies. Improper caching can lead to stale content being served, while inadequate cache sizes can diminish performance benefits.

- Request Processing Limits: Parameters like `Timeout` (Apache) limit how long the server waits on network I/O for a request before giving up. Configuring these parameters appropriately helps prevent resource exhaustion caused by slow or malicious requests.

- Application Server Parameters: Application server settings manage the execution environment for the application code.

- Heap Size: The Java Virtual Machine (JVM) heap size (e.g., `-Xmx` and `-Xms` for Tomcat) dictates the amount of memory allocated to the application. Insufficient heap size can trigger frequent garbage collection cycles, leading to performance slowdowns. Conversely, allocating too much memory can starve other processes.

- Thread Pool Size: Application servers utilize thread pools to manage concurrent requests. Configuring the thread pool size (e.g., `maxThreads` for Tomcat) determines the maximum number of threads available to handle requests. An undersized thread pool can limit concurrency, while an oversized one can lead to resource contention.

- Connection Pool Settings: Connection pools manage database connections. Parameters such as the maximum pool size and connection timeout affect database access performance. Optimizing these settings prevents connection exhaustion and minimizes database connection overhead.

- Session Management: Parameters that configure how sessions are handled, like session timeout settings, influence performance. For instance, a session timeout set too low might lead to users needing to re-authenticate frequently, while one set too high keeps idle sessions in memory longer.
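One common starting point for sizing thread pools and connection pools, which the text above does not prescribe, is Little's law: the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The sketch below applies it with hypothetical workload figures and an assumed 25% headroom factor; the result is an initial estimate to validate under load, not a final setting.

```python
import math


def estimate_pool_size(requests_per_second: int, avg_latency_ms: int,
                       headroom: float = 1.25) -> int:
    """Little's law: average in-flight requests = arrival rate x time in system."""
    in_flight = requests_per_second * avg_latency_ms / 1000
    return math.ceil(in_flight * headroom)


# Hypothetical workload: 200 requests/s, each taking 150 ms on average.
threads = estimate_pool_size(200, 150)

# Hypothetical: each request holds a database connection for 40 ms.
db_connections = estimate_pool_size(200, 40)

print(f"thread pool estimate: {threads}")
print(f"connection pool estimate: {db_connections}")
```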

Procedure for Adjusting Server Settings to Improve Performance

A systematic approach is crucial when adjusting server settings to improve performance. This includes careful planning, monitoring, incremental changes, and a robust rollback strategy.

- Baseline Performance Measurement: Before making any changes, establish a baseline performance profile. This involves measuring key metrics like response times, throughput (requests per second), CPU utilization, memory usage, and database query times. Utilize monitoring tools such as `top`, `htop`, `iostat`, `vmstat`, or application performance monitoring (APM) solutions to gather this data.

- Identify Bottlenecks: Analyze the baseline data to pinpoint performance bottlenecks. Common areas include CPU overload, memory exhaustion, slow database queries, or inefficient application code. Tools like profilers, log analysis, and database query analyzers can help identify these bottlenecks.

- Parameter Adjustment: Based on the identified bottlenecks, adjust the relevant server configuration parameters. Start with small, incremental changes. For instance, if requests are queuing or being refused while CPU and memory still have headroom, you might consider raising `worker_connections` (Nginx), `MaxRequestWorkers` (Apache; `MaxClients` before 2.4), or the thread pool size in the application server. If the CPU is already saturated, raising these limits tends to add contention rather than throughput.

- Testing and Validation: After each change, thoroughly test the impact on performance. Repeat the same performance tests conducted during the baseline measurement to compare the results. Ensure that the changes have the desired effect and do not introduce any new performance issues. Consider using load testing tools like JMeter or LoadRunner to simulate realistic user traffic.

- Monitoring and Iteration: Continuously monitor server performance after each adjustment. If performance improves, continue making incremental changes until optimal performance is achieved. If performance degrades, revert to the previous settings and re-evaluate the approach.

- Rollback Strategy: Implement a robust rollback strategy to quickly revert to the previous configuration if a change negatively impacts performance or introduces instability. This involves:

- Configuration Backups: Before making any changes, create a backup of the existing server configuration files.

- Version Control: Use version control systems like Git to track changes to the configuration files, enabling easy rollback to previous versions.

- Automated Rollback: Consider automating the rollback process using scripts or configuration management tools like Ansible or Puppet.

- Documentation: Document all changes made to the server configuration, including the rationale behind each change and the performance impact observed. This documentation will be invaluable for future troubleshooting and optimization efforts.
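The backup and rollback steps above can be scripted. The following is a minimal sketch that runs entirely in a temporary directory; the file name, the settings inside it, and the `change_degraded_performance` flag are hypothetical placeholders for a real configuration file and a real test result.

```python
import shutil
import tempfile
import time
from pathlib import Path


def backup_config(config_path: Path, backup_dir: Path) -> Path:
    """Copy the config file into backup_dir with a timestamp suffix; return the copy's path."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%dT%H%M%S")
    backup_path = backup_dir / f"{config_path.name}.{stamp}.bak"
    shutil.copy2(config_path, backup_path)
    return backup_path


def rollback_config(backup_path: Path, config_path: Path) -> None:
    """Restore the config file from a backup created by backup_config."""
    shutil.copy2(backup_path, config_path)


with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "server.conf"
    config.write_text("worker_connections 1024;\n")

    saved = backup_config(config, Path(tmp) / "backups")
    config.write_text("worker_connections 4096;\n")  # the change under test

    # In practice this flag would come from re-running the baseline tests.
    change_degraded_performance = True
    if change_degraded_performance:
        rollback_config(saved, config)
    restored_text = config.read_text()

print(restored_text)
```

Keeping the configuration in version control, as suggested above, gives the same safety net with a full change history.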

Network and Infrastructure Considerations

Performance degradation after migration is often attributable to network and infrastructure bottlenecks. Understanding these limitations and implementing appropriate optimizations are crucial for ensuring a smooth transition and maintaining optimal application performance. This section delves into the critical aspects of network and infrastructure, providing insights into identifying and resolving performance issues that may arise post-migration.

Impact of Network Latency and Infrastructure Limitations

Network latency, the time it takes for a data packet to travel from source to destination, and infrastructure limitations, such as insufficient bandwidth or processing power, can significantly impact application performance. High latency can lead to slow response times, especially for applications that rely on frequent communication between the client and server or between different components of the application. Infrastructure limitations can cause bottlenecks, leading to queuing and delays in data processing and transmission.

- Network Latency: Latency, measured in milliseconds (ms), is a critical factor. High latency can stem from several sources, including:

- Geographical Distance: The physical distance between the client and server directly affects latency. Data traveling across continents will inevitably experience higher latency than data traveling within the same data center.

- Network Congestion: Overloaded network links can cause packets to be delayed as they wait to be transmitted. This is particularly common during peak usage hours.

- Routing Issues: Inefficient routing paths can add extra hops and delays to data transmission.

- Network Equipment: Outdated or underpowered network devices, such as routers and switches, can become bottlenecks.

Consider a web application migrated from a data center in New York to one in London. Users in New York will likely experience increased latency compared to their pre-migration experience, as the data now needs to travel a greater distance.

- Infrastructure Limitations: Infrastructure limitations encompass the capabilities of the underlying hardware and software supporting the application. Key areas include:

- Bandwidth: Insufficient bandwidth can restrict the rate at which data can be transmitted. This is particularly problematic for applications that transfer large files or stream multimedia content.

- Processing Power: Limited CPU, memory, or storage resources on servers can lead to slow response times and application crashes.

- Storage I/O: Slow disk I/O can bottleneck database operations and file access.

If a migrated application experiences significantly increased database query times, despite database optimization efforts, the issue might be related to insufficient storage I/O performance on the new infrastructure. Monitoring disk I/O metrics (e.g., read/write speeds, queue length) is crucial in such scenarios.
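For the New York to London example above, a back-of-the-envelope calculation shows why distance sets a floor on latency. The sketch assumes signals travel through fiber at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and uses an approximate great-circle distance of 5,570 km; real routes are longer and add queuing and processing delays.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # assumption: roughly 2/3 of light speed in vacuum


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


def added_page_latency_ms(distance_km: float, sequential_round_trips: int) -> float:
    """Extra load time when a page needs several round trips in sequence."""
    return min_round_trip_ms(distance_km) * sequential_round_trips


ny_to_london_km = 5_570  # approximate great-circle distance (assumption)
rtt = min_round_trip_ms(ny_to_london_km)
print(f"minimum RTT: {rtt:.1f} ms")
print(f"10 sequential round trips: {added_page_latency_ms(ny_to_london_km, 10):.0f} ms")
```

Because the cost multiplies with every sequential round trip, reducing the number of round trips per page often matters as much as reducing the distance.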

Identifying and Resolving Network-Related Performance Issues

Identifying network-related performance issues requires a systematic approach, involving monitoring, analysis, and targeted troubleshooting. A proactive strategy helps pinpoint the root causes of performance degradation.

- Monitoring Network Performance: Implementing robust network monitoring tools is essential. These tools should track key metrics, including:

- Latency: Measured using tools like `ping` or specialized network monitoring software.

- Packet Loss: Indicates the percentage of data packets lost during transmission.

- Bandwidth Utilization: Shows how much of the available network bandwidth is being used.

- Throughput: The actual rate of data transfer.

- Error Rates: Number of network errors occurring.

Network monitoring tools, such as SolarWinds Network Performance Monitor or PRTG Network Monitor, can provide real-time and historical data to help identify performance trends and anomalies. Regularly reviewing these metrics is crucial.

- Analyzing Network Traffic: Network traffic analysis tools provide in-depth insights into network communication patterns.

- Protocol Analysis: Identifying the protocols being used and their impact on performance (e.g., HTTP, HTTPS, DNS).

- Traffic Shaping: Prioritizing traffic based on its importance.

- Application-Level Analysis: Analyzing the network traffic generated by specific applications.

Tools like Wireshark allow for detailed packet capture and analysis, enabling identification of slow responses, retransmissions, and other network-related problems. Examining packet headers can reveal latency sources.

- Troubleshooting Network Issues: Once issues are identified, troubleshooting involves systematically investigating potential causes.

- Testing Network Connectivity: Verify that all network devices are functioning correctly. Use tools like `ping` and `traceroute` to check connectivity and identify potential bottlenecks.

- Analyzing DNS Resolution: Slow DNS resolution can significantly impact application response times. Use tools like `nslookup` or `dig` to diagnose DNS issues.

- Optimizing Routing: Ensure that network traffic is taking the most efficient routes. Review routing tables and consider using content delivery networks (CDNs) to cache content closer to users.

- Upgrading Network Infrastructure: If bandwidth or processing limitations are identified, consider upgrading network devices or increasing network capacity.

If a migrated e-commerce site experiences slow page load times, even after application and database optimizations, a network trace might reveal that the DNS resolution time is excessively high. Resolving this by optimizing DNS settings (for example, record TTLs or the choice of DNS provider) or by using a CDN could significantly improve performance.
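To separate DNS resolution time from connection time, as in the scenario above, both can be measured directly. The following sketch uses only Python's standard `socket` module; the helper names are hypothetical, and results from `getaddrinfo` may be served from a local resolver cache, so a first lookup and a repeated lookup can differ considerably.

```python
import socket
import time


def time_dns_lookup(hostname: str, port: int = 443) -> float:
    """Return the time in milliseconds taken to resolve hostname."""
    start = time.perf_counter()
    socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    return (time.perf_counter() - start) * 1000


def time_tcp_connect(host: str, port: int, timeout: float = 3.0) -> float:
    """Return the time in milliseconds taken to complete a TCP handshake."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000


def report(hostname: str, port: int = 443) -> str:
    """Format both timings for one host; needs network access when called."""
    dns_ms = time_dns_lookup(hostname, port)
    tcp_ms = time_tcp_connect(hostname, port)
    return f"{hostname}: DNS {dns_ms:.1f} ms, TCP connect {tcp_ms:.1f} ms"
```

Calling `print(report("example.com"))` from a machine with network access prints both timings. Note that `time_tcp_connect` resolves the name again internally, so its figure includes a (usually cached) lookup.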

Best Practices for Ensuring Optimal Network Performance After Migration

Adhering to best practices helps proactively address and mitigate network-related performance issues post-migration.

- Network Planning and Assessment: Before migration, thoroughly assess the network requirements of the application.

- Bandwidth requirements: Estimate the expected network traffic volume.

- Latency requirements: Determine the acceptable latency for the application’s functionality.

- Security requirements: Implement appropriate security measures to protect network traffic.

This involves analyzing the existing network infrastructure, application traffic patterns, and user access patterns.

- Optimizing Network Configuration: Configure network devices and services for optimal performance.

- Firewall rules: Ensure firewall rules are properly configured to allow necessary traffic while blocking malicious requests.

- Load balancing: Distribute traffic across multiple servers to improve performance and availability.

- Quality of Service (QoS): Prioritize critical traffic to ensure that it receives preferential treatment.

Proper configuration of load balancers can distribute user traffic across multiple application servers, improving response times during peak loads.

- Leveraging Content Delivery Networks (CDNs): Use CDNs to cache static content closer to users.

- Reduce latency: Serve content from servers geographically closer to the user.

- Improve website performance: Reduce the load on the origin server.

- Increase website availability: Provide redundancy and failover capabilities.

For a globally accessed web application, using a CDN to cache images, CSS, and JavaScript files can significantly reduce page load times for users located far from the origin server.

- Monitoring and Alerting: Implement continuous monitoring and alerting to detect performance issues proactively.

- Real-time monitoring: Track key network metrics.

- Alerting thresholds: Set up alerts for critical metrics.

- Regular reporting: Generate reports to track performance trends.

Establishing alerts for high latency or packet loss allows for prompt identification and resolution of network problems before they impact users.

- Regular Performance Testing: Conduct regular performance testing to ensure that the application meets performance requirements.

- Load testing: Simulate high user loads to identify performance bottlenecks.

- Stress testing: Push the application to its limits to determine its breaking point.

- Regression testing: Verify that new changes do not negatively impact performance.

Periodic load testing helps identify potential performance bottlenecks before they impact users during peak usage.
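Dedicated tools are the usual choice for load testing, but the idea can be sketched in a few lines. The example below drives a hypothetical `handle_request` function (a 10 ms sleep standing in for a real HTTP call) from a pool of worker threads and summarizes throughput and latency.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for one request; replace with a real HTTP call."""
    time.sleep(0.01)


def timed_call(_: int) -> float:
    """Run one request and return its latency in milliseconds."""
    start = time.perf_counter()
    handle_request()
    return (time.perf_counter() - start) * 1000


def run_load_test(total_requests: int, concurrency: int) -> dict[str, float]:
    """Issue total_requests from `concurrency` worker threads and summarize the results."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    duration = time.perf_counter() - started
    return {
        "requests_per_second": total_requests / duration,
        "median_ms": statistics.median(latencies),
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        "p95_ms": statistics.quantiles(latencies, n=20)[-1],
    }


summary = run_load_test(total_requests=200, concurrency=20)
print(summary)
```

Raising `concurrency` step by step until the 95th percentile latency climbs sharply gives a rough picture of where the system starts to saturate.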

Load Balancing and Scalability

Following a migration, traffic patterns can shift, and any increase in user traffic can overwhelm under-provisioned resources and lead to performance degradation. Implementing load balancing and scalability measures becomes crucial to handle this demand effectively, ensuring a consistent and responsive user experience. These strategies distribute traffic across multiple servers, preventing any single point of failure and allowing the system to accommodate fluctuating workloads.

Importance of Load Balancing and Scalability

Post-migration, the infrastructure must be capable of handling potentially higher traffic volumes than before. Load balancing and scalability provide this capability by distributing incoming requests across a pool of servers. This approach offers several key advantages:

- High Availability: Load balancing ensures that even if one server fails, traffic is automatically redirected to other available servers, minimizing downtime and maintaining service continuity.

- Improved Performance: Distributing the workload reduces the strain on individual servers, leading to faster response times and improved overall application performance.

- Enhanced Scalability: Scalability allows the system to grow to accommodate increasing traffic by adding more servers to the pool as needed. This ensures the system can handle future growth without requiring significant architectural changes.

- Resource Optimization: Load balancing can optimize resource utilization by distributing traffic evenly across all available servers, preventing bottlenecks and maximizing the efficiency of the infrastructure.

Load Balancing Strategies

Various load balancing strategies are available, each with its strengths and weaknesses. The choice of strategy depends on the specific requirements of the application and the infrastructure.

- Round Robin: This is the simplest strategy, where requests are distributed sequentially to each server in the pool. It is suitable for basic load balancing where all servers have similar capabilities.

- Weighted Round Robin: This strategy assigns weights to each server, allowing for a more granular distribution of traffic. Servers with higher weights receive a larger proportion of requests, useful when servers have different processing capabilities.

- Least Connections: This strategy directs traffic to the server with the fewest active connections. It is effective when requests vary widely in duration, because the connection count then reflects each server's current load better than a simple rotation does.

- Least Response Time: This strategy sends requests to the server with the fastest response time. It is beneficial when servers have varying response times due to fluctuating loads or resource availability.

- IP Hash: This strategy uses the client’s IP address to consistently route requests to the same server. It is helpful for maintaining session affinity, where users need to be routed to the same server for subsequent requests.
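Four of the strategies above can be sketched in a few lines each. The classes and server names below are hypothetical and intentionally simplified: they ignore health checks, and the weighted variant simply repeats servers in the rotation, whereas production load balancers usually interleave weighted picks more smoothly.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed repeating order."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class WeightedRoundRobin(RoundRobin):
    """Repeat each server in the rotation according to its integer weight."""

    def __init__(self, weights: dict[str, int]) -> None:
        expanded = [name for name, weight in weights.items() for _ in range(weight)]
        super().__init__(expanded)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {name: 0 for name in servers}

    def pick(self) -> str:
        name = min(self.active, key=self.active.get)
        self.active[name] += 1
        return name

    def release(self, name: str) -> None:
        """Call when a connection to the named server closes."""
        self.active[name] -= 1


class IPHash:
    """Map a client IP to the same server every time (session affinity)."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = servers

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


servers = ["app-1", "app-2", "app-3"]  # hypothetical server names
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])
wrr = WeightedRoundRobin({"app-1": 2, "app-2": 1})
print([wrr.pick() for _ in range(6)])
lc = LeastConnections(servers)
print([lc.pick() for _ in range(3)])
ih = IPHash(servers)
print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))
```

With IP hashing, adding or removing a server changes the modulus and therefore remaps many clients; consistent hashing is a common way to limit that effect.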

Scalable Architecture with Load Balancing Diagram

A scalable architecture incorporates load balancing to distribute traffic across multiple servers, providing high availability and improved performance. The following diagram illustrates a typical architecture:

Diagram Description: The diagram depicts a multi-tiered web application architecture designed for scalability and high availability. The components and their interactions are as follows:

- User/Client: Represents the end-users accessing the application through web browsers or mobile apps.

- Load Balancer: Acts as the entry point for all incoming traffic. It distributes the requests across the available web servers based on the configured load balancing strategy. This component ensures high availability and optimal resource utilization.

- Web Servers (Multiple): These servers host the application code and serve dynamic content. They are behind the load balancer and handle user requests. The use of multiple servers provides redundancy and allows for horizontal scaling.

- Application Servers (Multiple): These servers handle the business logic and data processing. They receive requests from the web servers. The use of multiple servers supports high availability and horizontal scalability.

- Database Servers (Multiple, with Replication): These servers store and manage the application data. They are typically configured in a master-slave (primary-replica) replication setup for high availability and read scaling. The master server handles write operations, while the slave servers handle read operations; with asynchronous replication, reads from a slave can briefly lag behind the master.

- Caching Layer (e.g., Redis, Memcached): An optional but highly recommended component that caches frequently accessed data to reduce the load on the database servers and improve response times.

- Storage (e.g., Object Storage, Network File System): This component stores static assets such as images, videos, and other files. It can be scaled independently to accommodate growing storage needs.

- Monitoring and Logging: These components monitor the performance of the system and log events for troubleshooting and analysis.

Traffic Flow: The traffic flow starts with the user’s request, which is directed to the load balancer. The load balancer then distributes the request to one of the available web servers. The web server processes the request and may interact with the application servers and the database servers to retrieve or update data. If caching is implemented, the application server may check the cache for the requested data before accessing the database.

The response is then sent back to the user via the web server and the load balancer. This architecture is designed to handle a high volume of traffic and to be easily scalable by adding more servers to each tier as needed.
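The split between write and read traffic in the database tier can be illustrated with a small router. This is a simplified sketch: the host names are hypothetical, the statement classification is a naive prefix check, and it does not account for replication lag, which in practice may require sending a read that immediately follows a write to the write server.

```python
import itertools


class DatabaseRouter:
    """Send writes to the primary server and spread reads across replicas."""

    WRITE_PREFIXES = ("INSERT", "UPDATE", "DELETE")

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        # Fall back to the primary for reads when no replica is configured.
        self._replicas = itertools.cycle(replicas or [primary])

    def route(self, sql: str) -> str:
        """Return the host that should execute the given SQL statement."""
        if sql.lstrip().upper().startswith(self.WRITE_PREFIXES):
            return self.primary
        return next(self._replicas)


router = DatabaseRouter("db-primary", ["db-replica-1", "db-replica-2"])  # hypothetical hosts
for statement in (
    "SELECT * FROM products",
    "UPDATE products SET stock = 3 WHERE id = 1",
    "SELECT * FROM orders",
):
    print(statement.split()[0], "->", router.route(statement))
```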

Caching Strategies and Implementation

Caching is a fundamental technique for optimizing application performance, particularly after a migration. It involves storing frequently accessed data in a temporary location (cache) to reduce the need to repeatedly fetch it from the original source (e.g., database, file system, or remote server). This results in faster response times and reduced resource consumption. Effective caching strategies are essential for mitigating performance bottlenecks introduced or exacerbated during the migration process.

Different Caching Strategies and Their Benefits

Caching strategies vary depending on the location of the cache and the type of data being cached. Each strategy offers specific benefits in terms of performance improvement and resource utilization.

- Browser Caching: This involves storing static content (e.g., images, CSS, JavaScript) directly in the user’s web browser.

- Benefits: Significantly reduces page load times for returning visitors, as the browser retrieves content from its local cache instead of requesting it from the server. It minimizes network traffic and server load.

- Server-Side Caching: This involves caching data on the server-side, closer to the application logic and data sources.

- Benefits: Reduces database load, network latency, and the processing time required to generate dynamic content. Server-side caching can be implemented at various levels, including:

- Object Caching: Caches the results of database queries or computationally expensive operations.

- Page Caching: Caches entire HTML pages, serving them directly to users without re-running the application logic for each request.

- Fragment Caching: Caches specific parts of a page, allowing for dynamic content to be combined with cached components.

- Content Delivery Network (CDN) Caching: A CDN caches content across geographically distributed servers.

- Benefits: Improves content delivery speed for users located far from the origin server. It reduces latency by serving content from a server closer to the user’s location. CDNs are particularly effective for static assets like images and videos.

- Database Query Caching: Caches the results of frequently executed database queries.

- Benefits: Reduces the load on the database server and improves query response times. Database query caching can be implemented using database-specific features or external caching systems.

Popular Caching Technologies and Implementation

Several caching technologies are available, each with its strengths and weaknesses. The choice of technology depends on the application’s requirements, architecture, and the type of data being cached.

- Redis: An in-memory data store often used for caching. It supports various data structures (strings, hashes, lists, sets, sorted sets) and provides high performance.

- Implementation: Integrate Redis into the application code using client libraries (e.g., `redis-py` for Python, `Jedis` for Java). Configure connection parameters (host, port, password) and use commands to store, retrieve, and manage cached data. For example, in Python:

      import redis

      # Connect to a Redis server on localhost using the default port.
      redis_client = redis.Redis(host='localhost', port=6379, db=0)
      redis_client.set('mykey', 'myvalue')
      value = redis_client.get('mykey')
      print(value)  # Output: b'myvalue'

- Memcached: Another in-memory caching system, simpler than Redis but still highly performant.

- Implementation: Use client libraries to connect to Memcached servers. Store data as key-value pairs and retrieve them using the corresponding keys. Example in Python using the `python-memcached` library:

      from memcache import Client

      # Connect to a Memcached server on localhost using the default port.
      mc = Client(['127.0.0.1:11211'])
      mc.set('mykey', 'myvalue')
      value = mc.get('mykey')
      print(value)  # Output: myvalue

- Varnish: A popular HTTP accelerator and reverse proxy, often used for caching web pages.

- Implementation: Configure Varnish to sit in front of the web server. Define caching rules in a Varnish Configuration Language (VCL) file, specifying which content to cache, cache expiration times, and other caching behaviors.

- HTTP Caching Headers: Leverage built-in browser caching mechanisms by setting appropriate HTTP headers in the server’s responses.

- Implementation: Use headers like `Cache-Control` (e.g., `public`, `private`, `max-age`), `Expires`, and `ETag` to control how browsers cache content. For example, in Apache’s `.htaccess` file (the `Header` directive requires `mod_headers` to be enabled):

      <FilesMatch "\.(jpg|jpeg|png|gif|js|css)$">
          Header set Cache-Control "max-age=31536000, public"
      </FilesMatch>

- Database Caching Mechanisms: Many databases offer built-in caching features.

- Implementation: Configure the database’s caching parameters (e.g., buffer pool size in MySQL, shared pool size in Oracle). Use database-specific query optimization techniques and caching strategies.
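To make the HTTP caching headers described above more concrete, the sketch below builds `Cache-Control` and `ETag` headers for a response and answers a repeat request with `304 Not Modified` when the client's copy is still current. The `respond` function is a hypothetical, framework-independent helper; it compares a single `If-None-Match` value exactly and ignores weak validators and comma-separated lists, which a real server must handle.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag (a quoted string) from a hash of the response body."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, request_headers: dict[str, str],
            max_age: int = 3600) -> tuple[int, dict[str, str], bytes]:
    """Return (status, headers, body), answering 304 when the client copy is current."""
    etag = make_etag(body)
    headers = {"Cache-Control": f"public, max-age={max_age}", "ETag": etag}
    if request_headers.get("If-None-Match") == etag:
        return 304, headers, b""  # client may reuse its cached copy
    return 200, headers, body


css = b"body { margin: 0; }"

# First request: full response with caching headers.
status, headers, _ = respond(css, {})
print(status, headers["Cache-Control"])

# Revalidation: the browser sends back the ETag it received earlier.
status_again, _, body_again = respond(css, {"If-None-Match": headers["ETag"]})
print(status_again, len(body_again))
```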

Process for Implementing Caching Mechanisms to Improve Application Response Times

Implementing caching requires a systematic approach, starting with identifying cacheable content and ending with monitoring and optimization.

- Identify Cacheable Content: Analyze application performance metrics to identify frequently accessed data and computationally expensive operations. Determine which data is suitable for caching based on its frequency of access, volatility, and impact on performance. Prioritize caching static assets, database query results, and frequently generated content.

- Choose Appropriate Caching Technology: Select the caching technology that best suits the application’s requirements, considering factors like data structure needs, performance characteristics, and scalability. Evaluate the available options (Redis, Memcached, Varnish, HTTP caching, database caching) and choose the most appropriate solution.

- Design Caching Strategy: Define the caching strategy, including the cache key generation, cache expiration policies, and cache invalidation mechanisms. Determine the cache size, the data structures to use, and the caching layers to implement (browser, server-side, CDN). Consider how to handle cache misses and how to update the cache when data changes.

- Implement Caching: Integrate the chosen caching technology into the application code. Implement the caching logic, including the retrieval and storage of data in the cache. Instrument the application to track cache hits and misses.

- Test Caching: Thoroughly test the caching implementation to ensure it functions correctly and improves performance. Measure response times, database load, and server resource utilization before and after caching. Verify that the cache is populated correctly and that data is retrieved from the cache as expected.

- Monitor Caching: Continuously monitor cache performance, including cache hit rates, miss rates, and eviction rates. Use monitoring tools to track cache statistics and identify potential issues. Set up alerts to notify when cache performance degrades.

- Optimize Caching: Regularly review and optimize the caching strategy based on performance monitoring data. Adjust cache expiration times, cache sizes, and cache keys to improve cache hit rates and reduce cache misses. Tune caching configurations to ensure optimal performance. Consider using different caching strategies based on different types of data.
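
The steps above can be illustrated with a minimal cache-aside sketch. It is an in-process stand-in for a real cache such as Redis or Memcached, not a production implementation. The names TTLCache, product_key, and load_product are hypothetical and chosen only for illustration; the 60-second expiry is an arbitrary assumption.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-process cache with per-entry expiry and hit/miss counters."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Cache-aside read: return a fresh cached value or call loader()."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: load from the source, store with a new expiry.
        self.misses += 1
        value = loader()
        self._store[key] = (now + self.ttl_seconds, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop an entry when the underlying data changes."""
        self._store.pop(key, None)

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def product_key(product_id: int) -> str:
    # Hypothetical key scheme: namespace plus identifier.
    return f"product:{product_id}"


def load_product(product_id: int) -> dict:
    # Stand-in for an expensive database query.
    return {"id": product_id, "name": f"Product {product_id}"}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_load(product_key(7), lambda: load_product(7))   # miss
second = cache.get_or_load(product_key(7), lambda: load_product(7))  # hit
cache.invalidate(product_key(7))                                     # data changed
third = cache.get_or_load(product_key(7), lambda: load_product(7))   # miss again
print(f"hits={cache.hits} misses={cache.misses} hit_rate={cache.hit_rate():.2f}")
```

The hit and miss counters correspond to the instrumentation and monitoring steps: a persistently low hit rate usually suggests that the expiry time is too short or the key scheme is too fine-grained.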

Monitoring and Alerting Systems

Effective monitoring and alerting are critical components of post-migration performance management. They provide real-time insights into system behavior, enabling proactive identification and resolution of performance issues before they impact users. Implementing robust monitoring and alerting systems ensures that performance degradation is detected early, minimizing downtime and maintaining a positive user experience.

Importance of Post-Migration Performance Monitoring

Regular monitoring is essential for validating the success of the migration and for identifying any unforeseen performance bottlenecks introduced during the process. Monitoring helps to understand the system’s behavior under the new environment, comparing pre-migration baselines with post-migration performance. This allows for the continuous optimization of the migrated system.
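
As a rough illustration of comparing a pre-migration baseline with post-migration measurements, the sketch below summarizes two sets of response-time samples and flags statistics that regressed beyond a tolerance. The function names, the sample values, and the 10% tolerance are assumptions made for this example, not recommended values.

```python
from statistics import mean, quantiles
from typing import Dict, List


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Return the mean and 95th percentile of response-time samples (ms)."""
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    p95 = quantiles(samples_ms, n=20)[-1]
    return {"mean": mean(samples_ms), "p95": p95}


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def compare(baseline_ms: List[float], current_ms: List[float],
            tolerance_pct: float = 10.0) -> Dict[str, dict]:
    """Flag each statistic that got slower by more than tolerance_pct."""
    base, cur = summarize(baseline_ms), summarize(current_ms)
    report = {}
    for stat in base:
        change = percent_change(base[stat], cur[stat])
        report[stat] = {
            "before": base[stat],
            "after": cur[stat],
            "change_pct": change,
            "regressed": change > tolerance_pct,
        }
    return report


# Made-up samples for illustration only.
pre_migration = [180.0, 190.0, 200.0, 210.0, 220.0, 205.0, 195.0, 200.0]
post_migration = [240.0, 260.0, 250.0, 300.0, 270.0, 255.0, 245.0, 280.0]

for stat, row in compare(pre_migration, post_migration).items():
    print(f"{stat}: {row['before']:.0f} ms -> {row['after']:.0f} ms "
          f"({row['change_pct']:+.1f}%) regressed={row['regressed']}")
```

Comparing a high percentile alongside the mean helps surface regressions that affect only a fraction of requests.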

Setting Up Alerting Systems for Performance Degradation

Proactive alerting systems are designed to automatically notify administrators when predefined performance thresholds are breached. This allows for timely intervention and prevents minor issues from escalating into major outages. Setting up these systems involves choosing the right monitoring tools, defining appropriate alert thresholds, and configuring notification channels.

- Choosing Monitoring Tools: Select tools that are compatible with the new infrastructure and can collect relevant performance metrics. Consider options such as:

- Open-source solutions: Prometheus, Grafana, and Nagios offer flexible monitoring capabilities and extensive integration options.

- Commercial tools: Datadog, New Relic, and Dynatrace provide comprehensive monitoring, alerting, and analysis features.

- Defining Alert Thresholds: Establish baseline performance metrics during the initial post-migration phase and compare them with the pre-migration baseline. Analyze these baselines to determine acceptable performance ranges. Set thresholds for each metric, such as CPU utilization, memory usage, and response times, to trigger alerts when exceeded. For example, if the average response time of a web application before migration was 200ms, a threshold of 500ms might be set to trigger an alert, allowing time for investigation before users experience significant slowdowns.

- Configuring Notification Channels: Integrate the monitoring system with appropriate notification channels, such as email, SMS, or collaboration platforms (e.g., Slack, Microsoft Teams). Configure the system to send alerts to the right teams or individuals based on the severity of the issue. Critical alerts, indicating a severe outage, should be routed to the on-call team immediately.

- Testing Alerting Systems: Regularly test the alerting system to ensure it functions correctly. Simulate performance issues and verify that alerts are triggered and delivered as expected. This includes confirming that the correct individuals are notified and that the alerts contain sufficient information for troubleshooting.
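
The threshold and routing steps above can be sketched as follows. This is a simplified model rather than the configuration format of any particular monitoring tool. The channel names in ROUTES and the multipliers are hypothetical; the warning factor of 2.5 is chosen only so that a 200ms baseline yields the 500ms threshold from the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Alert:
    metric: str
    value: float
    severity: str  # "warning" or "critical"


# Hypothetical routing table: severity -> notification channels.
ROUTES: Dict[str, List[str]] = {
    "warning": ["email:ops-team"],
    "critical": ["pager:on-call", "chat:#incidents"],
}


def thresholds_from_baseline(baseline: float, warn_factor: float = 2.5,
                             crit_factor: float = 5.0) -> Dict[str, float]:
    """Derive alert thresholds as multiples of a measured baseline."""
    return {"warning": baseline * warn_factor,
            "critical": baseline * crit_factor}


def evaluate(metric: str, value: float,
             thresholds: Dict[str, float]) -> Optional[Alert]:
    """Return an Alert for the highest severity breached, or None."""
    if value >= thresholds["critical"]:
        return Alert(metric, value, "critical")
    if value >= thresholds["warning"]:
        return Alert(metric, value, "warning")
    return None


def route(alert: Alert) -> List[str]:
    """Look up the channels that should receive this alert."""
    return ROUTES[alert.severity]


# Simulated readings, as one might use when testing the alerting path.
thresholds = thresholds_from_baseline(200.0)  # baseline response time in ms
for reading in (350.0, 620.0, 1200.0):
    alert = evaluate("response_time_ms", reading, thresholds)
    if alert is None:
        print(f"{reading:.0f} ms: ok")
    else:
        print(f"{reading:.0f} ms: {alert.severity} -> {route(alert)}")
```

Feeding simulated readings through the same code path that real metrics use is one simple way to verify that each severity reaches the intended recipients.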

Performance Metrics to Monitor and Alerting Thresholds

The specific performance metrics to monitor and the thresholds to set depend on the application and the infrastructure. However, some general categories and examples of metrics are widely applicable. It is crucial to customize these based on the specific migrated application and its operational requirements.

- Server-Side Metrics: Monitor server resource utilization to ensure that servers are not overloaded.

- CPU Utilization: Percentage of CPU time used.

- Threshold: Alert if sustained CPU utilization exceeds 80% for more than 5 minutes.

- Memory Usage: Amount of RAM used.

- Threshold: Alert if memory usage exceeds 90% of available RAM.

- Disk I/O: Disk read/write operations.

- Threshold: Alert if disk I/O latency exceeds a certain value (e.g., 10ms) for more than a few minutes, or if the disk queue length is consistently high.

- Application Performance Metrics: Track the performance of the application itself.

- Response Time: Time taken for the application to respond to user requests.

- Threshold: Alert if the average response time exceeds a predefined threshold (e.g., 500ms) for more than a few minutes. Consider differentiating thresholds based on the criticality of the request or the time of day.

- Error Rates: Percentage of requests that result in errors.

- Threshold: Alert if the error rate exceeds a certain percentage (e.g., 1%) for more than a few minutes.

- Throughput: Number of requests processed per unit of time.

- Threshold: Alert if throughput drops significantly below the baseline or expected levels.

- Database Performance Metrics: Monitor the performance of the database.

- Query Response Times: Time taken for database queries to execute.

- Threshold: Alert if query response times exceed a predefined threshold (e.g., 1 second) for more than a few minutes.

- Database Connections: Number of active database connections.

- Threshold: Alert if the number of connections approaches the maximum allowed by the database server.

- Database CPU/IO Utilization: Resource usage by the database server.

- Threshold: Alert if CPU utilization or disk I/O usage exceeds a predefined threshold.

- Network Metrics: Monitor network performance to identify network-related issues.

- Latency: Delay in network traffic.

- Threshold: Alert if network latency exceeds a predefined threshold.

- Packet Loss: Percentage of packets lost during transmission.

- Threshold: Alert if packet loss exceeds a predefined threshold.

- Bandwidth Utilization: Amount of network bandwidth used.

- Threshold: Alert if bandwidth utilization approaches the maximum capacity.

Example: A company migrated its e-commerce platform to a new cloud environment. They implemented monitoring and alerting using a combination of Prometheus and Grafana. They set the following alerts:

- CPU Utilization: Alert if any web server’s CPU utilization exceeded 80% for more than 5 minutes.

- Response Time: Alert if the average response time for product listing pages exceeded 700ms.

- Database Queries: Alert if the response time for critical database queries exceeded 1 second.

This proactive approach enabled them to quickly identify and resolve performance issues, such as slow database queries and overloaded web servers, resulting in a smooth transition and a positive user experience.
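
A rule such as "CPU above 80% for more than 5 minutes" requires the condition to hold continuously, so that brief spikes do not trigger alerts. The sketch below shows one way to express that logic; the function name and the sample data are assumptions for illustration. In Prometheus, the same idea is normally expressed with the `for` clause of an alerting rule rather than custom code.

```python
from typing import Iterable, Optional, Tuple


def sustained_breach(samples: Iterable[Tuple[float, float]],
                     threshold: float, min_duration_s: float) -> bool:
    """Return True if the value stays above threshold for longer than
    min_duration_s.

    samples: (timestamp_seconds, value) pairs in ascending time order.
    """
    breach_start: Optional[float] = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts  # first sample of a new breach window
            if ts - breach_start > min_duration_s:
                return True
        else:
            breach_start = None  # condition cleared; reset the window
    return False


# CPU readings taken once per minute (made-up values).
short_spike = [(0, 85.0), (60, 90.0), (120, 70.0), (180, 85.0), (240, 60.0)]
sustained = [(minute * 60, 85.0) for minute in range(7)]  # 0 s to 360 s

print("spike alerts:    ", sustained_breach(short_spike, 80.0, 300))
print("sustained alerts:", sustained_breach(sustained, 80.0, 300))
```

Resetting the window whenever a sample falls back under the threshold is what distinguishes a sustained breach from several unrelated spikes.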

Concluding Remarks

Successfully navigating performance challenges post-migration requires a proactive and multi-faceted approach. By meticulously planning, rigorously testing, and continuously monitoring, administrators and developers can identify and resolve performance bottlenecks, leading to a faster, more responsive, and scalable website. The strategies outlined in this guide, from pre-migration assessments to advanced optimization techniques, provide a comprehensive framework for ensuring optimal performance and a positive user experience after any website migration.

Continuous vigilance and adaptation to evolving technological landscapes are key to maintaining a high-performing website in the long term.

Frequently Asked Questions

What are the most common causes of slow page load times after a migration?

Common causes include inefficient database queries, network latency, improper caching, and poorly optimized code. Server configuration and infrastructure limitations can also contribute to slow performance.

How can I measure website performance after the migration?

Use tools like Google PageSpeed Insights, GTmetrix, and WebPageTest to measure page load times, resource requests, and overall performance scores. Monitor server resource utilization (CPU, memory, disk I/O) using server monitoring tools.

What are the best practices for caching after migration?

Implement browser caching, server-side caching (e.g., using a caching plugin like WP Rocket or W3 Total Cache for WordPress), and CDN (Content Delivery Network) caching to reduce server load and improve response times. Configure caching properly and clear the cache after any significant content changes.

How do I identify slow database queries?

Use database profiling tools (e.g., MySQL slow query log, query monitor plugins for WordPress) to identify queries that take a long time to execute. Analyze query execution plans to pinpoint performance bottlenecks within the queries.
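
To show what analyzing an execution plan looks like, here is a small self-contained sketch. It uses SQLite from the Python standard library as a stand-in, because it needs no server; the orders table, its columns, and the index name are invented for the example. MySQL and PostgreSQL offer a comparable `EXPLAIN` statement, although the output format differs.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's plan for a query as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); detail is human-readable.
    return "; ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

sql = "SELECT * FROM orders WHERE customer_id = ?"

# Without an index, the filter forces a scan of the whole table.
plan_before = query_plan(conn, sql, (42,))

# With an index on the filtered column, SQLite can search instead of scan.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

A plan that reports a full scan on a large table for a frequently run query is a common sign that an index on the filtered column may help.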

What should I do if my website is experiencing high server resource usage after migration?

Investigate the root cause by monitoring resource usage (CPU, memory, disk I/O). Optimize database queries, optimize application code, adjust server configuration settings, and implement caching strategies to reduce resource consumption. Consider scaling your server resources if necessary.