This article covers how to run admin processes as one-off tasks, the twelfth factor (Factor XII) of the Twelve-Factor App methodology. One-off tasks, unlike scheduled or persistent processes, execute once and then terminate, which makes them a good fit for specific administrative needs.

We’ll cover the core principles behind Factor XII: task isolation, security measures, scripting techniques, auditing and logging, triggering mechanisms, and error handling. From scripting languages to automation tools, these are the elements needed to run one-off admin tasks reliably and safely.

Understanding the Core Concept: One-Off Admin Tasks

The efficient management of system administration tasks often involves distinguishing between activities that require continuous operation and those that are best suited for a single execution. Understanding this distinction is crucial for optimizing system performance, minimizing resource consumption, and ensuring the security and integrity of the system. This section delves into the core concept of “one-off” admin tasks, clarifying their definition, providing illustrative examples, and contrasting them with other task execution methods.

Defining One-Off Tasks

In system administration, a “one-off” task is a command or a script designed to be executed once and then terminate. It’s a discrete operation performed to achieve a specific goal, without the need for continuous monitoring or repeated execution unless explicitly triggered again. These tasks are typically initiated manually or through automated processes designed to run them at a predetermined time or in response to a specific event.

They are not intended to run indefinitely in the background or be part of a scheduled, recurring process.

Examples of One-Off Admin Tasks

Numerous system administration activities are ideally suited for execution as one-off tasks. These tasks are characterized by their limited scope and the absence of a requirement for continuous operation. Here are some common examples:

- System Updates: Installing software updates, patches, or security fixes. These are often performed periodically, but each execution is a discrete event.

- Data Migration: Transferring data from one storage location to another or migrating data between different systems.

- User Account Management: Creating, modifying, or deleting user accounts, including setting up initial configurations or password resets.

- Backup and Recovery: Performing a full or incremental backup of system data or restoring data from a backup.

- Configuration Changes: Applying specific configuration settings to a system or application, such as modifying network settings or adjusting application parameters.

- File System Operations: Performing tasks like disk space cleanup, file system integrity checks, or reorganizing directory structures.

One-Off Tasks vs. Scheduled or Persistent Processes

Understanding the distinctions between one-off tasks and other execution methods is vital for choosing the appropriate approach. The key differences lie in their operational characteristics and intended purpose.

- Execution Frequency: One-off tasks are executed once, whereas scheduled tasks are configured to run automatically at specified intervals (e.g., daily, weekly) and persistent processes run continuously in the background.

- Resource Consumption: One-off tasks typically consume resources only during their execution, while persistent processes hold resources continuously, even when idle. Scheduled tasks consume resources on each run, and the scheduler itself must stay running.

- Monitoring and Maintenance: One-off tasks require monitoring only during their execution, while scheduled and persistent processes require ongoing monitoring and maintenance to ensure they function correctly.

- Use Cases: One-off tasks are ideal for tasks that need to be performed only once or infrequently. Scheduled tasks are best for repetitive maintenance tasks. Persistent processes are essential for services that need to be available continuously (e.g., web servers, database servers).

Choosing the correct method – one-off, scheduled, or persistent – is critical for efficient resource utilization and optimal system performance.

Identifying Factor XII’s Role and Relevance

In the context of running admin processes as one-off tasks, “Factor XII” refers to the twelfth factor of the Twelve-Factor App methodology: run admin and management tasks as one-off processes. This article uses it as a framework for the efficient and controlled execution of administrative operations, particularly those that are infrequent or require specific configurations. Understanding Factor XII’s role helps optimize system administration practices and reduce risk.

The Relationship Between “Factor XII” and Admin Tasks

Factor XII is a guideline rather than a tool or product. It serves as a lens through which to view and manage administrative tasks that are designed to be executed once, or infrequently. In the original methodology, such tasks run in the same environment, and against the same code and configuration, as the application’s regular processes. Applied more broadly, its principles guide the design and implementation of these tasks, ensuring they are isolated, auditable, and easily repeatable if needed.

Challenges Factor XII Solves in System Administration

System administration often involves a diverse range of tasks, some of which are complex and potentially disruptive. Factor XII addresses several key challenges:

- Complexity Management: One-off tasks can be inherently complex, involving multiple steps, dependencies, and configurations. Factor XII promotes breaking down these tasks into smaller, more manageable units, making them easier to understand, troubleshoot, and maintain.

- Risk Mitigation: Incorrectly configured or executed admin tasks can lead to system instability, data loss, or security breaches. By isolating these tasks and controlling their execution environment, Factor XII minimizes the risk of unintended consequences.

- Reproducibility: While designed for one-off execution, admin tasks may need to be repeated in the future. Factor XII emphasizes the importance of documenting and scripting tasks, making them easily reproducible when required.

- Auditability: Tracking changes and actions is crucial for security and compliance. Factor XII encourages logging and monitoring of all task executions, providing a clear audit trail for accountability and troubleshooting.

- Efficiency: By automating and streamlining one-off tasks, Factor XII improves the efficiency of system administrators, freeing them up to focus on more strategic initiatives.

Benefits of Utilizing Factor XII Principles

Adopting the principles of Factor XII yields several significant benefits for system administration:

- Reduced Errors: The modular design and clear documentation associated with Factor XII tasks minimize the likelihood of human error during execution.

- Improved Security: Isolating tasks and controlling their environment reduces the attack surface and protects sensitive data.

- Enhanced Reliability: Well-defined and tested one-off tasks contribute to the overall stability and reliability of the system.

- Increased Efficiency: Automation and streamlined processes save time and resources, allowing administrators to be more productive.

- Better Compliance: Detailed audit trails and standardized procedures facilitate compliance with industry regulations and internal policies.

Security Considerations for One-Off Admin Processes

Admin processes, especially those executed as one-off tasks, represent a critical attack surface within any IT infrastructure. The inherent power granted to these tasks, coupled with their often-infrequent execution, can create significant security vulnerabilities if not managed with extreme care. This section details security best practices, verification checklists, and risk mitigation strategies to fortify the execution of one-off admin processes.

Security Best Practices for One-Off Admin Tasks

Implementing robust security practices is paramount when dealing with one-off administrative tasks. This involves a multi-layered approach that encompasses secure scripting, access control, and rigorous auditing.

- Principle of Least Privilege: Grant only the minimum necessary privileges to the account executing the one-off task. Avoid using highly privileged accounts (e.g., root or administrator) unless absolutely required. Consider creating dedicated service accounts with limited permissions scoped to the specific task. For instance, if a task involves modifying user accounts, the service account should only have permissions to modify user attributes and nothing else.

- Secure Scripting Practices: Adopt secure coding standards when writing scripts. This includes input validation to prevent command injection vulnerabilities, output encoding to prevent cross-site scripting (XSS) when script output is displayed in a web interface, and secure handling of sensitive data (e.g., passwords). Always sanitize user inputs to prevent malicious code execution.

- Secure Storage of Credentials: Never hardcode credentials (passwords, API keys, etc.) directly into scripts. Instead, utilize secure credential management solutions like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault. These tools provide a centralized and secure way to store, manage, and retrieve sensitive information.

- Access Control and Authentication: Implement strong authentication mechanisms for accessing and executing one-off tasks. This might involve multi-factor authentication (MFA) to verify the identity of the user initiating the task. Restrict access based on the principle of “need-to-know.”

- Regular Auditing and Monitoring: Implement comprehensive logging and monitoring to track the execution of one-off tasks. Log all actions, including the user, the script executed, the time of execution, and the outcome. Regularly review these logs to identify any suspicious activity or security breaches. Utilize Security Information and Event Management (SIEM) systems for automated analysis and alerting.

- Code Review and Testing: Subject all one-off task scripts to thorough code reviews by qualified security professionals. Conduct rigorous testing, including penetration testing, to identify and remediate vulnerabilities before deployment. Employ static and dynamic analysis tools to automate the vulnerability assessment process.

- Version Control and Change Management: Store all scripts in a version control system (e.g., Git) to track changes and maintain an audit trail. Implement a change management process that includes approvals and testing before deploying any modifications to the scripts. This helps to prevent unauthorized modifications and ensures that changes are properly vetted.

- Secure Execution Environment: Execute one-off tasks within a secure environment. This could involve using a dedicated server or virtual machine, isolating the execution environment from other systems, and configuring network security controls (e.g., firewalls) to restrict access. Consider using containerization technologies (e.g., Docker) to create isolated and reproducible environments.
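As a minimal sketch of two of the practices above, input validation and keeping credentials out of the script, the following Bash functions check a username argument against an allow-list pattern and refuse to proceed unless a token is supplied through the environment. The function names, the `ADMIN_API_TOKEN` variable, and the username pattern are illustrative assumptions; in practice the token would be injected by a secrets manager rather than typed into a shell.

```bash
#!/bin/bash
# Sketch only: validate_username, require_token and ADMIN_API_TOKEN are
# hypothetical names chosen for this example.

# Accept a name only if it is non-empty, starts with a lowercase letter or
# underscore, and contains nothing but lowercase letters, digits, '_' and '-'.
# Character ranges follow the current locale; run under LC_ALL=C for strict ASCII.
validate_username() {
    case "$1" in
        ""|*[!a-z0-9_-]*) return 1 ;;  # empty, or contains a disallowed character
        [a-z_]*) return 0 ;;           # allowed first character
        *) return 1 ;;                 # starts with a digit or '-'
    esac
}

# Fail unless the token was provided through the environment.
require_token() {
    if [ -z "${ADMIN_API_TOKEN:-}" ]; then
        echo "error: ADMIN_API_TOKEN is not set" >&2
        return 1
    fi
}
```

A task script could call both checks before doing any work and exit immediately if either fails.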

Security Checklist for Verifying One-Off Task Scripts

Before executing any one-off admin task script, a rigorous verification process is essential. This checklist helps ensure the integrity and safety of the script.

- Script Origin and Integrity: Verify the script’s source. Ensure the script originates from a trusted and verified source. Check the script’s integrity using checksums (e.g., SHA-256) to confirm that it has not been tampered with.

- Privilege Requirements: Review the script’s privilege requirements. Ensure that the script only requests the minimum necessary permissions to perform its intended function. Identify and eliminate any unnecessary privileges.

- Input Validation: Examine the script for proper input validation. Verify that all user inputs are sanitized and validated to prevent command injection and other vulnerabilities.

- Credential Handling: Check how credentials are handled. Ensure that credentials are not hardcoded in the script. Confirm that secure credential management solutions are used to retrieve and store sensitive information.

- Error Handling and Logging: Assess the script’s error handling and logging mechanisms. Ensure that the script includes robust error handling to gracefully manage unexpected situations. Verify that all actions are logged, including errors, warnings, and successful executions.

- Code Review and Static Analysis: Review the script’s code. Verify that the script has undergone a code review by a qualified security professional. Use static analysis tools to identify potential vulnerabilities (e.g., code smells, security flaws).

- Testing and Validation: Test the script in a non-production environment before deploying it to production. Perform unit testing, integration testing, and user acceptance testing (UAT) to validate the script’s functionality and security.

- Execution Environment: Evaluate the execution environment. Ensure that the script will be executed in a secure and isolated environment. Verify the network security controls (e.g., firewalls) and other security measures.
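The integrity check from the first item of this checklist can be sketched in a few lines of Bash. This example assumes the GNU `sha256sum` utility is available (on macOS, `shasum -a 256` plays the same role) and that the expected digest was obtained through a trusted channel; the function name `verify_sha256` is hypothetical.

```bash
#!/bin/bash
# Sketch only: compare a script's SHA-256 digest with an expected value
# before running it. verify_sha256 is a hypothetical helper name.
verify_sha256() {
    local file="$1" expected="$2" actual
    # Refuse to compare against an empty expected digest.
    [ -n "$expected" ] || return 1
    # sha256sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" 2>/dev/null | awk '{print $1}')
    [ "$actual" = "$expected" ]
}
```

A caller would run the script only if the check succeeds, for example `verify_sha256 ./task.sh "$EXPECTED_DIGEST" && ./task.sh`, where `EXPECTED_DIGEST` is again an assumed variable.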

Security Risks and Mitigation Strategies

The following table outlines potential security risks associated with one-off admin tasks and corresponding mitigation strategies.

| Potential Security Risk | Description | Mitigation Strategy | Example |
|---|---|---|---|
| Command Injection | Malicious code injected into a script via user input. | Implement strict input validation and sanitization. Pass arguments to commands as separate parameters instead of building command strings, and use parameterized queries for database input. | A script that directly executes a user-provided filename without proper validation could be exploited to run arbitrary commands. |
| Credential Exposure | Sensitive credentials (passwords, API keys) stored directly in the script. | Utilize secure credential management solutions (e.g., Vault, Secrets Manager). Avoid hardcoding credentials. | A script containing a hardcoded database password would allow unauthorized access to the database if the script were compromised. |
| Privilege Escalation | A one-off task executed with excessive privileges, allowing unauthorized access to sensitive resources. | Apply the principle of least privilege. Use dedicated service accounts with limited permissions. | A script running as root, modifying system files, could be exploited to gain control of the entire system. |
| Uncontrolled Script Execution | Unauthorized or malicious scripts executed without proper authorization or monitoring. | Implement strict access controls and authentication. Implement comprehensive logging and monitoring. | An attacker gaining access to a server could upload and execute a malicious script to steal data or disrupt services. |
| Lack of Auditing | Absence of logs or insufficient logging, hindering the ability to track script execution and identify security incidents. | Implement comprehensive logging of all script executions, including user, time, and outcome. | Without logs, it’s impossible to determine who executed a script, what it did, and whether it was successful, making it difficult to investigate security breaches. |
| Vulnerable Dependencies | Use of outdated or vulnerable libraries and dependencies in the script. | Regularly update all dependencies to the latest secure versions. Use vulnerability scanning tools. | A script relying on a vulnerable library could be exploited to compromise the system through known security flaws. |
| Insufficient Testing | Scripts deployed without adequate testing, potentially leading to unintended consequences or security vulnerabilities. | Thoroughly test scripts in a non-production environment before deployment. Perform unit and integration testing. | A script with a logic error that accidentally deletes critical data would cause significant disruption and data loss. |

Scripting for One-Off Task Execution

Scripting is fundamental to automating and executing one-off administrative tasks. It provides a structured way to define the actions required, making them repeatable and reducing the potential for human error. This section explores common scripting languages, offers a basic script template, and demonstrates how to use command-line arguments and environment variables within these scripts.

Scripting Languages for One-Off Admin Tasks

Several scripting languages are frequently employed for one-off administrative tasks, each with its strengths and weaknesses. The choice of language often depends on the operating system, the complexity of the task, and the administrator’s familiarity.

- Bash: Primarily used on Linux and macOS systems, Bash (Bourne Again Shell) is a powerful and versatile scripting language. It excels at file manipulation, process control, and command-line utility integration. Bash scripts are typically easy to write and execute, making them suitable for a wide range of tasks.

- PowerShell: Originally designed by Microsoft for Windows and now also available on Linux and macOS, PowerShell offers robust capabilities for system administration. It is an object-oriented scripting language built on .NET, and on Windows it provides access to Windows Management Instrumentation (WMI). PowerShell is particularly well-suited for managing Windows services, users, and configurations.

- Python: A general-purpose scripting language, Python is platform-independent and widely used in various fields, including system administration. Its extensive libraries and readability make it suitable for complex tasks involving data processing, automation, and interaction with APIs.

- Other Languages: Other languages, such as Perl, Ruby, and VBScript (for Windows), can also be used, though they are less common for one-off tasks compared to the three languages listed above. The suitability of a language often depends on existing infrastructure and administrator preferences.

Basic Script Template for Checking Disk Space

A basic script template provides a starting point for executing a one-off task. This example demonstrates a simple script, written in Bash, that checks disk space usage.

This script uses the `df -h` command to display disk space usage in a human-readable format. It then uses `grep -v` to drop the header line and any `tmpfs` or `cdrom` entries, leaving the regular mounted file systems.

```bash
#!/bin/bash
# Script to check disk space usage
echo "Disk space usage:"
df -h | grep -vE '^Filesystem|tmpfs|cdrom'
```

To use this script:

- Save the script to a file (e.g., `check_disk_space.sh`).

- Make the script executable: `chmod +x check_disk_space.sh`.

- Run the script: `./check_disk_space.sh`.

The output will display the disk space usage for each mounted file system.
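A one-off check is more useful to automation when it reports its result through the exit code as well as on screen. The sketch below extends the idea above under a few assumptions: it reads `df -P` output (the POSIX column layout) from standard input, the function name `over_threshold` is hypothetical, and mount points containing spaces are not handled.

```bash
#!/bin/bash
# Sketch only: print mount points whose usage is at or above a percentage
# threshold. Reads `df -P` formatted output on stdin and returns 1 if any
# file system is over the threshold, 0 otherwise.
over_threshold() {
    awk -v limit="$1" '
        NR > 1 {                       # skip the header line
            use = $5                   # capacity column, e.g. "95%"
            sub(/%/, "", use)
            if (use + 0 >= limit + 0) {
                print $6 " " use "%"   # mount point and usage
                found = 1
            }
        }
        END { exit found + 0 }
    '
}
```

It could be invoked as `df -P | over_threshold 90`, so that a calling script or scheduler can react to a non-zero exit status.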

Use of Command-Line Arguments and Environment Variables

Command-line arguments and environment variables provide flexibility and configurability to one-off task scripts.

Command-line arguments allow users to pass values to the script at runtime, making the script more versatile. Environment variables provide access to system-level settings and configuration information.

- Command-Line Arguments:

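Bash uses positional parameters (`$1`, `$2`, `$3`, etc.) to access command-line arguments. PowerShell exposes unnamed arguments through the `$args` array and supports named parameters through a `param` block, as in the PowerShell example below.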

Example (Bash):

```bash
#!/bin/bash
# Script to check if a directory exists
if [ -d "$1" ]; then
  echo "Directory '$1' exists."
else
  echo "Directory '$1' does not exist."
fi
```

To run this script, you would use: `./check_directory.sh /path/to/directory`. The `/path/to/directory` is passed as `$1`.

Example (PowerShell):

```powershell
# Script to check if a file exists
param (
    [string]$FilePath
)
if (Test-Path -Path $FilePath) {
    Write-Host "File '$FilePath' exists."
} else {
    Write-Host "File '$FilePath' does not exist."
}
```

To run this script, you would use: `.\check_file.ps1 -FilePath "C:\path\to\file.txt"`.

- Environment Variables:

Environment variables are accessible within the script and can be used to configure its behavior. In Bash, environment variables are accessed using the `$VARIABLE_NAME` syntax. In PowerShell, they are accessed using `$env:VARIABLE_NAME`.

Example (Bash):

```bash
#!/bin/bash
# Script to print the user's home directory
echo "Your home directory is: $HOME"
```

In this example, the `$HOME` environment variable (which stores the user’s home directory) is used.

Example (PowerShell):

```powershell
# Script to print the current user's name
Write-Host "Current user: $($env:USERNAME)"
```

Here, the `$env:USERNAME` environment variable is used to retrieve the current user’s name.
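Arguments and environment variables are often combined: the argument names the target, and an environment variable tunes behavior with a sensible default. The following Bash sketch illustrates that pattern; `describe_cleanup` and `RETENTION_DAYS` are hypothetical names, and the function only reports what it would do rather than deleting anything.

```bash
#!/bin/bash
# Sketch only: take the target directory from the first argument and the
# retention period from the (hypothetical) RETENTION_DAYS variable,
# falling back to 30 days when it is unset or empty.
describe_cleanup() {
    if [ "$#" -ne 1 ]; then
        echo "usage: describe_cleanup <directory>" >&2
        return 2
    fi
    local target="$1"
    local days="${RETENTION_DAYS:-30}"
    echo "Would remove files older than $days days from $target"
}
```

Calling `describe_cleanup /var/tmp` uses the default, while `RETENTION_DAYS=7 describe_cleanup /var/tmp` overrides it for a single run.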

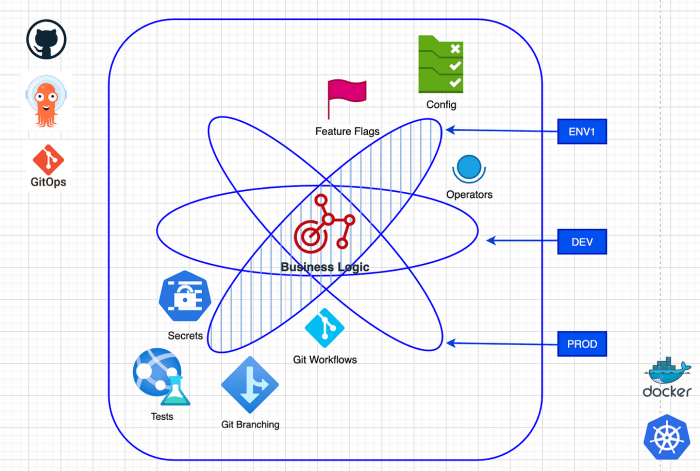

Implementing Factor XII Principles: Task Isolation

In the realm of one-off admin tasks, implementing the principles of Factor XII – ensuring secure, isolated, and controlled execution – is paramount. This section focuses on task isolation, a crucial element in preventing unintended consequences and maintaining system stability. By isolating these tasks, we minimize the potential for them to interfere with other critical processes, thereby safeguarding the integrity of the system.

Task Isolation: Importance

Task isolation is crucial to the safe and efficient execution of one-off admin tasks. Isolating tasks prevents them from impacting the main system, ensuring stability and security.

Methods for Isolating One-Off Tasks

Several methods can be employed to achieve task isolation, each with its own strengths and weaknesses. Selecting the appropriate method depends on the specific task, the system environment, and the desired level of isolation.

- User Accounts with Limited Privileges: Running one-off tasks under dedicated user accounts with restricted privileges is a fundamental approach. These accounts should have only the minimum necessary permissions to perform the specific task, preventing them from accessing or modifying sensitive system resources. For example, if a task involves modifying a specific configuration file, the user account should only have read and write access to that file and the directories containing it.

- Chroot Environments: A chroot (change root) environment provides a form of isolation by changing the apparent root directory for a process. This limits the process’s access to the file system, preventing it from interacting with files outside of the chroot environment. This is a useful technique for tasks that involve file system manipulation. Consider a task that needs to process a log file; the task can be chrooted into a directory containing only the log file and its necessary dependencies.

- Virtual Machines (VMs): VMs offer a robust form of isolation by creating a completely independent operating system instance. Each one-off task can be executed within its own VM, providing a high degree of separation from the host system. This method is particularly useful for tasks that require a specific operating system or software configuration that differs from the main system. For instance, a task requiring a specific version of Python can be run in a VM dedicated to that version.

- Namespaces: Linux namespaces provide a lightweight form of isolation, allowing a process to have its own isolated view of system resources such as the file system, network, and process IDs. This approach is more efficient than VMs but offers a lower level of isolation. For example, a task that needs to listen on a specific network port can be run in a network namespace, preventing it from interfering with the host system’s network configuration.

Containerization for Task Isolation

Containerization, a modern and increasingly popular approach, builds on kernel isolation features such as namespaces and packages them in a convenient, portable form. It is lighter than a VM while still giving each task its own view of the file system, network, and processes. Containers package an application and its dependencies into a self-contained unit, ensuring consistent execution across different environments.

- Docker: Docker is a leading containerization platform. It enables the creation and management of containers, allowing one-off tasks to run in isolated environments. Each container encapsulates the task’s dependencies, such as libraries and configurations. This ensures that the task operates consistently regardless of the underlying system. Docker simplifies deployment and management through its image-based approach.

- Container Orchestration: While Docker provides the means to create containers, container orchestration tools such as Kubernetes can manage and scale them. Kubernetes can automatically schedule and manage the execution of one-off tasks within containers, providing robust control and automation. Its Job resource, for instance, runs a container to completion and can retry it on failure, while for long-running services Kubernetes can restart failed containers or scale their number based on demand.

- Benefits of Containerization: Containerization offers several benefits for isolating one-off admin tasks. Containers are lightweight, meaning they consume fewer resources than VMs. They are also portable, allowing tasks to be easily deployed across different environments. Containerization promotes consistency, ensuring that the task behaves predictably regardless of the underlying infrastructure.
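To make the Docker approach concrete, here is a minimal sketch of a wrapper that runs a one-off command in a throwaway container. It assumes the Docker CLI is installed; the function name `run_admin_task`, the `DRY_RUN` variable, and the image name in the usage note are hypothetical. The `--network none` flag suits tasks that need no network access and would have to be dropped for tasks such as database migrations.

```bash
#!/bin/bash
# Sketch only: run a one-off command in a container that is removed on exit
# (--rm), has a read-only root file system (--read-only), and has no network
# access (--network none). With DRY_RUN=1 the command is printed instead of
# executed, which is useful for review before a real run.
run_admin_task() {
    local image="$1"
    shift
    # Rebuild the positional parameters as the full docker command line.
    set -- docker run --rm --read-only --network none "$image" "$@"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

For example, `DRY_RUN=1 run_admin_task myapp:1.4.2 python manage.py migrate` prints the command that would be run. Using the same image as the application keeps the admin task on the same code and dependencies as the regular processes.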

Implementing Factor XII Principles: Auditing and Logging

Comprehensive auditing and logging are critical components of the Factor XII framework, ensuring accountability, security, and the ability to troubleshoot issues effectively. By meticulously tracking every one-off admin task, organizations can maintain a robust security posture and meet compliance requirements. This approach enables detailed analysis of actions taken, facilitating incident response and preventing unauthorized activity.

Auditing and Logging for One-Off Task Execution

The foundation of effective Factor XII implementation rests on a well-defined auditing and logging strategy. This strategy should capture all relevant information about each one-off task execution, from the initiation of the task to its successful (or unsuccessful) completion. Detailed logs provide a historical record, enabling administrators to understand the context of any changes made and to trace back any potential security breaches or errors.

This is vital for both proactive security measures and reactive incident response.

Designing a Logging Strategy for One-Off Tasks

A robust logging strategy must encompass several key aspects to ensure comprehensive coverage. This includes defining the level of detail to be captured, the storage location for logs, and the mechanisms for log rotation and retention. The choice of logging tools and technologies should align with the organization’s existing infrastructure and security policies. Regular review and analysis of logs are also essential to identify anomalies, suspicious activity, and potential security threats.

This proactive approach allows for timely intervention and mitigation of risks.

Information to Include in Audit Logs

Audit logs must contain specific details about each one-off task to be useful for analysis and investigation. The following information should be included:

- Task Identifier: A unique identifier assigned to each task, allowing for easy tracking and correlation with other logs and systems. This could be a generated UUID or a sequential task ID.

- Timestamp: The exact date and time when the task was initiated, executed, and completed (or failed). This is crucial for chronological analysis and identifying the sequence of events.

- User/Account Information: The identity of the user or account that initiated the task. This should include the username, account ID, and any associated roles or permissions.

- Task Description: A clear and concise description of the task being performed. This should specify the intended action, such as “Create user account,” “Modify database entry,” or “Restart service.”

- Command/Script Executed: The exact command or script that was executed, including all parameters and arguments. This provides a precise record of the actions taken.

- Target System/Resource: The specific system, server, database, or other resource that the task was performed on. This helps pinpoint the scope of the action.

- Input Parameters: Any input parameters provided to the task, such as user names, IP addresses, or configuration settings.

- Output/Results: The output generated by the task, including any error messages, success codes, or other relevant information. This provides insight into the outcome of the execution.

- Status: The status of the task (e.g., “success,” “failure,” “pending,” “in progress”).

- Source IP Address: The IP address from which the task was initiated. This is important for tracing the origin of the action.

- Log Severity Level: The level of importance or severity associated with the log entry (e.g., “info,” “warning,” “error,” “critical”). This helps prioritize log analysis.

- Contextual Information: Any additional relevant information, such as the operating system version, application version, or any related events.
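Several of the fields listed above can be captured with a small wrapper around the task itself. The sketch below writes one `key=value` record when a task starts and another when it finishes; the function names, the field names, and the `AUDIT_LOG` variable with its default path are assumptions made for illustration. Values are not escaped, so a production setup would more likely emit structured output to a central log service.

```bash
#!/bin/bash
# Sketch only: audit_log, run_audited and AUDIT_LOG are hypothetical names.

# Append one audit record: timestamp (UTC), task id, user, host, status,
# and the command with its arguments.
audit_log() {
    local task_id="$1" status="$2"
    shift 2
    printf 'ts=%s task_id=%s user=%s host=%s status=%s cmd="%s"\n' \
        "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$task_id" "$(id -un)" \
        "$(uname -n)" "$status" "$*" >> "${AUDIT_LOG:-/tmp/admin-tasks.log}"
}

# Run a command, logging its start and its outcome, and pass its exit
# code back to the caller.
run_audited() {
    local task_id="$1"
    shift
    audit_log "$task_id" started "$@"
    local rc=0
    "$@" || rc=$?
    if [ "$rc" -eq 0 ]; then
        audit_log "$task_id" success "$@"
    else
        audit_log "$task_id" "failure(rc=$rc)" "$@"
    fi
    return "$rc"
}
```

A task would then be launched as `run_audited <task-id> <command> [arguments...]`, leaving a start record and an outcome record for each run.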

Task Scheduling and Triggering Mechanisms

Effectively triggering and scheduling one-off admin tasks is crucial for automating system maintenance and ensuring timely execution. This section explores various methods for initiating these tasks, providing examples and comparisons to facilitate informed decision-making when implementing Factor XII principles. Understanding the different approaches allows administrators to optimize task execution based on specific requirements and system environments.

Triggering One-Off Tasks

Several methods can trigger one-off admin tasks, each with its advantages and disadvantages. Choosing the appropriate method depends on the nature of the task, the required frequency, and the system’s architecture.

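- Cron Jobs: Cron is a time-based job scheduler in Unix-like operating systems. It allows administrators to schedule commands or scripts to run automatically at specified times or intervals. For a task that should run only once at a future time, the related `at` command queues a single execution.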

- Event-Driven Systems: These systems trigger tasks in response to specific events. For example, a file creation event could trigger a script to process the new file.

- Manual Execution: Tasks can be initiated manually by an administrator, often through a command-line interface or a dedicated management tool. This approach is suitable for infrequent tasks or tasks that require human oversight.

Scheduling Examples

Scheduling one-off tasks requires understanding the capabilities of the chosen triggering mechanism. The following examples illustrate how to schedule tasks using different methods.

- Cron Job Example: To schedule a script named `backup_database.sh` to run every day at 2:00 AM, the crontab entry would be:

```
0 2 * * * /path/to/backup_database.sh
```

This entry specifies the minute (0), hour (2), day of the month (*), month (*), and day of the week (*).

- Event-Driven System Example: Using a file system monitoring tool like `inotifywait` (Linux), a script could be triggered whenever a new file is created in a specific directory. The command might look like:

```bash
inotifywait -m -e create --format '%w%f' /path/to/directory |
while IFS= read -r file; do
  /path/to/process_file.sh "$file"
done
```

This command monitors the specified directory for file creation events and then executes the `process_file.sh` script for each newly created file.

- Manual Execution Example: A task to update user passwords could be initiated manually by running a script from the command line:

```bash
/path/to/update_passwords.sh
```

This approach gives the administrator direct control over when the task is executed.

Comparison of Scheduling Methods

The table below compares the different scheduling methods based on several key factors.

| Method | Triggering Mechanism | Pros | Cons |
|---|---|---|---|
| Cron Jobs | Time-based scheduler | Simple to configure, widely supported, reliable for scheduled tasks. | Not suitable for real-time or event-driven tasks; can be less flexible for complex scheduling. |
| Event-Driven Systems | Event triggers (e.g., file creation, database changes) | Reacts immediately to events, supports real-time processing, efficient for event-based workflows. | More complex to set up than cron jobs; requires appropriate monitoring tools and event handlers. |
| Manual Execution | Administrator command | Provides direct control, suitable for infrequent tasks, allows human oversight. | Requires manual intervention, prone to human error, less suitable for automated processes. |
| Orchestration Tools | Workflow-based, event-driven, or scheduled | Complex workflow support, automated retries and error handling, and centralized management of tasks. | Requires an orchestration tool (e.g., Apache Airflow, Argo Workflows) and adds more overhead. |

Error Handling and Reporting

Robust error handling is paramount in the context of one-off admin tasks. These tasks, often executed with elevated privileges, can have significant consequences if they fail, potentially leading to data corruption, service outages, or security breaches. Implementing comprehensive error handling ensures that failures are detected, addressed, and reported effectively, minimizing the impact of issues and facilitating efficient troubleshooting.

Importance of Robust Error Handling

Error handling is crucial for the reliability and security of one-off admin tasks. Without it, unexpected issues can go unnoticed, leading to cascading failures and potential data loss. Effective error handling provides several key benefits:

- Early Detection: Error handling mechanisms, such as exception handling and validation checks, allow for the early identification of problems. This prevents minor issues from escalating into major failures.

- Reduced Downtime: By quickly identifying and addressing errors, downtime can be minimized. This is particularly important for tasks that support critical infrastructure or services.

- Improved Data Integrity: Error handling helps prevent data corruption by catching errors before they can compromise data integrity.

- Enhanced Security: Error handling can prevent vulnerabilities that could be exploited by malicious actors. For instance, by validating user input, one can mitigate the risk of injection attacks.

- Simplified Troubleshooting: Comprehensive error logs and reports provide valuable information for diagnosing and resolving issues.

Error-Handling Techniques

Several techniques can be employed to implement robust error handling in one-off task scripts. These techniques, often used in combination, ensure that errors are effectively detected, managed, and reported.

- Logging Errors: Logging is the cornerstone of error handling. All errors, along with relevant context (timestamp, task name, user, input parameters), should be logged to a persistent storage location. Log files provide a historical record of issues, enabling analysis and trend identification.

- Sending Notifications: Immediate notification of critical errors is essential. This can be achieved through email, SMS, or other notification systems. Notifications should include details about the error and the affected task, enabling timely intervention.

- Retrying Failed Tasks: Transient errors, such as temporary network outages, can sometimes be resolved by retrying the task. Implement retry mechanisms with exponential backoff to avoid overwhelming resources.

- Exception Handling: Use exception handling within your scripts to gracefully handle unexpected errors. This involves using `try-except` blocks to catch specific exceptions and handle them appropriately.

- Input Validation: Validate all input parameters to prevent unexpected behavior. This helps to prevent errors caused by incorrect data or malicious input.

- Resource Management: Ensure that resources, such as files and database connections, are properly released to avoid resource leaks that could lead to errors.
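The retry technique from the list above can be sketched as follows. This is an illustrative helper under stated assumptions, not a prescribed implementation: the function name, its parameters, and the choice of `ConnectionError` and `TimeoutError` as the retryable errors are all hypothetical defaults.

```python
import random
import time


def retry_with_backoff(func, max_attempts=4, base_delay=1.0, max_delay=30.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a retryable error wait base_delay * 2**n (capped), then retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on as exc:
            if attempt == max_attempts:
                raise  # Out of attempts: let the caller log and report the failure.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Add up to 10% jitter so many tasks do not retry at the same instant.
            delay += random.uniform(0, delay * 0.1)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)
```

Only errors listed in `retry_on` are retried; anything else propagates immediately, which keeps permanent failures (for example, invalid input) from being retried pointlessly. The `sleep` parameter exists so the waiting can be replaced in tests.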

Example of Python Code for Error Logging:

```python
import logging

# Configure logging: write errors to a file with timestamp, level, and message
logging.basicConfig(
    filename='admin_task.log',
    level=logging.ERROR,
    format='%(asctime)s - %(levelname)s - %(message)s',
)


def my_admin_task(parameter):
    try:
        # Simulate a task that might fail
        if parameter == "fail":
            raise ValueError("Simulated failure!")
        print(f"Task executed successfully with parameter: {parameter}")
    except ValueError as e:
        # Expected failure: record the message at ERROR level
        logging.error(f"Task failed: {e}")
    except Exception:
        # Unexpected failure: record the message plus the full traceback
        logging.exception("An unexpected error occurred")
```

This example demonstrates how to log errors and exceptions with timestamps, levels, and messages, providing context for troubleshooting. The use of `logging.exception()` captures the full traceback, which is particularly useful for debugging.

Implementing a Basic Error Reporting System

A basic error reporting system should provide a centralized location for viewing and analyzing errors from one-off tasks. This system can be as simple as a dedicated log file or a more sophisticated system with email alerts and dashboard visualizations.

The core components of an error reporting system include:

- Centralized Log Storage: Aggregate logs from all task scripts into a single location. This could be a file server, a database, or a dedicated logging service.

- Error Parsing: Implement scripts or tools to parse the log files and extract relevant information, such as error messages, timestamps, and task names.

- Alerting: Configure alerts to notify administrators of critical errors. This can be done through email, SMS, or other communication channels.

- Reporting and Visualization: Provide reports and visualizations to help identify trends and patterns in errors. This could include dashboards that display the frequency of errors, the tasks that are failing, and the types of errors that are occurring.
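The error-parsing component can be sketched in a few lines. This example assumes each log entry follows a `timestamp - LEVEL - message` layout with " - " as the separator; that layout and the function name are assumptions made for illustration, so the split would need adjusting for any other format.

```python
from collections import Counter


def summarize_errors(lines):
    """Count ERROR/CRITICAL entries per message from 'time - LEVEL - message' lines."""
    counts = Counter()
    for line in lines:
        parts = line.rstrip("\n").split(" - ", 2)
        if len(parts) != 3:
            continue  # Skip traceback lines and anything not in the expected format.
        _timestamp, level, message = parts
        if level in ("ERROR", "CRITICAL"):
            counts[message] += 1
    return counts
```

Passing an open log file (for example, `summarize_errors(open("admin_task.log"))`) yields a counter whose `most_common()` method lists the most frequent failures first, which is a reasonable starting point for a simple report.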

Example of Basic Error Reporting using Python and Email:

```python
import logging
import smtplib
from email.mime.text import MIMEText

# Configure logging (same as previous example)
logging.basicConfig(
    filename='admin_task.log',
    level=logging.ERROR,
    format='%(asctime)s - %(levelname)s - %(message)s',
)


def send_email(subject, body):
    sender_email = "sender@example.com"   # Placeholder: replace with your email
    receiver_email = "admin@example.com"  # Placeholder: replace with admin email
    password = "your_password"            # Placeholder: replace with your password

    message = MIMEText(body)
    message['Subject'] = subject
    message['From'] = sender_email
    message['To'] = receiver_email

    try:
        with smtplib.SMTP_SSL("smtp.gmail.com", 465) as server:
            server.login(sender_email, password)
            server.sendmail(sender_email, receiver_email, message.as_string())
        print("Email sent successfully")
    except Exception as e:
        # A failed notification should not crash the task itself
        print(f"Error sending email: {e}")


def my_admin_task(parameter):
    try:
        if parameter == "fail":
            raise ValueError("Simulated failure!")
        print(f"Task executed successfully with parameter: {parameter}")
    except ValueError as e:
        logging.error(f"Task failed: {e}")
        send_email("Admin Task Failed", f"The admin task failed with the following error: {e}")
    except Exception as e:
        logging.exception("An unexpected error occurred")
        send_email("Admin Task Unexpected Error", f"An unexpected error occurred: {e}")
```

This example extends the previous logging example to include email notifications.

When an error occurs, an email is sent to an administrator, providing immediate awareness of the issue. Remember to configure your email settings correctly.

Automation and Orchestration of One-Off Tasks

Automating and orchestrating one-off admin tasks significantly enhances efficiency, reduces errors, and improves overall system stability. This section delves into the use of automation tools and methodologies to streamline the execution of these critical tasks.

Role of Automation Tools in Managing One-Off Admin Tasks

Automation tools are essential for managing one-off admin tasks by providing a framework for consistent, repeatable, and scalable operations. These tools enable administrators to define tasks as code, ensuring that the execution process is predictable and auditable.

- Ansible: Ansible is a popular open-source automation tool that uses a simple, agentless architecture. It uses SSH to connect to managed nodes and executes tasks defined in YAML playbooks. Its ease of use and extensive module library make it suitable for a wide range of one-off tasks, from simple configuration changes to complex deployments. For example, an Ansible playbook can be created to install a specific software package on multiple servers simultaneously.

- Puppet: Puppet is another powerful automation tool, primarily focused on configuration management. It uses a declarative approach, where the desired state of the system is defined, and Puppet ensures that the system conforms to that state. While Puppet is more complex than Ansible, it excels at managing complex infrastructure configurations and can be used for one-off tasks by defining them within Puppet manifests.

- Chef: Chef is a configuration management tool that uses Ruby-based DSLs (Domain-Specific Languages) to define system configurations. Chef is well-suited for managing complex environments and supports a wide range of platforms. Chef can be used to automate one-off tasks by creating cookbooks and recipes that define the necessary actions.

- Other Tools: Other automation tools, such as Terraform (for infrastructure as code), SaltStack, and PowerShell (for Windows environments), can also be employed depending on the specific needs and the existing infrastructure.

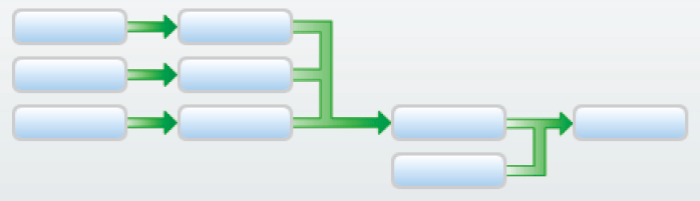

Methods for Orchestrating the Execution of Multiple One-Off Tasks

Orchestrating the execution of multiple one-off tasks involves coordinating the sequence and dependencies of these tasks to achieve a desired outcome. Several methods can be employed to achieve effective orchestration.

- Task Sequencing: Defining the order in which tasks should be executed. This ensures that tasks are completed in the correct order, especially when there are dependencies between them. For example, a task to update a database schema should be executed before a task that uses the updated schema.

- Task Parallelism: Executing multiple tasks concurrently to reduce the overall execution time. This is particularly useful when tasks do not have dependencies on each other. For instance, updating multiple servers with the same security patches can be done in parallel.

- Dependency Management: Identifying and managing dependencies between tasks. This ensures that a task only starts after its dependent tasks have been successfully completed. For example, a task to deploy an application should only start after the underlying infrastructure (e.g., database servers) has been provisioned.

- Workflow Engines: Using workflow engines to define and manage complex task sequences. These engines provide features such as conditional execution, error handling, and logging. Tools like Jenkins, Rundeck, and Airflow can be used to orchestrate one-off tasks.
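Sequencing and dependency management can be illustrated without a full workflow engine. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to run tasks only after the tasks they depend on; the function name and the task names in the usage note are hypothetical.

```python
from graphlib import TopologicalSorter  # Available in Python 3.9+


def run_in_dependency_order(tasks, dependencies):
    """Run each callable in `tasks` after all of its dependencies have succeeded.

    tasks: dict mapping task name -> zero-argument callable
    dependencies: dict mapping task name -> set of task names it depends on
    Returns the list of task names in the order they ran.
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    order = []
    for name in TopologicalSorter(graph).static_order():
        tasks[name]()  # An exception here stops the run before dependents start.
        order.append(name)
    return order
```

For example, with tasks named `provision_db`, `migrate_schema`, and `deploy_app`, where each depends on the previous one, the function runs them in exactly that order. A circular dependency raises `graphlib.CycleError` before any task runs. For parallel execution, `TopologicalSorter` also offers `prepare()`, `get_ready()`, and `done()`, which hand out every task whose dependencies are already complete.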

Design of a Basic Automation Workflow for a Common System Administration Scenario

This example demonstrates a basic automation workflow using Ansible for a common system administration scenario: updating a software package on multiple servers.

Scenario: Updating the Apache web server on a group of Linux servers.

Workflow:

1. Inventory: Define the list of servers in an Ansible inventory file (e.g., `hosts`).

2. Playbook Creation: Create an Ansible playbook (e.g., `update_apache.yml`) that includes the following tasks:

   - Connect to the target servers using SSH.

   - Use the `apt` or `yum` module (depending on the Linux distribution) to update the Apache package.

   - Restart the Apache service to apply the changes.

   - (Optional) Verify the update by checking the Apache version.

3. Playbook Execution: Run the Ansible playbook against the defined inventory:

   `ansible-playbook -i hosts update_apache.yml`

4. Reporting and Logging: Ansible provides detailed output during execution, including the status of each task on each server. This information can be used for reporting and troubleshooting.
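When the playbook run itself is launched from another script, a thin wrapper can make the invocation repeatable and expose its exit code. The following is only a sketch: the function names are hypothetical, and it assumes `ansible-playbook` is installed and on the `PATH`. The `--check` (dry run) and `--limit` (restrict to a subset of hosts) options are standard `ansible-playbook` flags.

```python
import subprocess


def build_playbook_command(inventory, playbook, check=False, limit=None):
    """Build the ansible-playbook argument list; check=True adds a dry run (--check)."""
    cmd = ["ansible-playbook", "-i", inventory, playbook]
    if check:
        cmd.append("--check")
    if limit:
        cmd.extend(["--limit", limit])
    return cmd


def run_playbook(inventory, playbook, **options):
    """Run the playbook and return its exit code (0 indicates success)."""
    result = subprocess.run(build_playbook_command(inventory, playbook, **options))
    return result.returncode
```

Calling `run_playbook("hosts", "update_apache.yml", check=True)` first gives a preview of the changes before the real run, which suits one-off tasks that touch many servers.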

Real-World Scenarios and Case Studies

One-off admin tasks are essential for efficient system administration. They allow for the execution of specific, isolated processes without requiring continuous operation. Understanding their application in real-world scenarios is key to appreciating their value and effectiveness. This section will delve into common use cases and demonstrate successful implementations through case studies.

Common Use Cases for One-Off Admin Tasks

One-off admin tasks are incredibly versatile, serving a wide range of purposes. Here are some common applications, each providing distinct benefits to system administration:

- Data Migration: Moving data between different systems or databases is a frequent requirement. One-off tasks facilitate this process, ensuring data integrity and minimizing downtime. For example, migrating customer data from an old CRM to a new one.

- System Updates and Patching: Applying updates and patches to software and operating systems often requires specific actions. One-off tasks can be designed to automate these processes, ensuring consistent and reliable deployments across a network.

- Database Maintenance: Database optimization, index rebuilding, and data archiving are critical for database performance. One-off tasks can automate these maintenance activities, freeing up administrators from manual intervention.

- Security Audits and Remediation: Running security scans, identifying vulnerabilities, and applying security fixes are often time-sensitive. One-off tasks can execute these processes, ensuring systems remain secure.

- User Account Management: Creating, modifying, and deleting user accounts are common administrative tasks. One-off scripts can automate these actions, especially during onboarding or offboarding processes.

- Reporting and Log Analysis: Generating reports and analyzing logs often involves processing large amounts of data. One-off tasks can automate these processes, providing valuable insights into system performance and security.

- Disaster Recovery and Backup Operations: Performing backups and restoring data are critical for business continuity. One-off tasks can automate these processes, ensuring data is protected and readily available.

Case Study: Automated User Account Provisioning

This case study details the successful implementation of one-off tasks for automating user account provisioning in a mid-sized organization. The organization faced challenges with manual user account creation, leading to delays, errors, and inconsistencies in access rights.

Problem: Manual user account creation was time-consuming, prone to human error, and lacked a standardized process. This led to delays in onboarding new employees and potential security vulnerabilities due to incorrect access permissions.

Solution: The organization implemented a system using one-off tasks triggered by a new-hire notification from the HR system. When a new hire record was created, a trigger initiated a PowerShell script (one-off task) to:

- Create the user account in Active Directory: The script automatically generated a unique username, assigned a temporary password, and populated essential user attributes.

- Assign group memberships: Based on the new hire’s role, the script automatically assigned the user to the appropriate security groups, granting them the necessary access to network resources, applications, and shared drives.

- Create a mailbox in Exchange Online (if applicable): The script created the user’s mailbox and configured essential settings, such as storage quotas and email forwarding.

- Send a welcome email: The script sent a welcome email to the new user, providing their username, temporary password, and instructions on how to reset their password.
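The case study's script was written in PowerShell and is not reproduced here. Purely as an illustration of the first two steps, the Python sketch below shows one way unique usernames and role-based group assignments might be derived before anything is written to a directory. The naming rule, the role names, and the group names are all hypothetical.

```python
import re

# Hypothetical role-to-group mapping; a real deployment would load this from configuration.
ROLE_GROUPS = {
    "engineer": ["staff", "vpn-users", "source-control"],
    "accountant": ["staff", "finance-share"],
}


def make_username(first, last, existing):
    """Return first initial + last name, adding a numeric suffix if already taken."""
    base = re.sub(r"[^a-z]", "", (first[:1] + last).lower())
    candidate, suffix = base, 1
    while candidate in existing:
        suffix += 1
        candidate = f"{base}{suffix}"
    return candidate


def plan_account(first, last, role, existing):
    """Build the provisioning plan for one new hire without touching any directory."""
    return {
        "username": make_username(first, last, existing),
        "groups": ROLE_GROUPS.get(role, ["staff"]),
    }
```

Separating the planning step from the step that applies changes lets the plan be logged and reviewed, which supports the auditing goals discussed earlier.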

Task Workflow Visualization:

The workflow starts with the HR system generating a new-hire notification. The notification then triggers a one-off task (PowerShell script) which performs the following steps:

| Step | Description |
|---|---|
| 1. HR Notification | A new hire record is created in the HR system. |
| 2. Trigger | A trigger (e.g., a scheduled task or event handler) detects the new-hire notification. |
| 3. PowerShell Script Execution | The PowerShell script is executed as a one-off task. |
| 4. User Account Creation | The script creates the user account in Active Directory. |
| 5. Group Membership Assignment | The script assigns the user to the appropriate security groups. |
| 6. Mailbox Creation (if applicable) | The script creates the user’s mailbox in Exchange Online. |
| 7. Welcome Email | The script sends a welcome email to the new user. |
| 8. Completion | The one-off task completes successfully. |

Results: The implementation of one-off tasks significantly improved the user account provisioning process. Account creation time was reduced from hours to minutes. Errors were minimized, and access permissions were consistently applied. The organization also benefited from improved security and a streamlined onboarding experience for new employees.

Closure

In conclusion, mastering how to run admin processes as one-off tasks (Factor XII) provides a powerful toolkit for system administrators. By embracing the principles of task isolation, security best practices, and comprehensive auditing, you can significantly enhance the efficiency, security, and maintainability of your systems. This knowledge equips you to handle specific administrative needs effectively and ensure your systems operate smoothly.

FAQs

What is a one-off task?

A one-off task is a script or command designed to execute a specific action once and then terminate, unlike persistent processes that run continuously or scheduled tasks that run at regular intervals.

What are the benefits of using one-off tasks?

Benefits include increased security by limiting the scope of execution, improved resource management by avoiding unnecessary persistent processes, and simplified troubleshooting by focusing on specific actions.

How does Factor XII relate to one-off tasks?

Factor XII is the twelfth principle of the Twelve-Factor App methodology: run admin and management tasks as one-off processes. This article builds on that principle, emphasizing security, isolation, auditing, and automation for optimal performance and reliability.

What scripting languages are commonly used for one-off tasks?

Common languages include Bash, PowerShell, Python, and Perl, chosen based on system compatibility and task complexity.

How can I ensure the security of one-off tasks?

Implement security best practices such as input validation, least privilege access, and thorough auditing to mitigate risks.