The ephemeral nature of serverless computing, while offering scalability and cost-effectiveness, introduces a challenge: cold starts. These initial delays, caused by the need to spin up new function instances, can significantly impact application responsiveness and user experience. Understanding and mitigating cold start latency is crucial for maximizing the benefits of serverless architectures and ensuring optimal performance. This exploration delves into the intricacies of cold starts, providing a scientific analysis of their causes and presenting actionable strategies for their reduction.

This document systematically dissects the multifaceted problem of serverless cold starts, examining performance bottlenecks, code optimization techniques, and infrastructure considerations. It explores the impact of runtime environments, function packaging strategies, and advanced features like provisioned concurrency. Furthermore, it provides practical insights into monitoring, alerting, and architectural design patterns that can be leveraged to build high-performance, serverless applications. The aim is to equip developers with the knowledge and tools necessary to minimize cold start times and create a seamless user experience.

Understanding Serverless Cold Starts

Serverless computing, while offering significant advantages in scalability and cost-effectiveness, introduces a performance characteristic known as cold starts. These are the initial delays experienced when a serverless function is invoked after a period of inactivity. Understanding the nature of cold starts, their impact, and the underlying causes is crucial for optimizing serverless application performance.

Defining Serverless Cold Starts and Performance Impact

The term “cold start” refers to the latency incurred when a serverless function is executed for the first time, or after a period of being idle. During this period, the serverless platform must provision the necessary resources, such as the execution environment (container or virtual machine), load the function’s code, and initialize the runtime. This process can take a significant amount of time, ranging from milliseconds to several seconds, depending on the function’s complexity, the platform used, and the chosen configuration.

The resulting latency directly impacts the user experience, particularly for applications that require rapid response times.
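To make the definition concrete, the sketch below assumes a Python function with a Lambda-style `handler(event, context)` signature; the handler name and the returned fields are illustrative and not part of any platform's API. Module-level code runs once when a new execution environment is initialized, so a module-level flag can distinguish a cold invocation from a warm one.

```python
import time

# Module-level code runs once per execution environment, i.e. during a cold start.
_INIT_STARTED = time.perf_counter()
_is_cold = True  # flipped to False by the first invocation


def handler(event, context):
    """Hypothetical handler that reports whether this invocation was cold."""
    global _is_cold
    was_cold = _is_cold
    _is_cold = False
    return {
        "cold_start": was_cold,
        # Time elapsed since this environment started initializing.
        "seconds_since_init": round(time.perf_counter() - _INIT_STARTED, 3),
    }
```

Calling `handler({}, None)` twice in the same process reports `cold_start` as true only for the first call, which mirrors how a reused (warm) environment skips initialization.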

Scenarios with Notable Cold Start Visibility

Cold starts are most noticeable in specific application scenarios. These are areas where response time is critical or where the frequency of invocations is low.

- Interactive Web Applications: Web applications where users expect immediate responses, such as search functionalities, e-commerce platforms, or real-time dashboards, are highly susceptible to the negative effects of cold starts. A slow initial response can lead to user frustration and abandonment.

- API Gateways: API gateways, acting as the entry point for various backend services, often experience cold starts, especially if the underlying functions are infrequently accessed. This can delay API requests and degrade overall system performance.

- Mobile Applications: Mobile applications, especially those that use serverless backends for specific functionalities, can experience cold starts when a user initiates an action that triggers a serverless function. This can lead to a lag in the user interface.

- Event-Driven Architectures: Applications that respond to events, such as file uploads or database updates, may experience cold starts if the function processing the event is idle. This can delay the processing of the event and affect the application’s ability to react in a timely manner.

Root Causes of Cold Start Latency

The primary causes of cold start latency are related to the underlying mechanisms of serverless platforms. The specific details can vary slightly depending on the provider (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), but the general principles remain consistent.

- Environment Provisioning: When a function is invoked, the serverless platform must allocate an execution environment. This may involve starting a container, virtual machine, or other isolated runtime. The time required to provision this environment is a significant contributor to cold start latency. This involves tasks such as selecting the appropriate hardware resources and configuring the operating system.

- Code Loading and Initialization: The function’s code must be loaded into the allocated environment. This includes downloading the code package, unpacking it, and loading any dependencies. The size of the code package and the number of dependencies directly affect the loading time. Once the code is loaded, the runtime environment must be initialized. This can involve initializing the language runtime (e.g., Node.js, Python, Java), and loading any necessary libraries or frameworks.

- Container Startup (if applicable): Some serverless platforms use containers to isolate function executions. Starting a container involves a series of steps, including pulling the container image, starting the container process, and configuring the container’s network and storage. The time required to start the container is a key factor in cold start latency.

- Network Latency: Network calls made while the function initializes (e.g., connecting to databases or caches) add their round-trip time (RTT) to the cold start. This is especially true if the function and the dependent services are located in different regions or availability zones.

- Resource Contention: If multiple functions or users are competing for the same resources (e.g., CPU, memory), this can lead to increased latency. The serverless platform may need to throttle resource allocation, leading to longer cold start times.

Identifying Performance Bottlenecks

Pinpointing the sources of latency in serverless cold starts is crucial for optimization. Understanding where the delays originate allows for targeted improvements, leading to faster function execution and a better user experience. This section focuses on common bottlenecks and the methods used to identify them.

Common Cold Start Bottlenecks

Several factors contribute to the extended duration of cold starts. Addressing these bottlenecks is key to minimizing the impact of serverless function initialization.

- Code Size and Dependencies: The size of the function’s code package and the number and size of its dependencies significantly impact cold start times. Larger packages require more time to download, install, and load. For example, a function with a large number of npm packages will experience a longer cold start than one with minimal dependencies. This is due to the time required to unpack and load all the modules into the function’s execution environment.

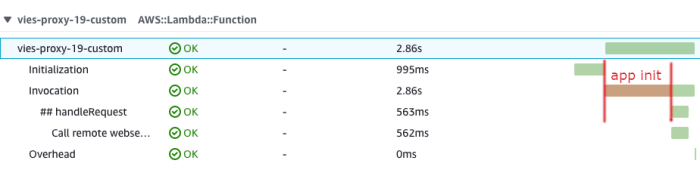

- Initialization Code: The initialization code within the function itself, including database connections, external API calls, and other setup operations, contributes to the cold start duration. If this initialization code is complex or involves network latency, it can significantly increase the time it takes for the function to become ready to serve requests. For instance, a function that connects to a database during initialization will experience a delay based on the database connection time.

- Container Provisioning: The time it takes for the serverless platform to provision a container or execution environment for the function also adds to the cold start latency. This includes tasks like selecting an available instance, configuring the environment, and starting the execution runtime. The provisioning time can vary depending on the platform’s infrastructure and current load.

- Runtime Environment: The specific runtime environment chosen (e.g., Node.js, Python, Java) influences cold start times. Different runtimes have varying initialization overheads. For example, Java often has a higher startup cost due to the JVM’s initialization process, while Node.js usually starts faster because its runtime has less to load and initialize before the handler can run.

Profiling Serverless Functions

Profiling is essential to identify specific performance issues within a serverless function. Various tools and techniques can be used to gather detailed information about function execution.

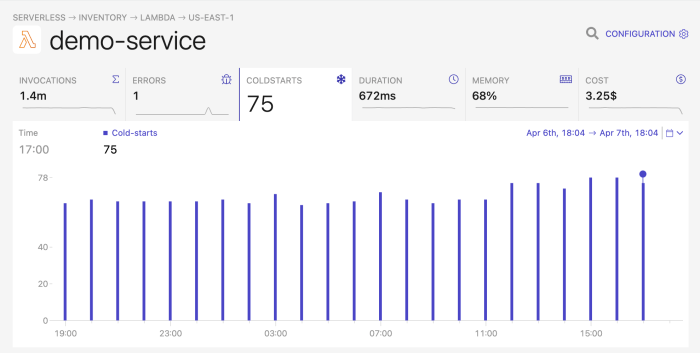

- Cloud Provider Monitoring Tools: Most cloud providers offer built-in monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring). These tools provide metrics such as invocation duration, memory usage, and cold start times. They often offer features like tracing and logging to pinpoint specific bottlenecks. For example, AWS X-Ray can be used to trace requests and visualize the time spent in different parts of the function, including initialization.

- Custom Logging and Metrics: Implementing custom logging and metrics within the function code allows developers to track the execution time of specific code blocks, such as database connections or external API calls. This provides granular insights into performance. For example, developers can add timestamps at the beginning and end of a database connection attempt to measure the connection time.

- Profiling Tools: Profiling tools, such as those available for specific runtimes (e.g., Node.js profilers, Python profilers), can be used to analyze the function’s code execution at a deeper level. These tools can identify CPU-intensive operations, memory leaks, and other performance issues. For example, using a Node.js profiler can help identify slow code sections within the function logic.
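The custom logging approach described above can be sketched as a small timing helper. This is a minimal illustration, not a specific provider's API: the `timed` helper, the step names, and the `time.sleep` calls standing in for real work are all assumptions.

```python
import json
import time
from contextlib import contextmanager


@contextmanager
def timed(step, sink):
    """Record how long the wrapped block takes, in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        sink[step] = round((time.perf_counter() - start) * 1000, 2)


def handler(event, context):
    timings = {}
    with timed("connect", timings):
        time.sleep(0.01)  # stand-in for opening a database connection
    with timed("query", timings):
        time.sleep(0.005)  # stand-in for the actual work
    # One JSON line per invocation is easy to filter in a log service.
    print(json.dumps({"metric": "step_timings_ms", **timings}))
    return timings
```

Emitting the timings as a single structured log line keeps the measurement cheap and lets the provider's log tooling aggregate it without extra dependencies.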

Dependencies significantly influence cold start duration. The time required to download, unpack, and load them directly affects how soon the function is ready. More dependencies, or larger ones, increase cold start latency, and techniques like function bundling and dependency optimization can mitigate this impact. The effect can be quantified by measuring the cold start time of a function that uses only a few core dependencies against one that relies on a substantial number of third-party libraries.
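One rough way to see which dependencies are expensive to load is to time their first import. The sketch below uses only the standard library; the helper names are hypothetical, and the module list passed in is up to the caller. Results depend on import order, because importing one module may load others as a side effect.

```python
import importlib
import sys
import time


def import_time_ms(module_name):
    """Time a first import of module_name; return None if it was already loaded."""
    if module_name in sys.modules:
        return None  # cached imports cost almost nothing and would mislead
    start = time.perf_counter()
    importlib.import_module(module_name)
    return (time.perf_counter() - start) * 1000


def rank_imports(module_names):
    """Return (name, ms) pairs for fresh imports, slowest first."""
    timings = [(name, import_time_ms(name)) for name in module_names]
    fresh = [(name, ms) for name, ms in timings if ms is not None]
    return sorted(fresh, key=lambda pair: pair[1], reverse=True)
```

For a more detailed breakdown, CPython's `-X importtime` option prints the import time of every module loaded at startup.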

Code Optimization Techniques

Optimizing the code deployed to serverless functions is crucial for minimizing cold start latency. This involves strategies that reduce the time taken for the function to initialize and begin processing requests. Several techniques focus on shrinking package size, delaying initialization of resources, and streamlining the execution path. These optimizations directly contribute to faster cold start times, leading to a more responsive and efficient serverless application.

Reducing Function Package Size

Reducing the size of the deployment package is a fundamental optimization strategy. Smaller packages mean quicker downloads and unzipping during cold starts. This directly impacts the time the function takes to become ready to serve requests. The goal is to include only the necessary code and dependencies.

To achieve this, consider the following:

- Dependency Management: Careful dependency management is essential. Use a package manager (e.g., npm, pip) to explicitly declare and manage dependencies. Avoid including unnecessary libraries or versions. Use dependency bundlers (e.g., webpack, esbuild) to bundle your code and its dependencies into a single, optimized file.

- Code Trimming: Analyze the code to identify and remove unused or dead code. This reduces the overall size of the deployment package. Static analysis tools can help identify unused functions, variables, and imports.

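- Tree Shaking: Tree shaking is a process that removes unused code from the final bundle. It’s particularly effective in JavaScript environments. Modern bundlers perform tree shaking automatically, typically when the code uses ES module syntax (`import`/`export`) that they can analyze statically.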

- Minification: Minification reduces code size by removing whitespace, comments, and shortening variable names. This reduces the file size without affecting functionality.

- Native Code Compilation: For languages like Go or Rust, compiling to a native executable can reduce the package size compared to interpreted languages. This is because the runtime environment is included within the executable itself.

- Layering: Serverless platforms often support layers. Layers allow you to share dependencies across multiple functions. By placing common dependencies in a layer, you avoid duplicating them in each function’s package, thus reducing overall package size.

Lazy Loading and Initialization

Lazy loading and initialization are techniques that defer the loading of resources until they are actually needed. This can significantly reduce cold start times by preventing the function from initializing resources that are not immediately required. Instead of initializing everything at function startup, the code initializes only the necessary components when the function receives its first request or when specific code paths are triggered.

Consider the following aspects:

- Defer Resource Initialization: Avoid initializing database connections, loading large configuration files, or establishing network connections during function initialization if they are not immediately required. Instead, initialize these resources when the function receives its first request or when the specific functionality that requires them is invoked.

- Lazy Load Modules: In languages like JavaScript, import modules only when they are needed. This prevents the module’s code from being loaded and executed during the cold start.

- On-Demand Configuration Loading: Load configuration files only when the function requires the settings. Avoid loading large configuration files at startup if only a small subset of the configuration is needed initially.

- Connection Pooling: For database connections, use connection pooling or keep a connection in a variable that outlives a single invocation. Reusing existing connections instead of establishing new ones for each request means the connection setup cost is paid once per instance rather than on every invocation.

- Example: A function that processes images might not need to load a large image processing library until it receives an image processing request. Lazy loading the library ensures that the library is loaded only when it’s required.

Runtime Environment Configuration

The runtime environment significantly impacts serverless cold start performance. Choosing the right environment involves understanding how the underlying infrastructure provisions resources and how the chosen language and runtime interact with that infrastructure. Efficient configuration minimizes the time taken for the function to initialize and begin processing requests. Factors such as language selection, memory allocation, and containerization strategies all play crucial roles in determining cold start latency.

Different languages and runtimes exhibit varying cold start characteristics due to differences in initialization overhead, library loading times, and the efficiency of their virtual machines or interpreters. Careful consideration of these factors allows for the selection of an environment that aligns with the specific performance requirements of a serverless function.

Impact of Runtime Environment Choices on Cold Start Performance

The choice of runtime environment directly affects cold start latency through several mechanisms. The size of the language runtime, the complexity of its initialization process, and the time required to load dependencies all contribute to the overall cold start duration.

- Language Runtime Size: Languages with larger runtimes, such as Java (with its JVM) and .NET (with its CLR), generally exhibit longer cold start times compared to languages with smaller runtimes, like Go or Node.js. This is because the larger runtime requires more time to load and initialize.

- Initialization Complexity: The complexity of the runtime’s initialization process influences cold start duration. For example, the Java Virtual Machine (JVM) performs extensive initialization tasks, including class loading and just-in-time (JIT) compilation, which contribute to longer cold starts. In contrast, interpreted languages like Python and JavaScript, while still requiring initialization, often have less overhead in this phase.

- Dependency Loading: The number and size of dependencies also affect cold start performance. Functions with numerous or large dependencies, especially those that must be loaded during initialization, experience longer cold start times. This is particularly relevant in languages with complex dependency management systems.

- Containerization: The use of containerization technologies, such as Docker, adds an additional layer of overhead. The container image size and the time required to download and start the container influence cold start latency. Optimization strategies, such as using smaller base images and pre-warming containers, can mitigate this impact.

Comparison of Cold Start Characteristics of Different Programming Languages and Runtimes

Programming languages and their associated runtimes exhibit different cold start characteristics due to variations in their architectures, initialization processes, and dependency management strategies. This comparison provides a general overview of these differences.

- Java: Java, due to the JVM’s overhead, typically exhibits the longest cold start times. The JVM requires significant initialization, including class loading, JIT compilation, and garbage collection setup. However, once initialized, Java functions can often achieve good performance.

- .NET: Similar to Java, .NET functions also experience relatively long cold starts due to the CLR’s initialization process. The .NET runtime needs to load and initialize various components, which contributes to the delay.

- Node.js: Node.js, using the V8 JavaScript engine, generally has faster cold starts than Java or .NET. The V8 engine is relatively lightweight, and the JavaScript runtime is generally quicker to initialize.

- Python: Python’s cold start performance varies depending on the size and complexity of dependencies. However, compared to Java and .NET, Python often has shorter cold starts. The initialization of the Python interpreter is generally faster.

- Go: Go functions often exhibit the fastest cold start times. Go compiles to native machine code, eliminating the need for a virtual machine or interpreter during runtime. This results in significantly reduced initialization overhead.

Memory Allocation Strategies Comparison

Memory allocation strategies play a significant role in serverless function performance, particularly during cold starts. Different approaches to memory allocation impact the time it takes for the function to become ready to process requests. The following table compares three different memory allocation strategies, highlighting their key characteristics.

| Memory Allocation Strategy | Description | Impact on Cold Start | Advantages | Disadvantages |
|---|---|---|---|---|
| Default Allocation (e.g., AWS Lambda Default) | The cloud provider automatically allocates memory based on the function’s configuration. | Generally moderate. The provider manages the allocation, but the function’s dependencies and runtime still influence the start-up time. | Simplicity; minimal configuration required. | Limited control over memory allocation specifics; potential for over-provisioning or under-provisioning, which affects both cold start and runtime performance. |
| Pre-allocation/Memory Reservation (e.g., Container Orchestration with Memory Limits) | Reserving a fixed amount of memory for the function before it starts. This might involve using a container orchestration platform to pre-allocate resources. | Can reduce cold start times because the memory is already available. The function does not need to wait for memory to be provisioned. | Potentially faster cold starts; better control over resource allocation. | Requires more complex infrastructure setup; may lead to wasted resources if the function does not fully utilize the allocated memory. |
| Dynamic Allocation (e.g., Memory Pools or Custom Allocators) | The function dynamically allocates memory as needed during initialization or runtime. This may involve using custom allocators or memory pools within the function’s code. | Can be variable. If the function requires significant memory allocation during initialization, this can increase cold start time. However, efficient allocation strategies can mitigate this. | Flexibility in managing memory usage; can potentially optimize memory allocation based on the function’s needs. | Increased complexity in code; requires careful tuning to avoid performance bottlenecks; can introduce memory management overhead. |

Function Packaging and Deployment Strategies

Optimizing function packaging and deployment is crucial for minimizing serverless cold start latency. Reducing the size of the deployed package directly impacts the time required to download and initialize the function’s runtime environment. Efficient deployment processes, including the use of appropriate tools and strategies, contribute to faster function availability and improved overall performance.

Optimizing Function Package Sizes

Reducing the size of the function package is a key strategy for reducing cold start times. Smaller packages translate to faster downloads and quicker initialization of the function’s execution environment. This can be achieved through several methods, including eliminating unnecessary dependencies and optimizing code size.

- Dependency Management: Careful management of dependencies is critical. Include only the libraries and modules essential for the function’s operation. Use a package manager specific to your chosen language (e.g., npm for Node.js, pip for Python, Maven for Java) to manage dependencies and ensure only required versions are included.

- Code Minimization: Apply code minimization techniques such as minification and tree-shaking. Minification removes unnecessary characters (whitespace, comments) from the code, reducing its size. Tree-shaking identifies and removes unused code, further reducing the package size. Tools like Terser (for JavaScript) and UglifyJS can be employed for minification.

- Leveraging Layers (AWS Lambda): Utilize function layers, particularly in AWS Lambda, to share common dependencies across multiple functions. Layers allow you to package dependencies separately and reuse them, reducing the individual package sizes of each function. This is especially beneficial when multiple functions share the same libraries or runtime environments.

- Removing Unnecessary Files: Ensure that the deployment package does not contain any unnecessary files, such as test files, documentation, or development-related assets. This includes checking the build process to exclude these files from the final deployment artifact.

- Native Compilation (for supported languages): Consider using native compilation for languages like Go or Rust, if supported by your serverless provider. Native compilation produces a single, executable binary, which can result in smaller package sizes and potentially faster startup times compared to interpreted languages.
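As an illustration of excluding unnecessary files from the deployment artifact, the sketch below builds a zip package with the standard library. The exclusion patterns and function names are assumptions for this example and should be adapted to the project's layout; the zip should be written outside the source directory so that it does not include itself.

```python
import fnmatch
import zipfile
from pathlib import Path

# Hypothetical exclusion list; adjust to the project's layout.
EXCLUDE_PATTERNS = ["tests/*", "docs/*", "*.pyc", "__pycache__/*", "*.md"]


def is_excluded(relative_path, patterns=EXCLUDE_PATTERNS):
    """True when the path, or a suffix starting at a directory boundary, matches."""
    parts = relative_path.split("/")
    candidates = ["/".join(parts[i:]) for i in range(len(parts))]
    return any(fnmatch.fnmatch(c, p) for c in candidates for p in patterns)


def build_package(source_dir, zip_path):
    """Zip source_dir into zip_path, skipping excluded files. Returns kept paths."""
    source = Path(source_dir)
    kept = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            if not path.is_file():
                continue
            relative = path.relative_to(source).as_posix()
            if is_excluded(relative):
                continue
            archive.write(path, relative)
            kept.append(relative)
    return kept
```

Returning the list of kept paths makes it easy to review in a build log exactly what ends up in the artifact.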

Analyzing Package Dependencies

Understanding the dependencies within a function package is essential for identifying opportunities for optimization. Tools that analyze package dependencies provide insights into the size and complexity of the function’s dependencies. This analysis can help pinpoint redundant or unnecessary dependencies that contribute to increased cold start latency.

- Dependency Tree Analysis: Utilize tools that generate a dependency tree to visualize the relationships between different packages and their dependencies. This allows for a clear understanding of the package’s complexity and the impact of each dependency. Tools like `npm list` (for Node.js) and `pipdeptree` (for Python) can be used to generate dependency trees.

- Package Size Analysis: Employ tools that provide detailed information about the size of each package and its dependencies. This allows you to identify the largest contributors to the package size and prioritize optimization efforts. Tools like `webpack-bundle-analyzer` (for JavaScript) can visualize the size of each module in a bundle.

- Vulnerability Scanning: Integrate vulnerability scanning tools into the build and deployment pipeline to identify and address potential security vulnerabilities within the dependencies. Addressing these vulnerabilities can sometimes lead to reduced package size by removing outdated or unnecessary dependencies.

- Regular Auditing: Regularly audit the dependencies to ensure they are up-to-date and free from known vulnerabilities. Keeping dependencies updated can sometimes lead to performance improvements and reduced package sizes as newer versions often include optimizations and bug fixes.
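Where a dedicated analyzer is not available, a rough package size analysis can be done with a few lines of standard-library Python. The sketch below ranks the top-level entries of a build directory by size; the function names are hypothetical, and the directory to inspect (for example, the folder that gets zipped for deployment) is an assumption about the build setup.

```python
from pathlib import Path


def directory_size(path):
    """Total size in bytes of all regular files under path."""
    return sum(f.stat().st_size for f in Path(path).rglob("*") if f.is_file())


def largest_entries(package_dir, top=5):
    """Rank the top-level entries of package_dir by size, largest first."""
    sizes = []
    for entry in Path(package_dir).iterdir():
        size = directory_size(entry) if entry.is_dir() else entry.stat().st_size
        sizes.append((entry.name, size))
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top]
```

Running `largest_entries` against the build output before each release gives a quick signal when a new dependency inflates the package.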

Containerization Techniques for Serverless Functions

Containerization offers a powerful approach to packaging and deploying serverless functions, particularly when dealing with complex dependencies or custom runtime environments. Containerization provides a consistent and isolated environment for function execution, potentially leading to improved cold start performance and greater portability.

Containerization encapsulates a function and its dependencies into a single, self-contained unit, known as a container. The container includes the function’s code, runtime environment, and all necessary dependencies. This approach offers several advantages, including:

- Consistency: Ensures consistent execution across different environments, as the function runs within the same container regardless of the underlying infrastructure.

- Isolation: Isolates the function and its dependencies from the host environment, preventing conflicts and ensuring predictable behavior.

- Portability: Enables easy deployment across different serverless platforms and cloud providers.

Example using Docker for containerizing a Python function:

Consider a simple Python function that uses the `requests` library:

```python
# my_function.py
import requests


def handler(event, context):
    response = requests.get("https://www.example.com")
    return {"statusCode": 200, "body": response.text}
```

The following Dockerfile creates a container for this function:

```dockerfile
# Base image: Python 3.9, or another compatible Python runtime
FROM public.ecr.aws/lambda/python:3.9
COPY requirements.txt .
RUN pip3 install -r requirements.txt --target "$LAMBDA_TASK_ROOT"
COPY my_function.py .
CMD ["my_function.handler"]
```

In this Dockerfile:

- The `FROM` instruction specifies the base image, which is a pre-built Lambda runtime environment for Python 3.9.

- The `COPY` instruction copies the `requirements.txt` file (containing the function’s dependencies) and the function’s code (`my_function.py`) into the container.

- The `RUN` instruction installs the dependencies using `pip3`. The `--target "$LAMBDA_TASK_ROOT"` argument specifies the location where the dependencies should be installed within the container, which is the root directory where the function’s code will be executed.

- The `CMD` instruction names the function’s handler in `module.function` form (`my_function.handler`). In the AWS Lambda base images this value is passed to the runtime as the handler to invoke, rather than being run as a shell command.

To build and deploy this function using AWS Lambda, you would:

- Create a `requirements.txt` file: Create a file named `requirements.txt` in the same directory as `my_function.py` and add `requests` to the file:

requests

- Build the Docker image: Build the Docker image using the following command:

docker build -t my-function .

- Deploy the image to AWS Lambda: You would then push the Docker image to a container registry (like Amazon ECR) and deploy it as a Lambda function, or use a tool like the Serverless Framework to automate this process.

By containerizing the function, you ensure that all dependencies are included, the environment is consistent, and deployment becomes more streamlined. Containerization can help with cold start times for functions with complex dependencies, as the runtime environment is pre-built. However, container images are often larger than zip packages and can introduce their own overhead, so it is crucial to measure the performance impact and ensure the benefits outweigh the costs.

Provisioned Concurrency and Warm Instances

Provisioned concurrency represents a proactive strategy to minimize serverless cold start latency by pre-initializing function instances. This approach ensures that function instances are ready to handle incoming requests, thus eliminating the delay associated with instance creation and initialization during cold starts. This section explores the mechanics of provisioned concurrency, its associated trade-offs, and best practices for effective management.

Provisioned Concurrency’s Role in Mitigating Cold Starts

Provisioned concurrency directly addresses the cold start problem by maintaining a pool of pre-initialized function instances. When a request arrives, the serverless platform routes it to one of these warm instances, bypassing the cold start process. This significantly reduces the latency experienced by users. The number of provisioned instances is configurable, allowing developers to tailor the capacity to their expected traffic volume.

A higher number of provisioned instances provides greater responsiveness but also increases costs.

Cost and Performance Trade-offs of Provisioned Concurrency

The utilization of provisioned concurrency introduces a trade-off between cost and performance. While it effectively mitigates cold start latency, it also incurs costs associated with maintaining the warm instances. The cost is proportional to the amount of time the instances are provisioned and the amount of memory allocated to each instance. Conversely, the benefit is a significant reduction in latency, which improves the user experience and can be critical for performance-sensitive applications.

The optimal configuration balances the cost of provisioned concurrency with the desired level of performance. For example, an application experiencing high traffic during peak hours might benefit from a higher level of provisioned concurrency during those times, while a lower level might suffice during off-peak hours to reduce costs.
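The trade-off can be made tangible with a small cost model based on the proportionality described above (instances, memory, and provisioned time). This is a sketch under stated assumptions: the rate is a placeholder for illustration, not a real price, and actual billing includes other components such as request and execution charges.

```python
def provisioned_cost(instances, memory_gb, hours, price_per_gb_second):
    """Cost of keeping `instances` warm for `hours`, in the price's currency."""
    return instances * memory_gb * hours * 3600 * price_per_gb_second


def schedule_cost(schedule, memory_gb, price_per_gb_second):
    """Sum the cost of (instances, hours) windows, e.g. peak and off-peak."""
    return sum(
        provisioned_cost(n, memory_gb, h, price_per_gb_second) for n, h in schedule
    )


# Placeholder rate for illustration only; not a real price.
RATE = 0.000004

# 10 warm instances all day, versus 10 for an 8-hour peak and 2 otherwise.
flat = schedule_cost([(10, 24)], memory_gb=0.5, price_per_gb_second=RATE)
tiered = schedule_cost([(10, 8), (2, 16)], memory_gb=0.5, price_per_gb_second=RATE)
```

With these placeholder numbers the tiered schedule costs less than half of the flat one per day, which is the kind of comparison worth repeating with the provider's actual rates and the application's real traffic windows.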

Best Practices for Managing Warm Instances

Effective management of warm instances is crucial for optimizing both performance and cost-efficiency. Several best practices can be employed to achieve this:

- Right-sizing Provisioned Concurrency: Accurately estimating the required number of provisioned instances is critical. Over-provisioning leads to unnecessary costs, while under-provisioning results in cold starts. Monitoring traffic patterns and performance metrics is essential to fine-tune the provisioned concurrency level. Consider utilizing autoscaling features if available to dynamically adjust the number of warm instances based on demand.

- Monitoring Instance Health: Regularly monitor the health and performance of the warm instances. Metrics such as CPU utilization, memory usage, and error rates can indicate potential issues. Implement monitoring tools to track these metrics and set up alerts to notify you of any anomalies. This allows for proactive identification and resolution of problems before they impact users.

- Optimizing Function Code: While provisioned concurrency reduces cold start latency, it does not eliminate the importance of code optimization. Well-optimized code executes faster, reducing the time each instance spends processing requests and improving overall performance. Continue to apply code optimization techniques, such as minimizing dependencies and optimizing data access patterns, even when using provisioned concurrency.

- Strategic Provisioning: Schedule the provisioning and de-provisioning of warm instances based on anticipated traffic patterns. For instance, provision instances before peak usage times and de-provision them during periods of low activity. This dynamic approach can significantly reduce costs while maintaining acceptable performance levels.

- Consider Function Duration: Weigh cold start time against typical execution time. For short, latency-sensitive functions, a cold start can account for most of the request latency, so provisioned concurrency yields the largest relative improvement. For longer-running functions the relative gain is smaller, but heavy initialization (large dependencies, connection setup) can still justify keeping instances warm.
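As a starting point for right-sizing, steady-state concurrency can be estimated with Little's law: concurrent executions ≈ request rate × average duration. The sketch below is one possible way to turn that into a provisioned-instance estimate; the function name `required_concurrency` and the 20% default headroom are assumptions for illustration, and the result should be validated against real traffic metrics.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float,
                         headroom: float = 0.2) -> int:
    """Estimate warm instances needed: rate x duration, plus a safety margin."""
    steady_state = requests_per_second * avg_duration_s
    # Round before taking the ceiling so floating-point noise (e.g. 30.000000001)
    # does not add a spurious extra instance.
    return math.ceil(round(steady_state * (1 + headroom), 9))


# 100 requests/second at 250 ms each keeps about 25 instances busy;
# the default headroom raises the estimate to 30.
print(required_concurrency(100, 0.25))
```

Running the same calculation for peak and off-peak request rates gives the two levels to schedule under the strategic provisioning practice.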

Infrastructure Optimization and Platform Considerations

Optimizing infrastructure and understanding the nuances of different serverless platforms are crucial for minimizing cold start latency. The choice of platform and the configuration of its underlying infrastructure directly impact the speed at which serverless functions become available. This section will delve into specific optimization strategies and compare various platform offerings to guide informed decision-making.

Optimizing Infrastructure Configurations

Infrastructure configuration plays a significant role in cold start performance. Optimizing these settings can lead to substantial improvements.

- Memory Allocation: Increasing the memory allocated to a serverless function often results in faster cold start times. On platforms such as AWS Lambda, CPU capacity is allocated in proportion to the configured memory, so a larger memory setting also speeds up initialization work such as loading dependencies. Functions with complex dependencies tend to benefit most.

- Networking Configuration: Network configuration, especially within Virtual Private Clouds (VPCs), can influence cold start latency. Functions deployed within a VPC have historically experienced longer cold starts due to the overhead of setting up network interfaces. Some platforms have since reduced this overhead (AWS Lambda, for example, now creates the network interfaces when the function is configured rather than at invocation time), so measure before assuming a penalty. When a function must reach resources inside a VPC, or security requirements demand it, VPC deployment is often unavoidable.

- Region Selection: The geographical region where a serverless function is deployed affects the latency users observe. Deploying functions closer to end users shortens the network round trip for every request, so the total delay experienced during a cold start is lower even though initialization itself takes the same time.

- Concurrency Limits: Setting appropriate concurrency limits can influence the availability of pre-warmed instances. Properly configuring the maximum number of concurrent executions can prevent resource exhaustion and ensure that functions are readily available.

- Underlying Compute Resources: The type of underlying compute resources used by the serverless platform can also affect cold start times. Some platforms might utilize more performant hardware or have optimized infrastructure for quicker function initialization.

Comparing Cold Start Performance Across Platforms

Different serverless platforms exhibit varying cold start characteristics. Understanding these differences is essential for selecting the optimal platform for a given workload. This section will provide a comparative analysis.

| Platform | Typical Cold Start Duration (seconds) | Factors Influencing Cold Start | Provisioning Options |
|---|---|---|---|
| AWS Lambda | 0.1 – 3+ | Memory allocation, VPC configuration, function size, language runtime. | Provisioned Concurrency, Warm Instances (via tools like Serverless Framework) |
| Azure Functions | 0.5 – 5+ | Consumption plan settings, function size, language runtime, network configuration. | Premium plan (always-on instances), Dedicated App Service Plan. |
| Google Cloud Functions | 0.2 – 4+ | Memory allocation, container size, language runtime, region. | Minimum instances, Cloud Run (for containerized workloads with more control). |

Note: Cold start times can vary significantly based on the factors listed above and the complexity of the function. The durations provided are indicative and based on typical scenarios.

Choosing the Right Serverless Platform Based on Cold Start Needs

The selection of a serverless platform should align with the specific requirements of the application, particularly the acceptable cold start latency. The following considerations are important.

- Latency Sensitivity: Applications requiring low latency, such as real-time processing or interactive user interfaces, may necessitate platforms with better cold start performance or the use of provisioning strategies.

- Budget Constraints: Options for avoiding cold starts are priced differently on each platform. For example, AWS Lambda’s provisioned concurrency is billed for as long as it is configured, whether or not the warm instances serve traffic. Understanding the pricing models of each platform is essential.

- Development Experience: The ease of development and deployment on each platform varies. Some platforms offer better tooling and integrations for specific languages or frameworks, which can indirectly impact cold start times through improved code optimization and deployment processes.

- Vendor Lock-in: Consider the potential for vendor lock-in. Migrating between platforms can be complex. Evaluate the portability of the code and dependencies.

- Platform Features and Integrations: The availability of features such as provisioned concurrency, warm instances, and integrations with other services can influence the choice. For example, a platform that readily supports provisioned concurrency may be preferable for latency-sensitive applications.

Caching Strategies for Serverless Functions

Caching is a critical technique for mitigating cold start latency in serverless functions. By storing frequently accessed data in a readily available location, functions can avoid the overhead of retrieving this data from slower sources, such as databases or external APIs, during initialization. This proactive approach significantly reduces the execution time and improves the overall performance of the serverless application, particularly for functions experiencing frequent invocations.

Techniques for Caching Frequently Accessed Data

Several caching strategies can be employed to optimize serverless function performance. These techniques aim to store data that is accessed repeatedly, thereby reducing the need to fetch it from its origin each time the function is invoked.

- In-Memory Caching: This involves storing data within the function’s memory during execution. The advantage is extremely fast access, as the data is immediately available. However, this approach is ephemeral, meaning the data is lost when the function instance is recycled. This is suitable for data that is relatively small, frequently accessed, and can be easily re-fetched if necessary.

- Distributed Caching: Utilizing external caching services, such as Redis, Memcached, or cloud-provider-specific solutions like Amazon ElastiCache or Google Cloud Memorystore, provides a more persistent and scalable caching solution. These services offer the ability to store larger datasets and share cached data across multiple function instances, enhancing efficiency and reducing cold start impact. Data retrieval is still fast, though there is a slight overhead compared to in-memory caching. This is ideal for data that is accessed by many function instances and needs to be persistent.

- Content Delivery Networks (CDNs): CDNs are highly effective for caching static assets, such as images, videos, and JavaScript files. By caching these assets at edge locations closer to the users, CDNs minimize latency and improve response times. When a function needs to serve static content, it can direct requests to the CDN, which handles the delivery. This is particularly useful for websites and applications that serve a lot of static content.

- Caching Database Queries: Optimizing database interactions is a crucial part of performance improvement. Techniques like query caching store the results of database queries, so subsequent identical queries can retrieve the cached results instead of re-executing the query. This minimizes the time spent interacting with the database, especially for complex queries. This is most effective for read-heavy applications where data doesn’t change frequently.

Implementing Caching in a Serverless Function

Implementing caching in a serverless function requires careful consideration of the data to be cached, the appropriate caching mechanism, and the function’s architecture. Here is an example of how to implement in-memory caching using Python and AWS Lambda.

Consider a function that retrieves product details from a database. The product details are accessed frequently, but the information changes infrequently.

```python
import json

import boto3

# In-memory cache for product details. It lives at module scope, so it
# persists across invocations for as long as this function instance stays warm.
product_cache = {}

# Create the DynamoDB table handle once per instance rather than per request.
dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('ProductsTable')  # Replace with your table name


def get_product_details(product_id):
    """Retrieves product details from the cache or database."""
    if product_id in product_cache:
        print("Fetching from cache")
        return product_cache[product_id]

    print("Fetching from database")
    try:
        response = table.get_item(Key={'ProductID': product_id})
        item = response.get('Item')
        if item:
            product_cache[product_id] = item  # Cache the result
            return item
        return None  # Product not found
    except Exception as e:
        print(f"Error fetching from database: {e}")
        return None


def lambda_handler(event, context):
    """AWS Lambda handler function."""
    product_id = event.get('product_id')
    if not product_id:
        return {
            'statusCode': 400,
            'body': json.dumps({'message': 'Missing product_id'})
        }

    product_details = get_product_details(product_id)
    if product_details:
        return {
            'statusCode': 200,
            # DynamoDB returns numbers as Decimal, which json cannot
            # serialize directly; default=str converts them to strings.
            'body': json.dumps(product_details, default=str)
        }
    return {
        'statusCode': 404,
        'body': json.dumps({'message': 'Product not found'})
    }
```

In this example:

- The `product_cache` dictionary acts as an in-memory cache.

- The `get_product_details` function first checks the cache for the product details. If found, it returns the cached data, bypassing the database query.

- If the data is not in the cache, it fetches the data from the database, stores it in the cache, and then returns it.

- The `lambda_handler` function is the entry point for the AWS Lambda function.

This example demonstrates a simple in-memory caching implementation. The cache lives only as long as the function instance, so it speeds up warm invocations and starts empty again after each cold start. In a production environment, you might choose a distributed caching solution like Redis or Memcached for improved scalability and persistence. The choice depends on the specific requirements of your application. The key takeaway is to cache frequently accessed data so that fewer invocations pay the cost of the slower source. For instance, if a function is invoked 1000 times a day and each database call takes 200ms, serving every call from the cache would save up to 1000 × 200 ms = 200 seconds of execution time per day, improving performance and potentially reducing operational costs.
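Because cached data that changes infrequently can still go stale, an in-memory cache is often paired with an expiry time. The following is a minimal sketch of a time-to-live (TTL) cache, not part of the example above; the class name `TTLCache`, the `loader` callback, and the injectable `clock` parameter are assumptions introduced for illustration.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str, loader: Callable[[str], Any]) -> Any:
        """Return the cached value, calling `loader(key)` on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value


# Module-level instance: shared by all invocations served by one warm instance.
product_cache = TTLCache(ttl_seconds=300)
```

Inside a handler, a call such as `product_cache.get(product_id, fetch_from_database)` would hit the database at most once per key every five minutes per instance, where `fetch_from_database` is whatever lookup function the application already has.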

Monitoring and Alerting for Cold Starts

Effective monitoring and alerting are crucial for proactively managing and mitigating the impact of cold starts in serverless environments. By establishing a robust monitoring strategy, developers can gain valuable insights into cold start frequency, duration, and the overall performance implications. This allows for timely identification of issues, optimization of function configurations, and ultimately, a better user experience.

Setting Up Monitoring and Alerting to Track Cold Start Performance

Setting up a comprehensive monitoring and alerting system involves several key steps to effectively track cold start performance. This includes selecting appropriate monitoring tools, configuring metrics collection, defining alert thresholds, and integrating notifications.

- Choosing Monitoring Tools: Select a monitoring solution that integrates well with the chosen serverless platform (e.g., AWS CloudWatch, Google Cloud Monitoring, Azure Monitor). Consider tools that provide detailed function-level metrics and support custom metric creation.

- Configuring Metric Collection: Configure the monitoring tools to automatically collect relevant metrics. Ensure the metrics include cold start duration, function invocation count, and latency percentiles.

- Defining Alert Thresholds: Establish clear alert thresholds based on performance Service Level Objectives (SLOs) and application requirements. Set thresholds for cold start duration, frequency, and impact on overall latency.

- Integrating Notifications: Configure alerts to trigger notifications through various channels, such as email, Slack, or PagerDuty, to inform the relevant teams of performance degradations.

Examples of Metrics to Monitor for Cold Start Detection

Monitoring specific metrics provides actionable data to identify and address cold start issues. These metrics offer insights into the frequency, duration, and impact of cold starts on overall application performance.

- Cold Start Duration: The time taken for a function to initialize and begin processing requests. This metric directly indicates the performance impact of a cold start. Measure this in milliseconds (ms).

- Cold Start Frequency: The percentage or count of function invocations that experience a cold start. Track this metric to understand how often cold starts occur and the associated impact on user experience.

- Function Invocation Count: The total number of times a function is invoked within a given time period. This metric provides context for cold start frequency and the overall workload.

- Latency Percentiles (p50, p90, p99): Monitor latency percentiles to understand the distribution of request processing times. An increase in latency, especially in higher percentiles, can indicate the impact of cold starts.

- Initialization Time: The time spent by the function runtime environment to initialize the function’s code and dependencies. This metric helps to identify performance bottlenecks within the function code itself.

- Memory Utilization: Track memory usage during function invocations. High memory utilization can increase the likelihood of cold starts as the platform might need to allocate new resources.
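Several of these metrics can be derived from platform logs. On AWS Lambda, each invocation writes a `REPORT` line to CloudWatch Logs, and that line carries an `Init Duration` field only when the invocation was a cold start. The sketch below parses such lines to compute cold start frequency and latency percentiles; the helper names and the sample lines are made up for illustration, and real log lines should be checked against the patterns before relying on them.

```python
import math
import re
from typing import Dict, Iterable, List

# "Init Duration" appears only on cold starts. The lookbehinds stop the plain
# "Duration" pattern from matching "Billed Duration" or "Init Duration".
INIT_RE = re.compile(r"Init Duration: ([\d.]+) ms")
DURATION_RE = re.compile(r"(?<!Billed )(?<!Init )Duration: ([\d.]+) ms")


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile; returns 0.0 for an empty list."""
    if not values:
        return 0.0
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(report_lines: Iterable[str]) -> Dict[str, float]:
    """Compute cold start metrics from Lambda REPORT log lines."""
    durations, inits = [], []
    for line in report_lines:
        match = DURATION_RE.search(line)
        if not line.startswith("REPORT") or match is None:
            continue
        durations.append(float(match.group(1)))
        init = INIT_RE.search(line)
        if init:
            inits.append(float(init.group(1)))
    total = len(durations)
    return {
        "invocations": total,
        "cold_starts": len(inits),
        "cold_start_rate": len(inits) / total if total else 0.0,
        "max_init_ms": max(inits, default=0.0),
        "p50_ms": percentile(durations, 50),
        "p99_ms": percentile(durations, 99),
    }


# Made-up sample lines in the shape of Lambda REPORT entries.
SAMPLE = [
    "REPORT RequestId: a1\tDuration: 120.50 ms\tBilled Duration: 121 ms\t"
    "Memory Size: 256 MB\tMax Memory Used: 80 MB\tInit Duration: 410.20 ms",
    "REPORT RequestId: a2\tDuration: 15.00 ms\tBilled Duration: 15 ms\t"
    "Memory Size: 256 MB\tMax Memory Used: 80 MB",
    "REPORT RequestId: a3\tDuration: 18.25 ms\tBilled Duration: 19 ms\t"
    "Memory Size: 256 MB\tMax Memory Used: 81 MB",
    "REPORT RequestId: a4\tDuration: 12.75 ms\tBilled Duration: 13 ms\t"
    "Memory Size: 256 MB\tMax Memory Used: 81 MB",
]
summary = summarize(SAMPLE)
```

The resulting dictionary can be published as custom metrics so that cold start rate and initialization time are tracked separately from overall latency.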

Designing a Monitoring Dashboard for Cold Start Events

A well-designed monitoring dashboard provides a centralized view of cold start performance, enabling quick identification of issues and effective troubleshooting. The following table outlines the structure of a responsive monitoring dashboard for cold start events.

| Metric | Description | Threshold/Alert | Visualization |
|---|---|---|---|
| Cold Start Duration (ms) | The time taken for a function to initialize after a cold start. | > 500ms (configurable based on function requirements) | Line Chart: Shows the trend of cold start duration over time. The Y-axis represents time in milliseconds, and the X-axis shows time intervals. A red line indicates the threshold. |
| Cold Start Frequency (%) | Percentage of function invocations that experience a cold start. | > 5% (configurable based on function requirements) | Gauge Chart: Displays the current percentage of cold starts as a value within a range. The gauge is color-coded (e.g., green, yellow, red) to indicate performance levels. |
| Invocation Count | Total number of function invocations. | N/A (Informational) | Bar Chart: Shows the number of invocations per time period (e.g., per minute, per hour). The Y-axis represents the number of invocations, and the X-axis represents time. |
| Latency (p99) (ms) | 99th percentile of request processing time. | > 1000ms (configurable based on function requirements) | Line Chart: Displays the 99th percentile latency over time. The Y-axis represents latency in milliseconds, and the X-axis represents time. A red line indicates the threshold. |
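The alert column of the table can be expressed as a small rule set. The sketch below is one way to check a metrics snapshot against the thresholds listed above; the metric keys, the `Threshold` class, and the `breached` function are hypothetical names, and in practice the platform's own alarm features would usually perform this check.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    metric: str   # key expected in the metrics snapshot
    limit: float  # alert when the observed value is strictly above this


# Limits taken from the dashboard table; tune them per function.
THRESHOLDS = [
    Threshold("cold_start_duration_ms", 500),
    Threshold("cold_start_rate_pct", 5),
    Threshold("latency_p99_ms", 1000),
]


def breached(snapshot: Dict[str, float]) -> List[str]:
    """Return the names of metrics whose value exceeds its threshold."""
    return [t.metric for t in THRESHOLDS
            if snapshot.get(t.metric, 0) > t.limit]


alerts = breached({
    "cold_start_duration_ms": 620,
    "cold_start_rate_pct": 3.2,
    "latency_p99_ms": 1000,
})
```

Here only the cold start duration would raise an alert: 620 ms exceeds 500 ms, while a p99 of exactly 1000 ms does not exceed its limit.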

Serverless Function Design Patterns

Architectural design patterns are crucial for mitigating the impact of cold starts in serverless environments. These patterns aim to optimize function invocation, resource utilization, and overall system responsiveness. Employing well-defined patterns facilitates scalability, reduces latency, and improves the user experience.

Event-Driven Architectures for Responsiveness

Event-driven architectures are particularly well-suited for serverless applications due to their inherent scalability and responsiveness. They decouple components, allowing them to react to events asynchronously. This asynchronous processing model minimizes the time a user waits for a response, as functions can be triggered by events without requiring immediate execution.

- Event Producers and Consumers: The fundamental concept involves event producers, which generate events, and event consumers, which react to those events. Event producers could be various services, such as a database, an IoT device, or a user interface. Event consumers are serverless functions that perform specific tasks in response to those events.

- Event Buses and Queues: Event buses (like AWS EventBridge or Azure Event Grid) or message queues (like Amazon SQS or Azure Service Bus) serve as intermediaries, decoupling producers and consumers. They handle event routing, delivery, and buffering, which improves system resilience and allows for asynchronous processing. When an event occurs, it is published to the event bus or queue. The event bus then routes the event to the appropriate serverless functions based on defined rules or subscriptions. Message queues offer a way to manage and process events, ensuring reliable event delivery even in the face of failures.

- Benefits of Asynchronous Processing: Asynchronous processing, enabled by event-driven architectures, provides several benefits. First, it allows for parallel execution of tasks, increasing throughput and reducing overall processing time. Second, it isolates failures, as a failure in one function does not necessarily affect other functions. Finally, it improves responsiveness by allowing users to receive initial responses quickly, even if background tasks are still in progress. For example, an e-commerce platform can immediately confirm an order (initial response), while other functions process payment and inventory updates asynchronously.
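The e-commerce example can be sketched in a few lines. In the code below, an in-process `queue.Queue` and a worker thread stand in for a managed queue and a consumer function, purely to show the shape of the pattern; the names `confirm_order`, `process_orders`, and the `OrderPlaced` event type are assumptions for illustration, not a real service API.

```python
import queue
import threading

# Stand-in for a managed message queue such as SQS or Service Bus.
order_events: queue.Queue = queue.Queue()
processed = []


def confirm_order(order_id: str) -> dict:
    """Producer: publish an event and respond without waiting for processing."""
    order_events.put({"type": "OrderPlaced", "order_id": order_id})
    return {"statusCode": 202, "body": f"Order {order_id} accepted"}


def process_orders() -> None:
    """Consumer: handle events in the background until a None sentinel arrives."""
    while True:
        event = order_events.get()
        if event is None:
            order_events.task_done()
            break
        # Stand-in for payment processing and inventory updates.
        processed.append(event["order_id"])
        order_events.task_done()


worker = threading.Thread(target=process_orders, daemon=True)
worker.start()

response = confirm_order("A-1001")  # returns immediately with 202 Accepted
order_events.join()                 # wait here only to make the demo deterministic
```

The caller receives its response before the background work runs, so a cold start in the consumer delays processing but not the user-facing reply.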

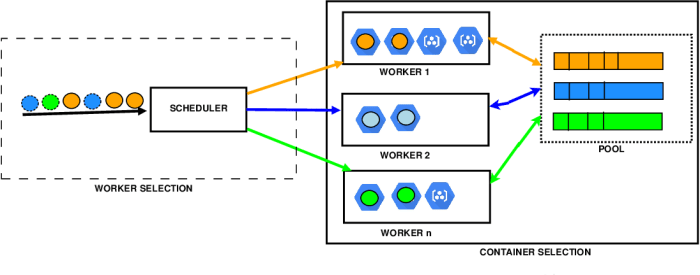

Optimized Serverless Function Architecture Illustration

An optimized serverless function architecture focuses on minimizing cold start latency and maximizing resource utilization. The architecture outlined here incorporates several key elements:

- API Gateway: The API Gateway acts as the entry point for all client requests. It handles routing, authentication, and authorization. It also acts as a single point of contact, decoupling the client from the underlying serverless functions.

- Function 1 (Triggered by API Gateway): This function handles initial request validation and any necessary pre-processing. It’s designed to be lightweight and fast to execute, minimizing the impact of a cold start.

- Event Bus/Queue: If the request requires more extensive processing, Function 1 publishes an event to an event bus or queue. This event contains the relevant data for subsequent processing steps.

- Function 2 (Triggered by Event Bus/Queue): This function performs the core business logic. It’s triggered by the event bus or queue, enabling asynchronous processing. The event bus or queue ensures reliable event delivery, even if Function 2 is temporarily unavailable.

- Database: The database stores and retrieves data. It’s designed for efficient access, utilizing appropriate indexing and caching strategies.

- Caching Layer: A caching layer (e.g., Redis, Memcached) is implemented to store frequently accessed data, reducing database load and improving response times.

- Monitoring and Logging: Comprehensive monitoring and logging are integrated throughout the architecture to track function performance, identify bottlenecks, and troubleshoot issues.

Detailed Description of the Architecture:

The client initiates a request through the API Gateway. The API Gateway authenticates the user and routes the request to Function 1. Function 1 performs preliminary validation and, if necessary, publishes an event to an event bus or queue. This event contains the necessary data for further processing. Function 2, triggered by the event bus/queue, processes the event, retrieves data from the database, performs the core business logic, and updates the database.

The results are either directly returned through Function 2, or a subsequent function is triggered to handle final response formatting. The entire system incorporates a caching layer to reduce database load and improve response times, along with robust monitoring and logging for performance tracking and issue resolution. This design ensures minimal cold start impact by using lightweight functions for initial processing, asynchronous task execution, and efficient data retrieval.

Closure

In conclusion, minimizing serverless cold start latency requires a multi-pronged approach. By understanding the root causes, optimizing code, configuring the runtime environment effectively, and employing strategies like provisioned concurrency and caching, developers can significantly reduce the impact of cold starts. Continuous monitoring, proactive alerting, and the adoption of optimized architectural patterns are essential for maintaining optimal performance. This comprehensive guide provides a roadmap for building responsive and efficient serverless applications, ensuring a positive user experience and maximizing the advantages of this powerful computing paradigm.

FAQ Compilation

What is the primary cause of serverless cold starts?

Cold starts are primarily caused by the time it takes to initialize a new function instance, including downloading code, setting up the execution environment, and loading dependencies.

How does the choice of programming language affect cold start times?

Languages with lightweight runtimes, such as Go, Node.js, and Python, generally exhibit shorter cold start times than Java or .NET, whose runtimes are larger and have more complex initialization (virtual machine startup, class loading). Package size and the number of dependencies loaded at startup matter in every language.

What is provisioned concurrency, and how does it help?

Provisioned concurrency pre-initializes a specified number of function instances, ensuring they are readily available to handle incoming requests. This eliminates or significantly reduces cold start latency for those requests.

How can I measure the cold start performance of my serverless functions?

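Use cloud provider monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) to track function invocation times. Where the platform reports initialization time separately (AWS Lambda, for example, adds an Init Duration field to the log report of a cold invocation), use that value directly; otherwise, look for invocations with unusually long durations, which are often indicative of cold starts. Implement custom metrics to monitor cold start frequency and duration.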

Are there any cost implications associated with reducing cold starts?

Yes, strategies like provisioned concurrency and caching can incur additional costs. Provisioned concurrency requires paying for pre-warmed instances, while caching may involve storage and data transfer fees. It is important to balance performance gains with cost considerations.