Integrating serverless architectures with established systems represents a significant shift in modern software development, offering compelling advantages in scalability, cost-efficiency, and agility. This transition involves strategically leveraging serverless computing to enhance and modernize existing infrastructure, unlocking new possibilities for application development and deployment. This exploration will delve into the complexities of this integration, providing a detailed roadmap for achieving seamless connectivity and maximizing the benefits of both serverless and legacy components.

The journey to serverless integration requires a methodical approach, encompassing careful system assessment, technology selection, and implementation strategies. From understanding the fundamental concepts of serverless to navigating API gateways, database connections, and event-driven architectures, we will explore the essential components and techniques required to build robust and scalable solutions. This guide will provide a comprehensive overview of the challenges and opportunities associated with this paradigm shift, equipping you with the knowledge and insights necessary to successfully integrate serverless with your existing systems.

Understanding Serverless and Its Benefits

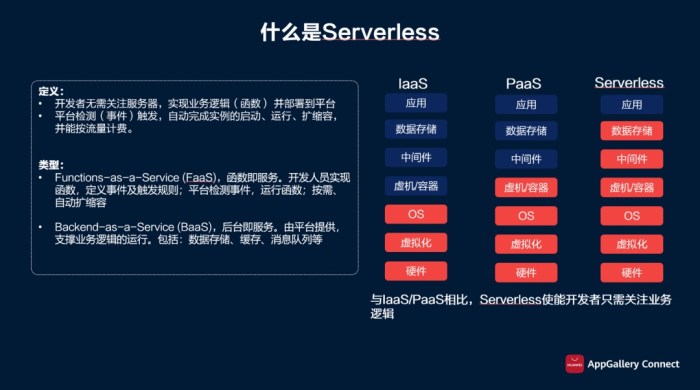

Serverless computing represents a paradigm shift in cloud computing, allowing developers to build and run applications without managing servers. This section delves into the core concepts of serverless, outlining its advantages, and exploring scenarios where it provides the most value.

Core Concepts of Serverless Computing

Serverless computing is not about the absence of servers; rather, it is about abstracting away server management from developers. The cloud provider handles all aspects of infrastructure, including provisioning, scaling, and maintaining servers. Developers focus solely on writing and deploying code. Key components include Function-as-a-Service (FaaS), where individual functions are executed in response to events, and Backend-as-a-Service (BaaS), which provides pre-built backend functionalities such as databases, authentication, and storage.

Serverless architectures are event-driven, meaning functions are triggered by events such as HTTP requests, database updates, or scheduled tasks. Scalability is handled automatically by the cloud provider, ensuring resources are allocated as needed to meet demand. Pay-per-use pricing models are prevalent, where developers are charged only for the actual compute time and resources consumed by their functions.

Advantages of Using Serverless Architectures

Serverless architectures offer a compelling set of advantages, particularly concerning scalability and cost efficiency. These benefits are achieved through automated resource management and a pay-as-you-go pricing model.

- Scalability: Serverless platforms automatically scale resources based on demand. This eliminates the need for manual scaling efforts, ensuring applications can handle fluctuating workloads without performance degradation. For example, if an application experiences a sudden surge in user traffic, the serverless platform automatically provisions additional resources to handle the increased load. This elasticity allows applications to remain responsive during peak times.

- Cost Efficiency: Serverless computing often leads to significant cost savings. Developers pay only for the compute time and resources their functions consume. This pay-per-use model contrasts with traditional server-based architectures, where resources are provisioned and paid for regardless of actual utilization. This model is particularly beneficial for applications with intermittent workloads, such as those that run only during certain hours or in response to specific events.

- Reduced Operational Overhead: Serverless architectures minimize operational overhead. The cloud provider handles server management, including patching, security updates, and infrastructure maintenance, so development teams can concentrate on feature development and innovation rather than infrastructure-related tasks.

- Faster Development Cycles: Serverless platforms often provide pre-built backend services and integrations. This accelerates development cycles by allowing developers to quickly build and deploy applications. The focus shifts from infrastructure configuration to code development, resulting in faster time-to-market for new features and applications.

Common Use Cases Where Serverless Excels

Serverless computing is well-suited for a variety of use cases, particularly those that benefit from its scalability, cost-efficiency, and reduced operational overhead. Several examples illustrate the effectiveness of serverless architectures in real-world scenarios.

- Web Applications and APIs: Serverless functions can be used to build scalable and cost-effective web applications and APIs. For instance, an API endpoint for handling user authentication can be implemented as a serverless function. This approach allows the API to scale automatically to handle a large number of requests without requiring manual server management.

- Mobile Backends: Serverless architectures are ideal for building mobile application backends. BaaS services provide features like user authentication, data storage, and push notifications, simplifying the development process. Developers can focus on the front-end user experience while leveraging the scalability and cost-efficiency of the serverless backend.

- Data Processing and ETL Pipelines: Serverless functions can be used to process data streams, perform data transformations, and build Extract, Transform, Load (ETL) pipelines. For example, a serverless function can be triggered by the arrival of a new file in an object storage service. The function can then process the file, perform data cleaning, and load the data into a data warehouse.

- Chatbots and Conversational Interfaces: Serverless platforms are well-suited for building chatbots and conversational interfaces. Functions can be triggered by user interactions, process natural language input, and generate responses. The scalability and cost-efficiency of serverless make it an attractive option for building and deploying chatbots that can handle a large number of concurrent users.
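The data processing use case above (a function triggered by a new file in object storage) can be sketched roughly as follows. This is a minimal illustration, assuming an S3-style notification event; `make_handler`, `fetch`, and `load` are hypothetical names standing in for the object-store read and the warehouse write, and the `id` column is an assumed schema detail.

```python
import csv
import io
from urllib.parse import unquote_plus


def extract_objects(event):
    """Return (bucket, key) pairs from an S3-style notification event."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 notifications.
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def clean_rows(raw_csv):
    """Transform step: trim whitespace and drop rows whose 'id' column is empty."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        row = {k.strip(): (v or "").strip() for k, v in row.items() if k is not None}
        if row.get("id"):
            cleaned.append(row)
    return cleaned


def make_handler(fetch, load):
    """Build a handler from hypothetical callables.

    fetch(bucket, key) returns the file contents as text;
    load(rows) writes the cleaned rows to the data warehouse.
    """
    def handler(event, context=None):
        loaded = 0
        objects = extract_objects(event)
        for bucket, key in objects:
            rows = clean_rows(fetch(bucket, key))
            load(rows)
            loaded += len(rows)
        return {"objects": len(objects), "rows_loaded": loaded}

    return handler
```

Passing the storage and warehouse clients in as callables keeps the transform logic testable without any cloud resources.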

Identifying Existing Systems Suitable for Serverless Integration

The successful integration of serverless technologies into existing systems hinges on a careful assessment of system characteristics and business needs. Not all systems are equally suited for serverless adoption; understanding the suitability of a system is crucial for realizing the benefits of this architectural approach. This section outlines the types of systems that are prime candidates for serverless integration, provides illustrative examples, and details the criteria for selecting systems for migration.

Systems Best Suited for Serverless Integration

Several types of existing systems are particularly well-suited for serverless integration, primarily those characterized by event-driven architectures, fluctuating workloads, and stateless operations. The following systems often demonstrate a high degree of compatibility with serverless principles.

- Web Applications and APIs: Web applications and APIs, especially those handling user requests, are excellent candidates. Serverless functions can be triggered by HTTP requests, allowing for dynamic scaling based on traffic volume. This is particularly advantageous for applications with unpredictable or seasonal traffic patterns.

- Batch Processing Systems: Systems performing batch operations, such as data transformation, reporting, or scheduled tasks, can benefit significantly. Serverless functions can be triggered by events like file uploads or scheduled timers, enabling efficient resource utilization and cost optimization.

- Data Processing Pipelines: Data pipelines that involve ETL (Extract, Transform, Load) processes are well-suited. Serverless functions can be used to process data in real-time or in batches, triggered by events such as data ingestion or database updates. This allows for scalable and cost-effective data processing.

- Mobile Backends: Mobile application backends are frequently designed to handle a high volume of requests from mobile devices. Serverless provides the scalability and responsiveness required to handle these requests efficiently, as well as offering cost savings.

- IoT (Internet of Things) Applications: IoT applications often involve processing large volumes of data from connected devices. Serverless functions can be used to ingest, process, and analyze this data in real-time, providing a scalable and cost-effective solution.

Examples of Legacy Systems and Serverless Enhancements

Legacy systems, often characterized by monolithic architectures, can be modernized and enhanced through strategic serverless integration. Here are examples of how serverless can improve specific legacy systems:

- E-commerce Platforms: A legacy e-commerce platform might struggle to handle peak traffic during sales events. Serverless can be integrated to manage specific functionalities, such as product catalog updates, order processing, and payment gateway integrations. For example, serverless functions could be triggered by user actions, such as adding an item to a cart or completing a purchase, allowing the system to scale dynamically to meet demand.

- Content Management Systems (CMS): Legacy CMS systems often require substantial resources for image processing, content delivery, and search functionality. Serverless functions can be utilized for tasks such as image resizing and optimization, content indexing, and search query processing. For instance, a serverless function could automatically resize images uploaded to the CMS, improving page load times and user experience.

- Financial Transaction Systems: Legacy financial systems can benefit from serverless integration for tasks such as fraud detection, transaction validation, and real-time reporting. Serverless functions can be triggered by transaction events, enabling real-time analysis and immediate response to suspicious activities. For example, a serverless function could be triggered whenever a new transaction occurs, evaluating it against predefined fraud rules and flagging any potential issues.

- CRM (Customer Relationship Management) Systems: CRM systems can use serverless to automate customer communication, data synchronization, and report generation. Serverless functions can be triggered by events such as customer interactions, data updates, or scheduled tasks. For example, a serverless function could automatically send welcome emails to new customers or update contact information in response to form submissions.
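As an illustration of the financial transaction example above, the following sketch evaluates a transaction event against a small set of predefined rules. The rules, the threshold, and the event shape (`event["transaction"]`) are all hypothetical; a real system would load its rules from the legacy platform or a rules service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    account_id: str
    amount: float
    country: str
    home_country: str


# Hypothetical rules: each returns a reason string when the transaction looks suspicious.
def large_amount(tx, limit=10_000.0):
    return f"amount exceeds {limit:.0f}" if tx.amount > limit else None


def foreign_country(tx):
    return "country differs from home country" if tx.country != tx.home_country else None


RULES = (large_amount, foreign_country)


def evaluate(tx, rules=RULES):
    """Run every rule and collect the reasons that fired."""
    reasons = [reason for reason in (rule(tx) for rule in rules) if reason]
    return {"account_id": tx.account_id, "flagged": bool(reasons), "reasons": reasons}


def handler(event, context=None):
    """Entry point invoked once per transaction event."""
    tx = Transaction(**event["transaction"])
    return evaluate(tx)
```

Because each rule is an independent function, new checks can be added without touching the legacy transaction system itself.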

Criteria for Selecting Systems for Serverless Migration

The selection of systems for serverless migration should be based on a careful evaluation of several key criteria to ensure a successful transition and maximize the benefits of serverless.

- Workload Characteristics: Systems with fluctuating or unpredictable workloads are ideal candidates. Serverless architectures are designed to scale automatically based on demand, providing cost-effectiveness and high availability.

- Stateless Operations: Systems with stateless operations, where each request is independent of previous requests, are well-suited. Serverless functions are inherently stateless, making them easy to scale and manage.

- Event-Driven Architecture: Systems that can be easily integrated with an event-driven architecture are prime candidates. Serverless functions can be triggered by a variety of events, such as file uploads, database updates, or scheduled timers.

- Cost Optimization Potential: Systems where cost optimization is a priority should be considered. Serverless architectures often offer significant cost savings compared to traditional infrastructure-based solutions, particularly for workloads with variable demand.

- Development and Maintenance Effort: Systems where reduced development and maintenance effort is desired are good candidates. Serverless functions are often easier to develop and deploy than traditional applications, and the operational overhead is significantly reduced.

- Existing Integration Points: The ease of integration with existing systems is an important consideration. Systems with well-defined APIs or integration points are easier to integrate with serverless functions.

Choosing the Right Serverless Technologies

Selecting the appropriate serverless technologies is crucial for successful integration with existing systems. The optimal choice depends on various factors, including the existing infrastructure, specific requirements of the application, and budget constraints. A thorough understanding of available platforms, their features, and pricing models is essential for making an informed decision.

Popular Serverless Platforms and Their Features

Several prominent serverless platforms offer a range of functionalities to facilitate application development and deployment. These platforms provide compute, storage, and other services on a pay-as-you-go basis, enabling developers to focus on code rather than infrastructure management.

- AWS Lambda: Amazon Web Services (AWS) Lambda is a compute service that lets you run code without provisioning or managing servers. It supports multiple programming languages, including Node.js, Python, Java, Go, and C#. Lambda automatically scales your application by running code in response to each trigger. It integrates seamlessly with other AWS services, such as S3, DynamoDB, and API Gateway. A key feature is its ability to process events from various sources, making it suitable for event-driven architectures.

- Azure Functions: Azure Functions is a serverless compute service offered by Microsoft Azure. It allows you to run event-triggered code without managing infrastructure. It supports a variety of programming languages, including C#, JavaScript, Python, and Java. Azure Functions integrates with other Azure services, such as Cosmos DB, Event Hubs, and Service Bus. It provides features like durable functions for stateful workflows and bindings for simplified integration with other services.

- Google Cloud Functions: Google Cloud Functions is a serverless execution environment that allows you to run your code on Google’s infrastructure. It supports languages like Node.js, Python, Go, and Java. It automatically scales and handles the infrastructure needed to run your code. It integrates with other Google Cloud services, such as Cloud Storage, Cloud Pub/Sub, and Cloud Firestore. Cloud Functions is particularly well-suited for event-driven applications and microservices.

Comparison of Serverless Technologies Based on Pricing Models and Supported Languages

Pricing models and supported languages differ among serverless platforms, and these differences can affect both the overall cost and the feasibility of a project. Analyzing them is vital when selecting a platform.

- Pricing Models: The pricing models for serverless platforms typically involve a combination of factors, including the number of requests, the duration of execution (measured in milliseconds), and the amount of memory used. Some platforms also charge for data transfer and storage. Understanding the specific pricing structure of each platform is essential for cost optimization. For example, AWS Lambda pricing is based on the number of requests, the duration of the code execution, and the memory allocated to the function. Azure Functions has a consumption plan with similar pricing metrics. Google Cloud Functions also charges based on execution time, memory usage, and the number of invocations.

- Supported Languages: The supported languages vary across different platforms. AWS Lambda supports a broad range of languages, including Node.js, Python, Java, Go, and C#. Azure Functions supports C#, JavaScript, Python, Java, and PowerShell. Google Cloud Functions supports Node.js, Python, Go, Java, and .NET. The choice of supported languages is a critical factor, especially if the existing systems are built with specific languages.
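The pay-per-use arithmetic described above can be sketched as a small estimator. This is a rough model only: it assumes billing on request count plus memory-weighted execution time (GB-seconds), ignores free tiers, data transfer, and storage, and takes the two unit prices as parameters because actual rates vary by provider and region.

```python
def monthly_cost(requests, avg_duration_ms, memory_mb,
                 price_per_million_requests, price_per_gb_second):
    """Estimate a monthly bill from request volume, duration, and memory size.

    The unit prices are inputs, not real provider rates; look up the current
    price list of the chosen platform before relying on the result.
    """
    # Compute usage is memory (in GB) multiplied by total execution time (in seconds).
    gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_cost = (requests / 1_000_000) * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return {
        "gb_seconds": gb_seconds,
        "request_cost": request_cost,
        "compute_cost": compute_cost,
        "total": request_cost + compute_cost,
    }


# Example call with placeholder prices (hypothetical values, not a quote):
estimate = monthly_cost(
    requests=2_000_000,
    avg_duration_ms=500,
    memory_mb=512,
    price_per_million_requests=0.25,
    price_per_gb_second=0.00002,
)
```

Running the same estimate with each platform's published rates gives a like-for-like comparison for a given workload profile.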

Table Comparing Serverless Platforms

The following table provides a concise comparison of AWS Lambda, Azure Functions, and Google Cloud Functions, highlighting key features, supported languages, and pricing models. This information aids in a direct comparison of the platforms.

| Platform | Supported Languages | Pricing Model | Key Features |
|---|---|---|---|
| AWS Lambda | Node.js, Python, Java, Go, C#, Ruby, PowerShell | Pay-per-use based on requests, execution time, and memory allocation | Integration with a wide range of AWS services, event-driven architecture, automatic scaling, high availability. Example: Ideal for processing images uploaded to S3, triggering a function to resize and store the images. |
| Azure Functions | C#, JavaScript, Python, Java, PowerShell | Pay-per-use based on execution time and memory consumption | Durable Functions for stateful workflows, triggers and bindings for simplified integration, integration with Azure services. Example: Using Durable Functions to orchestrate a multi-step process, such as order processing, which includes validation, payment processing, and shipping notifications. |
| Google Cloud Functions | Node.js, Python, Go, Java, .NET | Pay-per-use based on execution time, memory usage, and invocations | Integration with Google Cloud services, event-driven architecture, automatic scaling, support for various triggers. Example: Automatically processing data streams from Cloud Pub/Sub to update a dashboard in real-time, triggered by new messages in a topic. |

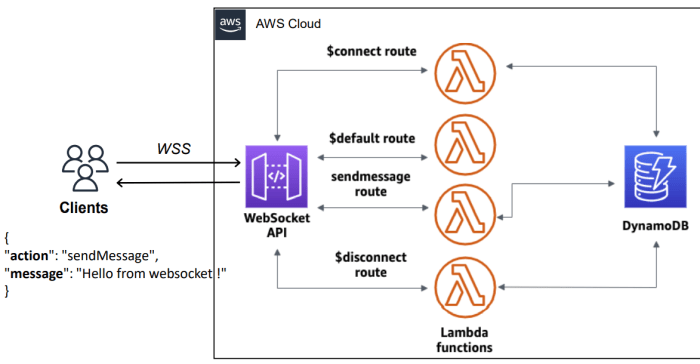

API Gateway Integration Strategies

API gateways are critical components in serverless architectures, acting as the central entry point for all client requests. They provide a layer of abstraction between the client and the underlying serverless functions, enabling features such as authentication, authorization, request routing, and traffic management. This decoupling allows for independent scaling and evolution of the backend services without impacting the client-facing API.

Role of API Gateways in Serverless Integration

API gateways serve multiple crucial functions in serverless integration, contributing to security, scalability, and maintainability. They streamline the interaction between clients and serverless functions.

- Centralized Entry Point: API gateways provide a single point of entry for all API requests. This simplifies client-side code and allows for consistent request handling.

- Authentication and Authorization: Gateways handle authentication (verifying user identity) and authorization (determining user permissions). This offloads security concerns from individual serverless functions, enhancing security posture.

- Request Routing: API gateways route incoming requests to the appropriate serverless functions based on the request path, method, or other criteria. This enables complex API structures with multiple functions serving different purposes.

- Traffic Management: Gateways can throttle requests, set rate limits, and manage API usage to prevent abuse and ensure service availability. This is essential for controlling costs and maintaining performance.

- Transformation and Aggregation: Gateways can transform request and response formats, aggregate data from multiple backend services, and cache responses to improve performance.

- Monitoring and Logging: API gateways provide comprehensive logging and monitoring capabilities, allowing for detailed tracking of API usage, performance, and errors. This is crucial for debugging and optimizing the API.
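To make the traffic management role more concrete, here is a minimal token-bucket sketch of the kind of rate limiting a gateway applies per client or per API key. Managed gateways implement throttling internally and expose it through configuration; the `TokenBucket` class below only illustrates the idea and is not any gateway's actual implementation.

```python
import time


class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, rate_per_second, burst, clock=time.monotonic):
        self.rate = float(rate_per_second)   # tokens added per second
        self.capacity = float(burst)         # maximum tokens that can accumulate
        self.tokens = float(burst)           # start with a full bucket
        self.clock = clock
        self.updated = clock()

    def allow(self):
        """Return True if the request may proceed, False if it should be throttled."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

A throttled request would typically be answered with HTTP 429 so that well-behaved clients can back off and retry.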

Designing an API Gateway Architecture for a Web Application Accessing a Database

A well-designed API gateway architecture for a web application accessing a database involves several components. The architecture facilitates efficient request processing, robust security, and scalability.

Consider a web application that allows users to view and update product information stored in a relational database (e.g., PostgreSQL). The architecture would be structured as follows:

- Client (Web Application): The user interface of the web application, built using HTML, CSS, and JavaScript. It makes API calls to the API gateway.

- API Gateway (e.g., AWS API Gateway, Google Cloud API Gateway): This acts as the front door for all API requests. It handles authentication, authorization, request routing, and traffic management.

- Authentication Service (e.g., Amazon Cognito, Auth0): Manages user authentication, providing tokens that are used by the API gateway to verify user identity.

- Serverless Functions (e.g., AWS Lambda, Google Cloud Functions): These functions handle specific API operations, such as retrieving product details, creating new products, updating existing products, and deleting products.

- Database (e.g., PostgreSQL, MySQL): Stores the product information. Serverless functions interact with the database to read and write data.

The flow of a request would be:

- The client sends an API request to the API gateway.

- The API gateway authenticates the request using the authentication service.

- The API gateway authorizes the request based on the user’s permissions.

- The API gateway routes the request to the appropriate serverless function.

- The serverless function interacts with the database to fulfill the request.

- The serverless function returns a response to the API gateway.

- The API gateway returns the response to the client.

Example: When a user wants to retrieve product details, the web application sends a GET request to the API gateway at the endpoint `/products/{productId}`, where `{productId}` is a path parameter. The API gateway authenticates the user, authorizes the request, and routes it to the `getProduct` serverless function. This function retrieves the product details from the database and returns the data to the API gateway, which then returns it to the web application.
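A `getProduct` function for this flow might look like the sketch below. It assumes a Lambda-style proxy integration in which the gateway passes the path parameter as `event["pathParameters"]["productId"]` and expects a response with `statusCode` and a JSON string `body`; the in-memory `PRODUCTS` dictionary is a stand-in for the database query.

```python
import json

# Stand-in for the product table; a real function would query the database here.
PRODUCTS = {"42": {"id": "42", "name": "Example widget", "price": 19.99}}


def _response(status, payload):
    """Build a proxy-style HTTP response with a JSON body."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def get_product(event, context=None):
    """Handle GET requests for a single product identified by a path parameter."""
    params = event.get("pathParameters") or {}
    product_id = params.get("productId")
    if not product_id:
        return _response(400, {"error": "productId is required"})

    product = PRODUCTS.get(product_id)
    if product is None:
        return _response(404, {"error": "product not found"})
    return _response(200, product)
```

Keeping the response construction in one helper makes it easier to return consistent status codes and headers from every function behind the gateway.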

Configuring an API Gateway to Handle Authentication and Authorization

Configuring an API gateway to handle authentication and authorization is crucial for securing serverless APIs. The configuration process generally involves integrating with an identity provider, defining access control policies, and implementing request validation.

Consider the same web application example from the previous section. Here’s how you would configure the API gateway (using AWS API Gateway as an example) to handle authentication and authorization:

- Integrate with an Authentication Service: Configure the API gateway to use an authentication service like Amazon Cognito. This involves creating a user pool in Cognito, configuring the API gateway to use Cognito authorizers, and setting up the necessary OAuth 2.0 flows.

- Define API Keys (Optional): If API keys are required, create API keys in the API gateway and associate them with usage plans. This allows for rate limiting and usage tracking.

- Implement Authorization: Define IAM roles and policies to grant permissions to the serverless functions. The API gateway can use these roles to determine which users are authorized to access specific API endpoints. For example:

- Create an IAM role for the `getProduct` function with read-only access to the database.

- Create an IAM policy that allows users with the “viewer” role to invoke the `getProduct` function.

- Associate the IAM role with the API gateway’s authorizer.

- Configure Authentication and Authorization in the API Gateway:

- Authentication: Configure the API gateway to use the Cognito authorizer. This will require users to authenticate with Cognito before accessing the API. The authorizer validates the JWT (JSON Web Token) provided by Cognito.

- Authorization: Define IAM policies that restrict access to specific API endpoints based on the user’s roles. The API gateway will evaluate these policies before invoking the serverless functions.

- Request Validation: Configure the API gateway to validate incoming requests. This involves validating request parameters, headers, and body to ensure they meet the expected format and constraints.

- Test the Configuration: Thoroughly test the API gateway configuration by making requests with different user roles and permissions. Verify that authentication and authorization are working as expected.

Example: When a user tries to access the `/products/{productId}` endpoint, the API gateway will:

- Check for a valid JWT in the request headers.

- If a valid JWT is present, use the Cognito authorizer to verify the token.

- If the token is valid, check the user’s roles and permissions against the defined IAM policies.

- If the user has the necessary permissions, route the request to the `getProduct` serverless function.

- If the user does not have the necessary permissions, return a 403 “Forbidden” error. A missing or invalid token is rejected earlier with a 401 “Unauthorized” error.
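The same decision sequence can also be expressed inside a function, for example as a second line of defense behind the gateway. The sketch below assumes a proxy-style event in which a Cognito authorizer has placed the verified token claims under `requestContext.authorizer.claims` and group membership in the `cognito:groups` claim; the `ROUTE_GROUPS` table and the group names are hypothetical.

```python
import json

# Hypothetical mapping from (HTTP method, resource) to the groups allowed to call it.
ROUTE_GROUPS = {
    ("GET", "/products/{productId}"): {"viewer", "editor"},
    ("PUT", "/products/{productId}"): {"editor"},
}


def deny(status, message):
    return {"statusCode": status, "body": json.dumps({"message": message})}


def groups_from_claims(claims):
    """Normalize the groups claim, which may arrive as a list or a delimited string."""
    raw = claims.get("cognito:groups", [])
    if isinstance(raw, str):
        raw = raw.replace(",", " ").split()
    return set(raw)


def authorize(event):
    """Return None when the caller is allowed, otherwise a 401 or 403 response."""
    authorizer = (event.get("requestContext") or {}).get("authorizer") or {}
    claims = authorizer.get("claims")
    if not claims:
        # No verified token reached the function: the caller is not authenticated.
        return deny(401, "Unauthorized")

    route = (event.get("httpMethod"), event.get("resource"))
    allowed = ROUTE_GROUPS.get(route, set())
    if not groups_from_claims(claims) & allowed:
        # Authenticated, but not permitted to use this endpoint.
        return deny(403, "Forbidden")
    return None
```

A handler would call `authorize(event)` first and return its result immediately when it is not `None`.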

Database Integration Approaches

Integrating serverless functions with databases is crucial for building dynamic and scalable applications. The choice of integration method depends on the database type (relational or NoSQL), the desired performance characteristics, and the specific serverless platform being used. Effective database integration allows serverless functions to read, write, and manipulate data, enabling a wide range of functionalities, from simple data retrieval to complex data processing tasks.

This section details various approaches to facilitate seamless communication between serverless functions and different database systems.

Relational Database Integration

Relational databases, such as PostgreSQL, MySQL, and Microsoft SQL Server, offer structured data storage with ACID (Atomicity, Consistency, Isolation, Durability) properties, which is essential for data integrity in many applications. Integrating serverless functions with relational databases typically involves establishing database connections, executing SQL queries, and managing transactions.

Approaches for integrating serverless functions with relational databases often include:

- Direct Database Connections: Serverless functions can directly connect to relational databases using database drivers or SDKs provided by the database vendor. This method offers the most control but requires careful management of connection pooling and security. Connection pooling minimizes the overhead of establishing new database connections for each function invocation, improving performance and resource utilization.

- Database Proxies: A database proxy, such as Amazon RDS Proxy or a self-managed proxy, can sit between the serverless function and the database. The proxy manages connection pooling, handles database failover, and provides additional security features. This approach simplifies connection management and can improve database performance.

- Serverless Database Services: Some cloud providers offer serverless database services, like Amazon Aurora Serverless. These services automatically scale compute resources based on demand, providing a serverless experience for relational databases. This eliminates the need for manual scaling and management.

Demonstrating the use of database connection pooling in a serverless environment involves several considerations:

- Connection Management: Database connections are expensive to create and destroy repeatedly. Connection pooling reuses existing connections, reducing latency and improving performance.

- Connection Pooling Libraries: Use connection pooling libraries specific to the database and the programming language used by the serverless function (e.g., `pg` for PostgreSQL in Node.js, or JDBC connection pools in Java).

- Connection Lifecycle: Release each connection back to the pool after use to prevent resource leaks. Create the pool outside the handler so that invocations served by the same execution environment can reuse its connections. How long an environment is kept alive depends on the serverless platform's behavior regarding function instance reuse, so the function should also work correctly when it starts with an empty pool.

For example, in Node.js with the `pg` library for PostgreSQL, the following code snippet illustrates connection pooling:

```javascript
const { Pool } = require('pg');

// Created once per execution environment and reused by warm invocations.
const pool = new Pool({
  user: 'your_user',
  host: 'your_host',
  database: 'your_database',
  password: 'your_password',
  port: 5432, // Default PostgreSQL port
  max: 20, // Maximum number of connections in the pool
  idleTimeoutMillis: 30000, // Close idle connections after 30 seconds
  connectionTimeoutMillis: 2000, // Timeout for acquiring a connection
});

exports.handler = async (event) => {
  let client;
  try {
    client = await pool.connect();
    const result = await client.query('SELECT * FROM your_table;');
    const data = result.rows;
    return {
      statusCode: 200,
      body: JSON.stringify(data),
    };
  } catch (error) {
    console.error('Error executing query', error);
    return {
      statusCode: 500,
      body: JSON.stringify({ error: 'Failed to retrieve data' }),
    };
  } finally {
    if (client) {
      client.release(); // Release the client back to the pool
    }
  }
};
```

This code establishes a connection pool when the function is initialized, reuses connections, and releases them back to the pool after use.
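The same reuse pattern carries over to other runtimes. The Python sketch below keeps one connection in module scope, checks that it is still usable before each invocation, and reconnects if it has gone stale. It uses the standard-library `sqlite3` module purely as a stand-in so the example runs anywhere; `_connect` and the `products` table are hypothetical, and a real function would open a network connection to the relational database instead.

```python
import sqlite3

# Module-level state survives for as long as the execution environment stays warm.
_connection = None


def _connect():
    # Stand-in for an expensive network connection to the real database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO products (name) VALUES ('widget')")
    conn.commit()
    return conn


def get_connection():
    """Return the cached connection, reconnecting if it is missing or stale."""
    global _connection
    if _connection is not None:
        try:
            _connection.execute("SELECT 1")  # cheap health check
            return _connection
        except sqlite3.Error:
            _connection = None  # stale connection: fall through and reconnect
    _connection = _connect()
    return _connection


def handler(event, context=None):
    rows = get_connection().execute("SELECT id, name FROM products").fetchall()
    return {"statusCode": 200, "rows": [{"id": r[0], "name": r[1]} for r in rows]}
```

The health check matters because a warm environment can outlive the connection it cached, for instance after a database failover or an idle timeout.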

NoSQL Database Integration

NoSQL databases, such as MongoDB, Cassandra, and DynamoDB, offer flexible data models and horizontal scalability, making them well-suited for handling large volumes of unstructured or semi-structured data. Integrating serverless functions with NoSQL databases typically involves interacting with APIs provided by the database vendors.

Integrating serverless functions with NoSQL databases usually includes:

- Direct API Calls: Serverless functions can directly call the database’s API using SDKs or libraries provided by the database vendor. This approach offers flexibility and control but requires handling connection management and error handling.

- Database Connectors: Some serverless platforms offer built-in database connectors or integrations that simplify interaction with NoSQL databases. These connectors handle connection management, authentication, and other complexities.

- Event-Driven Architectures: Using event-driven architectures, serverless functions can react to database events, such as data updates or new document creations. This allows for building real-time applications and asynchronous data processing pipelines.
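As a sketch of the event-driven approach in the list above, the function below reacts to change records from a DynamoDB stream. It assumes the stream is configured to include new item images and handles only string, number, and boolean attributes; `plain` is a hypothetical helper, not part of any SDK.

```python
from decimal import Decimal


def plain(image):
    """Convert a subset of DynamoDB's typed attribute format into plain Python values."""
    out = {}
    for name, typed in (image or {}).items():
        (tag, value), = typed.items()  # each attribute is a one-entry dict such as {"S": "abc"}
        if tag == "S":
            out[name] = value
        elif tag == "N":
            out[name] = Decimal(value)  # numbers are transmitted as strings
        elif tag == "BOOL":
            out[name] = value
        else:
            out[name] = typed  # leave unsupported types in their typed form
    return out


def handler(event, context=None):
    """Collect (event name, item) pairs from a batch of stream records."""
    changes = []
    for record in event.get("Records", []):
        name = record.get("eventName")  # INSERT, MODIFY, or REMOVE
        data = record.get("dynamodb", {})
        if name in ("INSERT", "MODIFY"):
            # NewImage is only present when the stream view type includes new images.
            changes.append((name, plain(data.get("NewImage"))))
        elif name == "REMOVE":
            changes.append((name, plain(data.get("Keys"))))
    return changes
```

In practice the collected changes would be forwarded to whatever needs to react, such as a search index, a cache, or a notification service.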

For instance, with Amazon DynamoDB, a serverless function can use the AWS SDK for JavaScript to read and write data.

The steps for creating a serverless function that reads and writes data to a database include:

- Choose a Serverless Platform and Database: Select a serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) and a database (e.g., PostgreSQL, MongoDB, DynamoDB).

- Set up the Database: Create the database, configure access credentials, and define the necessary schema (if applicable).

- Create the Serverless Function: Create a new function in the serverless platform. Choose a programming language (e.g., Node.js, Python, Java).

- Install Database SDK or Driver: Install the appropriate SDK or driver for the chosen database within the function’s environment. For example, in Node.js, use `npm install pg` for PostgreSQL or `npm install mongodb` for MongoDB.

- Configure Database Connection: Configure the database connection details (host, port, username, password, database name) within the function’s code or environment variables.

- Implement Data Access Logic: Write the code to read and write data to the database using the SDK or driver. Handle connection management (e.g., connection pooling) and error handling.

- Implement API Gateway Integration (Optional): If the function needs to be accessed via an API, integrate it with an API gateway (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway). Define API endpoints and request/response mappings.

- Test the Function: Deploy the function and test it thoroughly to ensure it can read and write data correctly. Monitor function logs and performance metrics.

- Implement Security Measures: Implement appropriate security measures, such as securing database credentials using environment variables or secrets management services.

- Implement Error Handling: Include robust error handling to gracefully manage database connection errors, query execution errors, and data validation errors. Log these errors for debugging and monitoring.

For example, a Python function interacting with DynamoDB might use the following code:

```python
import boto3
import json

dynamodb = boto3.resource('dynamodb')
table_name = 'your_table_name'
table = dynamodb.Table(table_name)

def lambda_handler(event, context):
    try:
        # Read data from the table
        response = table.get_item(Key={'id': 'your_item_id'})
        item = response.get('Item')
        if item:
            print(f"Retrieved item: {item}")

        # Write data to the table
        table.put_item(Item={'id': 'your_item_id', 'data': 'some_data'})
        print("Successfully wrote item to the table")

        return {
            'statusCode': 200,
            'body': json.dumps({'message': 'Data read and written successfully'})
        }
    except Exception as e:
        print(f"Error: {e}")
        return {
            'statusCode': 500,
            'body': json.dumps({'error': str(e)})
        }
```
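The connection configuration and connection management steps listed earlier can be sketched as follows. This is a minimal illustration under stated assumptions, not a production pattern: Python's built-in `sqlite3` module stands in for a real database driver, and the environment variable `DB_PATH` and the table `items` are hypothetical names. It shows a connection created once at module scope and reused across warm invocations, rather than reopened on every call.

```python
import json
import os
import sqlite3

# Assumption: connection settings come from environment variables.
# DB_PATH is a hypothetical name; ":memory:" keeps the sketch self-contained.
DB_PATH = os.environ.get("DB_PATH", ":memory:")

# Cached at module scope so warm invocations reuse the same connection.
_connection = None


def get_connection():
    """Create the connection on first use, then return the cached one."""
    global _connection
    if _connection is None:
        _connection = sqlite3.connect(DB_PATH)
        _connection.execute(
            "CREATE TABLE IF NOT EXISTS items (id TEXT PRIMARY KEY, data TEXT)"
        )
    return _connection


def handler(event, context=None):
    """Write one item and read it back, returning an API-style response."""
    try:
        conn = get_connection()
        # Parameterized queries keep caller input out of the SQL text.
        conn.execute(
            "INSERT OR REPLACE INTO items (id, data) VALUES (?, ?)",
            (event["id"], event["data"]),
        )
        conn.commit()
        row = conn.execute(
            "SELECT id, data FROM items WHERE id = ?", (event["id"],)
        ).fetchone()
        return {"statusCode": 200, "body": json.dumps({"id": row[0], "data": row[1]})}
    except KeyError as exc:
        # A missing field is a client error, not a database failure.
        return {"statusCode": 400, "body": json.dumps({"error": f"missing field {exc}"})}
    except sqlite3.Error as exc:
        print(f"Error: {exc}")
        return {"statusCode": 500, "body": json.dumps({"error": str(exc)})}
```

With a networked database, the same module-level caching idea applies, although the driver's own pooling and reconnect behavior would need to be taken into account.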

Event-Driven Architecture for Seamless Integration

Serverless computing lends itself exceptionally well to event-driven architectures, offering a powerful paradigm for integrating disparate systems. This approach leverages events – significant occurrences within a system – to trigger actions in a decoupled and scalable manner. This allows for building resilient, responsive applications that react in real-time to changes across various components.

Principles of Event-Driven Architectures in Serverless Context

Event-driven architectures, within the serverless framework, are built upon the core concept of asynchronous communication. Components communicate by emitting and reacting to events, rather than directly calling each other. This fundamental shift from synchronous to asynchronous interactions provides several advantages, including increased scalability, improved fault tolerance, and enhanced decoupling of services. Serverless functions act as event consumers, triggered by specific event sources.

- Event Producers: These are the components that generate events. They could be anything from a database, a message queue, or a third-party service. They are responsible for emitting events to the event bus or directly to event consumers.

- Event Bus (Optional): This serves as a central hub for events, routing them to the appropriate consumers. Services like Amazon EventBridge or Azure Event Grid provide robust event bus capabilities, allowing for filtering, transformation, and routing of events based on various criteria. This facilitates decoupling, allowing event producers and consumers to operate independently.

- Event Consumers (Serverless Functions): These are the serverless functions that are triggered by specific events. They perform actions based on the event data, such as updating a database, sending notifications, or initiating other processes.

- Events: Events represent significant occurrences in the system. They contain information about the event that has occurred. They can be simple notifications (e.g., “file uploaded”) or contain detailed data about the event (e.g., “order created with details”).
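The relationship between producers, the event bus, consumers, and events can be illustrated with a small in-memory sketch. It is only a model of the idea: the `EventBus` class, the `order.created` event type, and the two consumer functions are hypothetical, and delivery here is synchronous, whereas a managed event bus delivers asynchronously.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Consumer = Callable[[Event], None]


class EventBus:
    """Routes each event to the consumers subscribed to its type."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Consumer]] = defaultdict(list)

    def subscribe(self, event_type: str, consumer: Consumer) -> None:
        self._subscribers[event_type].append(consumer)

    def publish(self, event: Event) -> int:
        """Deliver the event and return how many consumers received it."""
        consumers = self._subscribers.get(event["type"], [])
        for consumer in consumers:
            consumer(event)
        return len(consumers)


# Event consumers: stand-ins for serverless functions.
notifications: List[str] = []
audit_log: List[Event] = []


def send_notification(event: Event) -> None:
    notifications.append(f"Order {event['detail']['order_id']} created")


def record_audit_entry(event: Event) -> None:
    audit_log.append(event)


bus = EventBus()
bus.subscribe("order.created", send_notification)
bus.subscribe("order.created", record_audit_entry)

# Event producer: emits an event without knowing who consumes it.
delivered = bus.publish({"type": "order.created", "detail": {"order_id": 42}})
```

The producer only knows the event type, so a third consumer could be subscribed later without touching the producing code, which is the decoupling described above.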

Examples of Event Sources and Triggered Serverless Functions

Event sources act as the catalysts that initiate serverless function executions. They can be diverse, encompassing various systems and services. The selection of event sources depends on the specific integration requirements and the nature of the existing systems.

- Message Queues: Message queues, such as Amazon SQS or Azure Service Bus, act as event sources. When a message is added to the queue, a serverless function is triggered to process the message. This is commonly used for asynchronous task processing, such as order processing or image resizing.

- Database Changes: Changes in a database, like inserts, updates, or deletes, can trigger serverless functions. Services like AWS Lambda with DynamoDB streams or Azure Functions with Cosmos DB triggers allow developers to react to data changes in real-time. This is beneficial for tasks like updating search indexes or sending notifications when data is modified.

- Object Storage Events: Events generated by object storage services, such as Amazon S3 or Azure Blob Storage, can trigger serverless functions. For example, uploading a new image to a storage bucket can trigger a function to generate thumbnails or perform image analysis.

- API Gateway Events: API Gateway can act as an event source. When an API request is received, it can trigger a serverless function to handle the request. This is a fundamental building block for creating serverless APIs.

- Scheduled Events: Scheduled events, such as those provided by Amazon EventBridge (formerly CloudWatch Events) schedules or Azure Functions timer triggers, can trigger serverless functions on a predetermined schedule. This is useful for tasks like running batch jobs, generating reports, or performing regular maintenance tasks.
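As one concrete case from the list above, a function triggered by object storage uploads might look like the following sketch. It assumes the event follows the S3 notification shape (a `Records` list with `eventName` and an `s3` section); the bucket name, object key, and `sample_event` are hypothetical, and the real work is replaced by a print statement.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def extract_uploaded_objects(event: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return the bucket and key of each object-created record in the event."""
    uploads = []
    for record in event.get("Records", []):
        # Skip records for other event types (e.g. deletions).
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        s3 = record["s3"]
        uploads.append({
            "bucket": s3["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 notifications.
            "key": unquote_plus(s3["object"]["key"]),
        })
    return uploads


def lambda_handler(event, context=None):
    uploads = extract_uploaded_objects(event)
    for upload in uploads:
        # Placeholder for real work such as thumbnail generation.
        print(f"Would process s3://{upload['bucket']}/{upload['key']}")
    return {"processed": len(uploads)}


# Hypothetical sample event, trimmed to the fields the handler reads.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-uploads"},
                "object": {"key": "photos/summer+trip.png"},
            },
        }
    ]
}
```

Keeping the event parsing in its own function makes it straightforward to unit test the handler with hand-written events like `sample_event`.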

Illustrative Diagram of Event Flow in an Event-Driven Serverless System

The diagram below illustrates the flow of events through an event-driven serverless system. It depicts a simplified scenario involving a user uploading an image, triggering several serverless functions to perform different operations.

Diagram Description:

The diagram presents a flow chart illustrating the interaction of various components in an event-driven serverless system. At the top, a user uploads an image to an “Object Storage” service (e.g., AWS S3). This upload generates an “Image Uploaded” event. This event is then routed to an “Event Bus” (e.g., Amazon EventBridge), which is a central component that facilitates the routing of events.

The event bus filters and routes this event to three different serverless functions, each performing a specific task.

- Function 1: This function, triggered by the event, is responsible for generating thumbnails of the uploaded image. It receives the event, processes the image, and stores the thumbnails back into the object storage.

- Function 2: This function analyzes the image for content (e.g., using image recognition services). It receives the event, processes the image, and stores the analysis results in a “Database” (e.g., DynamoDB).

- Function 3: This function updates a search index with the metadata of the uploaded image. It receives the event and writes the relevant information to the “Search Index” (e.g., Elasticsearch).

The diagram highlights the decoupling of services. Each function is independent and responsible for a specific task, and the event bus facilitates the routing of events, ensuring that the different components of the system are loosely coupled.

Authentication and Authorization in Serverless

Securing serverless applications requires robust authentication and authorization mechanisms to protect sensitive data and functionalities. Unlike traditional applications where authentication and authorization are often handled centrally, serverless architectures necessitate a distributed approach. This section explores best practices for implementing secure authentication and authorization in serverless environments, focusing on JWTs and role-based access control.

Security Best Practices for Authentication and Authorization in Serverless Applications

Implementing strong security practices is paramount in serverless applications. Serverless architectures, by their nature, introduce new attack vectors that must be addressed. Adhering to established security principles is crucial for maintaining data integrity and preventing unauthorized access.

- Use of Secure Tokens: Employ secure tokens, such as JWTs, for authentication and authorization. These tokens should be signed with a strong cryptographic algorithm (e.g., HMAC-SHA256 or RSA-SHA256) to prevent tampering.

- Minimize Token Lifespan: Reduce the lifespan of access tokens to mitigate the impact of compromised tokens. Implement refresh tokens for extended user sessions, and store them securely.

- Implement Multi-Factor Authentication (MFA): Integrate MFA to enhance security by requiring users to provide multiple forms of verification, such as a password and a one-time code.

- Secure Storage of Credentials: Avoid storing sensitive credentials directly in the code or configuration files. Utilize secrets management services (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager) to securely store and manage credentials.

- Input Validation and Sanitization: Validate and sanitize all user inputs to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Least Privilege Principle: Grant functions and services only the minimum necessary permissions to perform their tasks. This reduces the potential damage from compromised components.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities in the application.

- Monitor and Log Activity: Implement comprehensive logging and monitoring to detect suspicious activities and security breaches. Analyze logs regularly for potential threats.

- Protect API Endpoints: Secure API endpoints using authentication and authorization mechanisms. Implement rate limiting and throttling to prevent abuse and denial-of-service attacks.

- Keep Dependencies Updated: Regularly update all dependencies, including libraries and frameworks, to patch security vulnerabilities.

Examples of Using JWT (JSON Web Tokens) for Authentication

JWTs are a widely adopted standard for securely transmitting information between parties as a JSON object. In serverless environments, JWTs are commonly used to authenticate users and authorize access to resources.

A typical JWT consists of three parts, separated by dots:

Header.Payload.Signature

Header: Contains metadata about the token, such as the token type (e.g., JWT) and the signing algorithm used (e.g., HMAC-SHA256, RSA-SHA256).

Payload: Contains the claims, which are pieces of information about the user or the token itself. These claims can include user identifiers, roles, permissions, and expiration times. It is important to note that the payload is encoded, not encrypted, and therefore should not contain sensitive information.

Signature: Is created by signing the encoded header and payload with a secret key (or a private key, for asymmetric algorithms such as RSA-SHA256) using the specified algorithm. The signature ensures the integrity of the token and verifies that it has not been tampered with.
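To make the three-part structure concrete, the sketch below builds and checks an HS256 token using only the Python standard library. It is an illustration of the format, not a replacement for a JWT library: it does not validate expiry or the `alg` header, and the secret and claims are hypothetical values.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Decode base64url, restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(payload: dict, secret: bytes) -> str:
    """Build Header.Payload.Signature using HMAC-SHA256 (HS256)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"


def signature_is_valid(token: str, secret: bytes) -> bool:
    """Recompute the signature and compare in constant time."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
    except ValueError:
        return False  # Not exactly three dot-separated parts
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("utf-8"), hashlib.sha256
    ).digest()
    return hmac.compare_digest(
        b64url(expected).encode("ascii"), signature_b64.encode("utf-8")
    )


token = sign_token({"user_id": "u-123", "roles": ["editor"]}, b"demo-secret")
# Anyone holding the token can read the payload without the secret.
readable_payload = json.loads(b64url_decode(token.split(".")[1]))
```

Note that `readable_payload` is recovered without the secret, which is why the payload should not carry sensitive information.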

Example Implementation using AWS Lambda and API Gateway:

1. User Authentication (Lambda Function):

A Lambda function receives user credentials (username and password). If the credentials are valid, the function generates a JWT containing user information (e.g., user ID, roles) and returns it to the client.

2. API Gateway Configuration:

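API Gateway is configured to validate the JWT present in the Authorization header of incoming requests, typically through an authorizer. The signature is verified with the key that matches the signing algorithm: the shared secret for HMAC-based algorithms such as HS256, or the issuer’s public key for asymmetric algorithms such as RS256. If the token is valid, API Gateway passes the user’s identity information (extracted from the JWT payload) to the backend Lambda function.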

3. Backend Lambda Function Authorization:

The backend Lambda function receives the user’s identity information from API Gateway. It uses this information to authorize access to resources. For example, the function can check if the user has the necessary permissions to access a specific resource based on their roles.

4. Client-Side Implementation:

The client (e.g., a web application or mobile app) stores the JWT locally (e.g., in local storage or a cookie). The client includes the JWT in the Authorization header of subsequent requests to the API Gateway.
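The API Gateway validation step can be sketched as a Lambda authorizer. This assumes a token-based authorizer on a REST API, where the event carries `authorizationToken` and `methodArn`; the `make_authorizer` helper and the `fake_verify` function are hypothetical, and `fake_verify` merely stands in for real signature and expiry checks.

```python
from typing import Callable, Dict, Optional

# A verifier takes the raw token and returns its claims, or None if invalid.
Verifier = Callable[[str], Optional[Dict[str, str]]]


def build_policy(principal_id: str, effect: str, resource: str,
                 context: Optional[Dict[str, str]] = None) -> dict:
    """Build the response shape a Lambda authorizer returns to API Gateway."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [{
                "Action": "execute-api:Invoke",
                "Effect": effect,
                "Resource": resource,
            }],
        },
        # Context values are passed through to the backend function.
        "context": context or {},
    }


def make_authorizer(verify: Verifier):
    """Create an authorizer handler around an injected token verifier."""
    def authorizer(event, context=None):
        header = event.get("authorizationToken", "")
        scheme, _, token = header.partition(" ")
        claims = verify(token) if scheme.lower() == "bearer" and token else None
        if claims is None:
            return build_policy("anonymous", "Deny", event["methodArn"])
        return build_policy(
            claims["user_id"], "Allow", event["methodArn"],
            {"roles": claims.get("roles", "")},
        )
    return authorizer


# Hypothetical verifier for demonstration only; a real one would check the
# JWT signature and expiry before returning any claims.
def fake_verify(token: str) -> Optional[Dict[str, str]]:
    return {"user_id": "u-123", "roles": "editor"} if token == "valid-token" else None


authorize = make_authorizer(fake_verify)
```

Injecting the verifier keeps the policy-building logic testable without a signing key, and lets the same authorizer wrap whichever JWT library the project uses.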

Code Example (Simplified Python Lambda function to generate a JWT):

```python
import jwt
import time

def generate_jwt(user_id, roles, secret_key, expiry_time_seconds):
    payload = {
        'user_id': user_id,
        'roles': roles,
        'exp': time.time() + expiry_time_seconds
    }
    token = jwt.encode(payload, secret_key, algorithm='HS256')
    return token
```

Code Example (Simplified Python Lambda function to verify a JWT):

```python
import jwt

def verify_jwt(token, secret_key):
    try:
        decoded_payload = jwt.decode(token, secret_key, algorithms=['HS256'])
        return decoded_payload
    except jwt.ExpiredSignatureError:
        return None  # Token has expired
    except jwt.InvalidTokenError:
        return None  # Invalid token
```

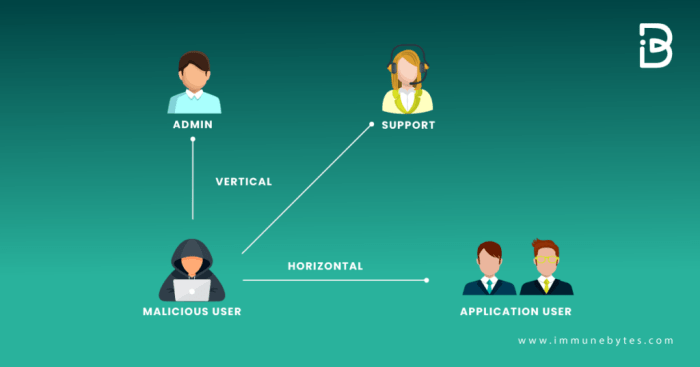

Detailing How to Implement Role-Based Access Control in a Serverless Environment

Role-Based Access Control (RBAC) is a crucial security mechanism for serverless applications. RBAC simplifies access management by assigning users to roles, and then associating permissions with those roles. This approach allows for efficient management of access rights, making it easier to control who can access which resources and functionalities within the application.

Steps for implementing RBAC in a serverless environment:

- Define Roles: Identify the roles needed for the application (e.g., administrator, editor, viewer). Define the specific permissions associated with each role.

- Assign Users to Roles: Determine which users should be assigned to each role. This can be managed through a user management system or identity provider.

- Implement Authorization Logic in Lambda Functions: Implement authorization logic within the Lambda functions. When a request is received, the function should:

  - Retrieve the user’s roles from the JWT payload or user context.

  - Check if the user’s roles have the necessary permissions to access the requested resource or perform the desired action.

  - If the user has the required permissions, allow access; otherwise, deny access and return an appropriate error response.

- Utilize API Gateway for Authorization: Configure API Gateway to validate JWTs and pass user information (including roles) to the backend Lambda functions. API Gateway can also be used to perform basic authorization checks based on the user’s roles before invoking the backend functions.

- Use Infrastructure-as-Code (IaC) for Role and Policy Management: Leverage IaC tools (e.g., AWS CloudFormation, Terraform) to define and manage roles, permissions, and policies. This ensures consistency and simplifies the deployment and management of access control configurations.

- Regularly Review and Update Roles and Permissions: Regularly review and update the defined roles and permissions to align with evolving business requirements and security best practices.

Example Scenario:

Consider an application that manages blog posts. The application has the following roles:

- Administrator: Can create, read, update, and delete all blog posts.

- Editor: Can create, read, and update blog posts.

- Viewer: Can only read blog posts.

Implementation within a Lambda Function:

The Lambda function that handles the update of blog posts would check the user’s roles from the JWT payload. If the user has the “Editor” or “Administrator” role, the function allows the update operation; otherwise, it returns an “Unauthorized” error.

Example Python Code Snippet (Authorization in Lambda Function):

```python
import json

def lambda_handler(event, context):
    # Retrieve user roles from the JWT payload (assuming it's in the event)
    try:
        # Example claim value: 'administrator,editor'
        user_roles = event['requestContext']['authorizer']['claims']['roles'].split(',')
    except (KeyError, TypeError):
        return {
            'statusCode': 403,
            'body': json.dumps({'message': 'Unauthorized'})
        }

    # Check if the user has the necessary permissions
    if 'administrator' in user_roles or 'editor' in user_roles:
        # Allow the update operation
        # ...(code to update the blog post)
        return {
            'statusCode': 200,
            'body': json.dumps({'message': 'Blog post updated successfully'})
        }
    else:
        # Deny access
        return {
            'statusCode': 403,
            'body': json.dumps({'message': 'Unauthorized'})
        }
```
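A hard-coded role check works for one operation, but the role definitions from the blog scenario can also be kept in a single permission table. The sketch below is one possible shape for that; the `ROLE_PERMISSIONS` mapping, the action names, and both helper functions are hypothetical.

```python
from typing import Dict, Iterable, List, Set

# Permissions per role, mirroring the blog post scenario.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "administrator": {"create", "read", "update", "delete"},
    "editor": {"create", "read", "update"},
    "viewer": {"read"},
}


def parse_roles(claim: str) -> List[str]:
    """Split a comma-separated roles claim such as 'administrator,editor'."""
    return [role for role in claim.split(",") if role.strip()]


def is_allowed(roles: Iterable[str], action: str) -> bool:
    """Return True if any of the user's roles grants the action."""
    return any(
        action in ROLE_PERMISSIONS.get(role.strip().lower(), set())
        for role in roles
    )
```

For example, `is_allowed(parse_roles("editor"), "update")` is true while `is_allowed(parse_roles("editor"), "delete")` is false, and unknown roles grant nothing. Adding a role then means editing the table rather than every handler.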

Monitoring and Logging Serverless Applications

Effective monitoring and logging are critical for the operational success of serverless applications. Without these capabilities, debugging, performance optimization, and security incident response become significantly more challenging. Serverless architectures, by their very nature, are distributed and ephemeral, making traditional monitoring approaches less effective. This section details the tools, techniques, and best practices for establishing robust monitoring and logging within a serverless environment.

Importance of Monitoring and Logging

Monitoring and logging are fundamental for maintaining the health and performance of any application, and this is even more crucial in serverless environments. Serverless applications are often composed of numerous independent functions that execute in response to events. The dynamic nature of these applications requires a proactive approach to understand their behavior.

Monitoring provides real-time insights into application performance, allowing for early detection of issues such as slow response times, high error rates, or resource exhaustion. Logging captures detailed information about events, transactions, and errors, providing valuable context for debugging and troubleshooting. Both monitoring and logging are essential for:

- Performance Optimization: Identifying bottlenecks and areas for improvement in function execution and resource utilization.

- Error Detection and Debugging: Quickly diagnosing and resolving issues by analyzing logs and traces.

- Security Auditing: Tracking user activity, detecting suspicious behavior, and ensuring compliance with security policies.

- Cost Optimization: Monitoring resource consumption to identify opportunities for cost savings.

- Capacity Planning: Understanding application load and scaling requirements to ensure optimal performance.

Tools and Techniques for Monitoring Serverless Functions

Several tools and techniques are available for monitoring serverless functions, offering different levels of detail and granularity. The selection of tools should align with the specific requirements of the application, considering factors like scale, complexity, and budget.

- Metrics: Metrics provide aggregated data about function performance, such as invocation count, execution time, error rates, and resource consumption. They are essential for tracking overall application health and identifying trends.

  - Cloud Provider Native Metrics: Cloud providers like AWS, Azure, and Google Cloud offer built-in metrics dashboards and APIs for monitoring serverless functions. These metrics typically include:

    - Invocation Count: The number of times a function is executed.

    - Execution Time: The duration of function execution.

    - Error Rate: The percentage of function invocations that result in errors.

    - Memory Usage: The amount of memory consumed by the function.

    - Concurrent Executions: The number of function instances running simultaneously.

  - Custom Metrics: Developers can define custom metrics to track application-specific behavior. This can involve instrumenting code to emit metrics related to business logic, such as the number of successful transactions or the time taken to process a specific request.

  - Metric Aggregation: It is often necessary to aggregate metrics across multiple functions or regions to gain a comprehensive view of application performance. This can be achieved using cloud provider tools or third-party monitoring solutions.

- Tracing: Tracing provides detailed information about the execution flow of a request through the application, including the time spent in each function and the interactions between different services. Tracing is crucial for understanding complex distributed systems and identifying performance bottlenecks.

  - Distributed Tracing: Distributed tracing tools, such as AWS X-Ray, Azure Application Insights, and Google Cloud Trace, allow for the tracing of requests across multiple services and functions. These tools provide visualizations of the request flow and detailed performance data for each component.

  - Span Creation: Developers instrument their code to create spans, which represent individual units of work within a request. Each span contains information about the operation being performed, the start and end times, and any relevant metadata.

  - Context Propagation: Tracing systems use context propagation to pass trace identifiers between services and functions. This ensures that all related spans are linked together, providing a complete picture of the request flow.

- Alerting: Setting up alerts based on metrics and logs is crucial for proactively identifying and responding to issues. Alerts can be triggered when certain thresholds are exceeded, such as high error rates or slow response times.

  - Threshold-Based Alerts: Alerts can be configured to trigger when a metric exceeds a predefined threshold. For example, an alert can be sent when the error rate of a function exceeds 5%.

  - Anomaly Detection: Some monitoring tools offer anomaly detection capabilities, which can automatically identify unusual patterns in metrics and trigger alerts.

  - Notification Channels: Alerts can be sent through various notification channels, such as email, SMS, or integration with incident management systems.
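Returning to the tracing concepts above, span creation and context propagation can be sketched in a few lines. This is a toy tracer written for illustration, not the API of any tracing product: the `span` helper, the `finished_spans` list, and the `x-trace-id` and `x-parent-span-id` header names are all hypothetical, and real tracing systems define their own header formats.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from typing import Dict, List, Optional

# The active span for the current execution context, if any.
_current_span = ContextVar("current_span", default=None)
finished_spans: List[Dict] = []


@contextmanager
def span(name: str, trace_id: Optional[str] = None):
    """Record one unit of work, linked to its parent span if there is one."""
    parent = _current_span.get()
    record = {
        "name": name,
        # Reuse the parent's trace ID, then an incoming one, else start a trace.
        "trace_id": parent["trace_id"] if parent else (trace_id or uuid.uuid4().hex),
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": parent["span_id"] if parent else None,
        "start": time.time(),
    }
    token = _current_span.set(record)
    try:
        yield record
    finally:
        record["end"] = time.time()
        _current_span.reset(token)
        finished_spans.append(record)


def outgoing_headers() -> Dict[str, str]:
    """Context propagation: headers to send along to the next service."""
    current = _current_span.get()
    if current is None:
        return {}
    return {"x-trace-id": current["trace_id"], "x-parent-span-id": current["span_id"]}


with span("handle_request") as root:
    with span("query_database"):
        pass
    headers = outgoing_headers()
```

A downstream function would read the trace ID from the incoming headers and pass it as `trace_id` when opening its own root span, so that spans from both functions share one trace.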

Setting Up Logging and Error Tracking

Implementing effective logging and error tracking is a critical step in building resilient serverless applications. The process involves configuring logging libraries, defining log formats, and integrating with centralized logging and error tracking services.

- Logging Configuration: The first step is to configure logging within the serverless functions. This typically involves using logging libraries or frameworks specific to the programming language being used.

  - Log Levels: Choose appropriate log levels (e.g., DEBUG, INFO, WARN, ERROR) to control the verbosity of the logs.

  - Log Formatting: Use a consistent log format, such as JSON, to facilitate parsing and analysis.

  - Structured Logging: Employ structured logging to include relevant metadata, such as timestamps, function names, request IDs, and user IDs.

- Centralized Logging: It is essential to aggregate logs from all functions into a centralized logging system. This enables searching, filtering, and analysis of logs across the entire application.

  - Cloud Provider Logging Services: Cloud providers offer logging services, such as AWS CloudWatch Logs, Azure Monitor Logs, and Google Cloud Logging, which can automatically collect and store logs from serverless functions.

  - Third-Party Logging Services: Alternatively, third-party logging services, such as Splunk, Datadog, and Sumo Logic, can be used to provide more advanced features and integrations.

  - Log Aggregation and Forwarding: Logs can be forwarded to a centralized logging system using various mechanisms, such as log shippers, agents, or custom integrations.

- Error Tracking: Implementing error tracking is crucial for capturing and analyzing errors that occur within serverless functions. This involves integrating with error tracking services to automatically collect error details and provide insights into the root causes.

  - Error Tracking Services: Services like Sentry, Bugsnag, and Rollbar provide error tracking capabilities, including automatic error collection, grouping, and reporting.

  - Exception Handling: Implement proper exception handling within functions to catch and log errors.

  - Contextual Information: Include contextual information in error reports, such as request IDs, user IDs, and relevant data to aid in debugging.
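The logging configuration, structured logging, and exception handling points above can be combined in a short sketch using Python's standard `logging` module. The `JsonFormatter` class, the `orders` logger name, the `process_order` function name, and the event fields are hypothetical, and an in-memory stream is used only so the example is self-contained.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            "function": getattr(record, "function_name", None),
            "request_id": getattr(record, "request_id", None),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


# An in-memory stream keeps the sketch self-contained; in a deployed function
# the handler would normally write to stdout for the platform to collect.
log_stream = io.StringIO()
stream_handler = logging.StreamHandler(log_stream)
stream_handler.setFormatter(JsonFormatter())

logger = logging.getLogger("orders")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(stream_handler)
logger.propagate = False


def handler(event, context=None):
    # Contextual fields attached to every log line of this invocation.
    extra = {"function_name": "process_order", "request_id": event.get("request_id")}
    logger.info("Processing order", extra=extra)
    try:
        total = event["quantity"] * event["unit_price"]
        return {"statusCode": 200, "body": json.dumps({"total": total})}
    except KeyError:
        # logger.exception logs at ERROR level and includes the traceback.
        logger.exception("Order is missing a required field", extra=extra)
        return {"statusCode": 400, "body": json.dumps({"error": "invalid order"})}
```

Because every line is a JSON object carrying the request ID, a centralized logging system can filter all entries for a single invocation without parsing free-form text.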

Versioning and Deployment Strategies

Versioning and deployment strategies are critical for managing the lifecycle of serverless applications. They enable developers to release new features, fix bugs, and roll back to previous versions without disrupting the user experience. Effective versioning ensures that changes are tracked, and deployments are performed in a controlled and repeatable manner.

Versioning Serverless Functions

Versioning serverless functions involves managing multiple iterations of the function’s code and configuration. This allows for experimentation, rollback capabilities, and the ability to support different versions of the function simultaneously.

There are several common versioning strategies for serverless functions:

- Function Aliases: A function alias is a named pointer to a particular version of the function, so a URL or trigger can target the alias rather than a fixed version. For example, an alias “production” might point to the latest stable version, while an alias “staging” points to a version being tested. AWS Lambda supports aliases directly; other platforms offer comparable mechanisms, such as deployment slots in Azure Functions. Repointing the alias allows for easy switching between versions without changing the function’s name.

- Function Versions: Serverless platforms can keep immutable versions of a function, which makes it possible to roll back to any previous version. For instance, in AWS Lambda, publishing a version creates an immutable, numbered snapshot of the code and configuration, while the $LATEST qualifier refers to the most recent unpublished state.

- Code Branching and Tagging: Version control systems (like Git) can be used to manage code versions. Branches can represent different features or releases, and tags can mark specific releases. When deploying, a specific tag or branch can be deployed to a serverless environment.

- Semantic Versioning (SemVer): Adopting SemVer (Major.Minor.Patch) can help to communicate the nature of changes. For example, a patch release addresses bug fixes, a minor release adds new features in a backward-compatible manner, and a major release introduces breaking changes.
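The SemVer rules above reduce to a small amount of arithmetic, sketched here. The `parse_version` and `bump` helpers are hypothetical names, and the sketch deliberately ignores pre-release and build metadata suffixes.

```python
from typing import Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """Parse 'Major.Minor.Patch' into a tuple of integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def bump(version: str, change: str) -> str:
    """Return the next version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = parse_version(version)
    if change == "major":  # breaking change
        return f"{major + 1}.0.0"
    if change == "minor":  # backward-compatible feature
        return f"{major}.{minor + 1}.0"
    if change == "patch":  # bug fix
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"Unknown change type: {change}")
```

Comparing parsed tuples rather than strings matters: `parse_version("1.10.0") > parse_version("1.9.9")` holds, whereas a plain string comparison would order them the other way.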

Deploying Serverless Applications using CI/CD Pipelines

Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the process of building, testing, and deploying serverless applications. They streamline the release process, reduce errors, and enable faster iteration.

The typical steps involved in deploying serverless applications using CI/CD pipelines are:

- Code Commit: Developers commit code changes to a version control system (e.g., Git).

- Trigger: The CI/CD pipeline is triggered automatically by a code commit, a scheduled event, or manually.

- Build: The build process involves compiling code, packaging dependencies, and preparing the function for deployment. This might include running a build command such as `npm install` or `mvn package`.

- Testing: Automated tests (unit tests, integration tests, end-to-end tests) are executed to ensure the code functions as expected. Tests can include validating API endpoints, database interactions, and other functionalities.

- Deployment: The serverless function and associated resources (e.g., API Gateway, databases) are deployed to the target environment (e.g., staging, production). This might involve using a tool like AWS CloudFormation, Serverless Framework, or Terraform to define the infrastructure as code.

- Verification: After deployment, automated tests (often different from those in the testing phase) and/or manual testing may be performed to verify the successful deployment and functionality.

- Monitoring and Logging: The deployed application is monitored for performance, errors, and other relevant metrics. Logs are collected to aid in troubleshooting.

Deployment Patterns

Various deployment patterns can be used to minimize risk and ensure smooth transitions when deploying serverless applications.

Here are some common deployment patterns:

- Blue/Green Deployment: This strategy involves deploying a new version (green) alongside the existing version (blue). Once the green environment is tested and verified, traffic is switched from the blue environment to the green environment. This provides a rollback mechanism if issues are discovered in the green environment. The switchover can be done using API Gateway configurations or traffic shaping tools.

- Canary Deployment: A canary deployment involves gradually rolling out a new version to a small subset of users. This allows for testing the new version in production with real traffic before rolling it out to all users. If no issues are detected, the rollout is expanded. This pattern is often used in conjunction with API Gateway features that allow for traffic shaping. For example, 1% of the traffic goes to the new version, then 5%, then 20%, etc.

- Rolling Deployment: In a rolling deployment, the new version is deployed to a subset of instances or functions, one at a time or in batches. This allows for gradual rollout and reduces the risk of a complete outage. If issues are detected, the deployment can be paused or rolled back.

- Immutable Deployment: With immutable deployments, the existing version is never modified in place. Instead, a new version is deployed, and traffic is switched over. This simplifies rollback and reduces the risk of configuration drift. This pattern is common in serverless architectures where each function version is essentially immutable.
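The traffic-shifting logic behind a canary rollout can be sketched as follows. In practice the platform usually performs the weighting; this is only a model of the idea. The first three stages come from the example percentages above, while the later stages, the 5% error-rate limit, and all function names are assumptions.

```python
import hashlib
from typing import List

# Rollout stages as percentages of traffic sent to the new version.
# 1, 5, and 20 follow the example above; 50 and 100 are assumed.
STAGES: List[int] = [1, 5, 20, 50, 100]


def bucket_for(request_id: str) -> int:
    """Map a request ID to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_version(request_id: str, canary_percent: int) -> str:
    """Send the request to the canary if its bucket falls under the weight."""
    return "canary" if bucket_for(request_id) < canary_percent else "stable"


def next_stage(current_percent: int, error_rate: float,
               max_error_rate: float = 0.05) -> int:
    """Advance to the next stage, or roll back to 0% if errors are too high."""
    if error_rate > max_error_rate:
        return 0
    later = [stage for stage in STAGES if stage > current_percent]
    return later[0] if later else current_percent
```

Hashing the request ID instead of drawing a random number keeps routing stable, so the same caller stays on the same version while the weight is unchanged.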

Testing Serverless Integrations

Testing serverless integrations is crucial for ensuring the reliability, scalability, and maintainability of serverless applications that interact with existing systems. A robust testing strategy allows developers to identify and address potential issues early in the development lifecycle, minimizing the risk of production failures and ensuring a smooth user experience. Thorough testing also helps to validate the correct functioning of serverless functions, API gateways, database interactions, and event-driven workflows.

Testing Strategies for Serverless Applications

Several testing strategies are employed to comprehensively test serverless applications. These strategies vary in scope and focus, from verifying individual function logic to validating end-to-end system behavior.

- Unit Tests: Unit tests focus on isolating and verifying the functionality of individual serverless functions. They examine the function’s input, processing logic, and output. Unit tests help ensure that each function behaves as expected in isolation, making them essential for catching bugs early in the development process.

- Integration Tests: Integration tests verify the interaction between multiple components, such as a serverless function and a database, or a serverless function and an API gateway. They validate that different parts of the system work together correctly. Integration tests are vital for ensuring that the components of a serverless application integrate seamlessly with existing systems.

- End-to-End (E2E) Tests: End-to-end tests simulate real user scenarios, testing the entire application flow from the user’s perspective. These tests often involve interacting with the API gateway, serverless functions, databases, and any other integrated services. E2E tests provide confidence that the entire system functions as intended, validating the complete integration with existing systems.

- Contract Tests: Contract tests ensure that the interfaces between different components of the serverless application and existing systems adhere to predefined contracts. This is particularly important when integrating with external APIs or services. Contract tests help to prevent breaking changes and ensure compatibility between the serverless application and the integrated systems.

- Performance Tests: Performance tests evaluate the performance of serverless functions and the overall system under various load conditions. They measure metrics like response time, throughput, and resource utilization. Performance tests help identify bottlenecks and ensure that the serverless application can handle the expected traffic.

- Security Tests: Security tests assess the security posture of the serverless application, including aspects like authentication, authorization, and data protection. These tests help identify vulnerabilities and ensure that the serverless application is secure against common threats.
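Of the strategies above, contract tests are perhaps the least familiar, so a small illustration may help. The sketch below treats a contract as a map of required fields to expected types. The `userContract` fields and the `checkContract` helper are hypothetical names invented for this example; real projects typically use a schema validator or a dedicated contract-testing tool instead.

```javascript
// Hypothetical contract for a user-registration response: field name -> expected typeof.
const userContract = {
  id: 'string',
  username: 'string',
  createdAt: 'string',
};

// Returns a list of violations; an empty list means the payload honours the contract.
function checkContract(contract, payload) {
  const violations = [];
  for (const [field, expectedType] of Object.entries(contract)) {
    if (!(field in payload)) {
      violations.push(`missing field: ${field}`);
    } else if (typeof payload[field] !== expectedType) {
      violations.push(`wrong type for ${field}: expected ${expectedType}, got ${typeof payload[field]}`);
    }
  }
  return violations;
}

// A conforming payload. Extra fields are tolerated, so a provider can add data safely.
const ok = checkContract(userContract, {
  id: 'u-1',
  username: 'testuser',
  createdAt: '2024-01-01T00:00:00Z',
  plan: 'free',
});

// A breaking change: `id` became a number and `createdAt` was dropped.
const broken = checkContract(userContract, { id: 1, username: 'testuser' });

console.log(ok);
console.log(broken);
```

Running a check like this against both the serverless function and the legacy system it talks to can surface an incompatible change before either side is deployed.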

Writing Unit Tests for Serverless Functions

Writing effective unit tests is crucial for verifying the functionality of individual serverless functions. These tests should be designed to cover various scenarios, including different input values, edge cases, and error conditions. The use of mocking frameworks is often necessary to isolate the function being tested and simulate interactions with external dependencies.

For example, consider a serverless function written in JavaScript that processes user registration data and stores it in a database. The function might receive a JSON payload containing user details. A unit test for this function would involve the following steps:

- Arrange: Prepare the test environment by setting up mock objects or stubs for any external dependencies, such as the database client. Define the input data that will be passed to the function.

- Act: Invoke the serverless function with the prepared input data.

- Assert: Verify the function’s output or behavior against the expected results. This may involve checking the returned data, verifying that the database was updated correctly, or asserting that any error conditions were handled appropriately.

Example of a unit test in JavaScript using Jest (a popular testing framework):

```javascript
// Import the function to be tested
const registerUser = require('./registerUser');

// Mock the database client (e.g., using Jest's mock functions)
jest.mock('database-client', () => ({
  createUser: jest.fn(),
}));

const databaseClient = require('database-client');

describe('registerUser function', () => {
  // Clear recorded calls between tests so assertions stay independent
  beforeEach(() => {
    jest.clearAllMocks();
  });

  it('should successfully register a user', async () => {
    // Arrange
    const event = {
      body: JSON.stringify({
        username: 'testuser',
        email: '[email protected]',
        password: 'password123',
      }),
    };
    databaseClient.createUser.mockResolvedValue({ success: true }); // Mock successful database write

    // Act
    const result = await registerUser.handler(event);

    // Assert
    expect(result.statusCode).toBe(200);
    expect(JSON.parse(result.body)).toEqual({ message: 'User registered successfully' });
    expect(databaseClient.createUser).toHaveBeenCalledWith({
      username: 'testuser',
      email: '[email protected]',
      password: expect.any(String), // Password should be hashed, so only check that a string was passed
    });
  });

  it('should handle errors during registration', async () => {
    // Arrange
    const event = {
      body: JSON.stringify({
        username: 'testuser',
        email: '[email protected]',
        password: 'password123',
      }),
    };
    databaseClient.createUser.mockRejectedValue(new Error('Database error')); // Mock database error

    // Act
    const result = await registerUser.handler(event);

    // Assert
    expect(result.statusCode).toBe(500);
    expect(JSON.parse(result.body)).toEqual({ error: 'Internal server error' });
  });
});
```

In this example:

- The `registerUser` function is imported, and the database client is mocked using `jest.mock`.

- The first test case simulates a successful user registration. The mock `createUser` function is set to resolve successfully. The test asserts that the function returns a 200 status code and a success message, and that the `createUser` function was called with the correct data.

- The second test case simulates an error during registration. The mock `createUser` function is set to reject with an error. The test asserts that the function returns a 500 status code and an error message.
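For context, the handler these tests exercise might look roughly like the sketch below. The source does not show `registerUser`, so everything here is an assumption: the `createHandler` factory, the injected `db` and `hashPassword` dependencies, and the response messages, which are chosen to match the test expectations. Passing dependencies in as arguments is an alternative to `jest.mock` that keeps the handler testable with plain hand-written fakes.

```javascript
// Hypothetical factory: dependencies are injected so tests can pass in fakes.
function createHandler({ db, hashPassword }) {
  return async function handler(event) {
    try {
      const { username, email, password } = JSON.parse(event.body);
      const hashed = await hashPassword(password);
      await db.createUser({ username, email, password: hashed });
      return { statusCode: 200, body: JSON.stringify({ message: 'User registered successfully' }) };
    } catch (err) {
      // Do not leak internal error details to the caller.
      return { statusCode: 500, body: JSON.stringify({ error: 'Internal server error' }) };
    }
  };
}

// Hand-rolled fakes standing in for the real database client and password hasher.
const calls = [];
const fakeDb = {
  createUser: async (user) => {
    calls.push(user);
    return { success: true };
  },
};
const failingDb = {
  createUser: async () => {
    throw new Error('Database error');
  },
};
const fakeHash = async (plain) => `hashed:${plain}`; // placeholder, not a real hash

const event = {
  body: JSON.stringify({ username: 'testuser', email: 'user@example.com', password: 'password123' }),
};

const successResult = createHandler({ db: fakeDb, hashPassword: fakeHash })(event);
const failureResult = createHandler({ db: failingDb, hashPassword: fakeHash })(event);

successResult.then((res) => console.log('success path:', res.statusCode));
failureResult.then((res) => console.log('failure path:', res.statusCode));
```

A production handler would also validate the payload and return a 4xx response for malformed input rather than treating every failure as a server error.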

Testing an API Endpoint Interacting with a Serverless Function

Testing an API endpoint that interacts with a serverless function involves verifying the entire request-response cycle. This can be achieved by using a testing framework that can send HTTP requests to the API endpoint and assert the response.

Here’s a code snippet demonstrating how to test an API endpoint using Jest and the `supertest` library (a popular library for testing HTTP requests):

```javascript
const request = require('supertest');
const app = require('./app'); // Assuming your API is defined in app.js

// Mock the serverless function (if needed)
jest.mock('./registerUser', () => ({
  handler: jest.fn().mockImplementation((event) => {
    // Mock the behavior of the serverless function
    if (JSON.parse(event.body).username === 'testuser') {
      return Promise.resolve({ statusCode: 200, body: JSON.stringify({ message: 'User registered' }) });
    } else {
      return Promise.resolve({ statusCode: 400, body: JSON.stringify({ error: 'Invalid user data' }) });
    }
  }),
}));

describe('API Endpoint Tests', () => {
  it('should successfully register a user via the API', async () => {
    const response = await request(app) // Assuming your API is exposed via Express or similar
      .post('/register') // Replace with your API endpoint path
      .send({ username: 'testuser', email: '[email protected]', password: 'password123' });

    expect(response.statusCode).toBe(200);
    expect(response.body).toEqual({ message: 'User registered' });
  });

  it('should handle invalid user data', async () => {
    const response = await request(app)
      .post('/register')
      .send({ username: 'invalid', email: 'invalid', password: 'invalid' });

    expect(response.statusCode).toBe(400);
    expect(response.body).toEqual({ error: 'Invalid user data' });
  });
});
```

In this example:

- `supertest` is used to make HTTP requests to the API endpoint.

- The `app` variable represents your API application (e.g., an Express app).

- The serverless function `registerUser` is mocked. This allows the test to control the response of the serverless function without actually invoking it. This is crucial for isolating the API endpoint test and ensuring its reliability. The mock implementation checks for the username and returns a success or failure response accordingly.

- The test cases send POST requests to the `/register` endpoint (replace with your actual endpoint).

- The tests assert the status code and the response body to verify the API’s behavior.

This approach allows you to test the complete API interaction, including the routing, request parsing, and response handling, all while simulating the behavior of the underlying serverless function. By mocking the serverless function, you can test the API endpoint’s logic without needing to deploy or run the actual serverless function.
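The `app` module in the snippet is assumed rather than shown. One way such a module could bridge an HTTP framework and a Lambda-style handler is a pair of small translation helpers, sketched below. The names `toLambdaEvent` and `applyLambdaResult` are hypothetical, and the event is a simplified version of a proxy-style event containing only the fields the mocked handler reads.

```javascript
// Convert an already-parsed HTTP request (Express-like: method, path, headers, body)
// into a simplified Lambda-proxy-style event. The body is re-serialised because
// the handler expects a JSON string in `event.body`.
function toLambdaEvent(req) {
  return {
    httpMethod: req.method,
    path: req.path,
    headers: req.headers || {},
    body: req.body === undefined ? null : JSON.stringify(req.body),
  };
}

// Translate the handler's { statusCode, body } result into a status code and
// parsed JSON payload that the HTTP layer can send back.
function applyLambdaResult(result) {
  return {
    status: result.statusCode,
    json: result.body ? JSON.parse(result.body) : null,
  };
}

const event = toLambdaEvent({
  method: 'POST',
  path: '/register',
  headers: {},
  body: { username: 'testuser' },
});
const response = applyLambdaResult({
  statusCode: 200,
  body: JSON.stringify({ message: 'User registered' }),
});

console.log(event.body, response.status, response.json.message);
```

In a route definition, the handler would be awaited between the two calls: build the event, invoke `registerUser.handler`, then write `status` and `json` to the HTTP response. Keeping the translation in pure functions means it can be unit tested without starting a server.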

Real-World Use Cases and Examples

Integrating serverless technologies with existing systems has proven to be a powerful strategy for modernizing applications, improving scalability, and reducing operational costs. Successful implementations demonstrate the versatility and adaptability of serverless architectures across diverse industries and use cases. This section provides examples of successful serverless integrations, details a case study of a legacy system migration, and shares lessons learned from real-world projects.

Successful Serverless Integration Examples

Numerous organizations have successfully leveraged serverless to enhance their existing systems. These examples illustrate the practical application of serverless principles across various domains.

- E-commerce Platform: An e-commerce company integrated serverless functions to handle order processing, payment gateway interactions, and inventory updates. This allowed the company to scale its backend infrastructure dynamically, especially during peak shopping seasons, without over-provisioning resources. The implementation utilized API Gateway to expose serverless functions as APIs, while databases like DynamoDB provided scalable data storage. This resulted in a 30% reduction in infrastructure costs and a significant improvement in application responsiveness.

- Media Streaming Service: A media streaming service employed serverless for video transcoding and content delivery. Serverless functions were triggered by object uploads to cloud storage, initiating transcoding workflows that converted videos into various formats and resolutions. This automated the process, reduced the need for dedicated transcoding servers, and enabled on-demand scaling based on the volume of content being processed. The service saw a 40% improvement in video processing time and a 25% reduction in operational overhead.

- Financial Services Application: A financial institution integrated serverless to build a real-time fraud detection system. Serverless functions were triggered by financial transactions, analyzing them against predefined rules and machine learning models. This real-time analysis allowed for immediate identification of potentially fraudulent activities. The system was able to process thousands of transactions per second, and the cost of the fraud detection infrastructure was reduced by 60% compared to the previous on-premise solution.

- Healthcare Data Processing: A healthcare provider utilized serverless to process and analyze patient data. Serverless functions were used to extract data from various sources, transform it into a standardized format, and store it in a data lake. This enabled efficient data analysis and reporting. The system reduced data processing time by 50% and improved data accessibility for healthcare professionals.
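The upload-triggered pattern in the media streaming example can be illustrated with a minimal handler. This sketch assumes Amazon S3 event notifications, since the example does not name the storage service, and `startTranscodeJob` is a hypothetical stand-in for whatever starts the transcoding workflow.

```javascript
// Extract bucket and key from each record of an S3 event notification.
// Object keys arrive URL-encoded, with spaces encoded as '+'.
function extractUploads(event) {
  return (event.Records || []).map((record) => ({
    bucket: record.s3.bucket.name,
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' ')),
  }));
}

// Hypothetical handler factory: `startTranscodeJob` is injected so the
// trigger logic can be exercised without any cloud resources.
function createUploadHandler(startTranscodeJob) {
  return async function handler(event) {
    const uploads = extractUploads(event);
    await Promise.all(uploads.map((upload) => startTranscodeJob(upload)));
    return { started: uploads.length };
  };
}

// Sample event trimmed to the fields used above; bucket and key are made up.
const sampleEvent = {
  Records: [
    { s3: { bucket: { name: 'raw-videos' }, object: { key: 'uploads/my+holiday+clip.mp4' } } },
  ],
};

const uploads = extractUploads(sampleEvent);
console.log(uploads);

const startedJobs = [];
const handlerResult = createUploadHandler(async (upload) => {
  startedJobs.push(upload.key);
})(sampleEvent);
```

Because each upload produces its own invocation, processing capacity follows the volume of incoming content without a pool of dedicated transcoding servers.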

Case Study: Legacy System Migration to Serverless

Migrating a legacy system to a serverless architecture can be a complex but rewarding undertaking. This case study follows a retailer that moved one component of its legacy point-of-sale system to serverless.

A large retail company, operating for over 30 years, faced the challenge of modernizing its aging point-of-sale (POS) system. The existing system, built on a monolithic architecture, was difficult to scale, maintain, and update. The company decided to migrate portions of the system to a serverless architecture, starting with its loyalty program.

- Assessment and Planning: The first step involved a thorough assessment of the existing system and the identification of components suitable for serverless migration. The loyalty program was chosen due to its independent nature and lower dependency on core POS functionalities. The company’s team defined the scope, migration strategy, and key performance indicators (KPIs) for the project.

- Technology Selection: The team selected AWS as the cloud provider and utilized various services including AWS Lambda for serverless functions, API Gateway for API management, DynamoDB for data storage, and SQS for asynchronous communication. This choice was driven by the company’s existing familiarity with AWS and the comprehensive serverless offerings available.

- Incremental Migration: Instead of a “big bang” approach, the team adopted an incremental migration strategy. This allowed them to gradually migrate functionalities while minimizing disruption to the existing system. The loyalty program’s features were broken down into smaller, manageable units, which were then migrated to serverless functions.

- Integration and Testing: The serverless functions were integrated with the existing POS system using APIs. Rigorous testing was conducted to ensure seamless communication and data consistency between the legacy and serverless components. The company employed both automated and manual testing methodologies to validate the migrated functionalities.

- Deployment and Monitoring: The serverless components were deployed using CI/CD pipelines. The company implemented comprehensive monitoring and logging to track the performance and health of the serverless applications. Metrics such as function invocation duration, error rates, and resource utilization were closely monitored.
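An incremental migration of this kind is often implemented as a routing layer that sends a growing share of traffic to the new components while everything else continues to reach the legacy system. The sketch below is a generic illustration and not the retailer's actual implementation; the feature names, the `rolloutPercent` table, and the helper functions are all hypothetical.

```javascript
// Percentage of customers routed to the serverless implementation, per feature.
const rolloutPercent = {
  'loyalty.balance': 100, // fully migrated
  'loyalty.redeem': 25, // canary: roughly a quarter of customers
};

// Deterministic bucket in [0, 100) so a given customer always takes the same path.
function bucketFor(customerId) {
  let hash = 0;
  for (const ch of String(customerId)) {
    hash = (hash * 31 + ch.charCodeAt(0)) % 100000;
  }
  return hash % 100;
}

// Features missing from the table have not been migrated and stay on the legacy system.
function chooseBackend(feature, customerId) {
  const percent = rolloutPercent[feature] ?? 0;
  return bucketFor(customerId) < percent ? 'serverless' : 'legacy';
}

console.log(chooseBackend('loyalty.balance', 'c-42'));
console.log(chooseBackend('pos.checkout', 'c-42'));
```

Raising a feature's percentage moves more customers across, and setting it back to 0 returns all traffic to the legacy path, which keeps each migration step reversible.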

Results: The migration of the loyalty program to serverless resulted in significant benefits. The company experienced a 70% reduction in infrastructure costs associated with the loyalty program. The system became more scalable and resilient, with improved response times and availability. The development team was able to iterate faster and deploy new features more frequently. Furthermore, the migration provided valuable experience and knowledge, enabling the company to plan for the future migration of other parts of the POS system.

Lessons Learned from Real-World Serverless Integration Projects

Successful serverless integrations often require careful planning, execution, and continuous improvement. The following lessons learned are based on real-world experiences.

- Embrace a Microservices Approach: Breaking down complex systems into smaller, independent microservices facilitates serverless adoption. This allows for easier deployment, scaling, and maintenance. Each microservice should be designed to perform a specific task, adhering to the single responsibility principle.

- Prioritize Security: Security is paramount in serverless architectures. Implement robust authentication and authorization mechanisms, encrypt data at rest and in transit, and regularly audit security configurations. Consider using serverless-specific security tools and services to enhance protection.

- Implement Effective Monitoring and Logging: Comprehensive monitoring and logging are essential for understanding the behavior and performance of serverless applications. Collect detailed metrics on function invocations, errors, and resource utilization. Utilize centralized logging systems to analyze logs and identify issues quickly.