Navigating the ephemeral landscape of AWS Lambda functions necessitates a deep understanding of temporary file management. Lambda’s stateless nature, where execution environments are created and destroyed frequently, introduces unique challenges and opportunities for utilizing temporary storage. This discussion delves into the practical aspects of leveraging temporary files within the `/tmp` directory, a critical component for intermediate data processing and enhancing Lambda function efficiency.

This exploration covers the intricacies of the `/tmp` directory, including its storage limitations, file lifecycle, and security considerations. We will dissect code examples across multiple programming languages, examine best practices for efficient resource utilization, and investigate common use cases where temporary files significantly streamline operations. Furthermore, the document presents methods for handling large files and integrating with other AWS services like S3, along with detailed guidance on monitoring, logging, error handling, and troubleshooting to ensure robust and reliable Lambda function deployments.

Introduction to Temporary Files in Lambda

The utilization of temporary files within the AWS Lambda execution environment is a crucial aspect of serverless function design, enabling efficient intermediate data handling and facilitating complex processing workflows. Understanding the necessity and limitations of these files is paramount for optimizing Lambda function performance and resource utilization. These files provide a temporary storage mechanism for data that does not need to persist beyond a single function invocation, offering significant advantages over more persistent storage solutions in specific use cases.

Lambda functions operate within a containerized environment, and this environment offers a temporary filesystem accessible to the function code. This temporary storage is vital for intermediate data, enabling operations that would be cumbersome or inefficient using alternative methods.

Ephemeral Nature of the Lambda File System

The Lambda file system, specifically the `/tmp` directory, is ephemeral, meaning its contents are not guaranteed to persist across function invocations. This is a critical characteristic that dictates how temporary files should be managed. Files in `/tmp` live as long as the execution environment that holds them. If Lambda reuses that environment for a later invocation, the files are still present; once the environment is shut down, the directory and its contents are gone.

The size of the `/tmp` directory is limited. The default is 512 MB, and it can be configured up to 10,240 MB per function. This constraint needs careful consideration during design, because exceeding the allocated space leads to write errors and function failure.

Benefits of Using Temporary Files for Intermediate Data Processing

Temporary files provide several advantages in the context of Lambda function development. They enable efficient storage of intermediate data, which can significantly improve performance in specific scenarios.

For instance, consider an image processing Lambda function that resizes an image.

- Intermediate Data Storage: The original image might be read from an S3 bucket, processed (e.g., resized), and then the processed image uploaded back to S3. The temporary file system can be used to store the resized image before it is uploaded. This avoids holding the entire image in memory, which can be a significant memory saving, especially for large images.

- Data Transformation: Functions often involve complex data transformations. Temporary files can act as staging areas for these transformations, allowing the function to break down the process into manageable steps. For example, a function that parses a large CSV file might write intermediate results to a temporary file before further processing.

- Caching: Temporary files can be used for simple caching mechanisms. If a function needs to access data that doesn’t change frequently, it can store the data in a temporary file during the first invocation and reuse it in subsequent invocations within the same execution environment. This is only suitable for data that can be rebuilt on demand, because the cache disappears whenever Lambda replaces the execution environment.

Temporary files are especially beneficial in cases where data needs to be accessed sequentially or in chunks, such as processing large text files or streaming media. They facilitate efficient memory management by allowing functions to work with data in smaller, more manageable portions, rather than loading the entire dataset into memory at once. This can lead to significant performance improvements, especially for functions with limited memory allocations.

Understanding the `/tmp` Directory

The `/tmp` directory within AWS Lambda functions serves as a crucial space for temporary file storage during function execution. Understanding its characteristics and limitations is paramount for efficient and reliable Lambda function design. This section delves into the specifics of `/tmp`, outlining its storage capacity, file lifespan, and operational implications.

Characteristics and Limitations of the `/tmp` Directory

The `/tmp` directory in Lambda functions provides a temporary storage location within the execution environment. It’s designed for transient data that doesn’t need to persist beyond a single function invocation.

- Ephemeral Nature: Files stored in `/tmp` exist only inside the execution environment that created them and disappear when Lambda shuts that environment down. This makes `/tmp` unsuitable for long-term storage.

- Shared Resource: The `/tmp` directory is shared by every invocation that runs in the same execution environment. This can be leveraged to carry data from one invocation to the next (e.g., caching), but files left behind by earlier invocations can collide with new ones or fill the disk, so it requires careful management.

- Limited Capacity: The storage capacity of `/tmp` is limited. Exceeding this limit will result in errors.

- File System Type: The file system type used for `/tmp` is typically a volatile storage solution, optimized for speed over persistence.

Maximum Storage Capacity Available in `/tmp` for Different Lambda Configurations

The storage capacity available in the `/tmp` directory is a critical factor when designing Lambda functions that utilize temporary files. The capacity is set in the function configuration and cannot be changed while the function is running.

The default capacity of `/tmp` is 512 MB. It can be raised through the function’s ephemeral storage setting to a maximum of 10,240 MB, independently of the allocated memory, and storage configured above the default is billed. Exceeding the configured limit results in a “No space left on device” error during function execution.
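Whatever the limit is for a given function, the code can check how much room is left before it writes a large file. The following is a minimal sketch using only the standard library; the helper names `tmp_space_report` and `has_room_for`, and the 10% reserve, are illustrative assumptions rather than anything Lambda prescribes.

```python
import shutil


def tmp_space_report(path="/tmp"):
    """Return total, used, and free bytes for the filesystem that holds `path`."""
    usage = shutil.disk_usage(path)
    return {"total": usage.total, "used": usage.used, "free": usage.free}


def has_room_for(num_bytes, path="/tmp", reserve_fraction=0.10):
    """Return True if `num_bytes` fits while keeping `reserve_fraction` of the volume free."""
    usage = shutil.disk_usage(path)
    # Keep a safety margin (an assumed 10% by default) so other writes do not fail.
    reserve = int(usage.total * reserve_fraction)
    return num_bytes <= usage.free - reserve
```

A handler could call `has_room_for(expected_size)` before downloading an object and fall back to streaming when the answer is `False`.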

Lifespan of Files Stored in `/tmp` Concerning Lambda Invocation and Cold Starts

The lifespan of files within `/tmp` is directly tied to the Lambda function’s lifecycle. The following details the behavior concerning function invocations and cold starts.

- Invocation Lifecycle: During a single function invocation, files created in `/tmp` are accessible and modifiable. The files are removed when the Lambda execution environment is disposed of, which may happen at any point after the invocation completes.

- Cold Starts: When a Lambda function experiences a cold start, a new execution environment is provisioned. This means a fresh `/tmp` directory is created, devoid of any pre-existing files from previous invocations. Cold starts erase the `/tmp` directory contents.

- Warm Starts: In warm starts (where an existing execution environment is reused), the `/tmp` directory’s contents might persist from the previous invocation. However, this behavior is *not* guaranteed. The Lambda service can choose to reuse or recycle execution environments. The function code should not rely on the presence of data in `/tmp` from previous invocations, even during warm starts.
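One way to observe this behavior is to drop a marker file and check for it at the start of each invocation. The sketch below is illustrative only: the marker path and the function name `is_reused_environment` are assumptions, and the result should be treated as a hint for logging, never as something the function depends on.

```python
import os

MARKER = "/tmp/.environment_marker"  # hypothetical marker file name


def is_reused_environment(marker_path=MARKER):
    """Return True if a marker left by an earlier invocation exists; create it otherwise."""
    if os.path.exists(marker_path):
        return True
    # First invocation in this environment: leave a marker for later ones.
    with open(marker_path, "w") as f:
        f.write("initialized")
    return False
```

Logging the return value on every invocation shows how often an environment is reused, while the function logic still rebuilds anything it needs when the marker is absent.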

Writing and Reading Temporary Files

Efficient handling of temporary files within AWS Lambda functions is crucial for various operations, including data processing, caching, and intermediate storage. The `/tmp` directory provides a readily accessible space for these purposes. This section details the mechanisms for writing to and reading from this directory using common programming languages.

Writing Data to `/tmp`

Writing data to the `/tmp` directory involves opening a file, writing the desired content, and then closing the file. The specific implementation varies slightly depending on the programming language, but the fundamental process remains the same. Below are examples demonstrating this process in Python, Node.js, and Java.

Python Example:

The Python example utilizes the built-in `open()` function to create a file object. The `write()` method is then used to write the data, and the `with` statement closes the file on exit, which ensures the data is flushed to disk.

```python
import os

def lambda_handler(event, context):
    file_path = "/tmp/example.txt"
    try:
        # The with statement closes the file even if a write fails
        with open(file_path, 'w') as f:
            f.write("This is a test string written to /tmp.\n")
            f.write(f"The current working directory is: {os.getcwd()}\n")
        return {
            'statusCode': 200,
            'body': f'Successfully wrote to {file_path}'
        }
    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error writing to file: {str(e)}'
        }
```

Node.js Example:

Node.js uses the `fs` (file system) module to interact with the file system. The `writeFile()` function is used to write data asynchronously. Error handling is essential, and the example includes a basic implementation.

```javascript
const fs = require('fs');
const path = require('path');

exports.handler = async (event) => {
    const filePath = path.join('/tmp', 'example.txt'); // Use path.join for cross-platform compatibility
    const data = 'This is a test string written to /tmp.\n';
    try {
        await fs.promises.writeFile(filePath, data);
        return { statusCode: 200, body: `Successfully wrote to ${filePath}` };
    } catch (err) {
        console.error('Error writing to file:', err);
        return { statusCode: 500, body: `Error writing to file: ${err}` };
    }
};
```

Java Example:

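Java utilizes the `java.io` package for file operations. The example demonstrates using `FileWriter` and `BufferedWriter` for efficient writing. Error handling is incorporated using a `try-catch` block.

```java
import java.io.*;
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;

public class WriteTempFile implements RequestHandler<Object, String> {
    @Override
    public String handleRequest(Object input, Context context) {
        String filePath = "/tmp/example.txt";
        // try-with-resources closes the writer even if a write fails
        try (BufferedWriter writer = new BufferedWriter(new FileWriter(filePath))) {
            writer.write("This is a test string written to /tmp.\n");
            return "Successfully wrote to " + filePath;
        } catch (IOException e) {
            return "Error writing to file: " + e.getMessage();
        }
    }
}
```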

Reading Data from `/tmp`

Reading data from `/tmp` mirrors the writing process in reverse. The file must be opened, the data read, and the file closed. The specific methods and error handling differ by language, but the core functionality remains the same. Below are examples illustrating reading from a file in Python, Node.js, and Java.

Python Example:

The Python example opens the file in read mode (`'r'`), reads the entire content using `read()`, and closes the file. Error handling is included to manage potential `FileNotFoundError` exceptions.

```python
def lambda_handler(event, context):
    file_path = "/tmp/example.txt"
    try:
        with open(file_path, 'r') as f:
            content = f.read()
        return {
            'statusCode': 200,
            'body': f'File content:\n{content}'
        }
    except FileNotFoundError:
        return {
            'statusCode': 404,
            'body': f'File not found: {file_path}'
        }
    except Exception as e:
        return {
            'statusCode': 500,
            'body': f'Error reading file: {str(e)}'
        }
```

Node.js Example:

The Node.js example uses the `fs.readFile()` function to read the file asynchronously. The example includes error handling for cases where the file might not exist or be inaccessible.

```javascript
const fs = require('fs').promises;
const path = require('path');

exports.handler = async (event) => {
    const filePath = path.join('/tmp', 'example.txt');
    try {
        const data = await fs.readFile(filePath, 'utf8');
        return { statusCode: 200, body: `File content:\n${data}` };
    } catch (err) {
        console.error('Error reading file:', err);
        return { statusCode: 500, body: `Error reading file: ${err.message}` };
    }
};
```

Java Example:

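The Java example uses `BufferedReader` to read the file line by line, demonstrating a common pattern for handling potentially large files. The code also incorporates exception handling for file not found or other `IOExceptions`.

```java
import java.io.*;
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;

public class ReadTempFile implements RequestHandler<Object, String> {
    @Override
    public String handleRequest(Object input, Context context) {
        String filePath = "/tmp/example.txt";
        StringBuilder content = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(new FileReader(filePath))) {
            String line;
            // Read one line at a time instead of loading the whole file at once
            while ((line = reader.readLine()) != null) {
                content.append(line).append("\n");
            }
            return content.toString();
        } catch (FileNotFoundException e) {
            return "File not found: " + filePath;
        } catch (IOException e) {
            return "Error reading file: " + e.getMessage();
        }
    }
}
```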

File Naming Conventions within `/tmp`

Adopting a consistent and unique file naming convention within the `/tmp` directory is essential to prevent conflicts, especially because files left by an earlier invocation may still be present when an execution environment is reused. This ensures data integrity and avoids unintended overwrites or data loss. The following bullet points outline effective file naming strategies:

- Use Unique Prefixes: Prefix filenames with a unique identifier, such as the function name, the Lambda invocation ID (provided in the `context` object), or a UUID (Universally Unique Identifier) generated at the start of the function execution. This minimizes the chance of name collisions. For example: `myfunction-invocationId-data.txt` or `uuid-processed_data.csv`.

- Include Timestamps: Incorporate timestamps (e.g., Unix timestamps or ISO 8601 formatted dates and times) into the filenames to provide a chronological order and allow for easy identification of file creation times. This can be helpful for debugging and data analysis. For example: `data-2024-01-20T10:30:00Z.log`.

- Employ File Extensions: Use appropriate file extensions (e.g., `.txt`, `.csv`, `.json`, `.log`) to indicate the file type. This helps in the correct interpretation and processing of the data.

- Consider File Size: Be mindful of the potential file size and the available disk space (512 MB by default). Avoid generating extremely large temporary files that could exhaust the available space. If dealing with large datasets, consider breaking the data into smaller chunks or using a more suitable storage solution like Amazon S3.

- Clean Up Files: Implement mechanisms to delete temporary files after they are no longer needed. This can be done within the Lambda function itself or through a separate cleanup process. Leaving unused files can lead to disk space exhaustion and potential performance issues.
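The naming strategies above can be combined in a small helper. This is a sketch under stated assumptions: `unique_tmp_path` is a hypothetical name, and the compact timestamp format is one choice among many. Inside a handler, the request ID is available as `context.aws_request_id`.

```python
import os
import uuid
from datetime import datetime, timezone


def unique_tmp_path(prefix, request_id=None, extension=".txt", directory="/tmp"):
    """Build a path like /tmp/<prefix>-<request id or UUID>-<UTC timestamp><extension>."""
    # Fall back to a random UUID when no request ID is supplied.
    token = request_id or uuid.uuid4().hex
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return os.path.join(directory, f"{prefix}-{token}-{stamp}{extension}")
```

For example, `unique_tmp_path("myfunction", context.aws_request_id, ".csv")` yields a name that is unique per invocation and easy to trace back in logs.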

Best Practices for Managing Temporary Files

Efficient management of temporary files within AWS Lambda functions is crucial for both performance and cost optimization. Neglecting this aspect can lead to exceeding the `/tmp` storage limit (512 MB by default), resulting in function failures and potentially impacting overall application availability. This section details strategies for effectively managing temporary file storage, ensuring timely cleanup, and designing a robust file lifecycle management system.

Strategies for Preventing `/tmp` Limit Exceedance

Preventing the `/tmp` limit from being exceeded requires proactive measures to minimize temporary file usage and optimize storage practices. This involves careful planning and implementation across the entire lifecycle of a Lambda function.

- Minimize File Size: The most direct approach is to reduce the size of files written to `/tmp`. Consider compressing data before writing it, using efficient data formats (e.g., optimized for space), and selectively storing only the necessary information. For instance, instead of storing a full image, generate and store thumbnails.

- Optimize Data Processing: Review the data processing logic within the Lambda function. Can operations be performed in memory rather than writing intermediate files? Streamlining data processing can significantly reduce the need for temporary storage. For example, if a function processes a large CSV file, consider using streaming libraries to process it row by row, avoiding the need to load the entire file into memory or write it to disk.

- Chunking and Streaming: For large files, break them into smaller chunks and process each chunk independently. This limits the amount of data stored in `/tmp` at any given time. Using streaming techniques, where data is processed as it arrives, further reduces temporary storage needs.

- Monitor Disk Usage: Implement monitoring and logging to track `/tmp` usage. The standard Lambda metrics in CloudWatch do not include `/tmp` utilization, so measure it inside the function and emit it as a log line or custom metric. Set up alarms to trigger alerts when usage approaches the limit. This proactive monitoring allows for timely intervention and prevents unexpected failures.

- Leverage External Storage: Consider using external storage services like Amazon S3 for storing large files. While this introduces additional latency due to network I/O, it provides a scalable and cost-effective solution for storing data that exceeds the `/tmp` limit. The Lambda function can then interact with these files through the S3 API.
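As an illustration of the first strategy, intermediate records can be written compressed and one at a time, so neither memory nor `/tmp` holds the uncompressed data set. The sketch below assumes JSON-serializable records; the function names are hypothetical.

```python
import gzip
import json
import os


def write_json_lines_gz(records, path):
    """Stream records into a gzip-compressed JSON Lines file; return its size in bytes."""
    with gzip.open(path, "wt", encoding="utf-8") as f:
        for record in records:
            # One record per line keeps memory use independent of the record count.
            f.write(json.dumps(record) + "\n")
    return os.path.getsize(path)


def read_json_lines_gz(path):
    """Yield records back from the compressed file, one line at a time."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            yield json.loads(line)
```

How much space this saves depends on the data; repetitive text such as logs or CSV-like records usually compresses well, while already-compressed media does not.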

Methods for Cleaning Up Temporary Files

Effective cleanup of temporary files is critical for preventing disk space exhaustion and ensuring optimal resource utilization. The timing and method of cleanup directly impact function performance and reliability.

- Immediate Deletion: Delete temporary files as soon as they are no longer needed. This can be achieved by explicitly deleting the files using the appropriate file system APIs immediately after they are processed.

- `try…finally` Blocks: Use `try…finally` blocks to ensure files are deleted, even if errors occur during processing. The `finally` block guarantees that the cleanup code is executed regardless of whether an exception is thrown.

- Event-Driven Cleanup: Implement a cleanup mechanism that is triggered by specific events. For example, if a file is used to store intermediate results for a specific request, the cleanup can be triggered after the request is completed. This could involve adding a cleanup step to the function’s final response processing.

- Function Expiration: The `/tmp` directory is discarded when Lambda shuts down an execution environment, not when the environment is reused. The timing of that shutdown is not guaranteed, so rely on explicit deletion strategies. If an environment is not reused (due to scaling or infrequent use), its temporary files are eventually removed along with it.

- Monitoring and Alerting for Uncleaned Files: Implement logging to track file creation and deletion events. Analyze logs to identify instances where files are not being deleted as expected. Set up alerts to notify you of potential cleanup issues.
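The immediate-deletion and `try…finally` approaches can be sketched with the standard `tempfile` module. The function names below are illustrative, and the "processing" step is reduced to measuring or concatenating bytes so the example stays self-contained.

```python
import os
import tempfile


def size_via_scratch_file(data, directory="/tmp"):
    """Write `data` to a scratch file, measure it, and always delete the file."""
    fd, path = tempfile.mkstemp(dir=directory, suffix=".bin")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        return os.path.getsize(path), path
    finally:
        # Runs on success and on failure, so the file never outlives the call.
        if os.path.exists(path):
            os.remove(path)


def join_via_scratch_dir(parts, directory="/tmp"):
    """Stage a list of byte strings in a scratch directory that is removed on exit."""
    with tempfile.TemporaryDirectory(dir=directory) as scratch:
        for index, part in enumerate(parts):
            with open(os.path.join(scratch, f"part-{index}.bin"), "wb") as f:
                f.write(part)
        joined = b""
        for index in range(len(parts)):
            with open(os.path.join(scratch, f"part-{index}.bin"), "rb") as f:
                joined += f.read()
    # The directory and everything in it are gone once the with block ends.
    return joined, scratch
```

`TemporaryDirectory` is convenient when a request produces several intermediate files, since a single context manager removes all of them.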

File Lifecycle Management System Design

A well-designed file lifecycle management system ensures that temporary files are created, accessed, and deleted in a controlled and efficient manner. This system should incorporate several key considerations.

- File Naming Conventions: Implement a consistent file naming convention to facilitate organization, identification, and deletion. Consider including a unique identifier (e.g., a UUID or a request ID) in the filename to avoid naming conflicts and easily trace files back to their originating request. For example, `temp_data_requestID_timestamp.csv`.

- Access Control: Determine the necessary access permissions for temporary files. Restrict access to only the Lambda function’s execution role to prevent unauthorized access or modification. Use appropriate file system permissions to ensure data security.

- File Expiration Policies: Define a clear expiration policy for temporary files. This policy should specify how long a file should persist before it is automatically deleted. The expiration time should be based on the file’s purpose and the expected lifespan of the data it contains. For instance, files holding intermediate results for a single request should be deleted immediately after the request completes.

- Error Handling: Implement robust error handling to manage potential failures during file operations. Handle exceptions that might occur during file creation, reading, writing, and deletion. Ensure that the function gracefully handles errors and attempts to recover or clean up appropriately.

- Logging and Auditing: Log all file-related operations, including file creation, access, modification, and deletion. This logging provides valuable information for debugging, auditing, and performance analysis. Include timestamps, filenames, and any relevant context (e.g., request ID) in the logs.

- Testing and Validation: Thoroughly test the file lifecycle management system to ensure that files are created, accessed, and deleted as expected. Conduct tests to verify that the system correctly handles different scenarios, including error conditions and concurrent access.
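A few of these considerations (access control, expiration, error handling, and logging) can be sketched together. Everything named here is an assumption made for illustration: the `temp_data_` prefix follows the naming example above, the five-minute expiry is arbitrary, and `create_private_file` and `sweep_expired` are hypothetical helpers.

```python
import logging
import os
import time

logger = logging.getLogger(__name__)


def create_private_file(path):
    """Create `path` with owner-only permissions; raise FileExistsError if it exists."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    logger.info("created %s", path)
    return os.fdopen(fd, "w", encoding="utf-8")


def sweep_expired(directory="/tmp", prefix="temp_data_", max_age_seconds=300, now=None):
    """Delete `prefix*` files older than `max_age_seconds`; return the deleted names."""
    now = time.time() if now is None else now
    deleted = []
    with os.scandir(directory) as entries:
        candidates = [e for e in entries if e.is_file() and e.name.startswith(prefix)]
    for entry in candidates:
        try:
            if now - entry.stat().st_mtime > max_age_seconds:
                os.remove(entry.path)
                deleted.append(entry.name)
                logger.info("deleted expired file %s", entry.path)
        except OSError as exc:
            # A failed delete is logged and skipped so one bad file does not stop the sweep.
            logger.warning("could not remove %s: %s", entry.path, exc)
    return sorted(deleted)
```

Calling `sweep_expired()` at the start of the handler clears leftovers from earlier invocations in a reused environment before new files are written.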

Common Use Cases for Temporary Files in Lambda

Temporary files in AWS Lambda functions provide a crucial mechanism for handling intermediate data during function execution. These files offer a convenient way to store, process, and manage data that doesn’t need to persist beyond a single invocation. This capability is especially valuable given Lambda’s stateless nature and ephemeral execution environment. Utilizing temporary files effectively enhances performance and allows complex tasks to be accomplished efficiently within the function’s execution time constraints.

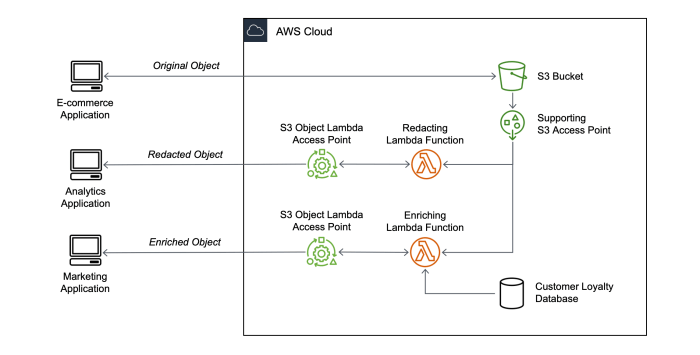

Image Processing

Image processing is a common use case where temporary files are invaluable. Lambda functions can be triggered by events such as object uploads to S3 and then perform operations like resizing, format conversion, or watermarking. The `/tmp` directory provides a location to store the original image, intermediate processing results, and the final processed image before uploading it to another location, such as S3.

Data Transformation

Data transformation tasks frequently require temporary storage. Consider a scenario where a Lambda function receives data in one format (e.g., CSV) and needs to convert it to another (e.g., JSON) before further processing or storage. Temporary files can be used to store the intermediate CSV data, perform the conversion, and then hold the resulting JSON data. This approach is particularly efficient when dealing with large datasets, as it avoids holding the entire dataset in memory during the transformation process.
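A CSV-to-JSON conversion of this kind can be sketched with the standard library alone. The example assumes the CSV has a header row and writes JSON Lines (one object per line) so that rows can be converted one at a time; `csv_to_json_lines` is a hypothetical helper name.

```python
import csv
import json


def csv_to_json_lines(csv_path, json_path):
    """Convert a CSV file with a header row into JSON Lines; return the row count."""
    count = 0
    with open(csv_path, newline="", encoding="utf-8") as src, \
            open(json_path, "w", encoding="utf-8") as dst:
        # DictReader yields one row at a time, so memory use stays flat.
        for row in csv.DictReader(src):
            dst.write(json.dumps(row) + "\n")
            count += 1
    return count
```

In a handler, both paths would typically point into `/tmp`, with the source downloaded from S3 beforehand and the result uploaded afterwards.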

Caching Data

Lambda functions can benefit from caching frequently accessed data. For instance, a function might retrieve data from a database or external API. To reduce latency and resource consumption, this data can be cached in a temporary file. Subsequent invocations can then check for the existence of the cached file and use its contents if the data is still valid, thereby avoiding redundant API calls or database queries.

Staging Data for S3 Uploads

When dealing with large files or complex processing before uploading to S3, staging data in a temporary file is often the most efficient strategy. Instead of directly streaming data to S3, a Lambda function can first write the processed data to a temporary file. This allows for error handling, data validation, and other transformations to be performed before the upload.

Once the data is staged, the function can upload the entire file to S3 in a single operation, improving performance and simplifying the upload process.

Code Example: Caching Data within a Lambda Function

Caching data in a Lambda function can significantly improve performance, especially for data that changes infrequently. The following Python code demonstrates how to cache data from an external API using a temporary file. The function checks for the existence of a cached file and uses its contents if the data is still valid; otherwise, it retrieves the data from the API, saves it to the temporary file, and then returns it.

```python
import json
import os
import time

import requests  # third-party package; include it in the deployment package or a layer

def lambda_handler(event, context):
    cache_file = '/tmp/api_data.json'
    cache_expiry_seconds = 3600  # Cache data for 1 hour

    if os.path.exists(cache_file):
        try:
            with open(cache_file, 'r') as f:
                cache_data = json.load(f)
            if time.time() - cache_data.get('timestamp', 0) < cache_expiry_seconds:
                print("Returning data from cache")
                return cache_data['data']
        except (FileNotFoundError, json.JSONDecodeError):
            print("Cache file corrupted or not found, fetching from API")

    # Fetch data from external API
    try:
        response = requests.get('https://api.example.com/data')
        response.raise_for_status()  # Raise an exception for bad status codes
        api_data = response.json()

        # Save data to cache
        cache_data = {
            'timestamp': time.time(),
            'data': api_data
        }
        with open(cache_file, 'w') as f:
            json.dump(cache_data, f)
        print("Data fetched from API and cached")
        return api_data
    except requests.exceptions.RequestException as e:
        print(f"Error fetching data from API: {e}")
        return {'error': 'Failed to fetch data from API'}
```

This example illustrates the principle of caching using temporary files. The function checks for a cache file (`/tmp/api_data.json`). If the file exists and the data is within the cache expiry period (1 hour in this case), the cached data is returned. Otherwise, the function fetches the data from the API, saves it to the cache file, and returns the data.

This approach reduces the number of API calls, thus improving performance and reducing cost.

Demonstration: Staging Data for S3 Upload

Staging data in a temporary file before uploading it to S3 is a best practice for handling large files and for performing data transformations. The following Python code demonstrates how to stage data in a temporary file and then upload it to S3. This approach allows the Lambda function to handle potential errors during the data processing and to upload the entire file in a single operation.

```python
import boto3
import os
import json

def lambda_handler(event, context):
    # Input data (e.g., from an SQS queue or API)
    input_data = {'message': 'This is some data to upload to S3.'}

    # Define the S3 bucket and key
    bucket_name = 'your-s3-bucket-name'
    s3_key = 'data.json'

    # Create a temporary file
    temp_file_path = '/tmp/temp_data.json'

    try:
        # Write the input data to the temporary file
        with open(temp_file_path, 'w') as f:
            json.dump(input_data, f)

        # Upload the temporary file to S3
        s3 = boto3.client('s3')
        s3.upload_file(temp_file_path, bucket_name, s3_key)
        print(f"Data uploaded to s3://{bucket_name}/{s3_key}")
        return {
            'statusCode': 200,
            'body': json.dumps('Data uploaded to S3 successfully!')
        }
    except Exception as e:
        print(f"An error occurred: {e}")
        return {
            'statusCode': 500,
            'body': json.dumps(f'Error: {str(e)}')
        }
    finally:
        # Clean up the temporary file (optional, but recommended)
        try:
            os.remove(temp_file_path)
            print(f"Temporary file {temp_file_path} deleted")
        except FileNotFoundError:
            pass
```

This code snippet first defines the S3 bucket and key where the data will be stored. It then creates a temporary file in the `/tmp` directory, writes the input data to this file, and uploads the file to S3 using the `upload_file` method of the boto3 S3 client. The use of a temporary file enables the function to process the data and handle any potential errors before uploading it to S3. After the upload, the temporary file is removed in the `finally` block to maintain a clean execution environment.

Handling Large Files and `/tmp` Limitations

Lambda functions, while powerful, are constrained by the resources available to them, particularly concerning temporary storage. The `/tmp` directory, though convenient for temporary file storage, presents limitations when dealing with files exceeding its capacity. Effectively managing large files requires strategic considerations and the utilization of alternative storage solutions.

Strategies for Processing Files Exceeding `/tmp` Storage Capacity

The `/tmp` directory in AWS Lambda functions has a configured size, 512 MB by default and at most 10,240 MB, which restricts the size of files that can be directly stored and processed. When encountering files larger than this limit, alternative strategies must be employed to ensure successful processing.

- Chunking and Processing in Segments: Instead of attempting to load the entire large file into `/tmp` at once, the file can be divided into smaller, manageable chunks. Each chunk is then processed individually, and the results are aggregated. This approach minimizes the memory footprint within the Lambda function and allows processing of arbitrarily large files. This method requires careful consideration of the chunk size to balance memory usage and processing overhead.

- Streaming from External Storage: Data can be streamed directly from external storage services like Amazon S3. This method bypasses the need to store the entire file in `/tmp` and allows for on-the-fly processing. As data is read from S3, it can be processed or written to `/tmp` in smaller segments, as needed. This strategy is especially efficient for files that don’t require random access.

- Using Disk-Based Data Structures: If the processing requires intermediate data structures that exceed the available memory, consider using disk-based data structures. Libraries and tools that allow for creating and manipulating data structures on disk can be integrated into the Lambda function. These tools provide an alternative to in-memory data structures, allowing processing of larger datasets.

- Offloading Processing to Other Services: For extremely large files or complex processing requirements, it may be more efficient to offload the processing task to other AWS services designed for handling large datasets, such as Amazon EMR, AWS Batch, or Amazon ECS. These services offer greater scalability and resources for compute-intensive tasks.
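The chunking and streaming strategies share one pattern: read a bounded amount, process it, and discard it before reading more. The sketch below applies that pattern to computing a SHA-256 digest, chosen only because it is easy to verify. `sha256_of_stream` is a hypothetical helper that accepts any binary object with a `read(size)` method, such as an open file or the `Body` returned by boto3's `get_object`.

```python
import hashlib


def sha256_of_stream(stream, chunk_size=1024 * 1024):
    """Hash a binary stream chunk by chunk; return (hex digest, bytes read)."""
    digest = hashlib.sha256()
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break  # an empty read marks the end of the stream
        digest.update(chunk)
        total += len(chunk)
    return digest.hexdigest(), total
```

Because only one chunk is held at a time, neither memory nor `/tmp` limits the size of the input, which is the property the strategies above rely on.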

Comparing `/tmp` with Other Storage Options

The choice of storage option significantly impacts the performance, cost, and complexity of a Lambda function. Comparing `/tmp` with alternatives like S3 is crucial for making informed decisions. The following table summarizes the key differences.

| Feature | `/tmp` | Amazon S3 | Considerations |
|---|---|---|---|
| Storage Type | Ephemeral, local disk | Object storage | `/tmp` is temporary and lost when the execution environment is shut down; S3 is persistent. |
| Capacity | Limited (512 MB by default, configurable up to 10,240 MB) | Virtually unlimited | `/tmp` is suitable for small temporary files; S3 suits much larger objects and datasets. |
| Access Speed | Fast, local access | Slower, network-based access | `/tmp` provides the fastest access, but S3’s speed is sufficient for many use cases. |
| Cost | Default 512 MB included in Lambda pricing; additional configured storage is billed | Pay-per-use (storage and request costs) | The default `/tmp` allocation adds no cost; S3 incurs additional storage and access costs. |
| Persistence | Ephemeral (lost when the execution environment is shut down) | Persistent | Data in `/tmp` is lost; data in S3 persists unless explicitly deleted. |
| Use Cases | Temporary files, caching, intermediate processing | Large files, backups, long-term storage, data lakes | `/tmp` is ideal for temporary, small files; S3 is best for larger, persistent data. |

Streaming Data from S3 into a Lambda Function and Writing to `/tmp`

Streaming data from S3 and writing it to `/tmp` for processing is a common and efficient pattern for handling large files. This approach minimizes memory usage within the Lambda function. The file written to `/tmp` must still fit within the configured capacity; an object larger than that has to be processed in segments, with each segment removed before the next is written.

- Obtain the S3 Object: Use the AWS SDK (e.g., Boto3 for Python) to retrieve the S3 object as a stream. The SDK provides methods to read the object’s content in chunks, avoiding the need to load the entire file into memory at once.

- Iterate and Process Chunks: Iterate through the stream in manageable chunks. The size of the chunks can be determined based on the processing requirements and the available memory. For example, a chunk size of 1MB or 10MB could be suitable, depending on the specific processing task.

- Write to `/tmp`: As each chunk is read from the S3 stream, write it to a file in the `/tmp` directory. Ensure the file is opened in write mode. This allows for efficient writing of data to the temporary storage.

- Process the `/tmp` File: Once the data has been written to the `/tmp` file (either in its entirety or in sections, depending on the processing needs), perform the required processing operations on the file. This could involve parsing, transformation, or any other data manipulation tasks.

- Clean Up: After processing, remove the temporary file from the `/tmp` directory to free up space for subsequent function executions.

For instance, consider a Python example using the Boto3 library:

```python
import boto3
import os

s3 = boto3.client('s3')

def lambda_handler(event, context):
    bucket_name = event['Records'][0]['s3']['bucket']['name']
    object_key = event['Records'][0]['s3']['object']['key']
    tmp_file_path = '/tmp/temp_file.txt'
    chunk_size = 1024 * 1024  # 1MB chunks

    try:
        response = s3.get_object(Bucket=bucket_name, Key=object_key)
        with open(tmp_file_path, 'wb') as f:
            for chunk in response['Body'].iter_chunks(chunk_size):
                f.write(chunk)

        # Process the file in /tmp
        # ... your processing logic here ...

        os.remove(tmp_file_path)  # Clean up the temporary file
        return {'statusCode': 200, 'body': 'File processed successfully.'}
    except Exception as e:
        print(f"Error: {e}")
        return {'statusCode': 500, 'body': f'Error processing file: {e}'}
```

In this example, the function retrieves an object from S3, reads it in 1MB chunks, and writes those chunks to a file in `/tmp`.

After writing, the function then processes the file and cleans up. This approach effectively handles large files by avoiding loading the entire file into memory and utilizing the limited `/tmp` space more efficiently.
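The chunked-write step can be exercised without S3 by treating any binary file-like object as the source. The sketch below is illustrative only: `stream_to_tmp` is a hypothetical helper, and the `max_bytes` cap is an assumed guard added for the example, not a Lambda feature. It copies a stream to a uniquely named file and removes the partial file if anything goes wrong.

```python
import io
import os
import tempfile

def stream_to_tmp(source, tmp_dir="/tmp", chunk_size=1024 * 1024, max_bytes=None):
    """Copy a binary stream to a uniquely named file in tmp_dir, chunk by chunk.

    Returns (path, bytes_written). If max_bytes is set and the stream turns
    out to be larger, the partial file is removed and ValueError is raised.
    """
    fd, path = tempfile.mkstemp(dir=tmp_dir, suffix=".part")
    written = 0
    try:
        with os.fdopen(fd, "wb") as out:
            while True:
                chunk = source.read(chunk_size)
                if not chunk:
                    break
                written += len(chunk)
                if max_bytes is not None and written > max_bytes:
                    raise ValueError("stream exceeds the configured size cap")
                out.write(chunk)
    except Exception:
        # Do not leave a half-written file behind in the temporary directory.
        os.remove(path)
        raise
    return path, written

if __name__ == "__main__":
    # io.BytesIO stands in for the S3 response body in this local demo.
    demo_dir = tempfile.mkdtemp()
    path, size = stream_to_tmp(io.BytesIO(b"x" * 2500), tmp_dir=demo_dir, chunk_size=1000)
    print(path, size)
    os.remove(path)
```

Because the helper only calls `read(size)` on its source, a Boto3 response body could be passed in place of the `io.BytesIO` object; setting `max_bytes` slightly below the configured `/tmp` size is one way to fail early instead of running out of space mid-write.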

Security Considerations for Temporary Files

The use of temporary files in AWS Lambda functions introduces several security risks that must be carefully addressed. The `/tmp` directory, while providing a convenient space for transient data storage, presents potential attack vectors if not properly secured. Failing to mitigate these risks can lead to data breaches, unauthorized access, and compromise of the function’s integrity. This section will explore these risks and outline best practices for safeguarding temporary file usage within the Lambda environment.

Potential Security Risks Associated with Temporary Files

The `/tmp` directory is readable and writable by any code running inside the function’s execution environment, including third-party libraries and extensions, and its contents can outlive a single invocation when the environment is reused. Most of the risks below stem from that combination of broad in-process access and environment reuse.

- Data Exposure: Sensitive data, such as API keys, credentials, or intermediate processing results, might be inadvertently stored in `/tmp`. If these files are not properly secured and removed, they can be read by other code in the environment or by later invocations of the same function.

- Cross-Invocation Contamination: Successive invocations of the same function can run in the same execution environment. If one invocation leaves sensitive data in `/tmp` and a later invocation inadvertently reads or modifies it, data can leak between requests. This is particularly concerning when a single function serves different users or tenants. Execution environments are not shared between different functions.

- Malicious Code Execution: Attackers might exploit vulnerabilities to upload malicious code to `/tmp` and then execute it. For instance, a function might be tricked into running a script or binary stored in `/tmp`, leading to a complete compromise of the function and potentially the broader AWS account.

- Denial of Service (DoS): Excessive file storage or resource consumption within `/tmp` can lead to a denial-of-service situation. A malicious actor could cause a large file to be written to `/tmp`, exhausting the available disk space and preventing the function from operating correctly. Because leftover files persist while the environment is reused, this can also affect later invocations of the same function.

- Information Leakage: Even seemingly innocuous files in `/tmp` can reveal sensitive information. For example, error logs or debug information stored in temporary files might expose internal system details, which attackers can use to understand the system’s architecture and identify vulnerabilities.

Guidelines for Securing Temporary Files

Securing temporary files in Lambda functions involves implementing several preventative measures. These include strict access controls, data encryption, and regular monitoring to identify and mitigate potential risks.

- Access Control: Implement stringent access control measures to restrict access to `/tmp` files.

- Principle of Least Privilege: Grant functions only the necessary permissions to read, write, and execute files in `/tmp`. Avoid giving broad permissions that could allow unintended access.

- File Permissions: Set appropriate file permissions to restrict access to specific files. For example, use `chmod 600` to grant read and write access only to the owner of the file, preventing access by other users or groups.

- Function Isolation: Design functions to operate in isolated environments as much as possible. Avoid sharing data in `/tmp` between different functions unless absolutely necessary. If sharing is required, implement robust authentication and authorization mechanisms.

- Encryption: Encrypt sensitive data stored in `/tmp` to protect it from unauthorized access.

- Server-Side Encryption (SSE): If the data is to be stored in an object storage service, use server-side encryption to encrypt the data at rest.

- Client-Side Encryption: Implement client-side encryption within the Lambda function to encrypt data before writing it to `/tmp`. Use strong encryption algorithms, such as AES-256, with a securely managed key. For instance, the `cryptography` library in Python provides robust encryption functionalities.

- Key Management: Securely manage encryption keys. Consider using AWS Key Management Service (KMS) to generate, store, and manage encryption keys. This simplifies key rotation and access control.

- File Sanitization: Ensure that any data written to `/tmp` is sanitized to prevent malicious code execution or information leakage.

- Input Validation: Validate all inputs to prevent injection attacks or the creation of unexpected files. For example, when creating filenames, ensure that they are safe and do not contain malicious characters.

- Data Sanitization: Sanitize data before writing it to files to remove any potentially harmful content, such as HTML tags or script tags.

- Regular Auditing and Monitoring: Implement regular auditing and monitoring to detect and respond to security incidents.

- Logging: Implement detailed logging to track file creation, modification, and deletion events within `/tmp`. Log all relevant actions, including the function name, user identity, and timestamps.

- Security Audits: Conduct regular security audits to review the function’s code, configurations, and access controls. Use security scanners to identify vulnerabilities.

- Alerting: Set up alerts to notify security teams of any suspicious activity, such as unauthorized file access or excessive file storage.
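Two of the guidelines above, input validation for file names and restrictive file permissions, can be combined in a few lines. The following is a minimal sketch under stated assumptions: `write_private_file` and the `SAFE_NAME` pattern are hypothetical names chosen for illustration, and the allowed character set is an example policy rather than a requirement.

```python
import os
import re

# Example policy: start with a letter or digit, then letters, digits, dot,
# underscore or hyphen. Rejects path separators, "..", and hidden files.
SAFE_NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]{0,127}")

def write_private_file(name, data, base_dir="/tmp"):
    """Write bytes to base_dir/name with owner-only permissions (0o600)."""
    if not SAFE_NAME.fullmatch(name):
        raise ValueError(f"unsafe file name: {name!r}")
    path = os.path.join(base_dir, name)
    # O_EXCL makes the call fail if the path already exists, so an existing
    # file is never overwritten and a pre-planted symlink is never followed.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "wb") as f:
        f.write(data)
    return path
```

Passing the mode to `os.open` sets the permissions at creation time, which avoids the short window that exists when a file is created with default permissions and tightened with `chmod` afterwards.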

Preventing Unauthorized Access to Files Stored in `/tmp`

Preventing unauthorized access to files in `/tmp` requires a combination of technical and procedural measures.

- Secure File Creation: When creating files, use secure file creation methods. Use functions like `tempfile.mkstemp()` in Python, which creates a temporary file with a unique name and with permissions that make it readable and writable only by the creating user.

- Timely Deletion: Delete temporary files as soon as they are no longer needed. Implement proper cleanup routines to remove temporary files at the end of the function’s execution. This minimizes the window of opportunity for attackers to access the files.

- Use Unique File Names: Generate unique, unpredictable filenames for temporary files. Avoid using predictable names that could be easily guessed by attackers. Consider using UUIDs or random strings for filenames.

- Restrict Execution Permissions: If a file in `/tmp` contains executable code, restrict its execution permissions. Avoid using the `chmod +x` command on files in `/tmp` unless absolutely necessary.

- Regular Review of Code: Regularly review the Lambda function’s code to identify and address any security vulnerabilities. This includes checking for input validation flaws, insecure file operations, and potential injection attacks.

- Network Isolation: If possible, configure the Lambda function to operate within a private subnet to limit network access to the function and the resources it interacts with. This can reduce the attack surface.
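Secure creation, unique names, and timely deletion from the list above fit naturally into a small context manager. This is a sketch rather than a prescribed pattern; `scratch_file` is a hypothetical helper name, and the default directory is assumed to be `/tmp`.

```python
import contextlib
import os
import tempfile

@contextlib.contextmanager
def scratch_file(suffix="", directory="/tmp"):
    """Yield the path of a private, uniquely named temp file; delete it on exit."""
    # mkstemp picks an unpredictable name and creates the file with
    # owner-only read/write permissions.
    fd, path = tempfile.mkstemp(suffix=suffix, dir=directory)
    os.close(fd)  # callers reopen the path in whatever mode they need
    try:
        yield path
    finally:
        # Runs on success and on error, so the file never outlives the block.
        with contextlib.suppress(FileNotFoundError):
            os.remove(path)
```

A handler would wrap its work in `with scratch_file(suffix=".csv") as path:` and do all reading and writing inside the block, so cleanup happens even when processing raises.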

Monitoring and Logging Temporary File Usage

Effectively monitoring and logging temporary file usage within AWS Lambda functions is crucial for maintaining function health, preventing performance bottlenecks, and ensuring cost optimization. Insufficient monitoring can lead to unexpected errors, storage exhaustion, and security vulnerabilities. Comprehensive monitoring and logging strategies enable proactive identification and resolution of issues related to `/tmp` directory utilization.

Monitoring `/tmp` Usage

Monitoring the `/tmp` directory usage is essential for preventing storage-related errors and ensuring the smooth operation of Lambda functions. Regular monitoring allows for the identification of trends, potential issues, and opportunities for optimization.

The following methods can be used to monitor `/tmp` usage:

- CloudWatch Metrics: AWS CloudWatch can be used to track disk usage in the function’s execution environment, including `/tmp`. Note that the standard `AWS/Lambda` metrics (invocations, duration, errors, throttles, and so on) do not include a disk usage metric, so a metric such as `DiskSpaceUtilization`, meaning the percentage of `/tmp` in use, has to be published by the function itself or collected by a monitoring add-on such as CloudWatch Lambda Insights. Once the metric exists, it provides a high-level overview of storage consumption, and alarms can be set on threshold values. For instance, an alert could be triggered when `DiskSpaceUtilization` exceeds 80%.

- Custom Metrics: While `DiskSpaceUtilization` provides a general overview, creating custom metrics allows for more granular monitoring. This involves instrumenting the Lambda function code to track specific file operations, file sizes, and the overall storage footprint. For example, a custom metric could track the size of a specific file being created or modified within the `/tmp` directory. These custom metrics are sent to CloudWatch and can be used for more in-depth analysis and alerting.

- Periodic Checks within the Function: Implement periodic checks within the Lambda function to determine `/tmp` usage. The `df -h /tmp` command (available within the Lambda execution environment) can be used to determine the current disk usage. This information can be logged and used to generate custom metrics or trigger alerts. For example, the following Python code snippet demonstrates how to determine the current disk usage and log the output:

```python
import subprocess
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)

def lambda_handler(event, context):
    try:
        df_output = subprocess.check_output(['df', '-h', '/tmp']).decode('utf-8')
        logger.info(f"Disk usage in /tmp:\n{df_output}")
    except (subprocess.CalledProcessError, FileNotFoundError) as e:
        # FileNotFoundError covers runtimes where the df binary is missing
        logger.error(f"Error checking disk usage: {e}")
    return {'statusCode': 200, 'body': 'Disk usage check completed.'}
```
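Custom metrics and in-function checks can be combined without shelling out. The sketch below assumes the CloudWatch embedded metric format, in which a JSON log line with an `_aws` block is turned into metrics; the namespace `Custom/LambdaTmp` and the metric names `TmpUsedBytes` and `TmpUtilization` are hypothetical choices for illustration. `shutil.disk_usage` reports on the file system that contains the given path, which is assumed here to be the `/tmp` mount.

```python
import json
import shutil
import time

def tmp_usage_emf(function_name, path="/tmp", namespace="Custom/LambdaTmp"):
    """Build an embedded-metric-format record describing disk usage at path."""
    usage = shutil.disk_usage(path)
    percent = round(100.0 * usage.used / usage.total, 2) if usage.total else 0.0
    return {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["FunctionName"]],
                "Metrics": [
                    {"Name": "TmpUsedBytes", "Unit": "Bytes"},
                    {"Name": "TmpUtilization", "Unit": "Percent"},
                ],
            }],
        },
        # Dimension and metric values live at the top level of the record.
        "FunctionName": function_name,
        "TmpUsedBytes": usage.used,
        "TmpUtilization": percent,
    }

def lambda_handler(event, context):
    # Printing the record to stdout sends it to the function's log stream.
    print(json.dumps(tmp_usage_emf(context.function_name)))
    return {"statusCode": 200, "body": "Disk usage recorded."}
```

Emitting the record once per invocation, typically just before returning, gives one data point per request without an extra API call.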

Logging File Operations for Debugging

Detailed logging of file operations is essential for debugging issues related to temporary files. Logging file creation, modification, and deletion events provides valuable context when troubleshooting storage-related errors, performance problems, or unexpected behavior.

Here’s how to implement logging of file operations:

- Logging File Creation: Log the filename, size, and timestamp whenever a file is created in `/tmp`. This allows you to track the files being created and their respective sizes. The logs can be used to identify which files are contributing the most to storage usage. For example, in Python:

```python
import logging
import os

logger = logging.getLogger()
logger.setLevel(logging.INFO)

def create_temp_file(filename, content):
    try:
        filepath = os.path.join('/tmp', filename)
        with open(filepath, 'w') as f:
            f.write(content)
        file_size = os.path.getsize(filepath)
        logger.info(f"File created: {filename}, Size: {file_size} bytes")
    except Exception as e:
        logger.error(f"Error creating file {filename}: {e}")
```

- Logging File Modification: Log file modifications, including the filename, timestamp, and any changes made. This is useful for tracking how files are being updated over time and can help identify issues related to data corruption or unexpected changes. This can be done by logging each write operation or by comparing the content before and after a modification.

- Logging File Deletion: Log the filename and timestamp whenever a file is deleted. This allows you to track which files are being removed and when. It’s useful for verifying that temporary files are being cleaned up correctly. This also helps identify potential issues if files are not being deleted as expected.

- Contextual Logging: Include relevant context in the logs, such as the function’s invocation ID, the event data, and any relevant metadata. This context simplifies the debugging process and allows for easier correlation of logs with specific function executions.

- Structured Logging: Use structured logging formats (e.g., JSON) to make the logs easier to parse and analyze. Structured logs allow you to query and filter log data more efficiently. For example, using JSON format:

```python
import json
import logging
import os
from datetime import datetime, timezone

logger = logging.getLogger()
logger.setLevel(logging.INFO)

def _timestamp():
    # ISO 8601 timestamp in UTC
    return datetime.now(timezone.utc).isoformat()

def delete_temp_file(filename):
    try:
        filepath = os.path.join('/tmp', filename)
        os.remove(filepath)
        log_data = {"event": "file_deleted", "filename": filename,
                    "timestamp": _timestamp()}
        logger.info(json.dumps(log_data))
    except FileNotFoundError:
        log_data = {"event": "file_not_found_for_deletion", "filename": filename,
                    "timestamp": _timestamp()}
        logger.warning(json.dumps(log_data))
    except Exception as e:
        log_data = {"event": "file_deletion_error", "filename": filename,
                    "error": str(e), "timestamp": _timestamp()}
        logger.error(json.dumps(log_data))
```

Alerting on `/tmp` Storage Limits

Implementing an alerting process is essential for proactive management of `/tmp` storage. Setting up alerts enables immediate notification when storage approaches its limit, allowing for timely intervention and preventing function failures.

Here’s a process for alerting when `/tmp` storage approaches its limit:

- Set Thresholds: Define thresholds for disk space utilization. These thresholds should be based on the specific needs of the Lambda function and the expected usage patterns. A common approach is to set two thresholds: a warning threshold (e.g., 75% utilization) and a critical threshold (e.g., 90% utilization).

- Create CloudWatch Alarms: Create CloudWatch alarms that trigger when the `DiskSpaceUtilization` metric exceeds the defined thresholds. These alarms should be configured to send notifications to relevant stakeholders (e.g., developers, operations teams).

- Configure Notifications: Configure the CloudWatch alarms to send notifications via email, SMS, or other communication channels (e.g., Slack, PagerDuty). The notifications should include information about the alarm, the function name, and the current disk usage.

- Implement Remediation Actions: Define remediation actions to be taken when an alarm is triggered. These actions might include:

- Investigating the function’s code to identify and fix storage-related issues.

- Optimizing the function’s storage usage (e.g., reducing the size of temporary files, deleting files more frequently).

- Increasing the function’s ephemeral storage setting, which is configured separately from memory and can be raised from the 512 MB default up to 10,240 MB.

- Pausing function execution if disk space becomes critical to avoid errors and data loss.

- Test and Refine: Regularly test the alerting process to ensure it functions correctly. Refine the thresholds and remediation actions based on the function’s performance and the observed usage patterns. For example, simulate high load scenarios and test whether the alarms are triggered correctly.
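The warning and critical thresholds described above can be expressed as two alarm definitions. This sketch assumes a utilization metric is already being published; the namespace `Custom/LambdaTmp`, the metric name `TmpUtilization`, and the helper names are hypothetical. The keys in each dictionary are parameters of the CloudWatch `put_metric_alarm` call, and the client is passed in so the definitions can be inspected without AWS access.

```python
def build_tmp_alarms(function_name, sns_topic_arn,
                     namespace="Custom/LambdaTmp",
                     metric_name="TmpUtilization",
                     warning=75.0, critical=90.0):
    """Return put_metric_alarm parameters for a warning and a critical alarm."""
    alarms = []
    for level, threshold in (("warning", warning), ("critical", critical)):
        alarms.append({
            "AlarmName": f"{function_name}-tmp-{level}",
            "Namespace": namespace,
            "MetricName": metric_name,
            "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
            "Statistic": "Maximum",
            "Period": 60,                      # seconds per evaluation window
            "EvaluationPeriods": 1,
            "Threshold": threshold,
            "ComparisonOperator": "GreaterThanThreshold",
            "TreatMissingData": "notBreaching",  # idle functions emit no data
            "AlarmActions": [sns_topic_arn],
        })
    return alarms

def create_alarms(cloudwatch_client, alarms):
    """Create or update each alarm using a CloudWatch client supplied by the caller."""
    for params in alarms:
        cloudwatch_client.put_metric_alarm(**params)
```

In a deployment script this would be called as `create_alarms(boto3.client("cloudwatch"), build_tmp_alarms(name, topic_arn))`, where the SNS topic handles delivery to email, chat, or paging tools.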

Error Handling and Troubleshooting

Effective error handling and troubleshooting are crucial for robust Lambda function design, especially when dealing with temporary files. Unexpected issues with `/tmp` storage can lead to function failures, data loss, and increased operational costs. Implementing proactive measures to identify, diagnose, and resolve errors is essential for maintaining function stability and reliability.

Common Errors Related to Temporary File Management in Lambda

A variety of errors can arise when interacting with temporary files within a Lambda environment. These errors stem from issues related to file access, storage limitations, and concurrency.

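- File Access Permissions Errors: These occur when the function cannot read, write, or execute a file. Access to `/tmp` is governed by file-system permissions rather than IAM, so typical causes are writing outside `/tmp` (the rest of the file system is read-only), restrictive permission bits on a file left by an earlier invocation, or a missing execute bit on a binary copied into `/tmp`.

- Insufficient Disk Space Errors: The `/tmp` directory has a limited size, 512MB by default and configurable up to 10,240MB. Attempting to write more data than the configured limit will result in an “out of space” error.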

- File Not Found Errors: These happen when a file is expected to exist in `/tmp` but cannot be located. This might be caused by incorrect file paths, premature file deletion, or issues during file creation.

- Concurrent Access Errors: If multiple invocations of the same Lambda function attempt to access the same temporary files concurrently, race conditions can occur, leading to data corruption or unexpected behavior.

- Invalid File Format Errors: Attempting to read a file in an unexpected format, such as trying to parse a binary file as text, can lead to errors.

- Timeout Errors: Long-running file operations, especially when handling large files, can exceed the Lambda function’s execution time limit, leading to timeouts.

Handling Exceptions During File Operations

Implementing robust exception handling is paramount for gracefully managing errors during file operations. This includes anticipating potential issues and providing mechanisms to recover or report failures effectively.

A crucial aspect of exception handling is to enclose file operations within `try-except` blocks. This structure allows the function to catch specific exceptions and handle them accordingly. For example, when using Python, the code might resemble the following:

```python
import os

try:
    with open("/tmp/my_file.txt", "w") as f:
        f.write("Some data")
except OSError as e:
    print(f"An error occurred: {e}")
    # Handle the error, e.g., log it, retry, or return an error response
```

In this snippet, the `try` block attempts to open and write to a file. If an `OSError` (which encompasses various file-related errors) occurs, the `except` block catches it. The error message is printed, and the function can then take appropriate action, such as logging the error, attempting to retry the operation, or returning an error response to the caller. Different exception types should be handled separately to provide more specific error responses.

Here are additional strategies to implement for effective exception handling:

- Specific Exception Handling: Catch specific exception types (e.g., `FileNotFoundError`, `PermissionError`, or the broader `OSError`, of which `IOError` is an alias in Python 3) rather than a generic `Exception` to provide more precise error handling.

- Error Logging: Implement robust logging to record errors, including the error message, the function’s execution context, and relevant timestamps. This information is essential for debugging.

- Retry Mechanisms: For transient errors (e.g., temporary network issues), consider implementing retry mechanisms with exponential backoff to attempt the operation again after a delay.

- Fallback Mechanisms: If a file operation fails, provide a fallback mechanism. This could involve using an alternative storage location, caching data, or returning a default value.

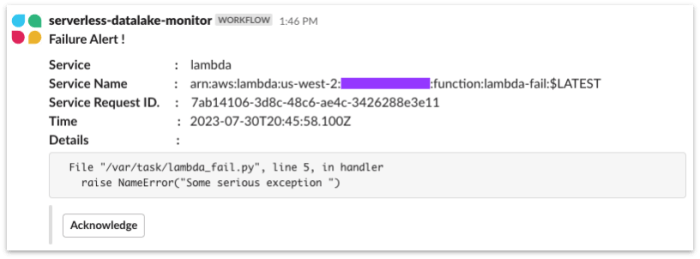

- Error Reporting: Notify relevant stakeholders (e.g., administrators, developers) about critical errors through alerts or notifications.
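Specific exception handling and error logging from the list above might look like the following sketch. The helper name `write_tmp_file` and the status strings it returns are hypothetical; the point is the ordering of the `except` clauses, from most specific to least, and the explicit check for the "no space left on device" error code.

```python
import errno
import logging
import os

logger = logging.getLogger(__name__)

def write_tmp_file(path, data):
    """Write bytes to path; return a short status string for expected failures."""
    try:
        with open(path, "wb") as f:
            f.write(data)
        return "ok"
    except FileNotFoundError:
        logger.error("Parent directory missing for %s", path)
        return "missing_directory"
    except PermissionError:
        logger.error("Not allowed to write %s", path)
        return "permission_denied"
    except OSError as exc:
        if exc.errno == errno.ENOSPC:
            logger.error("No space left while writing %s", path)
            # Remove the partial file so it does not keep consuming space.
            if os.path.exists(path):
                os.remove(path)
            return "disk_full"
        raise  # unexpected OS errors should surface, not be swallowed
```

Returning a status lets the handler decide between a fallback, such as writing the result to S3 instead, and an error response, while anything unanticipated still fails loudly.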

Troubleshooting Steps for Diagnosing Issues Related to `/tmp` Storage and Access

Troubleshooting issues related to `/tmp` storage and access requires a systematic approach to identify the root cause of the problem. This involves examining logs, verifying configurations, and testing file operations.

A systematic troubleshooting process might include the following steps:

- Review CloudWatch Logs: Examine the Lambda function’s CloudWatch logs for error messages, stack traces, and any other relevant information. Look for specific error codes or descriptions related to file access, storage, or permissions.

- Verify IAM Role Permissions: Writing to `/tmp` itself requires no IAM permissions, but the services the function exchanges files with do. Ensure that the function’s execution role grants the relevant actions, for example `s3:GetObject` when downloading from S3 into `/tmp` and `s3:PutObject` when uploading results.

- Check Disk Space Usage: Monitor the disk space usage of the `/tmp` directory to ensure it is not exceeding the configured limit (512MB by default). This can be done by logging the amount of data written.

- Validate File Paths: Double-check the file paths used in the Lambda function to ensure they are correct and that the files are being written to and read from the expected locations.

- Test File Operations: Create simple test cases to verify that file operations are working correctly. This can involve writing and reading small files to the `/tmp` directory.

- Examine Concurrent Access: If concurrency is suspected, investigate potential race conditions by analyzing the execution context of multiple function invocations.

- Monitor Function Performance: Use CloudWatch metrics to monitor function performance, including execution time and memory usage. This can help identify performance bottlenecks related to file operations.

- Consider Function Configuration: Verify that the Lambda function’s configuration, such as memory allocation and timeout settings, is appropriate for the file operations being performed.

By following these troubleshooting steps, developers can efficiently diagnose and resolve issues related to temporary file management in Lambda, ensuring the stability and reliability of their serverless applications.
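Several of these checks (disk space, a test write and read, and finding what is using the space) can be bundled into one diagnostic routine. The following is a minimal sketch; `diagnose_tmp` and the keys of the report it returns are hypothetical names, and the function is meant to be called temporarily from a handler while investigating a problem.

```python
import os
import shutil
import uuid

def diagnose_tmp(path="/tmp", top_n=5):
    """Collect basic facts about a temp directory for troubleshooting."""
    report = {}
    usage = shutil.disk_usage(path)
    report["total_bytes"] = usage.total
    report["free_bytes"] = usage.free

    # Round-trip test: can a small file be written, read back, and removed?
    probe = os.path.join(path, f"probe-{uuid.uuid4().hex}")
    try:
        with open(probe, "wb") as f:
            f.write(b"ping")
        with open(probe, "rb") as f:
            report["round_trip_ok"] = f.read() == b"ping"
    except OSError as exc:
        report["round_trip_ok"] = False
        report["error"] = str(exc)
    finally:
        if os.path.exists(probe):
            os.remove(probe)

    # Largest files first, to show what is consuming the space.
    sizes = []
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            try:
                sizes.append((os.path.getsize(full), full))
            except OSError:
                continue  # file vanished or is unreadable; skip it
    report["largest_files"] = sorted(sizes, reverse=True)[:top_n]
    return report
```

Logging the returned dictionary at the start of an invocation shows whether files from earlier invocations are still present, which is often the quickest way to confirm a cleanup problem.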

Conclusion

In conclusion, mastering temporary file management in Lambda functions is essential for optimizing performance, managing intermediate data, and successfully addressing a variety of computational needs. By understanding the limitations, adopting best practices, and implementing robust monitoring and security measures, developers can effectively harness the power of temporary storage to build more efficient, scalable, and secure serverless applications. The strategic use of `/tmp`, alongside other storage options, unlocks greater flexibility and control within the Lambda environment, ultimately enhancing the capabilities of your serverless deployments.

Commonly Asked Questions

What happens to files in `/tmp` after a Lambda function completes?

Files stored in `/tmp` last as long as the execution environment, not just a single invocation. When the environment is reused for a later (warm) invocation, files left behind may still be present; when the environment is shut down, they are lost, and a cold start always begins with an empty `/tmp`. Do not rely on files surviving between invocations, and remove anything a later invocation should not see.

How can I determine the current usage of `/tmp` within my Lambda function?

You can monitor `/tmp` usage by utilizing operating system commands like `df -h` within your Lambda function and logging the output. This allows you to track the storage used in real-time and identify potential issues before they impact performance or cause errors.

Is it possible to share files in `/tmp` between different Lambda function invocations?

While the same execution environment *might* be reused, relying on it is unreliable. It’s generally not advisable to share files between invocations in `/tmp` because the environment can be discarded at any time. For sharing data, consider using S3, a database, or another persistent storage solution.

What are the security implications of storing sensitive data in `/tmp`?

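Storing sensitive data in `/tmp` poses security risks. Any code running in the execution environment can read the files, access control is limited to file-system permissions, and leftover files may remain visible to later invocations of the same function. If you must store sensitive data, encrypt it before writing it to `/tmp`, restrict file permissions, and delete the file as soon as it is no longer needed. Consider S3 or other secure storage options for sensitive information.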