Designing a multi-cloud serverless strategy represents a paradigm shift in modern application development, enabling organizations to leverage the strengths of multiple cloud providers for enhanced resilience, cost optimization, and vendor diversification. This approach, however, introduces complexities that necessitate careful planning and execution. Understanding the fundamentals, identifying suitable use cases, and mastering the intricacies of architecture, data management, security, and orchestration are crucial for success.

This exploration will delve into the critical aspects of crafting a robust multi-cloud serverless strategy. From evaluating cloud provider options to designing event-driven architectures and implementing cost-effective deployment pipelines, we’ll dissect the essential elements required to build scalable, secure, and highly available serverless applications across diverse cloud environments. This analysis will equip readers with the knowledge and tools needed to navigate the complexities and unlock the full potential of a multi-cloud serverless approach.

Understanding Multi-Cloud Serverless Fundamentals

Multi-cloud serverless architecture represents a significant evolution in cloud computing, offering increased flexibility, resilience, and cost optimization. It involves deploying and managing serverless functions across multiple cloud providers, thereby decoupling application logic from a single vendor’s infrastructure. This approach allows organizations to leverage the unique strengths of each cloud platform, mitigating vendor lock-in and improving overall system availability.

Core Concepts of Multi-Cloud Serverless Architecture

Multi-cloud serverless architecture relies on several core principles that define its operation and benefits. Understanding these principles is crucial for successful implementation.

- Abstraction of Infrastructure: Serverless computing inherently abstracts the underlying infrastructure, and multi-cloud serverless extends this abstraction across multiple cloud providers. Developers focus on writing and deploying code without managing servers, operating systems, or scaling resources. This is achieved through the use of Functions-as-a-Service (FaaS) platforms offered by various cloud providers.

- Vendor-Neutral Deployment: The key to multi-cloud serverless lies in vendor-neutral deployment strategies. This often involves utilizing tools and frameworks that can deploy and manage serverless functions across different cloud providers. This could include the use of infrastructure-as-code (IaC) tools or specialized serverless orchestration platforms.

- Event-Driven Architecture: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or messages from a message queue. In a multi-cloud context, events can originate from any of the connected cloud providers, and functions can be triggered and executed across different platforms.

- Stateless Functions: Serverless functions are ideally stateless, meaning they do not store any persistent data locally. This stateless nature allows for easy scaling and deployment across multiple cloud providers, as each function instance can be executed independently. Data storage is typically handled by external services, such as databases or object storage.

- Orchestration and Management: Effective orchestration and management are critical for a multi-cloud serverless setup. This involves tools and services that can deploy, monitor, and manage functions across different cloud providers. These tools often provide unified logging, monitoring, and alerting capabilities.
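The stateless, vendor-neutral principles above are easiest to see in code. A common pattern keeps the business logic in a pure function and gives each provider a thin adapter. This is a minimal sketch, assuming Python on AWS Lambda and Google Cloud Functions. `process_order` and the order payload shape are hypothetical. The adapters follow the Lambda proxy-integration convention (`event` and `context` in, `statusCode`/`body` out) and the Flask-style request object that Python HTTP Cloud Functions receive.

```python
import json
from typing import Any


def process_order(payload: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical provider-neutral business logic: no cloud SDKs, no local state."""
    items = payload.get("items", [])
    total = sum(item["price"] * item["quantity"] for item in items)
    return {"order_id": payload.get("order_id"), "total": round(total, 2)}


def aws_lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Adapter for AWS Lambda behind an API Gateway proxy integration."""
    payload = json.loads(event.get("body") or "{}")
    result = process_order(payload)
    return {"statusCode": 200, "body": json.dumps(result)}


def gcp_http_handler(request: Any) -> tuple[str, int]:
    """Adapter for an HTTP-triggered Google Cloud Function (Flask-style request)."""
    payload = request.get_json(silent=True) or {}
    result = process_order(payload)
    return json.dumps(result), 200
```

An Azure Functions adapter would wrap the same `process_order` call around an `azure.functions.HttpRequest`. Only the adapters change when a function moves between providers.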

Examples of Serverless Function Deployment Across Multiple Cloud Providers

Several deployment scenarios exemplify the practical application of multi-cloud serverless. These examples highlight how functions can be distributed and managed across different cloud platforms.

- Geographic Redundancy: A global e-commerce platform can deploy its order processing function across multiple cloud providers in different geographic regions. If one cloud provider experiences an outage, traffic can fail over to another provider, keeping the service available to customers worldwide, provided the data the function depends on is also replicated. For example, the function might run on AWS Lambda in the US East region, Azure Functions in the UK South region, and Google Cloud Functions in the Asia-Pacific region.

- Specialized Services: An organization might choose to leverage the strengths of each cloud provider for specific tasks. For instance, they could use AWS for its robust object storage (S3), Azure for its advanced AI/ML services (Azure Cognitive Services), and Google Cloud for its data analytics capabilities (BigQuery). Serverless functions can then be orchestrated to interact with these specialized services across different cloud providers.

- Cost Optimization: By analyzing pricing models and resource availability across different cloud providers, an organization can optimize its serverless deployments for cost. For example, a function that is invoked very frequently could be deployed on the provider whose per-request and per-GB-second rates are lowest for its memory and duration profile, while less critical functions could run on whichever platform is cheapest to operate.

- Hybrid Cloud Integration: Multi-cloud serverless can be used to integrate with on-premises infrastructure. A function might be deployed in a cloud provider to process data from an on-premises database. The function would then interact with the database via a secure connection, providing a seamless hybrid cloud experience.
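The geographic-redundancy scenario above relies on failing over between providers. At the client or edge, this can be sketched as trying each provider's deployment in priority order. This is a minimal sketch, assuming each deployment is wrapped in a callable (for example, an HTTP call to that provider's endpoint) that raises on failure. The provider names and `AllProvidersFailed` are hypothetical. In production, DNS- or load-balancer-level health checks usually do this job. The function must also be idempotent, because a request that timed out may still have been processed.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every configured provider has failed (hypothetical error type)."""


def invoke_with_failover(
    providers: Sequence[tuple[str, Callable[[dict], dict]]],
    payload: dict,
) -> tuple[str, dict]:
    """Try each (name, invoke) pair in priority order and return the first success."""
    errors: dict[str, Exception] = {}
    for name, invoke in providers:
        try:
            return name, invoke(payload)
        except Exception as exc:  # e.g. timeout, 5xx response, connection error
            errors[name] = exc
    raise AllProvidersFailed(f"all providers failed: {errors}")
```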

Benefits of Using Multi-Cloud Serverless Compared to Single-Cloud Serverless

Multi-cloud serverless offers several advantages over single-cloud serverless, enhancing flexibility, resilience, and cost efficiency.

- Increased Resilience and Availability: Deploying functions across multiple cloud providers reduces the risk of downtime. If one provider experiences an outage, the application can continue to function on other providers, ensuring high availability and business continuity. This is a significant advantage over single-cloud serverless, where the entire application is dependent on the availability of a single provider.

- Reduced Vendor Lock-in: Multi-cloud serverless prevents vendor lock-in by allowing organizations to distribute their workloads across different cloud providers. This provides the flexibility to switch providers or negotiate better pricing terms without significant disruption.

- Cost Optimization: Organizations can optimize costs by leveraging the competitive pricing models of different cloud providers. They can deploy functions on the provider that offers the best price for the required resources, or shift workloads when relative prices change.

- Best-of-Breed Services: Multi-cloud serverless allows organizations to choose the best services from each cloud provider. For example, an organization could use AWS for its object storage, Azure for its AI/ML services, and Google Cloud for its data analytics capabilities. This enables them to leverage the unique strengths of each provider and build more powerful and feature-rich applications.

- Improved Performance: Deploying functions closer to end-users can improve performance. By using multiple cloud providers located in different geographic regions, organizations can reduce latency and improve the user experience. For instance, an application can be deployed in the US East region (AWS), UK South region (Azure), and Asia-Pacific region (Google Cloud).

Identifying Business Needs and Use Cases

A successful multi-cloud serverless strategy is predicated on a clear understanding of the business’s objectives and the specific use cases that benefit from a distributed architecture. Identifying these needs and use cases allows for a targeted and effective implementation, maximizing the advantages of serverless computing across multiple cloud providers. This involves careful analysis of existing systems, future requirements, and the potential benefits offered by leveraging the strengths of each cloud platform.

Common Business Scenarios Benefiting from Multi-Cloud Serverless

Several business scenarios demonstrate the value of a multi-cloud serverless approach. These examples showcase how different organizations can leverage this strategy to improve resilience, reduce costs, and enhance performance.

- Disaster Recovery and Business Continuity: Implementing a multi-cloud serverless architecture significantly enhances disaster recovery capabilities. By replicating critical workloads across different cloud providers, businesses can ensure continuous operation even if one provider experiences an outage. Serverless functions can fail over automatically to a healthy region or provider, minimizing downtime and data loss. For example, a financial institution could replicate its transaction processing system across multiple clouds, ensuring that transactions continue to be processed even if one cloud provider’s infrastructure is unavailable. This approach contrasts with traditional disaster recovery methods, which can be complex and expensive.

- Geographic Distribution and Low-Latency Applications: Multi-cloud serverless is ideal for applications that require low latency for users located in geographically diverse regions. Deploying serverless functions closer to end-users improves performance and user experience. Content Delivery Networks (CDNs) can be easily integrated with serverless functions to cache static content closer to users. Consider an e-commerce platform that serves customers globally. Using serverless functions, the platform can deploy product catalogs, shopping cart functionalities, and payment processing services in multiple regions across different cloud providers. This ensures fast loading times and a responsive user experience for customers regardless of their location.

- Vendor Lock-in Mitigation and Cost Optimization: A multi-cloud serverless strategy reduces vendor lock-in, allowing businesses to choose the best services from each cloud provider and avoid being overly reliant on a single vendor. This also enables cost optimization by leveraging the most cost-effective services for specific workloads. For instance, a company might use one cloud provider for its object storage due to competitive pricing, while utilizing another for its compute-intensive tasks, taking advantage of its specialized services. This flexibility empowers businesses to negotiate better terms with cloud providers and switch providers as needed, fostering competition and lowering overall costs.

- Compliance and Regulatory Requirements: Certain industries are subject to stringent compliance regulations that dictate where data can be stored and processed. Multi-cloud serverless enables businesses to meet these requirements by deploying workloads in specific geographic regions and using cloud providers that comply with the necessary standards. For example, a healthcare provider might store patient data in a cloud region located within its own country to comply with data privacy laws. Another example is a government agency that must meet sovereign cloud requirements.

- Application Modernization and Innovation: Multi-cloud serverless accelerates application modernization efforts. By decoupling monolithic applications into serverless microservices, businesses can gradually migrate workloads to the cloud and adopt new technologies. This approach allows for faster development cycles and easier integration with third-party services. Furthermore, the agility of serverless allows for rapid experimentation with new features and services, fostering innovation.

Assessing Requirements for Multi-Cloud Serverless Implementation

A thorough assessment of the requirements is crucial for a successful multi-cloud serverless implementation. This process involves analyzing existing systems, identifying key dependencies, and defining specific performance and security requirements.

- Workload Analysis: This involves identifying the specific workloads that are suitable for a multi-cloud serverless architecture. Factors to consider include the nature of the workload (e.g., stateless, event-driven), its resource requirements (e.g., compute, storage, memory), and its dependencies on other services. The analysis should also consider the potential for workload isolation and the ability to deploy workloads across different cloud providers.

- Dependency Mapping: Mapping the dependencies of each workload is essential to ensure that all required services and resources are available across the selected cloud providers. This includes identifying any third-party services, databases, or other components that the workload relies on. Dependency mapping helps in identifying potential integration challenges and the need for specific cross-cloud communication strategies.

- Performance Requirements: Defining clear performance requirements is crucial to ensure that the multi-cloud serverless solution meets the business’s needs. This includes setting Service Level Objectives (SLOs) for latency, throughput, and availability. Performance testing and monitoring tools should be used to validate that the implemented solution meets these requirements.

- Security and Compliance Requirements: Security is a paramount concern in a multi-cloud environment. The assessment must identify the security requirements, including data encryption, access control, and compliance with relevant regulations. The implementation should incorporate robust security measures across all cloud providers, ensuring data protection and compliance. For example, the assessment should consider how to manage secrets, secure network traffic, and implement identity and access management (IAM) across multiple cloud platforms.

- Cost Analysis: Evaluating the cost implications of a multi-cloud serverless strategy is crucial. This involves comparing the pricing models of different cloud providers and optimizing resource allocation to minimize costs. The cost analysis should consider the cost of compute, storage, networking, and other services. It is also important to factor in the costs associated with managing and monitoring the multi-cloud environment.

- Skills and Expertise: Assessing the skills and expertise of the organization’s IT team is vital. A multi-cloud serverless strategy requires expertise in serverless technologies, cloud provider services, and cross-cloud integration. If the necessary skills are lacking, the organization may need to invest in training or hire external consultants.
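The performance requirement above can be turned into an automated check that runs against metrics collected from each provider. This is a minimal sketch, assuming latency samples for successful requests and a failure count have already been exported from each provider's monitoring. The `Slo` type and the 95th-percentile latency target are illustrative choices, and the percentile uses the nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class Slo:
    """Illustrative SLO targets; real values come from the business requirements."""
    p95_latency_ms: float  # 95th-percentile latency objective for successful requests
    availability: float    # fraction of requests that must succeed, e.g. 0.999


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (pct in the range (0, 100])."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_slo(success_latencies_ms: list[float], failures: int, slo: Slo) -> dict[str, bool]:
    """Compare measured latency and availability against the SLO targets."""
    if not success_latencies_ms:
        raise ValueError("need at least one successful request to evaluate latency")
    total = len(success_latencies_ms) + failures
    availability = len(success_latencies_ms) / total
    return {
        "latency_ok": percentile(success_latencies_ms, 95) <= slo.p95_latency_ms,
        "availability_ok": availability >= slo.availability,
    }
```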

Factors Driving Adoption of Multi-Cloud Serverless Solutions

Several factors are driving the increasing adoption of multi-cloud serverless solutions across various industries. These factors highlight the benefits and advantages of this approach.

- Increased Business Agility: Multi-cloud serverless enables businesses to respond quickly to changing market demands and innovate faster. The ability to deploy and scale applications rapidly across multiple clouds allows for quicker time-to-market and faster experimentation.

- Improved Resilience and Availability: The distributed nature of a multi-cloud serverless architecture enhances resilience and availability. By replicating workloads across different cloud providers, businesses can minimize the impact of outages and ensure continuous operation.

- Cost Efficiency: Multi-cloud serverless can lead to significant cost savings by allowing businesses to leverage the most cost-effective services from different cloud providers. The pay-as-you-go pricing model of serverless also helps to optimize resource utilization and reduce waste.

- Reduced Vendor Lock-in: Multi-cloud serverless mitigates vendor lock-in by allowing businesses to distribute their workloads across multiple cloud providers. This flexibility gives businesses greater control over their cloud strategy and the ability to switch providers as needed.

- Enhanced Security: Multi-cloud serverless architectures can support a layered approach to security: businesses can use the security features of each cloud provider and implement cross-cloud security controls to protect their data and applications. Each additional provider also expands the attack surface and the number of IAM models to manage, so these controls must be applied consistently.

- Increased Developer Productivity: Serverless technologies simplify application development and deployment, leading to increased developer productivity. Developers can focus on writing code and building applications without managing infrastructure. The availability of pre-built serverless components and services further accelerates development cycles.

- Growing Maturity of Serverless Technologies: The serverless ecosystem is rapidly maturing, with cloud providers offering a wide range of serverless services and tools. This maturity makes it easier to build, deploy, and manage serverless applications.

- Increased Demand for Scalability and Performance: Businesses are increasingly demanding scalable and high-performance applications. Multi-cloud serverless provides the scalability and performance needed to meet these demands.

Choosing the Right Cloud Providers

Selecting the appropriate cloud providers is a pivotal step in architecting a successful multi-cloud serverless strategy. This decision significantly impacts application performance, cost-efficiency, vendor lock-in, and overall resilience. Careful consideration of each provider’s strengths, weaknesses, and pricing models is crucial to make informed choices that align with specific business requirements and technical objectives.

Comparing Cloud Provider Strengths and Weaknesses for Serverless Deployments

The landscape of serverless computing is dominated by Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each provider offers a range of services, but their capabilities, pricing structures, and ecosystems vary. Understanding these differences is fundamental for making informed deployment decisions.

- AWS: AWS offers the most mature and extensive serverless ecosystem, with a wide array of services.

- Strengths: Mature ecosystem, extensive service portfolio, broad geographic availability, and robust community support. AWS Lambda, its flagship compute service, supports numerous programming languages and provides tight integration with other AWS services like API Gateway, DynamoDB, and S3. AWS also offers specialized serverless services such as Step Functions for orchestrating complex workflows and EventBridge for event-driven architectures.

- Weaknesses: The sheer number of services can make AWS complex to navigate, and its pricing is intricate enough that cost management requires careful optimization. Vendor lock-in is a concern due to the tight integration of its services.

- Azure: Azure provides a strong serverless platform, particularly well-suited for organizations already invested in the Microsoft ecosystem.

- Strengths: Excellent integration with .NET and Windows technologies, strong focus on hybrid cloud solutions, and competitive pricing. Azure Functions provides a versatile compute service, and its integration with services like Azure Cosmos DB and Azure Event Hubs is seamless. Azure also offers Azure Logic Apps for workflow automation.

- Weaknesses: The serverless ecosystem is less mature than AWS, and geographic availability might be less extensive in certain regions. While improving, the range of programming language support is still somewhat limited compared to AWS.

- GCP: GCP is known for its innovation in areas like containerization and data analytics, and it offers a compelling serverless platform.

- Strengths: Strong focus on open-source technologies, excellent performance, and competitive pricing. Google Cloud Functions provides a scalable compute service, and its integration with services like Cloud Storage, Cloud Pub/Sub, and BigQuery is efficient. GCP also offers Cloud Run for containerized serverless applications.

- Weaknesses: The serverless ecosystem is smaller than AWS, and the user interface can be less intuitive for some users. The overall geographic availability might be less extensive than AWS.

Designing a Decision-Making Framework for Selecting Cloud Providers

A structured decision-making framework is essential for choosing the right cloud providers based on specific requirements. This framework should consider several factors, allowing for a balanced assessment.

- Requirement Analysis: The initial step involves a thorough analysis of the application’s functional and non-functional requirements.

- Performance Requirements: Consider latency, throughput, and scalability needs. Certain providers might offer superior performance for specific workloads. For example, if an application is highly reliant on data analytics, GCP’s BigQuery integration might be a significant advantage.

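- Cost Requirements: Evaluate the total cost of ownership (TCO), including compute, storage, networking, and data transfer costs. Use pricing calculators and perform cost simulations to estimate expenses. Consider the different pricing models offered by each provider, such as pay-per-use, pre-provisioned capacity for latency-sensitive functions (e.g., AWS Lambda provisioned concurrency or the Azure Functions Premium plan), and commitment-based discounts (e.g., AWS Compute Savings Plans, which also cover Lambda). Reserved and spot instances apply to virtual machines rather than to serverless functions.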

- Compliance and Security Requirements: Determine the required compliance standards (e.g., HIPAA, GDPR) and security features. Assess each provider’s certifications, security protocols, and data residency options.

- Geographic Requirements: Consider the geographic distribution of users and the need for data residency. Ensure the selected providers offer services in the required regions.

- Vendor Lock-in: Evaluate the level of vendor lock-in associated with each provider. Consider the portability of the application and the ease of migrating between providers.

- Provider Evaluation: Once the requirements are defined, evaluate each provider based on the following criteria.

- Service Maturity: Assess the maturity and stability of the serverless services offered by each provider. Consider the availability of features, documentation, and community support.

- Integration Capabilities: Evaluate the integration capabilities of each provider’s services with other services and third-party tools.

- Operational Efficiency: Assess the ease of deployment, monitoring, and management of serverless applications.

- Cost Optimization: Evaluate the pricing models and cost optimization tools offered by each provider.

- Vendor Reputation and Support: Consider the provider’s reputation, customer support, and community support.

- Decision Matrix: Use a decision matrix to compare providers based on the evaluation criteria. Assign weights to each criterion based on its importance and score each provider accordingly. This matrix helps in visualizing the trade-offs and making an informed decision.

- Pilot Project: Conduct a pilot project to validate the chosen provider’s capabilities and performance. Deploy a small-scale application and monitor its performance, cost, and operational efficiency. This helps in identifying potential issues and making adjustments before a full-scale deployment.

- Continuous Monitoring and Optimization: Implement continuous monitoring and optimization strategies to ensure the application’s performance and cost-effectiveness. Regularly review the provider selection and make adjustments as needed.
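The decision matrix step in the framework above reduces to a weighted average per provider. This is a minimal sketch, assuming every criterion is scored on the same scale with higher meaning better (so a high `lock_in` score means little lock-in). The provider names, weights, and scores are placeholders, not real assessments.

```python
def rank_providers(
    weights: dict[str, float],
    scores: dict[str, dict[str, float]],
) -> list[tuple[str, float]]:
    """Rank providers by the weighted average of their per-criterion scores."""
    total_weight = sum(weights.values())
    ranked = []
    for provider, criteria in scores.items():
        weighted = sum(weight * criteria[name] for name, weight in weights.items())
        ranked.append((provider, round(weighted / total_weight, 2)))
    # Highest score first; ties keep their input order (Python's sort is stable).
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder weights (relative importance) and 1-5 scores; not real assessments.
    weights = {"performance": 3, "cost": 2, "compliance": 3, "lock_in": 1}
    scores = {
        "provider_a": {"performance": 4, "cost": 3, "compliance": 5, "lock_in": 2},
        "provider_b": {"performance": 5, "cost": 4, "compliance": 3, "lock_in": 4},
    }
    for provider, score in rank_providers(weights, scores):
        print(f"{provider}: {score}")
```

Keeping weights and scores as data makes the trade-offs explicit and easy to revisit during the continuous review step.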

Comparing Pricing Models for Serverless Services

Pricing models vary significantly across different cloud providers. Understanding these models is critical for cost optimization and making informed decisions. The following table provides a comparative overview.

| Service category | AWS | Azure | GCP |
|---|---|---|---|
| Compute (function execution) | Pay-per-use based on number of requests and execution duration scaled by allocated memory (GB-seconds). Free tier available. | Consumption plan: pay-per-use based on number of executions and execution time scaled by memory (GB-seconds). Free grant available. | Pay-per-use based on invocations, compute time scaled by allocated memory and CPU, and networking. Free tier available. |
| Object storage | Amazon S3: pay-per-use based on storage capacity, requests, and data transfer. Pricing varies by storage class (e.g., S3 Standard, S3 Glacier). | Azure Blob Storage: pay-per-use based on storage capacity, requests, and data transfer. Pricing varies by access tier (e.g., Hot, Cool, Archive). | Cloud Storage: pay-per-use based on storage capacity, requests, and data transfer. Pricing varies by storage class (e.g., Standard, Nearline, Coldline, Archive). |
| API gateway | Pay-per-use based on API calls and data transfer. Free tier available. | Azure API Management: pay-per-call in the Consumption tier (free grant available); other tiers are billed for provisioned capacity. | Pay-per-use based on API calls and data transfer. Free tier available. |
| NoSQL database | DynamoDB: on-demand (per read/write request unit) or provisioned capacity (read/write capacity units), plus storage. | Cosmos DB: provisioned throughput in Request Units per second (RU/s) or serverless (per RU consumed), plus storage. | Firestore: per document read, write, and delete, plus storage. |
| Monitoring and logging | Pay-per-use based on data ingested and stored. | Pay-per-use based on data ingested and stored. | Pay-per-use based on data ingested and stored. |

The table provides a simplified overview, and the actual pricing can vary based on region, usage patterns, and other factors. For example, consider a scenario where an application requires frequent data processing and storage. AWS might offer cost advantages with its S3 storage and Lambda compute services, whereas Azure could be more cost-effective if the application heavily uses .NET-based functions and Azure Blob Storage. Google Cloud Platform might be more attractive if the application leverages BigQuery for data analysis.
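The compute row of the pricing comparison follows the same shape on each provider: a per-request charge plus a charge per GB-second, less any monthly free allowance. This is a minimal sketch of that model for comparing providers. `FaasPricing` and every rate passed to it are hypothetical placeholders; take current figures from each provider's pricing page. The sketch ignores duration rounding, data transfer, API gateway, and storage charges.

```python
from dataclasses import dataclass


@dataclass
class FaasPricing:
    """Per-provider function rates. All values are placeholders, not real prices."""
    price_per_million_requests: float
    price_per_gb_second: float
    free_requests: int = 0          # monthly free tier or grant, if any
    free_gb_seconds: float = 0.0


def monthly_compute_cost(pricing: FaasPricing, invocations: int,
                         avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate monthly function compute cost from request count and GB-seconds."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(invocations - pricing.free_requests, 0)
    billable_gb_seconds = max(gb_seconds - pricing.free_gb_seconds, 0.0)
    return (billable_requests / 1_000_000 * pricing.price_per_million_requests
            + billable_gb_seconds * pricing.price_per_gb_second)
```

Running the same workload profile (invocations, average duration, memory) through each provider's `FaasPricing` gives a like-for-like comparison. Data transfer between clouds then has to be added separately.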

Designing the Serverless Architecture

Designing a multi-cloud serverless architecture requires careful planning and execution to ensure optimal performance, resilience, and cost-effectiveness. This involves selecting the appropriate services from each cloud provider, designing the event flows, and implementing robust monitoring and management strategies. The architecture must be adaptable to changes in workload and capable of handling failures gracefully.

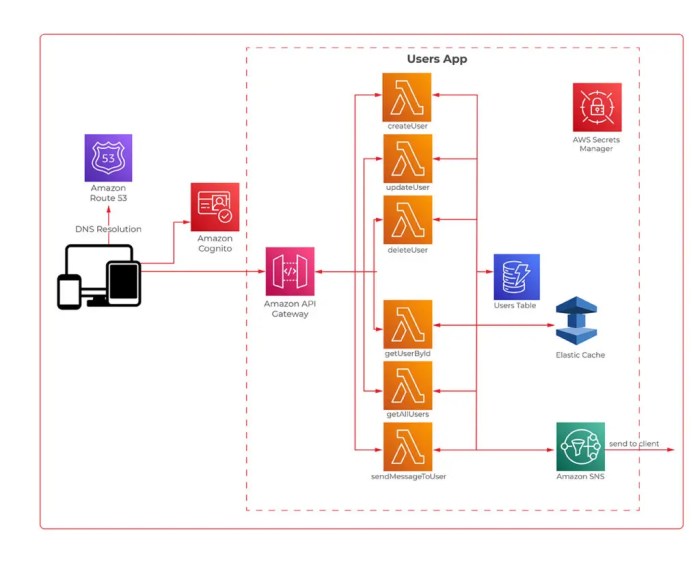

Multi-Cloud Serverless Architecture Diagram for Image Processing

A well-designed multi-cloud serverless architecture for image processing leverages the strengths of different cloud providers to achieve high availability, scalability, and cost optimization. The diagram illustrates a possible implementation. The architecture uses three cloud providers: AWS, Google Cloud Platform (GCP), and Azure. Each provider hosts specific components of the image processing pipeline.

- User Interaction: Users upload images through a web or mobile application. This application is the entry point for the entire process.

- API Gateway (AWS API Gateway): AWS API Gateway receives image upload requests. It acts as a central point of entry and handles authentication, authorization, and rate limiting.

- Object Storage (AWS S3): The uploaded images are stored in an Amazon S3 bucket, which provides durable and scalable object storage.

- Event Trigger (AWS S3 Event Notifications): When a new image is uploaded to S3, an event notification is triggered.

- Function 1 (AWS Lambda): An AWS Lambda function is triggered by the S3 event. This function performs initial processing steps, such as resizing and format conversion.

- Message Queue (AWS SQS): References to the processed images (for example, their S3 keys) and processing instructions are sent to an AWS SQS queue. Because SQS messages are size-limited, the image bytes themselves stay in object storage.

- Function 2 (GCP Cloud Functions): A Google Cloud Function handles more complex image processing tasks, such as applying filters or recognizing objects. Cloud Functions cannot consume SQS messages natively, so a bridge is needed, for example a component that reads from the queue and forwards each message to Cloud Pub/Sub or to an HTTP-triggered function.

- Object Storage (GCP Cloud Storage): Processed images are stored in Google Cloud Storage.

- Function 3 (Azure Functions): An Azure Function handles tasks such as image analysis or metadata extraction. Azure Functions has no native Cloud Storage trigger, so the event must be relayed, for example through a Cloud Storage notification to Pub/Sub with a push subscription that calls an HTTP-triggered Azure Function.

- Database (Azure Cosmos DB): The extracted metadata is stored in Azure Cosmos DB, providing a globally distributed, scalable database.

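- Content Delivery Network (CDN): A CDN (e.g., CloudFront, Cloud CDN, or Azure CDN) is used to serve the processed images to users. This ensures fast and efficient content delivery.

This architecture offers several benefits:

- High Availability: Distributing the workload across multiple cloud providers can help the system withstand a failure at a single provider. As described, however, the stages run in sequence on different providers. For an outage at one provider not to halt the pipeline, each stage needs a fallback, such as a standby deployment of the same function on another provider.

- Scalability: Each function can scale independently based on demand.

- Cost Optimization: The system can use the most cost-effective service for each task. Any savings must be weighed against the cross-cloud data transfer (egress) charges incurred at each hop.

- Vendor Lock-in Mitigation: Using multiple providers reduces the risk of vendor lock-in.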
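Function 1's hand-off to the queue can be sketched in Python. This is a minimal sketch under stated assumptions: the resize step is omitted, the queue URL comes from a hypothetical `QUEUE_URL` environment variable, and the message fields (`bucket`, `key`, `next_step`) are illustrative. It reads the S3 event notification record layout and calls boto3's SQS `send_message`. The SQS client can be injected so that the logic can be tested without AWS.

```python
import json
import os
from typing import Any
from urllib.parse import unquote_plus


def build_messages(event: dict[str, Any]) -> list[dict[str, str]]:
    """Turn an S3 event notification into small queue messages.

    Only the bucket and key travel through the queue; the image bytes stay
    in S3 because SQS messages are size-limited.
    """
    messages = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        # "next_step" is a hypothetical field telling Function 2 what to do.
        messages.append({"bucket": bucket, "key": key, "next_step": "apply_filters"})
    return messages


def handler(event: dict[str, Any], context: Any, sqs_client: Any = None) -> dict[str, int]:
    """Lambda entry point for Function 1 (resize step omitted in this sketch)."""
    if sqs_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        sqs_client = boto3.client("sqs")
    queue_url = os.environ["QUEUE_URL"]  # hypothetical environment variable
    messages = build_messages(event)
    for message in messages:
        sqs_client.send_message(QueueUrl=queue_url, MessageBody=json.dumps(message))
    return {"sent": len(messages)}
```

Only references cross the queue. Function 2 still has to fetch each image from S3, and that download is where cross-cloud data transfer is incurred.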

API Gateways in a Multi-Cloud Serverless Environment

API gateways play a crucial role in a multi-cloud serverless environment. They provide a single entry point for all API requests, abstracting the underlying complexity of the serverless functions and cloud infrastructure. This abstraction allows developers to manage, secure, and monitor APIs effectively, regardless of the cloud provider hosting the backend services. API gateways offer several key features:

- Routing: API gateways route incoming requests to the appropriate backend services. This routing can be based on the request path, method, or other criteria.

- Authentication and Authorization: API gateways handle authentication and authorization, ensuring that only authorized users can access the APIs. This can involve using API keys, OAuth, or other authentication methods.

- Rate Limiting: API gateways can implement rate limiting to protect the backend services from being overwhelmed by excessive traffic.

- Request Transformation: API gateways can transform incoming requests before forwarding them to the backend services. This can include modifying the request headers, body, or parameters.

- Response Transformation: API gateways can transform the responses from the backend services before returning them to the client.

- Monitoring and Logging: API gateways provide comprehensive monitoring and logging capabilities, allowing developers to track API usage, performance, and errors.

The choice of API gateway depends on the specific requirements of the multi-cloud serverless environment. Options include:

- Cloud-Specific API Gateways: AWS API Gateway, Google Cloud API Gateway, and Azure API Management are fully managed services that integrate seamlessly with their respective cloud platforms. They offer a wide range of features and are easy to configure.

- Third-Party API Gateways: Solutions like Kong, Tyk, and Apigee can be deployed across multiple cloud providers, providing a consistent API management experience. These solutions often offer advanced features and integrations.

- Open Source API Gateways: Open source API gateways like Apache APISIX and Traefik provide flexibility and customization options.

Considerations when selecting an API gateway include:

- Feature Set: The API gateway should provide all the necessary features, such as routing, authentication, authorization, rate limiting, and monitoring.

- Scalability: The API gateway should be able to handle the expected traffic volume.

- Cost: Compare the per-request pricing of managed gateways with the infrastructure and operational cost of running a self-hosted gateway at the expected traffic volume.

- Ease of Use: The API gateway should be easy to configure and manage.

- Integration: The API gateway should integrate seamlessly with the cloud providers and backend services.
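Two of the gateway features described in this section, path-based routing and rate limiting, can be sketched in a few lines. This shows what a gateway does internally; it is not a replacement for one. It is a minimal sketch, assuming a static route table that maps a method and path prefix to a backend on some provider. The `ROUTES` entries and `.invalid` URLs are hypothetical. Rate limiting uses a token bucket, a common technique, with an injectable clock for testing.

```python
import time
from typing import Callable, Optional

# Hypothetical route table: (HTTP method, path prefix) -> backend on some provider.
ROUTES: dict[tuple[str, str], str] = {
    ("POST", "/orders"): "https://orders.aws.example.invalid",
    ("GET", "/catalog"): "https://catalog.gcp.example.invalid",
}


def route(method: str, path: str) -> Optional[str]:
    """Return the backend whose prefix is the longest match for the request path."""
    best: Optional[tuple[str, str]] = None
    for (route_method, prefix), backend in ROUTES.items():
        matches = path == prefix or path.startswith(prefix + "/")
        if route_method == method and matches:
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, backend)
    return best[1] if best else None


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per API key or client and answer HTTP 429 when `allow()` returns `False`.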

Designing Event-Driven Architectures Across Multiple Cloud Providers

Event-driven architectures are well-suited for multi-cloud serverless environments. They allow for loosely coupled, asynchronous communication between services, promoting resilience and scalability. However, designing event-driven architectures across multiple cloud providers requires careful consideration of the different eventing services and their interoperability. Key considerations include:

- Event Format: Establish a standardized event format (e.g., CloudEvents) to ensure compatibility between different cloud providers. This allows events to be easily consumed and processed by services running on different platforms.

Event Delivery

Implement reliable event delivery mechanisms. This may involve using message queues, event buses, or other services that guarantee event delivery even in the event of failures.

Event Routing

Design event routing strategies to ensure that events are delivered to the appropriate services. This can involve using event filters, topic subscriptions, or other routing mechanisms.

Error Handling

Implement robust error handling mechanisms to handle event processing failures. This may involve retrying failed events, dead-letter queues, or other error handling strategies.

Security

Secure event communication by encrypting events and using authentication and authorization mechanisms.

Monitoring and Observability

Implement comprehensive monitoring and observability to track event flow, performance, and errors.Several approaches can be used to implement event-driven architectures across multiple cloud providers:* Using a Centralized Event Bus: A centralized event bus, such as Apache Kafka, can be deployed across multiple cloud providers. Services on different providers can publish and subscribe to events on the bus.

Using Cloud-Specific Eventing Services with Interoperability

Services like AWS EventBridge, Google Cloud Pub/Sub, and Azure Event Grid can be used with appropriate configuration and connectors. This involves configuring these services to communicate with each other, often using webhooks or other integration mechanisms.

Leveraging Serverless Functions as Event Handlers

Serverless functions can be used to act as event handlers. These functions can be triggered by events from different cloud providers and perform the necessary processing.

Implementing a Hybrid Approach

A hybrid approach that combines the above strategies can be used. For example, a centralized event bus can be used for core events, while cloud-specific eventing services are used for more specific tasks.Example: An e-commerce platform might use an event-driven architecture across multiple cloud providers. When a customer places an order, an event is published to an event bus.

This event triggers functions on different cloud providers to perform various tasks, such as:* AWS: Update the order status in the order management system.

GCP

Send a notification to the fulfillment center.

Azure

Update the customer’s profile in the CRM system.By carefully considering these factors, organizations can design robust and scalable event-driven architectures that leverage the strengths of multiple cloud providers. This allows them to build highly resilient and efficient applications.
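The event-format, delivery, and error-handling considerations can be combined in a small sketch. Events are wrapped in a CloudEvents 1.0 envelope (that specification requires the `specversion`, `id`, `source`, and `type` attributes), and a dispatcher routes them by `type` to subscribers, retries failures, dead-letters events that keep failing, and skips duplicates by event id, since at-least-once delivery can redeliver. The in-memory dispatcher is a stand-in for a real bus, and the event type, source, and subscriber names are hypothetical.

```python
import uuid
from datetime import datetime, timezone
from typing import Callable, Dict, List, Set, Tuple

Handler = Callable[[dict], None]

def make_event(event_type: str, source: str, data: dict) -> dict:
    """Build a CloudEvents 1.0 envelope in structured (JSON-compatible) form."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }

class Dispatcher:
    """In-memory stand-in for an event bus with per-subscriber retries and a DLQ."""

    def __init__(self, max_attempts: int = 3):
        self.max_attempts = max_attempts
        self.subscribers: Dict[str, List[Tuple[str, Handler]]] = {}
        self.processed: Set[Tuple[str, str]] = set()  # (subscriber, event id)
        self.dead_letters: List[dict] = []

    def subscribe(self, name: str, event_type: str, handler: Handler) -> None:
        self.subscribers.setdefault(event_type, []).append((name, handler))

    def deliver(self, event: dict) -> None:
        for name, handler in self.subscribers.get(event["type"], []):
            key = (name, event["id"])
            if key in self.processed:
                continue  # duplicate delivery: this subscriber already handled it
            for attempt in range(1, self.max_attempts + 1):
                try:
                    handler(event)
                    self.processed.add(key)
                    break
                except Exception as exc:  # retry, then dead-letter
                    if attempt == self.max_attempts:
                        self.dead_letters.append(
                            {"subscriber": name, "event": event, "error": repr(exc)})
```

In a deployed function, the record of processed ids would need to live in durable storage (for example, a table written with a conditional insert), because function instances do not reliably keep memory between invocations. The providers’ built-in retry and dead-letter settings would normally replace the in-process retry loop.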

Data Management and Storage Considerations

Data management and storage are critical aspects of a multi-cloud serverless strategy. Effective handling of data across diverse cloud environments ensures data integrity, availability, and security. This section outlines key considerations for data consistency, replication, backup, and security in a multi-cloud serverless setup.

Managing Data Consistency and Synchronization

Maintaining data consistency across different cloud storage services is a significant challenge in a multi-cloud serverless environment. Several strategies can be employed to address this, ranging from eventual consistency models to more complex, strongly consistent solutions. The choice depends on the application’s requirements for data accuracy and the acceptable latency.

Eventual consistency is often a practical approach for many applications where immediate consistency isn’t critical. This model acknowledges that data updates may take time to propagate across all storage locations. Consider the following techniques:

- Timestamping: Implement a system that uses timestamps to track the latest version of the data. When reading from several replicas, return the version with the most recent timestamp. This yields the newest version among the replicas consulted even while propagation is still in progress, provided clocks are reasonably synchronized; clock skew between providers can cause writes to be misordered.

- Vector Clocks: Utilize vector clocks to manage concurrent updates and identify causal relationships between data modifications. A vector clock is a logical clock that tracks the version of data across multiple nodes in a distributed system. Each node maintains a vector clock, which is updated whenever the node modifies its local data. The vector clock allows for the detection of concurrent updates and provides a mechanism for resolving conflicts.

- Conflict Resolution Strategies: Employ conflict resolution mechanisms if eventual consistency is chosen. This may involve “last write wins,” where the most recent write overwrites older versions, or more sophisticated methods like application-specific logic to merge conflicting changes.
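The vector-clock and conflict-resolution techniques above fit together as follows: each replica increments its own counter on a local write, clocks are compared element-wise to decide whether one version causally precedes another, and only truly concurrent versions fall back to last-write-wins. The version record layout (`value`, `clock`, `ts`, `node`) and the node names are hypothetical; this is a sketch, not a production conflict resolver.

```python
from typing import Dict

VectorClock = Dict[str, int]

def increment(clock: VectorClock, node: str) -> VectorClock:
    """Return a copy of `clock` with `node`'s counter advanced (a local write)."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated

def merge(a: VectorClock, b: VectorClock) -> VectorClock:
    """Element-wise maximum, applied when a replica receives another's update."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in set(a) | set(b)}

def compare(a: VectorClock, b: VectorClock) -> str:
    """Return 'before', 'after', 'equal', or 'concurrent' for a relative to b."""
    nodes = set(a) | set(b)
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"

def resolve(v1: dict, v2: dict) -> dict:
    """Keep the causally newer version; for concurrent versions, use
    last-write-wins on the wall-clock timestamp (ties broken by node id)."""
    order = compare(v1["clock"], v2["clock"])
    if order == "before":
        return v2
    if order in ("after", "equal"):
        return v1
    return max(v1, v2, key=lambda v: (v["ts"], v["node"]))
```

Note that causality takes precedence over timestamps: a version that causally follows another wins even if its wall-clock timestamp is older, which protects against clock skew for all but concurrent writes.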

For applications demanding strong consistency, a distributed transaction approach may be necessary, albeit with added complexity and potential performance impacts. Here’s how to approach this:

- Two-Phase Commit (2PC): Use the two-phase commit protocol to coordinate transactions across multiple cloud storage services. In 2PC, a coordinator manages the transaction. The coordinator first requests all participating storage services to prepare (i.e., ensure they can commit the transaction). If all storage services respond positively, the coordinator then instructs them to commit. If any storage service fails to prepare, the coordinator instructs all participants to roll back the transaction. While effective, 2PC adds network round trips to every transaction and is a blocking protocol: if the coordinator fails after participants have prepared, they may hold locks until it recovers. It also requires every participant to support a prepare step, which many managed cloud storage services do not expose.

- Distributed Transactions with ACID Properties: Implement a distributed transaction system that adheres to the ACID (Atomicity, Consistency, Isolation, Durability) properties. Several distributed SQL databases, such as CockroachDB and YugabyteDB, provide this and can run nodes in more than one cloud, allowing coordinated data changes across providers. This ensures that either all changes succeed or all changes are rolled back.

- Idempotent Operations: Design operations to be idempotent, meaning they can be executed multiple times without changing the outcome beyond the initial execution. This is crucial for handling retries and ensuring data consistency, especially in the presence of network or service failures.
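A minimal sketch of the two-phase commit flow described above, using in-memory participants. It assumes each participant can stage writes on `prepare` and apply them on `commit`; the `Participant` class is hypothetical, and a real coordinator would also durably log its commit or abort decision so it can finish the protocol after a crash. Because `commit` and `abort` are no-ops when nothing is staged, repeating them after a retry is harmless, which is the idempotency property listed above.

```python
from typing import Dict, List, Tuple

class Participant:
    """Hypothetical storage service exposing prepare/commit/abort."""

    def __init__(self, name: str, fail_prepare: bool = False):
        self.name = name
        self.fail_prepare = fail_prepare  # simulate a "no" vote
        self.staged: Dict[str, dict] = {}
        self.data: dict = {}

    def prepare(self, txn_id: str, writes: dict) -> bool:
        if self.fail_prepare:
            return False
        self.staged[txn_id] = dict(writes)  # stand-in for durably staging writes
        return True

    def commit(self, txn_id: str) -> None:
        self.data.update(self.staged.pop(txn_id, {}))  # no-op if already committed

    def abort(self, txn_id: str) -> None:
        self.staged.pop(txn_id, None)  # no-op if nothing is staged

def two_phase_commit(txn_id: str, work: List[Tuple[Participant, dict]]) -> bool:
    # Phase 1: every participant must vote yes by successfully preparing.
    for participant, writes in work:
        if not participant.prepare(txn_id, writes):
            # Any "no" vote: tell all participants to roll back.
            for p, _ in work:
                p.abort(txn_id)
            return False
    # Phase 2: the vote was unanimous, so instruct every participant to commit.
    for participant, _ in work:
        participant.commit(txn_id)
    return True
```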

Data Replication and Backup Methods

Data replication and backup strategies are vital for ensuring data availability and disaster recovery in a multi-cloud serverless setup. Different approaches can be used depending on the data volume, recovery time objectives (RTO), and recovery point objectives (RPO).

Consider the following approaches for data replication:

- Asynchronous Replication: Implement asynchronous replication to copy data between different cloud storage services. This method offers good write performance because the primary acknowledges writes without waiting for the replica. The replica therefore lags behind the primary, and any writes not yet replicated when a failure occurs can be lost; that lag bounds the achievable RPO.

- Synchronous Replication: Synchronous replication provides stronger consistency. The data is written to multiple storage locations before the write is acknowledged. This approach is slower than asynchronous replication, because every write waits for a cross-cloud round trip, but every acknowledged write is guaranteed to exist in all locations.

- Active-Passive Replication: Set up an active-passive configuration where one cloud provider serves as the primary data source (active), and another provider stores a replicated copy (passive). The passive site remains in standby mode until the primary site fails.

- Active-Active Replication: Implement an active-active configuration, where both cloud providers serve read and write requests. This approach improves availability and performance but increases complexity due to the need to resolve data conflicts.
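The difference between asynchronous and synchronous replication can be shown with a small simulation: two dictionaries stand in for storage in two clouds, a backlog models replication lag, and `fail_over` models losing the primary. The class and its methods are hypothetical and illustrate only the data-loss behavior, not any provider’s replication feature.

```python
from collections import deque
from typing import Deque, Optional, Tuple

class ReplicatedStore:
    """Primary in one cloud, replica in another (both simulated as dicts)."""

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.primary: dict = {}
        self.replica: dict = {}
        self.pending: Deque[Tuple[str, object]] = deque()  # async backlog

    def write(self, key: str, value: object) -> None:
        self.primary[key] = value
        if self.synchronous:
            # Acknowledge only once the replica has the write.
            self.replica[key] = value
        else:
            # Acknowledge immediately; ship the change later.
            self.pending.append((key, value))

    def replicate(self, max_items: Optional[int] = None) -> None:
        """Drain up to `max_items` changes (a background worker in practice)."""
        n = len(self.pending) if max_items is None else min(max_items, len(self.pending))
        for _ in range(n):
            key, value = self.pending.popleft()
            self.replica[key] = value

    def fail_over(self) -> dict:
        """The primary is lost: anything not yet on the replica is gone."""
        self.pending.clear()
        return dict(self.replica)
```

With asynchronous replication, writes still in the backlog at failover are lost, so the age of the oldest unreplicated write is the effective RPO at that moment.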

Data backup strategies are essential for protecting against data loss:

- Regular Backups: Establish a schedule for regular data backups to a different cloud provider or an offsite location. The interval between backups should be no longer than the RPO.

- Snapshotting: Utilize snapshotting features provided by cloud storage services to create point-in-time copies of data. Snapshots are particularly useful for quick data recovery and for testing purposes.

- Object Storage for Backups: Leverage object storage services, which offer high durability and cost-effectiveness, for storing backups.

- Automated Backup and Recovery Procedures: Automate the backup and recovery process using serverless functions and cloud-native tools to ensure consistent and reliable operations. This includes testing the recovery process regularly to validate its effectiveness.
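Automated backup procedures are only trustworthy if restores are verified and the schedule keeps pace with the RPO. The sketch below records a SHA-256 manifest at backup time, checks a restored copy against it, and tests whether the time since the last good backup is within the RPO. The dictionaries stand in for object storage buckets in two providers and all function names are hypothetical; a real job would use each provider’s SDK and run on a schedule, for example as a timer-triggered function.

```python
import hashlib
from datetime import datetime, timedelta
from typing import Dict, List

def checksum(blob: bytes) -> str:
    return hashlib.sha256(blob).hexdigest()

def back_up(source: Dict[str, bytes], target: Dict[str, bytes], now: datetime) -> dict:
    """Copy every object to the backup store and return a manifest of checksums."""
    manifest = {}
    for key, blob in source.items():
        target[key] = blob
        manifest[key] = checksum(blob)
    return {"taken_at": now, "objects": manifest}

def verify_restore(manifest: dict, restored: Dict[str, bytes]) -> List[str]:
    """Keys that are missing or whose content no longer matches the manifest."""
    return [key for key, digest in manifest["objects"].items()
            if key not in restored or checksum(restored[key]) != digest]

def meets_rpo(last_good_backup: datetime, now: datetime, rpo: timedelta) -> bool:
    """Worst-case data loss is the time elapsed since the last verified backup."""
    return now - last_good_backup <= rpo
```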

Best Practices for Data Security

Data security is paramount in a multi-cloud serverless environment. Implement robust security measures to protect data confidentiality, integrity, and availability.

- Encryption: Encrypt data both in transit and at rest. Use encryption keys managed by each cloud provider’s key management service (KMS). This includes encrypting data stored in object storage, databases, and any other data repositories.

- Access Control: Implement strict access control policies using Identity and Access Management (IAM) services. Grant the least privilege necessary to each serverless function and user.

- Network Security: Use Virtual Private Clouds (VPCs) and security groups to isolate serverless functions and restrict network traffic. Implement firewalls to control inbound and outbound connections.

- Data Masking and Tokenization: Employ data masking and tokenization techniques to protect sensitive data. This includes masking sensitive data in logs and using tokenization to replace sensitive data with non-sensitive substitutes.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities. Monitor security logs and events for suspicious activity.

- Compliance and Governance: Adhere to relevant industry regulations and compliance standards (e.g., GDPR, HIPAA). Ensure that data handling practices comply with the security requirements of each cloud provider.

- Data Loss Prevention (DLP): Implement DLP measures to prevent unauthorized data access and leakage. This involves monitoring data access and usage patterns to detect and prevent data breaches.

- Secure Serverless Function Code: Secure the code deployed to serverless functions. This includes using secure coding practices, regular code reviews, and vulnerability scanning.
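The masking and tokenization practice above can be sketched with the Python standard library. The regular expressions are illustrative heuristics, not a complete PII detector. `tokenize` is a keyed, one-way variant (HMAC-SHA256): equal inputs map to equal tokens so records can still be joined, but the original value cannot be recovered from the token; reversible tokenization would instead need a token vault. The key is assumed to come from a KMS or secrets manager, never from source code.

```python
import hashlib
import hmac
import re

# Illustrative patterns only; tune them to the data your functions handle.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b(?:\d[ -]?){11,15}(\d{4})\b")

def mask_for_logs(line: str) -> str:
    """Irreversibly mask sensitive values before a line is written to logs."""
    line = EMAIL.sub("[email]", line)
    # Keep only the last four digits of card-like numbers.
    return CARD.sub(lambda m: "****" + m.group(1), line)

def tokenize(value: str, key: bytes) -> str:
    """Deterministic, one-way token (HMAC-SHA256 truncated to 128 bits)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]
```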

Security and Compliance in a Multi-Cloud Environment

Implementing a robust security and compliance strategy is paramount in multi-cloud serverless deployments. The distributed nature of these architectures, coupled with the inherent complexities of managing resources across multiple cloud providers, introduces significant challenges. This section delves into the intricacies of securing and ensuring compliance in such environments, providing actionable insights for building resilient and compliant serverless applications.

Challenges of Implementing Security Policies Across Multiple Cloud Providers

The heterogeneity of cloud providers presents unique hurdles in enforcing consistent security policies. Each provider offers its own set of security services, interfaces, and configurations, making centralized management and orchestration difficult. The primary challenges include:

- Inconsistent Security Models: Different cloud providers employ varying security models, including identity and access management (IAM), network security, and data encryption methods. This disparity complicates the implementation of unified security policies and can lead to security gaps if not addressed properly. For example, AWS uses IAM roles, while Azure utilizes managed identities, and Google Cloud employs service accounts. Each requires specific configurations and management approaches.

- Lack of Centralized Visibility and Monitoring: Monitoring security events and collecting logs across multiple cloud platforms can be a complex undertaking. The absence of a centralized view hinders incident detection, response, and forensic analysis. Security information and event management (SIEM) tools must be configured to aggregate logs from various sources, which can be resource-intensive and prone to configuration errors.

- Compliance Complexity: Achieving and maintaining compliance with industry regulations and standards (e.g., HIPAA, PCI DSS, GDPR) is significantly more complex in a multi-cloud environment. Each cloud provider’s compliance certifications and offerings need to be evaluated and mapped to the specific compliance requirements. The responsibility for compliance is often shared between the cloud provider and the customer, adding further layers of complexity.

- Vendor Lock-in: While multi-cloud strategies aim to mitigate vendor lock-in, the reliance on specific cloud provider services can still create dependencies. This can impact security decisions, potentially leading to trade-offs between security features and portability. Choosing vendor-agnostic security solutions is crucial to minimize lock-in and maintain flexibility.

- Skill Gap: Managing security across multiple clouds requires a diverse skill set. Security teams need to be proficient in the security services and best practices of each cloud provider, which can be challenging to achieve and maintain. Training and certifications in multiple cloud platforms are essential, but the learning curve can be steep.

Designing a Security Model for Multi-Cloud Serverless Deployments

A well-defined security model is essential to protect multi-cloud serverless applications from common vulnerabilities. The model should incorporate a layered approach, addressing various aspects of security, including identity and access management, network security, data protection, and incident response. Key components of a robust security model include:

- Centralized Identity and Access Management (IAM): Implement a centralized IAM solution that integrates with all cloud providers. This allows for consistent user authentication, authorization, and access control across the entire multi-cloud environment. Utilizing federated identity providers, such as Okta or Azure Active Directory (now Microsoft Entra ID), enables single sign-on (SSO) and simplifies user management.

- Least Privilege Principle: Grant users and services only the minimum necessary permissions required to perform their tasks. Regularly review and update permissions to ensure they remain aligned with the principle of least privilege. Utilize infrastructure-as-code (IaC) tools to automate the provisioning and management of IAM roles and policies.

- Network Security Segmentation: Implement network segmentation to isolate serverless functions and other resources. This limits the impact of security breaches and prevents lateral movement within the environment. Use virtual private clouds (VPCs) or virtual networks to create isolated network segments and configure network security groups or firewalls to control traffic flow.

- Data Encryption: Encrypt data at rest and in transit using encryption keys managed either by the cloud providers or a centralized key management service. This protects sensitive data from unauthorized access. Implement robust key rotation policies to ensure the security of encryption keys.

- Automated Security Scanning and Vulnerability Management: Automate security scanning and vulnerability assessments to identify and remediate security flaws proactively. Integrate security scanning tools into the CI/CD pipeline to catch vulnerabilities early in the development process. Regularly patch and update dependencies to address known vulnerabilities.

- Centralized Logging and Monitoring: Implement a centralized logging and monitoring system to collect and analyze security logs from all cloud providers. This enables real-time threat detection, incident response, and compliance reporting. Utilize SIEM tools to aggregate logs, correlate events, and generate alerts.

- Incident Response Plan: Develop and maintain a comprehensive incident response plan to address security breaches effectively. The plan should include procedures for detecting, containing, eradicating, and recovering from security incidents. Regularly test the incident response plan through simulations and tabletop exercises.
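The least-privilege principle can be checked automatically in the CI/CD pipeline. The sketch below flags `Allow` statements with wildcard actions or resources in AWS IAM JSON policy documents. It is a heuristic that covers only that one format (Azure role definitions and Google Cloud IAM bindings are structured differently and would need their own checks), and it does not replace provider tooling such as IAM Access Analyzer.

```python
import json
from typing import Any, List

def _as_list(value: Any) -> list:
    """IAM allows a single value or a list for Statement, Action, and Resource."""
    return value if isinstance(value, list) else [value]

def find_overbroad_statements(policy_json: str) -> List[str]:
    """Flag Allow statements whose actions or resources use wildcards."""
    policy = json.loads(policy_json)
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements narrow access, so they are not flagged
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"statement {i}: wildcard resource '*'")
        if "NotAction" in stmt:
            findings.append(f"statement {i}: Allow with NotAction grants all other actions")
    return findings
```

Failing the build on any finding, with an explicit allow-list for reviewed exceptions, keeps over-broad grants from reaching production.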

Compliance Considerations for Different Industries Using Multi-Cloud Serverless

Different industries are subject to specific regulatory requirements. Building multi-cloud serverless applications requires careful consideration of these requirements to ensure compliance. Compliance considerations vary depending on the industry:

- Healthcare (HIPAA):

  - Data Protection: Ensure Protected Health Information (PHI) is encrypted both in transit and at rest. Implement robust access controls to restrict access to PHI.

  - Audit Trails: Maintain comprehensive audit trails of all access to PHI and data modifications.

  - Business Associate Agreements (BAAs): Establish BAAs with all cloud providers and third-party service providers that handle PHI.

  - Example: A healthcare provider using a multi-cloud serverless architecture for patient record management must ensure that all data stored in serverless databases and accessed through serverless functions is encrypted. The audit logs must be regularly reviewed to detect any unauthorized access.

- Finance (PCI DSS):

  - Secure Processing of Cardholder Data: Implement secure coding practices and protect cardholder data (CHD) from unauthorized access.

  - Network Segmentation: Segment the network to isolate systems that handle CHD.

  - Regular Security Assessments: Conduct regular vulnerability scans and penetration tests.

  - Example: A financial institution using serverless functions to process credit card transactions must comply with PCI DSS requirements. This includes encrypting cardholder data, implementing strong access controls, and regularly auditing security logs.

- Government (FedRAMP, etc.):

  - Security Controls: Adhere to the security controls specified by the relevant government regulations.

  - Authorization: Obtain the necessary authorizations from the relevant government agencies, and use only cloud services authorized under the applicable program (for FedRAMP, at the required impact level).

  - Data Residency: Ensure data is stored and processed within the geographic boundaries specified by the regulations.

  - Example: A government agency deploying a serverless application to process citizen data must run it on FedRAMP-authorized cloud services and meet the corresponding FedRAMP requirements. This involves implementing specific security controls, such as multi-factor authentication, and undergoing regular security assessments.

- General Data Protection Regulation (GDPR):

  - Data Privacy: Implement data protection principles, including data minimization, purpose limitation, and storage limitation (keeping personal data no longer than necessary).

  - Data Subject Rights: Provide data subjects with the ability to access, rectify, and erase their personal data.

  - Data Breach Notification: Establish procedures for notifying the supervisory authority (within 72 hours of becoming aware of a breach, where feasible) and, when a breach poses a high risk to individuals, the affected data subjects.

  - Example: An e-commerce company using serverless functions to manage customer data must comply with GDPR. This includes establishing a lawful basis (such as consent) for processing, honoring data subject rights, and implementing data breach notification procedures.
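Data-residency requirements like those above can be enforced as a pre-deployment check. The sketch maps provider regions to jurisdictions and reports any function deployed outside the allowed set; unknown regions are reported too, so the check fails closed. The region identifiers are real provider region names, but the mapping is deliberately partial and the deployment record format is hypothetical. It checks only where functions run, not where their logs, backups, or downstream services store data.

```python
from typing import Dict, List, Set, Tuple

# Partial, illustrative mapping of (provider, region) to jurisdiction.
REGION_JURISDICTION: Dict[Tuple[str, str], str] = {
    ("aws", "eu-central-1"): "EU",
    ("aws", "us-east-1"): "US",
    ("gcp", "europe-west1"): "EU",
    ("gcp", "us-central1"): "US",
    ("azure", "westeurope"): "EU",
    ("azure", "eastus"): "US",
}

def residency_violations(deployments: List[dict], allowed: Set[str]) -> List[str]:
    """Return one message per deployment outside the allowed jurisdictions."""
    problems = []
    for d in deployments:
        where = REGION_JURISDICTION.get((d["provider"], d["region"]))
        if where is None:
            problems.append(f"{d['name']}: unknown region {d['provider']}/{d['region']}")
        elif where not in allowed:
            problems.append(f"{d['name']}: {d['provider']}/{d['region']} is in {where}")
    return problems
```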

Orchestration and Management Tools

Effective orchestration and management are critical for the success of a multi-cloud serverless strategy. They provide the necessary automation, monitoring, and control to navigate the complexities of deploying and operating serverless applications across multiple cloud providers. This section will delve into the comparison of different orchestration tools, the automation of deployments, and the crucial aspects of monitoring and logging in a multi-cloud serverless environment.

Comparing Orchestration Tools

Several tools are available for orchestrating multi-cloud serverless infrastructure, each with its strengths and weaknesses. Selecting the right tool depends on factors such as team expertise, existing infrastructure, and specific project requirements.

- Terraform: Terraform, developed by HashiCorp, is a popular Infrastructure as Code (IaC) tool that supports multiple cloud providers. It uses a declarative configuration language (HCL – HashiCorp Configuration Language) to define infrastructure resources.

  - Advantages: Broad provider support, mature ecosystem, large community, idempotent deployments (reapplying an unchanged configuration makes no changes, and each apply converges resources toward the declared state), and configurations that can be kept under version control.

- Disadvantages: HCL can become complex for large infrastructure deployments. Debugging can be challenging, and the state management can be complex.

- Example: A Terraform configuration can define the creation of an AWS Lambda function, an Azure Function, and a Google Cloud Function, all managed from a single configuration file. This configuration will also create all the necessary resources, such as IAM roles, storage buckets, and API gateways, in each respective cloud.

- Pulumi: Pulumi is another IaC tool that allows infrastructure to be defined using general-purpose programming languages such as TypeScript, Python, Go, and .NET.

- Advantages: Uses familiar programming languages, enabling greater flexibility and code reuse. Strong support for modern software development practices.

- Disadvantages: A smaller community compared to Terraform. Can have a steeper learning curve if the team is not familiar with the chosen programming language.

- Example: A Pulumi program written in Python could define and deploy a serverless application composed of an AWS Lambda function, an Azure Function, and a Google Cloud Function. The same code could also be used to manage the associated resources, such as API gateways, databases, and storage buckets, across the different cloud providers.

- AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager: These are cloud-provider-specific IaC tools.

- Advantages: Native support for the respective cloud provider, potentially offering better integration and performance. Can leverage provider-specific features.

- Disadvantages: Vendor lock-in, making it difficult to migrate to other cloud providers. Different tools and configuration languages for each cloud.

- Example: Using AWS CloudFormation to deploy a serverless application on AWS, Azure Resource Manager for deployment on Azure, and Google Cloud Deployment Manager for deployment on Google Cloud. Each tool requires its own configuration and management process.

Automating Deployments Across Multiple Cloud Providers

Automating deployments across multiple cloud providers is essential for maintaining consistency, reducing errors, and accelerating the release cycle. This involves using CI/CD pipelines to build, test, and deploy serverless applications.

- CI/CD Pipelines: These pipelines automate the process of building, testing, and deploying code changes.

- Implementation: Utilize tools such as Jenkins, GitLab CI, GitHub Actions, or Azure DevOps to define the steps involved in the deployment process. The pipeline will typically include steps to build the code, run tests, and deploy the application to each cloud provider.

- Example: A CI/CD pipeline could be configured to automatically build, test, and deploy an updated version of a serverless function to AWS Lambda, Azure Functions, and Google Cloud Functions upon code commits to a Git repository. The pipeline would first build the function code, run unit tests, and then deploy the function to each cloud provider using the respective IaC tool (e.g., Terraform or Pulumi).

- IaC Tool Integration: Integrate IaC tools (Terraform, Pulumi, etc.) into the CI/CD pipeline to automate the infrastructure provisioning and configuration.

- Implementation: The pipeline will execute the IaC tool’s commands (e.g., `terraform apply`, `pulumi up`) to provision and update the necessary infrastructure resources.

- Example: A CI/CD pipeline using Terraform could be configured to automatically update the configuration of a serverless application on AWS, Azure, and Google Cloud. The pipeline would execute the `terraform apply` command to update the infrastructure resources, ensuring that the application is deployed consistently across all cloud providers.

- Configuration Management: Use configuration management tools to manage environment-specific configurations.

- Implementation: Store environment-specific configurations (e.g., API keys, database connection strings) in a secure and centralized location. These configurations are then injected into the serverless functions during deployment.

- Example: Using a secrets management service like AWS Secrets Manager, Azure Key Vault, or Google Cloud Secret Manager to store API keys and database connection strings. These secrets can then be injected into the serverless functions during deployment using environment variables or other mechanisms.
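The pipeline steps above can be sketched as a small driver script that a CI job runs after tests pass. It assumes a hypothetical repository layout with one Terraform root module per cloud and runs `init`, `plan`, and `apply` of the saved plan in each directory, stopping at the first failure so a broken change does not reach the remaining clouds. Provider credentials are assumed to be injected by the CI system as environment variables sourced from a secrets manager. The command runner is a parameter so the sequencing can be tested without Terraform installed; many teams instead express the same fan-out as parallel jobs in their CI tool.

```python
import subprocess
from typing import Callable, Dict, List, Sequence

# Hypothetical repository layout: one Terraform root module per cloud.
TARGETS: Dict[str, str] = {"aws": "infra/aws", "azure": "infra/azure", "gcp": "infra/gcp"}

Runner = Callable[[Sequence[str]], int]

def run(cmd: Sequence[str]) -> int:
    """Run a command, streaming its output, and return the exit code."""
    return subprocess.run(list(cmd), check=False).returncode

def deploy_all(targets: Dict[str, str], runner: Runner = run,
               plan_only: bool = False) -> List[str]:
    """Init, plan, and (optionally) apply each cloud's configuration in turn.

    Raises on the first failing command; returns the clouds processed.
    """
    done: List[str] = []
    for cloud, workdir in targets.items():
        chdir = f"-chdir={workdir}"
        steps = [
            ["terraform", chdir, "init", "-input=false"],
            ["terraform", chdir, "plan", "-input=false", "-out=tfplan"],
        ]
        if not plan_only:
            # Applying a saved plan file does not prompt for approval.
            steps.append(["terraform", chdir, "apply", "-input=false", "tfplan"])
        for cmd in steps:
            if runner(cmd) != 0:
                raise RuntimeError(f"{cloud}: '{' '.join(cmd)}' failed; remaining clouds skipped")
        done.append(cloud)
    return done
```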

Monitoring and Logging in a Multi-Cloud Serverless Environment

Monitoring and logging are critical for understanding the performance, availability, and health of serverless applications. A robust monitoring and logging strategy is essential for quickly identifying and resolving issues in a multi-cloud environment.

- Centralized Logging: Collect logs from all cloud providers into a centralized logging platform.

- Implementation: Use tools such as Elasticsearch, Fluentd, and Kibana (EFK stack), or commercial solutions like Splunk or Datadog, to aggregate and analyze logs from different cloud providers. Configure the serverless functions to send their logs to the centralized logging platform.

- Example: Configure AWS Lambda functions to send logs to CloudWatch Logs, Azure Functions to send logs to Azure Monitor, and Google Cloud Functions to send logs to Cloud Logging. Then, configure a tool like Fluentd to collect these logs and send them to an Elasticsearch cluster for analysis and visualization.

- Unified Monitoring: Implement a unified monitoring solution to track the performance and health of serverless applications across all cloud providers.

- Implementation: Use tools such as Prometheus, Grafana, or commercial solutions like Datadog, New Relic, or Dynatrace to collect metrics from each cloud provider and provide a unified view of the application’s performance. Configure the serverless functions to emit custom metrics.

- Example: Use Datadog to collect metrics from AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring. Create dashboards to visualize the performance of the serverless applications across all cloud providers, including metrics such as invocation counts, latency, and error rates.

- Alerting and Notifications: Configure alerts and notifications to be triggered when specific conditions are met.

- Implementation: Define alerts based on metrics collected by the monitoring solution. Configure notifications to be sent to relevant teams via email, Slack, or other communication channels.

- Example: Configure an alert in Datadog to notify the operations team if the error rate of a serverless function exceeds a certain threshold. The alert could trigger an email or a notification to a Slack channel, enabling the team to quickly investigate and resolve the issue.

- Tracing: Implement distributed tracing to track requests as they flow through the serverless application across different cloud providers.

  - Implementation: Use a vendor-neutral instrumentation standard such as OpenTelemetry, and propagate W3C Trace Context headers between services, so that a single trace can span functions in different clouds. Provider tools such as AWS X-Ray, Azure Application Insights, and Google Cloud Trace work well within their own platforms; to see a request’s complete path, export all spans to one tracing backend.

  - Example: Instrument AWS Lambda, Azure Functions, and Google Cloud Functions with OpenTelemetry and send their spans to a single tracing backend. The resulting trace shows the path of the request, the latency of each service, and any errors that occurred along the way.
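Cross-cloud tracing and centralized logging both depend on carrying one identifier through every hop. The sketch below shows the mechanism by hand using the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`): a function continues an incoming trace, or starts a new one, and stamps the trace id on structured JSON log lines so the central log store can join records from all clouds. In practice an OpenTelemetry SDK handles propagation; the function names and log fields here are hypothetical.

```python
import json
import re
import secrets
from typing import Optional

TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

def child_traceparent(incoming: Optional[str]) -> str:
    """Continue the caller's trace if the header is valid, else start a new one.

    Each hop keeps the trace id and mints a fresh parent (span) id.
    """
    m = TRACEPARENT.match(incoming or "")
    if m and m.group(1) != "0" * 32 and m.group(2) != "0" * 16:
        trace_id, flags = m.group(1), m.group(3)
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, sampled
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"

def log_line(provider: str, function: str, traceparent: str, message: str, **fields) -> str:
    """One JSON log record; the shared trace_id lets a central store join hops."""
    trace_id = traceparent.split("-")[1]
    record = {"provider": provider, "function": function,
              "trace_id": trace_id, "message": message, **fields}
    return json.dumps(record, sort_keys=True)
```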

Cost Optimization Strategies

Optimizing costs is paramount in a multi-cloud serverless strategy, as the pay-per-use model can quickly lead to unexpected expenses if not carefully managed. Effective cost management involves a combination of proactive planning, continuous monitoring, and iterative adjustments. This section outlines strategies for optimizing costs, designing a cost monitoring dashboard, and implementing cost-saving measures.

Strategies for Optimizing Multi-Cloud Serverless Costs

Implementing these strategies helps control and reduce costs associated with multi-cloud serverless deployments. A comprehensive approach ensures cost-effectiveness across different cloud providers.

- Right-Sizing Resources: Analyze function memory and compute requirements to avoid over-provisioning. Regularly review function performance and adjust resource allocation based on actual usage. For example, if a function consistently uses only 256MB of memory, scaling it down from 512MB can reduce costs. On platforms that allocate CPU in proportion to memory (as AWS Lambda does), verify that execution time does not rise enough to cancel the savings.

- Leveraging Commitment-Based Discounts: Where applicable, explore commitment-based discounts offered by cloud providers. Classic reserved instances do not apply to most FaaS offerings, but some commitment programs do (for example, AWS Compute Savings Plans cover Lambda usage). These discounts can significantly reduce costs for predictable workloads.

- Optimizing Function Execution Time: Reduce function execution time through code optimization, efficient database queries, and minimizing external dependencies. Faster execution translates directly into lower compute costs.

- Tuning Autoscaling: Serverless platforms scale function instances automatically with demand, which avoids paying for idle capacity during periods of low traffic. Tune the scaling limits (for example, maximum concurrency or instance counts) so that peak loads are served without unbounded spending.

- Choosing the Right Cloud Provider for Each Workload: Evaluate the cost structures of different cloud providers and select the most cost-effective provider for each serverless function or workload. This requires careful analysis of pricing models and usage patterns.

- Monitoring and Alerting: Implement robust monitoring and alerting systems to track spending and identify anomalies. Set up alerts to notify teams of unexpected cost spikes, enabling prompt action to mitigate the issue.

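- Cold Start Optimization: Minimize cold start times, which add latency and can indirectly increase costs. Techniques include reducing deployment package size and initialization work, pre-warming functions, and using provisioned concurrency. Provisioned concurrency is billed for as long as it is configured, so it trades cost for latency.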

- Data Transfer Optimization: Minimize data transfer costs by optimizing data storage and access patterns. Consider data locality and choose storage solutions that minimize cross-region data transfer.

- Using Serverless Database Options: Explore serverless database options like Amazon DynamoDB or Google Cloud Firestore. Serverless databases typically offer pay-per-request pricing, which can be more cost-effective than traditional database solutions for fluctuating workloads.
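The right-sizing and provider-comparison strategies above rest on simple arithmetic: most FaaS platforms bill a per-request fee plus compute measured in GB-seconds (memory in GB multiplied by execution time). The sketch below estimates monthly cost under that model. The unit prices in the usage are illustrative placeholders, not quotes; substitute each provider’s current published rates, and note that data transfer and other charges are excluded.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    """Illustrative unit prices; replace with a provider's current rates."""
    per_million_requests: float
    per_gb_second: float

def monthly_cost(invocations: int, memory_mb: int, avg_duration_ms: float, p: Pricing) -> float:
    """Request fees plus compute (GB-seconds) for one function over a month."""
    gb_seconds = invocations * (memory_mb / 1024) * (avg_duration_ms / 1000)
    return invocations / 1_000_000 * p.per_million_requests + gb_seconds * p.per_gb_second
```

For example, at 10 million invocations with illustrative rates of 0.20 per million requests and 0.0000166667 per GB-second, a 512MB function averaging 200 ms costs about 18.67 per month. Halving memory to 256MB pays off only while duration less than doubles: at 350 ms it costs about 16.58, but at 450 ms it rises to about 20.75.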

Designing a Cost Monitoring Dashboard

A cost monitoring dashboard provides real-time visibility into spending patterns across different cloud providers. The dashboard should provide insights into resource consumption, cost allocation, and potential areas for optimization.

- Data Aggregation: The dashboard should aggregate cost data from all cloud providers. This may involve using cloud provider-specific APIs or third-party cost management tools.

- Cost Breakdown: Present a clear breakdown of costs by service, function, region, and other relevant dimensions. This allows users to identify the services consuming the most resources and contributing the most to overall costs.

- Visualization: Use charts and graphs to visualize cost trends over time. This helps identify anomalies and understand the impact of optimization efforts. Consider line graphs to show cost fluctuations and bar charts to compare costs across services.

- Alerting and Notifications: Implement alerts to notify teams of any unexpected cost spikes or budget overruns. This allows for prompt action to prevent excessive spending.

- Reporting: Generate regular reports summarizing spending patterns and providing insights into cost-saving opportunities. These reports can be used to track the effectiveness of cost optimization efforts.

- Forecasting: Include cost forecasting capabilities to predict future spending based on historical data and current usage patterns. This allows teams to proactively manage their budgets and anticipate future costs.

- Integration with DevOps Tools: Integrate the dashboard with existing DevOps tools, such as CI/CD pipelines and monitoring systems. This allows teams to correlate cost data with application performance and deployment activities.
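The aggregation, breakdown, alerting, and forecasting features above can be sketched in a few provider-neutral functions. This sketch assumes each provider's billing export has already been mapped into a common `CostRecord` schema; the schema, the function names, and the sample data are all hypothetical, and the straight-line forecast is a deliberately naive stand-in for a real forecasting model.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, Hashable, Iterable, List


@dataclass(frozen=True)
class CostRecord:
    """One normalized line item (hypothetical schema shared by all providers)."""
    provider: str
    service: str
    day: date
    amount_usd: float


def total_by(records: Iterable[CostRecord], key: Callable[[CostRecord], Hashable]) -> Dict:
    """Sum spend grouped by any dimension: provider, service, day, ..."""
    totals: Dict = defaultdict(float)
    for record in records:
        totals[key(record)] += record.amount_usd
    return dict(totals)


def daily_series(records: Iterable[CostRecord]) -> List[float]:
    """Total spend per day across all providers, in date order."""
    by_day = total_by(records, lambda r: r.day)
    return [by_day[d] for d in sorted(by_day)]


def linear_forecast(series: List[float], days_ahead: int) -> float:
    """Extrapolate a least-squares straight line fitted to the daily series.

    Deliberately naive: ignores weekly cycles, seasonality, and price changes.
    """
    n = len(series)
    if n < 2:
        raise ValueError("need at least two days of data")
    x_mean = (n - 1) / 2
    y_mean = sum(series) / n
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(series))
    variance = sum((x - x_mean) ** 2 for x in range(n))
    slope = covariance / variance
    return y_mean + slope * (n - 1 + days_ahead - x_mean)


def over_budget(records: Iterable[CostRecord], budgets: Dict[str, float]) -> List[str]:
    """Providers whose total spend exceeds their budget (candidates for an alert)."""
    spend = total_by(records, lambda r: r.provider)
    return sorted(p for p, limit in budgets.items() if spend.get(p, 0.0) > limit)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        CostRecord("aws", "lambda", date(2024, 5, 1), 10.0),
        CostRecord("gcp", "cloud-functions", date(2024, 5, 1), 4.0),
        CostRecord("aws", "lambda", date(2024, 5, 2), 12.0),
        CostRecord("gcp", "cloud-functions", date(2024, 5, 2), 6.0),
        CostRecord("aws", "lambda", date(2024, 5, 3), 14.0),
        CostRecord("gcp", "cloud-functions", date(2024, 5, 3), 8.0),
    ]
    print(total_by(sample, lambda r: (r.provider, r.service)))
    print(daily_series(sample))                             # [14.0, 18.0, 22.0]
    print(linear_forecast(daily_series(sample), 1))         # 26.0
    print(over_budget(sample, {"aws": 30.0, "gcp": 20.0}))  # ['aws']
```

Keeping the provider-specific parsing out of these functions means adding a provider only requires a new mapping into `CostRecord`, not changes to the dashboard logic.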

Implementing Cost-Saving Measures for Serverless Functions

The following example illustrates how to implement cost-saving measures for serverless functions, combining code optimization, resource management, and monitoring.

Scenario: A serverless function processes image uploads to an object storage service.

Cost Optimization Measures:

- Code Optimization: Reduce function execution time by choosing an efficient image-processing library and resizing algorithm.

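- Memory Allocation: Analyze function memory usage and reduce the allocated memory if the function consistently uses less than the allocated amount (for example, from 1024 MB to 512 MB). On platforms such as AWS Lambda, CPU allocation scales with configured memory, so a smaller setting can lengthen execution time; compare the total billed compute (memory multiplied by duration) before and after the change.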

- Execution Time Monitoring: Use cloud provider monitoring tools (e.g., Amazon CloudWatch, Google Cloud Monitoring) to track function execution time and identify slow executions.

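- Concurrency Limits: Set concurrency limits (e.g., reserved concurrency on AWS Lambda, maximum instances on Google Cloud Functions) to cap the number of concurrent executions and bound spending during peak loads. Requests beyond the limit are throttled or queued, so size the limit against expected traffic.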

- Alerting: Set up alerts to notify the operations team if the function’s execution time increases beyond a predefined threshold, or if the cost exceeds a budget.

Expected Outcomes: Reduced function execution time, a right-sized memory allocation, and bounded costs. Continuous monitoring helps identify further optimization opportunities.
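The memory and concurrency trade-offs in this scenario come down to GB-seconds (allocated memory in GB multiplied by execution time in seconds). The following sketch compares memory settings and computes the worst-case hourly spend under a concurrency cap. The durations, invocation volume, and per-GB-second rate are hypothetical placeholders, and per-request fees and free tiers are ignored.

```python
"""Compare the compute cost of memory settings using GB-seconds.

Assumptions: durations are measured averages (hypothetical numbers below),
PRICE_PER_GB_SECOND is a placeholder rate, and per-request fees and free tiers
are ignored. Check your provider's current pricing before relying on results.
"""

PRICE_PER_GB_SECOND = 0.0000166667  # placeholder; varies by provider, region, architecture


def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Billed compute for one invocation: allocated GB multiplied by seconds run."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def monthly_compute_cost(memory_mb: int, duration_ms: float, invocations: int) -> float:
    """Compute cost for a month of invocations at a given memory setting."""
    return gb_seconds(memory_mb, duration_ms) * invocations * PRICE_PER_GB_SECOND


def max_hourly_compute_cost(memory_mb: int, concurrency_limit: int) -> float:
    """Upper bound per hour if every allowed concurrent instance runs nonstop."""
    return gb_seconds(memory_mb, 3_600_000) * concurrency_limit * PRICE_PER_GB_SECOND


if __name__ == "__main__":
    invocations = 1_000_000  # hypothetical monthly volume
    # Hypothetical (memory MB, measured average duration ms) pairs: less memory
    # also means less CPU on AWS Lambda, so durations grow as memory shrinks.
    for memory_mb, duration_ms in [(1024, 400), (512, 700), (256, 1600)]:
        cost = monthly_compute_cost(memory_mb, duration_ms, invocations)
        print(f"{memory_mb:>5} MB @ {duration_ms} ms -> ${cost:.2f}/month")
    print(f"cap at 50 concurrent x 512 MB: ${max_hourly_compute_cost(512, 50):.2f}/hour")
```

With these illustrative numbers, dropping from 1024 MB to 512 MB saves money, while dropping further to 256 MB costs the same as 1024 MB because the function runs four times as long. Measuring duration after each change is what makes the comparison meaningful.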

Testing, Deployment, and CI/CD Pipelines

Effective testing, deployment, and continuous integration/continuous delivery (CI/CD) pipelines are crucial for the success of any serverless application, particularly in a multi-cloud environment. These practices ensure code quality, facilitate rapid iteration, and minimize operational overhead across different cloud providers. Implementing robust processes in these areas enables faster development cycles, reduces the risk of errors, and allows for more efficient management of the application lifecycle.

Importance of Testing Serverless Functions in a Multi-Cloud Environment

Testing serverless functions in a multi-cloud environment presents unique challenges and necessitates a comprehensive approach to ensure consistent functionality and performance across various cloud platforms. The differences in underlying infrastructure, service availability, and API implementations among providers can lead to unexpected behavior if not adequately addressed during testing.

- Functional Testing: This involves verifying that each serverless function behaves as expected under different input conditions. This is especially important in multi-cloud scenarios, where the same function might interact differently with cloud-specific services (e.g., databases, storage). For example, a function that retrieves data from an object storage service (e.g., AWS S3, Google Cloud Storage, Azure Blob Storage) needs to be tested against each provider’s implementation to ensure compatibility and correct data retrieval.

- Integration Testing: Integration tests validate the interactions between serverless functions and other services, both within and outside the cloud environment, including API gateways, databases, message queues, and external APIs. In a multi-cloud setup, integration testing is critical to ensure that functions communicate correctly across provider boundaries, for example when a Google Cloud Pub/Sub push subscription delivers messages to an HTTPS endpoint that invokes an AWS Lambda function.

- Performance Testing: Performance tests assess the speed, scalability, and resource utilization of serverless functions under various load conditions. This involves simulating real-world traffic and measuring response times, throughput, cold-start latency, and resource consumption (e.g., memory, CPU). Because hardware, network infrastructure, and scaling behavior differ between providers, the same function can perform differently on each, so run performance tests on every target provider to identify provider-specific bottlenecks.

- Security Testing: Security testing aims to identify vulnerabilities and verify the application’s security posture. This includes testing for common issues such as injection flaws, broken authentication and authorization, and sensitive data exposure. Perform security testing on each cloud provider, taking into account provider-specific security features and configurations. For example, verify that a function accessing a database is granted only the least-privilege permissions it needs under each provider’s IAM model.

- End-to-End Testing: End-to-end (E2E) tests simulate user interactions with the entire application, from the user interface to the backend services. E2E tests validate the functionality of the application as a whole and ensure that all components work together correctly. In a multi-cloud environment, E2E testing helps to verify that the application is functioning correctly across all providers and that data is flowing correctly between services.
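One way to apply the same functional tests to every provider is to have the function depend on a small storage interface and run one shared set of assertions (a "contract") against each provider's implementation. The sketch below uses in-memory fakes so it runs anywhere; the interface, class, and function names are hypothetical. Real adapters would wrap each provider SDK and translate that SDK's not-found error into the shared `ObjectNotFound` exception, and the same `check_store_contract` could then run against them in an integration stage.

```python
from typing import Dict, Protocol, Tuple


class ObjectNotFound(Exception):
    """Provider-neutral error that business logic can rely on."""


class ObjectStore(Protocol):
    """The only storage operation the function needs (hypothetical interface)."""

    def get(self, bucket: str, key: str) -> bytes: ...


class InMemoryStore:
    """Test double standing in for one provider's object storage.

    A real adapter would call the provider SDK and translate its not-found
    error into ObjectNotFound; this fake reproduces that contract in memory.
    """

    def __init__(self, provider: str, objects: Dict[Tuple[str, str], bytes]):
        self.provider = provider
        self._objects = dict(objects)

    def get(self, bucket: str, key: str) -> bytes:
        try:
            return self._objects[(bucket, key)]
        except KeyError:
            raise ObjectNotFound(f"{self.provider}: {bucket}/{key}") from None


def count_lines(store: ObjectStore, bucket: str, key: str) -> int:
    """Business logic under test: count the lines in a stored text object."""
    return len(store.get(bucket, key).decode("utf-8").splitlines())


def check_store_contract(store: ObjectStore) -> None:
    """Same assertions for every provider, so behavior cannot silently diverge."""
    assert count_lines(store, "uploads", "report.txt") == 3
    try:
        count_lines(store, "uploads", "missing.txt")
    except ObjectNotFound:
        pass
    else:
        raise AssertionError("missing object must raise ObjectNotFound")


if __name__ == "__main__":
    fixture = {("uploads", "report.txt"): b"a\nb\nc\n"}
    for name in ("aws-s3", "gcs", "azure-blob"):
        check_store_contract(InMemoryStore(name, fixture))
        print(f"{name}: contract OK")
```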

Best Practices for Setting Up CI/CD Pipelines for Multi-Cloud Serverless Applications

Setting up CI/CD pipelines for multi-cloud serverless applications requires careful planning and implementation to automate the build, test, and deployment processes across different cloud providers. Adhering to best practices ensures efficiency, reliability, and consistency throughout the application lifecycle.

- Infrastructure as Code (IaC): IaC involves defining and managing infrastructure resources using code. Multi-cloud tools such as Terraform can provision and configure resources on several providers from one codebase, while provider-native tools such as AWS CloudFormation and Azure Resource Manager each target a single cloud. Using IaC ensures consistency and reproducibility across environments, simplifying the deployment process. For instance, a Terraform configuration can define the resources for a serverless application on AWS Lambda, Google Cloud Functions, and Azure Functions, giving all three providers one consistent workflow even though each provider’s resources are described with their own resource types.

- Version Control: Utilize a version control system (e.g., Git) to manage the source code, configuration files, and IaC templates. This allows for tracking changes, collaboration, and the ability to revert to previous versions if necessary. Each change should be tracked and associated with a specific feature or bug fix.

- Automated Testing: Integrate automated tests (unit, integration, and end-to-end) into the CI/CD pipeline. This ensures that code changes are thoroughly tested before deployment. Automated tests can run on each code commit or pull request, providing quick feedback to developers.

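- Containerization: Where the platform supports it, package serverless functions as container images built with tools like Docker (for example, AWS Lambda container images or Google Cloud Run; support on other platforms varies by service and hosting plan). Containerization provides a consistent environment for building, testing, and deploying functions across cloud providers, simplifies dependency management, and reduces the risk of environment-specific issues.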

- Deployment Automation: Automate the deployment process using tools like the Serverless Framework, AWS SAM, or Azure Functions Core Tools. AWS SAM and Azure Functions Core Tools each target a single provider, while the Serverless Framework can target several through provider plugins (support for non-AWS providers varies by Framework version). For example, the Serverless Framework lets you define a service in a `serverless.yml` file and deploy it with a single command; targeting multiple providers means maintaining one such service definition per provider.

- Configuration Management: Use configuration management tools to manage application configuration across different environments (e.g., development, staging, production). This ensures that configuration settings are consistent and secure. Tools like AWS Systems Manager Parameter Store, Google Cloud Secret Manager, or Azure Key Vault can be used to store and manage sensitive configuration data.

- Monitoring and Logging: Integrate monitoring and logging into the CI/CD pipeline to collect data about application performance and health. This data can be used to identify and resolve issues, optimize performance, and ensure the application is running as expected. Monitoring tools such as Prometheus, Grafana, and provider-specific services (e.g., Amazon CloudWatch, Google Cloud Monitoring, Azure Monitor) can be integrated to collect and analyze metrics.

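- Rollback Strategies: Implement rollback strategies to revert to a previous version of the application in case of deployment failures or issues. This minimizes downtime and reduces the impact of errors on users. Common strategies include blue/green deployments (switching traffic between two complete environments) and canary releases (shifting a small share of traffic to the new version before a full rollout).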
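A canary release needs a rule for deciding when to promote and when to roll back. The traffic shifting itself is handled by provider features (for example, weighted aliases on AWS Lambda or traffic splitting on Google Cloud Run), but the decision logic can be shared across providers. This minimal sketch compares the canary's error rate with the baseline's; the class name, function name, and thresholds are hypothetical defaults to be tuned per service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowMetrics:
    """Requests and errors observed for one version during an evaluation window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(
    baseline: WindowMetrics,
    canary: WindowMetrics,
    max_error_rate_increase: float = 0.01,
    min_canary_requests: int = 500,
) -> str:
    """Return "wait", "rollback", or "promote" for a canary release.

    Thresholds are hypothetical defaults; tune them per service.
    """
    if canary.requests < min_canary_requests:
        return "wait"  # too little traffic to tell noise from a regression
    if canary.error_rate - baseline.error_rate > max_error_rate_increase:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    # Hypothetical metrics, e.g. pulled from each provider's monitoring service.
    baseline = WindowMetrics(requests=10_000, errors=50)
    print(canary_verdict(baseline, WindowMetrics(requests=200, errors=0)))
    print(canary_verdict(baseline, WindowMetrics(requests=1_000, errors=30)))
    print(canary_verdict(baseline, WindowMetrics(requests=1_000, errors=8)))
```

Requiring a minimum number of canary requests keeps a handful of early errors (or a lucky run of successes) from triggering a premature decision.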

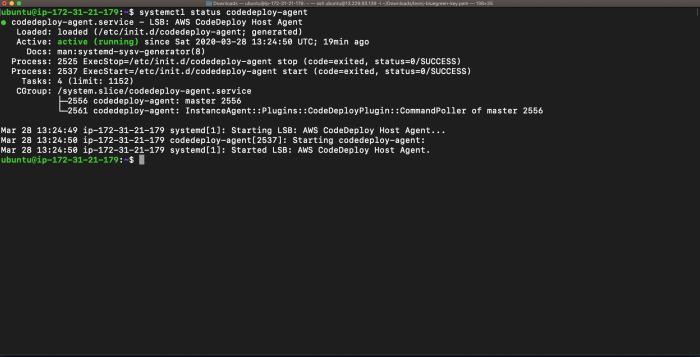

Deploying Serverless Applications Across Multiple Cloud Providers with the Serverless Framework

The Serverless Framework is a popular open-source tool that simplifies the deployment and management of serverless applications across cloud providers. The following steps outline the process of deploying a serverless application to multiple providers using the Serverless Framework.

- Project Setup: Create a new Serverless Framework project using the command-line interface (CLI). The CLI will generate a `serverless.yml` file, which serves as the configuration file for the application.

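- Configuration: Configure the `serverless.yml` file to define the application’s resources, including functions, events, and cloud provider-specific settings. The `provider` section specifies the cloud provider (e.g., `aws`, `google`, `azure`). Each `serverless.yml` declares exactly one provider, so targeting several providers means keeping one service definition per provider, with non-AWS providers enabled through their plugins.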

- Provider Configuration: For each cloud provider, configure the necessary credentials and settings, such as API keys, region, and account information. The Serverless Framework supports various authentication methods, including environment variables, IAM roles, and service accounts.

- Function Definition: Define the serverless functions and their associated events (e.g., HTTP requests, scheduled events, database triggers). The `functions` section of the `serverless.yml` file defines the functions and their configurations.

- Deployment: Run the `serverless deploy` command from each service’s directory to deploy it to the provider declared in its `serverless.yml`. The Serverless Framework provisions the necessary resources and deploys the functions.

- Testing: After deployment, test the functions and their interactions with other services. Use tools like Postman or cURL to test the HTTP endpoints and verify the functionality.

- Monitoring and Logging: Integrate monitoring and logging into the application to collect data about its performance and health. The Serverless Framework can be configured to integrate with provider-specific monitoring and logging services.

- Updates and Rollbacks: When updating the application, change the code and the relevant `serverless.yml` files, then redeploy each service with `serverless deploy`. On AWS, the `serverless rollback` command can restore a previous deployment identified by its timestamp (listed by `serverless deploy list`); other providers may require their own rollback mechanisms.
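Because a `serverless.yml` file declares a single provider, deploying to several providers means running `serverless deploy` once per service definition. A small driver script can do this in sequence. The directory layout (`services/aws`, `services/gcp`) and the function names below are hypothetical, and the script assumes the Serverless CLI, any provider plugins, and credentials are already set up. It defaults to a dry run that only prints the commands.

```python
import subprocess
from pathlib import Path
from typing import Dict, List, Tuple

# Hypothetical layout: one Serverless service directory per provider, e.g.
#   services/aws/serverless.yml and services/gcp/serverless.yml
PROVIDER_DIRS: Dict[str, Path] = {"aws": Path("services/aws"), "gcp": Path("services/gcp")}


def build_deploy_commands(providers: List[str], stage: str) -> List[Tuple[Path, List[str]]]:
    """Return (working_directory, argv) pairs, one `serverless deploy` per provider."""
    return [
        (PROVIDER_DIRS[p], ["serverless", "deploy", "--stage", stage])
        for p in providers
    ]


def deploy_all(providers: List[str], stage: str, dry_run: bool = True) -> None:
    """Deploy each provider's service in turn; stop at the first failure."""
    for cwd, argv in build_deploy_commands(providers, stage):
        print(f"[{cwd}] {' '.join(argv)}")
        if not dry_run:
            # check=True raises CalledProcessError if a deploy fails, so later
            # providers are not deployed on top of a broken release.
            subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    deploy_all(["aws", "gcp"], stage="dev")  # dry run: prints the commands only
```

Deploying sequentially trades speed for safety: a failure on the first provider stops the rollout before the second provider diverges from it.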

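For example, consider deploying a simple “hello world” function to AWS Lambda and Google Cloud Functions. Because a `serverless.yml` file declares a single `provider`, each platform gets its own service definition. The Google Cloud service relies on the `serverless-google-cloudfunctions` provider plugin, and its project ID and key-file path are placeholders:

```yaml
# File: aws/serverless.yml
service: multi-cloud-hello-world
provider:
  name: aws
  runtime: nodejs18.x
  region: us-east-1
  # AWS credentials configured through environment variables or an IAM role
functions:
  hello:
    handler: handler.hello   # exported function `hello` in handler.js
    events:
      - http:
          path: hello
          method: get
---
# File: gcp/serverless.yml
service: multi-cloud-hello-world
provider:
  name: google
  runtime: nodejs18
  region: us-central1
  project: my-gcp-project               # placeholder project ID
  credentials: ~/.gcloud/keyfile.json   # placeholder service-account key file
plugins:
  - serverless-google-cloudfunctions
functions:
  hello:
    handler: hello   # exported function name in index.js
    events:
      - http: path
```

In this example, the two service definitions share the same application code, but each is deployed separately by running `serverless deploy` in its own directory. The entry points also differ: Lambda handlers receive an `event` and a `context`, while HTTP-triggered Cloud Functions receive Express-style `req` and `res` objects, so each service typically wraps the shared logic in a thin provider-specific handler.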
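Lambda and Cloud Functions expect different handler signatures, so a common pattern is to keep the business logic provider-neutral and wrap it in one thin adapter per provider. The following Python sketch shows the pattern; the same structure applies to the Node.js handlers in the example above. The function names are hypothetical. `aws_handler` assumes an API Gateway proxy integration (where `queryStringParameters` is `None` when there is no query string), and `gcp_handler` assumes a Python HTTP Cloud Function, which receives a Flask request and may return a `(body, status, headers)` tuple.

```python
import json


def greet(name: str) -> dict:
    """Provider-neutral business logic shared by every deployment."""
    return {"message": f"Hello, {name}!"}


def aws_handler(event, context):
    """AWS Lambda entry point for an API Gateway proxy integration."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    body = greet(params.get("name", "world"))
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def gcp_handler(request):
    """Google Cloud Functions (Python) HTTP entry point.

    `request` is a Flask request; Flask accepts a (body, status, headers) tuple.
    """
    body = greet(request.args.get("name", "world"))
    return json.dumps(body), 200, {"Content-Type": "application/json"}
```

Because `greet` knows nothing about either provider, it can be unit-tested once, and only the adapters need provider-specific tests.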

Final Thoughts

In conclusion, the successful implementation of a multi-cloud serverless strategy demands a holistic approach, encompassing meticulous planning, robust architectural design, and a deep understanding of cloud provider capabilities. By addressing the challenges of data consistency, security, and orchestration, organizations can harness the benefits of a multi-cloud approach, including increased resilience, reduced vendor lock-in, and optimized resource utilization. This journey, while complex, offers significant rewards, paving the way for more agile, scalable, and cost-effective cloud-native applications.

FAQ Insights

What are the primary benefits of a multi-cloud serverless approach?

Multi-cloud serverless offers several advantages, including increased application resilience through provider redundancy, reduced vendor lock-in, cost optimization by leveraging competitive pricing, and the ability to utilize the unique strengths of each cloud provider for specific workloads.

How do you handle data consistency across multiple cloud storage services?

Data consistency can be managed through techniques such as data replication, eventual consistency models, and specialized data synchronization tools. The right approach depends on the application’s consistency requirements, the replication lag it can tolerate, and how conflicting writes are resolved.

What are the key considerations for security in a multi-cloud serverless environment?

Security considerations include implementing consistent security policies across providers, managing identity and access management (IAM) centrally, employing robust encryption methods, and establishing comprehensive monitoring and auditing practices to detect and respond to security threats.

Which orchestration tools are best suited for managing multi-cloud serverless infrastructure?

Tools like Terraform and Pulumi are well-suited for managing multi-cloud serverless infrastructure due to their ability to define infrastructure as code and deploy resources across multiple cloud providers. The choice depends on the team’s familiarity and the specific features required.