Data migration, a critical process in modern IT infrastructure, necessitates careful consideration of data transfer services. The selection of an appropriate service is paramount for ensuring a seamless and secure transition, minimizing downtime, and optimizing costs. This guide delves into the multifaceted aspects of choosing a data transfer service, providing a structured approach to evaluate different options and make informed decisions.

This analysis will navigate the key stages of data migration, from initial planning and assessment to post-migration validation. It encompasses crucial factors like data volume, security protocols, performance optimization, cost analysis, and compliance requirements. The aim is to equip readers with the knowledge to identify their specific needs and select a service that aligns with their project’s objectives, mitigating risks and maximizing efficiency.

Understanding the Migration Process

Data migration, the process of transferring data between storage types, formats, or computer systems, is a critical undertaking for organizations undergoing technological upgrades, mergers, or cloud adoption. A well-executed migration minimizes downtime, data loss, and disruption to business operations. This section outlines the essential steps and strategies involved in a successful data migration project.

General Steps in a Data Migration Project

Data migration projects typically follow a structured approach to ensure a smooth transition. The key steps, though potentially overlapping and iterative, provide a framework for planning and execution.

- Planning and Assessment: This initial phase involves defining the scope of the migration, identifying data sources and targets, assessing data quality, and establishing migration goals. This includes selecting the appropriate migration strategy and choosing the right data transfer service.

- Data Extraction: Data is extracted from the source systems. This may involve creating copies of the data or identifying the specific data elements to be migrated.

- Data Transformation: Data transformation is the process of modifying data to align with the target system’s requirements. This may involve cleaning, formatting, and converting data types. The complexity of the transformation depends on the differences between the source and target systems.

- Data Loading: Transformed data is loaded into the target system. This step requires careful consideration of data integrity, data validation, and performance optimization.

- Verification and Testing: The migrated data is thoroughly verified and tested to ensure its accuracy and completeness. This involves comparing data in the source and target systems, performing data validation checks, and conducting user acceptance testing.

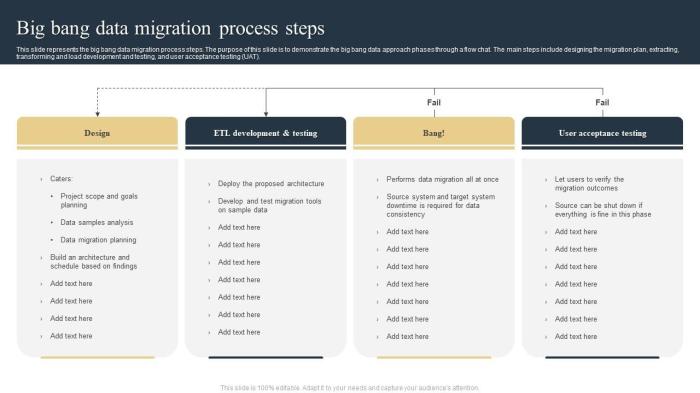

- Cutover and Go-Live: This phase involves transitioning from the source system to the target system. This can be a phased approach, where portions of the data are migrated at a time, or a “big bang” approach, where all data is migrated simultaneously. The cutover plan must minimize downtime and ensure a smooth transition for end-users.

- Post-Migration Validation and Optimization: After the migration, the performance of the target system is monitored, and any issues are addressed. Data quality checks and system optimizations are performed to ensure the long-term success of the migration.
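The extract, transform, load, and verify steps above can be sketched in a few lines. This is a minimal illustration only: the in-memory `source` list, the `target` dictionary, and the field names (`id`, `name`, `amount`) are hypothetical stand-ins for real systems, and a real project would replace each function with calls to its own source and target.

```python
import hashlib
import json

def extract(source_rows):
    """Extraction: copy rows out of the source (here, an in-memory list)."""
    return [dict(row) for row in source_rows]

def transform(rows):
    """Transformation: trim whitespace and convert types for the target schema."""
    return [
        {
            "id": int(row["id"]),
            "name": row["name"].strip(),
            "amount_cents": round(float(row["amount"]) * 100),
        }
        for row in rows
    ]

def load(rows, target):
    """Loading: write each row into the target, keyed by id; return the row count."""
    for row in rows:
        target[row["id"]] = row
    return len(rows)

def fingerprint(rows):
    """Verification helper: order-independent SHA-256 over a canonical JSON form."""
    canonical = json.dumps(sorted(rows, key=lambda r: r["id"]), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical source data with the kind of inconsistencies a migration cleans up.
source = [
    {"id": "1", "name": " Ada ", "amount": "12.50"},
    {"id": "2", "name": "Grace", "amount": "7"},
]
target = {}

staged = transform(extract(source))
loaded = load(staged, target)

# Verification: row counts match and the loaded content equals what was staged.
verified = loaded == len(source) and fingerprint(staged) == fingerprint(list(target.values()))
print("verified:", verified)
```

Comparing both a row count and a content fingerprint catches two different failure modes: rows that were dropped and rows that were altered during loading.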

Different Migration Strategies

The choice of migration strategy depends on the specific business requirements, technical constraints, and the characteristics of the data being migrated. Understanding the different strategies is crucial for selecting the most appropriate approach.

- Lift and Shift (Rehosting): This strategy involves moving applications and data to a new infrastructure, typically a cloud environment, with minimal changes. The goal is to replicate the existing environment as closely as possible in the new location. This is often the fastest migration approach, suitable for applications that are already well-suited to the cloud.

- Re-platforming: Re-platforming involves making some modifications to the application to take advantage of the cloud infrastructure. This might include changing the operating system or database to a cloud-native service. While requiring more effort than lift and shift, re-platforming can improve performance and reduce costs.

- Refactoring (Re-architecting): This is the most complex migration strategy, involving significant changes to the application’s architecture and code. The application is redesigned and rebuilt to take full advantage of cloud-native services. This strategy offers the greatest potential for optimization, scalability, and cost savings but requires the most time and resources.

- Replace: In some cases, it may be more cost-effective to replace an existing application with a new, cloud-native application. This approach is suitable when the existing application is outdated or no longer meets the business needs.

Importance of Pre-Migration Planning and Assessment

Thorough pre-migration planning and assessment are essential for a successful data migration. This phase sets the foundation for the entire project and helps to mitigate risks.

- Data Inventory and Profiling: This involves identifying all data sources, understanding the data’s structure, format, and volume, and assessing data quality. Tools for data profiling can help identify data inconsistencies, missing values, and other issues that need to be addressed during the transformation phase.

- System Compatibility Assessment: The assessment ensures compatibility between the source and target systems, including hardware, software, and network infrastructure. This may involve identifying any dependencies or incompatibilities that need to be resolved before the migration.

- Performance Analysis: Assessing the performance characteristics of the source system helps in planning the migration process. This includes understanding the system’s load, response times, and data transfer rates. This information is crucial for setting realistic expectations and identifying potential bottlenecks during the migration.

- Risk Assessment: Identifying and assessing potential risks associated with the migration project is crucial. This includes data loss, downtime, security breaches, and project delays. A comprehensive risk assessment allows for the development of mitigation strategies to minimize the impact of these risks.

- Cost Estimation: Estimating the total cost of the migration project is a critical part of pre-migration planning. This includes labor costs, infrastructure costs, data transfer costs, and any software or licensing fees. Accurate cost estimation helps in securing budget approval and tracking project expenses.
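As a rough illustration of the data profiling step described above, the sketch below counts missing values and observed types per column. It assumes records are available as Python dictionaries; the `sample` rows and the rule that `None` and empty strings count as missing are assumptions made for the example, not a standard.

```python
from collections import Counter

def profile(rows):
    """Return, per column, the number of missing values and the observed value types."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]  # absent keys become None
        present = [v for v in values if v is not None and v != ""]
        report[col] = {
            "missing": len(values) - len(present),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report

# Hypothetical sample with an empty email, a missing field, and a mixed-type column.
sample = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": "41"},
    {"id": 3, "email": None},
]

report = profile(sample)
for column, stats in report.items():
    print(column, stats)
```

A column that reports more than one type, such as `age` here, is a typical signal that the transformation phase will need an explicit conversion rule.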

Identifying Data Transfer Service Needs

Selecting the optimal data transfer service necessitates a thorough understanding of the specific migration requirements. This involves a detailed assessment of the data characteristics, the desired performance metrics, and the constraints imposed by the existing infrastructure and budget. A systematic approach to identifying these needs is crucial for a successful and efficient data migration process.

Key Factors Influencing Data Transfer Service Selection

Several factors significantly influence the selection of a data transfer service. These factors, when carefully considered, can lead to the selection of a service that effectively meets the specific needs of the migration project.

- Data Volume: The total amount of data to be transferred is a primary determinant. Services vary significantly in their capacity to handle large datasets efficiently. For instance, migrating petabytes of data necessitates services designed for high-volume transfers, such as those utilizing parallel processing or physical data shipment.

- Data Complexity: The structure and organization of the data impact the choice. Complex data, involving intricate relationships, numerous data types, or frequent updates, demands services capable of handling these complexities while preserving data integrity. Services supporting schema mapping, data transformation, and version control are essential in such scenarios.

- Network Bandwidth and Latency: The available network bandwidth and latency between the source and destination locations significantly affect the transfer speed. Services optimized for specific network conditions or offering features like compression and deduplication can mitigate the impact of bandwidth limitations.

- Security Requirements: Data security is paramount, especially when dealing with sensitive information. The selected service must provide robust security features, including encryption, access controls, and compliance with relevant regulations.

- Budget Constraints: The cost of the service is a practical consideration. Pricing models vary, with some services charging based on data volume, transfer speed, or storage duration. A cost-benefit analysis is essential to identify the most economical solution that meets the required performance and security standards.

- Downtime Requirements: The acceptable downtime during the migration process influences the choice of service. Services that support minimal downtime, such as those offering incremental data transfers or real-time replication, are preferable when continuous availability is critical.

Types of Data Commonly Migrated and Their Specific Requirements

Different types of data present unique challenges and require tailored approaches during migration. Understanding these specific requirements is crucial for selecting a suitable data transfer service.

- Structured Data (Databases): Relational databases (e.g., MySQL, PostgreSQL, Oracle) and NoSQL databases (e.g., MongoDB, Cassandra) require services that can preserve data integrity, relationships, and constraints. These services often include features like schema mapping, data transformation, and support for database-specific protocols. For example, migrating a large e-commerce database might require services that can handle transactions, maintain referential integrity, and minimize downtime during the migration process.

- Unstructured Data (Files, Documents, Images, Videos): These data types, which lack a predefined format, require services capable of handling large files, maintaining file metadata, and ensuring data consistency. Services often support features like file versioning, compression, and deduplication to optimize transfer speed and storage efficiency. For instance, migrating a large collection of video files might necessitate services optimized for high-throughput transfers and storage solutions.

- Semi-structured Data (JSON, XML): This data type combines features of both structured and unstructured data. Services should be able to parse and process the data, handling nested structures and complex relationships. Data transformation capabilities are often required to convert the data into a format compatible with the destination system.

- Archived Data: Data archived for long-term storage requires services that prioritize data integrity, security, and cost-effectiveness. Services often support features like data compression, encryption, and compliance with regulatory requirements. An example is the migration of medical records, which necessitate compliance with HIPAA regulations.

- Real-time Data Streams: Migrating live data streams requires services capable of handling high-velocity data ingestion, processing, and replication. Services often utilize technologies like message queues, stream processing engines, and real-time data replication to ensure data consistency and minimal latency.

Criteria for Evaluating Data Transfer Services

A structured evaluation of different data transfer services involves assessing their features against specific criteria. The following table provides a framework for comparing and contrasting various services.

| Feature | Description | Importance | Consideration |
|---|---|---|---|
| Data Transfer Speed | The rate at which data is transferred, typically measured in bits per second or bytes per second. | High, especially for large datasets or time-sensitive migrations. | Assess the service’s throughput capabilities, network optimization features (e.g., compression, deduplication), and support for parallel transfers. Consider the impact of network bandwidth and latency. |
| Data Security | Measures implemented to protect data during transfer, including encryption, access controls, and compliance with security standards. | Critical, especially for sensitive data. | Verify the service’s encryption methods (e.g., TLS/SSL), access control mechanisms (e.g., role-based access control), and compliance with relevant regulations (e.g., GDPR, HIPAA). |
| Data Integrity | Ensuring that the data transferred remains accurate and complete. | High, to prevent data loss or corruption. | Evaluate the service’s data validation mechanisms (e.g., checksums), error handling capabilities, and support for data consistency checks. |
| Scalability | The ability of the service to handle increasing data volumes and transfer demands. | Important for future growth and flexibility. | Assess the service’s capacity to scale resources, support for parallel transfers, and ability to handle peak transfer loads. |
| Cost | The financial cost associated with using the service. | Essential for budget management. | Compare pricing models (e.g., per-GB, per-transfer, monthly subscription), assess hidden costs (e.g., egress fees, storage costs), and perform a cost-benefit analysis. |
| Ease of Use | The simplicity and user-friendliness of the service’s interface and management tools. | Affects operational efficiency and time to completion. | Evaluate the service’s user interface, documentation, and support resources. Consider the availability of automation tools and APIs. |
| Downtime Requirements | The amount of time the system is unavailable during the migration process. | Crucial for maintaining business continuity. | Assess the service’s support for incremental data transfers, real-time replication, and zero-downtime migration strategies. |
| Support and Documentation | The availability of technical support and comprehensive documentation. | Essential for troubleshooting and resolving issues. | Evaluate the service’s support channels (e.g., email, phone, online chat), response times, and the quality of its documentation. |
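One possible way to turn the criteria above into a comparison is a weighted scoring matrix. The sketch below is illustrative only: the criteria subset, the weights, the 1 to 5 ratings, and the service names are all made-up assumptions that a team would replace with its own assessment.

```python
def weighted_score(ratings, weights):
    """Combine per-criterion ratings into one score, normalized by total weight."""
    total_weight = sum(weights.values())
    return sum(ratings[criterion] * weight for criterion, weight in weights.items()) / total_weight

# Hypothetical weights: higher means the criterion matters more for this project.
weights = {"speed": 3, "security": 5, "integrity": 4, "cost": 2}

# Hypothetical ratings on a 1 (poor) to 5 (excellent) scale.
candidates = {
    "Service A": {"speed": 4, "security": 5, "integrity": 5, "cost": 2},
    "Service B": {"speed": 5, "security": 3, "integrity": 3, "cost": 4},
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name], weights), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name], weights):.2f}")
```

The score is only as good as the weights, so it is worth agreeing on them before rating any service to avoid tuning the numbers toward a preferred outcome.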

Evaluating Data Transfer Service Features

Selecting a data transfer service necessitates a thorough evaluation of its capabilities. The features offered directly impact the efficiency, security, and cost-effectiveness of the migration process. This section explores the core functionalities of data transfer services and provides a comparative analysis of various transfer methods and protocols.

Core Functionalities of Data Transfer Services

Data transfer services are built upon several core functionalities designed to optimize the movement of data. These features address various aspects of the transfer process, from data preparation to secure transmission and verification.

- Data Compression: This reduces the size of the data before transmission, leading to faster transfer times and lower bandwidth costs. Compression algorithms, such as gzip and LZ4, are commonly employed. The efficiency of compression depends on the data type; for example, text files typically compress more effectively than pre-compressed multimedia files. The compression ratio can vary significantly.

- Data Encryption: Encryption protects data confidentiality during transit. Services typically employ TLS (Transport Layer Security, the successor to the now-deprecated SSL) to secure data in motion. Encryption keys are critical; services often offer key management features. The strength of the encryption is determined by the algorithm and key length (e.g., AES-256).

- Data Integrity Checks: These ensure the data arrives at the destination without corruption. Techniques such as checksums (e.g., MD5, SHA-256) are used to verify data integrity. The service calculates a checksum before transfer and compares it to the checksum calculated after the transfer to detect any discrepancies. MD5 still detects accidental corruption, but it is not collision-resistant, so SHA-256 is the safer choice where deliberate tampering is a concern.

- Bandwidth Throttling: This feature allows users to control the rate at which data is transferred, preventing network congestion and ensuring consistent performance. It’s especially useful when transferring data alongside other network-intensive applications.

- Data Deduplication: This reduces the amount of data transferred by identifying and eliminating redundant data blocks. This can significantly reduce transfer times and storage costs, especially for large datasets with a high degree of repetition.

- Error Handling and Retry Mechanisms: Robust services include mechanisms to handle network interruptions and other errors. This includes automatic retry attempts and the ability to resume interrupted transfers from the point of failure, ensuring data completeness.

- Monitoring and Reporting: Services provide tools to monitor transfer progress, track performance metrics, and generate reports. These insights are vital for troubleshooting and optimizing the migration process.
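The point about compression efficiency depending on data type can be checked with a short experiment. The sketch below uses Python's standard `gzip` module; the repeated log line stands in for text data and random bytes stand in for already-compressed media, both of which are assumptions chosen for illustration.

```python
import gzip
import os

def compression_ratio(payload: bytes) -> float:
    """Original size divided by gzip-compressed size (higher means better compression)."""
    return len(payload) / len(gzip.compress(payload))

# Highly repetitive, text-like data compresses very well.
text_like = b"timestamp=2024-01-01 level=INFO msg=transfer ok\n" * 2000

# Random bytes behave like pre-compressed multimedia: almost no redundancy to remove.
random_like = os.urandom(len(text_like))

print(f"text-like ratio:   {compression_ratio(text_like):.1f}")
print(f"random-like ratio: {compression_ratio(random_like):.2f}")
```

A ratio at or slightly below 1 for the random payload shows why enabling compression for already-compressed files mostly costs CPU time without saving bandwidth.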

Comparison of Data Transfer Methods

The choice of data transfer method depends on several factors, including data volume, network bandwidth, security requirements, and budget constraints. Each method offers a different trade-off between speed, cost, and complexity.

- Online Data Transfer: This involves transferring data directly over a network connection (e.g., internet, private network).

- Offline Data Transfer: This involves physically transporting storage devices containing the data.

- Hybrid Data Transfer: This combines online and offline methods.

The suitability of each method varies depending on the specific migration scenario. For example, large datasets and limited bandwidth may make offline transfers the most cost-effective choice.

- Online Data Transfer: This is suitable for smaller datasets or when time is a critical factor. Online transfers are convenient but can be limited by network bandwidth. The speed of the transfer depends on the network’s speed, the service’s efficiency, and the distance between the source and destination.

  - Advantages: Fast setup, real-time transfer, and suitable for continuous data synchronization.

  - Disadvantages: Relies on network availability and bandwidth, potentially higher costs for large datasets.

  - Use Cases: Migrating small to medium-sized datasets, real-time data replication, and ongoing data synchronization.

- Offline Data Transfer: This method is most suitable for large datasets, where network bandwidth is a bottleneck or when data security is paramount. It involves shipping physical storage devices (e.g., hard drives, SSDs) containing the data to the destination. The transfer speed is limited by the read/write speed of the storage device and the shipping time.

  - Advantages: High transfer speeds for large datasets, suitable for environments with limited or unreliable network connectivity, and enhanced data security.

  - Disadvantages: Requires physical handling and transportation of storage devices, potential delays due to shipping, and the need for secure storage devices.

  - Use Cases: Migrating very large datasets (e.g., petabytes of data), migrating data to or from environments with limited network connectivity, and situations where data security is critical.

- Hybrid Data Transfer: This combines the benefits of both online and offline methods. A portion of the data can be transferred online, while the bulk of the data is transferred offline. This can be particularly useful when a deadline needs to be met, allowing the initial migration of some data online while offline transfer is simultaneously underway.

  - Advantages: Combines the speed of offline transfers with the convenience of online transfers.

  - Disadvantages: Requires careful planning and coordination between online and offline phases.

  - Use Cases: Migrating large datasets where time constraints are present and a balance between speed and cost is desired.
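The trade-off between online and offline transfer can be approximated by comparing the estimated network transfer time with the turnaround time of shipping devices. The sketch below is a back-of-the-envelope aid, not a sizing tool: the 80% link efficiency and the 168-hour (one week) shipping turnaround are assumed values, and cost and security factors are ignored.

```python
def online_hours(data_tb: float, bandwidth_gbps: float, efficiency: float = 0.8) -> float:
    """Estimated hours to move data_tb terabytes over a link, given a usable fraction of bandwidth."""
    bits = data_tb * 1e12 * 8                      # decimal terabytes to bits
    usable_bps = bandwidth_gbps * 1e9 * efficiency  # assumed share of the link actually achieved
    return bits / usable_bps / 3600

def choose_method(data_tb: float, bandwidth_gbps: float, shipping_hours: float,
                  efficiency: float = 0.8) -> str:
    """Pick whichever method is estimated to finish sooner."""
    if online_hours(data_tb, bandwidth_gbps, efficiency) <= shipping_hours:
        return "online"
    return "offline"

# 1 TB over 1 Gbps finishes in a few hours, well inside a one-week shipping turnaround.
print(choose_method(data_tb=1, bandwidth_gbps=1, shipping_hours=168))

# 1 PB (1,000 TB) over the same link takes months, so shipping devices wins.
print(choose_method(data_tb=1000, bandwidth_gbps=1, shipping_hours=168))
```

When the two estimates are close, a hybrid approach is worth considering: ship the bulk of the data and synchronize the changes online.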

Comparison Chart of Data Transfer Services and Supported Protocols

The following table compares three hypothetical data transfer services based on their supported protocols. This information is crucial for determining compatibility with existing infrastructure and data sources.

| Feature | Service A | Service B | Service C |
|---|---|---|---|
| Supported Protocols | | | |
| Encryption | TLS 1.3 | AES-256 | Proprietary |
| Data Compression | gzip | LZ4 | None |
| Data Integrity Checks | SHA-256 | MD5 | CRC32 |

Security Considerations for Data Transfer

Data security is paramount when transferring data, as breaches can lead to significant financial losses, reputational damage, and legal repercussions. Selecting a data transfer service necessitates a thorough evaluation of its security features and the implementation of robust security practices throughout the migration process. This section outlines the crucial security considerations for data transfer services, addressing features, risks, and best practices.

Security Features of Data Transfer Services

Data transfer services must incorporate various security features to safeguard data integrity and confidentiality during transit and at rest. These features collectively mitigate potential threats and ensure a secure migration.

- Encryption: Encryption is the process of transforming data into an unreadable format, protecting it from unauthorized access. Data transfer services should employ strong encryption for data in transit (e.g., TLS, the successor to the now-deprecated SSL) and for data at rest at the source or destination storage (e.g., Advanced Encryption Standard (AES) with a 256-bit key). This ensures that even if data is intercepted, it remains unintelligible without the decryption key. End-to-end encryption is particularly important: only the sender and receiver can decrypt the data, which prevents the service provider from accessing the content.

- Access Control: Access control mechanisms restrict who can access data and resources. Data transfer services should provide robust access control features, including role-based access control (RBAC), multi-factor authentication (MFA), and regular security audits. RBAC allows administrators to assign specific permissions to users based on their roles, minimizing the risk of unauthorized access. MFA adds an extra layer of security by requiring users to verify their identity through multiple factors (e.g., password, code from a mobile app, biometric scan). Regular security audits and penetration testing are essential to identify and address vulnerabilities in the access control system.

- Data Integrity Checks: Data integrity checks ensure that data remains unchanged during transfer. Services should implement mechanisms such as checksums (e.g., SHA-256) and digital signatures to verify data integrity. Checksums are calculated before and after the transfer; if the checksums differ, it indicates data corruption. Digital signatures, using cryptographic techniques, provide a means to verify the data’s authenticity and that it has not been tampered with.

- Audit Trails and Logging: Comprehensive audit trails and logging are crucial for monitoring and investigating security incidents. Data transfer services should maintain detailed logs of all data transfer activities, including user actions, data access attempts, and system events. These logs should include timestamps, user identities, IP addresses, and the actions performed. Audit trails enable organizations to track data movement, identify suspicious activity, and reconstruct events in case of a security breach.

- Compliance Certifications: Data transfer services should adhere to the standards and regulations that apply to the data being transferred, such as ISO 27001, SOC 2, and HIPAA. Certifications and attestation reports (e.g., ISO 27001, SOC 2) demonstrate the service provider’s commitment to data security and established security best practices, while regulations such as HIPAA impose legal obligations. For example, a service handling Protected Health Information (PHI) must be HIPAA compliant to ensure patient data confidentiality and integrity.
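A plain checksum detects accidental corruption, but anyone who can modify the data can also recompute the checksum. The sketch below illustrates tamper detection with a keyed hash (HMAC-SHA256) from Python's standard library. Note that this is a shared-secret technique swapped in for simplicity, not a public-key digital signature, and the key and payload shown are placeholders.

```python
import hashlib
import hmac

def make_tag(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag that only holders of the key can produce."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def is_authentic(payload: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(make_tag(payload, key), tag)

# Placeholder secret; in practice this would come from a key management system.
shared_key = b"example-key-do-not-use-in-production"
payload = b"customer_id,balance\n42,100.00\n"

tag = make_tag(payload, shared_key)                # computed by the sender
tampered = payload.replace(b"100.00", b"900.00")   # modified in transit

print(is_authentic(payload, shared_key, tag))      # unchanged data passes
print(is_authentic(tampered, shared_key, tag))     # modified data fails
```

Where the receiver must not be able to forge tags, or where many parties need to verify the data, an asymmetric signature scheme is the more appropriate choice.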

Potential Security Risks During Data Transfer and Mitigation Strategies

Data transfer processes are vulnerable to several security risks. Understanding these risks and implementing appropriate mitigation strategies is essential for a secure migration.

- Man-in-the-Middle (MITM) Attacks: In MITM attacks, an attacker intercepts communication between two parties, potentially eavesdropping on data or modifying it. To mitigate MITM attacks, use TLS with strong cipher suites, verify server certificates, and keep certificates up to date. Implement strict network segmentation to limit the scope of potential attacks.

- Data Breaches: Data breaches can occur due to vulnerabilities in the data transfer service, weak access controls, or insider threats. To prevent data breaches, employ robust access control measures, including RBAC and MFA. Regularly patch and update the data transfer service to address known vulnerabilities. Conduct thorough background checks on personnel with access to sensitive data. Implement data loss prevention (DLP) measures to prevent unauthorized data exfiltration.

- Malware Infections: Malware can be introduced during data transfer, infecting the source, destination, or the transfer service itself. Employ strong anti-malware and anti-virus software on all systems involved in the data transfer process. Regularly scan files before and after transfer to detect and remove malware. Implement network segmentation to isolate infected systems and prevent the spread of malware.

- Insider Threats: Malicious or negligent insiders can compromise data security. To mitigate insider threats, implement strong access controls, including least privilege principles. Conduct regular security awareness training for all personnel. Monitor user activity and audit logs to detect suspicious behavior. Enforce strict data handling policies and procedures.

- Data Loss: Data loss can occur due to hardware failures, software bugs, or human error. Implement robust data backup and recovery mechanisms. Perform regular data validation checks to ensure data integrity. Utilize redundant storage systems to provide data availability. Develop and test data recovery plans to minimize downtime in case of data loss.

Best Practices for Securing Data During Migration

Implementing best practices throughout the data migration process is crucial to maintain data security.

- Plan and Prepare: Develop a comprehensive data migration plan that includes security considerations. Identify all data assets, assess security risks, and define security requirements. Document all security procedures and policies.

- Choose a Secure Data Transfer Service: Select a data transfer service that offers robust security features, including encryption, access control, and compliance certifications. Evaluate the service provider’s security posture and track record.

- Encrypt Data in Transit and at Rest: Use strong encryption algorithms (e.g., AES-256) to protect data during transfer and at rest. Ensure encryption is enabled by default and is properly configured.

- Implement Strong Access Controls: Enforce RBAC, MFA, and regular security audits to control access to data. Limit access to only authorized personnel.

- Verify Data Integrity: Use checksums and digital signatures to ensure data integrity during transfer. Validate data at the source and destination to detect and correct errors.

- Monitor and Audit: Continuously monitor data transfer activities and maintain detailed audit trails. Review logs regularly to detect and investigate security incidents.

- Secure the Network: Segment the network to isolate the data transfer process. Implement firewalls and intrusion detection systems to protect against unauthorized access.

- Educate and Train Personnel: Provide security awareness training to all personnel involved in the data migration. Educate them on data handling procedures, security policies, and potential threats.

- Test and Validate: Regularly test the data transfer process and validate data integrity and security controls. Conduct penetration testing to identify vulnerabilities.

- Dispose of Data Securely: After successful migration, securely dispose of data from the source systems. Use data wiping or physical destruction methods to prevent data breaches.

Performance and Scalability Aspects

Data transfer services must be evaluated not only for their feature sets and security protocols but also for their ability to handle the practical demands of data migration. This involves a deep dive into performance characteristics, particularly concerning large datasets, and the scalability of the chosen service to accommodate future growth. Understanding these aspects ensures a smooth, efficient, and future-proof migration strategy.

Handling Large Data Volumes

The ability of a data transfer service to effectively manage large data volumes is a critical determinant of migration success. Services employ various techniques to facilitate the transfer of petabytes of data. The primary methods employed are:

- Parallel Transfer: Many services utilize parallel transfer mechanisms, breaking down the data into smaller, manageable chunks that can be transferred simultaneously across multiple connections or threads. This significantly reduces the overall transfer time compared to a single-threaded approach. For instance, services like AWS DataSync use parallel transfer to move data between on-premises storage and AWS storage services. The number of parallel connections can be configured based on network bandwidth and the service’s capabilities.

- Compression and Deduplication: Compression algorithms, such as GZIP or LZ4, are applied to reduce the size of the data before transmission, thereby decreasing the bandwidth requirements and transfer time. Deduplication identifies and eliminates redundant data blocks, sending only unique data. This is particularly beneficial when transferring data that contains many duplicate files or versions.

- Chunking and Checksumming: Data is divided into fixed-size chunks, and a checksum (e.g., MD5, SHA-256) is generated for each chunk. During the transfer, the service verifies the integrity of each chunk by comparing the checksums. This process ensures data accuracy and facilitates resuming interrupted transfers from the point of failure, rather than restarting the entire process.

- Staging and Caching: Some services employ staging areas or caching mechanisms. Data can be temporarily stored in an intermediate location, closer to the source or destination, to reduce latency and improve transfer speeds.
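The chunking and checksumming approach described above can be sketched as follows. The example assumes fixed-size chunks with a SHA-256 digest per chunk and works on in-memory bytes for brevity; the 1,024-byte chunk size and the simulated interruption and corruption are arbitrary choices for illustration.

```python
import hashlib

def chunk_digests(data: bytes, chunk_size: int) -> list:
    """Split data into fixed-size chunks and return one SHA-256 digest per chunk."""
    return [
        hashlib.sha256(data[offset:offset + chunk_size]).hexdigest()
        for offset in range(0, len(data), chunk_size)
    ]

def chunks_to_resend(source: bytes, received: bytes, chunk_size: int) -> list:
    """Return indexes of chunks that are missing, incomplete, or corrupted at the destination."""
    expected = chunk_digests(source, chunk_size)
    actual = chunk_digests(received, chunk_size)
    return [
        index for index, digest in enumerate(expected)
        if index >= len(actual) or actual[index] != digest
    ]

source = bytes(range(256)) * 16          # 4,096 bytes, i.e. four 1,024-byte chunks

# Simulate a transfer that stopped after 3,000 bytes and flipped one byte on the way.
received = bytearray(source[:3000])
received[1500] ^= 0xFF

pending = chunks_to_resend(source, bytes(received), chunk_size=1024)
print("chunks to resend:", pending)
```

Only the chunks listed need to be transferred again, which is what makes resuming cheaper than restarting: chunk 0 arrived intact and is skipped.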

Impact of Network Bandwidth on Transfer Speed

Network bandwidth is a fundamental limiting factor in data transfer speed. The theoretical maximum transfer rate is directly proportional to the available bandwidth. However, achieving this maximum is often challenging due to network overhead, protocol limitations, and other factors. The relationship between bandwidth, data size, and transfer time can be expressed as:

Transfer Time = (Data Size / Bandwidth) + Overhead

Where:

- Data Size: The total amount of data to be transferred, typically measured in bytes, kilobytes, megabytes, gigabytes, or terabytes.

- Bandwidth: The network’s capacity to transmit data, typically measured in bits per second (bps), kilobits per second (kbps), megabits per second (Mbps), or gigabits per second (Gbps).

- Overhead: The additional time required for network protocols, error checking, and other processes that do not directly involve data transfer.

For example, consider a 1 TB (1,000 GB) dataset being transferred over a 1 Gbps (1,000 Mbps) network connection. Assuming minimal overhead, the theoretical transfer time can be estimated as follows:

- Convert data size to bits: 1,000 GB × 8,000,000,000 bits/GB = 8,000,000,000,000 bits

- Calculate transfer time: 8,000,000,000,000 bits / 1,000,000,000 bits/second = 8,000 seconds

- Convert seconds to hours: 8,000 seconds / 3,600 seconds/hour ≈ 2.22 hours

In practice, the actual transfer time will be longer due to network overhead and other factors. Using a faster network connection (e.g., 10 Gbps) will significantly reduce the transfer time.
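The estimate can be reproduced with a small Python helper. This is a sketch under stated assumptions: it uses decimal units (1 GB = 10^9 bytes), treats overhead as a fixed number of seconds as in the formula above, and the function name is hypothetical.

```python
def estimate_transfer_seconds(data_bytes: float, bandwidth_bps: float,
                              overhead_seconds: float = 0.0) -> float:
    """Transfer Time = (Data Size / Bandwidth) + Overhead, with size converted to bits."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth must be positive")
    data_bits = data_bytes * 8  # bandwidth is quoted in bits per second
    return data_bits / bandwidth_bps + overhead_seconds


# 1 TB over 1 Gbps versus 10 Gbps, ignoring overhead.
one_tb = 1_000 * 10**9
hours_at_1_gbps = estimate_transfer_seconds(one_tb, 1 * 10**9) / 3600
hours_at_10_gbps = estimate_transfer_seconds(one_tb, 10 * 10**9) / 3600
print(f"1 Gbps: {hours_at_1_gbps:.2f} h, 10 Gbps: {hours_at_10_gbps:.2f} h")
```

Passing a measured value for `overhead_seconds`, taken from a pilot transfer, gives a more realistic planning figure than the theoretical minimum.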

Strategies for Optimizing Transfer Performance and Scalability

Optimizing data transfer performance and ensuring scalability requires a multi-faceted approach. Services offer several mechanisms for improving transfer efficiency and adapting to increasing data volumes. These strategies include:

- Network Optimization: Prioritizing the use of high-bandwidth, low-latency network connections is critical. Utilizing dedicated network lines, such as leased lines or fiber optic connections, can dramatically improve transfer speeds. Optimizing network configurations, such as using jumbo frames and tuning TCP/IP parameters, can further enhance performance.

- Service-Specific Tuning: Data transfer services often provide configuration options to optimize performance based on the specific use case. These options may include adjusting the number of parallel threads, configuring buffer sizes, and selecting the appropriate compression algorithms. For example, AWS DataSync allows users to configure the number of concurrent tasks to optimize data transfer.

- Data Tiering and Archiving: For large datasets, it may be beneficial to tier the data, moving less frequently accessed data to lower-cost storage tiers or archiving them to reduce the amount of data that needs to be transferred during migration. This reduces the cost and transfer time.

- Monitoring and Reporting: Implementing robust monitoring and reporting mechanisms is crucial. These tools provide insights into transfer performance, identify bottlenecks, and allow for proactive adjustments. Metrics such as transfer speed, error rates, and resource utilization should be tracked.

- Service-Level Agreements (SLAs): Selecting a service whose SLA commits to specific performance levels, such as minimum transfer speeds or uptime, provides assurance that the service will meet the required performance expectations, with defined remedies if it does not.
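The effect of adjusting the number of parallel threads can be explored with a short Python sketch. It copies a local directory tree with a configurable worker pool; it is an illustration only, and the function name and the default of four workers are assumptions rather than settings of any real transfer service.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import List


def copy_tree_parallel(source: Path, target: Path, workers: int = 4) -> List[Path]:
    """Copy every file under source into target using a pool of worker threads."""
    files = [path for path in source.rglob("*") if path.is_file()]

    def copy_one(path: Path) -> Path:
        destination = target / path.relative_to(source)
        destination.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, destination)  # copy2 also tries to preserve timestamps
        return destination

    # pool.map preserves input order and re-raises the first copy error, if any.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(copy_one, files))
```

Timing the same copy with different `workers` values is one simple way to find the point at which additional parallelism stops improving throughput for a given network and storage combination.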

Cost Analysis and Budgeting

The financial aspects of data migration are critical, often representing a significant portion of the overall project cost. A thorough cost analysis and well-defined budget are essential for effective planning and control, minimizing unexpected expenses and ensuring the project’s financial viability. Understanding the various pricing models, comparing costs across different methods, and creating a realistic budget template are vital steps in this process.

Pricing Models of Data Transfer Services

Data transfer services employ various pricing models, each with its own advantages and disadvantages depending on the specific project requirements and data characteristics. Understanding these models is crucial for selecting the most cost-effective solution.

- Pay-per-GB Transfer: This is a common model where the service provider charges based on the volume of data transferred, typically measured in gigabytes (GB) or terabytes (TB). The cost per GB varies depending on the service provider, the region of data transfer, and the service level agreement (SLA). This model is straightforward and easily predictable, making it suitable for projects with a clear understanding of data volume.

- Pay-per-Use with Tiered Pricing: This model offers tiered pricing, where the cost per GB decreases as the total data transferred increases. This is advantageous for large-scale migrations involving significant data volumes, allowing for cost savings as the project progresses.

- Subscription-Based Pricing: Some services offer subscription plans with fixed monthly or annual fees. These plans typically include a certain amount of data transfer capacity, storage, and other features. This model can be cost-effective for projects with predictable data transfer needs and consistent usage patterns. It provides budget certainty.

- Fixed-Price Projects: For specific, well-defined migration projects, some providers offer fixed-price contracts. This model provides the most budget certainty, but it often requires a detailed understanding of the project scope and data characteristics upfront. Changes to the scope can lead to additional costs.

- Usage-Based Pricing (with Additional Fees): While primarily pay-per-GB or subscription-based, additional fees may apply for specific services, such as data transformation, data validation, or specialized security features. It is important to carefully evaluate the potential for these additional costs.
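To compare the flat pay-per-GB and tiered models above, the following Python sketch computes the cost of a transfer under a tier table. The tier sizes and rates are hypothetical values chosen for illustration and do not reflect any provider's price list.

```python
from typing import List, Optional, Tuple

# (tier size in GB, price per GB); None marks the final open-ended tier.
# These rates are hypothetical.
EXAMPLE_TIERS: List[Tuple[Optional[float], float]] = [
    (10_000, 0.05),
    (40_000, 0.04),
    (None, 0.03),
]


def tiered_cost(total_gb: float, tiers: List[Tuple[Optional[float], float]]) -> float:
    """Charge each successive slice of the volume at its tier's per-GB rate."""
    remaining = total_gb
    cost = 0.0
    for size, price in tiers:
        if remaining <= 0:
            break
        billable = remaining if size is None else min(remaining, size)
        cost += billable * price
        remaining -= billable
    if remaining > 0:
        raise ValueError("tiers do not cover the full data volume")
    return cost
```

With these example tiers, a 60 TB migration is billed as 10,000 GB at the first rate, 40,000 GB at the second, and the remaining 10,000 GB at the third, which makes the saving relative to a flat rate easy to quantify.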

Cost Comparison of Data Transfer Methods

The cost of data transfer varies significantly depending on the method used, impacting the overall project budget. A comparative analysis is essential for making informed decisions.

- Network-Based Transfer (e.g., over the internet): This method is typically the most cost-effective for smaller data volumes and when the source and destination are geographically close. However, the cost can increase with data volume, bandwidth availability, and network latency.

- Offline Data Transfer (e.g., using physical devices): This involves transferring data using physical storage devices like hard drives or tapes. While this method has a higher upfront cost (purchase or rental of devices), it can be more cost-effective for large datasets or when network bandwidth is limited or expensive. Transportation costs and device handling costs are additional considerations.

- Cloud-Based Transfer Services: Cloud providers offer various data transfer services, often integrated with their storage and compute offerings. The cost structure depends on the service used (e.g., data ingress/egress fees, storage costs, and transfer fees). These services often provide scalability and performance benefits but can incur significant costs, particularly for high data volumes.

- Hybrid Approaches: Combining different methods, such as using offline transfer for the initial bulk data migration and network-based transfer for ongoing incremental updates, can optimize costs and minimize downtime.

Sample Budget Template for a Data Migration Project

A well-structured budget template is a crucial tool for planning and managing the financial aspects of a data migration project. It helps to track expenses, identify potential cost overruns, and ensure the project stays within budget.

| Category | Description | Estimated Cost | Actual Cost | Notes |
|---|---|---|---|---|
| Data Transfer Service Fees | Charges for data transfer, storage, and other services provided by the selected vendor. | $XXX,XXX | $XXX,XXX | Based on chosen pricing model and data volume. |
| Network Costs | Costs associated with data transfer over the network, including bandwidth charges. | $XX,XXX | $XX,XXX | Consider network provider fees and data transfer volume. |
| Hardware Costs (if applicable) | Expenses for purchasing or renting physical storage devices for offline transfer. | $X,XXX | $X,XXX | Include device costs, shipping, and handling. |
| Labor Costs | Expenses for personnel involved in the migration, including planning, execution, and monitoring. | $XX,XXX | $XX,XXX | Consider internal staff or contractor rates. |
| Data Transformation Costs | Costs for any data transformation or cleansing activities required during the migration. | $X,XXX | $X,XXX | If data requires transformation, include these costs. |
| Testing and Validation Costs | Expenses for testing and validating the migrated data to ensure accuracy and integrity. | $X,XXX | $X,XXX | Includes test environment costs. |
| Contingency Fund | A buffer for unexpected expenses. | $XX,XXX | $XX,XXX | Typically 5-10% of the total project cost. |
| Total Project Cost | Sum of all categories. | $XXX,XXX | $XXX,XXX | |

The “Estimated Cost” column should be populated with initial estimates based on the chosen data transfer method and the project scope. The “Actual Cost” column should be updated as the project progresses to track the actual expenses. The “Notes” column provides space for documenting assumptions, explanations, and any variations from the initial estimates. For example, if a project involves a 10 TB migration using a pay-per-GB service at $0.05/GB, the estimated cost for data transfer would be $500 (10,000 GB × $0.05/GB).

If the project encounters unexpected data corruption requiring additional data validation, the costs in the testing and validation category might need to be adjusted, along with the contingency fund.
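The budget template can also be tracked programmatically. The Python sketch below is one possible approach: the category figures other than the $500 transfer estimate are hypothetical, and applying the contingency rate to the estimated subtotal is an assumption about how the 5-10% guideline is interpreted.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class BudgetLine:
    category: str
    estimated: float
    actual: float = 0.0


def summarize_budget(lines: List[BudgetLine], contingency_rate: float = 0.10) -> Dict[str, float]:
    """Total the estimates, add a contingency buffer, and report what remains."""
    estimated = sum(line.estimated for line in lines)
    actual = sum(line.actual for line in lines)
    contingency = estimated * contingency_rate
    budget = estimated + contingency
    return {
        "estimated": estimated,
        "contingency": contingency,
        "budget": budget,
        "actual": actual,
        "remaining": budget - actual,
    }


# Hypothetical figures; only the $500 transfer fee comes from the worked example.
example_lines = [
    BudgetLine("Data Transfer Service Fees", estimated=500.0, actual=520.0),
    BudgetLine("Labor Costs", estimated=2000.0, actual=2100.0),
    BudgetLine("Testing and Validation Costs", estimated=300.0, actual=450.0),
]
summary = summarize_budget(example_lines)
```

A negative `remaining` value signals that actual spending has exhausted both the estimates and the contingency fund, which is the point at which the budget needs to be revisited.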

Service Level Agreements (SLAs) and Support

Service Level Agreements (SLAs) and the quality of support provided are critical components in selecting a data transfer service. These elements directly impact the reliability, performance, and overall success of the data migration process. A robust SLA ensures accountability, while comprehensive support minimizes downtime and facilitates efficient problem resolution.

Importance of Service Level Agreements

An SLA is a contractual agreement between a service provider and a client, defining the level of service expected. It outlines performance metrics, responsibilities, and remedies for service failures. The presence of a well-defined SLA is crucial for several reasons.

- Guaranteed Uptime and Availability: SLAs typically specify the percentage of time the service will be available. This is critical for ensuring uninterrupted data transfer operations. For instance, a service might guarantee 99.9% uptime, translating to a maximum downtime of approximately 8.76 hours per year.

- Performance Metrics: SLAs define performance targets, such as data transfer speeds and latency. These metrics allow clients to assess whether the service meets their specific needs.

- Remedies for Service Failures: The SLA outlines the consequences of failing to meet the agreed-upon service levels. These remedies can include service credits, financial compensation, or other forms of redress.

- Clarity and Accountability: SLAs provide clarity on the responsibilities of both the service provider and the client, fostering accountability.

Key Elements to Look for in an SLA

A thorough review of the SLA is essential before selecting a data transfer service. Several key elements should be carefully examined.

- Uptime Guarantee: This specifies the percentage of time the service is guaranteed to be operational. A higher uptime percentage indicates greater reliability. The calculation typically uses the formula:

Uptime (%) = ((Total Time - Downtime) / Total Time) × 100

For example, 99.99% uptime allows for only about 4.3 minutes of downtime in a 30-day month.

- Performance Metrics: This includes data transfer speeds (e.g., gigabytes per second) and latency (the time it takes for data to travel between two points). Consider your specific requirements.

- Data Loss Prevention: The SLA should detail measures to prevent data loss, including data replication, backup procedures, and disaster recovery plans.

- Service Credits and Compensation: The SLA should clearly outline the remedies for service failures, such as service credits or financial compensation. These should be proportional to the severity and duration of the outage.

- Incident Response Time: This defines how quickly the service provider will respond to reported issues. A faster response time minimizes the impact of service disruptions.

- Escalation Procedures: The SLA should detail the process for escalating issues if they are not resolved within the specified time frame.
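When comparing uptime guarantees, it can help to convert the percentage into an allowed downtime budget. The Python sketch below applies the uptime formula in both directions; the function names are hypothetical, and the 30-day month and 365-day year are simplifying assumptions.

```python
def uptime_percent(total_hours: float, downtime_hours: float) -> float:
    """Uptime (%) = ((Total Time - Downtime) / Total Time) x 100."""
    if total_hours <= 0:
        raise ValueError("total time must be positive")
    return (total_hours - downtime_hours) / total_hours * 100


def allowed_downtime_minutes(guaranteed_uptime_percent: float, period_hours: float) -> float:
    """Maximum downtime, in minutes, that still satisfies the guarantee over the period."""
    return period_hours * 60 * (1 - guaranteed_uptime_percent / 100)


HOURS_PER_YEAR = 365 * 24   # assumes a non-leap year
HOURS_PER_MONTH = 30 * 24   # assumes a 30-day month

yearly_hours_at_three_nines = allowed_downtime_minutes(99.9, HOURS_PER_YEAR) / 60
monthly_minutes_at_four_nines = allowed_downtime_minutes(99.99, HOURS_PER_MONTH)
```

Under these assumptions, 99.9% corresponds to roughly 8.76 hours per year and 99.99% to roughly 4.3 minutes per month, which shows how much each additional "nine" tightens the downtime budget.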

Influence of Support and Documentation

The quality of support and documentation significantly impacts the user experience and the ease with which data migration can be accomplished.

- Availability of Support Channels: The service should offer multiple support channels, such as email, phone, and chat, to ensure prompt assistance. 24/7 support is often essential, especially for critical data migrations.

- Responsiveness of Support Team: The support team should be responsive and knowledgeable, capable of quickly resolving issues. A slow or unhelpful support team can significantly delay the migration process.

- Comprehensive Documentation: The service should provide detailed documentation, including tutorials, FAQs, and troubleshooting guides. This documentation should be easy to understand and regularly updated.

- Examples of Documentation’s Impact:

- Scenario 1: A company migrating a large database encounters an error during the transfer. With comprehensive documentation, the IT team can quickly identify the cause of the error and implement a solution, minimizing downtime. Without adequate documentation, the team would likely need to contact support, leading to delays.

- Scenario 2: A user is unfamiliar with a specific feature of the data transfer service. Clear and well-organized documentation allows the user to independently learn how to use the feature, improving their efficiency.

Testing and Validation Procedures

Rigorous testing and validation are paramount when selecting and utilizing a data transfer service for migration. These procedures ensure data integrity, minimize downtime, and confirm the successful transfer of data from the source environment to the target environment. Implementing these procedures allows for early detection of potential issues, enabling timely remediation and ultimately mitigating risks associated with the migration process.

Testing Data Transfer Services Before Migration

Before committing to a full-scale data migration, a thorough testing phase is crucial. This phase evaluates the chosen data transfer service’s performance, reliability, and suitability for the specific migration requirements. The testing process should encompass various scenarios to simulate real-world conditions and identify potential bottlenecks or limitations.

- Proof-of-Concept (POC) Testing: A POC involves transferring a small, representative subset of the data to the target environment. This allows for a preliminary assessment of the service’s functionality, ease of use, and compatibility with the source and target systems. The size of the data subset should be large enough to simulate the complexities of the actual migration. For instance, if migrating a database, include a sample of different table structures, data types, and sizes.

- Performance Testing: Performance testing measures the data transfer service’s speed, throughput, and resource utilization under varying loads. This involves transferring data of different sizes and types while monitoring key metrics such as transfer rate, CPU usage, memory consumption, and network bandwidth utilization. Utilize tools like `iperf` or built-in monitoring tools within the data transfer service. The goal is to determine the service’s capacity and identify any performance limitations that might impact the overall migration timeline.

- Functional Testing: Functional testing verifies that the data transfer service correctly transfers data according to the defined specifications. This includes validating that data types, formats, and structures are preserved during the transfer. The testing should encompass different data scenarios, including normal data, edge cases, and error conditions. For example, test how the service handles null values, special characters, and large files.

- Security Testing: Security testing assesses the security features of the data transfer service, including encryption, access controls, and authentication mechanisms. The testing should involve simulating security threats, such as unauthorized access attempts, to verify that the service can protect sensitive data during transit and at rest. For example, verify that the encryption implementation adheres to industry standards like AES-256.

- Failover and Recovery Testing: This testing evaluates the data transfer service’s ability to handle unexpected failures and recover gracefully. This involves simulating various failure scenarios, such as network outages, service interruptions, and hardware failures, to assess the service’s resilience and recovery capabilities. The testing should confirm that the service can automatically resume data transfer after a failure without data loss or corruption.

Methods for Validating Data Integrity After the Transfer

Post-migration validation is essential to confirm that the transferred data is accurate, complete, and consistent with the source data. This process involves comparing the data in the target environment with the data in the source environment to identify any discrepancies. The validation methods should be comprehensive and cover various aspects of data integrity.

- Checksum Verification: Checksums, such as MD5 or SHA-256, are used to verify the integrity of data files. A checksum is calculated for the source data before the transfer and recalculated for the target data after the transfer. If the checksums match, it indicates that the data has been transferred without corruption. For large datasets, utilize tools like `md5sum` or `sha256sum`.

- Data Comparison: Data comparison involves comparing the data in the source and target environments to identify any differences. This can be performed at different levels, from comparing individual records to comparing aggregated statistics. For database migrations, use a dedicated data comparison tool (several vendors ship one under the name "Data Compare") to compare tables, schemas, and data.

- Sample Data Validation: Sample data validation involves selecting a random sample of data and manually verifying its accuracy. This method is particularly useful for validating complex data structures or data that is difficult to compare automatically. For example, randomly select a subset of customer records and verify their details in both the source and target databases.

- Metadata Validation: Metadata, such as file sizes, timestamps, and permissions, should be validated to ensure that it has been correctly transferred. This is crucial for maintaining data context and functionality. Utilize scripting tools like `find` and `stat` to compare metadata across environments.

- Automated Validation Scripts: Develop automated scripts to perform data validation tasks. These scripts can automate checksum calculations, data comparisons, and metadata validation, making the validation process more efficient and reliable. For example, create a script to compare the number of records in a table in the source and target databases.
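As an illustration of an automated validation script, the following Python sketch builds a SHA-256 manifest for a source and a target directory and reports the differences. It assumes both trees are reachable as local or mounted paths; the function names are hypothetical, and a production script would typically add logging and error handling.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def file_sha256(path: Path, block_size: int = 1024 * 1024) -> str:
    """Hash a file in blocks so large files are never loaded fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for block in iter(lambda: handle.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def manifest_for_tree(root: Path) -> Dict[str, str]:
    """Map each file's path relative to root onto its SHA-256 digest."""
    return {
        path.relative_to(root).as_posix(): file_sha256(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_manifests(source: Dict[str, str], target: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files missing from the target, unexpected in it, or with different content."""
    shared = source.keys() & target.keys()
    return {
        "missing": sorted(source.keys() - target.keys()),
        "unexpected": sorted(target.keys() - source.keys()),
        "mismatched": sorted(name for name in shared if source[name] != target[name]),
    }
```

An empty result in all three lists indicates that every source file exists in the target with identical content; metadata such as permissions and timestamps would still need a separate check.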

Checklist for Post-Migration Validation

A comprehensive checklist provides a structured approach to post-migration validation, ensuring that all critical aspects of data integrity are thoroughly assessed. The checklist should be customized to the specific migration requirements and should be used consistently throughout the validation process.

- Data Completeness: Verify that all data has been transferred to the target environment. This includes checking the number of records, files, or objects transferred.

- Data Accuracy: Validate that the data in the target environment is accurate and consistent with the source data. This involves comparing individual data elements, such as values, formats, and structures.

- Data Consistency: Ensure that the data in the target environment is consistent across all related tables or systems. This includes checking referential integrity, data relationships, and data dependencies.

- Metadata Verification: Validate that all metadata, such as file sizes, timestamps, and permissions, has been correctly transferred.

- Functional Testing: Perform functional tests to verify that the migrated data works correctly in the target environment. This includes testing applications, reports, and other systems that rely on the migrated data.

- Performance Testing: Assess the performance of the target environment after the migration to ensure that it meets the required performance levels.

- Security Testing: Verify that the security configurations in the target environment are correctly implemented and that data is protected from unauthorized access.

- Documentation Review: Review the documentation related to the migration process, including the data transfer service configuration, validation results, and any identified issues.

- Issue Resolution: Document and resolve any identified data integrity issues or discrepancies. This includes identifying the root cause of the issues and implementing corrective actions.

- Sign-off: Obtain sign-off from all stakeholders to confirm that the post-migration validation has been completed successfully and that the data is ready for production use.
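The data completeness item in the checklist above is often the easiest to automate. The Python sketch below compares per-table row counts between a source and a target database. It uses the standard-library `sqlite3` module purely for illustration; a real migration would substitute the driver for its own database engine, and the function names are hypothetical.

```python
import sqlite3
from typing import Dict, Iterable, Tuple


def row_counts(conn: sqlite3.Connection, tables: Iterable[str]) -> Dict[str, int]:
    """Return the number of rows in each named table."""
    counts = {}
    for table in tables:
        # Table names cannot be bound as SQL parameters, so validate them first.
        if not table.isidentifier():
            raise ValueError(f"unsafe table name: {table!r}")
        counts[table] = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
    return counts


def count_mismatches(source: sqlite3.Connection, target: sqlite3.Connection,
                     tables: Iterable[str]) -> Dict[str, Tuple[int, int]]:
    """Return {table: (source_count, target_count)} for tables whose counts differ."""
    names = list(tables)
    source_counts = row_counts(source, names)
    target_counts = row_counts(target, names)
    return {
        name: (source_counts[name], target_counts[name])
        for name in names
        if source_counts[name] != target_counts[name]
    }
```

Matching row counts show that nothing was dropped or duplicated at the table level, but they do not prove that the values are correct, so this check complements rather than replaces the accuracy and consistency items.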

Compliance and Regulatory Requirements

Data migration projects often involve the movement of sensitive information across different environments. This necessitates careful consideration of various compliance and regulatory requirements. Selecting a data transfer service that aligns with these requirements is crucial to avoid legal and financial repercussions, maintain data integrity, and ensure the continued trust of stakeholders. Failure to comply can lead to significant penalties and damage to an organization’s reputation.

Addressing Compliance Needs with Data Transfer Services

Data transfer services provide several features designed to address compliance requirements. These features enable organizations to maintain adherence to regulations such as GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act).

- Encryption: Data encryption, both in transit and at rest, is a fundamental security measure for compliance. Services should offer robust encryption protocols (e.g., AES-256) to protect data from unauthorized access. The encryption keys should be managed securely, ideally with features like key rotation and access control. For example, services like AWS Key Management Service (KMS) and Azure Key Vault provide comprehensive key management capabilities.

- Access Control and Authentication: Strong access controls, including multi-factor authentication (MFA) and role-based access control (RBAC), are essential. These features restrict access to sensitive data based on user roles and responsibilities, minimizing the risk of data breaches.

- Audit Trails: Comprehensive audit trails that track all data transfer activities, including access attempts, modifications, and deletions, are crucial for demonstrating compliance. These logs should be tamper-proof and easily accessible for auditing purposes. Services like Google Cloud Audit Logs provide detailed records of user actions.

- Data Retention Policies: Compliance often mandates specific data retention periods. Data transfer services should allow for the configuration of data retention policies to ensure that data is stored for the required duration and then securely deleted.

- Data Loss Prevention (DLP): Some services offer DLP capabilities, which help to identify and prevent the transfer of sensitive data outside of defined boundaries. This can include features like data classification and content filtering.
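One common way to make an audit trail tamper-evident is to chain each entry to the hash of the previous one. The Python sketch below illustrates the idea only; it is not how any of the services named above implement their logs, and the entry layout and function names are assumptions.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(previous_hash: str, event: Dict[str, Any]) -> str:
    """Hash the previous entry's hash together with a canonical form of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((previous_hash + payload).encode("utf-8")).hexdigest()


def append_event(log: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append an event, linking it to the hash of the entry before it."""
    previous_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "event": event,
        "prev": previous_hash,
        "hash": _entry_hash(previous_hash, event),
    })


def verify_log(log: List[Dict[str, Any]]) -> bool:
    """Recompute the chain; any edited, removed, or reordered entry breaks it."""
    previous_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != previous_hash:
            return False
        if entry["hash"] != _entry_hash(previous_hash, entry["event"]):
            return False
        previous_hash = entry["hash"]
    return True
```

A chain like this detects modification after the fact but does not prevent it, so it is usually combined with write-once storage or with periodically publishing the latest hash to a separate system.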

Identifying Regulatory Considerations Relevant to Data Migration

Data migration projects are subject to various regulatory requirements, depending on the industry, the type of data being transferred, and the geographic locations involved. Organizations must thoroughly assess these requirements before selecting a data transfer service.

- GDPR (General Data Protection Regulation): Applies to organizations that process the personal data of individuals within the European Union (EU), regardless of the organization’s location. Key considerations include data minimization, purpose limitation, data subject rights (e.g., right to access, right to erasure), and the requirement for a lawful basis for data processing, of which consent is one.

- HIPAA (Health Insurance Portability and Accountability Act): Regulates the handling of protected health information (PHI) in the United States. Data transfer services must comply with HIPAA’s security and privacy rules, including implementing administrative, physical, and technical safeguards to protect PHI.

- CCPA (California Consumer Privacy Act): Grants California residents rights regarding their personal information, including the right to know what information is collected, the right to delete personal information, and the right to opt-out of the sale of personal information.

- Other Industry-Specific Regulations: Industries such as finance (e.g., PCI DSS), education (e.g., FERPA), and government have their own specific regulations that govern data handling and transfer.

- Cross-Border Data Transfers: The transfer of data across international borders is subject to restrictions and requirements. Organizations must comply with data transfer mechanisms such as Standard Contractual Clauses (SCCs) or Binding Corporate Rules (BCRs) to ensure the legality of these transfers.

Data Residency and Its Implications

Data residency refers to the physical or geographic location where data is stored. Regulatory requirements often dictate where specific types of data must reside. Data residency considerations are crucial during data migration.

- Legal Requirements: Many countries and regions have data residency laws that mandate that certain types of data (e.g., financial records, health information) must be stored within their borders. Laws governing government access also matter: for example, the CLOUD Act in the United States can allow U.S. authorities to compel U.S.-based providers to disclose data they control, regardless of where it is stored, potentially complicating data residency compliance for organizations operating internationally.

- Data Sovereignty: Data sovereignty is the concept that data is subject to the laws of the country in which it is located. Organizations must ensure that their data transfer service supports data residency requirements and that data is stored in the appropriate geographic locations.

- Service Provider Location: The physical location of the data transfer service provider’s infrastructure is a critical factor. Organizations should select providers with data centers located in regions that meet their data residency requirements. For example, a financial institution handling European customer data might choose a provider with data centers within the EU.

- Data Center Security: Data centers must maintain high levels of physical and logical security to protect data from unauthorized access. These measures include controlled access, surveillance, and environmental controls.

- Impact on Performance: Data residency can impact data transfer performance. Transferring data across long distances can introduce latency. Organizations should consider the geographic distance between their data sources and the target location when selecting a data transfer service.

Real-World Case Studies and Examples

Data migration projects, regardless of scale, offer valuable lessons. Analyzing successful implementations and examining common pitfalls provides actionable insights for selecting the appropriate data transfer service and optimizing the migration strategy. This section presents several case studies, highlighting diverse industry applications and the critical considerations for effective data transfer.

Successful Data Migration Projects

The following examples showcase how different data transfer services were utilized to achieve successful migrations across various industries, emphasizing the factors contributing to their success.

- Cloud Migration for a Financial Institution: A major financial institution migrated its on-premises data centers to a public cloud infrastructure using a combination of AWS DataSync and Snowball. This involved transferring petabytes of financial transaction data, customer records, and regulatory documents. The key to success was a phased approach, starting with less critical data and gradually migrating more sensitive information. This approach minimized disruption and allowed for iterative testing and validation.

The chosen services provided high-throughput data transfer, encryption during transit and at rest, and integration with existing security protocols.

The phased approach and robust security features were crucial in meeting stringent regulatory requirements and ensuring data integrity.

The project reduced infrastructure costs by 30% and improved disaster recovery capabilities.

- E-commerce Platform Data Consolidation: An e-commerce platform consolidated data from multiple regional databases into a central data warehouse using a managed data transfer service like Google Cloud Storage Transfer Service. This project involved migrating customer orders, product catalogs, and user activity data. The service’s scalability and ease of use were essential for handling the high volume of data and frequent updates. The project was executed during off-peak hours to minimize impact on customer experience.

This migration improved data analytics capabilities, enabling more personalized recommendations and targeted marketing campaigns. Data transfer speed improved by 40%, and data processing time fell by 20%.

- Healthcare Data Modernization: A healthcare provider migrated its electronic health records (EHR) system to a new platform, utilizing a service with specialized capabilities for handling sensitive patient data. This involved transferring patient medical history, lab results, and imaging data. The selected service provided features like data masking, de-identification, and audit trails to ensure compliance with HIPAA regulations. Careful planning and rigorous testing were implemented to ensure data accuracy and integrity.

This modernization initiative improved patient care by providing clinicians with faster access to critical information and enabling data-driven insights for improved treatment plans. This project improved data access times by 50%.

Common Challenges Encountered During Data Transfer

Understanding the typical obstacles encountered during data transfer helps in proactively addressing them and selecting the right service to mitigate risks.

- Network Bandwidth Limitations: Insufficient network bandwidth can significantly slow down data transfer speeds, especially when migrating large datasets. The choice of service should consider the available bandwidth and offer options for optimizing transfer performance, such as data compression or parallel transfer capabilities.

- Data Integrity Issues: Ensuring data integrity throughout the transfer process is paramount. Errors during data transfer can lead to data corruption or loss. Services should offer features like checksum verification, data validation, and error handling to ensure the accuracy and completeness of the migrated data.

- Security Vulnerabilities: Data transfer processes can expose sensitive data to security risks. It is essential to implement robust security measures, including encryption, access controls, and regular security audits. Services that provide end-to-end encryption and comply with industry security standards are crucial.

- Downtime and Business Disruption: Data migrations can cause downtime and disruption to business operations. Careful planning, scheduling the migration during off-peak hours, and utilizing services with minimal downtime are essential to minimize the impact on users and customers.

- Compliance and Regulatory Hurdles: Compliance with data privacy regulations, such as GDPR or HIPAA, can add complexity to data migration projects. The selected service must offer features and capabilities that help meet these requirements, such as data masking, anonymization, and audit trails.

Choosing the Right Service for Specific Industry Needs

The optimal data transfer service depends on the specific requirements of the industry and the nature of the data being migrated.

- Financial Services: Financial institutions require services that prioritize security, compliance, and high performance. Features like end-to-end encryption, audit trails, and compliance certifications (e.g., SOC 2) are critical. Services that support secure transfer of sensitive data and integration with existing security infrastructure are preferred. Consider services such as AWS DataSync or Google Cloud Storage Transfer Service with appropriate security configurations.

- Healthcare: Healthcare providers must adhere to strict regulations, such as HIPAA, and prioritize patient data privacy. Services that offer data masking, de-identification, and audit logging are essential. The service should support secure data transfer and storage, with strong access controls and data encryption. Solutions like Azure Data Box or specialized data transfer services can be appropriate, provided they are configured and contracted in a way that supports HIPAA compliance.

- E-commerce: E-commerce platforms need services that can handle large volumes of data and ensure fast transfer speeds to minimize downtime. Scalability and cost-effectiveness are also important. Consider services that offer parallel data transfer, data compression, and integration with data warehouse solutions. Services like AWS Snowball (a physical appliance for offline bulk transfer) or Google Cloud Storage Transfer Service (for online transfer) are suitable, depending on data volume and available bandwidth.

- Media and Entertainment: Media and entertainment companies often work with large video files and require high-bandwidth transfer capabilities. Services with features like data compression, content delivery network (CDN) integration, and support for large file transfers are beneficial. Consider services that can handle high-throughput data transfer and support integration with media asset management systems. Solutions such as Aspera or Signiant are often used.

- Manufacturing: Manufacturing companies deal with a variety of data, including product designs, production data, and supply chain information. The services should support a range of data formats and offer robust security features. Data transfer solutions should integrate with existing manufacturing systems, providing features such as secure data transfer, data validation, and error handling.

Future Trends in Data Transfer Services

The landscape of data transfer services is constantly evolving, driven by technological advancements and the increasing volume, velocity, and variety of data. Understanding these trends is crucial for organizations planning data migrations and long-term data management strategies. This section explores emerging technologies, identifies evolving trends in data migration, and summarizes how the data transfer landscape is changing.

Emerging Technologies Impacting Data Transfer

Several technologies are poised to significantly impact data transfer services. Their adoption will reshape how data is moved, processed, and stored, requiring organizations to adapt their strategies.

- Edge Computing: Edge computing moves data processing closer to the data source, reducing latency and bandwidth consumption. This is particularly important for applications like Internet of Things (IoT) devices, where real-time data analysis is crucial. Data transfer services will need to support edge-to-cloud and edge-to-edge data movement, facilitating the transfer of pre-processed data or insights. For instance, consider a smart factory using edge devices to monitor machinery. Instead of transferring raw sensor data to a central cloud, the edge devices process the data and send only alerts or aggregated data to the cloud, significantly reducing the amount of data transferred.

- 5G and Enhanced Connectivity: The rollout of 5G networks promises significantly faster data transfer speeds and lower latency. This will enable more efficient data migration, especially for large datasets. 5G’s improved bandwidth will also support more complex data transfer scenarios, such as real-time video streaming and virtual reality applications.

- Quantum Computing: Quantum computing is still in its early stages, and its relevance to data transfer remains speculative. In principle, quantum algorithms could accelerate certain complex data transformations and compression techniques, which might eventually enable faster and more efficient data migration processes. Any practical impact is likely to be felt only in the long term.

- AI-Powered Data Transfer: Artificial intelligence and machine learning are being integrated into data transfer services to automate and optimize various aspects of the process. AI can analyze data patterns, predict transfer times, and dynamically adjust bandwidth allocation, resulting in improved efficiency and reduced costs. For example, an AI-powered service could analyze historical data transfer patterns to predict peak demand and proactively provision additional resources.

- Blockchain for Data Integrity: Blockchain technology can be used to enhance the security and integrity of data transfers. By creating an immutable record of data transfers, blockchain can provide an audit trail and ensure data authenticity. This is particularly important for sensitive data and regulatory compliance.
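The idea behind the immutable transfer record in the last item can be sketched with a simple hash chain, in which each log entry stores the hash of the previous one. This is a simplified, single-machine illustration and not a blockchain (there is no distribution or consensus); the event fields and function names are assumptions made for the example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(previous_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with the serialized event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((previous_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event to the log, linking it to the previous entry."""
    previous_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "event": event,
        "previous_hash": previous_hash,
        "hash": entry_hash(previous_hash, event),
    }
    log.append(entry)
    return entry


def chain_is_valid(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    previous_hash = GENESIS_HASH
    for entry in log:
        if entry["previous_hash"] != previous_hash:
            return False
        if entry["hash"] != entry_hash(previous_hash, entry["event"]):
            return False
        previous_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"file": "orders.csv", "bytes": 1024, "status": "transferred"})
append_entry(audit_log, {"file": "users.csv", "bytes": 2048, "status": "transferred"})
valid_before = chain_is_valid(audit_log)

audit_log[0]["event"]["bytes"] = 1  # simulate tampering with a past record
valid_after = chain_is_valid(audit_log)
```

In this sketch the log validates before the first entry is altered and fails validation afterwards, which is the property an audit trail relies on.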

Future Trends in Data Migration Services

Data migration services are also undergoing significant transformations, driven by the need for greater efficiency, security, and flexibility. These trends reflect the evolving needs of organizations dealing with increasingly complex data environments.

- Automated Data Migration: Automation will play a crucial role in future data migration services. Automated tools and processes will reduce manual intervention, accelerate migration timelines, and minimize the risk of human error. This includes automated data mapping, transformation, and validation.

- Hybrid and Multi-Cloud Migration: Organizations are increasingly adopting hybrid and multi-cloud strategies. Data migration services will need to support seamless data transfer across different cloud providers and on-premises environments, enabling organizations to optimize their infrastructure and leverage the best features of each platform.

- Serverless Data Transfer: Serverless computing models are gaining traction in data migration. Serverless data transfer services allow organizations to execute data transfer tasks without managing underlying infrastructure, reducing operational overhead and improving scalability. This model is particularly well-suited for bursty data transfer workloads.

- Data Governance and Compliance: Data governance and compliance requirements are becoming more stringent. Data migration services will need to incorporate robust data governance features, including data masking, anonymization, and access controls, to ensure compliance with regulations such as GDPR and CCPA.

- Focus on Data Transformation: Data migration is no longer just about moving data; it’s about transforming it to meet the requirements of the target environment. Future services will offer more advanced data transformation capabilities, including data cleansing, enrichment, and schema mapping.

- Specialized Data Migration Solutions: There will be a growing demand for specialized data migration solutions tailored to specific industries and data types. For example, healthcare organizations may require solutions that comply with HIPAA regulations, while financial institutions may need solutions that support PCI DSS compliance.
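To make the transformation capabilities listed above more concrete, here is a minimal sketch of schema mapping combined with basic cleansing. The legacy field names, target field names, and cleaning rules are invented for the example and do not come from any particular service.

```python
def transform_record(source: dict, field_map: dict, cleaners: dict) -> dict:
    """Rename fields per field_map and apply an optional cleaner per target field."""
    target = {}
    for source_field, target_field in field_map.items():
        value = source.get(source_field)
        cleaner = cleaners.get(target_field)
        if cleaner is not None and value is not None:
            value = cleaner(value)
        target[target_field] = value
    return target


# Hypothetical mapping from a legacy schema to the target schema.
field_map = {"cust_nm": "customer_name", "eml": "email", "amt": "order_total"}
cleaners = {
    "customer_name": str.strip,             # remove stray whitespace
    "email": lambda v: v.strip().lower(),   # normalize case
    "order_total": float,                   # convert text to a number
}

legacy = {"cust_nm": "  Ada Lovelace ", "eml": "ADA@Example.com ", "amt": "19.90"}
migrated = transform_record(legacy, field_map, cleaners)
```

Keeping the mapping and the cleaning rules as data rather than code makes them easy to review and to validate automatically before the migration runs.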

The evolution of data transfer services is characterized by a shift towards automation, enhanced security, and greater flexibility. Emerging technologies such as edge computing and AI are driving innovation, enabling faster, more efficient, and more secure data migration processes. Organizations must proactively adapt to these changes to effectively manage their data and remain competitive.

Closing Summary

In conclusion, the selection of a data transfer service for migration is a complex but manageable endeavor. By understanding the migration process, evaluating service features, prioritizing security, optimizing performance, and considering cost and compliance, organizations can navigate this landscape effectively. This comprehensive guide provides a framework for making informed decisions, ultimately leading to successful data migrations that meet the evolving demands of modern data management.

Essential FAQs

What is the difference between online and offline data transfer methods?

Online methods transfer data over a network connection and suit smaller datasets and continuous transfers. Offline methods, such as physical appliances or shipped hard drives, are better suited to very large datasets or situations where network bandwidth is the constraint.
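A rough way to decide between the two is to estimate how long an online transfer would take. The sketch below is back-of-the-envelope arithmetic only; the 80% link utilization is an assumption, and real throughput depends on latency, protocol overhead, and competing traffic.

```python
def transfer_days(dataset_tb: float, link_mbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to move dataset_tb terabytes over a link.

    Uses decimal units (1 TB = 10**12 bytes, 1 Mbps = 10**6 bits per second).
    """
    if dataset_tb < 0 or link_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("expected a non-negative size, a positive link speed, "
                         "and a utilization between 0 and 1")
    total_bits = dataset_tb * 1e12 * 8
    effective_bits_per_second = link_mbps * 1e6 * utilization
    return total_bits / effective_bits_per_second / 86_400  # seconds per day


# Example: 100 TB over a 1 Gbps link at the assumed 80% utilization.
days_needed = transfer_days(100, 1000)
```

With these inputs the estimate is about 11.6 days. If that exceeds the migration window, an offline method is worth evaluating.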

How can I ensure data integrity during the transfer process?

Data integrity can be ensured through checksum verification, data validation, and comparing data before and after the transfer. Utilizing services with built-in integrity checks and thorough testing is also crucial.
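Checksum verification can be sketched as follows, using SHA-256 from the Python standard library and reading files in chunks so that large files do not have to fit in memory. The temporary files are only there to make the example self-contained; in practice the source and target hashes would usually be computed on different systems and then compared.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Compute the SHA-256 digest of a file, reading it chunk by chunk."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(source: Path, target: Path) -> bool:
    """Return True when source and target have identical checksums."""
    return sha256_of(source) == sha256_of(target)


with tempfile.TemporaryDirectory() as workdir:
    src = Path(workdir) / "source.bin"
    dst = Path(workdir) / "target.bin"
    src.write_bytes(b"example payload" * 1000)

    dst.write_bytes(src.read_bytes())   # a faithful copy
    intact = verify_copy(src, dst)

    dst.write_bytes(b"corrupted")       # simulate a damaged transfer
    damaged = verify_copy(src, dst)
```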

What are the typical costs associated with data transfer services?

Costs vary depending on the pricing model (e.g., per GB, per transfer, or subscription-based), data volume, transfer method, and service features. It is important to analyze the total cost of ownership (TCO) considering all factors.
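The pricing models can be compared with simple arithmetic, as in the sketch below. All rates are made-up placeholders rather than quotes from any provider, and the calculation covers only direct service charges; a full TCO analysis would also include items such as staff time and network egress fees.

```python
from dataclasses import dataclass


@dataclass
class PricingModel:
    """Direct charges for one hypothetical pricing model."""
    name: str
    per_gb: float = 0.0
    per_transfer: float = 0.0
    monthly_fee: float = 0.0


def estimate_cost(model: PricingModel, data_gb: float, transfers: int, months: int) -> float:
    """Sum the volume-based, per-transfer, and subscription charges."""
    return (model.per_gb * data_gb
            + model.per_transfer * transfers
            + model.monthly_fee * months)


# Placeholder rates, for illustration only.
models = [
    PricingModel("per-GB", per_gb=0.02),
    PricingModel("per-transfer", per_transfer=150.0),
    PricingModel("subscription", monthly_fee=400.0),
]
estimates = {
    m.name: estimate_cost(m, data_gb=50_000, transfers=4, months=2) for m in models
}
cheapest = min(estimates, key=estimates.get)
```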

How do data transfer services handle network outages during migration?

Most services offer features like automatic retry mechanisms, checkpoint restarts, and data synchronization to handle network interruptions. These features allow a transfer to resume from the last completed checkpoint after an outage instead of starting over.
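The retry and checkpoint behavior can be illustrated with a small sketch. The function below is a hypothetical helper, not the API of any specific service: it retries a failed chunk with exponential backoff and returns the index to resume from if the retries are exhausted. The flaky sender simulates an outage.

```python
import time
from typing import Callable, Sequence


def transfer_with_resume(
    chunks: Sequence[bytes],
    send_chunk: Callable[[int, bytes], None],
    checkpoint: int = 0,
    max_retries: int = 3,
    base_delay: float = 0.5,
) -> int:
    """Send chunks starting at `checkpoint`.

    Returns len(chunks) on success, or the index of the chunk that still
    needs sending if the retries for that chunk are exhausted.
    """
    for index in range(checkpoint, len(chunks)):
        for attempt in range(max_retries + 1):
            try:
                send_chunk(index, chunks[index])
                break  # chunk delivered, move on to the next one
            except ConnectionError:
                if attempt == max_retries:
                    return index  # persist this value to resume later
                time.sleep(base_delay * 2 ** attempt)  # exponential backoff
    return len(chunks)


received = []
failures = {1: 2}  # chunk 1 fails twice before succeeding


def flaky_send(index: int, chunk: bytes) -> None:
    """Simulated sender that raises ConnectionError for scripted failures."""
    if failures.get(index, 0) > 0:
        failures[index] -= 1
        raise ConnectionError("simulated outage")
    received.append(chunk)


next_index = transfer_with_resume([b"a", b"b", b"c"], flaky_send, base_delay=0.0)
```

Storing the returned index durably (for example, in a small state file) is what lets a later run continue from that chunk after a longer outage.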

What level of technical expertise is required to use a data transfer service?

The level of expertise varies. Some services are designed to be user-friendly, while others require more technical knowledge. It’s essential to evaluate the service’s ease of use, documentation, and available support based on your team’s capabilities.