The decision to migrate data to the cloud is a strategic undertaking, demanding careful consideration and a well-defined approach. Understanding the nuances of cloud data migration, from fundamental principles to the selection of appropriate methods, is paramount for achieving successful outcomes. This process involves navigating various cloud deployment models, assessing the existing data landscape, and aligning migration objectives with business requirements.

This guide offers a structured approach to understanding the intricacies of cloud data migration. It provides a comprehensive overview of the key considerations, including data assessment, method selection, planning, tool utilization, security, compliance, and post-migration optimization. The goal is to equip readers with the knowledge necessary to make informed decisions and execute a data migration strategy that aligns with their specific needs and goals.

Understanding Cloud Data Migration

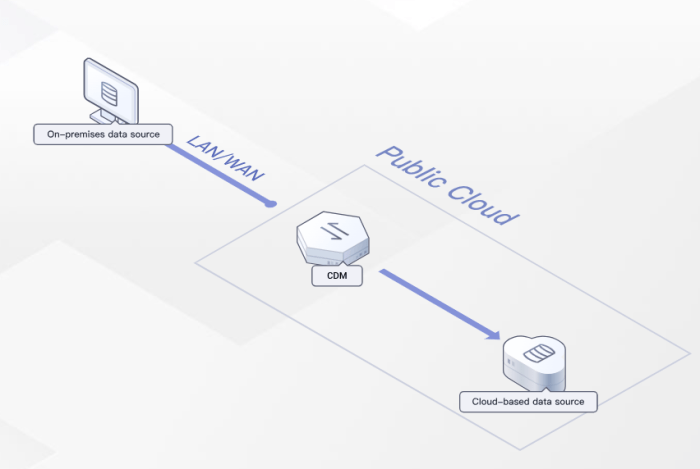

Cloud data migration involves transferring data from one location to another, typically from on-premises infrastructure or another cloud provider to a cloud environment. This process requires careful planning and execution to minimize downtime, data loss, and disruption to business operations. Understanding the core concepts and considerations is crucial for a successful migration strategy.

Fundamental Principles of Cloud Data Migration

Cloud data migration is underpinned by several core principles that guide the process. These principles ensure data integrity, security, and operational efficiency. They include data assessment, migration strategy selection, execution, and validation.

- Data Assessment: This involves evaluating the existing data landscape, including data volume, data types, data dependencies, and data quality. It is essential to understand the characteristics of the data before initiating the migration. The assessment also includes identifying potential challenges, such as data silos, compatibility issues, and compliance requirements. This process helps determine the most suitable migration approach.

- Migration Strategy Selection: Choosing the appropriate migration strategy depends on factors such as the business requirements, the complexity of the data, the acceptable downtime, and the budget. The strategy dictates the method of transferring data and the tools used. Common strategies include rehosting (lift and shift), replatforming, and refactoring (re-architecting).

- Execution: This phase involves implementing the chosen migration strategy. It requires careful planning and coordination to minimize disruption. This includes preparing the target environment, migrating the data, and validating the data after the migration. The execution phase might involve various tools and technologies, depending on the chosen strategy.

- Validation: After data migration, it is crucial to validate the migrated data to ensure its integrity and accuracy. Validation includes comparing the data in the source and target environments, checking for data loss or corruption, and verifying data transformations. Thorough validation is essential to ensure that the migrated data meets the business requirements.
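
The source-versus-target comparison described under Validation can be sketched as follows. This is a minimal illustration, assuming each table fits in memory as a list of row tuples; the function names `table_fingerprint` and `validate_migration` are hypothetical and do not belong to any particular migration tool.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, sha256_hex) for an iterable of row tuples.

    Rows are sorted by their repr so the result does not depend on the
    order in which the rows were read.
    """
    digest = hashlib.sha256()
    count = 0
    for row in sorted(rows, key=repr):
        digest.update(repr(row).encode("utf-8"))
        digest.update(b"\n")  # separator so adjacent rows cannot run together
        count += 1
    return count, digest.hexdigest()


def validate_migration(source_rows, target_rows):
    """Compare row counts and content checksums of source and target."""
    src_count, src_hash = table_fingerprint(source_rows)
    tgt_count, tgt_hash = table_fingerprint(target_rows)
    return {
        "row_count_match": src_count == tgt_count,
        "checksum_match": src_hash == tgt_hash,
    }
```

A matching row count with a mismatching checksum points to corrupted or transformed values rather than lost rows. For large tables, the same idea is usually applied per partition or per key range instead of over the whole table at once.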

Cloud Deployment Models

Cloud deployment models define how cloud infrastructure is deployed and managed. The choice of deployment model impacts data security, control, and cost. Different models cater to various organizational needs and risk tolerances.

- Public Cloud: Public clouds are owned and operated by third-party cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Resources are shared among multiple tenants. Public clouds offer scalability, cost-effectiveness, and ease of use, but they may have limited control and customization options. Public clouds are generally the most cost-effective solution for organizations with variable workloads and less stringent security requirements.

- Private Cloud: Private clouds are dedicated to a single organization and can be hosted on-premises or by a third-party provider. They offer greater control, security, and customization options than public clouds. Private clouds are suitable for organizations with strict compliance requirements, sensitive data, or specialized workloads. The trade-off is often higher costs and more complex management.

- Hybrid Cloud: Hybrid clouds combine public and private cloud environments, allowing organizations to leverage the benefits of both. This model enables organizations to move workloads between environments based on their needs, such as cost optimization, disaster recovery, or compliance. A hybrid cloud strategy allows for flexibility and control, but it requires careful management and integration.

- Multi-Cloud: Multi-cloud involves using multiple public cloud providers. This strategy can improve availability, reduce vendor lock-in, and optimize costs by leveraging the strengths of different providers. Multi-cloud environments require sophisticated management tools and expertise to ensure consistent performance and security.

Reasons for Cloud Data Migration

Organizations choose to migrate data to the cloud for a variety of compelling reasons. These drivers often contribute to significant improvements in business agility, cost efficiency, and operational resilience. The specific benefits depend on the organizational context and the chosen migration strategy.

- Cost Reduction: Cloud services often offer a pay-as-you-go pricing model, which can reduce capital expenditures and operational costs. Organizations can avoid the costs associated with on-premises hardware, maintenance, and staffing. Cloud providers handle infrastructure management, freeing up IT resources.

- Scalability and Elasticity: Cloud environments provide on-demand scalability and elasticity, bounded in practice by provider quotas and budget. Organizations can scale their resources up or down to meet changing demands. This agility is critical for handling peak loads, responding to market changes, and supporting business growth.

- Improved Business Agility: Cloud services enable organizations to deploy new applications and services faster. The cloud provides a more flexible and responsive IT infrastructure, allowing organizations to adapt to changing business needs more quickly. This agility can give organizations a competitive advantage.

- Enhanced Data Security and Disaster Recovery: Cloud providers offer robust security features and disaster recovery capabilities. They invest heavily in security measures, such as encryption, access controls, and threat detection. Cloud-based disaster recovery solutions can minimize downtime and data loss in the event of a disaster.

- Increased Collaboration and Productivity: Cloud services facilitate collaboration and improve productivity by providing access to data and applications from anywhere, at any time. Cloud-based collaboration tools and shared storage solutions enable teams to work together more effectively.

Assessing Your Current Data Landscape

A comprehensive assessment of the existing data environment is crucial before initiating any cloud data migration. This preliminary phase identifies the scope of the migration, potential challenges, and the optimal migration strategy. Neglecting this step can lead to unforeseen costs, project delays, and ultimately, a less-than-optimal cloud implementation. Understanding the data landscape is the cornerstone of a successful migration.

Data Discovery and Assessment Importance

Data discovery and assessment serve as the foundation for a well-planned cloud migration strategy. It involves systematically examining the current data assets, their characteristics, and their suitability for the cloud environment. This process helps in minimizing risks, optimizing resource allocation, and ensuring data integrity throughout the migration process. Failing to conduct a thorough assessment can lead to significant complications down the line.

Key Data Characteristics

Understanding the characteristics of the data is essential for determining the best migration approach. Several key attributes must be considered:

- Data Size: The total volume of data to be migrated significantly impacts the choice of migration method. Large datasets may necessitate techniques like offline migration (e.g., using physical appliances) to minimize downtime and network bandwidth costs.

- Data Structure: Data can exist in various structures, including structured (e.g., relational databases), semi-structured (e.g., JSON, XML), and unstructured (e.g., images, videos). Each structure requires a specific approach for migration and storage in the cloud. For example, relational data might migrate directly to cloud-based databases, while unstructured data could be stored in object storage services.

- Data Sensitivity: The sensitivity of the data, whether it contains personally identifiable information (PII), financial records, or other confidential data, dictates the security measures required. Encryption, access controls, and compliance considerations (e.g., GDPR, HIPAA) are paramount. For instance, data containing PII might necessitate encryption both in transit and at rest within the cloud environment.

- Frequency of Access: The frequency with which data is accessed influences storage and retrieval choices. Frequently accessed data might benefit from faster, more expensive storage tiers, while infrequently accessed data can be stored in cost-effective archival storage. For example, active customer data would likely be stored in a high-performance tier, while historical sales data could be stored in a cold storage tier.
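
As a rough sketch, the four characteristics above can be recorded per dataset and turned into coarse planning hints. The `DatasetProfile` class, the `migration_hints` function, and both threshold values are hypothetical placeholders chosen for illustration, not provider guidance.

```python
from dataclasses import dataclass


@dataclass
class DatasetProfile:
    name: str
    size_tb: float
    structure: str        # "structured", "semi-structured", or "unstructured"
    contains_pii: bool
    reads_per_day: float


def migration_hints(profile, offline_threshold_tb=50.0, hot_reads_per_day=100.0):
    """Map the data characteristics to coarse planning hints.

    Both thresholds are assumptions; tune them to the actual network
    capacity and the provider's storage pricing.
    """
    targets = {"structured": "cloud database", "unstructured": "object storage"}
    return {
        "transfer": ("offline appliance" if profile.size_tb >= offline_threshold_tb
                     else "online transfer"),
        # Semi-structured data is not mapped automatically in this sketch.
        "target": targets.get(profile.structure, "decide case by case"),
        "encryption": ("in transit and at rest" if profile.contains_pii
                       else "per default policy"),
        "tier": ("high-performance" if profile.reads_per_day >= hot_reads_per_day
                 else "archival"),
    }
```

For example, `migration_hints(DatasetProfile("customers", 0.5, "structured", True, 5000))` suggests an online transfer into a cloud database on a high-performance tier, with encryption in transit and at rest.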

Tools and Techniques for Data Readiness Assessment

Several tools and techniques facilitate the assessment of data readiness for cloud migration. These methods help in gaining a deeper understanding of the data landscape.

- Data Profiling Tools: These tools analyze data to identify characteristics such as data types, value ranges, data quality issues (e.g., missing values, inconsistencies), and potential security vulnerabilities. Examples include tools from Informatica, Talend, and AWS Glue DataBrew. The profiling results provide a comprehensive overview of data characteristics.

- Data Cataloging Tools: Data cataloging tools help in creating a centralized inventory of data assets, including metadata (e.g., data definitions, lineage, ownership). This improves data discoverability and governance. Examples include AWS Glue Data Catalog, Azure Data Catalog, and Google Cloud Data Catalog. This is particularly helpful for large, complex data environments.

- Migration Planning Tools: These tools assist in planning and simulating the migration process, including estimating costs, identifying dependencies, and assessing potential risks. Some cloud providers offer their own migration planning tools, such as AWS Migration Evaluator and Azure Migrate. These tools provide a roadmap for the migration process.

- Data Quality Assessment: Assessing data quality is essential before migration. This involves identifying and rectifying data quality issues, such as data inconsistencies, missing values, and duplicate records. Tools like OpenRefine and commercial data quality solutions are used for this purpose. This ensures the migrated data is reliable and accurate.

- Network Assessment: Evaluating network bandwidth and latency is crucial, especially for online migration methods. Tools that measure network performance help determine the feasibility of transferring data over the network. For example, if the network bandwidth is limited, offline migration methods may be more appropriate.
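
The network assessment often comes down to simple arithmetic: how long would the transfer take over the available link? The sketch below assumes a sustained bandwidth and a utilization factor (the share of the link actually available to the migration); the function name and the 80% default are illustrative assumptions.

```python
def transfer_time_hours(data_bytes, bandwidth_mbps, utilization=0.8):
    """Estimate the hours needed to move `data_bytes` over a network link.

    bandwidth_mbps: nominal link speed in megabits per second.
    utilization: fraction of the link usable by the migration (0 < u <= 1).
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    bits = data_bytes * 8
    effective_bits_per_second = bandwidth_mbps * 1_000_000 * utilization
    return bits / effective_bits_per_second / 3600


# 100 TB over a 1 Gbps link at 80% utilization
hours = transfer_time_hours(100 * 10**12, 1000)
print(f"{hours:.0f} hours, about {hours / 24:.1f} days")
```

With these inputs the estimate is roughly 278 hours, or about 11.6 days. If that exceeds the migration window, an offline transfer method becomes the more realistic option.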

Identifying Migration Objectives and Requirements

Defining clear objectives and requirements is paramount for a successful cloud data migration. This process ensures alignment between business goals and technical execution, leading to optimized outcomes and a demonstrably positive return on investment. It’s a critical step to avoid pitfalls such as cost overruns, performance degradation, and security vulnerabilities.

Business Goals Driving Cloud Data Migration

The primary business goals driving a cloud data migration vary depending on the organization’s strategic priorities. Identifying these goals early in the process informs the selection of appropriate migration strategies and technologies.

- Cost Reduction: Migrating to the cloud often aims to reduce infrastructure costs. This includes minimizing capital expenditures (CapEx) associated with on-premises hardware and operational expenditures (OpEx) related to maintenance, power, and cooling. For example, companies may achieve significant cost savings by leveraging cloud-based storage solutions, which typically offer lower costs compared to maintaining on-premises data centers. Consider the case of Netflix, which migrated its video processing and storage to AWS, realizing substantial cost reductions due to the scalability and pay-as-you-go pricing model of the cloud.

- Improved Scalability and Agility: The cloud provides inherent scalability, allowing organizations to rapidly adjust resources to meet fluctuating demands. This agility is crucial for businesses experiencing rapid growth or seasonal variations in data processing needs. Businesses can easily scale their compute and storage resources up or down as needed. For example, a retail company may need to scale its data processing capacity during peak shopping seasons.

- Enhanced Data Availability and Disaster Recovery: Cloud platforms offer disaster recovery capabilities and high availability options that support business continuity. Data is often replicated across multiple availability zones or regions, minimizing the risk of data loss due to hardware failures or natural disasters.

- Enhanced Security: Cloud providers offer advanced security features, including encryption, access controls, and threat detection. Migrating to the cloud can enhance data security when these features are configured and used correctly; for example, encrypting data at rest and in transit protects sensitive information.

- Improved Innovation and Time-to-Market: Cloud platforms offer a wide range of services and tools that accelerate innovation. This includes access to advanced analytics, machine learning, and other technologies. Businesses can rapidly deploy new applications and services.

Framework for Defining Performance, Security, and Cost Requirements

Establishing a robust framework for defining specific requirements related to performance, security, and cost is crucial for a successful migration. This framework should be data-driven and aligned with the identified business goals.

- Performance Requirements: Define performance metrics that must be met or exceeded post-migration. This includes latency, throughput, and response times. For instance, if a financial institution is migrating its transaction processing system, the target response time for a transaction might be set at under 50 milliseconds. Establish service level agreements (SLAs) with the cloud provider to ensure performance meets the required standards. Performance requirements must be clearly documented and measurable.

  - Latency: The delay before a transfer of data begins following an instruction for its transfer. Measured in milliseconds (ms).

  - Throughput: The rate at which data is successfully transferred. Measured in megabytes per second (MB/s) or gigabytes per second (GB/s).

  - Response Time: The time it takes for a system to respond to a request. Measured in milliseconds (ms) or seconds (s).

- Security Requirements: Define the security controls and policies required to protect data in the cloud. This includes access controls, encryption standards, and compliance requirements. For example, if a healthcare provider is migrating patient data, the migration must comply with HIPAA regulations, including data encryption, access controls, and audit logging. Implement multi-factor authentication (MFA) for all cloud accounts. Security requirements are often defined by regulatory compliance and industry best practices.

  - Access Controls: Policies and procedures that define who can access what data.

  - Encryption: The process of converting data into a form that cannot be read without the corresponding key.

  - Compliance: Adherence to regulatory requirements and industry standards.

- Cost Requirements: Establish a budget for the cloud migration and ongoing cloud operations. This involves estimating the costs of cloud services, data transfer, and potential migration assistance. Compare cloud provider pricing models and select the most cost-effective options. Optimize cloud resource utilization to minimize costs. For example, a company might set a budget limit of $10,000 per month for cloud storage and compute resources. Cost requirements should be aligned with the overall business goals and financial constraints.

  - Cloud Service Costs: The cost of using cloud resources, such as compute, storage, and networking.

  - Data Transfer Costs: The cost of transferring data into and out of the cloud.

  - Optimization: The process of improving resource utilization to minimize costs.
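
One way to keep these requirements documented and measurable is to record them as data and check observed values against them after a test migration. The sketch below reuses the 50 ms response time and $10,000 monthly budget from the examples above; the throughput target, the class name, and the keys of the `observed` dictionary are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MigrationRequirements:
    max_response_ms: float
    min_throughput_mb_s: float
    max_monthly_cost_usd: float
    require_encryption: bool = True
    require_mfa: bool = True


def find_violations(req, observed):
    """Return a list of requirement violations (empty if all checks pass).

    `observed` is a dict with the keys response_ms, throughput_mb_s,
    monthly_cost_usd, encryption_enabled, and mfa_enabled.
    """
    violations = []
    if observed["response_ms"] > req.max_response_ms:
        violations.append(
            f"response time {observed['response_ms']} ms exceeds {req.max_response_ms} ms")
    if observed["throughput_mb_s"] < req.min_throughput_mb_s:
        violations.append(
            f"throughput {observed['throughput_mb_s']} MB/s is below {req.min_throughput_mb_s} MB/s")
    if observed["monthly_cost_usd"] > req.max_monthly_cost_usd:
        violations.append(
            f"monthly cost ${observed['monthly_cost_usd']} exceeds ${req.max_monthly_cost_usd}")
    if req.require_encryption and not observed["encryption_enabled"]:
        violations.append("encryption is not enabled")
    if req.require_mfa and not observed["mfa_enabled"]:
        violations.append("MFA is not enabled")
    return violations


# The throughput target of 200 MB/s is a hypothetical value.
requirements = MigrationRequirements(
    max_response_ms=50, min_throughput_mb_s=200, max_monthly_cost_usd=10_000)
```

Running `find_violations` against measurements from a pilot migration gives a concrete pass or fail list that can be attached to the migration plan.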

Criteria for Selecting the Optimal Migration Method

The selection of the optimal migration method is a critical decision, influenced by the business goals and technical requirements. The following criteria should be considered:

- Data Volume and Complexity: The volume of data to be migrated and its complexity (e.g., structured, unstructured, relational, NoSQL) will influence the choice of migration method. For example, a large-scale migration involving petabytes of data might necessitate a bulk data transfer method like AWS Snowball or Azure Data Box. Consider the size and type of data when selecting the migration method.

- Downtime Tolerance: The acceptable downtime during the migration process is a key factor. Methods like “lift and shift” may result in higher downtime, while methods like database replication can minimize downtime. Consider the business impact of downtime when choosing a migration method.

- Technical Skills and Resources: The availability of internal technical skills and resources will impact the feasibility of different migration methods. Complex methods might require specialized expertise. Ensure that the team has the necessary skills and resources.

- Security and Compliance Requirements: The chosen migration method must meet the required security and compliance standards. This includes data encryption, access controls, and audit logging. Select a method that aligns with security and compliance needs.

- Cost Considerations: The cost of the migration method, including data transfer costs, cloud service costs, and potential migration assistance, should be considered. Evaluate the total cost of ownership (TCO) of each method, considering both upfront and ongoing costs.

Cloud Data Migration Methods

Choosing the appropriate cloud data migration method is critical for a successful transition, directly impacting cost, downtime, and the overall effectiveness of the migration. The optimal method is determined by factors such as the existing infrastructure, business requirements, and the desired level of modernization.

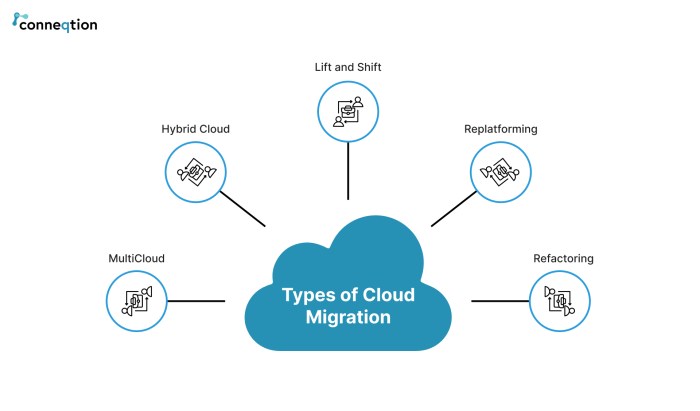

Cloud Data Migration Methods: Categorization and Differentiation

Several distinct methods exist for migrating data to the cloud. Understanding the nuances of each approach allows for a more informed decision-making process.

- Rehosting (Lift and Shift): This method involves migrating applications and data “as is” to the cloud without significant code changes. It’s the simplest and often quickest approach, focusing on moving existing workloads to a cloud environment.

- Replatforming: Replatforming involves making some modifications to the application to leverage cloud-native features, such as using a different database service or managed services. While more complex than rehosting, it offers improved performance and cost optimization.

- Refactoring (Re-architecting): This is the most comprehensive approach, involving a complete redesign of the application to take full advantage of cloud-native architectures, such as microservices. Refactoring provides the greatest benefits in terms of scalability, agility, and cost efficiency but requires the most time and resources.

- Repurchasing: Repurchasing involves replacing an existing application with a Software-as-a-Service (SaaS) solution. This is a good option when a suitable SaaS alternative is available.

- Retiring: Retiring involves decommissioning applications and data that are no longer needed. This can significantly reduce costs and simplify the migration process.

- Retaining: Retaining means leaving some applications or data on-premises. This may be necessary for regulatory reasons or when the cost of migrating is prohibitive.

The following table summarizes the key differences between these methods, including their advantages, disadvantages, and suitable scenarios.

| Migration Method | Description | Advantages | Disadvantages | Suitable Scenarios |
|---|---|---|---|---|
| Rehosting (Lift and Shift) | Migrating applications and data without code changes. | Fastest migration, minimal initial changes, reduced risk. | May not fully leverage cloud benefits, potential for increased costs if not optimized. | Quick migrations, urgent deadlines, limited budget, applications that are not easily modified. |
| Replatforming | Making some modifications to leverage cloud-native features. | Improved performance and scalability, potential for cost optimization. | More complex than rehosting, requires some application understanding. | Applications requiring improved performance, moderate budget, when some cloud services can be easily integrated. |
| Refactoring (Re-architecting) | Redesigning the application for cloud-native architectures. | Maximum cloud benefits, scalability, agility, cost efficiency. | Most complex, time-consuming, and expensive. | Applications needing significant modernization, high scalability requirements, long-term strategic goals. |
| Repurchasing | Replacing the existing application with a SaaS solution. | Reduced infrastructure management, faster time to market. | Limited customization options, potential vendor lock-in. | When a suitable SaaS alternative exists, standardized applications, limited in-house expertise. |
| Retiring | Decommissioning unused applications and data. | Cost savings, simplified infrastructure. | Requires careful assessment of data usage. | Applications no longer needed, data that is no longer relevant. |
| Retaining | Leaving applications or data on-premises. | Compliance with regulations, avoid migration costs. | May not fully leverage cloud benefits, requires maintaining on-premises infrastructure. | Regulatory requirements, latency concerns, when migration costs outweigh the benefits. |

For instance, consider a scenario where a retail company needs to migrate its e-commerce platform. If the company opts for rehosting, it would simply move the existing application and database to the cloud infrastructure. This is the quickest approach but might not fully leverage the cloud’s scalability and cost optimization features. Replatforming, in this case, could involve switching to a managed database service such as Amazon Aurora or Google Cloud SQL, improving performance and reducing operational overhead.

Refactoring would entail redesigning the entire e-commerce platform using microservices, containerization, and serverless computing, providing greater agility and scalability but requiring significantly more time and investment. The choice depends on the company’s strategic goals, budget, and the current state of their e-commerce platform.

Choosing the Right Migration Strategy

Selecting the optimal cloud data migration strategy is a multifaceted process. It hinges on a thorough understanding of the existing data environment, the defined migration objectives, and the capabilities of various migration methods. This section delves into the critical factors that influence this decision, provides real-world examples, and presents a decision-making framework to aid in the selection process.

Factors to Consider When Selecting a Migration Strategy

Several key factors must be carefully evaluated to ensure a successful cloud data migration. Each factor contributes to the overall complexity, cost, and timeline of the migration project.

- Data Volume and Velocity: The sheer volume of data and the rate at which it changes (velocity) significantly impact strategy selection. For large datasets or high-velocity data streams, strategies like bulk migration or hybrid approaches may be more appropriate. Conversely, smaller datasets might benefit from simpler, more direct methods. For example, a company migrating petabytes of archival data would necessitate a different strategy compared to a startup migrating gigabytes of transactional data.

- Downtime Tolerance: Acceptable downtime is a critical constraint. Strategies like “big bang” migrations, which involve a single, all-at-once transfer, result in extended downtime. Conversely, approaches like “trickle” or “incremental” migrations minimize downtime by transferring data in phases. Consider an e-commerce platform: minimal downtime is crucial to maintain revenue generation.

- Complexity of Data and Applications: The structure and interdependencies of the data, along with the complexity of the applications that use it, influence the choice. Complex data models and intricate application architectures might necessitate more sophisticated migration strategies. A legacy monolithic application requires a different approach than a cloud-native, microservices-based application.

- Budget and Resources: Migration costs, encompassing infrastructure, tools, and personnel, must be considered. The availability of skilled resources also plays a significant role. Some strategies are more resource-intensive than others, and the budget must align with the chosen approach. The costs can vary widely, from the use of managed services to the need for custom-built solutions.

- Security and Compliance Requirements: Data security and adherence to regulatory compliance (e.g., GDPR, HIPAA) are paramount. The migration strategy must incorporate robust security measures and comply with all relevant regulations. Secure data transfer protocols and encryption are essential considerations. For instance, migrating patient health records necessitates stringent security protocols.

- Performance Requirements: The desired performance of the migrated data and applications is a key factor. Performance considerations include data access speed, query response times, and application latency. Some strategies optimize for performance, while others may prioritize cost or downtime. For example, a high-performance database migration requires careful consideration of network bandwidth and storage I/O capabilities.

Examples of Successful Data Migration Strategies for Different Use Cases

The following examples illustrate how different migration strategies are applied in various scenarios. These examples highlight the adaptability and the need to tailor the strategy to the specific use case.

- Use Case: A large financial institution migrating its on-premises data warehouse to a cloud-based data lake.

- Strategy: A hybrid approach combining bulk data transfer (for historical data) with incremental replication (for ongoing transactions). They could initially migrate all historical data using a bulk transfer method, like AWS Snowball or Azure Data Box, to reduce network bandwidth costs and time. They would then implement a change data capture (CDC) mechanism to replicate ongoing transactions in near real time. This approach minimizes downtime and helps maintain data consistency.

- Use Case: A software-as-a-service (SaaS) company migrating its customer relationship management (CRM) system to a new cloud provider.

- Strategy: A “trickle” or “incremental” migration strategy. They could migrate customers in batches, allowing them to test and validate the migration process with a smaller subset of users before migrating the entire customer base. This reduces risk and allows for continuous operation. They would also employ data validation checks at each stage to ensure data integrity.

- Use Case: A media company migrating its video archive to a cloud object storage service.

- Strategy: A “lift and shift” or “rehost” strategy using tools that allow for automated transfer and validation. The company would utilize a tool like AWS DataSync or Azure Data Box to efficiently transfer large volumes of video files. The strategy prioritizes speed and cost-effectiveness. They might also use cloud-based transcoding services to optimize video formats for streaming after the migration.
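
The batch-by-batch approach from the CRM use case, with a validation check at each stage, can be sketched as follows. Plain dictionaries stand in for the real source and target systems, and `migrate_in_batches` and its `validate` callback are hypothetical names introduced for this illustration.

```python
def migrate_in_batches(source, target, batch_size, validate):
    """Copy records from `source` to `target` one batch at a time.

    `source` and `target` are dicts keyed by customer ID. The callback
    `validate(src_record, tgt_record)` returns True when a migrated record
    is acceptable. A failing batch is rolled back and the migration stops,
    leaving earlier batches in place.
    Returns the number of batches migrated successfully.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    ids = sorted(source)
    completed = 0
    for start in range(0, len(ids), batch_size):
        batch = ids[start:start + batch_size]
        for record_id in batch:
            target[record_id] = dict(source[record_id])  # copy, do not alias
        if not all(validate(source[i], target[i]) for i in batch):
            for record_id in batch:
                del target[record_id]  # roll back only the failing batch
            break
        completed += 1
    return completed
```

Stopping at the first failing batch keeps the blast radius small: customers already migrated continue on the new system while the failure is investigated.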

Decision-Making Matrix to Help Choose the Best Migration Strategy

A decision-making matrix can help assess the different migration strategies. The matrix evaluates various strategies based on key criteria, providing a structured approach to choosing the most suitable option. The scoring system, using a scale of 1 to 5 (1 being least suitable and 5 being most suitable), allows for a quantitative comparison.

| Migration Strategy | Data Volume & Velocity | Downtime Tolerance | Complexity of Data | Budget & Resources |
|---|---|---|---|---|
| Rehosting (Lift and Shift) | 3 | 2 | 3 | 4 |
| Replatforming | 3 | 3 | 4 | 3 |
| Refactoring | 2 | 4 | 5 | 2 |
| Repurchasing | 4 | 5 | 2 | 4 |
| Retiring | 5 | 5 | 1 | 5 |

The table is a simplified illustration with example scores. A real-world matrix would include more granular criteria and a more detailed scoring system. The matrix provides a basis for comparison, highlighting the strengths and weaknesses of each strategy based on the specific project requirements. The most suitable strategy is the one that best aligns with the organization’s priorities and constraints.

For example, if downtime tolerance is extremely low, then a strategy with a high score in that category is preferred.
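
To turn the matrix into a ranking, each criterion can be given a weight that reflects the project's priorities. The sketch below uses the illustrative scores from the table; the weights, the `rank_strategies` function, and the idea of restricting the ranking to a list of feasible candidates are assumptions added for this example.

```python
# Scores copied from the illustrative matrix (1 = least suitable, 5 = most).
CRITERIA = ("volume_velocity", "downtime", "complexity", "budget")
SCORES = {
    "Rehosting": (3, 2, 3, 4),
    "Replatforming": (3, 3, 4, 3),
    "Refactoring": (2, 4, 5, 2),
    "Repurchasing": (4, 5, 2, 4),
    "Retiring": (5, 5, 1, 5),
}


def rank_strategies(weights, candidates=None):
    """Return (strategy, weighted_score) pairs, best first.

    `weights` maps each criterion to its relative importance; weights are
    normalized, so they need not sum to 1. `candidates` limits the ranking
    to strategies that are feasible for the project.
    """
    total = sum(weights[c] for c in CRITERIA)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    names = candidates if candidates is not None else list(SCORES)
    ranked = [
        (name, sum(s * weights[c] / total for s, c in zip(SCORES[name], CRITERIA)))
        for name in names
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights for a project where downtime matters most.
weights = {"volume_velocity": 2, "downtime": 5, "complexity": 1, "budget": 2}
feasible = ["Rehosting", "Replatforming", "Refactoring"]
for name, score in rank_strategies(weights, feasible):
    print(f"{name}: {score:.1f}")
```

Restricting the candidates matters with these example scores: Retiring and Repurchasing score highly on most criteria, so they would top an unrestricted ranking even when the data must be kept or no suitable SaaS alternative exists.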

Planning the Data Migration Process

Effective planning is paramount for a successful cloud data migration. A well-defined plan mitigates risks, minimizes downtime, and ensures data integrity throughout the migration lifecycle. This section details the critical steps involved in crafting a comprehensive data migration plan, offering a pre-migration checklist, and illustrating a typical migration workflow.

Creating a Comprehensive Data Migration Plan

The data migration plan serves as a roadmap, outlining the objectives, scope, and execution strategy for the entire migration process. This plan should be a living document, subject to revisions as the project evolves. The following steps are essential in creating a comprehensive data migration plan:

- Define Scope and Objectives: Clearly articulate the goals of the migration. Determine what data will be migrated, to which cloud environment, and the desired outcomes (e.g., cost reduction, improved performance, enhanced scalability). Quantify these objectives wherever possible; for example, aiming for a specific percentage reduction in operational costs or a defined improvement in application response times.

- Assess Data and Systems: Conduct a thorough assessment of the current data landscape. This includes identifying data sources, data volumes, data formats, data dependencies, and data quality issues. Analyze the existing infrastructure, including servers, storage, and network configurations, to understand its capabilities and limitations.

- Select Migration Strategy: Based on the assessment, choose the most appropriate migration strategy (e.g., rehosting, replatforming, refactoring, or a combination thereof). The chosen strategy will significantly impact the overall plan.

- Choose Migration Tools and Technologies: Identify the tools and technologies required for the migration process. This includes data migration tools, data integration platforms, and security solutions. Consider factors such as cost, performance, and compatibility with the target cloud environment.

- Develop a Detailed Schedule: Create a realistic timeline for the migration process, including key milestones, dependencies, and deadlines. The schedule should account for data preparation, migration execution, testing, and cutover activities. Consider using project management tools to track progress and manage tasks effectively.

- Plan for Data Validation and Testing: Establish a comprehensive data validation and testing strategy to ensure data integrity after migration. Define test cases, data validation rules, and acceptance criteria. Plan for both functional and performance testing to verify that the migrated data and applications function as expected.

- Address Security and Compliance: Develop a robust security plan to protect data during migration and in the target cloud environment. Address data encryption, access controls, and compliance requirements (e.g., GDPR, HIPAA). Ensure that all security measures are aligned with organizational policies and industry best practices.

- Plan for Data Backup and Disaster Recovery: Implement a data backup and disaster recovery plan to protect against data loss during migration. This includes creating backups of both the source and target environments. Ensure that the disaster recovery plan addresses potential disruptions and provides for rapid data recovery.

- Define Roles and Responsibilities: Clearly define the roles and responsibilities of all stakeholders involved in the migration process. This includes project managers, data engineers, security specialists, and cloud administrators. Ensure that each team member understands their specific tasks and responsibilities.

- Develop a Communication Plan: Establish a communication plan to keep stakeholders informed about the progress of the migration. This includes regular status updates, issue resolution processes, and escalation procedures. Maintain transparent communication throughout the entire migration lifecycle.

Designing a Checklist for Pre-Migration Activities

A pre-migration checklist ensures that all necessary preparations are completed before the actual data migration begins. This checklist helps to minimize risks and ensure a smooth transition. The following items should be included in a pre-migration checklist:

- Data Backup: Create complete backups of all data sources. This includes full backups, incremental backups, and differential backups, depending on the chosen backup strategy and the criticality of the data. Verify the integrity of the backups to ensure they can be restored if needed.

- Security Configuration: Configure security settings in both the source and target environments. This includes setting up firewalls, access controls, and encryption. Implement multi-factor authentication for all administrative accounts. Review and update security policies to align with cloud security best practices.

- Network Configuration: Configure the network infrastructure to support data transfer between the source and target environments. This includes setting up virtual private networks (VPNs), direct connections, or other network connectivity solutions. Ensure sufficient bandwidth and network capacity to handle the data transfer volume.

- Data Cleansing and Transformation: Cleanse and transform data to meet the requirements of the target environment. This includes data validation, data deduplication, and data standardization. Apply data transformations to ensure data compatibility and consistency.

- Environment Setup: Set up the target cloud environment, including virtual machines, storage, and databases. Configure the necessary infrastructure components to support the migrated data and applications. Test the environment to ensure it meets performance and scalability requirements.

- Tool Installation and Configuration: Install and configure the data migration tools and technologies. Test the tools to ensure they function correctly and can handle the data migration tasks. Verify that the tools are compatible with both the source and target environments.

- User Training: Provide training to users on how to use the new cloud environment. This includes training on data access, data management, and application usage. Provide documentation and support resources to assist users during the transition.

- Compliance Checks: Verify that all data migration activities comply with relevant regulations and industry standards. This includes data privacy regulations, data security standards, and data governance policies. Conduct audits and assessments to ensure compliance.

- Cost Analysis: Perform a detailed cost analysis to estimate the total cost of the migration. This includes the cost of migration tools, cloud infrastructure, and labor. Optimize the migration plan to minimize costs while meeting the project objectives.
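
For the network configuration item, a rough transfer-time estimate helps decide whether the available bandwidth is sufficient. The following is a back-of-the-envelope sketch: the function name, the decimal-gigabyte convention, and the default 70% link utilization are assumptions for illustration, and real throughput depends on latency, protocol overhead, and competing traffic.

```python
def estimate_transfer_hours(data_gb, bandwidth_mbps, utilization=0.7):
    """Estimate the hours needed to move `data_gb` gigabytes.

    data_gb        -- data volume in decimal gigabytes (1 GB = 1000 MB)
    bandwidth_mbps -- nominal link speed in megabits per second
    utilization    -- fraction of the link the transfer can actually use
    """
    if data_gb < 0 or bandwidth_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("invalid volume, bandwidth, or utilization")
    data_megabits = data_gb * 8 * 1000           # gigabytes -> megabits
    effective_mbps = bandwidth_mbps * utilization
    return data_megabits / effective_mbps / 3600  # seconds -> hours


# Hypothetical case: 10 TB over a 1 Gbps link at the default utilization.
print(f"{estimate_transfer_hours(10_000, 1000):.1f} hours")
```

If the estimate exceeds the migration window, the usual options are a faster dedicated connection, an offline transfer appliance, or an incremental approach that moves the bulk of the data ahead of cutover.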

Creating a Flowchart Illustrating the Typical Data Migration Workflow

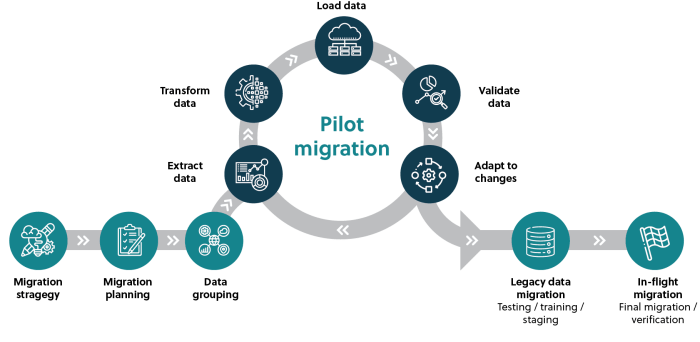

A flowchart visually represents the steps involved in the data migration process, from initiation to completion. This visual representation facilitates understanding and helps to identify potential bottlenecks or areas for optimization. The flowchart typically includes the following stages:

- Initiation: The process begins with the identification of the need for data migration, outlining the project’s scope and objectives.

- Assessment and Planning: This stage involves assessing the current data landscape, selecting a migration strategy, and developing a detailed migration plan, including timelines and resource allocation.

- Data Preparation: The data is prepared for migration, including data cleansing, transformation, and validation. Data is backed up and secured.

- Migration Execution: The actual data migration takes place, using the chosen migration tools and technologies. Data is transferred from the source to the target environment.

- Data Validation and Testing: After migration, data validation and testing are performed to ensure data integrity and application functionality. Test cases are executed, and any discrepancies are addressed.

- Cutover: The cutover process involves transitioning from the source environment to the target environment. This may involve a phased approach or a “big bang” cutover, depending on the project’s requirements.

- Post-Migration Activities: This stage includes monitoring the migrated data and applications, optimizing performance, and addressing any post-migration issues. Data backups and disaster recovery plans are updated.

- Decommissioning (Optional): Once the migration is complete and the source environment is no longer needed, it can be decommissioned.

The flowchart would visually represent these stages, with arrows indicating the flow of the process. Decision points (e.g., choosing a migration strategy) would be represented by diamond shapes, while tasks would be represented by rectangular shapes. The flowchart provides a clear and concise overview of the data migration process.

Data Migration Tools and Technologies

The success of a cloud data migration heavily relies on the appropriate selection and effective utilization of data migration tools and technologies. These tools streamline the complex processes involved in moving data, reducing downtime, minimizing risks, and ensuring data integrity. A thorough understanding of the available options and their capabilities is crucial for a successful migration strategy.

Types of Data Migration Tools

Various data migration tools cater to different needs and migration strategies. These tools can be broadly categorized based on their functionality and the specific aspects of data migration they address. Selecting the right tool depends on factors such as data volume, source and target systems, required downtime, and budget constraints.

- Database Migration Services: These services, often provided by cloud providers, are designed to migrate entire databases with minimal downtime. They typically support a wide range of source and target database systems, including relational and NoSQL databases. These services automate much of the migration process, including schema conversion, data transfer, and validation. For instance, AWS Database Migration Service (AWS DMS) supports migrations between various database engines, including Oracle, MySQL, PostgreSQL, and others. Google Cloud’s Database Migration Service and Azure Database Migration Service offer similar functionality. These services generally employ a combination of online and offline migration strategies, often using change data capture (CDC) techniques to minimize downtime during the migration process.

- Data Replication Tools: Data replication tools focus on continuously replicating data from a source system to a target system. They are particularly useful for migrations that require minimal downtime or for maintaining data synchronization between on-premises and cloud environments. These tools capture changes in the source database and apply them to the target database in near real-time. Examples include tools like Qlik Replicate (formerly Attunity), HVR, and Fivetran. These tools typically offer features like conflict resolution, data transformation, and monitoring capabilities. Replication tools are often employed in hybrid cloud scenarios where data needs to be synchronized between on-premises and cloud-based databases.

- ETL (Extract, Transform, Load) Tools: ETL tools are used to extract data from various sources, transform it according to the target system’s requirements, and load it into the cloud data warehouse or database. These tools offer a wide range of data transformation capabilities, including data cleansing, data aggregation, and data enrichment. Popular ETL tools include Informatica PowerCenter, Talend, and AWS Glue. ETL tools are well-suited for migrating data that requires significant transformation before being loaded into the cloud. They provide a robust framework for handling complex data transformations and ensuring data quality.

- Data Synchronization Tools: Data synchronization tools facilitate the ongoing synchronization of data between different systems or environments. They are particularly relevant for migrations where data needs to be kept consistent across multiple locations. These tools typically employ techniques like bi-directional synchronization, conflict resolution, and version control. Examples include cloud-based file synchronization services like Dropbox or Box, which can be used to migrate and synchronize files. Other tools focus on synchronizing databases and other data stores.

- Specialized Migration Tools: Some tools are designed for migrating specific types of data or applications. For instance, there are tools specifically for migrating virtual machines (VMs), applications, or specific file formats. These tools often provide specialized features and functionalities to handle the unique challenges associated with migrating specific data types. Examples include tools for migrating virtual machines to cloud environments or for migrating large files.
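
The change data capture idea behind replication tools and database migration services can be shown with a toy model: take an initial snapshot, then replay an ordered stream of change events against the target. This is a conceptual sketch only. The event format (`op`, `key`, `row`) and the in-memory dictionary standing in for the target table are assumptions; real tools read changes from the database transaction log and handle ordering, retries, and schema changes.

```python
def apply_changes(target, changes):
    """Replay ordered change events onto `target`, a dict keyed by primary key."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Merge the changed columns into any existing row.
            target[key] = {**target.get(key, {}), **change["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Initial snapshot copied during the full load (hypothetical data).
target_table = {1: {"name": "Ada", "tier": "free"}}

# Changes captured on the source while the full load was running.
captured = [
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "free"}},
    {"op": "update", "key": 1, "row": {"tier": "paid"}},
    {"op": "delete", "key": 2},
]
print(apply_changes(target_table, captured))
```

Because the events are applied in the order they occurred, the target converges on the source state, which is what allows cutover with only a brief pause in writes.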

Criteria for Evaluating Data Migration Tools

Choosing the right data migration tool involves evaluating several factors to ensure it meets the specific requirements of the migration project. A systematic evaluation process helps identify the tool that best aligns with the project’s objectives and constraints.

- Data Volume and Complexity: Assess the volume of data to be migrated and the complexity of the data structures. Tools that can handle large datasets and complex data transformations are essential for large-scale migrations. Consider the data types, relationships, and any necessary data cleansing or transformation processes.

- Source and Target System Compatibility: Ensure the tool supports the source and target systems involved in the migration. Compatibility with the database engines, operating systems, and cloud platforms is crucial. Verify that the tool supports the required data formats and protocols.

- Migration Strategy Support: Determine the migration strategy (e.g., lift and shift, re-platform, re-architect) and select a tool that aligns with the chosen strategy. Some tools are better suited for certain strategies than others. For example, database migration services are well-suited for lift-and-shift migrations, while ETL tools are often used for re-platforming or re-architecting.

- Downtime Requirements: Evaluate the acceptable downtime for the migration. Some tools offer features like online migration and change data capture (CDC) to minimize downtime. Consider the impact of downtime on business operations and select a tool that meets the required availability.

- Data Transformation Capabilities: Assess the need for data transformation and the tool’s capabilities. Consider data cleansing, data aggregation, data enrichment, and schema conversion requirements. Select a tool that provides the necessary transformation functionalities.

- Security and Compliance: Ensure the tool meets security and compliance requirements. Consider data encryption, access control, and audit logging features. Verify that the tool complies with relevant industry regulations and data privacy standards.

- Performance and Scalability: Evaluate the tool’s performance and scalability. Consider the data transfer speed, processing capacity, and ability to handle increasing data volumes. Select a tool that can meet the performance requirements of the migration.

- Cost and Licensing: Evaluate the tool’s cost, including licensing fees, maintenance costs, and any associated cloud infrastructure costs. Compare the total cost of ownership (TCO) for different tools and select the one that provides the best value for the investment.

- Ease of Use and Management: Consider the tool’s ease of use, including the user interface, documentation, and support resources. Evaluate the tool’s management capabilities, including monitoring, logging, and error handling.

- Vendor Support and Community: Assess the vendor’s support and the availability of community resources. Consider the level of technical support, documentation, and training provided by the vendor. Look for a tool with an active user community.

Demonstration of a Specific Data Migration Tool: AWS Database Migration Service (DMS)

AWS Database Migration Service (DMS) is a cloud service that helps migrate databases to AWS quickly and securely. It supports migrations between a wide variety of database engines. This section demonstrates a simplified example of using AWS DMS to migrate data from an on-premises MySQL database to an Amazon RDS for MySQL instance. This example focuses on the essential steps involved in the migration process, offering a practical illustration of how to leverage AWS DMS for data migration.

- Prerequisites: Before starting, ensure the following are in place:

  - An AWS account.

  - An on-premises MySQL database with appropriate network connectivity.

  - An Amazon RDS for MySQL instance.

  - Sufficient IAM permissions for the AWS DMS service.

- Creating a Replication Instance:

  - Log in to the AWS Management Console and navigate to the DMS service.

  - Create a new replication instance. Specify the instance name, instance class, and the VPC settings. The replication instance acts as a server that runs the data migration tasks. Choose a replication instance class based on the size of your database and the expected data transfer rate.

  - Configure the VPC settings. Ensure that the replication instance is in the same VPC as the target RDS instance and can access the source MySQL database.

  - Assign the replication instance a security group that allows outbound connections to both the source MySQL database and the target RDS instance. The source firewall and the RDS security group must in turn accept inbound connections from the replication instance.

- Creating Source and Target Endpoints:

  - Create a source endpoint to connect to the on-premises MySQL database. Provide the database endpoint, port, username, password, and database name.

  - Test the connection to ensure that AWS DMS can successfully connect to the source database.

  - Create a target endpoint to connect to the Amazon RDS for MySQL instance. Provide the database endpoint, port, username, password, and database name.

  - Test the connection to ensure that AWS DMS can successfully connect to the target database.

- Creating a Migration Task:

  - Create a new migration task. Specify the task name, replication instance, source endpoint, and target endpoint.

  - Choose the migration type. Options include:

    - Migrate existing data only: Transfers all existing data from the source to the target.

    - Migrate existing data and replicate ongoing changes: Transfers existing data and replicates ongoing changes using CDC.

    - Replicate data changes only: Replicates ongoing changes from the source to the target.

    For a full migration with minimal downtime, select “Migrate existing data and replicate ongoing changes”.

  - Configure the task settings, including the table mappings. Specify the tables to migrate and any necessary transformations. You can also use filters to include or exclude specific tables or columns.

  - Configure the task settings for logging, error handling, and any other advanced options.

- Starting the Migration Task:

  - Start the migration task. AWS DMS will begin transferring the data from the source MySQL database to the target RDS for MySQL instance.

  - Monitor the progress of the migration task. You can view the status, logs, and any errors.

  - If you chose “Migrate existing data and replicate ongoing changes”, DMS will first copy the existing data and then start replicating ongoing changes.

  - After the initial load is complete and ongoing replication has caught up with the source, you can switch applications over to the target database.

- Verifying the Migration:

  - After the migration task is complete, verify that the data has been successfully migrated to the target database. Compare the data in the source and target databases to ensure consistency.

  - Check for any errors or warnings in the DMS task logs.

  - Perform functional testing to ensure that the migrated data is working as expected in the target database.
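
The table mappings mentioned in the migration task step are supplied to DMS as a JSON document of rules. The sketch below builds such a document with selection rules that include specific tables. It is a hedged illustration: the field names follow the selection-rule format as documented by AWS and should be checked against the current DMS documentation, while the helper `build_table_mappings`, the schema name `shop`, and the table names are hypothetical.

```python
import json

# API identifiers corresponding to the three console options described above.
MIGRATION_TYPES = {
    "Migrate existing data only": "full-load",
    "Migrate existing data and replicate ongoing changes": "full-load-and-cdc",
    "Replicate data changes only": "cdc",
}


def build_table_mappings(schema, tables):
    """Return a JSON string of selection rules including the given tables."""
    rules = []
    for index, table in enumerate(tables, start=1):
        rules.append(
            {
                "rule-type": "selection",
                "rule-id": str(index),    # must be unique within the document
                "rule-name": str(index),  # must be unique within the document
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        )
    return json.dumps({"rules": rules}, indent=2)


# Hypothetical schema and tables; "%" can be used as a wildcard table name.
print(build_table_mappings("shop", ["customers", "orders"]))
```

The resulting string is what would be pasted into the console's JSON editor or passed as the table-mappings argument when creating the task through the API or CLI.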

Ensuring Data Security and Compliance

Data migration presents significant security and compliance challenges. Organizations must prioritize protecting sensitive data throughout the migration process to prevent data breaches, maintain regulatory compliance, and preserve customer trust. A comprehensive approach, encompassing data encryption, access controls, and adherence to relevant regulations, is essential for a successful and secure cloud data migration.

Security Considerations During Data Migration

Several security considerations are paramount during data migration. These considerations span the entire lifecycle of the migration, from planning to execution and post-migration validation. Neglecting these aspects can expose an organization to significant risks, including data loss, unauthorized access, and non-compliance with legal and regulatory requirements.

- Data at Rest Encryption: Protecting data stored in the source environment, in any intermediate staging storage, and in the target cloud environment. Encryption ensures that even if data is compromised, it remains unreadable without the appropriate decryption keys.

- Data in Transit Encryption: Employing Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), to encrypt data as it moves between the source, the migration tools, and the cloud environment. This prevents eavesdropping and data interception.

- Access Control and Authentication: Implementing robust access controls, including multi-factor authentication (MFA), to restrict access to data and migration tools. This prevents unauthorized users from accessing or modifying sensitive data.

- Data Integrity Checks: Verifying data integrity throughout the migration process. This involves using checksums or hashing algorithms to ensure data has not been altered during transfer.

- Monitoring and Auditing: Continuously monitoring the migration process for any unusual activity and establishing comprehensive audit trails to track data access, modifications, and migrations.

- Vulnerability Assessments and Penetration Testing: Conducting regular vulnerability assessments and penetration testing to identify and address potential security weaknesses in the migration process and the target cloud environment.

- Secure Data Deletion: Ensuring data is securely deleted from the source environment after successful migration. This involves using data sanitization techniques to prevent data recovery.
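
The data integrity check listed above can be implemented with a cryptographic hash computed on both sides of the transfer. The following is a minimal sketch using Python's standard `hashlib` module; the function names and the 1 MiB chunk size are choices made for this illustration.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Reading in chunks keeps memory use flat for very large files.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def files_match(source_path, target_path):
    """True when both files have identical SHA-256 digests."""
    return sha256_of_file(source_path) == sha256_of_file(target_path)
```

In practice the source digest is usually recorded in a manifest before transfer and compared against a digest computed in the target environment, so the two files never need to be on the same machine.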

Data Encryption and Access Control Mechanisms

Data encryption and access control are fundamental security mechanisms for protecting data during cloud data migration. These mechanisms work in tandem to safeguard data confidentiality, integrity, and availability. Implementing these controls is critical for mitigating risks and ensuring a secure migration.

- Data Encryption Methods:

  - Symmetric Encryption: Uses a single secret key for both encryption and decryption. Algorithms like Advanced Encryption Standard (AES) are commonly used. AES-256 offers a high level of security.

  - Asymmetric Encryption: Uses a pair of keys: a public key for encryption and a private key for decryption. Rivest–Shamir–Adleman (RSA) is a widely used asymmetric encryption algorithm.

- Access Control Mechanisms:

  - Role-Based Access Control (RBAC): Assigns permissions based on user roles, simplifying access management.

  - Attribute-Based Access Control (ABAC): Defines access based on attributes of the user, the data, and the environment, providing more granular control.

  - Identity and Access Management (IAM) Systems: Centralized systems for managing user identities, authentication, and authorization.

  - Multi-Factor Authentication (MFA): Requires users to provide multiple forms of authentication, increasing security.

- Encryption in Practice: Consider a scenario where an organization migrates sensitive customer data to a cloud platform. Before migration, the data is encrypted using AES-256. During transit, the data is secured using TLS. In the cloud, RBAC is implemented to control access to the data, ensuring only authorized personnel can view it.
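
The RBAC part of the scenario above reduces to a lookup from roles to permitted actions. The sketch below is a deliberately small model of that idea; the role names, the permission sets, and the `is_allowed` helper are hypothetical, and a real deployment would rely on the cloud provider's IAM service rather than application code.

```python
# Hypothetical roles for a migration project and the actions each may perform.
ROLE_PERMISSIONS = {
    "migration_engineer": {"read", "write"},
    "auditor": {"read"},
    "support_agent": set(),
}


def is_allowed(user_roles, action):
    """Return True if any of the user's roles grants the requested action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["auditor"], "read"))   # an auditor may view the data
print(is_allowed(["auditor"], "write"))  # but may not modify it
```

Unknown roles grant nothing, so the check denies by default, which is the safer failure mode for sensitive customer data.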

Best Practices for Ensuring Compliance with Relevant Regulations

Compliance with relevant regulations is a critical aspect of secure cloud data migration. Organizations must understand and adhere to the specific requirements of regulations such as GDPR, HIPAA, and others, depending on their industry and geographic location. Non-compliance can lead to significant penalties and reputational damage.

- GDPR (General Data Protection Regulation):

  - Data Minimization: Collect and process only the data necessary for the specified purpose.

  - Data Subject Rights: Provide individuals with control over their data, including the right to access, rectify, and erase their data.

  - Data Breach Notification: Report personal data breaches to the relevant supervisory authority within 72 hours of becoming aware of them.

  - Example: A company migrating customer data from the EU must ensure the data is processed in compliance with GDPR, including having a lawful basis for processing (such as consent) and honoring individuals’ right to erasure (the “right to be forgotten”).

- HIPAA (Health Insurance Portability and Accountability Act):

  - Protected Health Information (PHI) Protection: Implement security measures to protect the confidentiality, integrity, and availability of PHI.

  - Business Associate Agreements (BAA): Ensure that any cloud service providers sign a BAA that outlines their responsibilities for protecting PHI.

  - Example: A healthcare provider migrating patient data to the cloud must ensure that the data is encrypted, access is strictly controlled, and the cloud provider has signed a BAA.

- Other Regulations:

  - CCPA (California Consumer Privacy Act): Similar to GDPR, CCPA grants California residents rights regarding their personal data.

  - Industry-Specific Regulations: Publicly traded companies (e.g., SOX), cloud services used by US federal agencies (e.g., FedRAMP), and other sectors have specific regulatory requirements.

- Best Practices:

  - Data Mapping: Identify and map all data elements to understand their sensitivity and regulatory requirements.

  - Data Governance: Establish clear data governance policies and procedures.

  - Vendor Management: Carefully select and manage cloud service providers to ensure compliance.

  - Regular Audits: Conduct regular audits to verify compliance.

Post-Migration Validation and Optimization

Post-migration validation and optimization are critical phases ensuring the integrity, performance, and cost-effectiveness of data residing in the cloud. These steps confirm the successful transfer of data, identify potential performance bottlenecks, and enable continuous improvement. Neglecting these phases can lead to data loss, performance degradation, and unnecessary cloud expenses.

Validating Migrated Data

Validating the migrated data involves a comprehensive process to ensure data accuracy, completeness, and consistency following the migration. This process requires meticulous planning and execution to guarantee the data’s usability and reliability.

- Data Integrity Checks: Implementing checks to verify data integrity is essential. This involves comparing data characteristics between the source and target environments. For instance, checksums (e.g., MD5, SHA-256) can be calculated for files or datasets before and after migration to ensure no data corruption occurred during the transfer. MD5 is adequate for detecting accidental corruption, while SHA-256 is preferable where deliberate tampering is a concern. Similarly, comparing the total number of records, data types, and data ranges can reveal inconsistencies.

- Data Completeness Verification: Confirming that all data has been successfully migrated is a key aspect of validation. This includes comparing the number of files, tables, or records between the source and target systems. For example, if migrating a customer database, verify that the total number of customer records in the cloud environment matches the number in the on-premises database. This process often uses automated scripts or tools that compare metadata and perform row counts.

- Data Consistency Assessment: Data consistency is verified by examining the relationships and referential integrity between different datasets. This often includes performing joins, queries, and cross-validations across different tables or datasets to ensure that relationships between data points are maintained. For instance, verifying that foreign key relationships in a relational database are valid after migration.

- Functional Testing: Functional testing involves validating the data’s usability by executing queries, reports, and other data-driven operations. This process tests the migrated data’s functionality by simulating real-world use cases. For example, if migrating a financial system, functional tests might involve generating financial reports and comparing them with reports generated from the source system.

- Performance Testing: Performance testing is used to assess the speed and efficiency of data access and processing in the cloud environment. This involves running benchmark queries and evaluating response times. This might include measuring the time taken to execute complex SQL queries or the speed at which data is retrieved from a cloud data warehouse.

- User Acceptance Testing (UAT): User Acceptance Testing (UAT) involves having end-users validate the migrated data and the applications that utilize it. End-users test the data and applications to ensure that they meet business requirements and that the data is functioning correctly. This is particularly important for business-critical applications where data accuracy is paramount.
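
The completeness and consistency checks described above are often scripted. The sketch below compares row counts between a source and a target database and counts child rows whose foreign key points at a missing parent. It uses Python's built-in `sqlite3` module purely so the example is self-contained; the `customers` and `orders` tables, their columns, and the helper names are assumptions, and the same queries would be run through the appropriate driver for the real databases.

```python
import sqlite3


def row_counts(conn, tables):
    """Return {table: row count}. Table names must come from a trusted list."""
    return {
        table: conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        for table in tables
    }


def count_mismatches(source, target, tables):
    """Return {table: (source count, target count)} for tables that differ."""
    src, tgt = row_counts(source, tables), row_counts(target, tables)
    return {t: (src[t], tgt[t]) for t in tables if src[t] != tgt[t]}


def orphaned_orders(conn):
    """Count orders whose customer_id has no matching customers row."""
    query = """
        SELECT COUNT(*)
        FROM orders AS o
        LEFT JOIN customers AS c ON o.customer_id = c.id
        WHERE c.id IS NULL
    """
    return conn.execute(query).fetchone()[0]
```

An empty result from `count_mismatches` and a zero from `orphaned_orders` are necessary but not sufficient signs of a clean migration; they are typically combined with checksums and functional tests.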

Monitoring Performance of Migrated Data

Monitoring the performance of migrated data is an ongoing process that tracks data access, query performance, and system resource utilization in the cloud environment. This continuous monitoring ensures optimal performance and identifies potential issues before they impact business operations.

- Establish Baseline Performance Metrics: Before monitoring, establishing baseline performance metrics is crucial. These baselines serve as a reference point for evaluating performance changes over time. The metrics include query execution times, data access latency, storage I/O operations, and network throughput.

- Implement Performance Monitoring Tools: Cloud providers offer native monitoring tools (e.g., Amazon CloudWatch, Azure Monitor, Google Cloud Monitoring) that provide real-time insights into the performance of cloud resources. These tools monitor CPU utilization, memory usage, disk I/O, and network traffic. In addition to provider-specific tools, third-party monitoring solutions provide enhanced features like customizable dashboards and automated alerts.

- Monitor Key Performance Indicators (KPIs): Define and monitor key performance indicators (KPIs) relevant to the data workload. For instance, in a data warehouse, KPIs could include query execution time, the number of queries per minute, and the success rate of queries. For an object storage service, relevant KPIs might be the number of objects accessed per second, the latency of object retrieval, and the data transfer rate.

- Set Up Alerting and Notifications: Implement alerts and notifications to proactively identify performance issues. Configure alerts based on predefined thresholds for KPIs. For example, if query execution time exceeds a certain threshold, the system should trigger an alert. These alerts notify administrators of potential problems, enabling timely investigation and remediation.

- Analyze Performance Trends: Regularly analyze performance data to identify trends and patterns. Reviewing historical data helps to understand how performance changes over time. Analyzing these trends can identify the root causes of performance degradation, such as inefficient queries, inadequate resources, or network bottlenecks.

- Optimize Query Performance: Query optimization is a critical part of performance monitoring. Analyze slow-running queries and optimize them. This might involve rewriting queries, adding indexes, or optimizing data structures. For example, adding indexes to frequently queried columns in a database can significantly improve query performance.

- Optimize Data Storage and Access Patterns: Monitoring also involves analyzing data access patterns and optimizing data storage accordingly. For example, data that is frequently accessed should be stored in a higher-performance storage tier, which reduces access latency for the queries that depend on it.
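
The baseline and alerting steps above can be combined into a simple threshold rule: flag a measurement when it exceeds the baseline mean by more than a chosen number of standard deviations. The sketch below is one possible rule among many; the function name, the three-sigma default, and the sample latencies are assumptions for illustration, and managed monitoring services offer their own alarm definitions.

```python
from statistics import mean, stdev


def is_anomalous(baseline_ms, latest_ms, sigmas=3.0):
    """Return True if `latest_ms` exceeds mean + sigmas * stdev of the baseline."""
    if len(baseline_ms) < 2:
        raise ValueError("need at least two baseline samples")
    threshold = mean(baseline_ms) + sigmas * stdev(baseline_ms)
    return latest_ms > threshold


# Hypothetical query latencies (milliseconds) recorded right after migration.
baseline = [100, 110, 90, 105, 95]
print(is_anomalous(baseline, 150))  # well above the baseline spread
print(is_anomalous(baseline, 120))  # within normal variation
```

A rule like this only works if the baseline is refreshed periodically; otherwise legitimate growth in data volume will eventually trigger constant alerts.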

Optimizing Cloud Data Storage and Costs After Migration

Optimizing cloud data storage and costs post-migration is essential for achieving cost-efficiency and maximizing the return on investment (ROI) of cloud data infrastructure. This process involves selecting the appropriate storage tiers, implementing data lifecycle management policies, and continuously monitoring and adjusting resource utilization.

- Choose the Right Storage Tiers: Cloud providers offer different storage tiers optimized for various use cases. Selecting the right storage tier based on data access frequency and performance requirements is crucial. For example, frequently accessed data should be stored in a high-performance tier (e.g., SSD-backed storage), while less frequently accessed data can be stored in a lower-cost tier (e.g., cold storage or archive storage).

- Implement Data Lifecycle Management: Data lifecycle management involves automating the movement of data between different storage tiers based on its age and access frequency. For example, data that is rarely accessed can be automatically moved to a cheaper storage tier to reduce costs. Most cloud providers offer lifecycle policies or rules that apply these transitions automatically.

- Optimize Data Compression and Deduplication: Employing data compression and deduplication techniques can reduce the amount of storage space required, thereby reducing costs. Data compression reduces the size of data files by encoding data more efficiently. Data deduplication eliminates redundant data copies, storing only one copy of identical data blocks.

- Right-size Compute Resources: Monitor compute resource utilization and right-size compute instances to match workload demands. Over-provisioning leads to unnecessary costs, while under-provisioning can lead to performance issues. Regularly reassess compute requirements and scale up or down accordingly.

- Use Reserved Instances and Spot Instances: Cloud providers offer cost-saving options like reserved instances and spot instances. Reserved instances provide significant discounts compared to on-demand pricing in exchange for committing to a usage period. Spot instances offer even lower prices but can be terminated by the provider if demand increases, so they are best suited to fault-tolerant workloads that can be interrupted.

- Monitor Storage Usage and Costs: Regularly monitor storage usage and costs using the cloud provider’s monitoring tools. Identify storage volumes that are underutilized or overutilized. Implement cost allocation tags to track the cost of different data sets and applications. This allows for a granular understanding of where costs are incurred.

- Optimize Data Retention Policies: Review and optimize data retention policies to reduce storage costs. Define how long data needs to be stored based on business and regulatory requirements. Consider deleting or archiving data that is no longer needed.

- Automate Cost Optimization: Implement automated cost optimization processes. This includes using cloud provider tools to automatically scale resources, move data between storage tiers, and identify cost-saving opportunities.
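To make the tiering and lifecycle ideas above concrete, the following sketch assigns each dataset to a tier based on days since last access and compares the estimated monthly storage bill with an all-hot baseline. Everything specific here is an assumption for illustration: the tier names, the per-GB prices, the 30-day and 180-day cutoffs, and the dataset inventory are made up and do not reflect any provider's actual pricing.

```python
from dataclasses import dataclass

# Hypothetical tier names and prices in USD per GB-month (illustrative only).
TIER_PRICE = {"hot": 0.020, "cool": 0.010, "archive": 0.002}


@dataclass(frozen=True)
class Dataset:
    name: str
    size_gb: float
    days_since_access: int


def choose_tier(days_since_access, cool_after=30, archive_after=180):
    """Pick a tier from access recency; the cutoffs are assumed policy values."""
    if days_since_access >= archive_after:
        return "archive"
    if days_since_access >= cool_after:
        return "cool"
    return "hot"


def monthly_cost(datasets, tier_of):
    """Sum storage cost using tier_of(dataset) to select each dataset's tier."""
    return sum(d.size_gb * TIER_PRICE[tier_of(d)] for d in datasets)


# Hypothetical inventory gathered from storage usage monitoring.
inventory = [
    Dataset("orders_current", 500, 3),
    Dataset("logs_last_quarter", 2_000, 90),
    Dataset("backups_old", 10_000, 400),
]

all_hot = monthly_cost(inventory, lambda d: "hot")
tiered = monthly_cost(inventory, lambda d: choose_tier(d.days_since_access))

print(f"All hot: ${all_hot:.2f}/month")
print(f"Tiered:  ${tiered:.2f}/month")
```

The estimate covers storage charges only. Colder tiers often carry retrieval fees and minimum storage durations, so a fuller model would account for expected read volume before moving data.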

Conclusion

Selecting the right cloud data migration method is a multifaceted process that requires a thorough understanding of the data, business objectives, and available technologies. By carefully assessing the data landscape, defining clear migration goals, and choosing the appropriate strategy, organizations can successfully navigate the complexities of cloud migration. Through meticulous planning, robust security measures, and post-migration optimization, businesses can unlock the full potential of the cloud, driving innovation, efficiency, and cost savings.

FAQ Insights

What are the primary benefits of cloud data migration?

Cloud data migration offers several advantages, including improved scalability, enhanced data accessibility, reduced IT costs, increased data security, and better business continuity and disaster recovery capabilities.

What are the potential risks associated with cloud data migration?

Potential risks include data loss or corruption, security breaches, downtime during migration, unexpected costs, and compatibility issues between on-premises and cloud systems. Thorough planning and the right tools can mitigate these risks.

How long does a typical cloud data migration project take?

The duration of a cloud data migration project varies widely depending on factors such as data volume, complexity of the data structure, and the chosen migration method. Simple migrations may take weeks, while complex projects can span several months.

What role does data backup play in the migration process?

Data backup is a critical pre-migration step. It ensures that a copy of the data exists in case of errors or data loss during the migration process. Backups also provide a means to revert to the original state if the migration fails.

What is the difference between a ‘lift and shift’ and a ‘replatforming’ migration strategy?

A ‘lift and shift’ (rehosting) strategy involves moving applications and data to the cloud with minimal changes. Replatforming, on the other hand, involves making some modifications to the applications to leverage cloud-native services and optimize performance.