Premature optimization, a common pitfall in software development, often leads to wasted effort and delays. This guide explains how to recognize and mitigate this anti-pattern. We'll explore why optimizing code before the real performance bottlenecks are known can be detrimental, and how a methodical, measurement-driven approach yields better results.

By understanding the characteristics of premature optimization and the value of profiling and thorough testing, you can avoid falling into this trap. The guide also highlights the importance of realistic performance testing and the role of refactoring and code reviews in delivering meaningful performance improvements without sacrificing project timelines.

Understanding Premature Optimization

Premature optimization often leads to wasted time and effort, so it is worth understanding its characteristics and drawbacks in order to avoid it. A well-structured approach prioritizes clarity and maintainability, and pursues performance gains only where measurements show they are needed. This section explains the concept of premature optimization, its detrimental effects, and how to identify situations where it might be a concern.

We will also compare it to a thoughtful, measured optimization approach.

Definition of Premature Optimization

Premature optimization is the practice of optimizing code or algorithms before sufficient data or profiling indicates the need for optimization. It’s characterized by a focus on potential performance gains over demonstrable performance issues. This often leads to over-engineering and unnecessary complexity.

Potential Downsides of Premature Optimization

Premature optimization can significantly hinder the development process in several ways. It often leads to:

- Wasted effort: Resources are spent on optimizations that don’t significantly impact performance. This can lead to frustration and a delay in the project timeline.

- Reduced code readability and maintainability: Overly optimized code can become difficult to understand and modify, making future maintenance a significant challenge. This can impact team collaboration and project longevity.

- Increased complexity: Unnecessary optimizations can introduce complexity into the codebase, making it more prone to errors and harder to debug.

- Missed opportunities for significant gains: Focusing on marginal optimizations can distract from areas where more substantial performance improvements can be achieved. This could involve examining architecture or using more appropriate algorithms.

Common Scenarios of Premature Optimization

Several common scenarios illustrate the pitfalls of premature optimization:

- Using complex algorithms before profiling: Employing sophisticated algorithms without first analyzing the performance of simpler alternatives can be unproductive. The simplest approach may be adequate and should be assessed first.

- Optimizing for edge cases that rarely occur: Concentrating on uncommon or infrequent scenarios may not yield significant benefits. Resources should be allocated to the most frequent use cases first.

- Choosing highly specialized data structures before understanding usage patterns: Selecting a complex data structure without understanding how the data will be accessed and manipulated can lead to inefficiency. A simple data structure might suffice.

- Optimizing code without having any performance problems: Code that operates adequately and within acceptable time frames does not require optimization. This is a prime example of premature optimization.

Identifying Situations Prone to Premature Optimization

Recognizing situations where premature optimization is likely to occur is crucial. Consider these indicators:

- Lack of performance testing and profiling: Without performance data, optimization efforts are speculative. Profiling tools and benchmarks are crucial for identifying actual bottlenecks.

- Focusing on theoretical performance gains over measured results: Theoretical gains might not translate into practical benefits. Measurements are critical for making informed decisions.

- Ignoring the potential impact of other factors: Performance can be impacted by external factors such as hardware or the input data itself. Optimizing code without accounting for these factors can be ineffective.

Comparison: Premature Optimization vs. Thoughtful Optimization

| Characteristic | Premature Optimization | Thoughtful Optimization |
|---|---|---|
| Focus | Potential performance gains, often speculative | Measured performance bottlenecks, data-driven approach |
| Approach | Over-engineering, complex solutions | Incremental, iterative improvements |
| Methodology | Guessing, intuition | Profiling, benchmarking, analysis |
| Result | Increased complexity, wasted effort | Improved performance, maintainable code |

Recognizing the Anti-Pattern

Premature optimization wastes effort and reduces project efficiency, so understanding its effects is important for project management and resource allocation. This section examines the negative consequences of this anti-pattern, provides concrete examples, and contrasts it with a methodical approach to performance tuning. The underlying trap is the tendency to optimize code before the performance bottlenecks are understood.

This often results in focusing on areas that don’t significantly impact overall performance while overlooking critical performance issues. A methodical approach, in contrast, prioritizes identifying the actual performance problems and implementing targeted solutions.

Negative Impact on Project Timelines and Resource Allocation

Premature optimization frequently diverts valuable development time and resources. Teams may spend considerable effort optimizing code that is not causing performance problems, leading to delays in delivering core functionalities. This misallocation can result in project deadlines being missed, budget overruns, and a reduction in the overall quality of the final product. The focus shifts from essential features to unnecessary micro-optimizations.

Specific Code Examples Demonstrating the Anti-Pattern

Consider a scenario where a developer, without profiling the application, implements a caching strategy for frequently accessed data. Although caching is beneficial in theory, it may be unnecessary if the application's bottlenecks lie elsewhere, such as in database queries or network latency. A second example is replacing a simple loop with a complex, highly tuned algorithm before evaluating how the original, seemingly less efficient code actually performs.

This is especially problematic when the original code functions correctly and reliably.
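As a hypothetical illustration of the first scenario, the sketch below adds a memoization cache to a lookup function before any profiling has been done. The names `fetchUserRecord`, `getUserDisplayName`, and `getUserDisplayNameCached` are invented for this example. The point is that the cached version adds state, an unbounded map, and a staleness problem, whether or not this function was ever a bottleneck.

```javascript
// Hypothetical data source standing in for a database or service call.
const users = new Map([
  [1, { id: 1, first: "Ada", last: "Lovelace" }],
  [2, { id: 2, first: "Alan", last: "Turing" }],
]);

function fetchUserRecord(id) {
  return users.get(id);
}

// Straightforward version: easy to read, always returns current data.
function getUserDisplayName(id) {
  const user = fetchUserRecord(id);
  return user ? `${user.first} ${user.last}` : "Unknown user";
}

// Premature version: a cache added "just in case", without profiling.
// It grows without bound and keeps returning stale names after a record changes.
const displayNameCache = new Map();

function getUserDisplayNameCached(id) {
  if (displayNameCache.has(id)) {
    return displayNameCache.get(id);
  }
  const name = getUserDisplayName(id);
  displayNameCache.set(id, name);
  return name;
}
```

If a record is updated after the first cached call, the cached function keeps returning the old name until someone remembers to invalidate the cache. That extra complexity is only worth taking on once profiling shows this lookup actually matters.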

Comparison of Premature Optimization and Methodical Performance Tuning

Premature optimization prioritizes speculative efficiency gains over correctness, clarity, and delivered functionality. In contrast, a methodical approach first identifies performance bottlenecks using profiling tools and performance monitoring. This data-driven strategy pinpoints the areas that require optimization, so resources go where they have the most impact.

Common Misconceptions Surrounding Performance Optimization

A prevalent misconception is that complex, optimized algorithms are inherently superior to simpler, readily understandable ones. Often the initial, straightforward implementation performs adequately, and optimizing it unnecessarily produces a more complex, harder-to-maintain codebase. Another misconception involves applying advanced techniques prematurely, without adequately understanding the context of the application.

Typical Lifecycle of a Project Affected by Premature Optimization

| Stage | Description | Impact of Premature Optimization |
|---|---|---|
| Initial Design | Conceptualization and planning of the project. | Focus shifts from core functionality to premature optimization, hindering the design process. |
| Implementation | Development of core functionalities and modules. | Significant time and effort spent on optimizations that don't address the real bottlenecks. |
| Testing | Thorough testing of functionalities and modules. | Testing becomes inefficient due to the presence of complex, prematurely optimized code, potentially hiding real defects. |
| Deployment | Release and deployment of the application. | Potentially delayed deployment and reduced quality due to the unfocused efforts. |

The Importance of Profiling

Profiling is a crucial technique for identifying performance bottlenecks in software. It involves gathering data about how a program executes, allowing developers to pinpoint sections that consume excessive resources, such as CPU time, memory, or network bandwidth. This proactive approach helps avoid the pitfalls of premature optimization by ensuring that optimization efforts are targeted at actual performance issues rather than potential ones.

By understanding the program's behavior, developers can allocate resources effectively and deliver faster code without unnecessary rework. Profiling tools provide insight into the program's execution path, showing which areas need improvement, so developers can concentrate on the segments where optimization has the most impact.

By focusing on actual bottlenecks, developers can avoid wasting time and resources on areas that have negligible performance impact.

Profiling Tools and Techniques

Profiling tools provide valuable data about program execution, enabling developers to pinpoint performance bottlenecks. These tools analyze various aspects of code execution, such as the time spent in different functions, the frequency of method calls, and memory usage patterns. This detailed analysis facilitates targeted optimization efforts.

Profiling Tools for Different Platforms and Languages

A variety of tools are available for profiling applications across different programming languages and platforms. These tools provide different features and capabilities tailored to specific needs. Choosing the right tool depends on the programming language, the platform, and the specific performance characteristics to be analyzed.

| Programming Language/Platform | Profiling Tool | Key Features |
|---|---|---|
| Java | Java VisualVM | Provides a comprehensive view of memory usage, CPU usage, and thread activity. Can profile both application and JVM behavior. |
| Python | cProfile, line_profiler | cProfile provides detailed function call statistics, while line_profiler measures execution time for individual lines of code. |
| C++ | gprof | A widely used tool that generates call graphs and function call statistics for C and C++ applications. |
| .NET | Performance Explorer | Analyzes .NET applications, showing CPU usage, memory allocation, and other performance metrics. |
| JavaScript (Node.js) | V8 profiler | Allows detailed examination of JavaScript code execution, including the time spent in different functions. |

Using Profiling Results for Optimization

Profiling results provide a detailed map of performance characteristics. Analyzing this data helps developers understand where their code spends the most time and what areas require attention. This data is the foundation for effective optimization efforts.

Examine the profiling data carefully. By identifying the functions or code blocks that consume significant execution time, developers can prioritize their optimization efforts and maximize their impact. View the results holistically, considering the relationships between different parts of the program: a function may look expensive only because a caller invokes it far more often than necessary.
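One way to act on profiling data is to rank functions by their share of total sampled time and focus on the few that dominate. The sketch below assumes the profile has already been exported into a simple array of `{ name, selfTimeMs }` records; that format, the `rankHotspots` helper, the 5% threshold, and the sample numbers are all hypothetical, since each profiler has its own output format.

```javascript
// Rank profile entries by self time and report each entry's share of the total.
function rankHotspots(entries, minShare = 0.05) {
  const total = entries.reduce((acc, e) => acc + e.selfTimeMs, 0);
  if (total === 0) return [];
  return entries
    .map((e) => ({ name: e.name, selfTimeMs: e.selfTimeMs, share: e.selfTimeMs / total }))
    .filter((e) => e.share >= minShare) // ignore functions below the threshold
    .sort((a, b) => b.share - a.share); // largest contributors first
}

// Hypothetical profile data, for illustration only.
const profile = [
  { name: "parseInput", selfTimeMs: 40 },
  { name: "renderReport", selfTimeMs: 620 },
  { name: "formatDate", selfTimeMs: 15 },
  { name: "queryDatabase", selfTimeMs: 325 },
];

for (const h of rankHotspots(profile)) {
  console.log(`${h.name}: ${(h.share * 100).toFixed(1)}%`);
}
```

With these numbers, only `renderReport` (62%) and `queryDatabase` (32.5%) pass the threshold, which suggests where to look first and where not to bother.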

Strategies for Effective Profiling Data Utilization

Understanding how to effectively utilize profiling data is crucial for successful optimization. The following strategies help ensure that profiling efforts lead to tangible performance improvements.

- Identifying Bottlenecks: Profiling tools reveal the parts of the code that consume the most resources. By identifying these bottlenecks, developers can focus their optimization efforts on the most impactful areas.

- Correlation with Code: Understanding the code corresponding to the profiling results is essential for determining the root cause of performance issues. This helps to pinpoint the specific sections of code that require optimization.

- Iterative Refinement: Profiling is not a one-time process. It’s often a series of iterations, where profiling results guide subsequent optimization efforts. This iterative approach allows for continuous improvement and optimization.

- Prioritization: Not all performance issues need immediate attention. Prioritizing based on the impact of each bottleneck on the overall performance is crucial. This allows developers to address the most critical performance issues first.

Measuring Performance Effectively

Accurate performance measurement is crucial for identifying and addressing bottlenecks in software. Without precise metrics, optimization efforts can be misguided, leading to wasted time and resources. A well-defined performance testing strategy, using appropriate metrics, allows developers to pinpoint areas requiring improvement and implement targeted solutions.

Various Performance Metrics

Performance in software is multifaceted. Different metrics provide insights into various aspects of application behavior. Understanding these metrics is essential for identifying performance bottlenecks. Common metrics include execution time, throughput, resource utilization (CPU, memory, network), and response time.

Using Metrics to Identify Bottlenecks

Performance metrics are invaluable tools for pinpointing bottlenecks. For instance, if execution time for a specific function is significantly higher than expected, it suggests a potential performance issue within that module. Similarly, high CPU usage during a specific task might indicate a computationally intensive operation that needs optimization. Analyzing resource utilization patterns helps isolate and understand the root cause of performance problems.

Collecting Metrics without Overhead

Collecting performance metrics should not add so much overhead that it disrupts the application's normal operation. Lightweight profiling tools and sampling techniques can gather data with little impact on performance: sampling profilers record the program's state at intervals instead of instrumenting every call, and tools that monitor only selected code segments keep the overhead localized.
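A simple form of low-overhead measurement in application code is to time only a fraction of calls. The sketch below wraps a function so that every `sampleEvery`-th call is timed with `performance.now()`; the `withSampledTiming` name and its options are invented for this example. Note that a real sampling profiler works differently: it periodically records the call stack rather than wrapping individual functions.

```javascript
// Wrap fn so that only every `sampleEvery`-th call is timed.
// Untimed calls pay only for a counter increment and a comparison.
function withSampledTiming(fn, sampleEvery = 100) {
  let calls = 0;
  const samplesMs = [];
  function wrapped(...args) {
    calls += 1;
    if (calls % sampleEvery !== 0) {
      return fn.apply(this, args);
    }
    const start = performance.now();
    try {
      return fn.apply(this, args);
    } finally {
      samplesMs.push(performance.now() - start);
    }
  }
  wrapped.stats = () => ({
    calls,
    sampled: samplesMs.length,
    meanMs: samplesMs.length
      ? samplesMs.reduce((a, b) => a + b, 0) / samplesMs.length
      : 0,
  });
  return wrapped;
}

// Usage: time 1% of calls to a hypothetical hot function.
const square = withSampledTiming((x) => x * x, 100);
for (let i = 0; i < 1000; i++) square(i);
console.log(square.stats()); // calls: 1000, sampled: 10, plus the mean time
```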

A Simple Performance Testing Framework

A simple framework for measuring code efficiency can incorporate several key elements. First, define specific test cases that represent typical application usage scenarios. Second, utilize a timer to measure the execution time of these test cases. Third, track resource utilization (CPU, memory, network) during test execution. Finally, analyze the results to identify performance bottlenecks.

This framework can be extended by integrating logging capabilities to record relevant events and data.
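The four steps above can be sketched as a small harness. This is a minimal illustration assuming a Node.js environment (it uses `performance.now()` and `process.memoryUsage()`). The `runPerfTest` name, the warm-up count, and the choice of the median are assumptions for the example, and it records only heap usage rather than full CPU and network utilization.

```javascript
// Minimal performance test harness (Node.js).
// Each test case is { name, fn }, where fn exercises one usage scenario.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

function runPerfTest(testCases, { runs = 20, warmup = 3 } = {}) {
  const results = [];
  for (const { name, fn } of testCases) {
    // Warm-up runs let the JIT compile hot paths before timing starts.
    for (let i = 0; i < warmup; i++) fn();

    const timesMs = [];
    const heapBefore = process.memoryUsage().heapUsed;
    for (let i = 0; i < runs; i++) {
      const start = performance.now();
      fn();
      timesMs.push(performance.now() - start);
    }
    const heapAfter = process.memoryUsage().heapUsed;

    results.push({
      name,
      medianMs: median(timesMs),
      maxMs: Math.max(...timesMs),
      heapDeltaBytes: heapAfter - heapBefore, // rough: GC can make this negative
    });
  }
  return results;
}

// Usage with a hypothetical test case representing typical work.
const data = Array.from({ length: 100_000 }, (_, i) => i);
const report = runPerfTest([
  { name: "sum 100k numbers", fn: () => data.reduce((a, b) => a + b, 0) },
]);
console.table(report);
```

Reporting the median alongside the maximum keeps a single slow run (for example, one interrupted by garbage collection) from dominating the result.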

Common Performance Metrics and Their Significance

- Execution Time: Measures the duration of a specific operation or function. High execution time often indicates inefficient code or algorithms. This metric helps pinpoint sections of the code needing optimization.

- Throughput: Indicates the rate at which a system processes requests or data. Low throughput might signify that the system is not processing requests quickly enough, possibly due to resource constraints.

- Response Time: Measures the time taken for a system to respond to a request. Long response times degrade the user experience, so this metric is closely tied to user satisfaction.

- CPU Utilization: Reflects the percentage of CPU time used by the application. High CPU usage suggests that the application is computationally intensive and may require optimization of algorithms.

- Memory Usage: Indicates the amount of memory consumed by the application. High memory usage can lead to performance issues, particularly in memory-intensive tasks.

- Network I/O: Measures the network activity of the application. High network I/O can impact performance if the network is slow or congested.
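Several of these metrics can be derived from the same raw data. Assuming you have recorded the start and end timestamps of each request during a test window (the record shape and the numbers below are hypothetical), the sketch computes throughput and response-time percentiles using the nearest-rank method. Percentiles are often more informative than averages, because a few slow requests can hide behind a good mean.

```javascript
// Nearest-rank percentile: smallest value with at least p% of values <= it.
function percentile(sortedValues, p) {
  if (sortedValues.length === 0) return NaN;
  const rank = Math.ceil((p / 100) * sortedValues.length);
  return sortedValues[Math.max(rank, 1) - 1];
}

// Each record is { startMs, endMs } for one completed request.
function summarizeRequests(records) {
  const durations = records.map((r) => r.endMs - r.startMs).sort((a, b) => a - b);
  const windowStart = Math.min(...records.map((r) => r.startMs));
  const windowEnd = Math.max(...records.map((r) => r.endMs));
  const windowSeconds = (windowEnd - windowStart) / 1000;
  return {
    throughputPerSec: windowSeconds > 0 ? records.length / windowSeconds : NaN,
    p50Ms: percentile(durations, 50),
    p95Ms: percentile(durations, 95),
    maxMs: durations[durations.length - 1],
  };
}

// Hypothetical measurements: 10 requests over a 2-second window.
const records = [
  { startMs: 0, endMs: 120 }, { startMs: 100, endMs: 180 },
  { startMs: 300, endMs: 390 }, { startMs: 500, endMs: 610 },
  { startMs: 700, endMs: 760 }, { startMs: 900, endMs: 1000 },
  { startMs: 1100, endMs: 1170 }, { startMs: 1300, endMs: 1380 },
  { startMs: 1500, endMs: 1590 }, { startMs: 1600, endMs: 2000 },
];
console.log(summarizeRequests(records));
```

Here the throughput is 5 requests per second and the median response time is 90 ms, but the 95th percentile is 400 ms because of one slow request; the mean (120 ms) would hide that.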

Focusing on Performance Critical Sections

Identifying and optimizing performance bottlenecks requires a focused approach. Applying general optimization techniques across the entire codebase rarely yields the desired results; instead, pinpoint the specific sections of code that contribute most to slowdowns. This targeted approach maximizes efficiency and avoids spending effort on areas that don't significantly affect overall performance. Effective optimization then involves a close examination of those sections to find where improvements are possible.

This involves understanding the code’s flow, identifying potential performance hazards, and developing strategies to address them. This methodology is a key part of avoiding premature optimization, as it ensures efforts are focused on the most impactful areas.

Identifying Performance Critical Sections

Performance bottlenecks often reside in specific code segments. These areas can be isolated through various techniques, such as profiling tools, to pinpoint the sections that consume the most time or resources. By understanding where the performance issues lie, optimization efforts can be concentrated on those regions, yielding greater returns.

Methods for Isolating and Evaluating Performance

Profiling tools are essential for identifying performance critical sections. These tools provide detailed information on the execution time of different parts of the code, allowing developers to pinpoint the segments that consume the most time.

- Profiling Tools: Modern programming languages and environments often include profiling tools that provide comprehensive data about the execution path of the code. These tools can reveal which functions, loops, or code blocks are the most time-consuming, allowing for a direct focus on the performance bottlenecks.

- Statistical Analysis: Analyzing execution times across different inputs or scenarios can provide insights into the variability of performance. By understanding how execution time fluctuates under different conditions, developers can prioritize optimizations based on the impact of the variations. For instance, if a function consistently takes a significant amount of time under specific input parameters, it’s a high-priority candidate for optimization.

- Performance Monitoring: Observing real-time performance metrics during execution can help in identifying performance-intensive sections. Monitoring can involve logging the time taken for specific code blocks or tracing the flow of execution. This method is especially useful in understanding how the code behaves in a production-like environment.
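The statistical-analysis idea can be sketched as timing the same function across several input sizes and repeating each measurement so that variability is visible. The `measureAcrossSizes` helper and the example `hasDuplicates` function are hypothetical; the example deliberately uses a quadratic algorithm so the growth pattern is easy to see.

```javascript
// Time fn(input) for several input sizes, repeating each size to expose variability.
function measureAcrossSizes(fn, makeInput, sizes, repeats = 5) {
  return sizes.map((size) => {
    const input = makeInput(size);
    const timesMs = [];
    for (let r = 0; r < repeats; r++) {
      const start = performance.now();
      fn(input);
      timesMs.push(performance.now() - start);
    }
    timesMs.sort((a, b) => a - b);
    return {
      size,
      minMs: timesMs[0],
      medianMs: timesMs[Math.floor(timesMs.length / 2)], // upper median if repeats is even
      maxMs: timesMs[timesMs.length - 1],
    };
  });
}

// Hypothetical function under test: O(n^2) duplicate check.
function hasDuplicates(arr) {
  for (let i = 0; i < arr.length; i++) {
    for (let j = i + 1; j < arr.length; j++) {
      if (arr[i] === arr[j]) return true;
    }
  }
  return false;
}

const rows = measureAcrossSizes(
  hasDuplicates,
  (n) => Array.from({ length: n }, (_, i) => i), // no duplicates: worst case
  [500, 1000, 2000],
);
console.table(rows);
```

If doubling the size roughly quadruples the median time, as expected for a quadratic algorithm, the function becomes a strong candidate for attention, but only if production inputs are actually that large.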

Prioritizing Optimization Efforts

Once critical sections are identified, prioritize optimization based on the impact on overall performance. Don’t waste time optimizing sections that contribute only a minor portion to the overall slowdowns. Focus on the sections that yield the biggest improvements.

- Impact Assessment: The impact of an optimization is a key factor. A small optimization on a frequently called function may have a larger overall effect than a major optimization on a function rarely invoked.

- Effort vs. Gain: Balance the effort required for optimization against the potential performance gain. Minor optimizations in frequently called functions can lead to substantial improvements, making them a priority over optimizing less frequently called, potentially more complex sections.

- Resource Constraints: Time and resource constraints should be considered. If time is limited, prioritize the most impactful areas first. Optimizing only the top performance bottlenecks may be more effective in the short term.
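The impact-assessment point is simple arithmetic: the total time saved is roughly the number of calls multiplied by the time saved per call. The sketch below compares two hypothetical candidates using made-up numbers, including an invented effort estimate in days; in practice the call counts and per-call timings would come from profiling.

```javascript
// Estimated total saving = number of calls x time saved per call.
function estimatedSavingMs({ callsPerRun, savedPerCallMs }) {
  return callsPerRun * savedPerCallMs;
}

// Hypothetical candidates with illustrative numbers.
const candidates = [
  { name: "small tweak to hot function", callsPerRun: 200_000, savedPerCallMs: 0.002, effortDays: 0.5 },
  { name: "major rewrite of rare function", callsPerRun: 3, savedPerCallMs: 50, effortDays: 5 },
];

// Rank by saving per day of effort, so both gain and cost are considered.
const ranked = candidates
  .map((c) => ({
    ...c,
    savingMs: estimatedSavingMs(c),
    savingPerEffortDay: estimatedSavingMs(c) / c.effortDays,
  }))
  .sort((a, b) => b.savingPerEffortDay - a.savingPerEffortDay);

for (const c of ranked) {
  console.log(`${c.name}: ~${c.savingMs.toFixed(0)} ms saved per run`);
}
```

With these numbers, the small tweak saves about 400 ms per run against 150 ms for the rewrite, at a tenth of the effort, which is the situation the Effort vs. Gain point describes.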

Code Snippet Examples

Consider the following code examples illustrating potential performance improvements in critical sections.

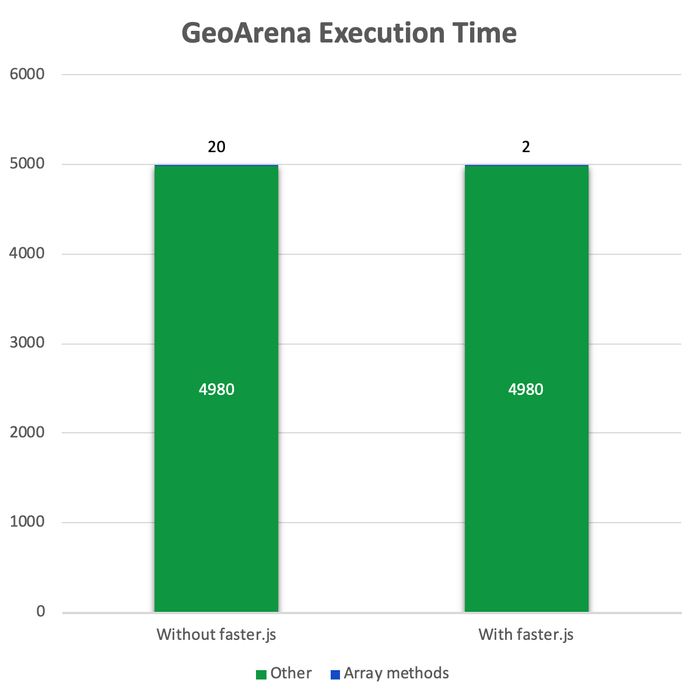

```javascript
// Explicit loop
function calculateSum(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) {
    sum += arr[i];
  }
  return sum;
}

// The same computation using the built-in reduce method
function calculateSumOptimized(arr) {
  return arr.reduce((sum, current) => sum + current, 0);
}
```

The `reduce` version is shorter and states the intent directly, but it is not inherently faster: both versions make a single O(n) pass, and in modern JavaScript engines a plain loop is often at least as fast. Whether either form is an improvement in a critical section is a question for measurement, not assumption.

Steps to Identify and Address Performance Critical Sections

Following these steps can systematically address performance bottlenecks:

- Profile the code: Utilize profiling tools to identify the most time-consuming sections of the code.

- Isolate the bottlenecks: Determine the specific code blocks or functions responsible for the performance issues.

- Analyze the bottleneck: Investigate the logic and algorithms within the critical sections.

- Prioritize optimization: Rank bottlenecks based on their impact on the overall performance.

- Implement optimization: Apply suitable optimization techniques to the identified critical sections.

- Re-profile and measure: Re-evaluate performance after applying the optimization techniques.
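The last steps (implement, then re-profile and measure) can be sketched as a check that a candidate change returns the same result as the baseline and is measurably faster before it is kept. The `compareVariants` helper, its 10% threshold, and the run counts are assumptions for illustration; the two summing functions from the earlier code snippet are redefined here as the baseline and the candidate.

```javascript
function medianOf(values) {
  const s = [...values].sort((a, b) => a - b);
  const m = Math.floor(s.length / 2);
  return s.length % 2 ? s[m] : (s[m - 1] + s[m]) / 2;
}

// Median wall-clock time of `runs` calls to fn(input).
function timeRuns(fn, input, runs) {
  const times = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    fn(input);
    times.push(performance.now() - start);
  }
  return medianOf(times);
}

// Keep the candidate only if it matches the baseline's output and is faster
// by at least `minImprovement` (0.1 = 10%) on the median run.
// Results are compared with !==, so this suits functions returning primitives.
function compareVariants(baseline, candidate, input, { runs = 31, minImprovement = 0.1 } = {}) {
  if (baseline(input) !== candidate(input)) {
    return { keep: false, reason: "results differ" };
  }
  timeRuns(baseline, input, 5); // warm-up
  timeRuns(candidate, input, 5);
  const baseMs = timeRuns(baseline, input, runs);
  const candMs = timeRuns(candidate, input, runs);
  const improvement = baseMs > 0 ? (baseMs - candMs) / baseMs : 0;
  return { keep: improvement >= minImprovement, baseMs, candMs, improvement };
}

function calculateSum(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) sum += arr[i];
  return sum;
}

function calculateSumOptimized(arr) {
  return arr.reduce((sum, current) => sum + current, 0);
}

const input = Array.from({ length: 200_000 }, (_, i) => i % 100);
console.log(compareVariants(calculateSum, calculateSumOptimized, input));
```

The outcome depends on the engine and hardware, which is why the decision is made from measurements rather than from assumptions about which form should be faster.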

Optimizing for Real-World Scenarios

Real-world performance testing is crucial for validating the effectiveness of optimizations. Simply optimizing in isolation, on a small dataset or in a controlled environment, can be misleading. A successful optimization must demonstrably improve performance under realistic conditions, replicating how users interact with the system. This section details the importance of real-world testing and how to conduct it effectively.

Optimizations, though potentially beneficial, are often not universally effective across all scenarios. A particular optimization might yield significant gains in certain use cases but have minimal impact or even a detrimental effect in others. Understanding the real-world impact is essential to ensure the optimization enhances the overall user experience and aligns with the project’s goals.

Importance of Real-World Testing

Optimizations are only meaningful when evaluated in realistic scenarios that mirror how users interact with the system. This ensures the gains are not isolated to controlled test environments. In a real-world setting, the system is exposed to various data inputs, user interactions, and resource constraints. A comprehensive evaluation requires replicating these conditions to gain a true understanding of the optimization’s impact.

Creating Realistic Test Cases

Creating realistic test cases is a critical step in real-world performance testing. These cases should simulate typical user behavior and data patterns. This includes:

- Defining User Profiles: Different user profiles will have varying needs and interactions. A system designed for a wide range of users must consider the range of data inputs and requests they generate. For instance, a social media platform will need to consider high-volume data uploads from frequent users versus sporadic updates from less active users.

- Simulating Data Loads: Realistic data loads are crucial. This might involve simulating large file uploads, database queries, or network requests, mimicking the actual volume and characteristics of user interactions. A website experiencing seasonal peaks will have a different workload than a site with consistent traffic.

- Replicating User Interactions: Test cases must replicate typical user interactions. This includes simulating actions like browsing, searching, purchasing, or sharing content. The sequence and frequency of these actions are critical. For example, an e-commerce site must consider the typical checkout flow and the likelihood of users adding items to their cart and browsing before purchasing.
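One way to reproduce a realistic mix of interactions is to draw actions at random according to weights taken from real usage data. The sketch below uses made-up weights for a hypothetical e-commerce site and a small seeded pseudo-random generator (a mulberry32-style PRNG), so the same workload can be replayed across test runs. The `pickAction` and `generateSession` helpers are invented for this example.

```javascript
// Small seeded PRNG (mulberry32 style) so workloads are reproducible.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // value in [0, 1)
  };
}

// Hypothetical action weights; real ones should come from analytics.
const actionWeights = [
  { action: "browse", weight: 60 },
  { action: "search", weight: 25 },
  { action: "addToCart", weight: 10 },
  { action: "checkout", weight: 5 },
];

function pickAction(weights, random) {
  const total = weights.reduce((acc, w) => acc + w.weight, 0);
  let r = random() * total;
  for (const w of weights) {
    if (r < w.weight) return w.action;
    r -= w.weight;
  }
  return weights[weights.length - 1].action; // guard against rounding at the boundary
}

function generateSession(length, random) {
  return Array.from({ length }, () => pickAction(actionWeights, random));
}

const random = mulberry32(42);
const sessions = Array.from({ length: 1000 }, () => generateSession(8, random));

// Count how often each action appears, to check the mix matches the weights.
const counts = {};
for (const session of sessions) {
  for (const action of session) counts[action] = (counts[action] ?? 0) + 1;
}
console.log(counts);
```

This draws each action independently. Modelling ordered flows such as browsing, then adding to the cart, then checking out would need a sequence model, for example a simple state machine.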

Evaluating Optimization Impact

Evaluating the impact of optimizations on overall system performance requires precise measurement and analysis. Performance monitoring tools, profiling utilities, and dedicated performance testing frameworks are essential for gathering this data.

- Performance Metrics: Defining clear and relevant performance metrics is crucial. These metrics could include response time, throughput, resource utilization, error rates, and other relevant indicators. Measuring these metrics before and after implementing the optimization is essential to quantify the change.

- System Load: Performance testing needs to evaluate how the system behaves under increasing load. A real-world scenario often involves peak periods of high user activity. This requires a controlled environment where load is gradually increased to assess the system’s capacity and the impact of the optimization under stress.

- Contextual Evaluation: The impact of an optimization must be evaluated within the context of the project’s overall goals. Is the optimization improving user experience, reducing costs, or increasing efficiency? A successful optimization aligns with these objectives.
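Gradually increasing load can be sketched with an async loop that runs a fixed number of concurrent workers per step and records throughput at each level. The `handleRequest` function below is a hypothetical stand-in that simply waits 20 ms; in a real test it would call the system under test, usually through a dedicated load-testing tool rather than hand-written code.

```javascript
// Hypothetical request handler: simulates 20 ms of latency.
function handleRequest() {
  return new Promise((resolve) => setTimeout(resolve, 20));
}

// Run `concurrency` workers, each issuing requests back-to-back, for `durationMs`.
async function runStep(concurrency, durationMs) {
  const deadline = Date.now() + durationMs;
  let completed = 0;
  const worker = async () => {
    while (Date.now() < deadline) {
      await handleRequest();
      completed += 1;
    }
  };
  const start = Date.now();
  await Promise.all(Array.from({ length: concurrency }, worker));
  const elapsedSec = (Date.now() - start) / 1000;
  return { concurrency, completed, throughputPerSec: completed / elapsedSec };
}

// Step the load up and record how throughput responds at each level.
async function rampLoad(levels, stepMs) {
  const results = [];
  for (const level of levels) {
    results.push(await runStep(level, stepMs));
  }
  return results;
}

rampLoad([1, 5, 10], 500).then((results) => console.table(results));
```

With a simulated fixed latency, throughput grows roughly in proportion to concurrency. On a real system, the level at which throughput stops growing or errors start to appear indicates where capacity runs out.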

Performance Testing Approaches

Different performance testing approaches are suitable for various environments and applications. These include:

- Load Testing: Simulates a high volume of users interacting with the system, providing insights into system scalability and stability. It is suitable for web applications, APIs, and other systems designed to handle many concurrent users.

- Stress Testing: Exposes the system to extreme conditions, such as unusually high load or resource constraints. This helps identify system vulnerabilities and the maximum capacity the system can withstand.

- Spike Testing: Simulates sudden surges in user activity. This tests the system’s ability to handle unpredictable load changes, like a sudden influx of users during a promotional event.
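These three approaches differ mainly in the shape of the load over time. The sketch below expresses each one as a function from elapsed seconds to a target number of concurrent users; the function names and all the numbers are hypothetical, and a load-testing tool would normally be configured with equivalent stages.

```javascript
// Each profile maps elapsed time (seconds) to a target number of concurrent users.

// Load test: ramp up to the expected peak, then hold it.
function loadProfile(t, { peak = 100, rampSec = 60 } = {}) {
  return t < rampSec ? Math.round((peak * t) / rampSec) : peak;
}

// Stress test: keep increasing past the expected peak to find the breaking point.
function stressProfile(t, { startUsers = 100, usersPerSec = 5 } = {}) {
  return startUsers + Math.round(usersPerSec * t);
}

// Spike test: steady baseline with a sudden, short-lived surge.
function spikeProfile(t, { baseline = 50, spike = 500, spikeStartSec = 120, spikeSec = 30 } = {}) {
  const inSpike = t >= spikeStartSec && t < spikeStartSec + spikeSec;
  return inSpike ? spike : baseline;
}

// Print targets at a few points in time to compare the shapes.
for (const t of [0, 30, 60, 125, 200]) {
  console.log(t, loadProfile(t), stressProfile(t), spikeProfile(t));
}
```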

Techniques to Avoid Premature Optimization

Premature optimization is a common pitfall in software development, often leading to wasted effort and suboptimal solutions. Understanding how to avoid this anti-pattern is crucial for building robust and efficient applications. This section provides practical techniques and best practices for effectively identifying and addressing performance concerns, ensuring that optimization efforts are well-directed and yield meaningful results.

The key to avoiding premature optimization is to address performance concerns only after they have been identified and analyzed. By focusing on the most critical sections of code and employing rigorous testing, developers can ensure that optimization efforts lead to tangible improvements rather than unnecessary complexity or reduced maintainability.

Practical Techniques for Avoiding Premature Optimization

Proactive identification and analysis of potential performance bottlenecks are essential to avoid premature optimization. These techniques help in understanding where performance issues might arise, enabling targeted optimization efforts without the risk of optimizing unnecessary parts of the code.

- Focus on Profiling: Profiling tools provide detailed insights into code execution, pinpointing sections that consume significant resources. Profiling helps identify performance hotspots and areas where optimization efforts can yield the greatest return on investment. For instance, a profiler might reveal that 90% of execution time is spent within a single function, indicating a prime target for optimization.

- Measure Performance Effectively: Thorough and consistent performance measurements are crucial. Establish baselines and track performance metrics throughout development. Use tools and techniques that measure the impact of changes accurately, allowing for informed decisions on where to invest optimization efforts. This involves not just timing code execution, but also analyzing resource usage, memory allocation, and other relevant factors.

- Prioritize Optimization Efforts: Identify performance critical sections based on profiling data and real-world usage patterns. Don’t optimize every line of code; instead, prioritize areas with the greatest impact. For example, if user interface rendering takes significantly longer than database queries, concentrate on optimizing the UI elements, ensuring a smoother user experience.

Best Practices for Identifying and Addressing Performance Concerns

Developing a structured approach to identifying and addressing performance concerns is vital for avoiding the premature optimization anti-pattern. This approach ensures that efforts are targeted and produce the desired results.

- Establish Performance Baselines: Before making any changes, establish clear performance baselines. This allows for objective comparisons and helps in assessing the impact of optimization efforts. For instance, record response times for key operations before introducing any code modifications.

- Iterative Refinement: Employ an iterative approach to optimization. Optimize in small steps, measure the impact of each change, and refine the solution as needed. Avoid trying to implement a large, complex optimization at once. This avoids introducing potential bugs and ensures incremental improvements.

- Thorough Testing: After implementing any optimization, conduct comprehensive testing across various scenarios. This ensures that the optimization is effective and doesn’t introduce unintended side effects. Consider using different input data sets, edge cases, and different hardware configurations to ensure broad applicability.

Checklist for Evaluating Potential Optimization Opportunities

A structured checklist can help in evaluating potential optimization opportunities before implementing them.

| Criteria | Description |
|---|---|
| Impact on Functionality | Does the optimization affect the functionality of the application? |
| Potential for Side Effects | Are there any potential side effects, such as unexpected behavior or compatibility issues? |
| Maintainability | Is the optimization easily maintainable and understandable in the long run? |
| Real-World Impact | Will the optimization improve performance for real-world use cases? |

Refactoring for Efficiency

Refactoring is a crucial technique for improving code quality and performance without altering the program's external behavior. It restructures existing code to improve its design and readability, and this systematic approach leads to better maintainability and efficiency in the long run.

Refactoring is inherently connected to the avoidance of premature optimization. By focusing on clean, well-structured code, developers can make performance improvements incrementally, addressing only the areas that concrete performance measurements show need optimization. This process helps maintain code quality and readability, and it avoids the pitfalls of premature optimization, which often lead to unnecessary complexity and reduced maintainability.

Role of Refactoring in Optimization

Refactoring is a powerful tool for improving code efficiency without changing its functionality. It’s not about optimizing individual lines of code but rather about enhancing the overall architecture and structure of the program. By improving code structure, developers make the code easier to understand, maintain, and ultimately optimize. This structured approach helps avoid unnecessary complexity and makes the code more robust against future changes.

Connection to Avoiding Premature Optimization

Refactoring allows for a more measured approach to optimization. Instead of jumping to complex optimizations before identifying performance bottlenecks, refactoring focuses on improving the code’s structure and design, which can often lead to performance improvements as a byproduct. This approach prioritizes the readability and maintainability of the code, reducing the risk of introducing bugs or making the code harder to understand.

Because it defers targeted optimization until measurements justify it, refactoring supports a more efficient and focused approach to performance enhancement.

Refactoring Examples for Efficiency

Refactoring can be applied to many aspects of code. Common techniques that can also improve efficiency include:

- Improving Conditional Logic: When a chain of nested `if-else` statements tests a single value against several constants, it can be replaced with a flatter `switch` statement. The main benefit is clarity and fewer opportunities for errors; in some languages a compiler can also turn a dense `switch` into a jump table, which may be faster when there are many cases.

- Simplifying Data Structures: Converting an inefficient data structure to a more suitable one, such as changing from a linked list to an array for random access or a hash table for fast lookups, can improve performance significantly.

- Eliminating Redundant Code: Removing redundant computations reduces the work the program performs at runtime. Consolidating duplicated code segments into reusable methods or functions mainly improves readability and maintainability, since each fix or improvement then needs to be made in only one place.

- Improving Algorithm Selection: If a less efficient algorithm is used, refactoring to a more optimized algorithm (like using binary search instead of linear search for a sorted dataset) can substantially enhance performance.

Importance of Code Reviews in Refactoring

Code reviews are critical during refactoring. They provide an external perspective on the changes, helping to catch potential issues, suggest improvements, and enforce coding standards. This collaboration helps ensure that a refactoring leads to real improvements in code quality, not just cosmetic changes, and does not introduce unforeseen problems.

Refactoring to Eliminate Unnecessary Code

Refactoring often involves identifying and removing unnecessary code, including unused variables, functions, or classes. The main benefit is a smaller codebase that is easier to understand; runtime gains are usually modest, because code that never runs costs little, although a smaller program can build, load, and deploy faster.

- Unused Variables and Functions: Removing unused variables and functions shrinks the codebase and reduces what readers and maintainers have to understand.

- Dead Code: Eliminating code paths that are no longer used or can never be reached is another key refactoring. It simplifies the code and makes the remaining logic easier to analyze and profile.

Code Reviews and Peer Feedback

Code reviews are a critical component of software development, giving developers an opportunity to learn from each other and improve the overall quality of the codebase. A crucial aspect of effective code reviews is identifying and mitigating premature optimization tendencies. By asking whether each proposed optimization addresses a measured bottleneck, reviewers help keep optimization efforts targeted and ensure that they yield meaningful results.

Peer feedback, a core part of the code review process, plays a pivotal role in evaluating optimization strategies. A diverse perspective on the code, coupled with technical expertise, allows for a comprehensive assessment of potential performance impacts. By openly sharing concerns and insights, developers can identify blind spots and refine their approach to optimization.

Role of Code Reviews in Identifying Premature Optimization

Code reviews provide a structured environment for examining code and identifying potential areas of premature optimization. Reviewers can scrutinize code logic, algorithm choices, and data structures to determine if optimization efforts are justified. This process helps prevent unnecessary work that might not yield substantial performance gains. For instance, if a developer prematurely optimizes a section of code that is not a performance bottleneck, the code review process can highlight this issue and encourage a more focused approach.

Importance of Peer Feedback in Evaluating Optimization Strategies

Peer feedback is invaluable in evaluating the effectiveness and appropriateness of optimization strategies. Different developers bring diverse experiences and perspectives to the table, enabling a more comprehensive evaluation of the optimization approach. This collaborative approach can lead to the identification of potential pitfalls and the development of more robust and efficient solutions. For instance, a colleague might point out a more efficient algorithm that the original developer hadn’t considered, leading to a significant performance improvement.

Examples of Code Reviews Highlighting Potential Performance Issues

Code reviews can highlight potential performance issues by focusing on areas of potential bottlenecks. For example, a review might point out the use of inefficient loops or data structures. Consider a situation where a developer uses nested loops to process a large dataset. A code review can identify this as a potential performance bottleneck, prompting a discussion about alternative approaches such as using vectorized operations or optimized data structures.
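The review discussion often centers on the kind of change sketched below, which shows the "optimized data structures" alternative in plain Python: a nested loop that compares every pair of elements is replaced by a single pass over a hash set. The function names are hypothetical, and whether the change is worth making still depends on how large the data actually is in production.

```python
def common_ids_nested(a: list[int], b: list[int]) -> list[int]:
    # Nested loops: up to len(a) * len(b) comparisons
    result = []
    for x in a:
        for y in b:
            if x == y:
                result.append(x)
                break  # stop scanning b once a match is found
    return result


def common_ids_set(a: list[int], b: list[int]) -> list[int]:
    # Build a hash set once (O(len(b))), then do average O(1) membership tests
    b_set = set(b)
    return [x for x in a if x in b_set]
```

Both functions return the elements of `a` that also appear in `b`, in the order of `a`, so the set-based version can replace the nested one without changing behavior.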

Another example is the use of redundant calculations. The code review process can help identify and eliminate redundant computations, thus improving efficiency.
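A typical redundant calculation is a value recomputed inside a loop even though it never changes. In the hypothetical sketch below, the vector norm is recalculated for every element in the first version and computed once, outside the loop, in the second; both assume a non-zero input vector.

```python
import math


def normalize_redundant(values: list[float]) -> list[float]:
    # Recomputes the same norm for every element: O(n^2) work overall
    return [v / math.sqrt(sum(x * x for x in values)) for v in values]


def normalize_hoisted(values: list[float]) -> list[float]:
    # Computes the norm once, outside the loop: O(n) work overall
    norm = math.sqrt(sum(x * x for x in values))
    return [v / norm for v in values]
```

This kind of change is easy to spot in review, keeps the code just as readable, and does not alter the result.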

Checklist for Conducting Code Reviews Focusing on Premature Optimization

This checklist can be used to guide code reviews, ensuring that premature optimization tendencies are effectively identified and addressed.

- Review Algorithm Complexity: Analyze the algorithm used to determine its time and space complexity. Is the chosen algorithm the most efficient for the task? Are there potential alternatives with better time complexity?

- Identify Potential Performance Bottlenecks: Focus on areas of the code where performance issues are likely to occur. Consider the size of the data being processed, the frequency of operations, and the computational cost of different components.

- Examine Data Structures: Evaluate whether the selected data structures are appropriate for the intended use case. Are there more efficient data structures that could improve performance?

- Evaluate Existing Performance Metrics: If applicable, review any existing performance metrics to determine if optimization efforts are justified. Do the proposed changes actually improve performance, or are they likely to have a negligible impact?

- Consider Alternative Approaches: Explore alternative solutions or approaches that could potentially improve performance without excessive optimization. Are there more efficient ways to accomplish the task? Discuss alternative algorithms or data structures.
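To answer "Do the proposed changes actually improve performance?" with data, a reviewer can ask for a small before-and-after benchmark. The sketch below uses Python's standard `timeit` module; `sum_squares_loop` and `sum_squares_formula` are hypothetical baseline and candidate functions, and taking the minimum of several repeats is a common convention for reducing timing noise, not a rule.

```python
import timeit
from typing import Callable


def sum_squares_loop(n: int) -> int:
    # Baseline: explicit loop over 0..n-1
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_squares_formula(n: int) -> int:
    # Candidate: closed form for 0^2 + 1^2 + ... + (n-1)^2
    return (n - 1) * n * (2 * n - 1) // 6


def compare(baseline: Callable[[int], int], candidate: Callable[[int], int],
            arg: int, repeat: int = 5, number: int = 100) -> tuple[float, float]:
    # Refuse to benchmark a candidate that changes the result
    if baseline(arg) != candidate(arg):
        raise ValueError("candidate must return the same result as the baseline")
    base = min(timeit.repeat(lambda: baseline(arg), repeat=repeat, number=number))
    cand = min(timeit.repeat(lambda: candidate(arg), repeat=repeat, number=number))
    return base, cand
```

If `compare(sum_squares_loop, sum_squares_formula, 10_000)` shows only a negligible difference for realistic inputs, the change is probably not worth the extra complexity.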

Sample Code Review Discussion Addressing Premature Optimization Concerns

A code review might involve a discussion on a particular function that iterates through a large dataset to calculate a sum.

“While this function calculates the sum correctly, the use of nested loops for processing a large dataset is a potential performance bottleneck. Could we consider using a vectorized operation or a more efficient data structure to achieve the same result?”

The developer might respond:

“I hadn’t considered vectorized operations. This is a good suggestion. I’ll investigate and implement that change. Also, I’m now considering if this large dataset can be processed in smaller batches to avoid loading the entire dataset into memory at once.”

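This exchange shows how a code review can surface a likely bottleneck and alternative approaches through discussion. In keeping with the rest of this guide, the suggested changes should still be confirmed by profiling before and after they are made, so that the review does not itself encourage premature optimization.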
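The developer's batching idea from the exchange above can be sketched with a generator that yields fixed-size batches, so the sum is computed without building the whole dataset as one list. The helper names `batched` and `batched_sum` are hypothetical; Python 3.12 also ships `itertools.batched`, which yields tuples instead of lists. Memory is only saved if the input itself is lazy, such as a generator or a file being read line by line.

```python
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    # Yield successive lists of at most `size` items, consuming the input lazily
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


def batched_sum(records: Iterable[int], batch_size: int = 10_000) -> int:
    # Only one batch is held in memory at a time
    total = 0
    for batch in batched(records, batch_size):
        total += sum(batch)
    return total
```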

Learning from Past Mistakes

Avoiding premature optimization is crucial for effective software development. Understanding the potential pitfalls and learning from past projects where this anti-pattern significantly impacted timelines and quality is essential for building robust and efficient systems in the future. This section delves into real-world examples and the lessons learned to prevent similar mistakes.

Projects often suffer delays when optimization efforts are prioritized prematurely. Instead of focusing on the core functionality and user experience, resources are diverted to optimizing sections that aren’t critical performance bottlenecks. This can lead to wasted effort, frustration, and ultimately, a less user-friendly product.

Examples of Projects Affected by Premature Optimization

Several software projects have experienced significant delays due to premature optimization. One example is a large-scale e-commerce platform where developers, eager to improve response times, implemented numerous micro-optimizations in the initial stages of development. While these optimizations seemed beneficial on paper, they actually added complexity to the codebase and hindered the overall development process. This project experienced significant delays and ultimately had to re-evaluate its approach, focusing on performance profiling and identifying actual performance bottlenecks.

Another example is a mobile application where developers attempted to optimize the rendering of complex graphics before ensuring the core application logic was robust. This approach led to unnecessary complexity and code maintenance issues, while the user experience remained poor due to the lack of focus on fundamental functionalities.

Lessons Learned from These Projects

The key lesson from these examples is the importance of focusing on the core functionality first. Prioritizing user experience and core features before extensive optimization is paramount. Thorough analysis and profiling are critical to identify performance bottlenecks accurately.

- Projects often underestimate the time required for thorough analysis. Developers need to schedule dedicated time for profiling and performance testing.

- Implementing premature optimizations can create technical debt, making future maintenance and updates significantly more difficult.

- The initial focus should be on the core functionality and user experience. Optimizations should only be considered after the core features are working correctly and the performance bottlenecks are identified.

Incorporating Lessons Learned into Future Development Processes

The lessons learned from these past projects can be incorporated into future development processes in several ways. First, developers should be educated on the pitfalls of premature optimization. Second, establishing clear guidelines and processes for performance analysis and optimization is essential. Third, fostering a culture of thorough analysis and a healthy skepticism towards immediate optimization efforts will greatly benefit the development team.

- Conduct thorough performance analysis using profiling tools to pinpoint the actual bottlenecks in the application.

- Develop a clear performance testing strategy that includes benchmarks and realistic user scenarios.

- Establish clear communication channels for performance concerns, enabling developers to discuss potential optimization approaches before implementing them.

Key Takeaways from Real-World Experiences

The following list summarizes crucial takeaways from real-world experiences with premature optimization:

- Prioritize functionality over immediate optimization.

- Focus on the user experience and core features first.

- Conduct thorough performance profiling and analysis.

- Implement optimizations only after identifying real performance bottlenecks.

- Establish a process for iterative optimization and continuous improvement.

Strategies for Creating a Culture that Values Thorough Analysis

Creating a culture that values thorough analysis over premature optimization requires a multi-faceted approach. First, encourage a collaborative environment where developers feel comfortable questioning optimization efforts. Second, reward thorough analysis and the identification of real performance bottlenecks. Third, use tools and techniques to help developers measure performance effectively.

- Implement code reviews with a specific focus on performance analysis and optimization.

- Establish a dedicated team or individual responsible for performance analysis and optimization.

- Use performance testing frameworks and tools to provide objective data about the application’s performance.

Example Case Studies

Avoiding premature optimization is crucial for software development projects. Prioritizing performance too early often leads to wasted effort and suboptimal solutions; successful projects instead identify the actual performance bottlenecks and address them strategically. This section explores specific case studies that illustrate this approach.

Projects that avoided premature optimization benefited from a methodical, data-driven approach to performance improvement. They prioritized understanding the actual performance characteristics of their applications rather than making assumptions or guesses about potential bottlenecks.

Case Study 1: A Web Application Redesign

This project involved redesigning a web application to improve user experience and responsiveness. Instead of immediately optimizing code, the team meticulously analyzed user behavior and identified areas where the application experienced delays.

- The team utilized web performance monitoring tools to capture real-world usage patterns. This included detailed analysis of request durations, server response times, and client-side rendering performance.

- Profiling revealed that database queries were the primary source of slowdowns, not code in the application’s logic layer. This was a significant discovery, as initial assumptions suggested otherwise.

- To address the bottleneck, the team applied database optimization techniques: they rewrote slow queries, optimized indexes, and adjusted database caching strategies. The focus stayed on the actual bottleneck, the database interactions.

- Performance was measured before and after each optimization step, providing concrete data for evaluation. This allowed for a clear understanding of the effectiveness of the changes.
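The case study does not say which database or tools the team used. As a stand-in, the sketch below uses SQLite through Python's built-in `sqlite3` module to show an index change being verified rather than assumed: `EXPLAIN QUERY PLAN` reports a full table scan before the index exists and an index search afterwards. The `orders` table, its columns, and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> list[str]:
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable detail
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)
lookup = "SELECT * FROM orders WHERE customer_id = ?"

before = query_plan(conn, lookup, (42,))  # look for SCAN: every row is examined
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, lookup, (42,))   # look for SEARCH ... USING INDEX
print("before:", before)
print("after: ", after)
```

Pairing a query-plan check like this with timings of the real queries gives the before-and-after evidence the case study describes.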

Case Study 2: A Mobile Application with Frequent Data Updates

A mobile application frequently updated user data. Early attempts to optimize the data processing algorithms were unsuccessful.

- Profiling identified that network latency was the primary cause of poor performance, not the application’s data handling logic.

- The team focused on optimizing network requests by implementing caching strategies and minimizing the number of requests. This involved using techniques like prefetching data and optimizing the data transfer format.

- Performance testing showed that these changes significantly reduced latency and improved responsiveness. The earlier optimization of the algorithm itself had no noticeable impact, because the bottleneck was the external network, not the algorithm.
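The caching strategy in this case study can be illustrated with a small time-to-live (TTL) cache: repeated reads within the TTL are served locally, and an entry is fetched again once it expires, which suits data that changes frequently. A real mobile app would more likely be written in Kotlin or Swift; the Python `TTLCache` class below is a hypothetical sketch of the idea, not the team's implementation.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Caches fetched values for ttl_seconds so repeated reads skip the network."""

    def __init__(self, fetch: Callable[[Hashable], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh cached value: no network request
        value = self._fetch(key)  # missing or expired: fetch again
        self._entries[key] = (now, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Drop an entry early, e.g. after the app writes new data for this key
        self._entries.pop(key, None)
```

Usage would look like `profiles = TTLCache(fetch_profile, ttl_seconds=60)`, where `fetch_profile` stands for a hypothetical network call. Choosing the TTL is a trade-off between fewer requests and fresher data, and that trade-off should be tuned against measured latency.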

Case Study 3: A Large-Scale Data Processing Pipeline

A large-scale data processing pipeline experienced significant delays during peak hours. Early attempts to optimize individual components proved ineffective.

- Profiling the pipeline identified that the data ingestion stage was the primary bottleneck, not the intermediate processing steps.

- The team optimized the ingestion stage by parallelizing it and streamlining the ingestion pipeline, which was crucial for handling the increased data volume during peak times.

- Performance measurements showed a significant improvement in processing speed during peak hours, confirming that the team had identified the real bottleneck and targeted its optimizations correctly.
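Parallel ingestion of independent chunks can be sketched with Python's standard `concurrent.futures` module. The `ingest_chunk` function is hypothetical, and its `time.sleep` stands in for I/O wait such as reading a file or a partition. Threads help when ingestion is I/O-bound; for CPU-bound parsing, `ProcessPoolExecutor` is the usual alternative.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def ingest_chunk(chunk_id: int) -> int:
    # Hypothetical I/O-bound step; sleep simulates waiting on storage or network
    time.sleep(0.05)
    return chunk_id * 100  # pretend number of records ingested


def ingest_all(chunk_ids: list[int], max_workers: int = 8) -> int:
    # Up to max_workers chunks are ingested concurrently, overlapping their I/O waits
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return sum(pool.map(ingest_chunk, chunk_ids))
```

With 8 workers, ten chunks take roughly two rounds of simulated I/O instead of ten. Whether real ingestion scales this way depends on the storage and network limits, which is exactly what the team's peak-hour measurements would reveal.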

Last Word

In conclusion, avoiding premature optimization is a crucial aspect of successful software development. By taking a methodical approach, using profiling tools, and focusing on real-world performance needs, developers can reach the performance their applications require without the costly, unproductive delays associated with premature optimization. The key takeaway is to measure, analyze, and then optimize, rather than guess and optimize prematurely.

FAQ Section

What are some common misconceptions about performance optimization?

A common misconception is that optimizing every line of code is always necessary. In reality, focusing on the most critical performance bottlenecks is more effective and efficient. Another misconception is that optimization should be done in isolation, without considering the broader context of the system or user experience.

How can I effectively use profiling data to guide optimization efforts?

Profiling tools provide insights into the areas of code that consume the most resources. Use this data to identify performance bottlenecks and prioritize optimization efforts accordingly. Focus on optimizing the most time-consuming functions first, then refine further based on subsequent profiling results.
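As a concrete illustration, Python's standard `cProfile` and `pstats` modules can rank functions by cumulative time. The small pipeline below is hypothetical and deliberately contains an inefficient aggregation step so that the report has something to point at.

```python
import cProfile
import io
import pstats


def parse(lines: list[str]) -> list[list[str]]:
    return [line.split(",") for line in lines]


def slow_aggregate(rows: list[list[str]]) -> dict[str, int]:
    # Rescans every row for each distinct key: cost grows with keys x rows
    keys = {r[0] for r in rows}
    return {k: sum(int(r[1]) for r in rows if r[0] == k) for k in keys}


def pipeline(n: int) -> dict[str, int]:
    lines = [f"k{i % 50},{i}" for i in range(n)]
    return slow_aggregate(parse(lines))


profiler = cProfile.Profile()
profiler.enable()
pipeline(10_000)
profiler.disable()

buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer).sort_stats("cumulative")
stats.print_stats(10)  # the 10 entries with the highest cumulative time
report = buffer.getvalue()
print(report)
```

In a report like this, `slow_aggregate` should account for most of the time under `pipeline`, which points optimization effort at the aggregation step rather than at parsing. After a fix, profiling again confirms whether the bottleneck has moved.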

What are some best practices for identifying and addressing performance concerns?

Document the initial performance metrics thoroughly. Develop and run realistic test cases that simulate user scenarios. Concentrate on the hot spots where the code spends significant time, and prioritize optimization efforts there. Implement changes incrementally and test rigorously after each step to evaluate their impact on the system.

How can code reviews help in avoiding premature optimization?

Code reviews provide an opportunity for peer feedback on potential performance issues. Experienced developers can spot code patterns or assumptions that may lead to premature optimization. Reviewers should look at the overall architecture, data structures, and algorithms, as well as at specific code snippets that might genuinely require optimization.