This guide to designing scalable cloud applications provides a comprehensive overview of the essential concepts and strategies for building robust and adaptable cloud-based systems. From foundational principles to advanced architectural patterns, we delve into the intricacies of designing applications that can seamlessly handle growing demands and ensure consistent performance.

The guide covers critical aspects like choosing the right cloud platform, designing microservices architectures, implementing load balancing strategies, and managing data effectively. Security considerations and performance optimization techniques are also explored, enabling readers to build highly reliable and secure applications. The content concludes with case studies and real-world examples to provide practical insights into successful scalability implementations.

Introduction to Scalable Cloud Applications

Scalable cloud applications are designed to adapt and grow in response to changing demands and user needs. They leverage the elasticity and resource provisioning capabilities of cloud platforms to handle fluctuations in traffic, data volume, and user requests without performance degradation. This adaptability is critical for businesses looking to maintain optimal service levels while minimizing operational costs.

Core principles underpinning the design of scalable applications include modularity, decoupling, and automation. These principles allow for independent scaling of different components, enabling efficient resource allocation and optimized performance. Furthermore, robust monitoring and logging mechanisms are vital to ensure the health and performance of the application.

The cloud provides several key advantages for scalability. These include the ability to provision resources on demand, pay-as-you-go pricing models, and global reach. This enables businesses to scale their applications rapidly and cost-effectively, without upfront investment in hardware or infrastructure.

Architectural Patterns for Scalable Cloud Applications

Various architectural patterns can be employed to design scalable cloud applications. These patterns define how different components of the application interact and how they are deployed and scaled. Microservices architecture, for example, decomposes the application into smaller, independent services, allowing for greater agility and scalability. Serverless functions offer another approach, where code is executed in response to events without managing servers, facilitating cost optimization and scaling.

Furthermore, containerization with Docker, combined with container orchestration platforms such as Kubernetes, allows for consistent and portable deployments across different environments, simplifying scaling and management.

Key Benefits of Cloud Platforms for Scalability

Cloud platforms provide several benefits for designing scalable applications. The elasticity offered by cloud providers enables rapid scaling of resources to meet fluctuating demand. This eliminates the need for upfront infrastructure investment and allows for efficient resource utilization. Cloud providers typically offer robust monitoring and logging tools, which help track application performance and identify potential bottlenecks.

Furthermore, pay-as-you-go pricing models offer cost-effective scaling options. Businesses can scale their applications up or down based on actual needs, avoiding unnecessary costs.

Common Challenges in Designing Scalable Cloud Applications

Several challenges exist in designing scalable cloud applications. One key challenge is maintaining performance as the application scales. Carefully designed load balancing strategies are essential to distribute traffic evenly across available resources. Ensuring data consistency across different instances or replicas is another critical concern. Furthermore, securing a cloud application and ensuring data protection across multiple cloud regions requires robust security measures.

Comparison of Cloud Platforms

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Scalability Options | Extensive range of services and tools for scaling compute, storage, and networking resources. Provides autoscaling capabilities for automated scaling based on defined metrics. | Offers robust autoscaling features for various compute resources. Excellent support for scaling storage and networking. | Provides autoscaling options for compute resources. Offers a wide range of tools for managing and scaling storage solutions. |
| Global Reach | Extensive global infrastructure with data centers in numerous locations, enabling low-latency access for users worldwide. | Globally distributed infrastructure with data centers in diverse regions, providing excellent support for global applications. | Widely distributed infrastructure with data centers worldwide, enabling low-latency access for users in different regions. |
| Pricing Models | Offers various pricing models, including pay-as-you-go and reserved instances, enabling cost optimization based on usage patterns. | Provides a range of pricing options including pay-as-you-go and reserved instances, allowing for flexible cost management. | Provides pay-as-you-go and various other pricing models for optimized cost management based on resource usage. |

Different cloud platforms offer varying degrees of support for various use cases. A comprehensive assessment of scalability needs and existing infrastructure should guide the selection of the appropriate cloud platform.

Choosing the Right Cloud Platform

Selecting the appropriate cloud platform is crucial for building scalable applications. A well-chosen platform provides the necessary infrastructure, tools, and support to accommodate future growth and changing demands. Its scalability features, service models, and pricing structure must align with the application’s needs and the organization’s budget, and weighing these factors carefully up front helps ensure a smooth and cost-effective transition to the cloud.

This section details the key aspects to consider when selecting a cloud platform for building scalable applications.

Factors to Consider When Choosing a Cloud Platform

Several factors influence the optimal choice of cloud platform for scalable applications. Understanding these factors is essential for making informed decisions. These factors include the application’s specific needs, the organization’s budget, and the desired level of control over infrastructure.

- Application Requirements: The application’s specific workload characteristics, such as expected traffic volume, data storage needs, and processing power requirements, significantly impact the platform selection. Applications with fluctuating demand might require platforms offering automated scaling capabilities.

- Budgetary Constraints: Cloud platforms offer various pricing models, and understanding these models is essential to aligning the platform’s cost with the project’s budget. Options such as reserved instances (discounted in exchange for a usage commitment) or spot instances (discounted spare capacity that the provider can reclaim at short notice) can reduce costs for suitable workloads.

- Desired Level of Control: The level of control over infrastructure varies across different cloud service models. Organizations seeking more control over virtual machines, operating systems, and software configurations might opt for Infrastructure as a Service (IaaS) platforms.

- Security and Compliance: Security and compliance considerations are critical for all cloud deployments. The chosen platform should align with the organization’s security policies and regulatory requirements.

Cloud Service Models and Scalability

Different cloud service models offer varying degrees of scalability. Understanding the trade-offs between these models is essential for making the right choice.

- Infrastructure as a Service (IaaS): IaaS provides the most granular control over infrastructure. Scalability is achieved by provisioning and configuring resources as needed. This model allows for high customization but requires significant technical expertise.

- Platform as a Service (PaaS): PaaS offers a platform for developing, running, and managing applications without the need for managing the underlying infrastructure. Scalability is often handled automatically by the platform. This model is suitable for applications with less complex requirements.

- Software as a Service (SaaS): SaaS delivers software applications over the internet. Scalability is managed by the provider, which offers the least control over infrastructure but removes the need to deploy or operate the application yourself.

Importance of Robust Scalability Features

A platform with robust scalability features is essential for handling fluctuating workloads and future growth. These features allow applications to adapt to changes in demand without compromising performance.

- Automated Scaling: Platforms with automated scaling capabilities can dynamically adjust resources to meet changing demands, ensuring optimal performance and cost-effectiveness.

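- Horizontal Scaling: Scaling horizontally means adding more instances (servers, containers, or nodes) and spreading the workload across them, which allows applications to handle increasing workloads without performance degradation. How easily this can be done varies between platforms and services, making it a key point of comparison.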

- Vertical Scaling: Scaling vertically involves increasing the capacity of existing resources, for example by moving an instance to a size with more CPU or memory. This option is ultimately limited by the largest instance size available and may require a restart.

Platform-Specific Tools and Scalability

Platform-specific tools significantly impact scalability. Tools for monitoring, managing, and optimizing resources can enhance the overall scalability of applications.

- Monitoring Tools: Robust monitoring tools provide insights into application performance and resource utilization. These insights allow for proactive identification and resolution of potential bottlenecks.

- Management Tools: Platforms with comprehensive management tools streamline resource provisioning, scaling, and maintenance.

- Performance Optimization Tools: Tools designed to optimize application performance ensure efficient resource utilization and smooth scalability.

Migrating Existing Applications to the Cloud

Migrating existing applications to the cloud while maintaining scalability requires careful planning and execution. Strategies for a smooth migration include gradual migration and testing in a staging environment.

- Gradual Migration: Migrating applications in stages allows for thorough testing and validation of scalability before full deployment.

- Staging Environment: Setting up a staging environment enables testing the application’s scalability in a controlled environment before migrating to production.

Pricing Models for Scalable Cloud Services

Different cloud providers offer various pricing models for scalable cloud services. Understanding these models is critical for budgeting and cost optimization.

| Cloud Provider | Pricing Models | Description |
|---|---|---|
| Amazon Web Services (AWS) | On-demand, Reserved Instances, Spot Instances | Offers various pricing models to accommodate diverse needs and budgets. |
| Microsoft Azure | Pay-as-you-go, Reserved Virtual Machine Instances, Azure Hybrid Benefit | Provides a range of pricing options, including savings for hybrid environments that reuse existing on-premises licenses. |
| Google Cloud Platform (GCP) | On-demand, committed use discounts, sustained use discounts, Spot VMs | Offers discounts for committed or sustained compute usage plus lower-cost interruptible capacity; storage such as Persistent Disk is priced separately. |

Designing Microservices Architecture

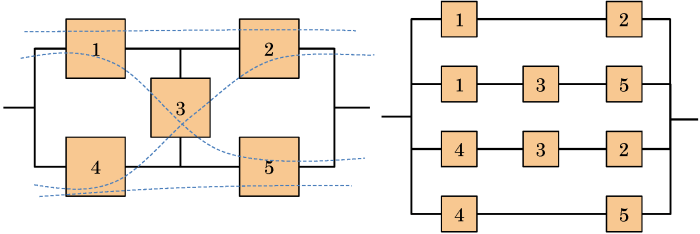

Microservices architecture has emerged as a powerful approach for building scalable and maintainable cloud applications. This architectural style decomposes an application into small, independent services, each responsible for a specific business function. This modularity fosters greater flexibility, enabling teams to work independently and deploy changes incrementally. It’s a particularly valuable strategy in cloud environments, where the ability to scale individual components is crucial for handling fluctuating workloads.

The core concept behind microservices is to treat each service as a self-contained unit, with its own data store and business logic. This allows for independent scaling and deployment of individual services, maximizing resource utilization and application resilience. The communication between these services is typically handled via lightweight protocols like REST APIs, promoting loose coupling and facilitating rapid iteration.

Microservices in Cloud Scalability

Microservices architecture aligns perfectly with the principles of cloud scalability. The independent nature of each service allows for scaling individual components up or down based on demand. For example, if a particular service experiences a surge in traffic, its corresponding instances can be easily increased, without affecting other parts of the application. Conversely, during periods of low demand, instances can be reduced to optimize resource consumption.

This adaptability is a key advantage over monolithic architectures, where scaling the entire application is often necessary, even if only a small part requires more resources.

Benefits of Microservices for Scalability

The benefits of microservices for building scalable applications are substantial. Firstly, independent scaling, as discussed, ensures that resources are allocated efficiently based on the specific needs of each service. Secondly, fault isolation is improved: if one service fails, the failure can be contained to that service, provided its callers handle errors gracefully (for example, with timeouts and fallbacks), so the overall application can remain operational. Thirdly, deployment becomes more agile, allowing for quicker release cycles and faster feedback loops.

Finally, technology diversity is enabled. Each microservice can be built using the most suitable technology stack for its specific function, promoting innovation and efficiency.

Designing Microservices for Different Applications

The design of microservices should align with the specific needs of the application. For example, an e-commerce application might have microservices for product catalog, order processing, payment gateway, and user management. A social media platform could have microservices for user accounts, content creation, notifications, and friend management. A cloud-based gaming platform might include microservices for user accounts, game servers, and matchmaking.

The critical aspect is to identify clear boundaries and responsibilities for each service, ensuring data consistency and efficient communication.

Service Discovery and Load Balancing

Implementing service discovery and load balancing is crucial for effective microservices operation. Service discovery mechanisms enable services to locate each other dynamically, facilitating communication without requiring hardcoded addresses. Load balancing distributes incoming requests across multiple instances of a service, ensuring high availability and preventing overload on any single instance. This often involves specialized tools and services in cloud environments.

Tools like Kubernetes provide comprehensive service discovery and load balancing features.
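The registry idea can be illustrated with a minimal in-memory sketch. `ServiceRegistry`, `register`, `deregister`, and `resolve` are hypothetical names chosen for illustration, not the API of Kubernetes or any other tool; a production system would rely on a platform mechanism (such as Kubernetes Services and cluster DNS) rather than a dictionary in one process. The sketch assumes instances announce themselves on startup and hands out addresses in round-robin order.

```python
class ServiceRegistry:
    """Hypothetical in-memory registry: service name -> live instance addresses."""

    def __init__(self):
        self._instances = {}  # service name -> list of "host:port" strings
        self._next = {}       # service name -> counter used for round-robin selection

    def register(self, service, address):
        """Called by an instance when it starts and is ready to receive traffic."""
        addresses = self._instances.setdefault(service, [])
        if address not in addresses:
            addresses.append(address)
        self._next.setdefault(service, 0)

    def deregister(self, service, address):
        """Called when an instance shuts down or is found to be unhealthy."""
        addresses = self._instances.get(service, [])
        if address in addresses:
            addresses.remove(address)

    def resolve(self, service):
        """Return one instance address for `service`, rotating through instances."""
        addresses = self._instances.get(service)
        if not addresses:
            raise LookupError(f"no instances registered for {service!r}")
        index = self._next[service] % len(addresses)
        self._next[service] = index + 1
        return addresses[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("orders", "10.0.0.5:8080")  # hypothetical addresses
    registry.register("orders", "10.0.0.6:8080")
    print(registry.resolve("orders"))  # 10.0.0.5:8080
    print(registry.resolve("orders"))  # 10.0.0.6:8080
```

Callers depend only on the service name ("orders"), so instances can be added or removed without changing any client configuration.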

Microservices vs. Monolithic Architecture (Scalability Comparison)

| Feature | Microservices | Monolithic |
|---|---|---|
| Scalability | Individual services scale independently based on their own demand. | The entire application must be scaled, even if only a small part needs more resources. |
| Fault Tolerance | A failure in one service can be isolated from the others, improving overall resilience. | A failure in one component can bring down the entire application. |
| Deployment | Faster and more agile release cycles due to independent deployments. | Deployment is often more complex and time-consuming. |
| Technology Diversity | Allows use of the best technology for each service. | Limited by the technology stack of the entire application. |
| Maintainability | Easier to maintain and update individual services. | More challenging to maintain and update large, complex codebases. |

Implementing Load Balancing Strategies

Load balancing is a critical component of designing scalable cloud applications. It distributes incoming network traffic across multiple servers, ensuring high availability and preventing overload on any single server. Effective load balancing is essential for handling fluctuating traffic volumes, accommodating growth, and maintaining consistent performance. Properly configured load balancers are vital for ensuring that applications can seamlessly manage an increasing user base without experiencing performance degradation.

Load balancing algorithms are fundamental to achieving optimal performance. By strategically distributing traffic, these algorithms ensure that all servers are utilized efficiently. This approach prevents bottlenecks and enhances the overall responsiveness of the application. A well-implemented load balancing strategy can significantly contribute to the resilience and scalability of a cloud application.

Different Load Balancing Techniques

Various load balancing techniques are available, each with its strengths and weaknesses. Understanding these techniques is crucial for selecting the appropriate solution for a given application. Common techniques include:

- Round Robin: This technique distributes incoming requests in a cyclical manner across available servers. It’s a simple and effective method for distributing traffic evenly, ensuring that each server gets a fair share of requests. This method is well-suited for applications with relatively uniform request patterns.

- Least Connections: This approach directs new requests to the server with the fewest active connections. It aims to distribute traffic based on the current workload of each server, dynamically adapting to changes in server utilization. This is particularly helpful for applications with varying request durations, where maintaining a balanced workload is crucial.

- Weighted Round Robin: This method extends the round-robin approach by assigning weights to different servers. Servers with higher weights receive a proportionally larger share of requests. This is valuable when servers have different processing capabilities or when specific servers need to handle more traffic than others.

- IP Hashing: This technique uses the client’s IP address to determine the server to which a request is routed. This ensures that requests from the same client are consistently handled by the same server, which is beneficial for maintaining session persistence. This is particularly helpful for applications requiring session management, like e-commerce websites.
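Each of the four techniques above fits in a few lines of code. This is an illustrative, single-process sketch under simplifying assumptions: servers are plain strings, the class names are hypothetical, and `LeastConnections` relies on the caller to report finished requests via `release`. Real load balancers implement these policies internally and expose them as configuration.

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through servers in a fixed order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        # min() returns the first minimum, so ties go to the earliest-listed server.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request finishes so the count reflects current load.
        self.active[server] -= 1


class WeightedRoundRobin:
    """Round robin in which a server with weight w appears w times per cycle."""

    def __init__(self, weights):
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self, client_ip=None):
        return next(self._cycle)


class IPHash:
    """Map each client IP to a fixed server so its session stays on one backend."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # A stable hash (SHA-256) keeps the mapping identical across processes.
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]
```

The weighted variant here simply repeats each server `w` times, which sends requests to a heavy server in bursts; production implementations typically interleave picks more smoothly. Likewise, `IPHash` uses modulo arithmetic, so adding or removing a server remaps most clients; consistent hashing is a common way to limit that disruption.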

Configuring Load Balancers for Optimal Performance

Proper configuration of load balancers is essential for maximizing performance and ensuring optimal traffic distribution. Factors to consider include:

- Health Checks: Implementing health checks ensures that only healthy servers receive traffic. This prevents requests from being directed to servers experiencing issues, thereby maintaining application availability. Regular health checks are critical for continuous performance monitoring.

- Monitoring and Logging: Monitoring load balancer performance is essential for identifying bottlenecks and optimizing traffic distribution. Logging provides valuable insights into traffic patterns and potential issues, enabling proactive troubleshooting and adjustments to the load balancing configuration.

- Scalability: Load balancers should be designed to scale with the application. The ability to add or remove servers as needed is essential for adapting to fluctuating traffic demands and ensuring the application remains responsive.
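Health-check-driven rotation can be sketched as follows, assuming some external prober calls `record` with each check result. The class names and the thresholds (hypothetical defaults of 3 consecutive failures to remove a server and 2 consecutive successes to restore it) are illustrative; requiring several results in a row keeps a single slow response from flapping a server in and out of the pool.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    address: str
    healthy: bool = True
    failures: int = 0   # consecutive failed checks
    successes: int = 0  # consecutive passed checks


class HealthCheckedPool:
    """Keeps only backends that pass health checks in the load balancer's rotation."""

    def __init__(self, addresses, unhealthy_threshold=3, healthy_threshold=2):
        self.backends = {a: Backend(a) for a in addresses}
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold

    def record(self, address, passed):
        """Apply one health-check result for `address`."""
        backend = self.backends[address]
        if passed:
            backend.successes += 1
            backend.failures = 0
            if not backend.healthy and backend.successes >= self.healthy_threshold:
                backend.healthy = True   # back into rotation
        else:
            backend.failures += 1
            backend.successes = 0
            if backend.healthy and backend.failures >= self.unhealthy_threshold:
                backend.healthy = False  # stop sending it traffic

    def in_rotation(self):
        """Addresses that should currently receive traffic."""
        return [b.address for b in self.backends.values() if b.healthy]
```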

Importance of Load Balancing in Handling Increased Traffic

Load balancing is crucial for handling increased traffic. It prevents any single server from being overwhelmed, thereby maintaining application responsiveness and preventing service disruptions. A well-configured load balancer can effectively manage traffic spikes and ensure that the application can handle a larger number of users or requests without compromising performance.

Load Balancing Algorithms and Their Impact on Scalability

Different load balancing algorithms have varying impacts on scalability. For example, round-robin distributes traffic evenly, while least connections adapts to fluctuating workloads. Weighted round-robin allows for prioritizing servers based on their capacity. The choice of algorithm depends on the specific application requirements and the anticipated traffic patterns.

Choosing the Right Load Balancer for Specific Application Needs

Selecting the appropriate load balancer depends on factors such as the application’s architecture, traffic patterns, and scalability requirements. Consider the specific needs of the application and the features offered by different load balancer providers. Carefully evaluating these factors helps to ensure that the load balancer chosen aligns with the application’s long-term goals.

Data Management for Scalability

Effective data management is crucial for the success of any scalable cloud application. Robust database design and selection, coupled with intelligent data sharding and caching strategies, directly impact application performance and maintainability. This section details critical aspects of designing scalable data systems for cloud environments.

Designing a scalable database system requires careful consideration of the anticipated volume and velocity of data, as well as the complexity of the queries. Choosing the right database technology, implementing appropriate sharding strategies, and employing effective caching mechanisms are all key to achieving and maintaining high performance and availability. Data consistency is paramount, requiring strategies that ensure data integrity throughout the system.

Database Technologies for Cloud Environments

Various database technologies are well-suited for cloud environments, each with its own strengths and weaknesses. Choosing the appropriate technology depends on the specific needs of the application, such as data structure, query patterns, and scalability requirements.

- Relational databases (SQL): Relational databases, like PostgreSQL and MySQL, excel at structured data and complex queries. Their ACID properties (Atomicity, Consistency, Isolation, Durability) guarantee data integrity, which is essential for many applications. Cloud providers offer managed services for these databases, simplifying deployment and maintenance.

- NoSQL databases: NoSQL databases, such as MongoDB and Cassandra, are designed for handling unstructured and semi-structured data. They are typically built to scale horizontally across many nodes, making them suitable for applications with rapidly growing datasets. The trade-off is often a less stringent data consistency model compared to relational databases.

- Cloud-native databases: Cloud providers offer specialized database services tailored to specific needs. These services often integrate seamlessly with other cloud resources, offering simplified management and improved performance. Examples include Amazon Aurora, Google Cloud Spanner, and Azure Cosmos DB.

Data Sharding and Partitioning Strategies

Sharding and partitioning are essential techniques for horizontally scaling databases. Sharding involves dividing the data across multiple database instances, while partitioning involves dividing a single database into smaller, more manageable units.

- Horizontal Sharding: Horizontal sharding distributes data across multiple servers. This approach enables the system to handle a large volume of data by distributing the load across multiple instances. A key challenge is ensuring data consistency and efficient query routing across the shards.

- Vertical Partitioning: Vertical partitioning involves separating tables or columns into different databases based on their usage patterns. This can improve query performance by reducing the size of the data accessed in a single query.

- Hash-based Sharding: Hash-based sharding uses a hash function to determine which shard a piece of data belongs to. This is a common approach due to its simplicity and efficiency for distributing data evenly.
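Hash-based sharding reduces to a stable hash followed by a modulo. The sketch below assumes string keys such as customer IDs and four hypothetical shard names. It uses SHA-256 from `hashlib` rather than Python's built-in `hash()`, whose output for strings changes between processes unless `PYTHONHASHSEED` is fixed, which would send the same key to different shards.

```python
import hashlib
from collections import Counter


def shard_for(key: str, num_shards: int) -> int:
    """Map a key (e.g. a customer ID) to a shard index in [0, num_shards)."""
    # Stable hash: the same key always lands on the same shard, in any process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Hypothetical shard identifiers (in practice, connection strings or hostnames).
SHARDS = ["db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"]


def route(customer_id: str) -> str:
    """Return the shard that owns this customer's rows."""
    return SHARDS[shard_for(customer_id, len(SHARDS))]


if __name__ == "__main__":
    counts = Counter(route(f"customer-{i}") for i in range(10_000))
    print(counts)  # counts should be close to even across the four shards
```

Because the shard index depends on the number of shards, adding a shard with this scheme remaps most keys; consistent hashing or a lookup table of key ranges are common ways to make resharding less disruptive.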

Caching Mechanisms for Performance Improvement

Caching mechanisms store frequently accessed data in memory to reduce database load and improve response times.

- In-memory caching: In-memory caching stores frequently accessed data in RAM, allowing for very fast retrieval. Redis and Memcached are popular choices for in-memory caching.

- Content Delivery Networks (CDNs): CDNs store static content, such as images and videos, closer to users, significantly reducing latency. This is particularly effective for applications with geographically dispersed users.
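In-memory caches are commonly used in a cache-aside pattern: read from the cache, fall back to the database on a miss, then populate the cache with an expiry time. The sketch below substitutes a tiny in-process `TTLCache` for Redis or Memcached so it runs without a server; `get_product` and the `load_from_db` callable are hypothetical stand-ins for application code, and the `clock` parameter exists only so expiry can be tested deterministically.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a cache such as Redis or Memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)


def get_product(product_id, cache, load_from_db):
    """Cache-aside read: try the cache, fall back to the database, then fill the cache."""
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    product = load_from_db(product_id)  # hypothetical database call
    cache.set(product_id, product)
    return product
```

The TTL bounds how stale a cached entry can become; a shorter TTL reduces staleness at the cost of more database reads.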

Data Consistency in Scalable Systems

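Ensuring data consistency across a distributed system is critical. Different database technologies offer varying levels of consistency guarantees, ranging from strong consistency (every read sees the latest committed write) to eventual consistency (replicas converge to the same state over time).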

“ACID properties are essential for maintaining data integrity in relational databases.”

Database Suitability for Application Scenarios

| Database Type | Suitable Application Scenarios |
|---|---|
| Relational (SQL) | Applications requiring strong data consistency, complex queries, and well-defined data structures. Examples include e-commerce platforms, financial systems, and enterprise resource planning (ERP) applications. |
| NoSQL (Document, Key-Value, Graph) | Applications with unstructured or semi-structured data, high scalability requirements, and high read/write volume. Examples include social media platforms, content management systems, and IoT applications. |
| Cloud-native | Applications requiring high availability, low latency, and seamless integration with other cloud services. Examples include microservice architectures, real-time analytics, and global applications. |

Security Considerations for Scalable Applications

Ensuring the security of scalable cloud applications is paramount. Robust security measures are not just desirable but crucial for maintaining data integrity, user trust, and overall system reliability. Compromised security can lead to significant financial losses, reputational damage, and legal repercussions. This section delves into critical security vulnerabilities, best practices for securing cloud infrastructure, and strategies for implementing robust security measures throughout the application lifecycle.

Scalable cloud applications, by their nature, often involve intricate interactions across multiple services and environments. This complexity introduces unique security challenges that need careful consideration. Addressing these challenges proactively through well-defined security protocols and best practices is vital for preventing and mitigating potential vulnerabilities.

Identifying Security Vulnerabilities in Scalable Cloud Applications

Security vulnerabilities in scalable cloud applications can stem from various sources, including misconfigurations, inadequate access controls, and vulnerabilities in third-party components. These vulnerabilities can be exploited by malicious actors to gain unauthorized access, manipulate data, or disrupt services. Understanding potential vulnerabilities is crucial for proactively mitigating risks. Common vulnerabilities include insecure APIs, weak passwords, and insufficient input validation.

Best Practices for Securing Cloud Infrastructure

Implementing strong security measures across the cloud infrastructure is essential for securing scalable applications. These measures include employing strong encryption for data at rest and in transit, utilizing secure network configurations, and regularly updating and patching all software components. Using Identity and Access Management (IAM) solutions to control user access is also critical.

Strategies for Implementing Access Control and Authorization

Robust access control and authorization strategies are vital for securing scalable applications. This involves implementing granular permissions to restrict access to sensitive data and resources based on user roles and responsibilities. Multi-factor authentication (MFA) adds an extra layer of security by requiring multiple forms of verification before granting access. Regularly reviewing and updating access controls ensures the system remains secure as the application evolves.
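Granular, role-based permissions with an MFA requirement for sensitive actions can be sketched as a deny-by-default check. The role names, permission strings, and `User` type below are hypothetical; in practice these decisions are usually delegated to the cloud provider's IAM service or an identity provider rather than a hard-coded dictionary.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; real systems load this from an IAM service.
ROLE_PERMISSIONS = {
    "viewer": {"orders:read"},
    "support": {"orders:read", "customers:read"},
    "admin": {"orders:read", "orders:write", "customers:read", "customers:write"},
}

# Permissions that additionally require a completed MFA challenge.
MFA_REQUIRED = {"orders:write", "customers:write"}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset
    mfa_verified: bool = False


def is_authorized(user: User, permission: str) -> bool:
    """Deny by default; allow only if a role grants the permission (plus MFA when required)."""
    granted = any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
    if not granted:
        return False
    if permission in MFA_REQUIRED and not user.mfa_verified:
        return False
    return True
```

Unknown roles grant nothing, so a typo in a role name fails closed rather than open.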

Importance of Encryption and Data Protection in Scalable Systems

Encryption plays a critical role in securing data in scalable systems. Data encryption, both at rest and in transit, protects sensitive information from unauthorized access. Data loss prevention (DLP) policies and procedures further safeguard data integrity and prevent sensitive data from leaving the system without proper authorization. Regular audits and penetration testing help identify and address potential vulnerabilities in the encryption process.

Implementing Security Measures Throughout the Application Lifecycle

Security should be integrated into every phase of the application lifecycle. From initial design and development to deployment and maintenance, security measures must be implemented consistently. This includes conducting security audits, performing penetration testing, and ensuring compliance with relevant security standards and regulations. Regular security assessments help identify vulnerabilities and ensure ongoing security throughout the application’s lifespan.

Security Best Practices for Various Cloud Services

| Cloud Service | Security Best Practices |
|---|---|
| Compute Instances | Employ strong passwords, use MFA, configure firewalls, regularly update software. |
| Storage Services | Enable encryption at rest and in transit, implement access controls, monitor storage activity. |
| Networking | Configure secure virtual networks, utilize VPNs, apply network segmentation, implement intrusion detection systems. |
| Databases | Use strong passwords, implement access controls, regularly back up data, enable encryption. |
| API Gateway | Implement rate limiting, validate inputs, authorize requests, encrypt API traffic. |
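Rate limiting at an API gateway is commonly implemented with a token bucket, which permits short bursts while capping the sustained request rate. The sketch below is a single-process illustration with hypothetical names and limits (5 requests per second, bursts of up to 10); a gateway spread across many nodes would need shared state or its own built-in limiter.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests


buckets = {}  # hypothetical per-client state, keyed by API key


def allow_request(api_key, rate=5, capacity=10):
    """One bucket per client, so a noisy client exhausts only its own budget."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate, capacity)
    return buckets[api_key].allow()
```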

Monitoring and Performance Optimization

Effective monitoring and performance optimization are crucial for the success of scalable cloud applications. These strategies ensure applications remain responsive, efficient, and reliable under varying workloads. Without robust monitoring, potential bottlenecks and performance issues can go unnoticed, leading to degraded user experience and increased operational costs. Proactive monitoring and optimization prevent these problems, allowing for quick identification and resolution of performance issues.

Continuous monitoring and optimization are essential to maintain application performance, availability, and scalability. By implementing robust monitoring tools and techniques, teams can identify potential issues early, implement corrective actions, and avoid catastrophic failures. This proactive approach ensures the application continues to function seamlessly under fluctuating workloads.

Importance of Monitoring Scalable Applications

Monitoring scalable applications is vital for maintaining optimal performance and ensuring user satisfaction. It allows for the detection of performance issues before they impact users, enabling prompt resolution and preventing service disruptions. Real-time insights into application behavior, resource utilization, and user traffic patterns provide crucial data for proactive performance management and scaling strategies.

Different Monitoring Tools and Techniques

Various monitoring tools and techniques are available to support scalable applications. These tools range from simple logging systems to sophisticated performance management platforms. Comprehensive monitoring often involves a combination of approaches, leveraging different tools for different aspects of the application’s operation.

Identifying Performance Bottlenecks

Identifying performance bottlenecks is a critical aspect of optimization. Performance bottlenecks can stem from various sources, including database queries, network latency, or inefficient code. Careful analysis of application logs, metrics, and user behavior provides valuable insights into potential issues. Profilers can pinpoint the code segments that consume excessive resources.
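Python's standard-library `cProfile` and `pstats` modules are one example of such a profiler. In the sketch below, `slow_lookup` and `fast_lookup` are hypothetical functions that return the same result; the `profile` helper runs a function under the profiler and returns a report sorted by cumulative time, and the fix (a set instead of a list for membership checks) illustrates the kind of inefficient code a profiler tends to surface.

```python
import cProfile
import io
import pstats


def slow_lookup(items, targets):
    # Deliberately inefficient: `in` on a list scans it, O(n) per lookup.
    return [t for t in targets if t in items]


def fast_lookup(items, targets):
    # Same result using a set, which gives O(1) average-time membership checks.
    item_set = set(items)
    return [t for t in targets if t in item_set]


def profile(func, *args):
    """Run func under cProfile; return (result, report of the top 5 entries by cumulative time)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(5)
    return result, out.getvalue()


if __name__ == "__main__":
    items = list(range(20_000))
    targets = list(range(0, 40_000, 7))
    _, report = profile(slow_lookup, items, targets)
    print(report)
```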

Methods for Optimizing Application Performance

Optimizing application performance involves a multifaceted approach. Efficient code, optimized database queries, and strategic caching can significantly improve performance. Careful code reviews and profiling identify areas for improvement. Load balancing distributes traffic effectively, preventing overload on specific components.
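Caching is the easiest of these techniques to show in a few lines. The sketch below memoizes a simulated database lookup with `functools.lru_cache`, so repeated requests for the same product skip the slow call. `get_product` and the `CALLS` counter are hypothetical.

```python
import functools
import time

CALLS = {"db": 0}  # counts simulated database round-trips


@functools.lru_cache(maxsize=1024)
def get_product(product_id: int) -> tuple:
    """Hypothetical slow lookup; results are memoized in process memory."""
    CALLS["db"] += 1
    time.sleep(0.005)  # stand-in for query latency
    # Return an immutable value so cached results cannot be mutated by callers.
    return (product_id, f"Product {product_id}")


if __name__ == "__main__":
    for _ in range(100):
        get_product(42)
    print(CALLS["db"])               # 1: only the first call reached the "database"
    print(get_product.cache_info())  # hits=99, misses=1
```

An in-process cache like this is not shared between instances and has no expiry, so horizontally scaled services usually add a shared cache with time-to-live settings when the underlying data can change.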

Strategies for Proactive Performance Management

Proactive performance management is critical for maintaining a high-quality user experience. Regular performance testing under various load conditions is essential. Monitoring key performance indicators (KPIs) allows for early detection of potential issues. Alerting systems provide immediate notification of deviations from expected performance levels, facilitating rapid response.
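A KPI-based alert can be as simple as comparing a latency percentile against a target. The sketch below computes a nearest-rank 95th percentile over a window of request latencies and returns an alert message when it exceeds a threshold. The `LatencyAlert` name and the 300 ms threshold are assumptions for illustration, not recommendations from any specific tool.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list, with 0 < pct <= 100."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need a non-empty list and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


@dataclass
class LatencyAlert:
    threshold_ms: float  # KPI target, e.g. "p95 latency below 300 ms" (assumed)
    pct: float = 95.0

    def evaluate(self, latencies_ms: List[float]) -> Optional[str]:
        """Return an alert message if the percentile breaches the threshold."""
        observed = percentile(latencies_ms, self.pct)
        if observed > self.threshold_ms:
            return (f"ALERT: p{self.pct:g} latency {observed:.0f} ms "
                    f"exceeds {self.threshold_ms:.0f} ms")
        return None


if __name__ == "__main__":
    window = [120.0] * 18 + [900.0, 950.0]  # hypothetical last 20 requests
    print(LatencyAlert(threshold_ms=300).evaluate(window))
    # ALERT: p95 latency 900 ms exceeds 300 ms
```

Alerting on a high percentile rather than the mean catches slow tails that affect a minority of users but would be hidden in an average.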

Monitoring Tools and Their Features

| Monitoring Tool | Key Features |
|---|---|
| CloudWatch (AWS) | Comprehensive monitoring for AWS resources; metrics, logs, and alarms; detailed visualization and analysis; scalable dashboards; integration with other AWS services. |
| Datadog | Broad range of monitoring tools, including infrastructure, applications, and databases; real-time insights and alerts; customizable dashboards; strong integration capabilities. |
| Prometheus | Open-source monitoring system; powerful query language for data analysis; flexible alerting; highly customizable; integrates with various tools and services. |
| New Relic | Application performance monitoring; deep insights into application behavior; performance metrics; user experience monitoring; integrates with various cloud platforms. |
| Grafana | Open-source visualization tool; integrates with various data sources (including Prometheus); creates dashboards for monitoring; highly customizable. |

Auto-Scaling Strategies

Auto-scaling is a crucial aspect of designing scalable cloud applications. It dynamically adjusts the resources allocated to an application based on demand, balancing performance against cost. This flexibility is essential for handling fluctuating workloads without creating performance bottlenecks or paying for idle resources. Effective auto-scaling lets applications absorb unpredictable traffic spikes and shrink during periods of low activity, maintaining responsiveness, preventing service disruptions, and preserving a positive user experience.

Understanding Auto-Scaling

Auto-scaling in cloud computing automatically adjusts the number of computing resources, such as virtual machines, based on demand. This dynamic adjustment allows applications to scale up when the workload increases and scale down when the workload decreases. This approach optimizes resource utilization and minimizes costs by preventing over-provisioning or under-provisioning. By responding to changing resource requirements, applications can maintain optimal performance and reliability.
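Many auto-scalers implement this adjustment as proportional "target tracking". For example, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule to instance counts; the clamping bounds and parameter names are illustrative assumptions, not any provider's API.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Proportional target tracking: ceil(current * metric / target), clamped."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_size, min(max_size, raw))


if __name__ == "__main__":
    # 4 instances averaging 90% CPU against a 60% target -> ceil(6.0) = 6
    print(desired_capacity(4, metric=90, target=60))  # 6
    # 6 instances averaging 20% CPU -> ceil(2.0) = 2 (scale in)
    print(desired_capacity(6, metric=20, target=60))  # 2
```

Production autoscalers typically add a tolerance band and stabilization or cooldown periods on top of this rule so that small metric fluctuations do not cause constant scaling.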

Common Auto-Scaling Strategies

Various auto-scaling strategies cater to diverse application needs. These strategies provide different levels of control and flexibility for adjusting resources.

- Based on CPU utilization: This strategy scales resources based on the average CPU utilization across the application's instances. When utilization rises above a predefined upper threshold, more instances are launched; when it drops below a lower threshold, instances are terminated. This strategy is simple to implement and often performs well for applications with predictable, CPU-bound workloads.

- Based on network traffic: This approach automatically scales resources based on the volume of network traffic directed towards the application. High traffic volumes trigger the launch of new instances, ensuring the application can handle the load effectively. Conversely, low traffic levels lead to the termination of instances, reducing unnecessary costs. This strategy is particularly useful for applications with highly variable traffic patterns.

- Based on custom metrics: This strategy allows scaling based on custom metrics relevant to the application. For instance, a specific application metric, such as the number of requests processed per second, could trigger the scaling process. This approach provides greater granularity and adaptability, accommodating the specific requirements of various applications.

Configuring Auto-Scaling Policies

Properly configuring auto-scaling policies is vital for effective resource management. Policies dictate the conditions under which scaling actions occur.

- Defining scaling triggers: Defining the specific conditions that initiate scaling is critical. These triggers can be based on CPU utilization, network traffic, or custom metrics. Accurate and appropriate thresholds are essential for optimal scaling behavior.

- Setting scaling actions: The scaling actions specify how the system should respond to the defined triggers. These actions might involve launching or terminating instances, adjusting instance types, or performing other actions to adjust resources.

- Defining scaling limits: Setting limits on the maximum and minimum number of instances helps prevent excessive scaling or resource exhaustion. These limits ensure that the scaling process remains controlled and prevents unintended consequences.
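The three configuration elements above (triggers, actions, and limits) can be modeled together in a small policy object. The sketch below is hypothetical and provider-neutral: thresholds on a metric act as triggers, adding or removing a fixed step of instances is the action, and minimum and maximum instance counts are the limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepScalingPolicy:
    """Hypothetical provider-neutral policy: triggers, action, and limits."""
    scale_out_above: float  # trigger: metric value above which capacity is added
    scale_in_below: float   # trigger: metric value below which capacity is removed
    step: int               # action: instances added or removed per scaling event
    min_instances: int      # limit: floor on the instance count
    max_instances: int      # limit: ceiling on the instance count

    def __post_init__(self):
        if self.scale_in_below >= self.scale_out_above:
            raise ValueError("scale_in_below must be lower than scale_out_above")
        if self.step < 1 or not 0 <= self.min_instances <= self.max_instances:
            raise ValueError("need step >= 1 and 0 <= min_instances <= max_instances")

    def next_capacity(self, current: int, metric: float) -> int:
        if metric > self.scale_out_above:
            proposed = current + self.step
        elif metric < self.scale_in_below:
            proposed = current - self.step
        else:
            proposed = current  # inside the band between thresholds: no action
        return max(self.min_instances, min(self.max_instances, proposed))


if __name__ == "__main__":
    policy = StepScalingPolicy(scale_out_above=70, scale_in_below=30,
                               step=2, min_instances=2, max_instances=10)
    print(policy.next_capacity(4, metric=85))  # 6
    print(policy.next_capacity(3, metric=10))  # 2 (clamped to the minimum)
```

Keeping a gap between the scale-out and scale-in thresholds leaves a band in which no action is taken, which helps prevent the group from oscillating.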

Cost Optimization with Auto-Scaling

Auto-scaling reduces costs by preventing over-provisioning. During periods of low demand, instances are scaled in, so the resources consumed track the actual workload rather than the peak. Instead of paying for peak capacity around the clock, you pay mainly for the capacity the workload requires at any given time.
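A worked example makes the savings concrete. The figures below are entirely hypothetical: a day split into six 4-hour blocks with varying instance demand, priced at an assumed $0.10 per instance-hour. Provisioning statically for the peak (10 instances all day) uses 10 × 24 = 240 instance-hours, while capacity that tracks demand uses (2 + 2 + 6 + 10 + 8 + 4) × 4 = 128 instance-hours, roughly 47% less.

```python
from typing import Dict, List


def compare_costs(demand: List[int], hours_per_block: float,
                  price_per_instance_hour: float) -> Dict[str, float]:
    """Compare peak-sized static capacity with capacity that tracks demand."""
    static_hours = max(demand) * hours_per_block * len(demand)  # peak capacity all day
    scaled_hours = sum(demand) * hours_per_block                # capacity follows demand
    return {
        "static_cost": static_hours * price_per_instance_hour,
        "scaled_cost": scaled_hours * price_per_instance_hour,
        "savings_pct": 100 * (1 - scaled_hours / static_hours),
    }


if __name__ == "__main__":
    # Hypothetical instances needed in each 4-hour block of one day.
    demand = [2, 2, 6, 10, 8, 4]
    result = compare_costs(demand, hours_per_block=4, price_per_instance_hour=0.10)
    print(f"static ${result['static_cost']:.2f}, scaled ${result['scaled_cost']:.2f}, "
          f"savings {result['savings_pct']:.1f}%")
    # static $24.00, scaled $12.80, savings 46.7%
```

The comparison is idealized: it ignores scaling lag, minimum instance counts, and billing granularity, so real savings can differ.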

Implementing Auto-Scaling in Cloud Platforms

Different cloud platforms offer varying approaches to auto-scaling. Understanding these nuances is essential for efficient implementation.

- Amazon Web Services (AWS): AWS offers Auto Scaling, a service that allows users to automatically adjust the capacity of their EC2 instances based on predefined policies. It provides various scaling strategies, including scaling based on CPU utilization, load balancer metrics, and custom metrics.
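As a hedged illustration of the AWS option, the sketch below builds the keyword arguments for a target-tracking policy in the shape accepted by the `put_scaling_policy` method of boto3's `autoscaling` client. It only constructs the request, so it runs without credentials; the group name `web-asg` and the 50% CPU target are hypothetical, and the commented-out calls show where the request would be sent.

```python
from typing import Optional

# Subset of predefined metric types accepted by EC2 Auto Scaling target tracking.
PREDEFINED_METRICS = {"ASGAverageCPUUtilization", "ASGAverageNetworkIn", "ASGAverageNetworkOut"}


def target_tracking_request(group_name: str, metric_type: str, target_value: float,
                            policy_name: Optional[str] = None) -> dict:
    """Build kwargs for autoscaling put_scaling_policy (target tracking)."""
    if metric_type not in PREDEFINED_METRICS:
        raise ValueError(f"unsupported metric type: {metric_type}")
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": policy_name or f"{group_name}-{metric_type}-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {"PredefinedMetricType": metric_type},
            "TargetValue": float(target_value),
        },
    }


if __name__ == "__main__":
    request = target_tracking_request("web-asg", "ASGAverageCPUUtilization", 50)
    print(request)
    # Applying it requires boto3, AWS credentials, and an existing group:
    # import boto3
    # client = boto3.client("autoscaling")
    # client.update_auto_scaling_group(AutoScalingGroupName="web-asg", MinSize=2, MaxSize=10)
    # client.put_scaling_policy(**request)
```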

- Microsoft Azure: Azure offers similar capabilities through Azure Monitor autoscale, which applies to resources such as Virtual Machine Scale Sets and App Service plans. It supports scaling on metrics like CPU utilization and request rates, offering robust and flexible scaling options.

- Google Cloud Platform (GCP): GCP provides autoscaling for Compute Engine managed instance groups and for Google Kubernetes Engine (GKE) workloads. It supports scaling on numerous metrics, including CPU utilization, load-balancing request rate, and custom metrics.

Comparison of Auto-Scaling Options

The following table summarizes the auto-scaling options for different cloud platforms.

| Cloud Platform | Auto-Scaling Service | Key Features |
|---|---|---|
| AWS | Amazon EC2 Auto Scaling | Flexible scaling strategies, wide range of metrics, robust service |
| Azure | Azure Monitor autoscale | Integration with other Azure services, diverse scaling triggers |
| GCP | Compute Engine autoscaler; GKE autoscaling | Integration with various GCP services, custom metric support |

Testing and Deployment Strategies

Effective testing and deployment are critical for successful scalable cloud application development. They ensure that applications function as intended in the cloud environment, meet performance requirements, and can be reliably deployed across different platforms and configurations. Robust testing strategies also reduce the risk of unforeseen issues during production deployment.

Testing Methodologies for Scalable Cloud Applications

Comprehensive testing is essential to validate the functionality, performance, and reliability of scalable cloud applications. Different testing methodologies are employed to achieve this. Unit testing, for instance, verifies the individual components of the application, while integration testing ensures seamless interaction between these components. System testing assesses the entire application’s functionality, and user acceptance testing (UAT) verifies the application meets user requirements.

Performance testing, crucial for scalable applications, simulates real-world loads to identify potential bottlenecks and optimize performance under stress. Security testing validates the application’s resistance to various security threats and vulnerabilities. Load testing is a vital aspect, simulating expected user loads to ensure the application handles the volume without performance degradation.
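A very small load test can be written with a thread pool: it issues a fixed number of requests at a chosen concurrency and reports throughput and latency. In the sketch below, `simulated_endpoint` is a hypothetical stand-in for a real HTTP call; dedicated tools such as JMeter, Locust, or k6 are better suited to realistic tests.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def simulated_endpoint() -> None:
    time.sleep(0.01)  # hypothetical stand-in for an HTTP request to the system under test


def run_load_test(target: Callable[[], None], total_requests: int,
                  concurrency: int) -> Dict[str, float]:
    """Send total_requests calls to target from `concurrency` worker threads."""
    def timed_call(_: int) -> float:
        start = time.perf_counter()
        target()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    wall = time.perf_counter() - wall_start
    return {
        "requests": total_requests,
        "throughput_rps": total_requests / wall,
        "mean_ms": statistics.mean(latencies) * 1000,
        "max_ms": max(latencies) * 1000,
    }


if __name__ == "__main__":
    report = run_load_test(simulated_endpoint, total_requests=200, concurrency=20)
    print({k: round(v, 1) for k, v in report.items()})
```

Running the same test at increasing concurrency levels and watching where latency starts to climb is a simple way to locate the point at which the system saturates.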

Different Deployment Strategies for Cloud Applications

Various deployment strategies cater to the diverse needs of cloud applications. Blue/green deployments involve deploying a new version (green) alongside the existing version (blue) and then switching traffic to the new version. This approach minimizes downtime and allows for rollback if issues arise. Canary deployments gradually introduce a new version to a small subset of users, allowing for early identification of problems and gradual scaling of the deployment.
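Canary routing is often implemented by hashing a stable user identifier into buckets, so each user consistently sees the same version while the canary share is raised step by step. The sketch below assumes that approach; `route_version` and the percentages are illustrative, not a particular load balancer's feature.

```python
import hashlib


def route_version(user_id: str, canary_percent: float) -> str:
    """Deterministically route about canary_percent% of users to the canary."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # stable bucket in 0..9999
    # Raising canary_percent only adds buckets, so users already on the canary stay there.
    return "canary" if bucket < canary_percent * 100 else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in (1, 5, 25):
        share = sum(route_version(u, pct) == "canary" for u in users) / len(users)
        print(f"{pct}% target -> {share:.1%} of users on canary")
```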

Rolling deployments replace the old version instance by instance (or in small batches), so the service keeps running throughout the update. The right strategy depends on the application's requirements and the team's tolerance for risk.
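The rolling pattern can be sketched as a loop over instance batches with a health gate after each batch. The `upgrade` and `healthy` callbacks are hypothetical hooks standing in for whatever a given platform uses to replace an instance and check it.

```python
from typing import Callable, List


def rolling_deploy(instances: List[str], batch_size: int,
                   upgrade: Callable[[str], None],
                   healthy: Callable[[str], bool]) -> List[str]:
    """Upgrade instances batch by batch, halting if a batch fails its health check."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    upgraded: List[str] = []
    for start in range(0, len(instances), batch_size):
        batch = instances[start:start + batch_size]
        for name in batch:
            upgrade(name)  # hypothetical hook: drain, replace with the new version, restart
        if not all(healthy(name) for name in batch):
            # Later batches are never touched, so they keep serving the old version.
            raise RuntimeError(f"batch {batch} failed health checks; rollout halted")
        upgraded.extend(batch)
    return upgraded


if __name__ == "__main__":
    fleet = [f"web-{i}" for i in range(6)]
    done = rolling_deploy(fleet, batch_size=2,
                          upgrade=lambda n: print("upgrading", n),
                          healthy=lambda n: True)
    print(done)
```

Smaller batches reduce the capacity taken out of service at any moment but lengthen the rollout.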

Importance of Continuous Integration and Continuous Delivery (CI/CD)

Continuous Integration and Continuous Delivery (CI/CD) pipelines are essential for streamlining the development and deployment process in cloud environments. CI/CD automates the building, testing, and deployment of applications, enabling faster release cycles and reducing errors. Frequent integration and testing help catch issues early in the development process, minimizing the impact of later problems. Automated testing and deployment ensure consistent quality and minimize manual intervention, reducing human error.

Best Practices for Automated Testing in Cloud Environments

Automated testing is crucial for scalable cloud applications. Test automation frameworks save time and resources, and integrating them with CI/CD pipelines ensures tests run automatically after every code change. Cloud-based testing services let teams scale testing resources on demand and simulate real-world conditions more closely. Comprehensive test coverage across functionality, performance, security, and load is a best practice.
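As a minimal example of a test that a CI/CD pipeline could run on every change, the sketch below uses Python's built-in `unittest` to check a hypothetical `clamp_capacity` helper. Saved as, for example, `test_capacity.py` (a hypothetical file name), it can be run with `python -m unittest test_capacity`.

```python
import unittest


def clamp_capacity(proposed: int, min_size: int, max_size: int) -> int:
    """Function under test: keep a proposed instance count within scaling limits."""
    if min_size > max_size:
        raise ValueError("min_size must not exceed max_size")
    return max(min_size, min(max_size, proposed))


class ClampCapacityTest(unittest.TestCase):
    def test_within_limits_is_unchanged(self):
        self.assertEqual(clamp_capacity(5, 2, 10), 5)

    def test_clamps_to_bounds(self):
        self.assertEqual(clamp_capacity(0, 2, 10), 2)
        self.assertEqual(clamp_capacity(50, 2, 10), 10)

    def test_rejects_invalid_limits(self):
        with self.assertRaises(ValueError):
            clamp_capacity(5, 10, 2)
```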

Methods for Testing and Deploying Scalable Applications in Different Cloud Environments

Different cloud providers offer their own services and tools for testing and deployment. For example, AWS offers Elastic Beanstalk for deploying applications, while Azure provides Azure DevOps for CI/CD pipelines. These tools can be integrated with testing frameworks to automate testing. Testing in a staging environment that mirrors production is a crucial step, and testing and deployment procedures should be well documented and repeatable across different cloud platforms.

Comparison of Deployment Strategies

| Deployment Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Blue/Green | Deploy the new version alongside the existing one, then switch traffic. | Minimal downtime; fast rollback. | Requires capacity to run both versions at once. |
| Canary | Gradually introduce the new version to a small subset of users. | Early problem detection; gradual rollout. | Traffic distribution can be complex to manage. |
| Rolling | Replace instances with the new version sequentially or in batches. | Continuous operation; less downtime. | Old and new versions run side by side during the rollout; rollback is slower. |

Case Studies and Real-World Examples

Understanding how scalability is applied in practice is crucial. Real-world case studies provide valuable insight into how companies have designed and implemented scalable solutions and demonstrate the tangible benefits of these strategies. They show how well-architected cloud applications adapt to fluctuating demand and support sustained growth. Successful cloud application design often involves a careful balance of technical choices and strategic business considerations.

By examining how various companies have addressed scalability challenges, we can identify best practices and avoid common pitfalls. These case studies illuminate how scalability can drive business objectives, allowing for increased efficiency, reduced costs, and a stronger competitive position.

Successful Cloud Application Design for Scalability

Numerous companies have demonstrated the power of scalable cloud applications to fuel growth and maintain efficiency. A prime example is Netflix, which utilizes a microservices architecture and sophisticated load balancing techniques to handle massive traffic fluctuations during peak viewing periods. Their architecture enables rapid scaling of resources, ensuring consistent service quality for millions of users globally.

Case Studies of Companies Leveraging Scalability

Amazon Web Services (AWS) is itself built on scalability principles. Its cloud platform allows businesses of all sizes to deploy and scale applications easily, demonstrating the impact of a scalable cloud foundation. Spotify is another prominent example, relying on a highly scalable architecture to handle massive streaming demand; its success highlights the role of load balancing and distributed caching in serving a large volume of user requests.

Lessons Learned from Case Studies

Key lessons from these case studies highlight the importance of careful planning and adaptability. Understanding user traffic patterns and anticipating future growth are crucial in designing scalable systems. Furthermore, choosing the right cloud platform and adopting a microservices architecture can significantly improve scalability.

Role of Scalability in Achieving Business Goals

Scalability plays a critical role in achieving various business goals. For instance, it allows businesses to handle increased demand without experiencing service disruptions. This enables sustained growth and improves customer satisfaction. Scalable applications can also lead to cost optimization by dynamically adjusting resources based on real-time needs.

How Different Applications Utilize Approaches to Improve Scalability

Different applications utilize various approaches to improve scalability. Web applications, for instance, often leverage load balancing to distribute traffic across multiple servers, ensuring responsiveness and preventing overload. Streaming services frequently utilize caching strategies to store frequently accessed data, reducing latency and improving performance.
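A round-robin balancer with basic health awareness illustrates the load-balancing idea. The sketch below is a hypothetical in-process model; the backend names are placeholders, and managed cloud load balancers provide this behavior (and much more) as a service.

```python
import itertools
from typing import Iterable, Set


class RoundRobinBalancer:
    """Cycle requests across backends, skipping ones marked unhealthy."""

    def __init__(self, backends: Iterable[str]):
        self.backends = list(backends)
        if not self.backends:
            raise ValueError("at least one backend is required")
        self.unhealthy: Set[str] = set()
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        self.unhealthy.add(backend)  # e.g. after a failed health check

    def mark_up(self, backend: str) -> None:
        self.unhealthy.discard(backend)

    def next_backend(self) -> str:
        # Try at most one full pass over the pool before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate not in self.unhealthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    lb.mark_down("app-2")
    print([lb.next_backend() for _ in range(4)])
```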

Table Illustrating Use Cases and Applications of Scalability

| Application Type | Scalability Approach | Benefits |
|---|---|---|
| E-commerce | Load balancing, database sharding | Handles high transaction volumes, ensures consistent performance during peak seasons. |
| Streaming Services | Content caching, distributed servers | Maintains low latency and high throughput for streaming media. |
| Social Media Platforms | Database sharding, message queuing | Handles massive user interactions and data volume, maintains platform responsiveness. |
| Cloud-based Infrastructure | Automated scaling, resource pooling | Dynamically adjusts resources to meet fluctuating demand, cost-effective resource utilization. |
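The database sharding listed above can be illustrated with hash-based key routing. The sketch below assumes a fixed set of four hypothetical shard hosts (`orders-db-0` to `orders-db-3`) and uses SHA-256 rather than Python's built-in `hash()`, which is randomized per process for strings and therefore unsuitable for routing.

```python
import hashlib
from typing import List

# Hypothetical shard hosts; the list order defines the shard index.
SHARDS: List[str] = ["orders-db-0", "orders-db-1", "orders-db-2", "orders-db-3"]


def shard_for(key: str, shard_count: int) -> int:
    """Map a key (e.g. a customer ID) to a shard index using a stable hash."""
    if shard_count < 1:
        raise ValueError("shard_count must be >= 1")
    # hashlib digests are stable across processes and machines.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def database_for(customer_id: str) -> str:
    return SHARDS[shard_for(customer_id, len(SHARDS))]


if __name__ == "__main__":
    for cid in ("customer-17", "customer-42", "customer-99"):
        print(cid, "->", database_for(cid))
```

With modulo placement, changing the number of shards remaps most keys, which is why resharding schemes often rely on consistent hashing or a lookup directory instead.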

Final Recap

In conclusion, this guide provides a structured approach to designing scalable cloud applications, covering crucial aspects from platform selection to performance optimization. By understanding the core principles and best practices outlined here, readers can confidently develop applications capable of handling future growth and evolving business needs. The practical examples and real-world case studies further support the understanding and application of these concepts.

Q&A

What are the key differences between IaaS, PaaS, and SaaS in terms of scalability?

IaaS (Infrastructure as a Service) gives you the most control over scaling, because you manage the virtual machines, storage, and networking yourself, but you are also responsible for configuring how they scale. PaaS (Platform as a Service) provides a platform for deploying applications, with much of the scaling handled by the platform provider. SaaS (Software as a Service) offers the least control over scalability, as the application and its infrastructure are managed entirely by the provider.

What are some common security vulnerabilities in scalable cloud applications?

Common vulnerabilities include misconfigured access controls, insecure APIs, and insufficient data encryption. Inadequate monitoring of, and response to, security threats is also a major concern.

How can I choose the right load balancer for my application?

The choice depends on factors like expected traffic volume, application needs, and budget. Consider features like connection handling, session persistence, and health checks. Consult the cloud platform’s load balancer documentation for detailed information on choosing the appropriate type for your application.