Optimizing Network Paths for Peak Cloud Application Performance

Cloud application performance hinges on efficient network paths, making optimization a critical priority for modern organizations. This article explor...

The FinOps community offers a dynamic space for professionals to optimize cloud spending and enhance financial accountability. This article provides a...

This article provides a comprehensive guide to building a serverless image processing service, exploring the core concepts, benefits, and various use...

Effectively managing cloud costs is crucial for maximizing the value of your cloud investments. This guide provides a comprehensive overview of the ch...

Cloud adoption is a transformative process for businesses, yet its complexities can be challenging to navigate. This article introduces the cloud adop...

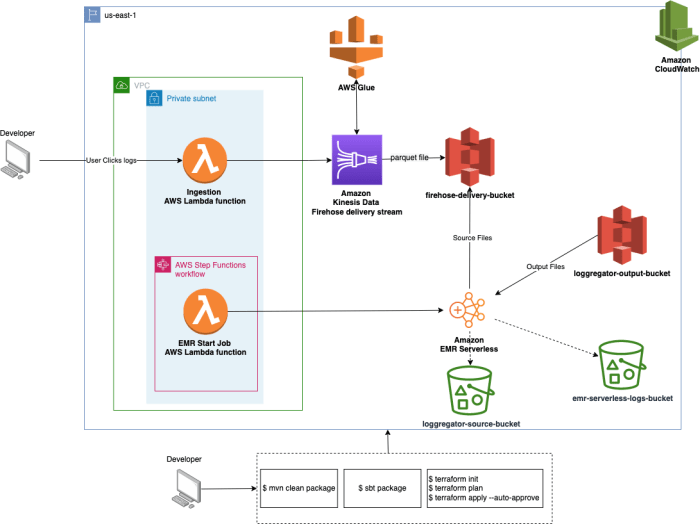

This article delves into the world of serverless frameworks, exploring their role in streamlining deployment automation for modern applications. From...

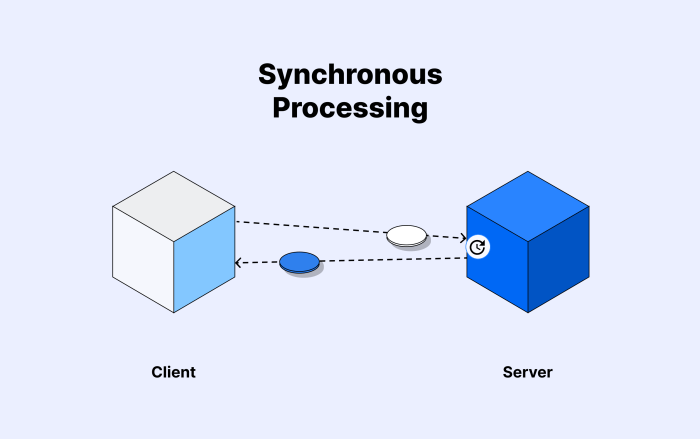

Explore the core principles and historical significance of message queues in this insightful guide to asynchronous communication. Discover how these p...

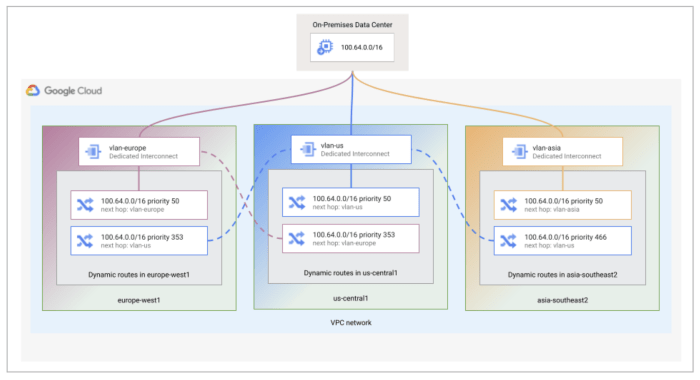

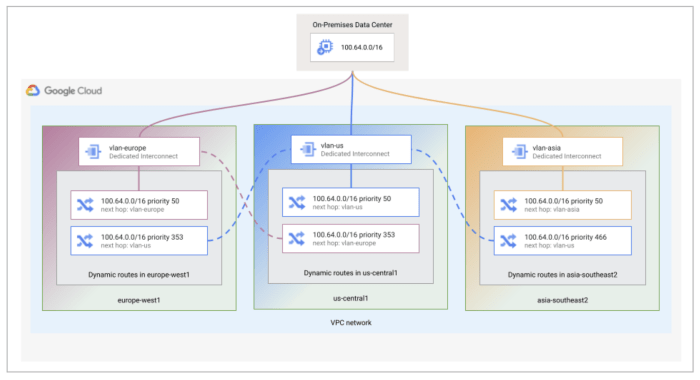

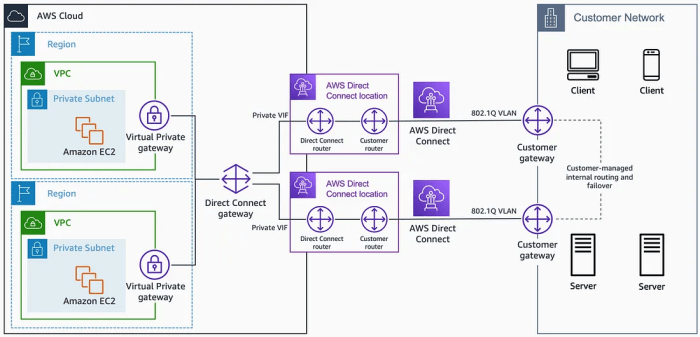

This comprehensive guide explores Direct Connect and ExpressRoute, essential services for establishing secure and reliable hybrid cloud connectivity....

This comprehensive article delves into the transformative technology of homomorphic encryption, exploring its core functionality, diverse types, and u...

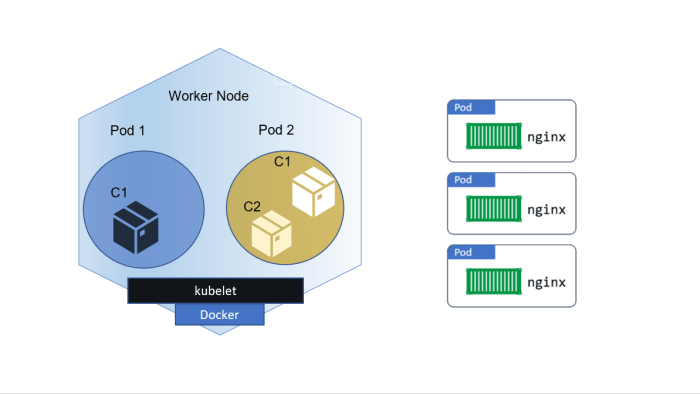

Kubernetes excels at orchestrating container lifecycles, providing the foundation for efficient application deployment, management, and scaling. This...

Kubernetes rightsizing for pods and nodes is a crucial strategy for maximizing resource efficiency and minimizing costs within your Kubernetes deploym...

This article provides a comprehensive overview of Dead-Letter Queues (DLQs), critical components for managing failed message invocations in messaging...