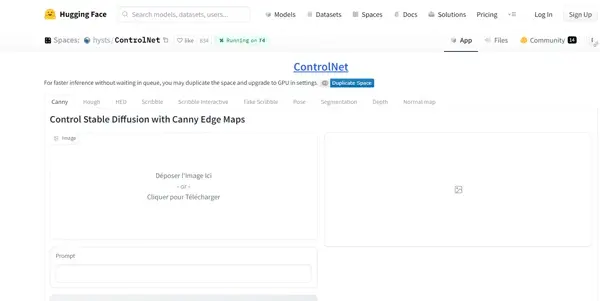

ControlNet

A neural network that adds extra conditioning inputs to control Stable Diffusion

ControlNet: Enhancing Stable Diffusion with Precise Control

ControlNet is a neural network architecture that extends Stable Diffusion, a popular text-to-image generation model. Instead of relying solely on text prompts, users can supply additional conditions, such as edge maps, depth maps, or pose skeletons, that guide the diffusion process. This makes the output more controllable and widens the practical applications of Stable Diffusion.

What ControlNet Does

ControlNet augments Stable Diffusion rather than replacing it. It acts as a conditioning network that takes a pre-processed "control" image as input alongside the text prompt. The control image guides the composition, pose, depth, edges, or other aspects of the generated image, depending on the chosen ControlNet model. In short, users can specify how the image should be laid out, not just what it should depict.
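
As a rough illustration of passing a control image alongside a text prompt, here is a minimal sketch that assumes the Hugging Face diffusers library, PyTorch, and a CUDA GPU, none of which are named in this article. The `ControlledRequest` class and `generate` function are hypothetical names introduced for this example, and the two model IDs are assumed defaults that can be swapped for any compatible checkpoints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlledRequest:
    """Inputs for one generation. Every default here is an illustrative assumption."""
    prompt: str                      # what the image should depict
    control_image_path: str          # pre-processed guide image, e.g. an edge map
    controlnet_id: str = "lllyasviel/sd-controlnet-canny"               # assumed checkpoint
    base_model_id: str = "stable-diffusion-v1-5/stable-diffusion-v1-5"  # assumed checkpoint
    conditioning_scale: float = 1.0  # how strongly the control image steers the result
    steps: int = 20                  # number of denoising steps
    seed: int = 0                    # fixed seed so repeated runs are comparable


def generate(request: ControlledRequest):
    """Run Stable Diffusion with one ControlNet attached and return a PIL image."""
    # Heavy dependencies are imported here so the module loads without them.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline
    from diffusers.utils import load_image

    controlnet = ControlNetModel.from_pretrained(
        request.controlnet_id, torch_dtype=torch.float16
    )
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        request.base_model_id, controlnet=controlnet, torch_dtype=torch.float16
    ).to("cuda")  # assumes a CUDA-capable GPU

    control_image = load_image(request.control_image_path)
    generator = torch.Generator(device="cpu").manual_seed(request.seed)
    result = pipe(
        request.prompt,
        image=control_image,  # the control image conditions the generation
        num_inference_steps=request.steps,
        controlnet_conditioning_scale=request.conditioning_scale,
        generator=generator,
    )
    return result.images[0]
```

Calling `generate(ControlledRequest(prompt="a cottage in the woods", control_image_path="edges.png"))` would download both checkpoints on first use. The file name `edges.png` is a placeholder for a control image you have already prepared.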

Main Features and Benefits

Precise Image Control: The primary benefit is the ability to achieve far more precise control over the generated output. Instead of hoping the text prompt accurately conveys your vision, you can provide a reference image to guide the model.

Variety of Control Models: ControlNet offers numerous pre-trained models, each specializing in a different type of control:

- Canny edge detection: ControlNet can use edge maps from an image to dictate the overall composition and structure.

- Depth maps: Provide control over the perspective and depth layering within the image.

- Human pose (OpenPose): Uses a keypoint skeleton detected by the OpenPose pose estimator to control the pose and positioning of human figures. Some preprocessor variants also capture hand and face keypoints for finer control.

- Segmented images: Uses semantic segmentation masks to control the placement and appearance of different objects within the scene.

- Scribble input: Lets rough hand-drawn sketches guide the image generation.
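
Each control type in the list above starts from a preprocessing step that turns a reference image into a control image. The sketch below shows the idea for edge maps in pure Python. It is a simplified stand-in, not the Canny algorithm: it thresholds the Sobel gradient magnitude and skips Canny's smoothing, non-maximum suppression, and hysteresis steps. The `edge_map` function and its default threshold are assumptions made for illustration.

```python
from typing import List

Gray = List[List[int]]  # rows of grayscale values in the range 0-255

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def edge_map(image: Gray, threshold: float = 128.0) -> Gray:
    """Return a binary edge map: 255 where the gradient is strong, 0 elsewhere."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels stay 0 because the 3x3 kernel does not fit there.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            # Mark the pixel as an edge when the gradient magnitude is large.
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges
```

The result is a white-on-black edge image, which is the general shape of input an edge-conditioned model expects. In practice, a library implementation of Canny edge detection would normally be used to produce it.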

Increased Artistic Flexibility: ControlNet enables the creation of images that would be extremely difficult or impossible to achieve using only text prompts. This expands the artistic possibilities and allows for more refined and intentional artwork.

Improved Consistency and Repeatability: Because the control image fixes the structure of the output, users can generate images with a consistent composition across runs, even when the text prompt varies.

Ease of Integration: ControlNet is relatively easy to integrate into existing Stable Diffusion workflows. Community extensions for popular Stable Diffusion interfaces, along with published guides, simplify the setup process.

Use Cases and Applications

ControlNet's capabilities span a variety of fields:

- Art Creation: Generate highly stylized images with specific poses, compositions, and object arrangements.

- Character Design: Create consistent character designs with precisely controlled poses and outfits.

- Illustration: Generate detailed illustrations based on sketches or reference images.

- Game Asset Creation: Generate concept art and assets with specific features and compositions.

- Architectural Visualization: Create visualizations from sketches or blueprints.

- Image Inpainting and Outpainting: Use control images to seamlessly fill in missing parts of an image or extend its boundaries.

Comparison to Similar Tools

While other tools aim to enhance Stable Diffusion control, ControlNet stands out due to its versatility and the range of its pre-trained models. Some tools might focus on a single type of control (e.g., only pose control), while ControlNet provides a comprehensive suite of options. The open-source nature of ControlNet also fosters community contributions and continuous improvement.

Pricing Information

ControlNet is completely free to use. It's an open-source project, and the pre-trained models are readily available for download and integration.

In conclusion, ControlNet adds a practical layer of control to AI-powered image generation. By accepting diverse control inputs, it increases the precision and creative freedom available in Stable Diffusion, which makes it a useful tool for artists, developers, and anyone seeking more control over their image generation process.