Software architecture poses a recurring challenge: how to keep the simplicity of a monolithic application while delivering the agility and scalability that modern business needs demand. Modernizing a monolith is a practical strategy for organizations that must keep legacy systems running while adopting the advantages of cloud-native architectures. This guide offers a structured approach to transforming complex, tightly coupled systems into flexible, maintainable, and scalable components.

The journey from a monolithic structure to a modern, distributed architecture requires a systematic approach. It encompasses detailed assessments, strategic planning, and careful execution across various domains, from database modernization to API design and deployment strategies. This guide provides a deep dive into the critical steps and considerations, equipping readers with the knowledge to navigate the complexities of modernizing monolithic applications successfully.

Understanding Monolithic Applications and the Need for Modernization

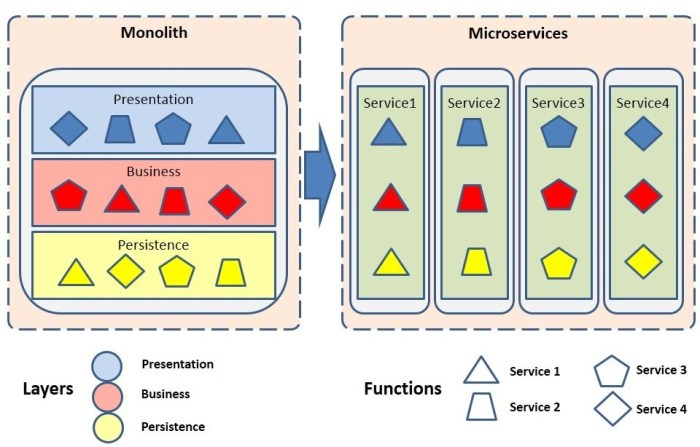

Modernizing a monolithic application is a significant undertaking, often driven by the limitations inherent in its architecture. This section explores the characteristics of monolithic systems, the challenges they present, scenarios where modernization is preferred, and the business justifications that typically fuel these initiatives. Understanding these aspects is crucial for making informed decisions about application evolution.

Core Characteristics of a Monolithic Application Architecture

A monolithic architecture is characterized by a single, unified codebase and deployment unit. This means all functionalities, from the user interface to data access, are tightly coupled and run within a single process. This design has both advantages and disadvantages, impacting scalability, maintainability, and development velocity.

- Single Codebase: The entire application resides in a single codebase. This can simplify initial development and deployment, especially for smaller projects. However, as the application grows, managing a large codebase becomes increasingly complex.

- Unified Deployment: Changes, regardless of their scope, require redeploying the entire application. This can lead to longer deployment cycles and increased risk of introducing errors.

- Tight Coupling: Components are highly interdependent. Modifying one part of the application can have unforeseen consequences in other areas, making testing and debugging more difficult.

- Single Technology Stack: The entire application typically relies on a single technology stack. This limits the flexibility to adopt newer technologies or choose the best tool for a specific task.

- Scalability Challenges: Scaling a monolithic application often requires scaling the entire application, even if only a specific part is experiencing high load. This can lead to inefficient resource utilization.

Common Challenges Faced by Organizations with Monolithic Systems

Organizations operating monolithic applications frequently encounter several operational and business-related hurdles. These challenges often stem from the architecture’s inherent limitations, affecting agility, efficiency, and the ability to adapt to changing market demands.

- Slow Development Cycles: The complexity of a large codebase and the need to redeploy the entire application for every change slows down development and release cycles. This reduces the organization’s ability to respond quickly to market opportunities.

- Difficult Maintenance: As the application grows, maintaining the codebase becomes increasingly challenging. Understanding the interdependencies between components and debugging issues can be time-consuming and resource-intensive.

- Limited Scalability: Scaling a monolithic application often requires scaling the entire application, even if only a small part is experiencing high load. This can lead to inefficient resource utilization and increased infrastructure costs.

- Technology Lock-in: Monolithic applications are typically built using a single technology stack. This limits the organization’s ability to adopt newer technologies or choose the best tool for a specific task.

- Increased Risk: Changes to the application can have unforeseen consequences, increasing the risk of introducing errors and impacting the overall stability of the system.

Scenarios Where Modernizing a Monolith is More Advantageous Than a Complete Rewrite

While a complete rewrite might seem appealing, modernizing a monolith offers a more pragmatic approach in certain situations. This approach allows organizations to leverage existing investments while mitigating risks and gradually improving the application.

- Significant Existing Investment: When a substantial investment has been made in the existing application, a complete rewrite can be costly and time-consuming. Modernization allows organizations to leverage existing functionality and reduce the risk of losing valuable intellectual property.

- Business Continuity Requirements: Rewriting an application can disrupt business operations. Modernization allows for incremental changes and deployments, minimizing downtime and ensuring business continuity.

- Limited Resources: A complete rewrite requires significant resources, including development teams, budget, and time. Modernization can be implemented incrementally, allowing organizations to allocate resources more efficiently.

- Regulatory Compliance: Certain industries have strict regulatory requirements that must be met. Modernization can help organizations comply with these regulations while avoiding the risks associated with a complete rewrite.

- Gradual Improvement: Modernization allows for a phased approach, enabling organizations to address specific pain points and improve the application incrementally. This approach provides flexibility and reduces the risk of a complete failure.

Business Drivers That Typically Initiate a Modernization Project

Modernization projects are often driven by specific business needs and challenges. These drivers reflect the desire to improve efficiency, reduce costs, and enhance the organization’s ability to compete in the market.

- Increased Agility and Time-to-Market: The need to respond quickly to market changes and release new features faster is a primary driver. Modernization enables faster development cycles and more frequent deployments.

- Cost Reduction: Modernization can help reduce infrastructure costs, improve resource utilization, and lower maintenance expenses. For example, by adopting cloud-native architectures, organizations can leverage pay-as-you-go pricing models and optimize resource allocation.

- Improved Scalability and Performance: The need to handle increasing user traffic and data volumes drives the need for improved scalability and performance. Modernization enables organizations to scale specific components of the application independently.

- Enhanced User Experience: Modernizing the user interface and improving application responsiveness can enhance the user experience and increase customer satisfaction.

- Compliance and Security: Meeting regulatory requirements and improving security posture are critical drivers. Modernization allows organizations to implement modern security practices and comply with industry standards.

Assessment and Planning: The Crucial First Steps

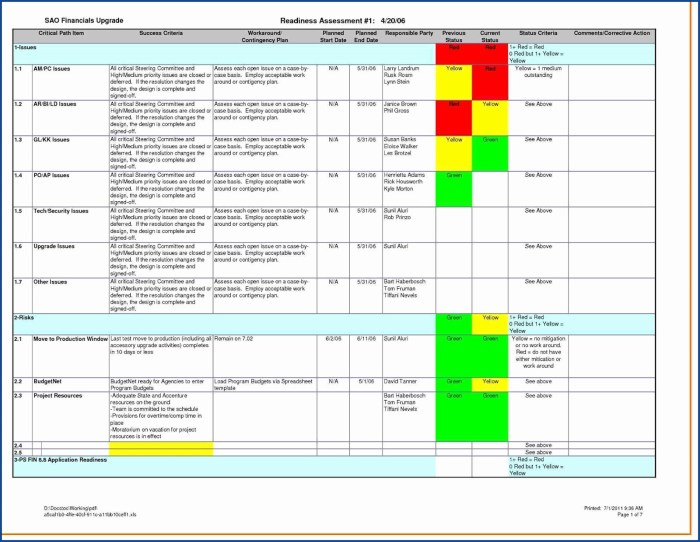

The initial phases of modernizing a monolithic application are critical for ensuring the success of the entire project. A comprehensive assessment and a well-defined plan are essential for mitigating risks, optimizing resource allocation, and maximizing the return on investment. This involves a deep dive into the current state of the application, a thorough analysis of its dependencies, and a strategic approach to prioritization.

Identifying Key Components of a Thorough Application Assessment Process

A robust application assessment process is multifaceted, encompassing various dimensions to provide a holistic understanding of the monolithic application. It serves as the foundation for informed decision-making throughout the modernization journey.

- Codebase Analysis: This involves evaluating the codebase’s size, complexity, and quality. SonarQube or other static analysis tools can identify code smells, vulnerabilities, and areas for improvement. The analysis also covers the programming languages, frameworks, and libraries in use, their versions, and any potential compatibility issues.

- Architecture Review: Examining the application’s architecture is crucial. This includes mapping out the components, their interactions, and the underlying infrastructure. The goal is to understand the overall structure and identify areas that are tightly coupled, creating bottlenecks or single points of failure.

- Performance Evaluation: Measuring the application’s performance under various load conditions is essential. This involves using performance testing tools like JMeter or LoadRunner to simulate user traffic and identify performance bottlenecks. Key metrics include response times, throughput, and resource utilization.

- Dependency Analysis: Understanding the application’s dependencies, both internal and external, is paramount. This includes identifying third-party libraries, APIs, and databases. The analysis must also consider the versions of these dependencies, their support lifecycles, and any potential security vulnerabilities.

- Business Value Assessment: Aligning modernization efforts with business goals is critical. This involves understanding the business processes supported by the application, identifying areas where modernization can improve efficiency, and quantifying the potential benefits.

- Security Audit: A thorough security audit is necessary to identify vulnerabilities and ensure the application’s security posture. This includes penetration testing, vulnerability scanning, and code reviews to address potential security risks.

- Infrastructure Evaluation: Assessing the underlying infrastructure is crucial. This includes evaluating the servers, network, and storage used by the application. The goal is to identify any infrastructure limitations or inefficiencies that could impact the modernization process.

Detailing the Process of Analyzing Dependencies Within a Monolithic Application

Dependency analysis is a critical aspect of the assessment phase, as it reveals the intricate relationships within the application and its external environment. A systematic approach ensures a clear understanding of the impact of any modernization efforts.

The process involves several steps:

- Dependency Discovery: Identifying all dependencies, including third-party libraries, frameworks, databases, and APIs. This can be achieved using dependency management tools, static code analysis, and manual code inspection.

- Dependency Mapping: Creating a visual representation of the dependencies, showing how they interact with each other and with the application’s components. Dependency diagrams and architecture diagrams are useful for this purpose.

- Dependency Versioning: Documenting the versions of all dependencies. This is crucial for identifying compatibility issues, security vulnerabilities, and potential upgrade paths.

- Dependency Risk Assessment: Evaluating the risks associated with each dependency. This includes assessing the security risks, the support lifecycle, and the potential impact of changes.

- Dependency Impact Analysis: Determining the impact of changes to a dependency on the application. This involves identifying the components that are affected by the change and assessing the potential risks.

- Dependency Remediation: Developing a plan to address any identified risks. This may involve upgrading dependencies, replacing dependencies, or isolating dependencies.

Consider a hypothetical e-commerce application. A dependency analysis might reveal that the application relies on a legacy payment gateway API with known security vulnerabilities. The analysis would then assess the impact of this dependency, including the risk of data breaches and compliance issues. The remediation plan could involve migrating to a more secure payment gateway API or isolating the legacy API behind a secure proxy.
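The impact analysis step can be sketched in a few lines of Python. This is a minimal illustration, not a replacement for a real dependency scanner: the component names and the `DEPENDS_ON` map are hypothetical and loosely follow the e-commerce example above. The function walks the dependency graph in reverse to find every component that would be affected by a change to one dependency.

```python
from collections import deque

# Hypothetical dependency map: each key depends on the items in its list.
DEPENDS_ON = {
    "checkout": ["payment-client", "cart"],
    "cart": ["catalog"],
    "payment-client": ["legacy-payment-api"],
    "reporting": ["catalog"],
    "catalog": [],
    "legacy-payment-api": [],
}


def impacted_by(target, depends_on):
    """Return every component that directly or transitively depends on target."""
    # Invert the edges so we can walk from a dependency to its dependents.
    dependents = {name: [] for name in depends_on}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(component)

    # Breadth-first walk over the inverted graph.
    seen = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return sorted(seen)


if __name__ == "__main__":
    # Components to retest if the legacy payment API is replaced or proxied.
    print(impacted_by("legacy-payment-api", DEPENDS_ON))
```

In practice the map would come from a build tool or static analysis export rather than being written by hand.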

Designing a Framework for Prioritizing Modernization Efforts Based on Business Value

Prioritization is a key element in modernizing monolithic applications, ensuring that resources are allocated effectively and that the most impactful changes are implemented first. A framework based on business value helps guide these decisions.

The framework incorporates these key elements:

- Business Value Definition: Defining what constitutes business value in the context of the application. This could include increased revenue, reduced costs, improved customer satisfaction, or enhanced compliance.

- Impact Assessment: Assessing the potential impact of each modernization effort on the defined business value metrics. This involves quantifying the benefits, such as the expected increase in revenue or the reduction in operational costs.

- Effort Estimation: Estimating the effort required to implement each modernization effort. This includes the time, resources, and costs involved.

- Risk Assessment: Assessing the risks associated with each modernization effort. This includes the technical risks, the business risks, and the operational risks.

- Prioritization Matrix: Creating a prioritization matrix that combines the business value, effort, and risk assessments. This matrix can be used to rank the modernization efforts and determine their priority.

- Iterative Approach: Implementing the modernization efforts in an iterative manner, starting with the highest-priority efforts and continuously monitoring the results.

As an example of applying this framework, suppose a company’s monolithic application has a slow checkout process that hurts customer satisfaction and sales. Modernizing the checkout process to improve speed and reliability would be prioritized because of its high business value (increased sales and customer satisfaction) combined with manageable effort and risk. Refactoring a rarely used internal reporting module would rank lower.
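One way to turn the prioritization matrix into something sortable is a simple score per initiative. The sketch below is only one possible formula, chosen for illustration: the 1 to 5 scales, the `Initiative` class, and the ratio of value to effort plus risk are all assumptions, and a real project would calibrate them with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Initiative:
    name: str
    business_value: int  # 1 (low) to 5 (high)
    effort: int          # 1 (small) to 5 (large)
    risk: int            # 1 (low) to 5 (high)

    @property
    def score(self) -> float:
        # Higher value raises the score; higher effort and risk lower it.
        return self.business_value / (self.effort + self.risk)


def prioritize(initiatives):
    """Return initiatives ordered from highest to lowest score."""
    return sorted(initiatives, key=lambda item: item.score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Initiative("Refactor internal reporting module", 1, 3, 2),
        Initiative("Modernize checkout process", 5, 3, 2),
        Initiative("Upgrade logging library", 2, 2, 1),
    ]
    for item in prioritize(backlog):
        print(f"{item.score:.2f}  {item.name}")
```

With these illustrative ratings, the checkout work ranks first and the reporting refactor last, which matches the reasoning in the example above.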

Organizing a Checklist for Documenting Current State, Risks, and Potential Solutions

A well-structured checklist is essential for capturing all the relevant information gathered during the assessment and planning phases. It serves as a central repository for documentation, ensuring consistency and facilitating communication among stakeholders.

The checklist should include the following sections:

- Application Overview: A brief description of the application, its purpose, and its key features.

- Architecture: A high-level overview of the application’s architecture, including its components and their interactions.

- Technology Stack: A detailed list of the technologies used by the application, including programming languages, frameworks, databases, and libraries.

- Dependencies: A comprehensive list of the application’s dependencies, including their versions and support lifecycles.

- Performance Metrics: Key performance metrics, such as response times, throughput, and resource utilization.

- Security Assessment: A summary of the security vulnerabilities identified during the security audit.

- Business Value Assessment: An assessment of the application’s business value and the potential benefits of modernization.

- Risks: A list of the risks associated with the application and the modernization process.

- Potential Solutions: A list of potential solutions to address the identified risks and improve the application’s performance and maintainability.

- Prioritization: The prioritization of modernization efforts based on business value, effort, and risk.

For example, under the “Risks” section, the checklist might include entries such as “Security vulnerabilities in a third-party library (CVE-2023-XXXX)” or “Performance bottlenecks in the database layer.” The “Potential Solutions” section would then propose specific actions, such as “Upgrade the vulnerable library to the latest version” or “Optimize database queries and indexes.” The checklist serves as a living document, updated throughout the modernization journey.

Choosing the Right Modernization Strategy

Selecting the appropriate modernization strategy is crucial for the successful transformation of a monolithic application. This decision significantly impacts the project’s cost, timeline, and the overall business value derived from the modernized application. A careful evaluation of various factors, including the application’s current state, business requirements, and available resources, is essential to making an informed choice.

Comparing and Contrasting Modernization Strategies

Several strategies can be employed to modernize a monolithic application, each with its own set of advantages and disadvantages. Understanding these differences is vital for selecting the most suitable approach.

- Rehosting (Lift and Shift): This involves migrating the application to a new infrastructure, typically a cloud environment, with minimal code changes. It focuses on moving the application “as is” to a new platform.

  - Advantages: Relatively quick and inexpensive, minimizes disruption to the application’s functionality, and provides immediate infrastructure-level benefits such as easier capacity scaling and cost optimization.

  - Disadvantages: Doesn’t address the underlying architectural limitations of the monolith; it’s primarily an infrastructure change. The application still suffers from the same architectural scaling and maintainability challenges.

  - Use Case: Ideal for applications that are stable and require immediate infrastructure benefits without significant code changes. Consider applications with predictable workloads.

- Re-platforming (Lift, Tinker, and Shift): This strategy involves migrating the application to a new platform and making some code changes to take advantage of platform-specific features.

  - Advantages: Improves performance and scalability by leveraging the features of the new platform (e.g., database migration, containerization).

  - Disadvantages: Requires more effort than rehosting, as code changes are necessary. The application still remains largely monolithic.

  - Use Case: Suitable for applications that can benefit from platform-specific features like managed databases or container orchestration (e.g., migrating to a managed Kubernetes service).

- Refactoring: This involves restructuring the existing code to improve its internal structure and design without changing its external behavior.

  - Advantages: Improves code quality, maintainability, and readability, making future changes easier.

  - Disadvantages: Time-consuming and may not provide immediate benefits to end-users. Requires a deep understanding of the application’s codebase.

  - Use Case: Best suited for applications with complex code that needs to be improved to support future changes or enhancements. It’s a foundational step for more complex modernization strategies.

- Re-architecting: This strategy involves significantly changing the application’s architecture, often breaking it down into smaller, independent services.

  - Advantages: Enables greater scalability, flexibility, and faster development cycles. Allows for independent deployments and technology choices for individual services.

  - Disadvantages: One of the most complex and time-consuming strategies. Requires a significant investment in resources and expertise.

  - Use Case: Suitable for applications that need to evolve rapidly, scale significantly, and adopt new technologies. This often involves transitioning to a microservices architecture.

- Replacing: This involves building a new application from scratch while retiring the existing monolith.

  - Advantages: Allows for the adoption of modern technologies and architectures, addressing all the limitations of the legacy system.

  - Disadvantages: The most disruptive and expensive strategy. Requires a significant investment in time, resources, and expertise. There is also a risk of gaps in feature parity with the legacy system.

  - Use Case: Appropriate for applications that are beyond repair or when the cost of modernizing the existing application exceeds the cost of building a new one. Consider cases where the existing codebase is unmaintainable or built on obsolete technologies.

Decision Matrix for Selecting the Appropriate Strategy

A decision matrix can help in systematically evaluating the various modernization strategies based on specific criteria. This provides a structured approach to making the best choice for a given scenario.

| Criteria | Rehosting | Re-platforming | Refactoring | Re-architecting | Replacing |
|---|---|---|---|---|---|
| Complexity | Low | Medium | Medium | High | High |
| Time to Market | Fastest | Fast | Medium | Slow | Slowest |
| Cost | Lowest | Medium | Medium | High | Highest |
| Risk | Low | Medium | Medium | High | Highest |
| Business Value | Low | Medium | Medium | High | Highest (potential) |
| Impact on Application Functionality | Minimal | Minor | None | Significant | Complete Rewrite |

The decision matrix helps to visualize the trade-offs associated with each strategy, enabling stakeholders to make informed decisions based on their priorities. The specific weighting of these criteria (e.g., time to market vs. cost) depends on the unique requirements of each project.
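The weighting idea can be made concrete with a small weighted-sum calculation. The sketch below is illustrative only: the numeric ratings are an assumed translation of the qualitative labels in the matrix onto a 1 to 5 favorability scale (5 is most favorable for that criterion, so "Lowest" cost scores 5 and "Highest" business value scores 5), and the weights are hypothetical project priorities.

```python
# Assumed favorability ratings derived from the matrix (5 = most favorable).
RATINGS = {
    "Rehosting":       {"complexity": 4, "time_to_market": 5, "cost": 5, "risk": 4, "business_value": 2},
    "Re-platforming":  {"complexity": 3, "time_to_market": 4, "cost": 3, "risk": 3, "business_value": 3},
    "Refactoring":     {"complexity": 3, "time_to_market": 3, "cost": 3, "risk": 3, "business_value": 3},
    "Re-architecting": {"complexity": 2, "time_to_market": 2, "cost": 2, "risk": 2, "business_value": 4},
    "Replacing":       {"complexity": 2, "time_to_market": 1, "cost": 1, "risk": 1, "business_value": 5},
}


def rank_strategies(weights, ratings=RATINGS):
    """Return (strategy, weighted score) pairs, best first.

    Criteria missing from `weights` count as zero.
    """
    scored = []
    for strategy, criteria in ratings.items():
        total = sum(weights.get(name, 0) * value for name, value in criteria.items())
        scored.append((strategy, total))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical project that values speed and low cost above all.
    speed_first = {"time_to_market": 0.5, "cost": 0.3, "risk": 0.2}
    for strategy, total in rank_strategies(speed_first):
        print(f"{total:.1f}  {strategy}")
```

Changing the weights changes the ranking: a project that weights only business value would see Replacing and Re-architecting rise to the top, which is why the weights should be agreed before the scores are computed.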

Evaluating the Technical Feasibility of Each Modernization Option

Assessing the technical feasibility of each modernization option involves evaluating the application’s current state, the required skills, and the availability of the necessary tools and technologies.

- Application Assessment: Conduct a thorough analysis of the existing application, including its codebase, architecture, dependencies, and performance characteristics.

- Skills Assessment: Evaluate the skills and expertise available within the organization. Identify any skill gaps and plan for training or external support.

- Technology Evaluation: Assess the suitability of various technologies for each modernization option. Consider factors such as compatibility, scalability, and maintainability.

- Dependency Analysis: Identify all external dependencies and assess their impact on the modernization effort. Ensure that all dependencies are compatible with the chosen strategy.

- Performance Testing: Conduct performance testing to understand the application’s current performance characteristics and identify areas for improvement.

- Security Review: Review the application’s security posture and identify any vulnerabilities. Ensure that the chosen modernization strategy addresses any security concerns.

For example, if an application relies heavily on an outdated framework, refactoring or re-architecting might be necessary to adopt a more modern framework. If the team lacks experience with containerization technologies, re-platforming or re-architecting might require significant training or the hiring of specialized consultants. Consider a financial institution modernizing its core banking system: the feasibility of re-architecting to a microservices architecture would depend on the team’s experience with distributed systems, message queues, and API design.

Considerations for Selecting a Suitable Target Architecture

The choice of target architecture significantly impacts the success of the modernization effort. Two common target architectures are microservices and serverless.

- Microservices: This architecture involves breaking down the monolithic application into a collection of small, independent services that communicate with each other through APIs.

  - Advantages: Increased scalability, faster development cycles, independent deployments, and the ability to use different technologies for different services.

  - Disadvantages: Increased complexity in terms of distributed systems, inter-service communication, and monitoring.

  - Use Case: Suitable for large, complex applications that need to evolve rapidly and scale significantly. Consider an e-commerce platform where different services (e.g., product catalog, shopping cart, payment processing) can be scaled independently.

- Serverless: This architecture involves running code without managing servers. Developers deploy code as functions that are triggered by events.

  - Advantages: Reduced operational overhead, automatic scaling, and cost optimization.

  - Disadvantages: Vendor lock-in, limited control over infrastructure, and potential cold start issues.

  - Use Case: Suitable for applications with event-driven workloads, such as processing image uploads or handling API requests. A content delivery network (CDN) can use serverless functions to process and transform images on the fly.

The selection of a target architecture should align with the business goals and the application’s specific requirements. Factors to consider include scalability needs, development team expertise, and the desired level of operational control. For instance, a company with a small development team and limited DevOps experience might initially favor serverless solutions due to their ease of management. In contrast, a company with a larger team and more sophisticated needs might choose microservices to gain greater control over the application’s architecture and scalability.

Breaking Down the Monolith

Decomposing a monolithic application into a collection of smaller, independent services is a critical step in modernization. This process, often referred to as service decomposition, allows for increased agility, scalability, and resilience. It involves identifying logical service boundaries within the monolith, extracting these services, and managing data consistency across the newly created service landscape. The following sections detail the techniques and considerations involved in successfully breaking down a monolithic application.

Principles of Bounded Contexts and Microservice Design

The concept of a bounded context, introduced by Eric Evans in his book “Domain-Driven Design,” is fundamental to microservice architecture. A bounded context is an explicit boundary within which a particular domain model applies consistently, including its data model, business logic, and shared (ubiquitous) language. In a microservice design, each service typically maps to one bounded context, which defines its area of responsibility. This separation promotes modularity, enabling teams to work independently on individual services without being tightly coupled to other parts of the system.

Adhering to bounded contexts reduces the risk of unintended side effects when changes are made to a service, leading to improved maintainability and faster development cycles.
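A small sketch can show what this separation looks like in code. The example below assumes two hypothetical contexts from an e-commerce domain, a catalog and order management, that both deal with "products" but model them differently. The class and function names are invented for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CatalogProduct:
    """A product as the catalog context sees it: rich descriptive data."""
    sku: str
    title: str
    description: str
    list_price: Decimal


@dataclass(frozen=True)
class OrderLine:
    """A product as the order context sees it: only what an order needs."""
    sku: str
    unit_price: Decimal
    quantity: int

    @property
    def total(self) -> Decimal:
        return self.unit_price * self.quantity


def to_order_line(product: CatalogProduct, quantity: int) -> OrderLine:
    """Translate at the context boundary, copying only the fields orders use."""
    return OrderLine(sku=product.sku, unit_price=product.list_price, quantity=quantity)


if __name__ == "__main__":
    mug = CatalogProduct("SKU-1", "Mug", "Ceramic mug", Decimal("4.50"))
    print(to_order_line(mug, 3).total)
```

Because the order model keeps its own copy of the price, the catalog team can later change descriptions or list prices without altering orders that have already been placed.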

Identifying Logical Service Boundaries within a Monolith

Identifying logical service boundaries requires a thorough understanding of the application’s domain and its functionality. The goal is to group related functionalities into cohesive services that can evolve independently. This process involves several steps, starting with a detailed analysis of the existing codebase and its functionalities.

- Domain Analysis: Begin by mapping out the business domain, identifying key business processes, and the entities involved. This can be achieved through domain modeling techniques such as event storming or using domain-driven design principles. This analysis helps reveal natural groupings of functionality. For example, in an e-commerce application, distinct bounded contexts might include “Product Catalog,” “Order Management,” and “Customer Accounts.”

- Code Analysis: Examine the code to understand how different parts of the application interact. Analyze dependencies, identify shared libraries, and track data flows. Static analysis tools and dependency graphs can be invaluable in this process. Look for areas where functionality is highly coupled, since these will need to be untangled before a service can be extracted.

- Functional Decomposition: Break down the application’s functionality into smaller, more manageable units. Group related functionalities based on their business purpose and their data requirements. For instance, functionalities related to product searching, displaying product details, and managing product inventory would logically belong to the “Product Catalog” service.

- Data Analysis: Examine the data model to identify data that is tightly coupled and data that can be logically separated. Identify the data entities associated with each functional unit. For example, data related to products, such as product descriptions, prices, and images, would be part of the “Product Catalog” service.

- Identify Communication Patterns: Determine how the services will communicate with each other. This can involve synchronous communication (e.g., REST APIs) or asynchronous communication (e.g., message queues). The choice of communication pattern impacts service design and data consistency strategies.

Step-by-Step Guide to Extracting Services from a Monolithic Application

Extracting services from a monolith is a gradual process that minimizes disruption and allows for iterative improvements. A phased approach is recommended, focusing on extracting services one at a time, testing them thoroughly, and then integrating them into the overall system.

- Identify Candidate Services: Based on the domain analysis, code analysis, and functional decomposition, identify the first service to extract. Choose a service with well-defined boundaries and minimal dependencies on other parts of the monolith. A good starting point is a service with a clear business function that can be easily isolated.

- Create a New Service: Set up a new service environment, including a separate codebase, build process, and deployment infrastructure. The new service can be built using a different technology stack if desired.

- Extract Functionality: Copy the code and data related to the chosen service from the monolith into the new service. This may involve refactoring the code to remove dependencies on the monolith.

- Implement Communication: Establish communication between the new service and the monolith. This could involve creating REST APIs or using message queues. Initially, the monolith might call the new service to perform its functions.

- Test the New Service: Thoroughly test the new service to ensure it functions correctly and integrates seamlessly with the monolith. This includes unit tests, integration tests, and end-to-end tests.

- Gradual Migration: Gradually migrate traffic from the monolith to the new service. This can be done using techniques like feature toggles or canary releases. Monitor the performance and stability of the new service during the migration.

- Remove Functionality from Monolith: Once the new service is stable and handles the traffic, remove the corresponding functionality from the monolith.

- Repeat: Repeat the process for other candidate services until the monolith is sufficiently decomposed.
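The gradual migration step is often implemented as a percentage-based rollout. The following sketch shows one way to do this, assuming requests carry a stable user ID; the `bucket` and `route` functions and the `"monolith"` and `"new-service"` labels are hypothetical names, and a production setup would usually rely on a feature-flag service or a routing layer instead.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(user_id: str, rollout_percent: int) -> str:
    """Return which implementation should serve this user.

    Users whose bucket falls below rollout_percent go to the new service;
    everyone else stays on the monolith.
    """
    if bucket(user_id) < rollout_percent:
        return "new-service"
    return "monolith"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    for percent in (0, 10, 50, 100):
        moved = sum(route(u, percent) == "new-service" for u in users)
        print(f"{percent:3d}% rollout -> {moved} of {len(users)} users on the new service")
```

Hashing the user ID instead of choosing randomly per request keeps each user on the same implementation between requests, and raising the percentage only ever moves additional users to the new service.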

Techniques for Managing Data Consistency During Decomposition

Maintaining data consistency is crucial when decomposing a monolith, as data might be distributed across multiple services. Several techniques can be employed to ensure data integrity, including the use of transactions, eventual consistency, and the Saga pattern.

- Transactions: When a single service is responsible for managing all the data related to a transaction, traditional ACID transactions can be used. For example, if an order creation service is responsible for creating an order and updating the customer’s information, it can use a database transaction to ensure that both operations succeed or fail together.

- Eventual Consistency: For operations that span multiple services, eventual consistency is often the preferred approach. This involves using asynchronous communication and message queues to propagate data changes across services. The system guarantees that data will eventually be consistent, even if there is a delay in propagation.

- Saga Pattern: The Saga pattern is a sequence of local transactions. Each local transaction updates data within a single service. If one transaction fails, the Saga executes compensating transactions to undo the changes made by the previous transactions. Sagas can be implemented using choreography (services react to events) or orchestration (a central service coordinates the transactions).

- Data Replication: Data replication can be used to create local copies of data in each service. This reduces latency and improves availability. However, it requires careful consideration of data synchronization and consistency.

- Idempotent Operations: Implement idempotent operations to handle potential failures during communication. An idempotent operation can be executed multiple times without changing the result beyond the first execution. This is critical in distributed systems, where network failures are common.
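The orchestration variant of the Saga pattern can be sketched as follows. This is a simplified, in-process illustration: `run_saga`, the `Step` tuple, and the order, stock, and payment functions are hypothetical stand-ins for calls to separate services, and a real orchestrator would also persist its progress so it can resume after a crash.

```python
from typing import Callable, List, Tuple

# (name, action, compensating action); all names here are illustrative.
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Run each step in order; on failure, compensate completed steps in reverse."""
    log: List[str] = []
    completed: List[Step] = []
    for step in steps:
        name, action, _compensate = step
        try:
            action()
            log.append(f"done:{name}")
            completed.append(step)
        except Exception:
            log.append(f"failed:{name}")
            for done_name, _action, compensate in reversed(completed):
                compensate()
                log.append(f"compensated:{done_name}")
            break
    return log


# Demo with in-memory state standing in for three services.
state = {"order_created": False, "stock_reserved": False}


def create_order() -> None:
    state["order_created"] = True


def cancel_order() -> None:
    state["order_created"] = False


def reserve_stock() -> None:
    state["stock_reserved"] = True


def release_stock() -> None:
    state["stock_reserved"] = False


def charge_card() -> None:
    raise RuntimeError("payment declined")


def refund_card() -> None:
    pass  # never runs here because the charge never succeeded


saga_log = run_saga([
    ("order", create_order, cancel_order),
    ("stock", reserve_stock, release_stock),
    ("payment", charge_card, refund_card),
])
```

In this run the payment step fails, so the stock reservation and the order are undone in reverse order and `state` returns to its initial values.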

Database Modernization Strategies

Modernizing the database component of a monolithic application is a critical aspect of the overall modernization effort. The database often represents the core of the application’s data persistence and integrity, and its modernization directly affects the application’s performance, scalability, and maintainability. A well-executed database modernization strategy can unlock substantial benefits, while a poorly planned one can cause serious disruption.

Identifying Challenges of Modernizing a Monolithic Database

The modernization of a monolithic database presents a unique set of challenges, often stemming from the database’s size, complexity, and tight coupling with the application’s logic. These challenges require careful consideration and planning to ensure a successful outcome.

- Data Volume and Complexity: Monolithic databases often accumulate vast amounts of data over time, along with complex schemas and relationships. This complexity can make it difficult to understand the existing data model, identify dependencies, and plan for schema changes. The larger the dataset, the longer migration processes will take, potentially impacting business operations.

- Application Coupling: The database is often tightly coupled with the application code. This means that changes to the database schema can require significant modifications to the application code, and vice versa. Identifying and managing these dependencies can be a complex and time-consuming task.

- Downtime Requirements: Migrating a large database can require downtime, during which the application is unavailable. Minimizing downtime is critical, especially for applications that serve critical business functions. Strategies like zero-downtime migrations and blue/green deployments are often employed to mitigate this risk.

- Performance Bottlenecks: Monolithic databases can become performance bottlenecks, particularly as the application’s user base and data volume grow. Identifying and resolving these bottlenecks can be challenging, often requiring careful analysis of query performance and database configuration.

- Vendor Lock-in: Monolithic applications often rely on a single database vendor. Modernizing the database may involve migrating to a different database system, which can replace one form of lock-in with another if not carefully planned. This requires a careful assessment of the features, costs, and support offered by different vendors.

- Security and Compliance: Modernization efforts must address security vulnerabilities and ensure compliance with industry regulations. Data security and privacy are paramount, requiring thorough consideration of data encryption, access controls, and audit trails.

Providing Examples of Database Refactoring Patterns

Database refactoring involves making incremental changes to the database schema and code to improve its design, performance, or maintainability without altering its external behavior. Several refactoring patterns can be applied to monolithic databases to prepare them for modernization.

- Extract Table: This pattern involves creating a new table and moving a subset of data from an existing table into the new table. This is useful for breaking down large tables into smaller, more manageable ones, improving query performance, and isolating specific data sets. For example, if a `Customers` table contains columns related to both customer contact information and order history, you could extract the order history into a separate `CustomerOrders` table.

- Introduce Lookup Table: This pattern involves creating a new table to store lookup values, such as status codes or category names. This improves data consistency and reduces redundancy. For example, instead of storing the status as text, a lookup table for order statuses (e.g., “Pending,” “Shipped,” “Delivered”) can be used, improving query performance and data integrity.

- Split Table: This pattern involves dividing a table into two or more tables, often based on usage patterns or data characteristics. This can improve performance by reducing the amount of data that needs to be read for a given query. A common example involves splitting a wide table with many columns into a core table and a related table containing less frequently accessed data.

- Introduce Surrogate Key: This pattern involves adding a new, artificial key (e.g., an integer or GUID) to a table to serve as the primary key. Surrogate keys can simplify data modeling, improve query performance, and provide a stable identifier for data, regardless of changes to the underlying business keys.

- Denormalize Data: This pattern involves adding redundant data to a table to improve query performance. While denormalization can increase data redundancy and potential for inconsistencies, it can significantly speed up read operations. This pattern is typically used strategically, such as duplicating a customer’s name in the `Orders` table to avoid a join when displaying order details.

- Partitioning: This involves dividing a large table into smaller, more manageable pieces (partitions) based on a specific criterion, such as date or geographic region. Partitioning improves query performance by enabling the database to access only the relevant partitions.
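The Extract Table pattern can be illustrated with a small sketch using Python’s built-in `sqlite3` module and an in-memory database. The column names (`last_order_total`, `last_order_date`, `order_total`, `order_date`) and the sample rows are assumptions made for the example; only the `Customers` and `CustomerOrders` table names come from the description above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        last_order_total REAL,   -- order data mixed into the customer row
        last_order_date TEXT
    );
    INSERT INTO Customers VALUES (1, 'Ada', 42.50, '2024-01-15');
    INSERT INTO Customers VALUES (2, 'Grace', NULL, NULL);
""")

# Create the new table and copy the order data in a single transaction.
with conn:
    conn.execute("""
        CREATE TABLE CustomerOrders (
            customer_id INTEGER NOT NULL REFERENCES Customers(id),
            order_total REAL NOT NULL,
            order_date TEXT NOT NULL
        )
    """)
    conn.execute("""
        INSERT INTO CustomerOrders (customer_id, order_total, order_date)
        SELECT id, last_order_total, last_order_date
        FROM Customers
        WHERE last_order_total IS NOT NULL
    """)

extracted_rows = conn.execute(
    "SELECT customer_id, order_total, order_date FROM CustomerOrders"
).fetchall()
```

The sketch deliberately leaves the old columns in `Customers`. Dropping them is usually a later step, taken once no application code reads them any longer.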

Sharing Methods for Migrating Data to a New Database System

Migrating data from an existing database to a new system is a critical step in database modernization. Several methods can be employed, each with its own trade-offs in terms of downtime, complexity, and data consistency.

- Full Export and Import: This method involves exporting all data from the source database and importing it into the target database. This is the simplest approach but can result in significant downtime, especially for large datasets.

- Change Data Capture (CDC): CDC techniques track changes made to the source database and replicate those changes to the target database in near real-time. This approach minimizes downtime and allows for a gradual migration. Common CDC tools include Debezium, AWS DMS, and Oracle GoldenGate.

- Dual Write: This method involves writing data to both the source and target databases simultaneously. This allows the application to continue operating while the data is being migrated. Once the data is fully migrated, the application can be switched to using the target database exclusively. This approach requires careful planning to ensure data consistency between the two databases.

- Bulk Copy: Bulk copy tools are optimized for transferring large amounts of data quickly. These tools often provide parallel processing capabilities to speed up the migration process.

- Federated Queries: Some database systems allow querying data across multiple databases. Federated queries can be used to access data in both the source and target databases during the migration process, allowing for a gradual transition.
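The dual-write method, together with the consistency check it depends on, might look like the following sketch. Two in-memory SQLite databases stand in for the source and target systems, and `dual_write_order` and `find_mismatches` are hypothetical helper names. The sketch treats the source as the system of record, which is one common choice rather than the only one.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL NOT NULL)")
    return conn


source_db = make_db()  # stands in for the legacy database
target_db = make_db()  # stands in for the new database


def dual_write_order(order_id: int, total: float) -> None:
    """Write to the source first (system of record), then mirror to the target."""
    with source_db:
        source_db.execute("INSERT INTO orders VALUES (?, ?)", (order_id, total))
    try:
        with target_db:
            target_db.execute(
                "INSERT OR REPLACE INTO orders VALUES (?, ?)", (order_id, total)
            )
    except sqlite3.Error:
        # A real system would record the failure for later reconciliation.
        pass


def find_mismatches() -> list:
    """Return the order IDs whose rows differ between source and target."""
    src = dict(source_db.execute("SELECT id, total FROM orders"))
    dst = dict(target_db.execute("SELECT id, total FROM orders"))
    return sorted(k for k in src.keys() | dst.keys() if src.get(k) != dst.get(k))
```

Running a check like `find_mismatches()` periodically during the migration gives a concrete signal for when the target can safely take over.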

Discussing Strategies for Dealing with Database Schema Changes During Modernization

Database schema changes are often necessary during modernization to align with the new database system or to improve the database’s design. Managing these changes requires careful planning and execution to avoid data loss or application downtime.

- Schema Evolution Tools: Use tools that manage database schema changes, such as Flyway, Liquibase, or Alembic. These tools allow for versioning schema changes and applying them in a controlled manner.

- Backward Compatibility: Design schema changes to be backward compatible with the existing application code. This allows the application to continue operating while the schema changes are being applied. This might involve adding new columns with default values, or keeping deprecated columns in place until no application code depends on them.

- Blue/Green Deployments: Implement a blue/green deployment strategy. This involves maintaining two identical environments (blue and green). Schema changes are applied to the green environment, and then traffic is gradually switched to the green environment after thorough testing. This minimizes downtime and allows for easy rollback if issues arise.

- Canary Releases: Introduce schema changes in a small subset of the production environment (the canary) to test their impact before rolling them out to the entire system. Monitor performance and data integrity in the canary environment before proceeding with a full deployment.

- Data Validation: Implement data validation checks to ensure that the data in the database meets the requirements of the new schema. This can help identify and correct data inconsistencies before they cause problems.

- Automated Testing: Develop comprehensive automated tests to verify that the schema changes do not break existing functionality or introduce data integrity issues. This includes unit tests, integration tests, and end-to-end tests.

- Rollback Plans: Prepare a detailed rollback plan in case the schema changes cause problems. This plan should include steps to revert the schema changes and restore the database to its previous state.
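A backward-compatible schema change can be sketched with `sqlite3` as follows. The `users` table, the `status` column, and the `migrate_add_status` function are illustrative assumptions. Dedicated tools such as Flyway, Liquibase, or Alembic track applied versions for you, so the manual column check here only mimics that behavior.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)")
conn.execute("INSERT INTO users (username) VALUES ('ada')")


def migrate_add_status(db: sqlite3.Connection) -> None:
    """Add a column with a default so existing INSERT statements keep working."""
    columns = [row[1] for row in db.execute("PRAGMA table_info(users)")]
    if "status" not in columns:  # makes the migration safe to re-run
        db.execute(
            "ALTER TABLE users ADD COLUMN status TEXT NOT NULL DEFAULT 'active'"
        )


migrate_add_status(conn)
migrate_add_status(conn)  # second run is a no-op

# Old application code, unaware of the new column, still works unchanged.
conn.execute("INSERT INTO users (username) VALUES ('grace')")
rows = conn.execute("SELECT username, status FROM users ORDER BY id").fetchall()
```

Both the pre-existing row and the row written by the old-style insert pick up the default value, so old and new application versions can run side by side during the rollout.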

API Design and Integration

Modernizing a monolithic application frequently necessitates the creation and integration of Application Programming Interfaces (APIs) to facilitate communication between microservices and external clients. Effective API design and integration are crucial for ensuring the modularity, scalability, and maintainability of the modernized system. This section outlines best practices for designing and managing APIs in a microservices architecture.

Designing APIs for Microservices

Designing APIs for microservices involves careful consideration of several factors to ensure they are efficient, resilient, and aligned with the principles of microservices. The goal is to create APIs that expose the functionality of each service in a clear, concise, and self-describing manner. To achieve this, consider these key principles:

- API Granularity: APIs should be designed to expose a specific, well-defined set of functionalities. Each API should represent a single responsibility, minimizing the scope and complexity of the service. This principle, known as the Single Responsibility Principle, ensures that changes to one service are less likely to impact others. For example, a “user service” might have separate APIs for creating users, retrieving user profiles, and updating user preferences.

- Resource-Oriented Design: APIs should be resource-oriented, meaning that they expose and manipulate resources. Resources are nouns representing data or entities, such as “users,” “products,” or “orders.” Use standard HTTP methods (GET, POST, PUT, DELETE) to perform actions on these resources. This approach promotes consistency and predictability in API usage.

- Idempotency: API operations should be idempotent where possible. An idempotent operation can be executed multiple times without changing the result beyond the initial execution. This is particularly important for operations that modify data. For instance, a “PUT” request to update a resource should be idempotent; multiple identical requests should result in the same outcome.

- Versioning: APIs should be versioned to allow for changes and updates without breaking existing clients. Versioning can be implemented through URL paths (e.g., `/v1/users`), headers (e.g., `Accept: application/vnd.mycompany.users.v1+json`), or query parameters (e.g., `/users?version=1`). Versioning enables developers to introduce new features and bug fixes without forcing clients to immediately update their integrations.

- Data Formats: Choose appropriate data formats for API requests and responses. JSON (JavaScript Object Notation) is a widely adopted format due to its simplicity and human readability. Consider using Protocol Buffers (Protobuf) for high-performance APIs, especially when dealing with large data payloads. Protobuf offers efficient serialization and deserialization, reducing bandwidth usage and improving performance.

- API Contracts: Define API contracts using tools like OpenAPI (formerly Swagger) or RAML (RESTful API Modeling Language). These contracts provide a machine-readable description of the API, including its endpoints, request and response formats, and authentication requirements. API contracts are invaluable for generating documentation, testing API implementations, and enabling client-side code generation.
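The idempotency principle can be extended to operations that are not naturally idempotent, such as creating a payment, by having the client send a unique key with each logical request. The sketch below is one possible approach under that assumption: `PaymentService`, `create_payment`, and `idempotency_key` are hypothetical names, and the in-memory dictionary stands in for durable storage.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PaymentService:
    """In-memory stand-in for a service that stores results by idempotency key."""

    _results: Dict[str, dict] = field(default_factory=dict)
    charges_made: int = 0

    def create_payment(self, idempotency_key: str, amount_cents: int) -> dict:
        # A repeated key returns the stored response instead of charging again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.charges_made += 1
        response = {"payment_id": self.charges_made, "amount_cents": amount_cents}
        self._results[idempotency_key] = response
        return response
```

With this design, a client that retries after a timeout sends the same key and receives the original response, so the retry cannot trigger a second charge.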

Service Communication Strategies

Selecting the appropriate communication strategy is critical for the performance, scalability, and reliability of a microservices architecture. Several options are available, each with its own advantages and disadvantages.

- REST (Representational State Transfer): REST is a widely used architectural style for building APIs. It relies on standard HTTP methods and is suitable for many use cases. RESTful APIs are easy to understand and implement. However, REST can sometimes suffer from performance issues when handling complex data relationships or requiring frequent communication between services.

- gRPC (gRPC Remote Procedure Calls): gRPC is a high-performance, open-source RPC framework developed by Google. It uses Protocol Buffers for defining service interfaces and data formats, enabling efficient communication with low overhead. gRPC is well-suited for internal service-to-service communication, especially when high throughput and low latency are critical.

- Message Queues (e.g., Apache Kafka, RabbitMQ): Message queues provide asynchronous communication between services. Services publish messages to a queue, and other services consume those messages. Message queues enable loose coupling, improve fault tolerance, and facilitate scalability. They are well-suited for handling event-driven architectures and decoupling services. However, message queues can introduce complexity in terms of managing message brokers and ensuring message delivery.

- GraphQL: GraphQL is a query language for APIs that allows clients to request only the data they need. It provides flexibility and efficiency by reducing over-fetching and under-fetching of data. GraphQL is suitable for building complex APIs that require data aggregation from multiple sources. However, GraphQL can introduce complexity in terms of schema design and performance optimization.

The choice of communication strategy depends on factors such as performance requirements, data complexity, and the need for asynchronous processing. Consider these points when selecting a strategy:

- Latency Sensitivity: For latency-sensitive applications, gRPC or REST with careful optimization might be preferred.

- Asynchronous Operations: Message queues are ideal for asynchronous operations, such as background processing or event-driven workflows.

- Data Complexity: GraphQL is well-suited for applications that require complex data aggregation and customized data retrieval.

- Service Coupling: Message queues promote loose coupling, improving fault tolerance and scalability.
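The asynchronous, loosely coupled style that message queues enable can be sketched in a single process. Here Python’s `queue.Queue` and a worker thread stand in for a real broker such as Kafka or RabbitMQ; the `OrderPlaced` event shape and the `consumer` function are assumptions made for the illustration.

```python
import queue
import threading

events: queue.Queue = queue.Queue()  # stands in for a broker topic
processed = []


def consumer() -> None:
    """Consume events until the None sentinel arrives."""
    while True:
        message = events.get()
        if message is None:
            events.task_done()
            break
        processed.append(f"email sent for order {message['order_id']}")
        events.task_done()


worker = threading.Thread(target=consumer, daemon=True)
worker.start()

# The producer publishes and moves on without waiting for the consumer.
for order_id in (101, 102):
    events.put({"type": "OrderPlaced", "order_id": order_id})
events.put(None)

events.join()  # waiting here is only needed for the demo
worker.join()
```

The producer never calls the consumer directly, so either side can be replaced, scaled, or restarted without the other knowing, which is the property the list above refers to as loose coupling.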

API Security Considerations

Securing APIs is paramount to protect sensitive data and prevent unauthorized access. A robust security strategy should encompass several layers of protection. Common API security considerations include:

- Authentication: Verify the identity of API clients. Common authentication methods include:

  - API Keys: Simple to implement but less secure. Suitable for internal or less sensitive APIs.

  - OAuth 2.0: Widely used for delegated authorization. Provides a secure way for clients to access resources on behalf of a user without sharing their credentials.

  - JWT (JSON Web Tokens): A compact and self-contained way for securely transmitting information between parties as a JSON object. JWTs are commonly used for stateless authentication.

- Authorization: Determine what resources a client is allowed to access. This involves defining roles, permissions, and access control policies. Implement role-based access control (RBAC) or attribute-based access control (ABAC) to manage access rights effectively.

- Input Validation: Validate all API inputs to prevent injection attacks (e.g., SQL injection, cross-site scripting). Sanitize inputs to remove malicious code or unexpected characters.

- Rate Limiting: Limit the number of requests a client can make within a given time period to prevent abuse and denial-of-service (DoS) attacks.

- Encryption: Use HTTPS (TLS) to encrypt all API traffic, protecting data in transit.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security threats. Log all API requests, responses, and errors. Monitor API performance and usage patterns to identify anomalies.

- Regular Security Audits: Conduct regular security audits to identify vulnerabilities and ensure compliance with security best practices.

- API Gateway: Use an API gateway to centralize security policies, manage authentication, authorization, and rate limiting. API gateways provide a single point of entry for all API traffic, simplifying security management.
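Rate limiting is often implemented with a token bucket. The sketch below shows the idea under simplifying assumptions: a single process, one bucket per client, and a hypothetical `TokenBucket` class with an injectable `clock` so the behavior can be tested without waiting. An API gateway would typically provide this as configuration rather than custom code.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self) -> bool:
        """Consume one token if available and report whether the request may proceed."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A request handler would call `allow()` once per request and reject the request when it returns `False`, conventionally with HTTP status 429 (Too Many Requests).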

API Documentation and Versioning

Effective API documentation and versioning are essential for ensuring that APIs are usable, maintainable, and evolve gracefully over time. Clear and comprehensive documentation helps developers understand how to use the API, while versioning allows for introducing changes without breaking existing clients. To ensure effective documentation, follow these practices:

- Use a Standard Format: Adopt a standard format for API documentation, such as OpenAPI (formerly Swagger). This allows for automated generation of documentation, client libraries, and API testing tools.

- Comprehensive Descriptions: Provide clear and concise descriptions of each API endpoint, including its purpose, parameters, request and response formats, and error codes.

- Examples: Include code examples in multiple programming languages to illustrate how to use the API.

- Interactive Documentation: Offer interactive documentation that allows developers to test API endpoints directly from the documentation.

- Versioning: Implement a clear versioning strategy. Common options include:

  - URL-based versioning: Include the version number in the URL (e.g., `/v1/users`).

  - Header-based versioning: Carry the version in a request header, for example a vendor media type in the `Accept` header (e.g., `Accept: application/vnd.mycompany.users.v1+json`) or a custom header.

  - Versioning in the request body: Include the version number in the request body (e.g., JSON).

- Change Logs: Maintain a change log that details all API changes, including new features, bug fixes, and breaking changes.

- Deprecation Policies: Clearly communicate deprecation policies for older API versions, providing ample notice before removing them.

- Automated Documentation Generation: Utilize tools to automatically generate documentation from API definitions (e.g., OpenAPI).

An example of an OpenAPI specification is:

```yaml
openapi: 3.0.0
info:
  title: User API
  version: 1.0.0
paths:
  /users:
    get:
      summary: Retrieve all users
      responses:
        '200':
          description: Successful operation
          content:
            application/json:
              schema:
                type: array
                items:
                  $ref: '#/components/schemas/User'
    post:
      summary: Create a user
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/User'
      responses:
        '201':
          description: User created
          content:
            application/json:
              schema:
                $ref: '#/components/schemas/User'
components:
  schemas:
    User:
      type: object
      properties:
        id:
          type: integer
          format: int64
          description: The user's ID
        username:
          type: string
          description: The user's username
        email:
          type: string
          format: email
          description: The user's email address
```

This example provides a basic structure for documenting an API. It defines the API’s title, version, endpoints, request and response formats, and data schemas. Tools can parse this specification to generate interactive documentation and client libraries.

Deployment and Infrastructure Considerations

Modernizing a monolithic application necessitates a significant shift in deployment strategies and infrastructure management. This transition is crucial for achieving the agility, scalability, and resilience that microservices-based architectures offer. Careful consideration of deployment pipelines, infrastructure-as-code, cloud-native services, and containerization are essential for a successful modernization journey.

Automated Deployment Pipelines

Automated deployment pipelines are critical for efficiently and reliably deploying modern applications. These pipelines streamline the build, test, and deployment processes, reducing manual intervention and minimizing the risk of errors. Implementing robust pipelines is fundamental to maintaining a rapid release cadence and ensuring application stability.

- Continuous Integration (CI): CI involves automatically building and testing code changes as they are integrated into a shared repository. This allows for early detection of integration issues and facilitates rapid feedback. A common CI process involves these steps:

- Developers commit code changes to a version control system (e.g., Git).

- The CI server (e.g., Jenkins, GitLab CI, CircleCI) automatically detects the changes.

- The CI server builds the application and runs automated tests (unit tests, integration tests).

- If the build and tests pass, the CI server can trigger further actions, such as creating a deployable artifact.

- Continuous Delivery (CD): CD builds upon CI by automating the release of validated code changes to production-like environments. CD ensures that the application is always in a deployable state. The steps include:

- The CI pipeline produces a deployable artifact.

- The CD pipeline automatically deploys the artifact to a staging environment.

- Automated tests (e.g., end-to-end tests, performance tests) are run in the staging environment.

- If the tests pass, the CD pipeline deploys the artifact to production.

- Continuous Deployment: This is an extension of CD where every code change that passes the automated tests is automatically deployed to production. This requires a high degree of automation, robust testing, and monitoring.

- Benefits of Automated Deployment Pipelines:

- Faster Release Cycles: Automating the deployment process significantly reduces the time it takes to release new features and bug fixes.

- Reduced Errors: Automated testing and deployment steps minimize the risk of human error, leading to more reliable deployments.

- Increased Efficiency: Automation frees up developers and operations teams to focus on more strategic tasks.

- Improved Collaboration: Pipelines facilitate better collaboration between development and operations teams by providing a shared, automated process.

Infrastructure-as-Code Tools

Infrastructure-as-code (IaC) allows you to manage and provision infrastructure resources programmatically, using code. This approach treats infrastructure as a version-controlled asset, enabling automation, repeatability, and consistency across environments. IaC is particularly valuable when modernizing applications, as it allows you to rapidly provision and scale infrastructure for microservices.

- Terraform: Terraform, developed by HashiCorp, is a popular IaC tool that enables you to define and manage infrastructure using declarative configuration files. It supports a wide range of cloud providers (AWS, Azure, Google Cloud) and on-premise infrastructure.

- Example: A Terraform configuration file can be used to define the resources required for a microservice, such as virtual machines, load balancers, and databases. Terraform then uses this configuration to provision and manage these resources in the cloud.

- AWS CloudFormation: AWS CloudFormation is a service provided by Amazon Web Services (AWS) that allows you to define and manage infrastructure as code on AWS. It uses templates written in JSON or YAML to describe the resources you want to create.

- Example: A CloudFormation template can be used to create a Kubernetes cluster, deploy containerized microservices, and configure networking and security settings.

- Azure Resource Manager (ARM) Templates: Azure Resource Manager (ARM) templates are used to define and manage infrastructure as code on Microsoft Azure. They are written in JSON and allow you to define the resources and their configurations.

- Example: An ARM template can be used to create a virtual network, deploy virtual machines, and configure storage accounts.

- Benefits of Infrastructure-as-Code:

- Automation: Automates the provisioning and management of infrastructure, reducing manual effort.

- Repeatability: Ensures consistent infrastructure deployments across different environments (development, testing, production).

- Version Control: Infrastructure configurations can be version-controlled, allowing for tracking changes and rollback capabilities.

- Scalability: Easily scale infrastructure resources to meet the demands of your application.

Managing and Scaling Microservices in a Cloud Environment

Cloud environments provide the scalability and flexibility needed to effectively manage and scale microservices. Several methods and services are available to support the efficient operation of microservices in the cloud.

- Container Orchestration: Container orchestration platforms, such as Kubernetes, automate the deployment, scaling, and management of containerized applications. Kubernetes can handle the following:

- Service Discovery: Kubernetes automatically discovers and manages the network addresses of microservices, allowing them to communicate with each other.

- Load Balancing: Kubernetes distributes traffic across multiple instances of a microservice to ensure high availability and performance.

- Scaling: Kubernetes automatically scales the number of microservice instances based on demand, ensuring that the application can handle varying levels of traffic.

- Health Checks: Kubernetes monitors the health of microservices and automatically restarts unhealthy instances.

- Cloud-Native Load Balancers: Cloud providers offer load balancing services that can distribute traffic across multiple instances of a microservice. These services often provide features such as health checks, SSL termination, and traffic management rules.

- Examples: AWS Elastic Load Balancing (ELB), Azure Load Balancer, and Google Cloud Load Balancing.

- Service Meshes: Service meshes, such as Istio and Linkerd, provide advanced features for managing and securing microservices communication. They offer features such as:

- Traffic Management: Advanced traffic routing, traffic splitting, and fault injection.

- Security: Mutual TLS (mTLS) for secure communication between microservices.

- Observability: Monitoring, logging, and tracing of microservices.

- Autoscaling: Autoscaling allows you to automatically adjust the number of microservice instances based on demand. Cloud providers offer autoscaling services that monitor metrics such as CPU utilization, memory usage, and request rates.

- Example: AWS Auto Scaling, Azure Autoscale, and Google Cloud Autoscaler.
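The scaling decision itself usually comes down to simple arithmetic. The sketch below follows the ratio-based rule documented for the Kubernetes Horizontal Pod Autoscaler, in which the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the parameter names, and the `min_replicas` and `max_replicas` defaults are assumptions, and the sketch omits refinements such as tolerance bands and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to current load versus target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so the service never scales to zero
    # or beyond the allowed maximum.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four instances averaging 90% CPU against a 60% target yield six instances, while the same four instances at 30% CPU would shrink to two.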

Containerization and Orchestration

Containerization and orchestration are essential for modernizing applications, enabling efficient deployment, scaling, and management of microservices.

- Containerization with Docker: Docker is a popular containerization platform that allows you to package applications and their dependencies into isolated containers. Docker containers provide several benefits:

- Portability: Containers can run consistently across different environments, ensuring that the application behaves the same way regardless of where it is deployed.

- Isolation: Containers isolate applications from each other and the underlying infrastructure, improving security and resource utilization.

- Efficiency: Containers are lightweight and consume fewer resources than virtual machines.

- Orchestration with Kubernetes: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. Kubernetes provides the following features:

- Deployment Automation: Kubernetes automates the deployment of containers, ensuring that they are running and available.

- Scaling: Kubernetes can automatically scale the number of container instances based on demand.

- Self-Healing: Kubernetes automatically restarts unhealthy containers and replaces failed instances.

- Service Discovery: Kubernetes provides service discovery, allowing containers to find and communicate with each other.

- Benefits of Containerization and Orchestration:

- Improved Resource Utilization: Containers are more efficient than virtual machines, leading to better resource utilization.

- Faster Deployments: Containers can be deployed quickly and easily, reducing deployment times.

- Increased Scalability: Kubernetes makes it easy to scale applications up or down based on demand.

- Simplified Management: Kubernetes simplifies the management of containerized applications, automating many of the tasks associated with deployment and scaling.

- Real-World Example: Consider a large e-commerce platform that is modernizing its monolithic application into microservices. By using Docker for containerization and Kubernetes for orchestration, they can easily deploy and scale individual microservices, such as the product catalog, shopping cart, and payment processing services. This allows them to respond quickly to changes in demand, improve application performance, and increase overall resilience. For instance, during a major sales event, the platform can automatically scale the product catalog and payment processing services to handle the increased traffic, ensuring a smooth user experience.

Testing and Quality Assurance in a Modernized Environment

Modernizing a monolithic application into a microservices architecture fundamentally changes the landscape of testing and quality assurance. The distributed nature of microservices introduces new complexities, necessitating a shift in testing strategies and tools. Rigorous testing is crucial to ensure the reliability, performance, and maintainability of the modernized application. A robust testing strategy is not just about verifying individual components but also about validating the interactions between them and the overall system behavior.

Types of Testing Applicable to a Microservices Architecture

The transition to a microservices architecture requires a multifaceted testing approach. Different types of testing serve specific purposes, ensuring comprehensive coverage of the application’s functionality, performance, and resilience. This includes unit, integration, end-to-end, performance, and security testing.

- Unit Testing: This involves testing individual microservices in isolation. The focus is on verifying the internal logic and behavior of each service’s components, such as functions, classes, and modules. Unit tests are typically automated and executed frequently during development. For example, a user service might have unit tests to validate user registration, login, and profile update functionalities. A test might verify that a function that calculates the age correctly handles various date inputs and edge cases, like leap years.

- Integration Testing: Integration tests verify the interactions between different microservices. These tests ensure that services communicate and collaborate correctly, exchanging data and achieving the desired outcomes. This type of testing can use techniques such as contract testing. An example could involve testing the interaction between an order service and a payment service, ensuring that payment processing is initiated and completed successfully when an order is placed.

- End-to-End (E2E) Testing: E2E tests simulate user interactions across the entire application, verifying the complete workflow from start to finish. These tests often involve multiple microservices and external dependencies, like databases and third-party APIs. For instance, an E2E test might simulate a user logging in, browsing products, adding items to a cart, and completing a purchase. This tests the entire customer journey.

- Performance Testing: Performance tests evaluate the performance characteristics of the application, such as response time, throughput, and resource utilization under various load conditions. This includes load testing to simulate expected user traffic and stress testing to identify the system’s breaking point. Performance testing helps to identify bottlenecks and optimize service performance. For example, load testing might simulate thousands of concurrent users browsing products to assess the system’s ability to handle peak traffic during a sales event.

- Security Testing: Security testing focuses on identifying and mitigating security vulnerabilities. This involves assessing the application’s defenses against common threats, such as injection attacks, cross-site scripting (XSS), and unauthorized access. Security testing can include penetration testing, vulnerability scanning, and static code analysis. For example, penetration testing might attempt to exploit vulnerabilities in authentication mechanisms or data storage to assess the application’s resilience to attacks.
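As an illustration of the unit testing item above, here is a minimal sketch of the age calculation and its edge cases. The function `calculate_age` and the rule that a February 29 birthday counts as reached on March 1 in non-leap years are assumptions made for this example, not requirements taken from any particular system.

```python
import unittest
from datetime import date


def calculate_age(birth_date: date, today: date) -> int:
    """Return the number of completed years between birth_date and today."""
    if birth_date > today:
        raise ValueError("birth_date is in the future")
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


class CalculateAgeTest(unittest.TestCase):
    def test_birthday_already_passed(self):
        self.assertEqual(calculate_age(date(1990, 3, 15), date(2024, 6, 1)), 34)

    def test_day_before_birthday(self):
        self.assertEqual(calculate_age(date(1990, 3, 15), date(2024, 3, 14)), 33)

    def test_leap_day_birth_in_non_leap_year(self):
        # Assumed rule: the birthday is reached on Mar 1 in non-leap years.
        self.assertEqual(calculate_age(date(2000, 2, 29), date(2023, 2, 28)), 22)
        self.assertEqual(calculate_age(date(2000, 2, 29), date(2023, 3, 1)), 23)

    def test_future_birth_date_rejected(self):
        with self.assertRaises(ValueError):
            calculate_age(date(2030, 1, 1), date(2024, 1, 1))
```

Passing `today` as a parameter instead of reading the system clock keeps the tests deterministic. Saved to a file, the tests can be run with `python -m unittest`.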

Integration Testing Strategies for Microservices

Integration testing in a microservices environment requires careful planning and execution due to the distributed nature of the system. Various strategies can be employed to effectively test the interactions between services, ensuring they work together seamlessly. These strategies include contract testing, consumer-driven contracts, and service virtualization.

- Contract Testing: Contract testing verifies that services adhere to the agreed-upon contracts for communication. These contracts define the structure and format of data exchanged between services. Contract testing helps to prevent integration issues by ensuring that services understand and can process each other’s data. For example, a contract test might verify that a product service correctly responds to a request from an order service, providing the necessary product details in the expected format. Tools like Pact and Spring Cloud Contract are commonly used for this.

- Consumer-Driven Contracts: Consumer-driven contracts (CDCs) focus on the consumer’s perspective, defining contracts based on how consumers use the services. This approach ensures that services provide the data and functionality required by their consumers. CDCs help to reduce the risk of breaking changes by aligning service contracts with consumer needs. For example, an order service might define a contract specifying the data required from a product service to display product information in the order details.

- Service Virtualization: Service virtualization involves creating virtual representations of dependent services. This allows for testing a service in isolation, without requiring the actual dependent services to be available. Service virtualization is useful for testing services that rely on external APIs or databases that are not always accessible or easy to control. For example, a payment service can be tested using a virtualized payment gateway, simulating different payment scenarios and responses.
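The idea behind a consumer-driven contract can be sketched without any framework. In the example below, the order service (the consumer) declares the fields it reads from a product response, and the check runs against the product service (the provider). The field names, the `get_product` stand-in, and the `contract_violations` helper are all hypothetical; dedicated contract testing tools automate how such contracts are shared and verified.

```python
from typing import Any

# Contract written by the consumer (order service): the fields it reads
# from a product response and the type it expects for each one.
ORDER_SERVICE_CONTRACT: dict[str, type] = {
    "id": str,
    "name": str,
    "price_cents": int,
}


def contract_violations(response: dict[str, Any],
                        contract: dict[str, type]) -> list[str]:
    """Return readable violations; an empty list means the contract holds."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


def get_product(product_id: str) -> dict[str, Any]:
    """Stand-in for the provider's (product service) handler."""
    # The extra "stock" field is fine: the consumer never reads it.
    return {"id": product_id, "name": "Desk lamp", "price_cents": 2499, "stock": 12}
```

Only the fields the consumer depends on are checked, so the provider remains free to add new fields without breaking the contract. Renaming or retyping `price_cents`, on the other hand, would fail the provider's build before the change reaches the order service.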

Techniques for Monitoring and Logging Microservices

Effective monitoring and logging are essential for understanding the behavior of microservices in production. These techniques provide insights into performance, errors, and user behavior, enabling proactive problem detection and resolution. Centralized logging, distributed tracing, and real-time monitoring are critical components of a robust monitoring and logging strategy.

- Centralized Logging: Centralized logging involves collecting logs from all microservices and storing them in a central location. This allows for easy searching, filtering, and analysis of log data. Tools like the ELK stack (Elasticsearch, Logstash, and Kibana) and Splunk are commonly used for centralized logging. Centralized logging facilitates the identification of errors, performance bottlenecks, and security issues across the entire system. For example, a centralized log can show all the errors generated by all the services in a specific time frame.

- Distributed Tracing: Distributed tracing tracks the flow of requests across multiple microservices. This allows for identifying the root cause of performance issues and errors by tracing the path of a request through the system. Tools like Jaeger, Zipkin, and Datadog are used for distributed tracing. Distributed tracing provides visibility into service dependencies and helps to optimize request processing. For example, distributed tracing can help identify a slow-performing service that is causing delays in a critical user workflow.

- Real-Time Monitoring: Real-time monitoring involves continuously collecting and analyzing metrics from microservices. This provides insights into system performance, resource utilization, and user behavior in real-time. Monitoring tools often include dashboards and alerting capabilities to notify operators of critical issues. For example, real-time monitoring can alert operators when a service’s error rate exceeds a predefined threshold, indicating a potential problem.
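Centralized logs are far easier to search when every service emits structured records that share a request identifier. The sketch below uses only the Python standard library to write JSON log lines tagged with a trace ID. The field names and the `handle_request` helper are assumptions for illustration, and this is a simplified correlation ID rather than a substitute for a full distributed tracing system.

```python
import json
import logging
import uuid
from contextvars import ContextVar
from typing import Optional

# Holds the ID of the request currently being handled.
trace_id_var: ContextVar[str] = ContextVar("trace_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "service": self.service,
            "level": record.levelname,
            "trace_id": trace_id_var.get(),
            "message": record.getMessage(),
        })


def build_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    logger.handlers.clear()
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger: logging.Logger,
                   incoming_trace_id: Optional[str] = None) -> str:
    """Reuse the caller's trace ID if one was sent, otherwise start a new one."""
    trace_id = incoming_trace_id or uuid.uuid4().hex
    trace_id_var.set(trace_id)
    logger.info("request received")
    return trace_id
```

If each service forwards the returned ID on its outgoing calls, filtering the central log store by `trace_id` reconstructs the path of a single request across services.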

Strategies for Ensuring Code Quality Throughout the Modernization Process

Maintaining high code quality is crucial during the modernization process. This involves adopting practices that promote clean, maintainable, and testable code. Code reviews, static code analysis, automated testing, and continuous integration/continuous delivery (CI/CD) are essential for ensuring code quality.

- Code Reviews: Code reviews involve having other developers review code changes before they are merged into the codebase. This helps to identify potential bugs, coding style violations, and security vulnerabilities. Code reviews promote knowledge sharing and ensure consistency in code quality. For example, a code review might identify a potential security flaw in a newly added authentication mechanism.

- Static Code Analysis: Static code analysis involves using automated tools to analyze code for potential issues, such as coding style violations, security vulnerabilities, and performance bottlenecks. These tools can identify issues early in the development process. Tools like SonarQube, ESLint, and Checkstyle are commonly used for static code analysis. Static code analysis helps to enforce coding standards and improve code quality. For example, static code analysis can detect the use of deprecated functions or coding style inconsistencies.

- Automated Testing: Automated testing is a cornerstone of ensuring code quality. Automated tests, including unit, integration, and end-to-end tests, are executed automatically as part of the development process. This allows for quickly identifying and addressing issues. Automated testing ensures that code changes do not break existing functionality. For example, a CI/CD pipeline can automatically run unit tests and integration tests whenever code changes are committed.

- Continuous Integration/Continuous Delivery (CI/CD): CI/CD is a software development practice that automates the build, test, and deployment processes. CI/CD pipelines ensure that code changes are integrated, tested, and deployed frequently and reliably. CI/CD pipelines help to catch errors early in the development cycle and accelerate the delivery of new features. For example, a CI/CD pipeline might automatically build, test, and deploy a microservice whenever code changes are pushed to the source repository.
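The fail-fast behavior of a CI/CD pipeline can be modeled in a few lines. The sketch below runs stages in order and skips everything after the first failure, so a broken unit test prevents deployment. The stage names and the `run_pipeline` helper are hypothetical; a real pipeline would be defined in the configuration format of whichever CI system is in use.

```python
from typing import Callable

# A stage is a name plus a callable that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[tuple[str, str]]:
    """Run stages in order; skip every stage after the first failure."""
    results = []
    failed = False
    for name, step in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = step()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


if __name__ == "__main__":
    pipeline = [
        ("static-analysis", lambda: True),
        ("unit-tests", lambda: False),  # simulated test failure
        ("deploy", lambda: True),
    ]
    for name, status in run_pipeline(pipeline):
        print(f"{name}: {status}")
```

Ordering the cheap checks first (static analysis, then unit tests) gives developers the fastest possible feedback before slower integration and deployment stages run.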

Team Structure and Cultural Shifts

Modernizing a monolithic application necessitates significant adjustments to team structures and organizational culture. This transition, often overlooked, is crucial for realizing the full benefits of the modernization effort. Failure to adapt can lead to bottlenecks, resistance to change, and ultimately, project failure. A successful modernization journey requires a proactive approach to reshape teams, foster a collaborative environment, and equip individuals with the necessary skills to thrive in the new paradigm.

Impact of Modernization on Team Structures and Roles

Modernization projects fundamentally alter the roles and responsibilities within development teams. The monolithic structure, often characterized by specialized teams working in silos, gives way to more agile and cross-functional teams. This shift is driven by the need for faster iteration cycles, improved collaboration, and increased ownership of specific functionalities. The following are key changes to consider:

- Shift from Functional Silos to Cross-Functional Teams: Instead of separate teams for frontend, backend, and database, modernization often encourages the formation of teams responsible for specific business capabilities or microservices. Each team typically comprises developers, testers, and operations personnel, enabling end-to-end ownership and faster feedback loops.

- Emergence of DevOps Roles: Modernization projects heavily rely on DevOps principles, leading to the rise of dedicated DevOps engineers or the integration of DevOps practices into existing roles. These professionals are responsible for automating infrastructure, managing deployments, and ensuring the smooth operation of the application in production. They act as a bridge between development and operations.

- Increased Specialization in Specific Technologies: While cross-functional teams are essential, modernization also introduces the need for specialization in specific technologies, such as containerization (e.g., Docker, Kubernetes), cloud platforms (e.g., AWS, Azure, GCP), and API management. Experts in these areas provide guidance, support, and training to the broader team.

- Changes in Testing Responsibilities: The shift to microservices and continuous integration/continuous delivery (CI/CD) necessitates a change in testing practices. Teams become responsible for writing and maintaining their own unit, integration, and end-to-end tests. Test automation becomes paramount, and testing roles may evolve to include responsibilities for designing and implementing automated test suites.

- Focus on Business Domain Knowledge: As teams become responsible for specific business capabilities, there is an increased emphasis on domain knowledge. Developers need to understand the business logic and requirements to effectively build and maintain the application. This often involves close collaboration with business analysts and product owners.

Designing a Plan for Fostering a DevOps Culture Within the Organization

Implementing a DevOps culture requires a strategic plan that addresses people, processes, and technology. This plan should be iterative, starting with pilot projects and gradually expanding across the organization. The goal is to break down silos, promote collaboration, and automate processes to achieve faster release cycles and improved application stability. Key elements of a DevOps culture implementation plan include:

- Executive Sponsorship and Buy-In: Securing support from executive leadership is crucial for driving cultural change. Leaders must champion the DevOps initiative, allocate resources, and communicate the vision to the entire organization.

- Defining DevOps Principles and Values: Clearly articulate the core principles and values of DevOps, such as collaboration, automation, continuous improvement, and shared responsibility. This provides a common framework for decision-making and behavior.

- Establishing Cross-Functional Teams: Organize teams around business capabilities or microservices, including representatives from development, operations, and testing. This promotes shared ownership and reduces handoffs.

- Implementing Automation: Automate as many processes as possible, including build, testing, deployment, and infrastructure provisioning. This reduces manual errors, speeds up release cycles, and improves efficiency.

- Adopting Continuous Integration and Continuous Delivery (CI/CD): Implement a CI/CD pipeline to automate the software delivery process. This enables frequent and reliable releases, allowing for faster feedback and quicker responses to market demands.

- Implementing Infrastructure as Code (IaC): Manage infrastructure using code, enabling version control, automation, and reproducibility. This allows for consistent and repeatable deployments.

- Monitoring and Observability: Implement comprehensive monitoring and logging to track application performance, identify issues, and gain insights into user behavior. This enables proactive problem-solving and continuous improvement.

- Promoting Collaboration and Communication: Foster a culture of collaboration and communication through regular meetings, shared tools, and open communication channels. This helps break down silos and facilitates knowledge sharing.