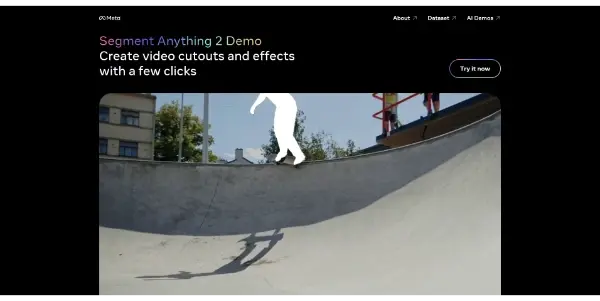

Sam2 by Meta

An open-source model created by Meta AI that lets you segment any object in an image or video. Simply point and click on an element to create a precise mask.

Sam2: Meta's Open-Source AI for Precise Object Segmentation

Meta AI's Sam2 (Segment Anything Model 2) represents a significant advancement in image and video segmentation technology. This open-source model lets users create accurate masks around any object within an image or video with very little effort. Unlike many segmentation tools that require complex parameter tuning or task-specific training, Sam2 is driven by simple prompts such as a click on the object, which makes a point-and-click workflow for isolating objects possible.

What Sam2 Does

Sam2 performs promptable segmentation: given a prompt such as a click on an object, it predicts a mask marking which pixels of the image or video frame belong to that object. Unlike semantic segmentation, it does not assign a class label to each pixel; the masks are class-agnostic. Prompts can be foreground or background points, bounding boxes, or an existing mask, and in video the mask from a prompted frame is propagated to the other frames. This interactive process allows for quick and accurate segmentation, even in complex scenes with overlapping or irregularly shaped objects, and extra clicks can be added to refine a mask whose boundary is not yet clean.
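
The point-and-click flow described above can be sketched in Python. This is a minimal sketch, not an official recipe: it assumes the `sam2` package from Meta's repository, `torch`, and `numpy` are installed, and that the `facebook/sam2-hiera-large` checkpoint can be downloaded from Hugging Face. The helper names `clicks_to_prompt` and `segment_with_clicks` are hypothetical and exist only for this illustration.

```python
def clicks_to_prompt(clicks):
    """Split (x, y, is_foreground) clicks into coordinate and label lists.

    Label 1 marks a foreground click, label 0 a background click.
    """
    coords = [[float(x), float(y)] for x, y, _ in clicks]
    labels = [1 if is_fg else 0 for _, _, is_fg in clicks]
    return coords, labels


def segment_with_clicks(image_rgb, clicks, model_id="facebook/sam2-hiera-large"):
    """Return a boolean H x W mask for the clicked object.

    Heavy dependencies are imported lazily, so clicks_to_prompt stays usable
    without them. Assumption: passing device= through from_pretrained is
    supported by the installed sam2 version.
    """
    import numpy as np
    import torch
    from sam2.sam2_image_predictor import SAM2ImagePredictor

    device = "cuda" if torch.cuda.is_available() else "cpu"
    predictor = SAM2ImagePredictor.from_pretrained(model_id, device=device)

    coords, labels = clicks_to_prompt(clicks)
    with torch.inference_mode():
        predictor.set_image(image_rgb)  # H x W x 3 uint8 RGB array
        masks, scores, _ = predictor.predict(
            point_coords=np.array(coords, dtype=np.float32),
            point_labels=np.array(labels, dtype=np.int32),
            multimask_output=True,  # several candidate masks per prompt
        )
    # Keep the candidate the model scores highest.
    return masks[int(scores.argmax())].astype(bool)
```

A call such as `segment_with_clicks(image, [(120, 80, True)])` would request a mask for the object under pixel (120, 80). Adding a background click, for example `(10, 10, False)`, is the usual way to trim a mask that spills past the object.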

Main Features and Benefits

- Intuitive Interface: The point-and-click interface makes Sam2 incredibly user-friendly, requiring minimal technical expertise.

- High Accuracy: The model produces highly accurate masks, even for challenging objects and scenes.

- Versatility: Sam2 works effectively on both images and videos, providing consistent performance across different media types.

- Open-Source: The open-source nature of Sam2 fosters collaboration and allows for further development and customization by the community.

- Speed and Efficiency: Throughput depends on hardware and on which model size is used, but Sam2 is designed to process video frame by frame in a streaming fashion, making it suitable for interactive and near-real-time applications.

- Adaptability: The model can be fine-tuned for specific tasks or datasets to enhance its performance in particular domains.
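
The accuracy claim above is not quantified in this article. One common way to measure mask quality is intersection over union (IoU) against a hand-labelled reference mask. The sketch below is a plain-Python illustration of that metric, not part of Sam2 itself; the function name `mask_iou` and the tiny example masks are made up for this example.

```python
def mask_iou(pred, truth):
    """Intersection over union of two equally sized binary masks.

    Masks are lists of rows; any truthy value counts as "inside the object".
    """
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly by convention.
    return intersection / union if union else 1.0


predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
reference = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
print(mask_iou(predicted, reference))  # 3 shared pixels / 4 covered pixels = 0.75
```

An IoU of 1.0 means the predicted mask matches the reference exactly. Averaging this score over a labelled set of your own images is a simple way to check whether the model, or a fine-tuned variant, is accurate enough for a given domain.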

Use Cases and Applications

The versatility of Sam2 opens doors for numerous applications across diverse fields:

- Image Editing: Precisely select and edit specific objects in photos, simplifying complex tasks like background removal or object manipulation.

- Video Editing: Isolate and track objects in videos for effects, animation, or content creation.

- Robotics: Enable robots to better understand their environment by accurately segmenting objects for manipulation or navigation.

- Medical Imaging: Assist in medical image analysis by accurately segmenting organs, tissues, or anomalies for diagnosis and treatment planning.

- Autonomous Driving: Improve object detection and recognition in self-driving vehicles for safer and more efficient navigation.

- Content Creation: Streamline the creation of digital content by simplifying object selection and manipulation for designers and artists.

- Agricultural Applications: Identify and count plants, detect diseases, or analyze crop yields from aerial imagery.
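
Most of these use cases start from the same step: taking the mask the model returns and applying it to the pixels. The sketch below shows background removal and a bounding box in plain Python, assuming the image is a list of rows of `(r, g, b)` tuples and the mask is a same-sized list of rows of booleans. The function names `cut_out` and `mask_bbox` are hypothetical, and a real pipeline would more likely use NumPy arrays.

```python
def cut_out(image, mask):
    """Return RGBA rows: masked pixels stay opaque, the rest become transparent."""
    return [
        [(r, g, b, 255) if keep else (0, 0, 0, 0)
         for (r, g, b), keep in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, mask)
    ]


def mask_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) of the masked region, or None if empty."""
    xs = [x for row in mask for x, keep in enumerate(row) if keep]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


image = [[(10, 20, 30), (40, 50, 60)],
         [(70, 80, 90), (100, 110, 120)]]
mask = [[False, True],
        [False, True]]

cutout = cut_out(image, mask)  # left column transparent, right column kept
bbox = mask_bbox(mask)         # box around the right-hand column
```

The cutout covers the image-editing case (background removal), while the bounding box is the kind of summary a robotics or counting pipeline might derive from each mask.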

Comparison to Similar Tools

Sam2 distinguishes itself from other segmentation tools through its ease of use and high accuracy. While other tools may require task-specific training data or complex parameter tuning, Sam2's prompt-based workflow allows even non-experts to achieve good results on objects the model was never specifically trained for. It is also designed to be fast enough for interactive use, although how it compares in speed and accuracy with any particular alternative depends on the hardware and the task. Specific comparisons to tools offered by Google or other AI companies would require a detailed benchmark study, but Sam2's open-source nature and point-and-click workflow are key differentiators.

Pricing Information

Sam2 is completely free to use. The model's code and pre-trained weights are publicly available under the Apache 2.0 license, so there are no licensing fees or subscription costs. This accessibility makes Sam2 a powerful and cost-effective tool for a wide range of users and applications.