I-JEPA

An LLM model that learns by imitating the real world. This AI is faster and more efficient than other similar AIs.

I-JEPA: A Novel Approach to Large Language Model Training

I-JEPA (Image Joint Embedding Predictive Architecture) represents a significant advancement in the field of Large Language Models (LLMs). Unlike many LLMs that rely heavily on massive datasets of text and code, I-JEPA adopts a unique approach, learning by imitating the real world through the process of image prediction. This innovative methodology leads to a model that is both faster and more efficient than its predecessors, opening up exciting new possibilities for various applications.

What I-JEPA Does

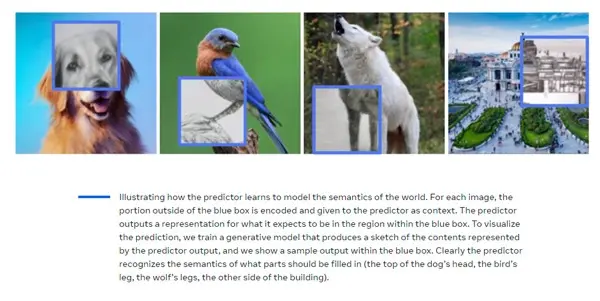

I-JEPA is a powerful LLM that leverages a predictive framework. Instead of directly learning from massive text corpora, it learns by predicting missing or corrupted parts of images. This process involves training the model to generate plausible image completions based on partially observed data. The underlying principle is that by accurately predicting the visual world, I-JEPA implicitly learns a rich representation of the underlying structure and relationships within data, which can then be applied to various tasks beyond image completion. This fundamentally different training paradigm allows I-JEPA to achieve impressive results with less computational resources compared to traditional LLMs.

Main Features and Benefits

- Efficient Training: I-JEPA's unique training methodology requires significantly less computational power and data compared to traditional LLMs, resulting in faster training times and reduced environmental impact.

- Improved Performance: By focusing on predicting realistic image completions, I-JEPA implicitly learns robust representations that translate to improved performance on various downstream tasks.

- Versatility: While initially focused on image processing, the learned representations in I-JEPA can be applied to a wider range of tasks, including text generation and other modalities, showing potential for cross-modal applications.

- Generalizability: The model demonstrates strong generalizability, meaning it can adapt to new tasks and datasets with minimal fine-tuning.

- Novel Approach: Its unique approach of learning from image prediction offers a new perspective on LLM training, paving the way for more efficient and effective models.

Use Cases and Applications

The efficiency and versatility of I-JEPA open doors to several practical applications:

- Image Inpainting and Restoration: Filling in missing or damaged parts of images with realistic detail.

- Image Generation: Creating new images based on learned patterns and structures.

- Super-resolution: Enhancing the resolution of low-resolution images.

- Data Augmentation: Generating synthetic data for training other machine learning models.

- Cross-Modal Applications: Potential for extending its capabilities to other data types, such as text and audio, enabling new applications in areas like machine translation and speech synthesis.

Comparison to Similar Tools

While direct comparisons are difficult without specific benchmarks against other LLMs, I-JEPA's key differentiator lies in its training methodology. Traditional LLMs, like GPT models, rely on vast text datasets and consume immense computational resources. I-JEPA's focus on image prediction offers a more efficient alternative, potentially achieving comparable or even superior performance with significantly reduced computational needs. Future research will likely provide clearer comparisons against existing LLMs.

Pricing Information

I-JEPA is currently available for free. However, access may be limited through research collaborations or specific pre-trained model releases. The future pricing model, if any, is yet to be announced.

Conclusion

I-JEPA represents a compelling new direction in LLM development. Its innovative approach to learning through image prediction showcases the potential for developing more efficient and effective AI models. While still a relatively new technology, its ability to achieve high performance with reduced computational costs makes it a promising tool with vast potential applications across various domains. As research continues, we can expect further improvements and expansion of I-JEPA's capabilities.